Yahoo’s Mayer: “Our Search Share Has Declined” But She’s Optimistic About Opportunities

Yahoo is making progress in a number of product areas under CEO Marissa Mayer, but search isn’t one of them — a fact that Mayer herself admitted during today’s Q3 earnings call. Yahoo’s US share of the search market was just 11.3 percent in September, according to…

Please visit Search Engine Land for the full article.

SearchCap: The Day In Search, October 15, 2013

Below is what happened in search today, as reported on Search Engine Land and from other places across the Web. From Search Engine Land: Google AdWords Third-Party “Review Extensions” Start Rolling Out To All Accounts In June, Google announced the beta release of Review Extensions which…

Foursquare Self-Serve Ads Now Open to All Local Businesses

Location-based social networking brand Foursquare has rolled out self-serve ads to all advertisers, in an effort to monetize its audience of 40 million users. Here’s a look at how the new ads work, and the ad creation process.

Importing Multiple CSVs – An Excel Macro for Generating Reports

As I’ve already made clear, I spend a lot of time using Screaming Frog. I love the copy & paste functionality that it offers, which allows me to move data directly from the crawler into Excel, but sometimes I’d rather have Excel build my technical reports for me… With that in mind, I wanted to […]
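The post does this with an Excel macro, which isn't reproduced here. As a rough illustration of the same idea, the sketch below swaps in Python's standard `csv` and `glob` modules to merge a folder of crawler CSV exports into a single report file. The `combine_csvs` helper, its parameters, and the folder layout are hypothetical, and it assumes every export has a header row.

```python
import csv
import glob
import os


def combine_csvs(pattern, output_path):
    """Merge every CSV file matching `pattern` into one CSV at `output_path`.

    Each row gains a "source_file" column naming the export it came from.
    Columns are unioned across files; cells a file lacks are left blank.
    Returns the number of data rows written.
    """
    fieldnames = ["source_file"]
    rows = []
    for path in sorted(glob.glob(pattern)):
        # utf-8-sig strips a byte-order mark if the export has one,
        # so the first header name is not corrupted.
        with open(path, newline="", encoding="utf-8-sig") as f:
            reader = csv.DictReader(f)
            # Add any column names not seen in earlier files, preserving order.
            for name in reader.fieldnames or []:
                if name not in fieldnames:
                    fieldnames.append(name)
            for row in reader:
                row["source_file"] = os.path.basename(path)
                rows.append(row)
    with open(output_path, "w", newline="", encoding="utf-8") as f:
        # restval="" fills in columns that a given row does not have.
        writer = csv.DictWriter(f, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

For example, `combine_csvs("exports/*.csv", "combined_report.csv")` would merge every export in a hypothetical `exports` folder; keeping the output file outside that folder stops it from being picked up on a later run.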

Google’s Matt Cutts on Search vs. Social: Don’t Rely on Just One Channel

Rather than spending your time trying to figure out every little nuance of Google’s search algorithm and seeking shortcuts, Matt Cutts says you should build a fantastic site people love and have a well-rounded portfolio of ways to get leads and traffic.

Search Revenues Hit $8.7 Billion in First Half of 2013

The first half of 2013 was a record high for online advertising revenues, coming in at $20.1 billion, according to the Interactive Advertising Bureau.

Revenue from search increased 7 percent from $8.1 billion for the first half of 2012.
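As a quick check, the two search figures above are consistent with the reported growth rate (both dollar amounts are already rounded, so the result is approximate):

$$
\frac{8.7 - 8.1}{8.1} = \frac{0.6}{8.1} \approx 0.074 \approx 7.4\%
$$

which rounds to the 7 percent cited.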

Google’s Hummingbird Algorithm Ten Years Ago

Back in September, Google announced that it had started using an algorithm, code-named “Hummingbird,” that rewrites queries submitted by searchers. At the time, I was writing a blog post about a Google patent that seemed like it might be closely related to the update because the focus was […]

The post Google’s Hummingbird Algorithm Ten Years Ago appeared first on SEO by the Sea.

Twitter Ad Users Can Now Schedule Tweets a Year in Advance

Twitter announced this week what many marketers may have been waiting for – a way to schedule tweets up to one year in advance directly through Twitter. But there is a catch: only Twitter advertisers have the option to use this new functionality.

Yes Virginia, Google+ Can Directly Impact Your Search Rankings

Every time I see an article about how Google +1s currently have no direct impact on search rankings, I cringe a little bit. Not because I believe Google +1s DO currently have a direct impact on your search engine rankings. The reason I cringe is that so many people seem to read a quote like […]

The post Yes Virginia, Google+ Can Directly Impact Your Search Rankings appeared first on Sugarrae.

U.S. Paid Search Spend Continues Rising in Q3 2013

Several reports offer a healthy roundup of paid search spending and a positive outlook for the remainder of 2013. Data from RKG, IgnitionOne, and Kenshoo all show that U.S. marketers continue to increase their investments in paid search.

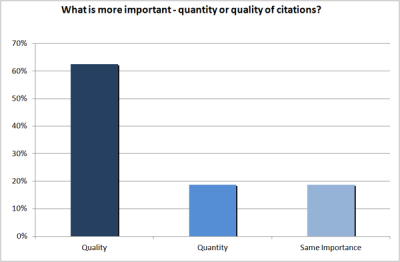

Local Citations: Quality v. Quantity?

Source: BrightLocal Citation Building Survey 2013

Nyagoslav Zhevkov has a great overview of a survey on local citations BrightLocal conducted in September. There are a few good insights in the post, but this one stood out for me: “For the question ‘What is more important – quantity or quality of citations?’ I would have […]

The post Local Citations: Quality v. Quantity? appeared first on Local SEO Guide.

Adobe Analytics Adds 6 New Features

Adobe has revealed an extensive list of new features that extend functionality for its customers, including predictive analytics and anomaly detection, expanded real-time reporting and mobile analytics capabilities, and video metrics reports.

Google AdWords Third-Party “Review Extensions” Start Rolling Out To All Accounts

In June, Google announced the beta release of Review Extensions which allow advertisers to append a quote of endorsement from a reputable publication in their AdWords ads. Today, these Review Extensions will begin to roll out to all AdWords accounts. Y…

Google Disavow Removal After Penalty Removal

A WebmasterWorld thread asks the question most SEOs and webmasters try not to ask in a public forum. After a penalty is lifted, should you go ahead and remove your disavow file or even remove some of the links from the disavow file?

Why would you do th…

Bringing you a world-class data strategy and table tennis champ all in one video

With SearchLove London just around the corner, we’re getting more than a little excited to welcome this year’s gamut of industry speakers back to The Brewery – so much so, we’ve handed over the blog reins to them for the whole month.

Be Ready for 2014 – Attend SMX Social Media Marketing!

In less than 90 days, 2014 budgeting will be complete, Black Friday will be a distant memory, and new marketing objectives will be the measure of your success. Be ready! Hone your paid, earned, and owned social media marketing skills at SMX Social Medi…

Ecommerce Product Pages: How to Fix Duplicate, Thin & Too Much Content

If you run an ecommerce site and you’ve seen traffic flat-line, slowly erode, or fall off a cliff, then product page content issues may be the culprit. Here’s a closer look at some of the most common ecommerce content woes and how to fix them.

10 Quick PPC Tips, Improvements & Fixes

Want to make some positive changes to your account but have no time? Try these 7 quick PPC fixes.

Post from Jackie Hole on State of Digital
