Our goal: helping webmasters and content creators

- Google Web Fundamentals: Provides technical guidance on building a modern website that takes advantage of open web standards.

- Google Search developer documentation: Describes how Google crawls and indexes a website. Includes authoritative guidance on building a site that is optimized for Google Search.

- Search Console Help Center: Provides detailed information on how to use and take advantage of Search Console, the best way for a website owner to understand how Google sees their site.

- The Search Engine Optimization (SEO) Starter Guide: Provides a complete overview of the basics of SEO according to our recommended best practices.

- Google webmaster guidelines: Describes policies and practices that may lead to a site being removed entirely from the Google index or otherwise affected by an algorithmic or manual spam action that can negatively affect its Search appearance.

- Google Webmasters YouTube Channel

Launching SEO Audit category in Lighthouse Chrome extension

Lighthouse is an open-source, automated auditing tool for improving the quality of web pages. It provides a well-lit path for improving the quality of sites by allowing developers to run audits for performance, accessibility, progressive web apps compatibility and more. Basically, it “keeps you from crashing into the rocks”, hence the name Lighthouse.

The SEO audit category within Lighthouse enables developers and webmasters to run a basic SEO health-check for any web page that identifies potential areas for improvement. Lighthouse runs locally in your Chrome browser, enabling you to run the SEO audits on pages in a staging environment as well as on live pages, public pages and pages that require authentication.

Bringing SEO best practices to you

The current list of SEO audits is not an exhaustive list, nor does it make any SEO guarantees for Google websearch or other search engines. The current list of audits was designed to validate and reflect the SEO basics that every site should get right, and provides detailed guidance to developers and SEO practitioners of all skill levels. In the future, we hope to add more and more in-depth audits and guidance — let us know if you have suggestions for specific audits you’d like to see!
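To make the idea of a basic SEO health-check concrete, here is a minimal sketch in Python. It is not Lighthouse's implementation, and the source does not list the actual audits: the three checks (a title, a meta description, a viewport tag) and their names are assumptions chosen for illustration only.

```python
from html.parser import HTMLParser


class BasicSeoChecker(HTMLParser):
    """Collects a few signals that a basic SEO health-check might look at."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta_description = None
        self.has_viewport = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta":
            name = (attrs.get("name") or "").lower()
            if name == "description":
                self.meta_description = attrs.get("content") or ""
            elif name == "viewport":
                self.has_viewport = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        # Only text inside <title> is accumulated.
        if self.in_title:
            self.title += data


def audit_html(html):
    """Map each (hypothetical) check name to True for pass, False for fail."""
    checker = BasicSeoChecker()
    checker.feed(html)
    return {
        "has-title": bool(checker.title.strip()),
        "has-meta-description": bool((checker.meta_description or "").strip()),
        "has-viewport": checker.has_viewport,
    }
```

Calling `audit_html(page_source)` on a page's HTML returns a small pass/fail report. A real audit tool inspects the rendered page and covers far more than this.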

How to use it

Currently there are two ways to run these audits.

Using the Lighthouse Chrome Extension:

- Install the Lighthouse Chrome Extension

- Click on the Lighthouse icon in the extension bar

- Select the Options menu, tick “SEO” and click OK, then Generate report

Using Chrome Developer Tools:

- Open Chrome Developer Tools

- Go to Audits

- Click Perform an audit

- Tick the “SEO” checkbox and click Run Audit.

The current Lighthouse Chrome extension contains an initial set of SEO audits which we’re planning to extend and enhance in the future. Once we’re confident of its functionality, we’ll make the audits available by default in the stable release of Chrome Developer Tools.

We hope you find this functionality useful for your current and future projects. If these basic SEO tips are totally new to you and you find yourself interested in this area, make sure to read our complete SEO starter-guide! Leave your feedback and suggestions in the comments section below, on GitHub or on our Webmaster forum.

Happy auditing!

Posted by Valentyn, Webmaster Outreach Strategist.

Real-world data in PageSpeed Insights

PageSpeed Insights provides information about how well a page adheres to a set of best practices. In the past, these recommendations were presented without the context of how fast the page performed in the real world, which made it hard to understand when it was appropriate to apply these optimizations. Today, we’re announcing that PageSpeed Insights will use data from the Chrome User Experience Report to make better recommendations for developers, and that the optimization score has been tuned to align more closely with the real-world data.

The PSI report now has several different elements:

- The Speed score categorizes a page as being Fast, Average, or Slow. This is determined by looking at the median value of two metrics: First Contentful Paint (FCP) and DOM Content Loaded (DCL). If both metrics are in the top one-third of their category, the page is considered fast.

- The Optimization score categorizes a page as being Good, Medium, or Low by estimating its performance headroom. The calculation assumes that a developer wants to keep the same appearance and functionality of the page.

- The Page Load Distributions section presents how this page’s FCP and DCL events are distributed in the data set. These events are categorized as Fast (top third), Average (middle third), and Slow (bottom third) by comparing to all events in the Chrome User Experience Report.

- The Page Stats section describes the round trips required to load the page’s render-blocking resources, the total bytes used by the page, and how it compares to the median number of round trips and bytes used in the dataset. It can indicate if the page might be faster if the developer modifies the appearance and functionality of the page.

- Optimization Suggestions is a list of best practices that could be applied to this page. If the page is fast, these suggestions are hidden by default, as the page is already in the top third of all pages in the data set.
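The thirds-based categorization above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the PageSpeed Insights implementation: the function names are hypothetical, and the rule separating Average from Slow in `speed_score` is an assumption, since the post only states when a page is considered fast.

```python
from bisect import bisect_left


def categorize(value_ms, all_events_ms):
    """Place one timing value into a third of the overall distribution.

    Lower timings are better, so the fastest third of events is "Fast".
    """
    ordered = sorted(all_events_ms)
    # Fraction of events in the data set that were strictly faster.
    faster_fraction = bisect_left(ordered, value_ms) / len(ordered)
    if faster_fraction < 1 / 3:
        return "Fast"
    if faster_fraction < 2 / 3:
        return "Average"
    return "Slow"


def speed_score(fcp_category, dcl_category):
    """Combine the FCP and DCL categories into one Speed score."""
    if fcp_category == "Fast" and dcl_category == "Fast":
        return "Fast"  # stated in the post: both metrics in the top third
    # ASSUMPTION: the post does not say how Average and Slow are separated.
    if "Slow" in (fcp_category, dcl_category):
        return "Slow"
    return "Average"
```

In this sketch, `categorize` would be applied to the page's median FCP and median DCL separately, each against the corresponding events in the data set, and the two results passed to `speed_score`.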

For more details on these changes, see About PageSpeed Insights. As always, if you have any questions or feedback, please visit our forums and please remember to include the URL that is being evaluated.

Posted by Mushan Yang (杨沐杉) and Xiangyu Luo (罗翔宇), Software Engineers

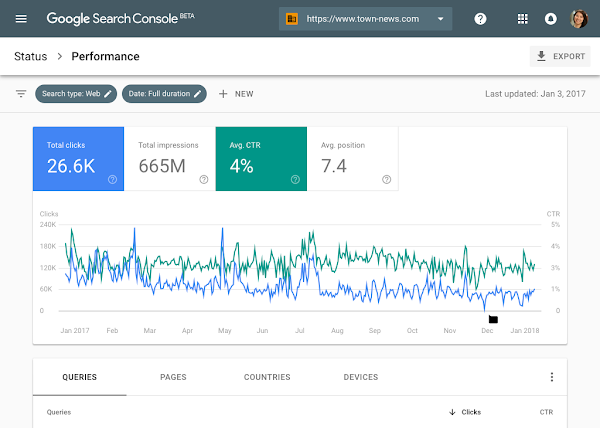

Introducing the new Search Console

A few months ago we released a beta version of a new Search Console experience to a limited number of users. We are now starting to release this beta version to all users of Search Console, so that everyone can explore this simplified process of optimizing a website’s presence on Google Search. The functionality will include Search performance, Index Coverage, AMP status, and Job posting reports. We will send a message once your site is ready in the new Search Console.

We started by adding some of the most popular functionality in the new Search Console (which can now be used in your day-to-day flow of addressing these topics). We are not done yet, so over the course of the year the new Search Console (beta) will continue to add functionality from the classic Search Console. Until the new Search Console is complete, both versions will live side-by-side and will be easily interconnected via links in the navigation bar, so you can use both.

The new Search Console was rebuilt from the ground up by surfacing the most actionable insights and creating an interaction model which guides you through the process of fixing any pending issues. We’ve also added the ability to share reports within your own organization in order to simplify internal collaboration.

Search Performance: with 16 months of data!

If you’ve been a fan of Search Analytics, you’ll love the new Search Performance report. Over the years, users have been consistent in asking us for more data in Search Analytics. With the new report, you’ll have 16 months of data, to make analyzing longer-term trends easier and enable year-over-year comparisons. In the near future, this data will also be available via the Search Console API.

Index Coverage: a comprehensive view on Google’s indexing

The updated Index Coverage report gives you insight into the indexing of URLs from your website. It shows correctly indexed URLs, warnings about potential issues, and reasons why Google isn’t indexing some URLs. The report is built on our new Issue tracking functionality that alerts you when new issues are detected and helps you monitor their fix.

So how does that work?

- When you drill down into a specific issue you will see a sample of URLs from your site. Clicking on error URLs brings up the page details with links to diagnostic tools that help you understand the source of the problem.

- Fixing Search issues often involves multiple teams within a company. Giving the right people access to information about the current status, or about issues that have come up there, is critical to improving an implementation quickly. Now, within most reports in the new Search Console, you can do that with the share button on top of the report which will create a shareable link to the report. Once things are resolved, you can disable sharing just as easily.

- The new Search Console can also help you confirm that you’ve resolved an issue, and help us to update our index accordingly. To do this, select a flagged issue, and click validate fix. Google will then crawl and reprocess the affected URLs with a higher priority, helping your site to get back on track faster than ever.

- The Index Coverage report works best for sites that submit sitemap files. Sitemap files are a great way to let search engines know about new and updated URLs. Once you’ve submitted a sitemap file, you can now use the sitemap filter over the Index Coverage data, so that you’re able to focus on an exact list of URLs.
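For sites that do not yet have a sitemap file, the following Python sketch builds a minimal one in the sitemaps.org XML format. The function name and the example URLs are hypothetical. Only the `loc` and `lastmod` elements are shown.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is a W3C date string such as "2018-01-08",
    or None to omit the lastmod element for that URL.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2018-01-08"),
        ("https://example.com/about", None),
    ]))
```

The resulting file can be saved as `sitemap.xml` and submitted, after which the sitemap filter described above narrows the report to exactly the URLs the file lists.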

Search Enhancements: improve your AMP and Job Postings pages

The new Search Console is also aimed at helping you implement Search Enhancements such as AMP and Job Postings (more to come). These reports provide details into the specific errors and warnings that Google identified for these topics. In addition to the functionality described in the index coverage report, we augmented the reports with two extra features:

- The first feature is aimed at providing faster feedback in the process of fixing an issue. This is achieved by running several instantaneous tests once you click the validate fix button. If your pages don’t pass this test we provide you with an immediate notification, otherwise we go ahead and reprocess the rest of the affected pages.

- The second new feature is aimed at providing positive feedback during the fix process by expanding the validation log with a list of URLs that were identified as fixed (in addition to URLs that failed the validation or might still be pending).
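The two features above amount to a two-stage validation with a log of outcomes. The Python sketch below models that flow purely for illustration. It is not how Search Console is implemented: the function name, the `sample_size` parameter, and the `is_fixed` callback are all hypothetical stand-ins.

```python
def validate_fix(affected_urls, is_fixed, sample_size=3):
    """Model the two-stage validation described above.

    affected_urls: URLs flagged for one issue.
    is_fixed: callable(url) -> bool, a stand-in for whatever test is run.
    sample_size: hypothetical number of pages tested immediately.
    """
    log = {"passed": [], "failed": [], "pending": []}
    sample, rest = affected_urls[:sample_size], affected_urls[sample_size:]

    # Stage 1: test a few pages right away for fast feedback.
    for url in sample:
        (log["passed"] if is_fixed(url) else log["failed"]).append(url)
    if log["failed"]:
        # Immediate notification: the remaining pages are not reprocessed yet.
        log["pending"] = list(rest)
        return "failed", log

    # Stage 2: the sample passed, so reprocess the rest of the affected pages.
    for url in rest:
        (log["passed"] if is_fixed(url) else log["failed"]).append(url)
    return ("passed" if not log["failed"] else "failed"), log
```

The returned log mirrors the expanded validation log: URLs identified as fixed, URLs that failed, and URLs still pending.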

Similar to the AMP report, the new Search Console provides a Job postings report. If you have job listings on your website, you may be eligible to have those shown directly through Google for Jobs (currently only available in certain locations).

Feedback welcome

We couldn’t have gotten so far without the ongoing feedback from our diligent trusted testers (we plan to share more on how their feedback helped us dramatically improve Search Console). However, your continued feedback is critical for us: if there’s something you find confusing or wrong, or if there’s something you really like, please let us know through the feedback feature in the sidebar. Also note that the mobile experience in the new Search Console is still a work in progress.

We want to end this post by sharing an encouraging response we got from a user who has been testing the new Search Console recently:

“The UX of new Search Console is clean and well laid out, everything is where we expect it to be. I can even kick-off validation of my fixes and get email notifications with the result. It’s been a massive help in fixing up some pesky AMP errors and warnings that were affecting pages on our site. On top of all this, the Search Analytics report now extends to 16 months of data which is a total game changer for us” – Noah Szubski, Chief Product Officer, DailyMail.com

Are there any other tools that would make your life as a webmaster easier? Let us know in the comments here, and feel free to jump into our webmaster help forums to discuss your ideas with others!

Posted by John Mueller, Ofir Roval and Hillel Maoz

Make your site’s complete jobs information accessible to job seekers

In June, we announced a new experience that put the convenience of Search into the hands of job seekers. Today, we are taking the next step in improving the job search experience on Google by adding a feature that shows estimated salary information from the web alongside job postings, as well as adding new UI features for users.

Salary information has been one of the most requested additions from job seekers. This helps people evaluate whether a job is a good fit, and is an opportunity for sites with estimated salary information to:

- Increase brand awareness: Estimated salary information shows a representative logo from the estimated salary provider.

- Get more referral traffic: Users can click through directly to salary estimate pages when salary information surfaces in job search results.

If your site provides salary estimates, you can take advantage of these changes in the following ways:

Specify actual salary information

Actual salary refers to the base salary information that is provided by the employer. If your site publishes job listings, you can add JobPosting structured data and populate the baseSalary property to be eligible for inclusion in job search results.

This salary information will be made available in both the list and the detail views.
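As a rough illustration, the Python sketch below emits JobPosting structured data as JSON-LD with the baseSalary property populated, using the schema.org MonetaryAmount and QuantitativeValue types. The function name and example values are hypothetical. Other properties a complete job posting needs (such as the description and posting date) are omitted for brevity.

```python
import json


def job_posting_with_salary(title, company, currency, amount, unit):
    """Return JobPosting JSON-LD with the baseSalary property populated."""
    data = {
        "@context": "https://schema.org",
        "@type": "JobPosting",
        "title": title,
        "hiringOrganization": {"@type": "Organization", "name": company},
        "baseSalary": {
            "@type": "MonetaryAmount",
            "currency": currency,
            "value": {
                "@type": "QuantitativeValue",
                "value": amount,
                "unitText": unit,  # e.g. "HOUR", "MONTH", "YEAR"
            },
        },
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(job_posting_with_salary("Barista", "Example Coffee", "USD", 18.5, "HOUR"))
```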

Provide estimated salary information

In cases where employers don’t provide actual salary, job seekers may see estimated salaries sourced from multiple partners for the same or similar occupation. If your site provides salary estimate information, you can add Occupation structured data to be eligible for inclusion in job search results.
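A salary estimate page might describe its data along the following lines. This is a sketch that assumes the estimate is expressed with the schema.org Occupation type and an estimatedSalary of type MonetaryAmountDistribution. The function name, the choice of percentiles, and the example figures are hypothetical.

```python
import json


def occupation_with_estimate(name, city, currency, p10, median, p90):
    """Return Occupation JSON-LD carrying a yearly salary estimate."""
    data = {
        "@context": "https://schema.org",
        "@type": "Occupation",
        "name": name,
        "occupationLocation": [{"@type": "City", "name": city}],
        "estimatedSalary": [
            {
                "@type": "MonetaryAmountDistribution",
                "name": "base",
                "currency": currency,
                "duration": "P1Y",  # ISO 8601 duration: figures are per year
                "percentile10": p10,
                "median": median,
                "percentile90": p90,
            }
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Made-up figures for illustration only.
    print(occupation_with_estimate(
        "Software Developer", "Mountain View", "USD", 90000, 120000, 150000
    ))
```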

Include exact location information

We’ve heard from users that having accurate, street-level location information helps them to focus on opportunities that work best for them. Sites that publish job listings can do this by using the jobLocation property in JobPosting structured data.
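A street-level jobLocation could look like the sketch below, which nests a schema.org PostalAddress inside a Place. The helper name, the listing, and the address are hypothetical placeholders.

```python
import json


def job_location(street, city, region, postal_code, country):
    """Return the jobLocation value for a JobPosting as a Python dict."""
    return {
        "@type": "Place",
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
    }


posting = {
    "@context": "https://schema.org",
    "@type": "JobPosting",
    "title": "Line Cook",  # hypothetical listing
    "jobLocation": job_location(
        "123 Example St", "Springfield", "IL", "62701", "US"
    ),
}
print(json.dumps(posting, indent=2))
```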

Validate your structured data

To double-check the structured data on your pages, we’ll be updating the Structured Data Testing Tool and the Search Console reports in the near future. In the meantime, you can monitor the performance of your job postings in Search Analytics. Stay tuned!

Since launching this summer, we’ve seen over 60% growth in the number of companies with jobs showing on Google and connected tens of millions of people to new job opportunities. We are excited to help users find jobs with salaries that meet their needs, and to route them to your site for more information. We invite sites that provide salary estimates to mark up their salary pages using the Occupation structured data. Should you have any questions regarding the use of structured data on your site, feel free to drop by our webmaster help forums.

Posted by Nick Zakrasek, Product Manager

The new Search Console: a sneak peek at two experimental features

Now we have decided to embark on an extensive redesign to better serve you, our users. Our hope is that this redesign will provide you with:

- More actionable insights – We will now group the identified issues by what we suspect is the common “root-cause” to help you find where you should fix your code. We organize these issues into tasks that have a state (similar to bug tracking systems) so you can easily see whether the issue is still open, whether Google has detected your fix, and track the progress of re-processing the affected pages.

- Better support of your organizational workflow – As we talked to many organizations, we’ve learned that multiple people are typically involved in implementing, diagnosing, and fixing issues. This is why we are introducing sharing functionality that allows you to pick-up an action item and share it with other people in your group, like developers who will get references to the code in question.

- Faster feedback loops between you and Google – We’ve built a mechanism to allow you to iterate quickly on your fixes, and not waste time waiting for Google to recrawl your site, only to tell you later that it’s not fixed yet. Rather, we’ll provide on-the-spot testing of fixes and automatically speed up crawling once we see things are OK. Similarly, the testing tools will include code snippets and a search preview – so you can quickly see where your issues are, confirm you’ve fixed them, and see how the pages will look on Search.

In the next few weeks, we’re releasing two exciting BETA features from the new Search Console to a small set of users — Index Coverage report and AMP fixing flow.

The new Index Coverage report shows the count of indexed pages, information about why some pages could not be indexed, along with example pages and tips on how to fix indexing issues. It also enables a simple sitemap submission flow, and the capability to filter all Index Coverage data to any of the submitted sitemaps.

Here’s a peek of our new Index Coverage report:

The new AMP fixing flow

The new AMP fixing experience starts with the AMP Issues report. This report shows the current AMP issues affecting your site, grouped by the underlying error. Drill down into an issue to get more details, including sample affected pages. After you fix the underlying issue, click a button to verify your fix, and have Google recrawl the pages affected by that issue. Google will notify you of the progress of the recrawl, and will update the report as your fixes are validated.

As we start to experiment with these new features, some users will be introduced to the new redesign through the coming weeks.

Posted by John Mueller and the Search Console Team

Search at I/O 16 Recap: Eight things you don’t want to miss

Cross-posted from the Google Developers Blog

Two weeks ago, over 7,000 developers descended upon Mountain View for this year’s Google I/O, with a takeaway that it’s truly an exciting time for Search. People come to Google billions of times per day to fulfill their daily information needs. We’re focused on creating features and tools that we believe will help users and publishers make the most of Search in today’s world. As Google continues to evolve and expand to new interfaces, such as the Google assistant and Google Home, we want to make it easy for publishers to integrate and grow with Google.

In case you didn’t have a chance to attend all our sessions, we put together a recap of all the Search happenings at I/O.

1: Introducing rich cards

We announced rich cards, a new Search result format building on rich snippets, that uses schema.org markup to display content in an even more engaging and visual format. Rich cards are available in English for recipes and movies and we’re excited to roll out for more content categories soon. To learn more, browse the new gallery with screenshots and code samples of each markup type or watch our rich cards devByte.
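As a rough illustration of the schema.org markup that rich cards build on, the Python sketch below emits JSON-LD for a recipe. The helper name `recipe_jsonld`, the sample values, and the choice of fields are assumptions made for this example; the gallery mentioned above remains the place to check which properties each card type needs.

```python
import json


def recipe_jsonld(name, image_url, author, ingredients):
    """Return a <script> tag holding schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "http://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": image_url,
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": list(ingredients),
    }
    # Escape "</" so a value can never close the surrounding <script> tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"
```

Calling `recipe_jsonld("Apple pie", "https://example.com/pie.jpg", "Jane Doe", ["apples", "flour"])` returns a block that can be placed in the page's HTML and then checked with the Structured Data Testing Tool.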

2: New Search Console reports

We want to make it easy for webmasters and developers to track and measure their performance in search results. We launched a new report in Search Console to help developers confirm that their rich card markup is valid. In the report we highlight “enhanceable cards,” which are cards that can benefit from marking up more fields. The new Search Appearance filter also makes it easy for webmasters to filter their traffic by AMP and rich cards.

3: Real-time indexing

Users are searching for more than recipes and movies: they’re often coming to Search to find fresh information about what’s happening right now. This insight kickstarted our efforts to use real-time indexing to connect users searching for real-time events with fresh content. Instead of waiting for content to be crawled and indexed, publishers will be able to use the Google Indexing API to trigger the indexing of their content in real time. It’s still in its early days, but we’re excited to launch a pilot later this summer.

4: Getting up to speed with Accelerated Mobile Pages

We provided an update on our use of AMP, an open source effort to speed up the mobile web. Google Search uses AMP to enable instant-loading content. Speed is important: over 40% of users abandon a page that takes more than three seconds to load. We announced that we’re bringing AMPed news carousels to the iOS and Android Google apps, as well as experimenting with combining AMP and rich cards. Stay tuned for more via our blog and GitHub page.

In addition to the sessions, attendees could talk directly with Googlers at the Search & AMP sandbox.

5: A new and improved Structured Data Testing Tool

We updated the popular Structured Data Testing Tool. The tool is now tightly integrated with the DevSite Search Gallery and the new Search Preview service, which lets you preview how your rich cards will look on the search results page.

6: App Indexing got a new home (and new features)

We announced App Indexing’s migration to Firebase, Google’s unified developer platform. Watch the session to learn how to grow your app with Firebase App Indexing.

7: App streaming

App streaming is a new way for Android users to try out games without having to download and install the app — and it’s already available in Google Search. Check out the session to learn more.

8: Revamped documentation

We also revamped our developer documentation, organizing our docs around topical guides to make it easier to follow.

Thanks to all who came to I/O. It’s always great to talk directly with developers and hear about experiences first-hand. And whether you came in person or tuned in from afar, let’s continue the conversation on the webmaster forum or during our office hours, hosted weekly via Hangouts On Air.

Posted by Fabian Schlup, Software Engineer

App deep linking with goo.gl

Starting now, goo.gl short links function as a single link you can use to all your content — whether that content is in your Android app, iOS app, or website. Once you’ve taken the necessary steps to set up App Indexing for Android and iOS, goo.gl URLs will send users straight to the right page in your app if they have it installed, and everyone else to your website. This will provide additional opportunities for your app users to re-engage with your app.

This feature works for both new short URLs and retroactively, so any existing goo.gl short links to your content will now also direct users to your app.

Share links that ‘do the right thing’

You can also make full use of this feature by integrating the URL Shortener API into your app’s share flow, so users can share links that automatically redirect to your native app cross-platform. This will also allow others to embed links in their websites and apps which deep link directly to your app.

Take Google Maps as an example. With the new cross-platform goo.gl links, the Maps share button generates one link that provides the best possible sharing experience for everyone. When opened, the link auto-detects the user’s platform and if they have Maps installed. If the user has the app installed, the short link opens the content directly in the Android or iOS Maps app. If the user doesn’t have the app installed or is on desktop, the short link opens the page on the Maps website.
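The routing behavior described here can be modeled in a few lines of Python. This is only a sketch of the decision as the post describes it, not how goo.gl is implemented; the function name `resolve_destination` and the example URIs are hypothetical.

```python
def resolve_destination(platform, app_installed, app_uri, web_url):
    """Pick where a cross-platform short link should send the user.

    platform: "android", "ios", or "desktop" (hypothetical labels).
    app_installed: whether the app is present on the user's device.
    """
    # Only mobile users who already have the app are deep linked into it.
    if platform in ("android", "ios") and app_installed:
        return app_uri
    # Everyone else, including all desktop users, lands on the website.
    return web_url
```

For example, `resolve_destination("ios", True, "exampleapp://place/123", "https://www.example.com/place/123")` returns the app URI, while the same call with `app_installed=False` or `platform="desktop"` returns the web URL.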

Try it out for yourself! Don’t forget to use a phone with the Google Maps app installed: http://goo.gl/maps/xlWFj.

How to set it up

To set up app deep linking on goo.gl:

- Complete the necessary steps to participate in App Indexing for Android and iOS at g.co/AppIndexing. Note that goo.gl deep links are open to all iOS developers, unlike deep links from Search currently. After this step, existing goo.gl short links will start deep linking to your app.

- Optionally integrate the URL Shortener API with your app’s share flow, your email campaigns, etc. to programmatically generate links that will deep link directly back to your app.

We hope you enjoy this new functionality and happy cross-platform sharing!

Posted by Fabian Schlup, Software Engineer

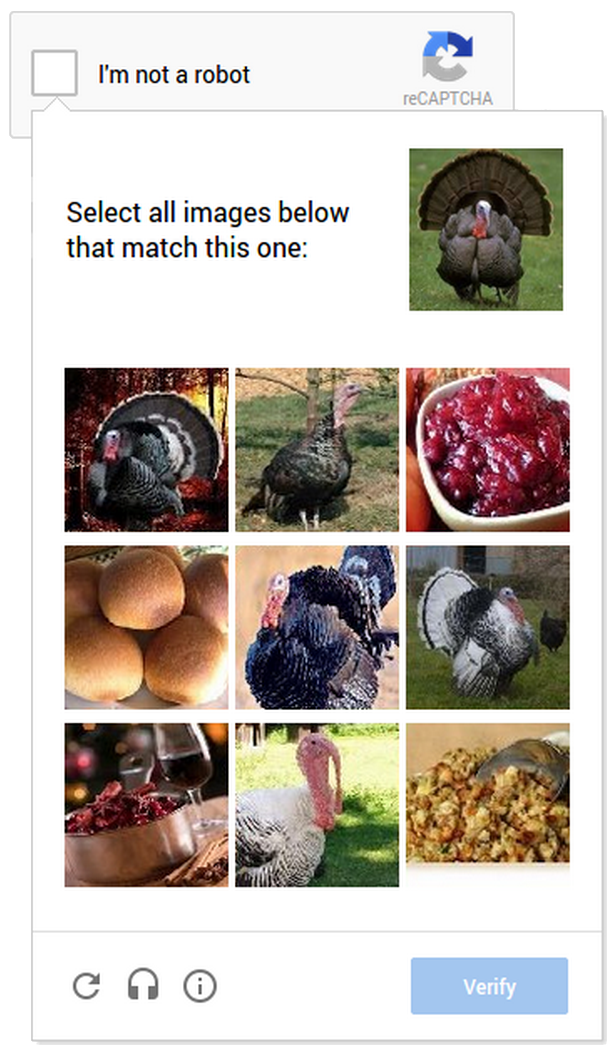

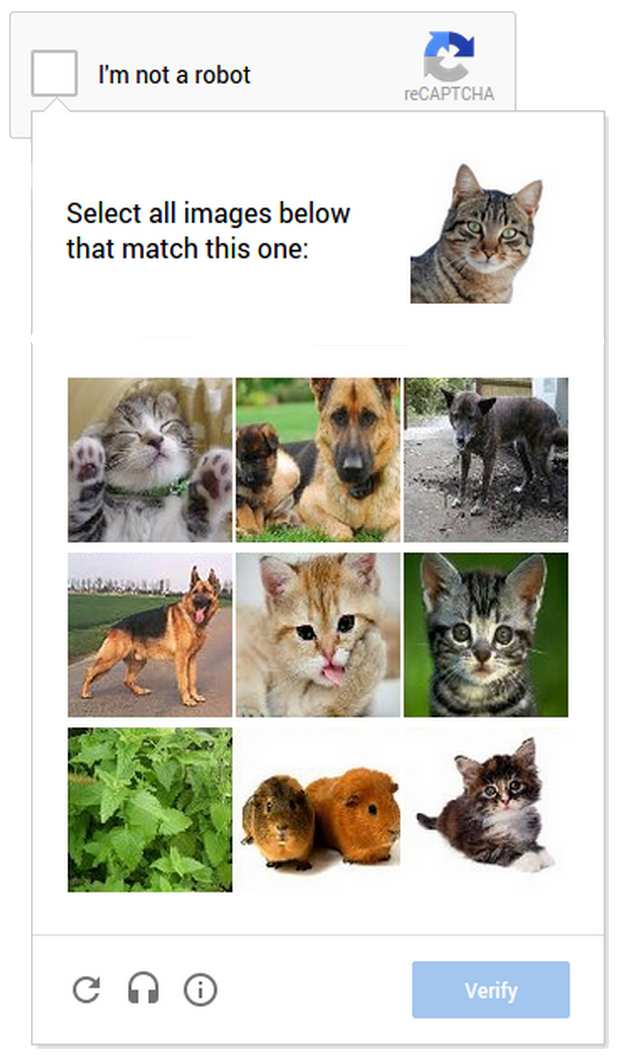

Are you a robot? Introducing “No CAPTCHA reCAPTCHA”

But, we figured it would be easier to just directly ask our users whether or not they are robots—so, we did! We’ve begun rolling out a new API that radically simplifies the reCAPTCHA experience. We’re calling it the “No CAPTCHA reCAPTCHA” and this is how it looks:

On websites using this new API, a significant number of users will be able to securely and easily verify they’re human without actually having to solve a CAPTCHA. Instead, with just a single click, they’ll confirm they are not a robot.

A brief history of CAPTCHAs

While the new reCAPTCHA API may sound simple, there is a high degree of sophistication behind that modest checkbox. CAPTCHAs have long relied on the inability of robots to solve distorted text. However, our research recently showed that today’s Artificial Intelligence technology can solve even the most difficult variant of distorted text at 99.8% accuracy. Thus distorted text, on its own, is no longer a dependable test.

To counter this, last year we developed an Advanced Risk Analysis backend for reCAPTCHA that actively considers a user’s entire engagement with the CAPTCHA—before, during, and after—to determine whether that user is a human. This enables us to rely less on typing distorted text and, in turn, offer a better experience for users. We talked about this in our Valentine’s Day post earlier this year.

The new API is the next step in this steady evolution. Now, humans can just check the box and in most cases, they’re through the challenge.

Are you sure you’re not a robot?

However, CAPTCHAs aren’t going away just yet. In cases when the risk analysis engine can’t confidently predict whether a user is a human or an abusive agent, it will prompt a CAPTCHA to elicit more cues, increasing the number of security checkpoints to confirm the user is valid.

Making reCAPTCHAs mobile-friendly

This new API also lets us experiment with new types of challenges that are easier for us humans to use, particularly on mobile devices. In the example below, you can see a CAPTCHA based on a classic Computer Vision problem of image labeling. In this version of the CAPTCHA challenge, you’re asked to select all of the images that correspond with the clue. It’s much easier to tap photos of cats or turkeys than to tediously type a line of distorted text on your phone.

As more websites adopt the new API, more people will see “No CAPTCHA reCAPTCHAs”. Early adopters, like Snapchat, WordPress, Humble Bundle, and several others are already seeing great results with this new API. For example, in the last week, more than 60% of WordPress’ traffic and more than 80% of Humble Bundle’s traffic on reCAPTCHA encountered the No CAPTCHA experience—users got to these sites faster. To adopt the new reCAPTCHA for your website, visit our site to learn more.

Humans, we’ll continue our work to keep the Internet safe and easy to use. Abusive bots and scripts, it’ll only get worse—sorry we’re (still) not sorry.

Posted by Vinay Shet, Product Manager, reCAPTCHA

App Indexing in more languages

In April, we launched App Indexing in English globally so deep links to your mobile apps could appear in Google Search results on Android everywhere. Today, we’re adding the first publishers with content in other languages: Fairfax Domain, MercadoLibre, Letras.Mus.br, Vagalume, Idealo, L’Equipe, Player.fm, Upcoming, Au Feminin, Marmiton, and chip.de. In the U.S., we now also support some more apps — Walmart, Tapatalk, and Fancy.

We’ve also translated our developer guidelines into eight additional languages: Chinese (Traditional), French, German, Italian, Japanese, Brazilian Portuguese, Russian, and Spanish.

If you’re interested in participating in App Indexing, and your content and implementation are ready, please let us know by filling out this form. As always, you can ask questions on the mobile section of our webmaster forum.

Finally, if you’re headed to Google I/O in June, be sure to check out the session on the “Future of Apps and Search”, where we’ll share some more updates on App Indexing.

Posted by Erik Hendriks, Software Engineer

App Engine IP Range Change Notice

Google uses a wide range of IP addresses for its different services, and the addresses may change without notification. Google App Engine is a Platform as a Service offering which hosts a wide variety of 3rd party applications. This post announces changes in the IP address range and headers used by the Google App Engine URLFetch (outbound HTTP) and outbound sockets APIs.

While we recommend that App Engine IP ranges not be used to filter inbound requests, we are aware that some services have created filters that rely on specific addresses. Google App Engine will be changing its IP range beginning this month. Please see these instructions to determine App Engine’s IP range.

Additionally, the HTTP User-Agent header string that historically allowed identification of individual App Engine applications should no longer be relied on to identify the application. With the introduction of outbound sockets for App Engine, applications may now make HTTP requests without using the URLFetch API, and those requests may set a User-Agent of their own choosing.

Posted by the Google App Engine Team

Introducing website satisfaction by Google Consumer Surveys

Webmaster level: all

We’re now offering webmasters an easy and free way to collect feedback from website visitors with website satisfaction surveys. All you have to do is paste a small snippet of code into the HTML for your website, and it will load a discreet satisfaction survey in the lower right-hand corner of your website. Google automatically aggregates and analyzes responses, providing the data back to you through a simple online interface.

Users will be asked to complete a four-question satisfaction survey. Surveys will run until they have received 500 responses and will start again after 30 days so you can track responses over time. This is currently limited to US English visitors on non-mobile devices. The default questions are free and you can customize questions for just $0.01 per response or $5.00 for 500 responses.

Survey Setup and Code Placement Tips

To set up the survey code, you’ll need to have access to the source code for your website.

- Sign in to Google Consumer Surveys for website satisfaction to find the code snippet.

- You have the option to enter the website name and URL, survey timing, and survey frequency.

- Click on the “Activate survey” button when ready.

- Once you find the code snippet on top of the setup page, copy and paste it into your web page, just before the closing </head> tag. If your website uses templates to generate pages, enter it just before the closing </head> tag in the file that contains the <head> section.
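If your pages are generated from a template file, the placement step above can be scripted. The sketch below is one possible approach, assuming the template is available as a string; the function name `insert_before_head_close` is hypothetical, and the snippet you pass in should be the one copied from your own setup page.

```python
import re


def insert_before_head_close(html, snippet):
    """Insert `snippet` immediately before the first closing </head> tag."""
    # Match </head> case-insensitively, tolerating whitespace before ">".
    match = re.search(r"</head\s*>", html, re.IGNORECASE)
    if match is None:
        raise ValueError("no closing </head> tag found")
    index = match.start()
    return html[:index] + snippet + "\n" + html[index:]
```

Raising an error when no `</head>` tag is found avoids silently writing a template that never loads the survey.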

If you have any questions, please read our Help Center article to learn more.

Posted by Marisa Currie-Rose

Answering the top questions from government webmasters

Webmaster level: Beginner – Intermediate

Government sites, from city to state to federal agencies, are extremely important to Google Search. For one thing, governments have a lot of content — and government websites are often the canonical source of information that’s important to citizens. Around 20 percent of Google searches are for local information, and local governments are experts in their communities.

That’s why I’ve spoken at the National Association of Government Webmasters (NAGW) national conference for the past few years. It’s always interesting speaking to webmasters about search, but the people running government websites have particular concerns and questions. Since some questions come up frequently I thought I’d share this FAQ for government websites.

Question 1: How do I fix an incorrect phone number or address in search results or Google Maps?

Although managing their agency’s site is plenty of work, government webmasters are often called upon to fix problems found elsewhere on the web too. By far the most common question I’ve taken is about fixing addresses and phone numbers in search results. In this case, government site owners really can do it themselves, by claiming their Google+ Local listing. Incorrect or missing phone numbers, addresses, and other information can be fixed by claiming the listing.

Most locations in Google Maps have a Google+ Local listing — businesses, offices, parks, landmarks, etc. I like to use the San Francisco Main Library as an example: it has contact info, detailed information like the hours they’re open, user reviews and fun extras like photos. When we think users are searching for libraries in San Francisco, we may display a map and a listing so they can find the library as quickly as possible.

If you work for a government agency and want to claim a listing, we recommend using a shared Google Account with an email address at your .gov domain if possible. Usually, ownership of the page is confirmed via a phone call or postcard.

Question 2: I’ve claimed the listing for our office, but I have 43 different city parks to claim in Google Maps, and none of them have phones or mailboxes. How do I claim them?

Use the bulk uploader! If you have 10 or more listings / addresses to claim at the same time, you can upload a specially-formatted spreadsheet. Go to www.google.com/places/, click the “Get started now” button, and then look for the “bulk upload” link.

If you run into any issues, use the Verification Troubleshooter.

Question 3: We’re moving from a .gov domain to a new .com domain. How should we move the site?

We have a Help Center article with more details, but the basic process involves the following steps:

- Make sure you have both the old and new domain verified in the same Webmaster Tools account.

- Use a 301 redirect on all pages to tell search engines your site has moved permanently.

- Don’t do a single redirect from all pages to your new home page — this gives a bad user experience.

- A 1:1 match between pages on your old site and your new site is recommended. Where there’s no match, try to redirect to a new page with similar content.

- If you can’t do redirects, consider cross-domain canonical links.

- Make sure to check if the new location is crawlable by Googlebot using the Fetch as Google feature in Webmaster Tools.

- Use the Change of Address tool in Webmaster Tools to notify Google of your site’s move.

- Have a look at the Links to Your Site in Webmaster Tools and inform the important sites that link to your content about your new location.

- We recommend not implementing other major changes at the same time, like large-scale content, URL structure, or navigational updates.

- To help Google pick up new URLs faster, use the Fetch as Google tool to ask Google to crawl your new site, and submit a Sitemap listing the URLs on your new site.

- To prevent confusion, it’s best to retain control of your old site’s domain and keep redirects in place for as long as possible — at least 180 days.
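The redirect advice in the steps above (a 301 for every page, each pointing at its closest equivalent rather than at the home page) can be sketched as a small mapping function. The host names, paths, and the name `redirect_for` are hypothetical; in practice this logic usually lives in your web server or CMS configuration.

```python
from urllib.parse import urlsplit

# Hypothetical new host, for illustration only.
NEW_HOST = "https://www.example.com"

# Pages whose path changed in the move; all other pages keep their path.
MOVED_PATHS = {
    "/parks/list.html": "/parks/",
    "/contact-us.html": "/contact/",
}


def redirect_for(old_url):
    """Return (status, location) for a request that hit the old domain."""
    parts = urlsplit(old_url)
    old_path = parts.path or "/"
    # Map page to page instead of sending every visitor to the home page.
    new_path = MOVED_PATHS.get(old_path, old_path)
    location = NEW_HOST + new_path
    if parts.query:
        location += "?" + parts.query
    # 301 tells search engines the move is permanent.
    return 301, location
```

With this table, `redirect_for("http://www.example.gov/parks/list.html")` returns `(301, "https://www.example.com/parks/")`, and unmapped pages are redirected to the same path on the new host.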

What if you’re moving just part of the site? This question came up too — for example, a city might move its “Tourism and Visitor Info” section to its own domain.

In that case, many of the same steps apply: verify both sites in Webmaster Tools, use 301 redirects, clean up old links, etc. In this case you don’t need to use the Change of Address form in Webmaster Tools since only part of your site is moving. If for some reason you’ll have some of the same content on both sites, you may want to include a cross-domain canonical link pointing to the preferred domain.
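A cross-domain canonical link is a single `<link>` element in the `<head>` of the duplicate page that points at the preferred URL. A minimal sketch for generating one, assuming a hypothetical helper named `canonical_link` and an example URL:

```python
from html import escape


def canonical_link(preferred_url):
    """Build the <link rel="canonical"> element for the duplicate page's <head>."""
    # Escape the URL so characters like & and " stay valid inside the attribute.
    return '<link rel="canonical" href="%s">' % escape(preferred_url, quote=True)
```

For instance, `canonical_link("https://visit.example.com/events")` produces `<link rel="canonical" href="https://visit.example.com/events">`.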

Question 4: We’ve done a ton of work to create unique titles and descriptions for pages. How do we get Google to pick them up?

First off, that’s great! Better titles and descriptions help users decide to click through to get the information they need on your page. The government webmasters I’ve spoken with care a lot about the content and organization of their sites, and work hard to provide informative text for users.

Google’s generation of page titles and descriptions (or “snippets”) is completely automated and takes into account both the content of a page as well as references to it that appear on the web. Changes are picked up as we recrawl your site. But you can do two things to let us know about URLs that have changed:

- Submit an updated XML Sitemap so we know about all of the pages on your site.

- In Webmaster Tools, use the Fetch as Google feature on a URL you’ve updated, then choose to submit it to the index. You can also choose to submit all of the linked pages: if you’ve updated an entire section of your site, you might want to submit the main page or an index page for that section to let us know about a broad collection of URLs.
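For the Sitemap option, a basic XML Sitemap can be generated with Python's standard library. This is a minimal sketch under the sitemaps.org 0.9 schema; the function name `build_sitemap` and the example URLs are assumptions, and a real site would feed in its own list of updated pages.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a Sitemap from (url, lastmod) pairs.

    lastmod is a YYYY-MM-DD string, or None to omit the element.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod signals which pages changed since the last crawl.
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Setting `lastmod` on pages whose titles or descriptions changed is one way to flag them when you resubmit the file.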

Question 5: How do I get into the YouTube government partner program?

For this question, I have bad news, good news, and then even better news. On the one hand, the government partner program has been discontinued. But don’t worry, because most of the features of the program are now available to your regular YouTube account. For example, you can now upload videos longer than 10 minutes.

Did I say I had even better news? YouTube has added a lot of functionality useful for governments in the past year:

- You can now broadcast live streaming video to YouTube via Hangouts On Air (requires a Google+ account).

- You can link your YouTube account with your Webmaster Tools account, making it the “official channel” for your site.

- Automatic captions continue to get better and better, supporting more languages.

I hope this FAQ has been helpful, but I’m sure I haven’t covered everything government webmasters want to know. I highly recommend our Webmaster Academy, where you can learn all about making your site search-engine friendly. If you have a specific question, please feel free to add a question in the comments or visit our really helpful Webmaster Central Forum.

Posted by Jason Morrison, Search Quality Team