How we fought Search spam on Google – Webspam Report 2019

Video Series for New Webmasters: Search for Beginners!

We are excited to introduce our newest video series: “Search For Beginners”! The series was created primarily to help new webmasters. It is also for anyone with an interest in Search or anyone who is still learning about the Web and how to manage their online presence.

We love to see the webmaster community grow! Every day, countless new webmasters are taking their first steps in learning how Search works and how to make their websites perform well and be discoverable on Search. We understand that it can sometimes be challenging, or even overwhelming, to start with our existing content without some prior knowledge or a basic understanding of the Web. Our basic videos are the most-viewed ones on our YouTube channels. At the same time, advanced webmasters also see the need for content that can be sent to clients or stakeholders to help explain important concepts in managing an online presence.

We want to help all webmasters succeed, whether you have been managing websites for many years or just started out yesterday. We want to do more to help new webmasters, and we hope this video series will help us achieve that.

Introduction to the series:

Episode 1:

The “Search For Beginners” video series covers basic online presence topics, ranging from ‘Do you need a website?’ and ‘What are the goals for your website?’ to more organic search-related topics such as ‘How does Google Search work?’, ‘How to change description line’, and ‘How to change wrong address information on Google’. We get asked these questions frequently in forums, on social channels, and at events around the world! The videos are fully animated and in English, with subtitles available in Spanish, Portuguese, Korean, Chinese, Indonesian, Italian, Japanese, and English. We are working on more, so please stay tuned!

And if you consider yourself a more experienced user, please feel free to use these videos to support your pitches or to explain things to your clients. If you want to share any ideas or learnings, please leave them in the comment section of each video so that others can benefit from your knowledge and experience.

Follow us on Twitter and subscribe on YouTube for the upcoming videos! We will be adding new videos in this series to this playlist about every two weeks!

Posted by Cherry Prommawin, Search Quality Analyst

This year in Search Spam – Webspam report 2018

Google webspam trends and how we fought webspam in 2018

Working with users, webmasters and developers for a better web

Help Google Search know the best date for your web page

Sometimes, Google shows dates next to listings in its search results. In this post, we’ll answer some commonly-asked questions webmasters have about how these dates are determined and provide some best practices to help improve their accuracy.

How dates are determined

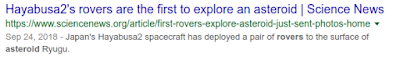

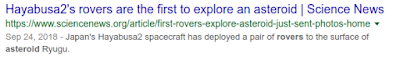

Google shows the date of a page when its automated systems determine that it would be relevant to do so, such as for pages that can be time-sensitive, including news content:

Google determines a date using a variety of factors, including but not limited to: any prominent date listed on the page itself or dates provided by the publisher through structured markup.

Google doesn’t depend on one single factor because all of them can be prone to issues. Publishers may not always provide a clear visible date. Sometimes, structured data may be lacking or may not be adjusted to the correct time zone. That’s why our systems look at several factors to come up with what we consider to be our best estimate of when a page was published or significantly updated.

How to specify a date on a page

To help Google pick the right date, site owners and publishers should:

- Show a clear date: Show a visible date prominently on the page.

- Use structured data: Use the datePublished and dateModified schema with the correct time zone designator for AMP or non-AMP pages. When using structured data, make sure to use the ISO 8601 format for dates.
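As a minimal sketch of the structured data recommendation above, the Python below serializes article markup as JSON-LD with datePublished and dateModified in ISO 8601 form, including a time zone designator. The `article_jsonld` helper, the headline, and the dates are hypothetical, the markup is trimmed to the date-related properties (a real article object carries more), and it assumes you already know the correct UTC offset for each moment. Python's `zoneinfo` module can supply that offset, daylight saving shifts included, from an IANA zone name.

```python
import json
from datetime import datetime, timedelta, timezone


def article_jsonld(headline: str, published: datetime, modified: datetime) -> str:
    """Serialize minimal, hypothetical NewsArticle markup with ISO 8601 dates."""
    for moment in (published, modified):
        # A naive datetime would serialize without an offset, leaving the
        # time zone ambiguous, so insist on timezone-aware values.
        if moment.tzinfo is None or moment.utcoffset() is None:
            raise ValueError("dates must carry a time zone")
    if modified < published:
        raise ValueError("dateModified must not precede datePublished")
    markup = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        # isoformat() on an aware datetime yields e.g. 2019-03-11T09:00:00+01:00
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(markup, indent=2)


# UTC+1, e.g. Central European Time in winter; in summer the offset is +02:00.
cet = timezone(timedelta(hours=1))
snippet = article_jsonld(
    "Example headline",
    published=datetime(2019, 3, 11, 9, 0, tzinfo=cet),
    modified=datetime(2019, 3, 12, 14, 30, tzinfo=cet),
)
print('<script type="application/ld+json">')
print(snippet)
print("</script>")
```

Rejecting naive datetimes is a deliberate choice: silently serializing them would produce dates without a time zone designator, which is exactly the ambiguity the guidance warns about.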

Guidelines specific to Google News

Google News requires clearly showing both the date and the time that content was published or updated. Structured data alone is not enough, though we recommend using it in addition to a visible date and time. Date and time should be positioned between the headline and the article text. For more guidance, also see our help page about article dates.

If an article has been substantially changed, it can make sense to give it a fresh date and time. However, don’t artificially freshen a story without adding significant information or some other compelling reason for the freshening. Also, do not create a very slightly updated story from one previously published, then delete the old story and redirect to the new one. That’s against our article URLs guidelines.

More best practices for dates on web pages

In addition to the most important requirements listed above, here are additional best practices to help Google determine the best date to consider showing for a web page:

- Show when a page has been updated: If you update a page significantly, also update the visible date (and time, if you display that). If desired, you can show two dates: when a page was originally published and when it was updated. Just do so in a way that’s visually clear to your readers. If showing both dates, it’s also highly recommended to use datePublished and dateModified for AMP or non-AMP pages to make it easier for algorithms to recognize them.

- Use the right time zone: If specifying a time, make sure to provide the correct time zone, taking into account daylight saving time as appropriate.

- Be consistent in usage: Within a page, make sure to use exactly the same date (and, potentially, time) in structured data as in the visible part of the page. If you specify a time zone on the page, use the same one in both places.

- Don’t use future dates or dates related to what a page is about: Always use a date for when a page itself was published or updated, not a date linked to something like an event that the page is writing about, especially for events or other subjects that happen in the future (you may use Event markup separately, if appropriate).

- Follow Google’s structured data guidelines: While Google doesn’t guarantee that a date (or structured data in general) specified on a page will be used, following our structured data guidelines does help our algorithms to have it available in a machine-readable way.

- Troubleshoot by minimizing other dates on the page: If you’ve followed the best practices above and find incorrect dates are being selected, consider if you can remove or minimize other dates that may appear on the page, such as those that might be next to related stories.
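The consistency, time zone, and no-future-dates practices above can be checked mechanically before publishing. The following is a minimal sketch under assumptions: `date_problems` is a hypothetical helper, the page is assumed to show its publication date as plain text without an offset in a known format, and the time zone the page uses is passed in explicitly.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone, tzinfo


def date_problems(visible: str, visible_format: str, page_tz: tzinfo,
                  date_published: str, date_modified: str,
                  now: datetime) -> list[str]:
    """List inconsistencies between a page's visible date and its structured data.

    `now` must be timezone-aware so it can be compared with the parsed dates.
    """
    # The visible text carries no offset, so attach the zone the page uses.
    shown = datetime.strptime(visible, visible_format).replace(tzinfo=page_tz)
    published = datetime.fromisoformat(date_published)
    modified = datetime.fromisoformat(date_modified)
    if published.tzinfo is None or modified.tzinfo is None:
        return ["structured data dates lack a time zone designator"]
    problems = []
    if shown != published:
        problems.append("visible date and datePublished are different moments")
    elif shown.utcoffset() != published.utcoffset():
        # Same instant, but expressed in a different zone than the page uses.
        problems.append("visible date and datePublished use different time zones")
    if modified < published:
        problems.append("dateModified precedes datePublished")
    if max(published, modified) > now:
        problems.append("a date lies in the future")
    return problems


cet = timezone(timedelta(hours=1))  # hypothetical page zone, UTC+1
print(date_problems("2019-03-11 09:00", "%Y-%m-%d %H:%M", cet,
                    "2019-03-11T08:00:00+00:00", "2019-03-12T14:30:00+01:00",
                    now=datetime(2019, 4, 1, tzinfo=timezone.utc)))
# prints ['visible date and datePublished use different time zones']
```

The example flags a case that a simple "same moment?" test would miss: 08:00 UTC and 09:00 UTC+1 are the same instant, but the structured data no longer uses the time zone shown on the page.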

We hope these guidelines help to make it easier to specify the right date on your website’s pages! For questions or comments on this, or other structured data topics, feel free to drop by our webmaster help forums.

Posted by John Mueller, Developer Advocate, Zurich

Notifying users of unclear subscription pages

Unclear mobile subscriptions

Clearer billing information for Chrome users

- Is the billing information visible and obvious to users? For example, adding no subscription information on the subscription page or hiding the information is a bad start because users should have access to the information when agreeing to subscribe.

- Can customers easily see the costs they’re going to incur before accepting the terms? For example, displaying the billing information in grey characters over a grey background, therefore making it less readable, is not considered a good user practice.

- Is the fee structure easily understandable? For example, the formula presented to explain how the cost of the service will be determined should be as simple and straightforward as possible.

If your billing service takes users through a clearly visible and understandable billing process as described in our best practices, you don’t need to make any changes. Also, the new warning in Chrome doesn’t impact your website’s ranking in Google Search.

How we fought webspam – Webspam Report 2017

We always want to make sure that when you use Google Search to find information, you get the highest quality results. But we are aware of many bad actors who try to manipulate search rankings and profit from it, which is at odds with our core mission: to organize the world’s information and make it universally accessible and useful. Over the years, we’ve devoted a huge effort to combating abuse and spam on Search. Here’s a look at how we fought abuse in 2017.

We call the various types of abuse that violate the webmaster guidelines “spam.” Our evaluation indicated that for many years, less than 1 percent of the search results users visited were spammy. In the last couple of years, we’ve managed to reduce this further by half.

Google webspam trends and how we fought webspam in 2017

Another abuse vector is the manipulation of links, which are one of the foundational ranking signals for Search. In 2017, we doubled down on our efforts to remove unnatural links via ranking improvements and scalable manual actions. We observed a year-over-year reduction in spam links of almost half.

Working with users and webmasters for a better web

We also actively work with webmasters to maintain the health of the web ecosystem. Last year, we sent 45 million messages to registered website owners via Search Console, letting them know about issues we identified with their websites. More than 6 million of these messages were related to manual actions, providing transparency to webmasters so they understand why their sites received manual actions and how to resolve the issues.

Last year, we released a beta version of a new Search Console to a limited number of users and afterwards, to all users of Search Console. We listened to what matters most to the users, and started with popular functionalities such as Search performance, Index Coverage and others. These can help webmasters optimize their websites’ Google Search presence more easily.

Through enhanced Safe Browsing protections, we continue to protect more users from bad actors online. In the last year, we made significant improvements to our Safe Browsing protection, such as broadening our protection of macOS devices, enabling predictive phishing protection in Chrome, cracking down on mobile unwanted software, and significantly improving our ability to protect users from deceptive Chrome extension installations.

We have a multitude of channels to engage directly with webmasters. We have dedicated team members who meet with webmasters regularly both online and in-person. We conducted more than 250 online office hours, online events and offline events around the world in more than 60 cities to audiences totaling over 220,000 website owners, webmasters and digital marketers. In addition, our official support forum has answered a high volume of questions in many languages. Last year, the forum had 63,000 threads generating over 280,000 contributing posts by 100+ Top Contributors globally. For more details, see this post. Apart from the forums, blogs and the SEO starter guide, the Google Webmaster YouTube channel is another channel to find more tips and insights. We launched a new SEO snippets video series to help with short and to-the-point answers to specific questions. Be sure to subscribe to the channel!

Despite all these improvements, we know we’re not done yet. We’re relentless in our pursuit of an abuse-free user experience and will keep improving our collaboration with the ecosystem to make it happen.

Our goal: helping webmasters and content creators

- Google Web Fundamentals: Provides technical guidance on building a modern website that takes advantage of open web standards.

- Google Search developer documentation: Describes how Google crawls and indexes a website. Includes authoritative guidance on building a site that is optimized for Google Search.

- Search Console Help Center: Provides detailed information on how to use and take advantage of Search Console, the best way for a website owner to understand how Google sees their site.

- The Search Engine Optimization (SEO) Starter Guide: Provides a complete overview of the basics of SEO according to our recommended best practices.

- Google webmaster guidelines: Describes policies and practices that may lead to a site being removed entirely from the Google index or otherwise affected by an algorithmic or manual spam action that can negatively affect its Search appearance.

- Google Webmasters YouTube Channel

Send your recipes to the Google Assistant

Last year, we launched Google Home with recipe guidance, providing users with step-by-step instructions for cooking recipes. With more people using Google Home every day, we’re publishing new guidelines so your recipes can support this voice guided ex…

We updated our job posting guidelines

Last year, we launched job search on Google to connect more people with jobs. When you provide Job Posting structured data, it helps drive more relevant traffic to your page by connecting job seekers with your content. To ensure that job seekers are getting the best possible experience, it’s important to follow our Job Posting guidelines.

We’ve recently made some changes to our Job Posting guidelines to help improve the job seeker experience.

- Remove expired jobs

- Place structured data on the job’s detail page

- Make sure all job details are present in the job description

Remove expired jobs

When job seekers put in effort to find a job and apply, it can be very discouraging to discover that the job that they wanted is no longer available. Sometimes, job seekers only discover that the job posting is expired after deciding to apply for the job. Removing expired jobs from your site may drive more traffic because job seekers are more confident when jobs that they visit on your site are still open for application. For more information on how to remove a job posting, see Remove a job posting.
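One way to keep expired listings out of your markup is sketched below in Python, under stated assumptions: the `Job` record and helper names are hypothetical, a job counts as expired once it is filled or its closing date has passed, and the markup sets the schema.org validThrough property when a closing date is known. Other properties the Job Posting documentation calls for (for example hiringOrganization and jobLocation) are left out for brevity, and dropping the markup is only part of the cleanup; see Remove a job posting for how expired postings should be handled.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Job:
    """Hypothetical in-house job record; field names are illustrative."""
    title: str
    description: str
    date_posted: datetime
    valid_through: datetime | None = None  # None means no known closing date
    filled: bool = False


def is_open(job: Job, now: datetime) -> bool:
    """A job is open if it isn't filled and its closing date hasn't passed."""
    if job.filled:
        return False
    return job.valid_through is None or job.valid_through > now


def job_posting_jsonld(job: Job) -> dict:
    """Partial JobPosting markup (required properties omitted for brevity)."""
    markup = {
        "@context": "https://schema.org/",
        "@type": "JobPosting",
        "title": job.title,
        "description": job.description,
        "datePosted": job.date_posted.date().isoformat(),
    }
    if job.valid_through is not None:
        markup["validThrough"] = job.valid_through.isoformat()
    return markup


def publishable(jobs: list[Job], now: datetime) -> list[dict]:
    """Emit markup only for jobs that are still open for application."""
    return [job_posting_jsonld(job) for job in jobs if is_open(job, now)]
```

Passing `now` explicitly keeps the filter deterministic and easy to test; a scheduled job could call `publishable(jobs, datetime.now(timezone.utc))` to rebuild the markup regularly.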

Place structured data on the job’s detail page

Job seekers find it confusing when they land on a list of jobs instead of the specific job’s detail page. To fix this, put structured data on the most detailed leaf page possible. Don’t add structured data to pages intended to present a list of jobs (for example, search result pages); only add it to the most specific page describing a single job with its relevant details.

Make sure all job details are present in the job description

We’ve also noticed that some sites include information in the JobPosting structured data that is not present anywhere in the job posting. Job seekers are confused when the job details they see in Google Search don’t match the job description page. Make sure that the information in the JobPosting structured data always matches what’s on the job posting page. Here are some examples:

- If you add salary information to the structured data, then also add it to the job posting. Both salary figures should match.

- The location in the structured data should match the location in the job posting.
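The two examples above can be turned into an automated check. This is a minimal sketch, assuming the visible salary and city have already been extracted from the job posting page into a plain dictionary (the `page` keys and the `job_mismatches` helper are hypothetical) and that the markup uses a single jobLocation object rather than a list. It follows the schema.org nesting of baseSalary (a MonetaryAmount whose value is a QuantitativeValue) and jobLocation (a Place with a PostalAddress).

```python
from __future__ import annotations


def job_mismatches(markup: dict, page: dict) -> list[str]:
    """Compare JobPosting markup with details shown on the job's own page."""
    problems = []
    salary = markup.get("baseSalary", {}).get("value", {}).get("value")
    # A salary in the markup must also appear, with the same figure, on the page.
    if salary is not None and salary != page.get("salary"):
        problems.append(f"salary {salary} in markup but {page.get('salary')} on page")
    locality = (markup.get("jobLocation", {})
                .get("address", {})
                .get("addressLocality"))
    if locality is not None and locality != page.get("city"):
        problems.append(f"location {locality} in markup but {page.get('city')} on page")
    return problems


markup = {
    "@type": "JobPosting",
    "baseSalary": {"@type": "MonetaryAmount", "currency": "USD",
                   "value": {"@type": "QuantitativeValue", "value": 40.0,
                             "unitText": "HOUR"}},
    "jobLocation": {"@type": "Place",
                    "address": {"@type": "PostalAddress",
                                "addressLocality": "Dublin"}},
}
print(job_mismatches(markup, {"salary": 40.0, "city": "Dublin"}))  # prints []
```

A missing salary on the page counts as a mismatch here, matching the guidance that salary in the structured data should also be added to the job posting.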

Providing structured data content that is consistent with the content of the job posting pages not only helps job seekers find the exact job that they were looking for, but may also drive more relevant traffic to your job postings and therefore increase the chances of finding the right candidates for your jobs.

If your site violates the Job Posting guidelines (including the guidelines in this blog post), we may take manual action against your site and it may not be eligible for display in the jobs experience on Google Search. You can submit a reconsideration request to let us know that you have fixed the problem(s) identified in the manual action notification. If your request is approved, the manual action will be removed from your site or page.

For more information, visit our Job Posting developer documentation and our JobPosting FAQ.

Posted by Anouar Bendahou, Trust & Safety Search Team

A reminder about links in large-scale article campaigns

- Stuffing keyword-rich links to your site in your articles

- Having the articles published across many different sites; alternatively, having a large number of articles on a few large, different sites

- Using or hiring article writers that aren’t knowledgeable about the topics they’re writing on

- Using the same or similar content across these articles; alternatively, duplicating the full content of articles found on your own site (in which case use of rel="canonical", in addition to rel="nofollow", is advised)

Protect your site from user generated spam

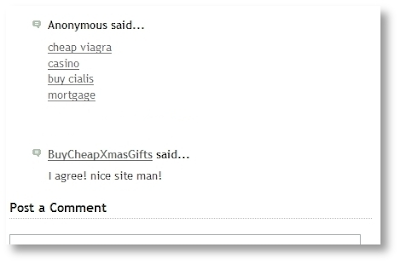

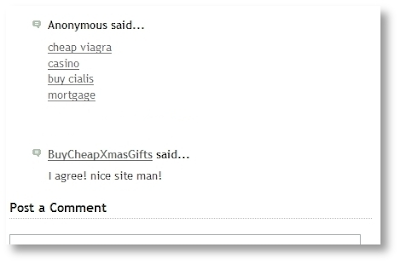

As a website owner, you might have come across some auto-generated content in comments sections or forum threads. When such content is created on your pages, not only does it disrupt those visiting your site, but it also shows some content that you may not want to be associated with your site to Google and other search engines.

In this blog post, we will give you tips to help you deal with this type of spam in your site and forum.

Some spammers abuse sites owned by others by posting deceiving content and links, in an attempt to get more traffic to their sites. Here are a few examples:

Comments and forum threads can be a really good source of information and an efficient way of engaging a site’s users in discussions. This valuable content should not be buried by auto-generated keywords and links placed there by spammers.

There are many ways of securing your site’s forums and comment threads and making them unattractive to spammers:

- Keep your forum software updated and patched. Take the time to keep your software up-to-date and pay special attention to important security updates. Spammers take advantage of security issues in older versions of blogs, bulletin boards, and other content management systems.

- Add a CAPTCHA. CAPTCHAs require users to prove they’re a human being and not an automated script. One way to do this is to use a service such as reCAPTCHA, Securimage, or Jcaptcha.

- Block suspicious behavior. Many forums allow you to set time limits between posts, and you can often find plugins that look for excessive traffic from individual IP addresses or proxies and other activity more common to bots than human beings. For example, phpBB, Simple Machines, MyBB, and many other forum platforms enable such configurations.

- Check your forum’s top posters on a daily basis. If a user joined recently and has an excessive amount of posts, then you probably should review their profile and make sure that their posts and threads are not spammy.

- Consider disabling some types of comments. For example, it’s a good practice to close very old forum threads that are unlikely to get legitimate replies.

If you don’t plan to monitor your forum going forward and users are no longer interacting with it, turning off posting completely may prevent spammers from abusing it.

- Make good use of moderation capabilities. Consider enabling moderation features that require users to have a certain reputation before they can post links, or that hold comments with links for moderation.

If possible, change your settings to disallow anonymous posting and to require approval for posts from new users before they’re publicly visible.

Moderators, together with your friends, colleagues, and other trusted users, can help you review and approve posts while spreading the workload. Keep an eye on your forum’s new users by looking at their posts and activities on your forum.

- Consider blacklisting obviously spammy terms. Block obviously inappropriate comments with a blacklist of spammy terms (for example, illegal streaming or pharma-related terms). Add inappropriate and off-topic terms that only spammers use, learning from the spam posts you often see on your forum or other forums. Built-in features or plugins can delete or mark comments as spam for you.

- Use the “nofollow” attribute for links in the comment field. This will deter spammers from targeting your site. By default, many blogging sites (such as Blogger) automatically add this attribute to any posted comments.

- Use automated systems to defend your site. Comprehensive systems like Akismet, which has plugins for many blog and forum systems, are easy to install and do most of the work for you.
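Several of the tips above (a time limit between posts, a blacklist of spammy terms, and holding links from new users for approval) can be combined into one review step. This is a minimal Python sketch for a hypothetical forum backend that calls `review()` for every new comment; the `Moderator` class is not part of any forum platform’s API, and the blocked terms, the 30-second interval, and the seven-day threshold for new accounts are illustrative values, not recommendations.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical settings; tune them from the spam you actually see.
BLOCKED_TERMS = ("free streaming", "cheap pills")
MIN_SECONDS_BETWEEN_POSTS = 30.0
NEW_ACCOUNT_DAYS = 7
LINK_PATTERN = re.compile(r"https?://|www\.", re.IGNORECASE)


@dataclass
class Moderator:
    # Time of each user's last accepted (published or held) post.
    last_post_at: dict[str, float] = field(default_factory=dict)

    def review(self, user: str, text: str, account_age_days: int, now: float) -> str:
        """Return "reject", "hold" (queue for a human moderator), or "publish"."""
        previous = self.last_post_at.get(user)
        if previous is not None and now - previous < MIN_SECONDS_BETWEEN_POSTS:
            return "reject"  # faster than the time limit between posts allows
        lowered = text.lower()
        if any(term in lowered for term in BLOCKED_TERMS):
            return "reject"  # matches the list of obviously spammy terms
        self.last_post_at[user] = now
        if account_age_days < NEW_ACCOUNT_DAYS and LINK_PATTERN.search(text):
            return "hold"  # links from new users wait for approval
        return "publish"
```

Rejected attempts don’t reset the timer, so a bot retrying rapidly stays blocked until the interval since its last accepted post has passed. In a real deployment, `now` would come from something like `time.time()`; passing it in keeps the sketch deterministic.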

For detailed information about these topics, check out our Help Center document on User Generated Spam and comment spam. You can also visit our Webmaster Central Help Forum if you need any help.

Posted by Anouar Bendahou, Search Quality Strategist, Google Ireland

A reminder about widget links

Google has long taken a strong stance against links that manipulate a site’s PageRank. Today we would like to reiterate our policy on the creation of keyword-rich, hidden or low-quality links embedded in widgets that are distributed across various sites.

Widgets can help website owners enrich the experience of their site and engage users. However, some widgets add links to a site that a webmaster did not editorially place and contain anchor text that the webmaster does not control. Because these links are not naturally placed, they’re considered a violation of Google Webmaster Guidelines.

Below are examples of widgets that contain links violating Google Webmaster Guidelines:

Google’s webspam team may take manual actions on unnatural links. When a manual action is taken, Google will notify the site owners through Search Console. If you receive such a warning for unnatural links to your site and you use links in widgets to promote your site, we recommend resolving these issues and requesting reconsideration.

You can resolve issues with unnatural links by making sure they don’t pass PageRank. To do this, add a rel="nofollow" attribute to the widget links or remove the links entirely. After fixing or removing widget links and any other unnatural links to your site, let Google know about your changes by submitting a reconsideration request in Search Console. Once the request has been reviewed, you’ll get a notification about whether the reconsideration request was successful.

Also, we would like to remind webmasters who use widgets on their sites to check those widgets for any unnatural links. Add a rel="nofollow" attribute to those unnatural links or remove the links entirely from the widget.
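For widget providers, the fix described above can be built into the embed code itself. As a minimal sketch, the Python below generates hypothetical copy-and-paste embed code in which the credit link carries rel="nofollow" so it doesn’t pass PageRank; the `widget_embed_code` helper, the markup layout, and the example.com URLs are assumptions for illustration, and removing the credit link entirely is equally valid.

```python
from html import escape


def widget_embed_code(script_url: str, credit_url: str, credit_text: str) -> str:
    """Build hypothetical embed code for a widget with a nofollow credit link.

    Every interpolated value is HTML-escaped so URLs containing "&" or quotes
    can't break out of their attributes.
    """
    return (
        '<div class="widget">'
        f'<script src="{escape(script_url)}"></script>'
        f'<a href="{escape(credit_url)}" rel="nofollow">{escape(credit_text)}</a>'
        "</div>"
    )


print(widget_embed_code(
    "https://widgets.example.com/weather.js",
    "https://www.example.com/?ref=widget&src=embed",
    "Weather by Example",
))
```

Generating the snippet from one function means every site that copies it gets the nofollow attribute, rather than relying on each webmaster to add it by hand.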

For more information, please watch our video about widget links and refer to our Webmaster Guidelines on Link Schemes. Additionally, feel free to ask questions in our Webmaster Help Forums, where a community of webmasters can help with their experience.

Posted by Agnieszka Łata, Trust & Safety Search Team and Eric Kuan, Webmaster Relations Specialist

A reminder about widget links

Google has long taken a strong stance against links that manipulate a site’s PageRank. Today we would like to reiterate our policy on the creation of keyword-rich, hidden or low-quality links embedded in widgets that are distributed across various sites.

Widgets can help website owners enrich the experience of their site and engage users. However, some widgets add links to a site that a webmaster did not editorially place and contain anchor text that the webmaster does not control. Because these links are not naturally placed, they’re considered a violation of Google Webmaster Guidelines.

Examples of widget links that violate Google Webmaster Guidelines include keyword-rich, hidden, or low-quality links embedded in widgets that are distributed across many sites.

Google’s webspam team may take manual actions on unnatural links. When a manual action is taken, Google will notify the site owners through Search Console. If you receive such a warning for unnatural links to your site and you use links in widgets to promote your site, we recommend resolving these issues and requesting reconsideration.

You can resolve issues with unnatural links by making sure they don’t pass PageRank. To do this, add a rel="nofollow" attribute to the widget links or remove the links entirely. After fixing or removing widget links and any other unnatural links to your site, let Google know about the change by submitting a reconsideration request in Search Console. Once the request has been reviewed, you’ll get a notification saying whether it was successful.

We would also like to remind webmasters who use widgets on their sites to check those widgets for unnatural links, and to add a rel="nofollow" attribute to those links or remove them from the widget entirely.
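To check a widget’s embed code before adding it to your pages (or before distributing your own widget), you could scan it for links that still pass PageRank. The sketch below uses Python’s standard-library `html.parser`; the widget markup and the `widget-vendor.example` domain are made up for illustration. It only inspects static HTML, so links that a widget’s JavaScript injects later will not show up.

```python
from html.parser import HTMLParser


class FollowedLinkFinder(HTMLParser):
    """Collects the href of every <a> tag whose rel attribute lacks "nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)  # html.parser lowercases attribute names
        href = attributes.get("href")
        # rel can hold several space-separated tokens, e.g. "nofollow noopener"
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if href and "nofollow" not in rel_tokens:
            self.followed.append(href)


def followed_links(widget_html):
    """Return the links in a widget snippet that carry no rel="nofollow"."""
    finder = FollowedLinkFinder()
    finder.feed(widget_html)
    finder.close()
    return finder.followed


# Hypothetical widget markup: one keyword-rich link without nofollow, one with it.
widget = (
    '<div class="weather-widget">Forecast by '
    '<a href="https://widget-vendor.example/cheap-loans">cheap loans</a> and '
    '<a href="https://widget-vendor.example/" rel="nofollow">Widget Vendor</a></div>'
)
print(followed_links(widget))  # ['https://widget-vendor.example/cheap-loans']
```

Every URL the function returns is a candidate for a rel="nofollow" attribute or for removal from the widget.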

For more information, please watch our video about widget links and refer to our Webmaster Guidelines on Link Schemes. You can also ask questions in our Webmaster Help Forums, where a community of webmasters can share their experience.

Posted by Agnieszka Łata, Trust & Safety Search Team and Eric Kuan, Webmaster Relations Specialist

Best practices for bloggers reviewing free products they receive from companies

As a form of online marketing, some companies send bloggers free products to review or give away in return for a mention in a blog post. Whether you’re the company supplying the product or the blogger writing the post, below are a few best practices to ensure that this content is both useful to users and compliant with Google Webmaster Guidelines.

- Use the nofollow tag where appropriate

Links that pass PageRank in exchange for goods or services are against Google guidelines on link schemes. Companies sometimes urge bloggers to link back to:

- the company’s site

- the company’s social media accounts

- an online merchant’s page that sells the product

- a review service’s page featuring reviews of the product

- the company’s mobile app on an app store

Bloggers should use the nofollow tag on all such links because these links didn’t come about organically (i.e., the links wouldn’t exist if the company hadn’t offered to provide a free good or service in exchange for a link). Companies, or the marketing firms they’re working with, can do their part by reminding bloggers to use nofollow on these links.
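A blogger who generates post HTML from a template could apply this rule automatically. The sketch below assumes the blogger keeps a list of the hosts involved in the deal (the company’s site, its social media profiles, the merchant, and so on); all domain names are hypothetical. Matching whole hosts errs on the side of caution: every link to a listed host gets nofollow, including links you might have added anyway.

```python
from html import escape
from urllib.parse import urlsplit


def is_sponsor_link(href, sponsor_domains):
    """True if href points at one of the listed domains or a subdomain of it."""
    host = (urlsplit(href).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in sponsor_domains)


def render_link(href, text, sponsor_domains):
    """Render an <a> tag, adding rel="nofollow" to links that are part of the deal."""
    rel = ' rel="nofollow"' if is_sponsor_link(href, sponsor_domains) else ""
    return f'<a href="{escape(href)}"{rel}>{escape(text)}</a>'


# Hypothetical hosts the company asked the blogger to link to.
SPONSOR_DOMAINS = {"gadget-maker.example", "shop.example", "apps.example"}

print(render_link("https://www.gadget-maker.example/x1", "Gadget X1", SPONSOR_DOMAINS))
# <a href="https://www.gadget-maker.example/x1" rel="nofollow">Gadget X1</a>
```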

- Disclose the relationship

Users want to know when they’re viewing sponsored content. Also, there are laws in some countries that make disclosure of sponsorship mandatory. A disclosure can appear anywhere in the post; however, the most useful placement is at the top in case users don’t read the entire post.

- Create compelling, unique content

The most successful blogs offer their visitors a compelling reason to come back. If you’re a blogger, you might try to become the go-to source of information in your topic area, cover a useful niche that few others are looking at, or provide exclusive content that only you can create thanks to your unique expertise or resources.

For more information, please drop by our Google Webmaster Central Help Forum.

Posted by the Google Webspam Team

Detect and get rid of unwanted sneaky mobile redirects

In many cases, it is OK to show slightly different content on different devices. For example, optimizing for the smaller space of a smartphone screen can mean that some content, like images, has to be modified. Or you might want to store your website’s menu in a navigation drawer (see the navigation drawer documentation) to make mobile browsing easier and more effective. When implemented properly, these user-centric modifications are well understood by Google.

The situation is similar for mobile-only redirects. Redirecting mobile users to improve their mobile experience (like redirecting mobile users from example.com/url1 to m.example.com/url1) is often beneficial to them. But sneakily redirecting mobile users to different content is bad for user experience and is against Google’s webmaster guidelines.

A frustrating experience: The same URL shows up in search results pages on desktop and on mobile. When a user clicks on this result on their desktop computer, the URL opens normally. However, when clicking on the same result on a smartphone, a redirect happens and an unrelated URL loads.

Who implements these mobile-only sneaky redirects?

There are cases where webmasters knowingly decide to put into place redirection rules for their mobile users. This is typically a webmaster guidelines violation, and we do take manual action against it when it harms Google users’ experience (see last section of this article).

But we’ve also observed situations where mobile-only sneaky redirects happen without site owners being aware of it:

- Advertising schemes that redirect mobile users specifically

A script/element installed to display ads and monetize content might be redirecting mobile users to a completely different site without the webmaster being aware of it.

- Mobile redirect as a result of the site being a target of hacking

In other cases, if your website has been hacked, a potential result can be redirects to spammy domains for mobile users only.

How do I detect if my site is doing sneaky mobile redirects?

- Check if you are redirected when you navigate to your site on your smartphone

We recommend that you check the mobile user experience of your site by visiting your pages from Google search results with a smartphone. When debugging, mobile emulation in desktop browsers is handy, mostly because you can test many different devices. You can, for example, do it straight from your browser in Chrome, Firefox or Safari (for Safari, make sure you have enabled the “Show Develop menu in menu bar” feature).
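Browser emulation remains the main check, but a quick scripted comparison can help spot server-side redirects across many URLs. The sketch below requests a page twice, once with a desktop and once with a mobile user agent (both strings are placeholders), sends a Google referrer on the assumption that some sneaky redirects only trigger for visitors coming from search, and flags the page if the mobile request lands on a different site. An `m.` subdomain counts as the same site, matching the legitimate example.com/url1 to m.example.com/url1 case. It does not execute JavaScript, so redirects performed by ad or injected scripts will be missed.

```python
import urllib.request
from urllib.parse import urlsplit

# Placeholder user-agent strings (assumptions); use current real ones in practice.
DESKTOP_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
MOBILE_UA = "Mozilla/5.0 (Linux; Android 10; Pixel 3) Mobile"


def final_url(url, user_agent, referer="https://www.google.com/"):
    """Fetch url, following HTTP redirects only (no JavaScript), and return where it ends up."""
    request = urllib.request.Request(
        url, headers={"User-Agent": user_agent, "Referer": referer}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.url


def site_key(url):
    """Reduce a URL to its host, treating www. and m. as the same site."""
    host = (urlsplit(url).hostname or "").lower()
    for prefix in ("www.", "m."):
        if host.startswith(prefix):
            host = host[len(prefix):]
    return host


def looks_like_sneaky_redirect(desktop_final, mobile_final):
    """Flag mobile visitors ending up on a different site than desktop visitors."""
    return site_key(desktop_final) != site_key(mobile_final)


# Usage (needs network access):
# page = "https://example.com/url1"
# print(looks_like_sneaky_redirect(final_url(page, DESKTOP_UA), final_url(page, MOBILE_UA)))
```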

- Listen to your users

Your users could see your site in a different way than you do. It’s always important to pay attention to user complaints, so you can hear of any issue related to mobile UX.

- Monitor your users in your site’s analytics data

Unusual mobile user activity can be detected by looking at your website’s analytics data. For example, the average time your mobile users spend on your site is a good signal to watch: if, all of a sudden, your mobile users (and only they) start spending much less time on your site than they used to, there might be an issue related to mobile redirects.

To be aware of wide changes in mobile user activity as soon as they happen, you can set up Google Analytics alerts. For example, you can set an alert to warn you of a sharp drop in the average time mobile users spend on your site, or of a drop in the number of mobile users. Keep in mind that big changes in these metrics are not a clear, direct signal that your site is doing sneaky mobile redirects.
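Google Analytics alerts are configured in the Analytics interface, but the logic behind such an alert is simple enough to sketch. Assuming you can export the daily average time on site separately for mobile and desktop users, the functions below flag a day on which mobile time fell below half of its trailing seven-day average while desktop time did not. The 50% threshold, the seven-day window, and the sample numbers are arbitrary assumptions; a hit is a prompt to investigate, not proof of a redirect.

```python
from statistics import mean


def dropped(series, baseline_days=7, threshold=0.5):
    """True if the last value is below threshold x the mean of the preceding baseline_days values."""
    if len(series) < baseline_days + 1:
        raise ValueError("need at least baseline_days + 1 data points")
    baseline = mean(series[-baseline_days - 1:-1])
    return series[-1] < threshold * baseline


def mobile_only_drop(mobile_avg_seconds, desktop_avg_seconds, **kwargs):
    """Alert when mobile time on site drops sharply while desktop stays steady."""
    return dropped(mobile_avg_seconds, **kwargs) and not dropped(desktop_avg_seconds, **kwargs)


# Hypothetical daily averages (seconds per session), oldest first.
mobile = [118, 125, 121, 119, 130, 122, 120, 38]
desktop = [150, 148, 155, 149, 151, 147, 152, 149]
print(mobile_only_drop(mobile, desktop))  # True
```

Requiring desktop to stay steady reflects the point above: a mobile-only drop is more suspicious than a site-wide one.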

I’ve detected sneaky redirects for my mobile users, and I did not set it up: what do I do?

- Make sure that your site is not hacked.

Check the Security Issues tool in Search Console; if we have noticed any hack, you should find information about it there.

Review our additional resources on typical symptoms of hacked sites, and our case studies on hacked sites.

- Audit third-party scripts/elements on your site

If your site is not hacked, then we recommend you take the time to investigate if third-party scripts/elements are causing the redirects. You can follow these steps:

A. Remove, one by one, the third-party scripts/elements you do not control from the redirecting page(s).

B. After each script/element removal, check your site on a mobile device or through emulation, and see when the redirect stops.

C. If you think a particular script/element is responsible for the sneaky redirect, consider removing it from your site, and debugging the issue with the script/element provider.
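Steps A to C amount to a simple elimination loop. In the sketch below, `redirects_on_mobile` is a hypothetical callback standing in for however you test the page (a manual check on a phone, or an automated run in an emulated mobile browser); it receives the scripts still enabled and reports whether the mobile redirect still happens. Removals are cumulative, as in step A, so the function returns the script whose removal stopped the redirect. If more than one script is involved, only the last of them is returned, so re-test after putting the others back.

```python
from typing import Callable, List, Optional, Sequence


def find_redirecting_script(
    scripts: Sequence[str],
    redirects_on_mobile: Callable[[List[str]], bool],
) -> Optional[str]:
    """Remove scripts one at a time, re-checking after each removal.

    Returns the script whose removal made the redirect stop, or None if the
    page does not redirect to begin with or never stops redirecting.
    """
    remaining = list(scripts)
    if not redirects_on_mobile(remaining):
        return None
    for script in list(remaining):
        remaining.remove(script)  # step A: take one more script off the page
        if not redirects_on_mobile(remaining):  # step B: re-test on mobile
            return script  # step C: prime suspect to debug with its provider
    return None


# Stand-in for a real check: pretend the ad tag causes the redirect.
page_scripts = ["analytics.example/a.js", "ads.example/tag.js", "chat.example/w.js"]
print(find_redirecting_script(page_scripts, lambda enabled: "ads.example/tag.js" in enabled))
# ads.example/tag.js
```

A result of None while the redirect persists with every third-party script removed points back to the first check: the cause may be a hack rather than an ad script.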

Last Thoughts on Sneaky Mobile Redirects

It’s a violation of the Google Webmaster Guidelines to redirect a user to a page with the intent of displaying content other than what was made available to the search engine crawler (more information on sneaky redirects). To ensure quality search results for our users, the Google Search Quality team can take action on such sites, including removal of URLs from our index. When we take manual action, we send a message to the site owner via Search Console. Therefore, make sure you’ve set up a Search Console account.

Be sure to choose advertisers who are transparent about how they handle user traffic, to avoid unknowingly redirecting your own users. If you are interested in trust-building in the online advertising space, you may check out industry-wide best practices for participating in ad networks. For example, the Trustworthy Accountability Group’s (Interactive Advertising Bureau) Inventory Quality Guidelines are a good place to start. There are many ways to monetize your content with mobile solutions that provide a high-quality user experience; be sure to use them.

If you have questions or comments about mobile-only redirects, join us in our Google Webmaster Support forum.

Written by Vincent Courson & Badr Salmi El Idrissi, Search Quality team

An update on how we tackle hacked spam

Recently we have started rolling out a series of algorithmic changes that aim to tackle hacked spam in our search results. A huge number of legitimate sites are hacked by spammers and used to engage in abusive behavior, such as malware downloads, promotion of traffic to low-quality sites, porn, and marketing of counterfeit goods or illegal pharmaceutical drugs.

Website owners that don’t implement standard security best practices can leave their websites vulnerable to being easily hacked. This can include government sites, universities, small businesses, company websites, restaurants, hobby organizations, conferences, etc. Spammers and cyber-criminals purposely seek out these sites and inject pages with malicious content in an attempt to gain rank and traffic in search engines.

We are aggressively targeting hacked spam in order to protect users and webmasters.

The algorithmic changes will eventually impact roughly 5% of queries, depending on the language. As we roll out the new algorithms, users might notice that, for certain queries, only the most relevant results are shown, which reduces the number of results displayed.

This is due to the large amount of hacked spam being removed, and should improve in the near future. We are continuing to tune our systems to weed out the bad content while retaining the organic, legitimate results. If you have any questions about these changes, or want to give us feedback on these algorithms, feel free to drop by our Webmaster Help Forums.

Posted by Ning Song, Software Engineer

First Click Free update

Around ten years ago, when we introduced a policy called “First Click Free,” it was hard to imagine that the always-on, multi-screen, multiple-device world we now live in would change content consumption so much and so fast. The spirit of the First Click Free effort was – and still is – to help users get access to high-quality news with a minimum of effort, while also ensuring that publishers with a paid subscription model get discovered in Google Search and via Google News.

In 2009, we updated the FCF policy to allow a limit of five articles per day, in order to protect publishers who felt some users were abusing the spirit of this policy. Recently we have heard from publishers about the need to revisit these policies to reflect the mobile, multiple device world. Today we are announcing a change to the FCF limit to allow a limit of three articles a day. This change will be valid on both Google Search and Google News.

Google wants to play its part in connecting users to quality news and in connecting publishers to users. We believe FCF is important in helping achieve that goal, and we will periodically review and update these policies as needed so they continue to benefit users and publishers alike. We are listening and always welcome feedback.

Questions and answers about First Click Free

Q: Do the rest of the old guidelines still apply?

A: Yes, please check the guidelines for Google News as well as the guidelines for Web Search and the associated blog post for more information.

Q: Can I apply First Click Free to only a section of my site / only for Google News (or only for Web Search)?

A: Sure! Just make sure that both Googlebot and users from the appropriate search results can view the content as required. Keep in mind that showing Googlebot the full content of a page while showing users a registration page would be considered cloaking.

Q: Do I have to sign up to use First Click Free?

A: Please let us know about your decision to use First Click Free if you are using it for Google News. There’s no need to inform us of the First Click Free status for Google Web Search.

Q: What is the preferred way to count a user’s accesses?

A: Since there are many different site architectures, we believe it’s best to leave this up to the publisher to decide.
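Because the counting method is left to the publisher, what follows is only one possible scheme. This sketch meters visits referred from Google by a visitor identifier (for example, a first-party cookie value, which is an assumption here) and the calendar day, allowing three full articles per visitor per day before falling back to the registration or subscription page. The referrer check is a rough heuristic, and recognizing Googlebot, which must also be able to see the full content, is not shown.

```python
from collections import defaultdict
from datetime import date
from urllib.parse import urlsplit

DAILY_FCF_LIMIT = 3  # First Click Free limit announced in this post


def from_google(referer):
    """Rough check that a visit came from a Google property (e.g. www.google.co.uk)."""
    host = urlsplit(referer or "").hostname or ""
    return "google" in host.split(".")


class FirstClickFreeMeter:
    """Counts First Click Free views per visitor per calendar day (one possible scheme)."""

    def __init__(self, limit=DAILY_FCF_LIMIT):
        self.limit = limit
        self._views = defaultdict(int)  # (visitor_id, day) -> free views used

    def serve_full_article(self, visitor_id, referer, today=None):
        """Return True to show the full article, False to show the paywall."""
        if not from_google(referer):
            return False  # not a First Click Free visit; normal subscription rules apply
        key = (visitor_id, today or date.today())
        if self._views[key] >= self.limit:
            return False
        self._views[key] += 1
        return True


meter = FirstClickFreeMeter()
day = date(2020, 1, 1)  # arbitrary example date
print([meter.serve_full_article("cookie-123", "https://www.google.com/", day) for _ in range(4)])
# [True, True, True, False]
```

An in-memory dictionary keeps the example self-contained; a real site would store the counts somewhere shared across servers.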

(Please see our related blog post for more information on First Click Free for Google News.)

Posted by John Mueller, Google Switzerland