Search Off The Record podcast – behind the scenes with Search Relations

As the Search Relations team at Google, we’re here to help webmasters be successful with their websites in Google Search. We write on this blog, create and maintain the Google Search documentation, produce videos on our YouTube channel – and now we’re …

More options to help websites preview their content on Google Search

Google uses content previews, including text snippets and other media, to help people decide whether a result is relevant to their query. The type of preview shown depends on many factors, including the type of content a person is looking for and the kind of device they’re viewing it on.

For instance, if you look for recipe results on Google, you may see thumbnail images and user ratings–things that may be more helpful than text snippets when it comes to deciding what you want to eat. Alternately, or perhaps you’re looking for a concert nearby, and are able to check out the events directly in the search results. These are made possible by publishers marking up their pages with structured data.

Google automatically generates previews in a way intended to help a user understand why the results shown are relevant to their search and why the user would want to visit the linked pages. However, we recognize that site owners may wish to independently adjust the extent of their preview content in search results. To make it easier for individual websites to define how much or which text should be available for snippeting and the extent to which other media should be included in their previews, we’re now introducing several new settings for webmasters.

Letting Google know about your snippet and content preview preferences

Previously, it was only possible to allow a textual snippet or to not allow one. We’re now introducing a set of methods that allow more fine-grained configuration of the preview content shown for your pages. This is done through two types of new settings: a set of robots meta tags and an HTML attribute.

Using robots meta tags

The robots meta tag is added to an HTML page’s <head>, or specified via the x-robots-tag HTTP header. The robots meta tags addressing the preview content for a page are:

- “

nosnippet“

This is an existing option to specify that you don’t want any textual snippet shown for this page. - “

max-snippet:[number]“

New! Specify a maximum text-length, in characters, of a snippet for your page. - “

max-video-preview:[number]“

New! Specify a maximum duration in seconds of an animated video preview. - “

max-image-preview:[setting]“

New! Specify a maximum size of image preview to be shown for images on this page, using either “none”, “standard”, or “large”.

They can be combined, for example:

<meta name="robots" content="max-snippet:50, max-image-preview:large">

Preview settings from these meta tags will become effective in mid-to-late October 2019 and may take about a week for the global rollout to complete.

Using the new data-nosnippet HTML attribute

A new way to help limit which part of a page is eligible to be shown as a snippet is the “data-nosnippet” HTML attribute on span, div, and section elements. With this, you can prevent that part of an HTML page from being shown within the textual snippet on the page.

For example:

<p><span data-nosnippet>Harry Houdini</span> is undoubtedly the most famous magician ever to live.</p>

The data-nosnippet HTML attribute will be start affecting presentation on Google products later this year. Learn more in our developer documentation for the robots meta tag, x-robots-tag, and data-nosnippet.

A note about rich results and featured snippets

Content in structured data is eligible for display as rich results in search. These kinds of results do not conform to limits declared in the above meta robots settings, but rather, can be addressed with much greater specificity by limiting or modifying the content provided in the structured data itself. For example, if a recipe is included in structured data, the contents of that structured data may be presented in a recipe carousel in the search results. Similarly, if an event is marked up with structured data, it may be presented as such in the search results. To limit that presentation, a publisher can limit the amount and type of content in the structured data.

Some special features on Search depend on the availability of preview content, so limiting your previews may prevent your content from appearing in these areas. Featured snippets, for example, requires a certain minimum number of characters to be displayed. This can vary by language, which is why there is no exact max-snippets length we can provide to ensure appearing in this feature. Those who do not wish to have content appear as featured snippets can experiment with lower max-snippet lengths. Those who want a guaranteed way to opt-out of featured snippets should use nosnippet.

The AMP Format

The AMP format comes with certain benefits, including eligibility for more prominent presentation of thumbnail images in search results and in the Google Discover feed. These characteristics have been shown to drive more traffic to publishers’ articles. However, publishers who do not want Google to use larger thumbnail images when their AMP pages are presented in search and Discover can use the above meta robots settings to specify max-image-preview of “standard” or “none.”

These new options are available to content owners worldwide and will operate the same for results we display globally. We hope they make it easier for you to optimize the value you get from Search and achieve your business goals. For more information, check out our developer documentation on meta tags. Should you have any questions, feel free to reach out to us, or drop by our webmaster help forums.

Posted by John Mueller, Webmaster Trends Analyst, Google Switzerland

When indexing goes wrong: how Google Search recovered from indexing issues & lessons learned since.

Most of the time, our search engine runs properly. Our teams work hard to prevent technical issues that could affect our users who are searching the web, or webmasters whose sites we index and serve to users. Similarly, the underlying systems that we use to power the search engine also run as intended most of the time. When small disruptions happen, they are largely not visible to anyone except our teams who ensure that our products are up and running. However, like all complex systems, sometimes larger outages can occur, which may lead to disruptions for both users and website creators.

In the last few months, such a situation occurred with our indexing systems, which had a ripple effect on some other parts of our infrastructure. While we worked as quickly as possible to remedy the situation, we apologize for the disruption, as our goal is to continuously provide high-quality products to our users and to the web ecosystem.

Since then, we took a closer, careful look into the situation. In the process, we learned a few lessons that we’d like to share with you today. In this blog post, we will go into more details about what happened, clarify how we plan to communicate better if such things happen in the future, and remind website owners of the channels they can use to communicate with us.

So, what happened a few months ago?

In April, we had several issues related to our index. The Search index is the database that holds the hundreds of billions of web pages that we crawled on the web and that we think could answer some of our users’ queries. When a user enters a query in the Google search engine, our ranking algorithms sort through those pages in our Search index to find the most relevant, useful results in a fraction of a second. Here is more information on what happened.

1. The indexing issue

To start it off, we temporarily lost part of the Search index.

Wait… What? What do you mean “lost part of the index?” Is that even possible?

Basically, when serving search results to users, to accelerate the speed of the service, the query of the user only “travels” as far as the closest of our data centers supporting the Google Search product, from which the Search Engine Results Page (SERP) is generated. So when there are modifications to the composition of the index (some pages added and removed, documents are merged, or other types of data modification), those modifications need to be reflected in all of those data centers. The consequence is that users all over the world are consistently served pages from the most recent version of the index.

Google owns and operates data centers (like the one pictured above) around the world, to keep our products running 24 hours a day, 7 days a week – source

Keeping the index unified across all those data centers is a non trivial task. For large user-facing services, we may deploy updates by starting in one data center and expand until all relevant data centers are updated. For sensitive pieces of infrastructure, we may extend a rollout over several days, interleaving them across instances in different geographic regions. source

So, as we pushed some planned changes to the Search index, on April 5th parts of the deployment system broke, on a Friday no-less! More specifically: as we were updating the index over some of our data centers, a small number of documents ended up being dropped from the index accidentally. Hence: “we lost part of the index.”

Luckily, our on-call engineers caught the issue pretty quickly, at the same time as we started picking up chatter on social media (thanks to everyone who notified us over that weekend!). As a result, we were able to start reverting the Search index to its previous stable state in all data centers only a few hours after the issue was uncovered (we keep back-ups of our indexes just in case such events happen).

We communicated on Sunday, April 7th that we were aware of the issue, and that things were starting to get back to normal. As data centers were progressively reverting back to a stable index, we continued updating on Twitter (on April 8th, on April 9th), until we were confident that all data centers were fully back to a complete version of the index on April 11th.

2. The Search Console issue

Search Console is the set of tools and reports any webmaster can use to access data about their website’s performance in Search. For example, it shows how many impressions and clicks a website gets in the organic search results every day, or information on what pages of a website are included and excluded from the Search index.

As a consequence of the Search index having the issues we described above, Search Console started to also show inconsistencies. This is because some of the data that surfaces in Search Console originates from the Search index itself:

- the Index Coverage report depends on the Search index being consistent across data centers.

- when we store a page in the Search index, we can annotate the entry with key signals about the page, like the fact that the page contains rich results markup for example. Therefore, an issue with the Search index can have an impact on the Rich Results reports in Search Console.

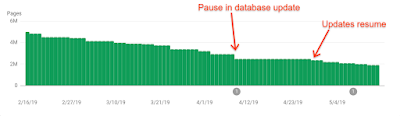

Basically, many Search Console individual reports read data from a dedicated database. That database is partially built by using information that comes from the Search index. As we had to revert back to a previous version of the Search index, we also had to pause the updating of the Search Console database. This resulted in plateau-ing data for some reports (and flakiness in others, like the URL inspection tool).

Index coverage report for indexed pages, which shows an example of the data freshness issues in Search Console in April 2019, with a longer time between 2 updates than what is usually observed.

Because the whole Search index issue took several days to roll back (see explanation above), we were delayed focusing on fixing the Search Console database until a few days later, only after the indexing issues were fixed. We communicated on April 15th – tweet – that the Search Console was having troubles and that we were working on fixing it, and we completed our fixes on April 28th (day on which the reports started gathering fresh data again, see graph above). We communicated on Twitter on April 30th that the issue was resolved- tweet.

3. Other issues unrelated to the main indexing bug

Google Search relies on a number of systems that work together. While some of those systems can be tightly linked to one another, in some cases different parts of the system experience unrelated problems around the same time.

In the present case for example, around the same time as the main indexing bug explained above, we also had brief problems gathering fresh Google News content. Additionally, while rendering pages, certain URLs started to redirect Googlebot to other unrelated pages. These issues were entirely unrelated to the indexing bug, and were quickly resolved (tweet 1 & tweet 2).

Our communication and how we intend on doing better

In addition to communicating on social media (as highlighted above) during those few weeks, we also gave webmasters more details in 2 other channels: Search Console, as well as the Search Console Help Center.

In the Search Console Help Center

We updated our “Data anomalies in Search Console” help page after the issue was fully identified. This page is used to communicate information about data disruptions to our Search Console service when the impact affects a large number of website owners.

In Search Console

Because we know that not all our users read social media or the external Help Center page, we also added annotations on Search Console reports, to notify users that the data might not be accurate (see image below). We added this information after the resolution of the bugs. Clicking on “see here for more details” sends users to the “Data Anomalies” page in the Help Center.

Index coverage report for indexed pages, which shows an example of the data annotations that we can include to notify users of specific issues.

Communications going forward

When things break at Google, we have a strong “postmortem” culture: creating a document to debrief on the breakage, and try to avoid it happening next time. The whole process is described in more detail at the Google Site Reliability Engineering website.

In the wake of the April indexing issues, we included in the postmortem how to better communicate with webmasters in case of large system failures. Our key decisions were:

- Explore ways to more quickly share information within Search Console itself about widespread bugs, and have that information serve as the main point of reference for webmasters to check, in case they are suspecting outages.

- More promptly post to the Search Console data anomalies page, when relevant (if the disturbance is going to be seen over the long term in Search Console data).

- Continue tweeting as quickly as we can about such issues to quickly reassure webmasters we’re aware and that the issue is on our end.

Those commitments should make potential future similar situations more transparent for webmasters as a whole.

Putting our resolutions into action: the “new URLs not indexed” case study

On May 22nd, we tested our new communications strategy, as we experienced another issue. Here’s what happened: while processing certain URLs, our duplicate management system ran out of memory after a planned infrastructure upgrade, which caused all incoming URLs to stop processing.

Here is a timeline of how we thought about communications, following the 3 points highlighted just above:

- We noticed the issue (around 5.30am California time, May 22nd)

We tweeted about the ongoing issue (around 6.40am California time, May 22nd)

We tweeted about the resolution (around 10pm California time, May 22nd) - We evaluated updating the “Data Anomalies” page in the Help Center, but decided against it since we did not expect any long-term impact for the majority of webmasters’ Search Console data in the long run.

- The confusion that this issue created for many confirmed our earlier conclusions that we need a way to signal more clearly in the Search Console itself that there might be a disruption to one of our systems which could impact webmasters. Such a solution might take longer to implement. We will communicate on this topic in the future, as we have more news.

Last week, we also had another indexing issue. As with May 22, we tweeted to let people know there was an issue, that we were working to fix it and when the issue was resolved.

How to debug and communicate with us

We hope that this post will bring more clarity to how our systems are complex and can sometimes break, and will also help you understand how we communicate about these matters. But while this post focuses on a widespread breakage of our systems, it’s important to keep in mind that most website indexing issues are caused by an individual website’s configuration, which can create difficulties for Google Search to index that website properly. For those cases, all webmasters can debug issues using Search Console and our Help center. After doing so, if you still think that an issue is not coming from your site or don’t know how to resolve it, come talk to us and our community, we always want to take feedback from our users. Here is how to signal an issue to us:

- Check our Webmaster Community, sometimes other webmasters have highlighted an issue that also impacts your site.

- In person! We love contact, come and talk to us at events. Calendar.

- Within our products! The Search Console feedback tool is very useful to our teams.

- Twitter and YouTube!

Posted by Vincent Courson, Google Search Outreach

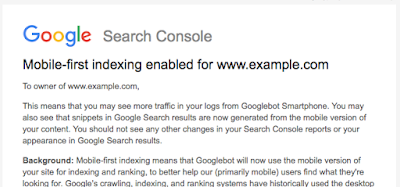

Mobile-First Indexing by default for new domains

Over the years since announcing mobile-first indexing – Google’s crawling of the web using a smartphone Googlebot – our analysis has shown that new websites are generally ready for this method of crawling. Accordingly, we’re happy to announce that mobile-first indexing will be enabled by default for all new, previously unknown to Google Search, websites starting July 1, 2019. It’s fantastic to see that new websites are now generally showing users – and search engines – the same content on both mobile and desktop devices!

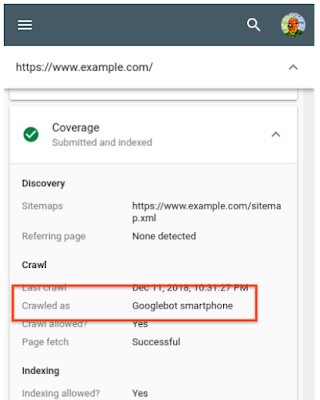

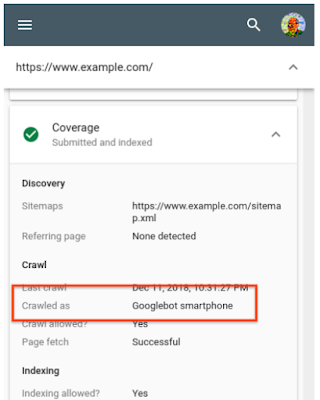

You can continue to check for mobile-first indexing of your website by using the URL Inspection Tool in Search Console. By looking at a URL on your website there, you’ll quickly see how it was last crawled and indexed. For older websites, we’ll continue monitoring and evaluating pages for their readiness for mobile first indexing, and will notify them through Search Console once they’re seen as being ready. Since the default state for new websites will be mobile-first indexing, there’s no need to send a notification.

Using the URL Inspection Tool to check the mobile-first indexing status

Our guidance on making all websites work well for mobile-first indexing continues to be relevant, for new and existing sites. For existing websites we determine their readiness for mobile-first indexing based on parity of content (including text, images, videos, links), structured data, and other meta-data (for example, titles and descriptions, robots meta tags). We recommend double-checking these factors when a website is launched or significantly redesigned.

While we continue to support responsive web design, dynamic serving, and separate mobile URLs for mobile websites, we recommend responsive web design for new websites. Because of issues and confusion we’ve seen from separate mobile URLs over the years, both from search engines and users, we recommend using a single URL for both desktop and mobile websites.

Mobile-first indexing has come a long way. We’re happy to see how the web has evolved from being focused on desktop, to becoming mobile-friendly, and now to being mostly crawlable and indexable with mobile user-agents! We realize it has taken a lot of work from your side to get there, and on behalf of our mostly-mobile users, we appreciate that. We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by John Mueller, Developer Advocate, Google Zurich

Google I/O 2019 – What sessions should SEOs and webmasters watch?

However, you don’t have to physically attend the event to take advantage of this once-a-year opportunity: many conferences and talks are live streamed on YouTube for anyone to watch. Browse the full schedule of events, including a list of talks that we think will be interesting for webmasters to watch (all talks are in English). All the links shared below will bring you to pages with more details about each talk, and links to watch the sessions will display on the day of each event. All times are Pacific Central time (California time).

- Tuesday, May 7th

4pm – Building Successful Websites: Case Studies for Mature and Emerging Markets, with Aancha Bahadur, Charlie Croom, Matt Doyle, Rudra Kasturi, and Jesar Shah

- Wednesday, May 8th

10.30am – Enhance Your Search and Assistant Presence with Structured Data, with Aylin Alroik and Will Leszczuk

11.30am – Create App-like Experiences on Google Search and the Google Assistant, with Allen Harvey

11.30am – Rapidly Building Better Web Experiences with AMP, with Adam Greenberg and Naina Raisinghani

6.30pm – Unlocking New Capabilities for the Web, with Pete LePage and Thomas Steiner

- Thursday, May 9th

10.30am – Google Search: State of the Union, with John Mueller and Martin Splitt

1.30pm – Google Search and JavaScript Sites, with Zoe Clifford and Martin Splitt

How to discover & suggest Google-selected canonical URLs for your pages

Sometimes a web page can be reached by using more than one URL. In such cases, Google tries to determine the best URL to display in search and to use in other ways. We call this the “canonical URL.” There are ways site owners can help us better determine what should be the canonical URLs for their content.

If you suspect we’ve not selected the best canonical URL for your content, you can check by entering your page’s address into the URL Inspection tool within Search Console. It will show you the Google-selected canonical. If you believe there’s a better canonical that should be used, follow the steps on our duplicate URLs help page on how to suggest a preferred choice for consideration.

Please be aware that if you search using the site: or inurl: commands, you will be shown the domain you specified in those, even if these aren’t the Google-selected canonical. This happens because we’re fulfilling the exact request entered. Behind-the-scenes, we still use the Google-selected canonical, including for when people see pages without using the site: or inurl: commands.

We’ve also changed URL Inspection tool so that it will display any Google-selected canonical for a URL, not just those for properties you manage in Search Console. With this change, we’re also retiring the info: command. This was an alternative way of discovering canonicals. It was relatively underused, and URL Inspection tool provides a more comprehensive solution to help publishers with URLs.

Posted by John Mueller, Google Switzerland

How to discover & suggest Google-selected canonical URLs for your pages

Sometimes a web page can be reached by using more than one URL. In such cases, Google tries to determine the best URL to display in search and to use in other ways. We call this the “canonical URL.” There are ways site owners can help us better determine what should be the canonical URLs for their content.

If you suspect we’ve not selected the best canonical URL for your content, you can check by entering your page’s address into the URL Inspection tool within Search Console. It will show you the Google-selected canonical. If you believe there’s a better canonical that should be used, follow the steps on our duplicate URLs help page on how to suggest a preferred choice for consideration.

Please be aware that if you search using the site: or inurl: commands, you will be shown the domain you specified in those, even if these aren’t the Google-selected canonical. This happens because we’re fulfilling the exact request entered. Behind-the-scenes, we still use the Google-selected canonical, including for when people see pages without using the site: or inurl: commands.

We’ve also changed URL Inspection tool so that it will display any Google-selected canonical for a URL, not just those for properties you manage in Search Console. With this change, we’re also retiring the info: command. This was an alternative way of discovering canonicals. It was relatively underused, and URL Inspection tool provides a more comprehensive solution to help publishers with URLs.

Posted by John Mueller, Google Switzerland

Mobile-First indexing, structured data, images, and your site

It’s been two years since we started working on “mobile-first indexing” – crawling the web with smartphone Googlebot, similar to how most users access it. We’ve seen websites across the world embrace the mobile web, making fantastic websites that work on all kinds of devices. There’s still a lot to do, but today, we’re happy to announce that we now use mobile-first indexing for over half of the pages shown in search results globally.

Checking for mobile-first indexing

In general, we move sites to mobile-first indexing when our tests assure us that they’re ready. When we move sites over, we notify the site owner through a message in Search Console. It’s possible to confirm this by checking the server logs, where a majority of the requests should be from Googlebot Smartphone. Even easier, the URL inspection tool allows a site owner to check how a URL from the site (it’s usually enough to check the homepage) was last crawled and indexed.

If your site uses responsive design techniques, you should be all set! For sites that aren’t using responsive web design, we’ve seen two kinds of issues come up more frequently in our evaluations:

Missing structured data on mobile pages

Structured data is very helpful to better understand the content on your pages, and allows us to highlight your pages in fancy ways in the search results. If you use structured data on the desktop versions of your pages, you should have the same structured data on the mobile versions of the pages. This is important because with mobile-first indexing, we’ll only use the mobile version of your page for indexing, and will otherwise miss the structured data.

Testing your pages in this regard can be tricky. We suggest testing for structured data in general, and then comparing that to the mobile version of the page. For the mobile version, check the source code when you simulate a mobile device, or use the HTML generated with the mobile-friendly testing tool. Note that a page does not need to be mobile-friendly in order to be considered for mobile-first indexing.

Missing alt-text for images on mobile pages

The value of alt-attributes on images (“alt-text”) is a great way to describe images to users with screen-readers (which are used on mobile too!), and to search engine crawlers. Without alt-text for images, it’s a lot harder for Google Images to understand the context of images that you use on your pages.

Check “img” tags in the source code of the mobile version for representative pages of your website. As above, the source of the mobile version can be seen by either using the browser to simulate a mobile device, or by using the Mobile-Friendly test to check the Googlebot rendered version. Search the source code for “img” tags, and double-check that your page is providing appropriate alt-attributes for any that you want to have findable in Google Images.

For example, that might look like this:

With alt-text (good!):<img src="cute-puppies.png" alt="A photo of cute puppies on a blanket">

Without alt-text:<img src="sad-puppies.png">

It’s fantastic to see so many great websites that work well on mobile! We’re looking forward to being able to index more and more of the web using mobile-first indexing, helping more users to search the web in the same way that they access it: with a smartphone. We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by John Mueller, wearer of many socks, Google Switzerland

Introducing the Indexing API and structured data for livestreams

Over the past few years, it’s become easier than ever to stream live videos online, from celebrity updates to special events. But it’s not always easy for people to determine which videos are live and know when to tune in. Today, we’re introducing new …

New URL inspection tool & more in Search Console

A few months ago, we introduced the new Search Console. Here are some updates on how it’s progressing.

Welcome “URL inspection” tool

One of our most common user requests in Search Console is for more details on how Google Search sees a specific URL. We listened, and today we’ve started launching a new tool, “URL inspection,” to provide these details so Search becomes more transparent. The URL Inspection tool provides detailed crawl, index, and serving information about your pages, directly from the Google index.

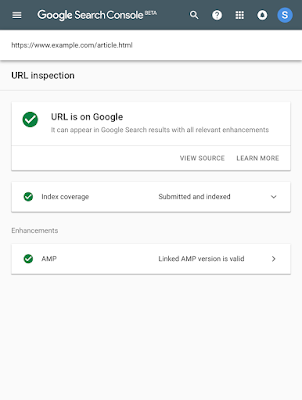

Enter a URL that you own to learn the last crawl date and status, any crawling or indexing errors, and the canonical URL for that page. If the page was successfully indexed, you can see information and status about any enhancements we found on the page, such as linked AMP version or rich results like Recipes and Jobs.

URL is indexed with valid AMP enhancement

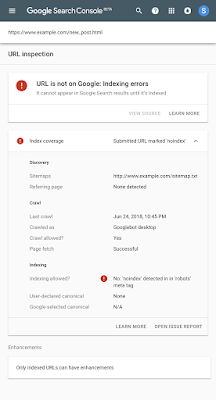

If a page isn’t indexed, you can learn why. The new report includes information about noindex robots meta tags and Google’s canonical URL for the page.

URL is not indexed due to ‘noindex’ meta tag in the HTML

A single click can take you to the issue report showing all other pages affected by the same issue to help you track down and fix common bugs.

We hope that the URL Inspection tool will help you debug issues with new or existing pages in the Google Index. We began rolling it out today; it will become available to all users in the coming weeks.

More exciting updates

In addition to the launch of URL inspection, we have a few more features and reports we recently launched to the new Search Console:

- Sixteen months of traffic data: The Search Analytics API now returns 16 months of data, just like the Performance report.

- Recipe report: The Recipe report help you fix structured data issues affecting recipes rich results. Use our task-oriented interface to test and validate your fixes; we will keep you informed on your progress using messages.

- New Search Appearance filters in Search Analytics: The performance report now gives you more visibility on new search appearance results, including Web Light and Google Play Instant results.

Thank you for your feedback

We are constantly reading your feedback, conducting surveys, and monitoring usage statistics of the new Search Console. We are happy to see so many of you using the new issue validation flow in Index Coverage and the AMP report. We notice that issues tend to get fixed quicker when you use these tools. We also see that you appreciate the updates on the validation process that we provide by email or on the validation details page.

We want to thank everyone who provided feedback: it has helped us improve our flows and fix bugs on our side.

More to come

The new Search Console is still beta, but it’s adding features and reports every month. Please keep sharing your feedback through the various channels and let us know how we’re doing.

Posted by Roman Kecher and Sion Schori – Search Console engineers

Google I/O 2018 – What sessions should SEOs and Webmasters watch live ?

However, you don’t have to physically attend the event to take advantage of this once-a-year opportunity: many conferences and talks are live streamed on YouTube for anyone to watch. You will find the full-event schedule here.

Rolling out mobile-first indexing

Today we’re announcing that after a year and a half of careful experimentation and testing, we’ve started migrating sites that follow the best practices for mobile-first indexing.

To recap, our crawling, indexing, and ranking systems have typically used the desktop version of a page’s content, which may cause issues for mobile searchers when that version is vastly different from the mobile version. Mobile-first indexing means that we’ll use the mobile version of the page for indexing and ranking, to better help our – primarily mobile – users find what they’re looking for.

We continue to have one single index that we use for serving search results. We do not have a “mobile-first index” that’s separate from our main index. Historically, the desktop version was indexed, but increasingly, we will be using the mobile versions of content.

We are notifying sites that are migrating to mobile-first indexing via Search Console. Site owners will see significantly increased crawl rate from the Smartphone Googlebot. Additionally, Google will show the mobile version of pages in Search results and Google cached pages.

To understand more about how we determine the mobile content from a site, see our developer documentation. It covers how sites using responsive web design or dynamic serving are generally set for mobile-first indexing. For sites that have AMP and non-AMP pages, Google will prefer to index the mobile version of the non-AMP page.

Sites that are not in this initial wave don’t need to panic. Mobile-first indexing is about how we gather content, not about how content is ranked. Content gathered by mobile-first indexing has no ranking advantage over mobile content that’s not yet gathered this way or desktop content. Moreover, if you only have desktop content, you will continue to be represented in our index.

Having said that, we continue to encourage webmasters to make their content mobile-friendly. We do evaluate all content in our index — whether it is desktop or mobile — to determine how mobile-friendly it is. Since 2015, this measure can help mobile-friendly content perform better for those who are searching on mobile. Related, we recently announced that beginning in July 2018, content that is slow-loading may perform less well for both desktop and mobile searchers.

To recap:

- Mobile-indexing is rolling out more broadly. Being indexed this way has no ranking advantage and operates independently from our mobile-friendly assessment.

- Having mobile-friendly content is still helpful for those looking at ways to perform better in mobile search results.

- Having fast-loading content is still helpful for those looking at ways to perform better for mobile and desktop users.

- As always, ranking uses many factors. We may show content to users that’s not mobile-friendly or that is slow loading if our many other signals determine it is the most relevant content to show.

We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by Fan Zhang, Software Engineer

Introducing the new Search Console

A few months ago we released a beta version of a new Search Console experience to a limited number of users. We are now starting to release this beta version to all users of Search Console, so that everyone can explore this simplified process of optimizing a website’s presence on Google Search. The functionality will include Search performance, Index Coverage, AMP status, and Job posting reports. We will send a message once your site is ready in the new Search Console.

We started by adding some of the most popular functionality in the new Search Console (which can now be used in your day-to-day flow of addressing these topics). We are not done yet, so over the course of the year the new Search Console (beta) will continue to add functionality from the classic Search Console. Until the new Search Console is complete, both versions will live side-by-side and will be easily interconnected via links in the navigation bar, so you can use both.

The new Search Console was rebuilt from the ground up by surfacing the most actionable insights and creating an interaction model which guides you through the process of fixing any pending issues. We’ve also added ability to share reports within your own organization in order to simplify internal collaboration.

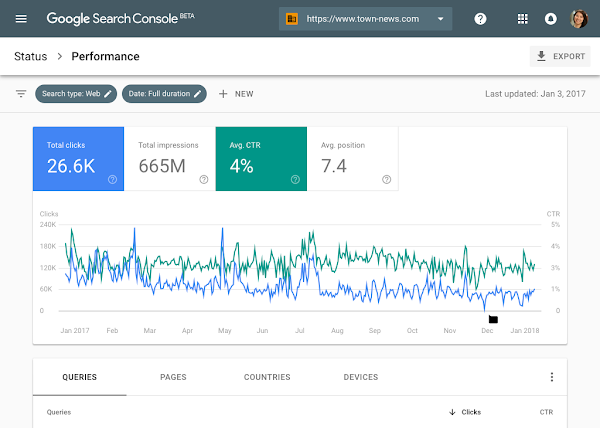

Search Performance: with 16 months of data!

If you’ve been a fan of Search Analytics, you’ll love the new Search Performance report. Over the years, users have been consistent in asking us for more data in Search Analytics. With the new report, you’ll have 16 months of data, to make analyzing longer-term trends easier and enable year-over-year comparisons. In the near future, this data will also be available via the Search Console API.

Index Coverage: a comprehensive view on Google’s indexing

The updated Index Coverage report gives you insight into the indexing of URLs from your website. It shows correctly indexed URLs, warnings about potential issues, and reasons why Google isn’t indexing some URLs. The report is built on our new Issue tracking functionality

that alerts you when new issues are detected and helps you monitor their fix.

So how does that work?

- When you drill down into a specific issue you will see a sample of URLs from your site. Clicking on error URLs brings up the page details with links to diagnostic-tools that help you understand what is the source of the problem.

- Fixing Search issues often involves multiple teams within a company. Giving the right people access to information about the current status, or about issues that have come up there, is critical to improving an implementation quickly. Now, within most reports in the new Search Console, you can do that with the share button on top of the report which will create a shareable link to the report. Once things are resolved, you can disable sharing just as easily.

- The new Search Console can also help you confirm that you’ve resolved an issue, and help us to update our index accordingly. To do this, select a flagged issue, and click validate fix. Google will then crawl and reprocess the affected URLs with a higher priority, helping your site to get back on track faster than ever.

- The Index Coverage report works best for sites that submit sitemap files. Sitemap files are a great way to let search engines know about new and updated URLs. Once you’ve submitted a sitemap file, you can now use the sitemap filter over the Index Coverage data, so that you’re able to focus on an exact list of URLs.

Search Enhancements: improve your AMP and Job Postings pages

The new Search Console is also aimed at helping you implement Search Enhancements such as AMP and Job Postings (more to come). These reports provide details into the specific errors and warnings that Google identified for these topics. In addition to the functionally described in the index coverage report, we augmented the reports with two extra features:

- The first feature is aimed at providing faster feedback in the process of fixing an issue. This is achieved by running several instantaneous tests once you click the validate fix button. If your pages don’t pass this test we provide you with an immediate notification, otherwise we go ahead and reprocess the rest of the affected pages.

- The second new feature is aimed at providing positive feedback during the fix process by expanding the validation log with a list of URLs that were identified as fixed (in addition to URLs that failed the validation or might still be pending).

Similar to the AMP report, the new Search Console provides a Job postings report. If you have jobs listings on your website, you may be eligible to have those shown directly through Google for Jobs (currently only available in certain locations).

Feedback welcome

We couldn’t have gotten so far without the ongoing feedback from our diligent trusted testers (we plan to share more on how their feedback helped us dramatically improve Search Console). However, your continued feedback is critical for us: if there’s something you find confusing or wrong, or if there’s something you really like, please let us know through the feedback feature in the sidebar. Also note that the mobile experience in the new Search Console is still a work in progress.

We want to end this blog sharing an encouraging response we got from a user who has been testing the new Search Console recently:

“The UX of new Search Console is clean and well laid out, everything is where we expect it to be. I can even kick-off validation of my fixes and get email notifications with the result. It’s been a massive help in fixing up some pesky AMP errors and warnings that were affecting pages on our site. On top of all this, the Search Analytics report now extends to 16 months of data which is a total game changer for us” – Noah Szubski, Chief Product Officer, DailyMail.com

Are there any other tools that would make your life as a webmaster easier? Let us know in the comments here, and feel free to jump into our webmaster help forums to discuss your ideas with others!

Posted by John Mueller, Ofir Roval and Hillel Maoz

Introducing the new Search Console

A few months ago we released a beta version of a new Search Console experience to a limited number of users. We are now starting to release this beta version to all users of Search Console, so that everyone can explore this simplified process of optimizing a website’s presence on Google Search. The functionality will include Search performance, Index Coverage, AMP status, and Job posting reports. We will send a message once your site is ready in the new Search Console.

We started by adding some of the most popular functionality in the new Search Console (which can now be used in your day-to-day flow of addressing these topics). We are not done yet, so over the course of the year the new Search Console (beta) will continue to add functionality from the classic Search Console. Until the new Search Console is complete, both versions will live side-by-side and will be easily interconnected via links in the navigation bar, so you can use both.

The new Search Console was rebuilt from the ground up by surfacing the most actionable insights and creating an interaction model which guides you through the process of fixing any pending issues. We’ve also added ability to share reports within your own organization in order to simplify internal collaboration.

Search Performance: with 16 months of data!

If you’ve been a fan of Search Analytics, you’ll love the new Search Performance report. Over the years, users have been consistent in asking us for more data in Search Analytics. With the new report, you’ll have 16 months of data, to make analyzing longer-term trends easier and enable year-over-year comparisons. In the near future, this data will also be available via the Search Console API.

Index Coverage: a comprehensive view on Google’s indexing

The updated Index Coverage report gives you insight into the indexing of URLs from your website. It shows correctly indexed URLs, warnings about potential issues, and reasons why Google isn’t indexing some URLs. The report is built on our new Issue tracking functionality that alerts you when new issues are detected and helps you monitor their fix.

So how does that work?

- When you drill down into a specific issue you will see a sample of URLs from your site. Clicking on error URLs brings up the page details with links to diagnostic-tools that help you understand what is the source of the problem.

- Fixing Search issues often involves multiple teams within a company. Giving the right people access to information about the current status, or about issues that have come up there, is critical to improving an implementation quickly. Now, within most reports in the new Search Console, you can do that with the share button on top of the report which will create a shareable link to the report. Once things are resolved, you can disable sharing just as easily.

- The new Search Console can also help you confirm that you’ve resolved an issue, and help us to update our index accordingly. To do this, select a flagged issue, and click validate fix. Google will then crawl and reprocess the affected URLs with a higher priority, helping your site to get back on track faster than ever.

- The Index Coverage report works best for sites that submit sitemap files. Sitemap files are a great way to let search engines know about new and updated URLs. Once you’ve submitted a sitemap file, you can now use the sitemap filter over the Index Coverage data, so that you’re able to focus on an exact list of URLs.

Search Enhancements: improve your AMP and Job Postings pages

The new Search Console is also aimed at helping you implement Search Enhancements such as AMP and Job Postings (more to come). These reports provide details into the specific errors and warnings that Google identified for these topics. In addition to the functionally described in the index coverage report, we augmented the reports with two extra features:

- The first feature is aimed at providing faster feedback in the process of fixing an issue. This is achieved by running several instantaneous tests once you click the validate fix button. If your pages don’t pass this test we provide you with an immediate notification, otherwise we go ahead and reprocess the rest of the affected pages.

- The second new feature is aimed at providing positive feedback during the fix process by expanding the validation log with a list of URLs that were identified as fixed (in addition to URLs that failed the validation or might still be pending).

Similar to the AMP report, the new Search Console provides a Job postings report. If you have jobs listings on your website, you may be eligible to have those shown directly through Google for Jobs (currently only available in certain locations).

Feedback welcome

We couldn’t have gotten so far without the ongoing feedback from our diligent trusted testers (we plan to share more on how their feedback helped us dramatically improve Search Console). However, your continued feedback is critical for us: if there’s something you find confusing or wrong, or if there’s something you really like, please let us know through the feedback feature in the sidebar. Also note that the mobile experience in the new Search Console is still a work in progress.

We want to end this blog sharing an encouraging response we got from a user who has been testing the new Search Console recently:

“The UX of new Search Console is clean and well laid out, everything is where we expect it to be. I can even kick-off validation of my fixes and get email notifications with the result. It’s been a massive help in fixing up some pesky AMP errors and warnings that were affecting pages on our site. On top of all this, the Search Analytics report now extends to 16 months of data which is a total game changer for us” – Noah Szubski, Chief Product Officer, DailyMail.com

Are there any other tools that would make your life as a webmaster easier? Let us know in the comments here, and feel free to jump into our webmaster help forums to discuss your ideas with others!

Posted by John Mueller, Ofir Roval and Hillel Maoz

Rendering AJAX-crawling pages

The AJAX crawling scheme was introduced as a way of making JavaScript-based webpages accessible to Googlebot, and we’ve previously announced our plans to turn it down. Over time, Google engineers have significantly improved rendering of JavaScript for…

Rendering AJAX-crawling pages

The AJAX crawling scheme was introduced as a way of making JavaScript-based webpages accessible to Googlebot, and we’ve previously announced our plans to turn it down. Over time, Google engineers have significantly improved rendering of JavaScript for…

Closing down for a day

Even in today’s “always-on” world, sometimes businesses want to take a break. There are times when even their online presence needs to be paused. This blog post covers some of the available options so that a site’s search presence isn’t affected.

Option: Block cart functionality

If a site only needs to block users from buying things, the simplest approach is to disable that specific functionality. In most cases, shopping cart pages can either be blocked from crawling through the robots.txt file, or blocked from indexing with a robots meta tag. Since search engines either won’t see or index that content, you can communicate this to users in an appropriate way. For example, you may disable the link to the cart, add a relevant message, or display an informational page instead of the cart.

Option: Always show interstitial or pop-up

If you need to block the whole site from users, be it with a “temporarily unavailable” message, informational page, or popup, the server should return a 503 HTTP result code (“Service Unavailable”). The 503 result code makes sure that Google doesn’t index the temporary content that’s shown to users. Without the 503 result code, the interstitial would be indexed as your website’s content.

Googlebot will retry pages that return 503 for up to about a week, before treating it as a permanent error that can result in those pages being dropped from the search results. You can also include a “Retry after” header to indicate how long the site will be unavailable. Blocking a site for longer than a week can have negative effects on the site’s search results regardless of the method that you use.

Option: Switch whole website off

Turning the server off completely is another option. You might also do this if you’re physically moving your server to a different data center. For this, have a temporary server available to serve a 503 HTTP result code for all URLs (with an appropriate informational page for users), and switch your DNS to point to that server during that time.

- Set your DNS TTL to a low time (such as 5 minutes) a few days in advance.

- Change the DNS to the temporary server’s IP address.

- Take your main server offline once all requests go to the temporary server.

- … your server is now offline …

- When ready, bring your main server online again.

- Switch DNS back to the main server’s IP address.

- Change the DNS TTL back to normal.

We hope these options cover the common situations where you’d need to disable your website temporarily. If you have any questions, feel free to drop by our webmaster help forums!

PS If your business is active locally, make sure to reflect these closures in the opening hours for your local listings too!

Posted by John Mueller, Webmaster Trends Analyst, Switzerland

Closing down for a day

Note: This post is specific to Google’s organic web-search. For Google’s other services, please check with the appropriate help center (e.g., for Google Shopping) or help forum.

Even in today’s “always-on” world, sometimes businesses want to take a break. There are times when even their online presence needs to be paused. This blog post covers some of the available options so that a site’s search presence isn’t affected.

Option: Block cart functionality

If a site only needs to block users from buying things, the simplest approach is to disable that specific functionality. In most cases, shopping cart pages can either be blocked from crawling through the robots.txt file, or blocked from indexing with a robots meta tag. Since search engines either won’t see or index that content, you can communicate this to users in an appropriate way. For example, you may disable the link to the cart, add a relevant message, or display an informational page instead of the cart.

Option: Always show interstitial or pop-up

If you need to block the whole site from users, be it with a “temporarily unavailable” message, informational page, or popup, the server should return a 503 HTTP result code (“Service Unavailable”). The 503 result code makes sure that Google doesn’t index the temporary content that’s shown to users. Without the 503 result code, the interstitial would be indexed as your website’s content.

Googlebot will retry pages that return 503 for up to about a week, before treating it as a permanent error that can result in those pages being dropped from the search results. You can also include a “Retry after” header to indicate how long the site will be unavailable. Blocking a site for longer than a week can have negative effects on the site’s search results regardless of the method that you use.

Option: Switch whole website off

Turning the server off completely is another option. You might also do this if you’re physically moving your server to a different data center. For this, have a temporary server available to serve a 503 HTTP result code for all URLs (with an appropriate informational page for users), and switch your DNS to point to that server during that time.

- Set your DNS TTL to a low time (such as 5 minutes) a few days in advance.

- Change the DNS to the temporary server’s IP address.

- Take your main server offline once all requests go to the temporary server.

- … your server is now offline …

- When ready, bring your main server online again.

- Switch DNS back to the main server’s IP address.

- Change the DNS TTL back to normal.

We hope these options cover the common situations where you’d need to disable your website temporarily. If you have any questions, feel free to drop by our webmaster help forums!

PS If your business is active locally, make sure to reflect these closures in the opening hours for your local listings too!

Posted by John Mueller, Webmaster Trends Analyst, Switzerland

What Crawl Budget Means for Googlebot

Recently, we’ve heard a number of definitions for “crawl budget”, however we don’t have a single term that would describe everything that “crawl budget” stands for externally. With this post we’ll clarify what we actually have and what it means for Goo…

An update on Google’s feature-phone crawling & indexing

Limited mobile devices, “feature-phones”, require a special form of markup or a transcoder for web content. Most websites don’t provide feature-phone-compatible content in WAP/WML any more. Given these developments, we’ve made changes in how we crawl f…