#NoHacked 3.0: Tips on prevention

- Top ways websites get hacked by spammers:

Understanding how your site was compromised is an important part of protecting it from future attacks. Here are some of the top ways that sites get compromised by spammers.

- Be mindful of your sources! Be very careful with free premium themes and plugins!

You have probably heard of “free premium” plugins. If you ever stumble upon a site offering, for free, plugins that you would normally have to purchase, be very careful. Many hackers lure you in by copying a popular plugin and then adding backdoors or malware that allow them to access your site. Read more about a similar case on the Sucuri blog. Additionally, even legitimate, good-quality plugins and themes can become dangerous if:

- you do not update them as soon as a new version becomes available

- the developer of the theme or plugin stops updating it, and it becomes outdated over time

In any case, keeping all of your site’s software up to date is essential to keeping hackers out of your website.
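As a rough illustration of the update advice above, the sketch below compares the versions of installed themes and plugins against the latest releases you know about. It assumes you already have both lists (for example, copied from your CMS admin screen and from each vendor’s official site); the component names and version numbers are hypothetical, and it only handles plain numeric dotted versions such as `5.4.2`.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a plain dotted version such as "5.4.2" into (5, 4, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def outdated_components(installed: Dict[str, str], latest: Dict[str, str]) -> List[str]:
    """List installed themes/plugins that are behind the latest known release, or have no known source."""
    findings = []
    for name, version in sorted(installed.items()):
        newest = latest.get(name)
        if newest is None:
            # Not in your list of trusted upstream releases: unknown origin or possibly abandoned.
            findings.append(f"{name}: no upstream release found")
        elif parse_version(version) < parse_version(newest):
            findings.append(f"{name}: {version} -> {newest}")
    return findings


# Hypothetical example data.
installed = {"contact-form": "5.1.0", "gallery": "2.3", "old-slider": "1.0"}
latest = {"contact-form": "5.4.2", "gallery": "2.3"}
for finding in outdated_components(installed, latest):
    print(finding)
```

Comparing integer tuples rather than strings matters: as strings, "1.10" would sort before "1.9". Anything reported with no upstream release deserves a closer look, since it may come from an unofficial source.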

- Botnets in WordPress

A botnet is a cluster of machines, devices, or websites under the control of a third party, often used to commit malicious acts such as operating spam campaigns, clickbots, or DDoS attacks. It’s difficult to detect whether your site has been infected by a botnet because there are often no specific changes to your site. However, your site’s reputation, resources, and data are at risk if your site is part of a botnet. Learn more about botnets, how to detect them, and how they can affect your site in the “Botnet in WordPress and Joomla” article.

As usual, if you have any questions, post them on our Webmaster Help Forums for help from the friendly community. See you next week!

A revamped SEO Starter Guide

The traditional SEO Starter Guide lists best practices that make it easier for search engines to crawl, index, and understand content on websites. The Webmaster Academy has the information and tools to teach webmasters how to create a site and have it found in Google Search. Since these two resources overlap in purpose and content, and both could be more exhaustive on some aspects of creating a user-friendly and safe website, we’re deprecating the Webmaster Academy and removing the old SEO Starter Guide PDF.

The updated SEO Starter Guide will replace both the old Starter Guide and the Webmaster Academy. The updated version builds on top of the previously available document, and has additional sections on the need for search engine optimization, adding structured data markup and building mobile-friendly websites.

This new Guide is available in nine languages (English, German, Spanish, French, Italian, Japanese, Portuguese, Russian and Turkish) starting today, and we’ll be adding sixteen more languages very soon.

Go check out the new SEO Starter Guide, and let us know what you think about it.

For any questions, feel free to drop by our Webmaster Help Forums!

Posted by Abhas Tripathi, Search Quality Strategist

#NoHacked 3.0: How do I know if my site is hacked?

Last week, #NoHacked returned to our G+ and Twitter channels! #NoHacked is our social campaign that aims to raise awareness about hacking attacks and offer tips on how to keep your sites safe from hackers. This time, we would also like to share content from the #NoHacked campaign on this blog in your local language!

Why do sites get hacked? Hackers have different motives for compromising a website, and hack attacks can vary widely, so they are not always easy to detect. Here are some tips to help you detect whether a site has been hacked!

- Getting started:

Start with our guide “How do I know if my site is hacked?” if you’ve received a security alert from Google or another party. This guide walks you through basic steps to check your site for any signs of compromise.

- Understand the alert on Google Search:

At Google, we have different processes to deal with hacking scenarios. Scanning tools will often detect malware, but they can miss some spamming hacks. A clean verdict from Safe Browsing does not mean that you haven’t been hacked to distribute spam.

- If you ever see “This site may be hacked”, your site may have been hacked to display spam. Essentially, your site has been hijacked to serve some free advertising.

- If you see “This site may harm your computer” beneath the site URL, we think the site you’re about to visit might allow programs to install malicious software on your computer.

- If you see a big red screen before your site, that can mean a variety of things:

  - If you see “The site ahead contains malware”, Google has detected that your site distributes malware.

  - If you see “The site ahead contains harmful programs”, the site has been flagged for distributing unwanted software.

  - “Deceptive site ahead” warnings indicate that your site may be serving phishing or other social engineering content.

  Your site could have been hacked to do any of these things.

- Malvertising vs. hack:

Malvertising happens when your site loads a bad ad. It may make it seem as though your site has been hacked, perhaps by redirecting your visitors, but it is in fact just an ad behaving badly.

- Open redirects: check if your site is enabling open redirects

Hackers might want to take advantage of a good site to mask their URLs. One way they do this is by using open redirects, which allow them to use your site to redirect users to any URL of their choice. You can read more here!
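A common defense is to validate redirect targets on the server before following them. The Python sketch below accepts only same-site paths or hosts on an explicit allow-list and otherwise falls back to the home page; the function name and the `example.com` hosts are hypothetical, and a production check may need to handle more edge cases than this.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list of hosts this site is willing to redirect to.
ALLOWED_REDIRECT_HOSTS = {"www.example.com", "help.example.com"}


def safe_redirect_target(requested: str, fallback: str = "/") -> str:
    """Return `requested` if it is a same-site path or an allow-listed URL, else `fallback`."""
    # Browsers ignore control characters such as tabs and newlines inside URLs, so drop them first.
    candidate = "".join(ch for ch in requested if ord(ch) >= 0x20 and ord(ch) != 0x7F).strip()
    # Browsers also treat "\" like "/", which would turn "/\evil.test" into "//evil.test".
    candidate = candidate.replace("\\", "/")
    parts = urlsplit(candidate)
    if not parts.scheme and not parts.netloc:
        # Relative URL: accept only absolute paths on this site, such as "/account".
        # Protocol-relative "//host" URLs have a netloc, so they never reach this branch.
        return candidate if candidate.startswith("/") else fallback
    if parts.scheme in ("http", "https") and parts.hostname in ALLOWED_REDIRECT_HOSTS:
        return candidate
    # Any other scheme (javascript:, data:, ...) or host is refused.
    return fallback


print(safe_redirect_target("/account?tab=1"))
print(safe_redirect_target("https://evil.test/phish"))
```

The allow-list approach fails closed: a target that is not explicitly permitted sends the visitor to a safe default instead of wherever the query parameter points.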

- Mobile check: make sure to view your site from a mobile browser in incognito mode. Check for bad mobile ad networks.

Sometimes bad content such as ads or other third-party elements redirects mobile users without the site owner’s knowledge. This behavior can easily escape detection because it’s only visible from certain browsers. Be sure to check that the mobile and desktop versions of your site show the same content.
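Part of this check can be scripted. The sketch below fetches a page twice, once with a desktop and once with a mobile User-Agent, and reports whether both requests end on the same URL with the same body. It is only a rough complement to checking in a real mobile browser: it does not run JavaScript, so ad-driven redirects will not show up, and it does not reproduce the cookies or referrers that some hacks may use to decide whom to redirect. The User-Agent strings are illustrative, and pages with dynamic content (timestamps, tokens) will differ even when nothing is wrong.

```python
import urllib.request
from typing import Callable, Dict, Tuple, Union

# Illustrative User-Agent strings; swap in the browsers you actually want to emulate.
DESKTOP_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/120.0 Safari/537.36")
MOBILE_UA = ("Mozilla/5.0 (Linux; Android 13; Pixel 7) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/120.0 Mobile Safari/537.36")


def fetch(url: str, user_agent: str) -> Tuple[str, bytes]:
    """Fetch `url` with the given User-Agent, following HTTP redirects; return (final URL, body)."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.geturl(), response.read()


def compare_mobile_desktop(url: str,
                           fetcher: Callable[[str, str], Tuple[str, bytes]] = fetch
                           ) -> Dict[str, Union[bool, str]]:
    """Fetch the page as a desktop and as a mobile browser and report where each request ended up."""
    desktop_url, desktop_body = fetcher(url, DESKTOP_UA)
    mobile_url, mobile_body = fetcher(url, MOBILE_UA)
    return {
        "desktop_final_url": desktop_url,
        "mobile_final_url": mobile_url,
        "same_final_url": desktop_url == mobile_url,
        "same_body": desktop_body == mobile_body,
    }


# Example (needs network access):
# print(compare_mobile_desktop("https://www.example.com/"))
```

The `fetcher` parameter exists so the comparison can be exercised without network access. On a site that still uses separate m-dot URLs, a mobile redirect to your own mobile host is expected; a redirect to an unfamiliar domain is the signal to investigate.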

- Use Search Console and get messages:

Search Console is a tool that Google uses to communicate with you about your website. It also includes many other tools that can help you improve and manage your website. Make sure you have your site verified in Search Console even if you aren’t a primary developer on your site. The alerts and messages in Search Console will let you know if Google has detected any critical errors on your site.

If you’re still unable to find any signs of a hack, ask a security expert or post on our Webmaster Help Forums for a second look.

The #NoHacked campaign will run for the next 3 weeks. Follow us on our G+ and Twitter channels, or look out for the content on this blog, where we will post a summary for each week at the beginning of that week. In the meantime, stay safe!

Rendering AJAX-crawling pages

The AJAX crawling scheme was introduced as a way of making JavaScript-based webpages accessible to Googlebot, and we’ve previously announced our plans to turn it down. Over time, Google engineers have significantly improved rendering of JavaScript for…

A reminder about “event” markup

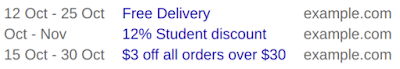

Lately we’ve been receiving feedback from users who see non-events, such as coupons or vouchers, showing up in search results where “event” snippets appear. This is really confusing for users and also against our guidelines, which we have now clarified further.

So, what’s the problem?

We’ve seen a number of publishers in the coupons/vouchers space use the “event” markup to describe their offers. And as much as using a discount voucher can be a very special thing, that doesn’t make coupons or vouchers events or “saleEvents”. Using Event markup to describe something that is not an event creates a bad user experience, by triggering a rich result for something that will happen at a particular time, despite no actual event being present.

Here are some examples to illustrate the issue:

Since this creates a misleading user experience, we may take manual action on such cases. In case your website is affected by such a manual action, you will find a notification in your Search Console account. If a manual action is taken, it can result in structured data markup for the whole site not being used for search results.

While we’re specifically highlighting coupons and vouchers in this blog post, this applies equally to all other non-event items annotated with “event” markup and, more generally, to applying any type of markup to something other than the type of thing it is meant to describe.
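One way to avoid this in a publishing pipeline is to emit Event markup only for items that really are events. The sketch below refuses anything whose type is not `event` and requires a name, a start date, and a venue before building schema.org Event JSON-LD. The item fields (`kind`, `name`, `start`, `venue`, `address`) are hypothetical CMS field names, and Google’s developer documentation remains the authority on which properties are required.

```python
import json
from datetime import datetime


def event_jsonld(item: dict) -> str:
    """Build schema.org Event JSON-LD, refusing anything that is not an actual event."""
    # `kind`, `name`, `start`, `venue` and `address` are hypothetical CMS field names.
    if item.get("kind") != "event":
        raise ValueError(f"{item.get('name')!r} is a {item.get('kind')!r}, not an event: "
                         "do not mark it up as Event")
    missing = [field for field in ("name", "start", "venue") if not item.get(field)]
    if missing:
        raise ValueError(f"event is missing {', '.join(missing)}")
    location = {"@type": "Place", "name": item["venue"]}
    if item.get("address"):
        location["address"] = item["address"]
    data = {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": item["name"],
        "startDate": item["start"].isoformat(),  # the concrete date and time the event happens
        "location": location,
    }
    return json.dumps(data, indent=2)


concert = {"kind": "event", "name": "Spring Concert",
           "start": datetime(2018, 3, 21, 19, 30), "venue": "City Hall"}
print(event_jsonld(concert))
```

A coupon or voucher passed to `event_jsonld` raises `ValueError` instead of silently producing Event markup.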

For more information, please visit our developer documentation or stop by our Webmaster Forum in case you have additional questions!

Posted by Sven Naumann, Trust & Safety Search Team

Engaging users through high quality AMP pages

To improve our users’ experience with AMP results, we are making changes to how we enforce our policy on content parity with AMP. Starting Feb 1, 2018, the policy requires that the AMP page content be comparable to the (original) canonical page content…

Make your site’s complete jobs information accessible to job seekers

In June, we announced a new experience that put the convenience of Search into the hands of job seekers. Today, we are taking the next step in improving the job search experience on Google by adding a feature that shows estimated salary information from the web alongside job postings, as well as adding new UI features for users.

Salary information has been one of the most requested additions from job seekers. This helps people evaluate whether a job is a good fit, and is an opportunity for sites with estimated salary information to:

- Increase brand awareness: Estimated salary information shows a representative logo from the estimated salary provider.

- Get more referral traffic: Users can click through directly to salary estimate pages when salary information surfaces in job search results.

If your site provides salary estimates, you can take advantage of these changes in the following ways:

Specify actual salary information

Actual salary refers to the base salary information that is provided by the employer. If your site publishes job listings, you can add JobPosting structured data and populate the baseSalary property to be eligible for inclusion in job search results.

This salary information will be made available in both the list and the detail views.
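For illustration, here is a Python sketch that builds JobPosting JSON-LD with a `baseSalary` expressed as a schema.org `MonetaryAmount` wrapping a `QuantitativeValue`. The helper name and sample values are hypothetical, the set of allowed `unitText` values reflects our reading of the JobPosting documentation, and other properties a posting needs (such as `jobLocation` and `validThrough`) are left out to keep the example short.

```python
import json

# unitText values for baseSalary (assumption: confirm against the current JobPosting docs).
SALARY_UNITS = {"HOUR", "DAY", "WEEK", "MONTH", "YEAR"}


def job_posting_with_salary(title: str, description: str, date_posted: str,
                            employer: str, amount: float, currency: str, unit: str) -> dict:
    """Return a JobPosting dict whose baseSalary is the employer-provided (actual) salary."""
    if unit not in SALARY_UNITS:
        raise ValueError(f"unsupported salary unit: {unit}")
    return {
        "@context": "https://schema.org/",
        "@type": "JobPosting",
        "title": title,
        "description": description,
        "datePosted": date_posted,  # ISO 8601 date, e.g. "2017-11-15"
        "hiringOrganization": {"@type": "Organization", "name": employer},
        "baseSalary": {
            "@type": "MonetaryAmount",
            "currency": currency,  # ISO 4217 currency code, e.g. "USD"
            "value": {"@type": "QuantitativeValue", "value": amount, "unitText": unit},
        },
    }


posting = job_posting_with_salary("Barista", "<p>Make great coffee.</p>", "2017-11-15",
                                  "Example Cafe", 15.50, "USD", "HOUR")
# Embed the output in the job page inside <script type="application/ld+json">.
print(json.dumps(posting, indent=2))
```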

Provide estimated salary information

In cases where employers don’t provide actual salary, job seekers may see estimated salaries sourced from multiple partners for the same or similar occupation. If your site provides salary estimate information, you can add Occupation structured data to be eligible for inclusion in job search results.
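A salary-estimate page could describe its data along these lines. The sketch builds schema.org `Occupation` JSON-LD with an `estimatedSalary` given as a `MonetaryAmountDistribution` (10th percentile, median, and 90th percentile over one year) and an `occupationLocation`. The helper, the figures, and the choice of percentiles are illustrative assumptions, so confirm the required properties against Google’s Occupation documentation.

```python
import json


def occupation_estimate(name: str, city: str, currency: str,
                        p10: float, median: float, p90: float) -> dict:
    """Return Occupation JSON-LD describing an annual salary estimate for one location."""
    if not p10 <= median <= p90:
        raise ValueError("percentiles must be non-decreasing")
    return {
        "@context": "https://schema.org/",
        "@type": "Occupation",
        "name": name,
        "estimatedSalary": [{
            "@type": "MonetaryAmountDistribution",
            "currency": currency,
            "duration": "P1Y",  # ISO 8601 duration: the figures are per year
            "percentile10": p10,
            "median": median,
            "percentile90": p90,
        }],
        "occupationLocation": [{"@type": "City", "name": city}],
    }


# Illustrative figures only.
estimate = occupation_estimate("Software Developer", "Mountain View", "USD", 100000, 120000, 150000)
print(json.dumps(estimate, indent=2))
```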

Include exact location information

We’ve heard from users that accurate, street-level location information helps them focus on the opportunities that work best for them. Sites that publish job listings can provide this by using the jobLocation property in JobPosting structured data.
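A street-level `jobLocation` is a schema.org `Place` containing a `PostalAddress`. The sketch below builds one and attaches it to a minimal posting; the helper name and the address are made up for illustration.

```python
import json


def job_location(street: str, locality: str, region: str, postal_code: str, country: str) -> dict:
    """Return a street-level jobLocation value for JobPosting structured data."""
    return {
        "@type": "Place",
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": locality,   # city
            "addressRegion": region,       # state or province
            "postalCode": postal_code,
            "addressCountry": country,     # ISO 3166-1 alpha-2 code, e.g. "US"
        },
    }


posting = {
    "@context": "https://schema.org/",
    "@type": "JobPosting",
    "title": "Barista",
    "jobLocation": job_location("123 Example Street", "Springfield", "IL", "62701", "US"),
}
print(json.dumps(posting, indent=2))
```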

Validate your structured data

To double-check the structured data on your pages, we’ll be updating the Structured Data Testing Tool and the Search Console reports in the near future. In the meantime, you can monitor the performance of your job postings in Search Analytics. Stay tuned!
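Until the updated tools are available, a simple local pre-check can catch obvious gaps before you publish. The sketch below pulls the `application/ld+json` blocks out of a page with Python’s standard `html.parser` and lists JobPosting entries that are missing a few core properties. The property list is our assumption, and this is no substitute for Google’s own testing tool and Search Console reports.

```python
import json
from html.parser import HTMLParser
from typing import List

# Core JobPosting properties to look for (assumption: a subset of Google's documented requirements).
REQUIRED_JOB_PROPS = ("title", "description", "datePosted", "hiringOrganization", "jobLocation")


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[str] = []
        self._buffer: List[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self._buffer = []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.blocks.append("".join(self._buffer))
            self._inside = False


def check_job_postings(html: str) -> List[str]:
    """Return human-readable problems found in the page's JobPosting JSON-LD."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    problems = []
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            problems.append(f"invalid JSON-LD: {err}")
            continue
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict) and item.get("@type") == "JobPosting":
                problems.extend(f"JobPosting missing {prop}"
                                for prop in REQUIRED_JOB_PROPS if prop not in item)
    return problems
```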

Since launching this summer, we’ve seen over 60% growth in the number of companies with jobs showing on Google, and we have connected tens of millions of people to new job opportunities. We are excited to help users find jobs with salaries that meet their needs, and to route them to your site for more information. We invite sites that provide salary estimates to mark up their salary pages using the Occupation structured data. Should you have any questions regarding the use of structured data on your site, feel free to drop by our webmaster help forums.

Posted by Nick Zakrasek, Product Manager

Enabling more high quality content for users

In Google’s mission to organize the world’s information, we want to guide Google users to the highest quality content, the principle exemplified in our quality rater guidelines. Professional publishers provide the lion’s share of quality content that benefits users and we want to encourage their success.

The ecosystem is sustained via two main sources of revenue: ads and subscriptions, with the latter requiring a delicate balance to be effective in Search. Typically subscription content is hidden behind paywalls, so that users who don’t have a subscription don’t have access. Our evaluations have shown that users who are not familiar with the high quality content behind a paywall often turn to other sites offering free content. It is difficult to justify a subscription if one doesn’t already know how valuable the content is, and in fact, our experiments have shown that a portion of users shy away from subscription sites. Therefore, it is essential that sites provide some amount of free sampling of their content so that users can learn how valuable their content is.

The First Click Free (FCF) policy for both Google web search and News was designed to address this issue. It offers promotion and discovery opportunities for publishers with subscription content, while giving Google users an opportunity to discover that content. Over the past year, we have worked with publishers to investigate the effects of FCF on user satisfaction and on the sustainability of the publishing ecosystem. We found that while FCF is a reasonable sampling model, publishers are in a better position to determine what specific sampling strategy works best for them. Therefore, we are removing FCF as a requirement for Search, and we encourage publishers to experiment with different free sampling schemes, as long as they stay within the updated webmaster guidelines. We call this Flexible Sampling.

One of the original motivations for FCF was to address the issues surrounding cloaking, where the content served to Googlebot differs from the content served to users. Spammers often try to game search engines by showing interesting content to the search engine, say healthy food recipes, but then showing users an offer for diet pills. This “bait and switch” scheme creates a bad user experience because users do not get the content they expected. Sites with paywalls are strongly encouraged to apply the new structured data to their pages, because without it, the paywall may be interpreted as a form of cloaking, and the pages would then be removed from search results.
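Assuming the structured data meant here is the schema.org pattern Google documents for paywalled content, a page marks the article as not free with `isAccessibleForFree` and points at the paywalled section with a `WebPageElement` and a `cssSelector`. The helper name, the headline, and the `.paywall` selector below are hypothetical.

```python
import json


def paywalled_article(headline: str, paywall_selector: str) -> str:
    """Return NewsArticle JSON-LD flagging the part of the page that sits behind a paywall."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "isAccessibleForFree": False,  # the article as a whole is not free
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            # Must select the element that wraps the paywalled text on the page.
            "cssSelector": paywall_selector,
        },
    }
    return json.dumps(data, indent=2)


print(paywalled_article("Example headline", ".paywall"))
```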

Based on our investigations, we have created detailed best practices for implementing flexible sampling. There are two types of sampling we advise: metering, which provides users with a quota of free articles to consume, after which paywalls will start appearing; and lead-in, which offers a portion of an article’s content without it being shown in full.

For metering, we think that monthly (rather than daily) metering provides more flexibility and a safer environment for testing. The user impact of changing from one integer value to the next is less significant at, say, 10 monthly samples than at 3 daily samples. All publishers and their audiences are different, so there is no single value for optimal free sampling across publishers. However, we recommend that publishers start by providing 10 free clicks per month to Google search users in order to preserve a good user experience for new potential subscribers. Publishers should then experiment to optimize the tradeoff between discovery and conversion that works best for their businesses.
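A monthly meter like the one described above can be sketched as a per-user counter keyed by calendar month, starting from the suggested 10 free articles. The class name is hypothetical; it keeps state in memory and does not charge repeat views of the same article, both simplifying assumptions (a real site would persist the count and set its own rules). The caller decides which visits go through the meter, for example only those arriving from Google Search.

```python
from collections import defaultdict
from datetime import date
from typing import DefaultDict, Set, Tuple


class MonthlyMeter:
    """Allows each user a fixed number of free articles per calendar month."""

    def __init__(self, free_per_month: int = 10) -> None:
        self.free_per_month = free_per_month
        # (user id, year, month) -> ids of the articles read for free that month
        self._reads: DefaultDict[Tuple[str, int, int], Set[str]] = defaultdict(set)

    def allow(self, user_id: str, article_id: str, today: date) -> bool:
        """Return True to show the full article, False to show the paywall."""
        reads = self._reads[(user_id, today.year, today.month)]
        if article_id in reads:
            return True   # re-opening an article already counted uses no extra quota
        if len(reads) >= self.free_per_month:
            return False  # quota used up: paywall until the next calendar month
        reads.add(article_id)
        return True


meter = MonthlyMeter(free_per_month=10)
print(meter.allow("reader-1", "article-42", date.today()))
```

Because the key includes the month, the quota resets automatically when the calendar month changes, which is the smoother behavior the monthly (rather than daily) recommendation is after.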

Lead-in is generally implemented as truncated content, such as the first few sentences or 50-100 words of the article. Lead-in allows users a taste of how valuable the content may be. Compared to a page with completely blocked content, lead-in clearly provides more utility and added value to users.
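Truncating to a word count is simple to implement. The sketch below keeps the first 50 words, the low end of the range mentioned above, and marks the cut with "..."; the function name and the plain-text input are assumptions, and because it splits on whitespace it also collapses paragraph breaks.

```python
def lead_in(article_text: str, max_words: int = 50) -> str:
    """Return the first `max_words` words of an article, marking any truncation with "..."."""
    words = article_text.split()
    if len(words) <= max_words:
        return " ".join(words)
    return " ".join(words[:max_words]) + " ..."


print(lead_in("Publishers can show the opening of an article to readers who are not subscribed."))
```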

We are excited by this change as it allows the growth of the premium content ecosystem, which ultimately benefits users. We look forward to the prospect of serving users more high quality content!

Posted by Cody Kwok, Principal Engineer

How to move from m-dot URLs to a responsive site

With more sites moving towards responsive web design, many webmasters have questions about migrating from separate mobile URLs, frequently known as “m-dot URLs”, to responsive web design. Here are some recommendations on how to move from separate URLs to one responsive URL in a way that gives your site the best chance of performing well in Google’s search results.

Moving to responsive sites in a Googlebot-friendly way

Once your responsive site is ready, moving is something you can definitely do with just a bit of forethought. Assuming your desktop URLs stay the same, all you have to do is configure 301 redirects from the mobile URLs to the responsive URLs.

Here are the detailed steps:

- Get your responsive site ready

- Configure 301 redirects on the old mobile URLs to point to the responsive versions (the new pages). These redirects need to be done on a per-URL basis, individually from each mobile URL to its corresponding responsive URL.

- Remove any mobile-URL-specific configuration your site might have, such as conditional redirects or a Vary HTTP header.

- As a good practice, set up rel=canonical on the responsive URLs pointing to themselves (self-referential canonicals).
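The redirect and canonical steps can be sketched for a site whose mobile and responsive pages share the same paths. This is a minimal Python (WSGI) sketch under that assumption. The hosts `m.example.com` and `www.example.com` are hypothetical placeholders, and `responsive_url`, `canonical_tag` and `mobile_redirect_app` are illustrative names. If your mobile paths differ from your desktop paths, you would replace `responsive_url` with an explicit per-URL lookup table.

```python
import html
from urllib.parse import quote, urlsplit, urlunsplit

MOBILE_HOST = "m.example.com"        # hypothetical old m-dot host
RESPONSIVE_HOST = "www.example.com"  # hypothetical responsive host


def responsive_url(mobile_url: str) -> str:
    """Map one m-dot URL to the responsive URL with the same path and query."""
    parts = urlsplit(mobile_url)
    return urlunsplit((parts.scheme, RESPONSIVE_HOST, parts.path, parts.query, parts.fragment))


def canonical_tag(page_url: str) -> str:
    """Self-referential rel=canonical for a responsive page (href is HTML-escaped)."""
    return f'<link rel="canonical" href="{html.escape(page_url, quote=True)}">'


def mobile_redirect_app(environ, start_response):
    """WSGI app for the old m-dot host: 301 each URL to its own responsive URL."""
    scheme = environ.get("wsgi.url_scheme", "https")
    # PATH_INFO is decoded as latin-1 by WSGI; re-encode and percent-quote it.
    path = quote((environ.get("PATH_INFO") or "/").encode("latin-1"))
    query = environ.get("QUERY_STRING", "")
    old_url = urlunsplit((scheme, MOBILE_HOST, path, query, ""))
    start_response("301 Moved Permanently", [("Location", responsive_url(old_url))])
    return [b""]
```

Redirecting each URL to its own counterpart, rather than sending every mobile URL to the homepage, is what keeps the mapping one-to-one as the steps describe.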

If you’re currently using dynamic serving and want to move to responsive design, you don’t need to add or change any redirects.

Some benefits of moving to responsive web design

Moving to a responsive site should make maintenance and reporting much easier for you down the road. Aside from no longer needing to manage separate URLs for all pages, it will also make it much easier to adopt practices and technologies such as hreflang for internationalization, AMP for speed, structured data for advanced search features and more.

As always, if you need more help you can ask a question in our webmaster forum.

Posted by Cherry Prommawin, Webmaster Relations

Introducing Our New International Webmaster Blogs!

Join us in welcoming the latest additions to the Webmasters community:

नमस्ते (Hello) Webmasters in Hindi!

Добро пожаловать (Welcome) Webmasters in Russian!

Hoş geldiniz (Welcome) Webmasters in Turkish!

สวัสดีค่ะ (Hello) Webmasters in Thai!

Xin chào (Hello) Webmasters in Vietnamese!

We will be sharing webmaster-related updates in our current and new blogs to make sure you have a place to follow the latest launches, updates and changes in Search in your language! We will share links to relevant Help resources, educational content and events as they become available.

Just a reminder, here are some of the resources that we have available in multiple languages:

- Google.com/webmasters – documentation, support channels, tools (including a link to Search Console) and learning materials.

- Help Center – tips and tutorials on using Search Console, answers to frequently asked questions and step-by-step guides.

- Help forum – ask your questions and get advice from the Webmaster community

- YouTube Channel – recordings of Hangouts on Air in different languages

- G+ community – another place we announce and share our Hangouts On Air

Testing tools:

- PageSpeed Insights – actionable insights on how to increase your site’s performance

- Mobile-Friendly Test – identify areas where you can improve your site’s performance on mobile devices

- Structured Data Testing Tool – preview and test your structured data markup

Some other valuable resources (English-only):

- Developer documentation on Search – a great resource where you can find feature guides, code labs, videos and links to more useful tools for webmasters.

If you have webmaster-specific questions, check our event calendar for the next hangout session or live event! Alternatively, you can post your questions to one of the local help forums, where our talented Product Experts from the TC program will try to answer them. Our Experts are product enthusiasts who have earned the distinction of “Top Contributor” or “Rising Star” by sharing their knowledge on the Google Help Forums.

If you have suggestions, please let us know in the comments below. We look forward to working with you in your language!