Googlebot evergreen rendering in our testing tools

Today we updated most of our testing tools to use the evergreen Chromium renderer. This affects tools such as the mobile-friendly test and the URL inspection tool in Search Console. In this post we look into what this means and …

What webmasters should know about Google’s “core updates”

Sometimes, an update may be more noticeable. We aim to confirm such updates when we feel there is actionable information that webmasters, content producers or others might take in relation to them. For example, when our “Speed Update” happened, we gave months of advance notice and advice.

Several times a year, we make significant, broad changes to our search algorithms and systems. We refer to these as “core updates.” They’re designed to ensure that overall, we’re delivering on our mission to present relevant and authoritative content to searchers. These core updates may also affect Google Discover.

We confirm broad core updates because they typically produce some widely notable effects. Some sites may note drops or gains during them. We know those with sites that experience drops will be looking for a fix, and we want to ensure they don’t try to fix the wrong things. Moreover, there might not be anything to fix at all.

Core updates & reassessing content

There’s nothing wrong with pages that may perform less well in a core update. They haven’t violated our webmaster guidelines or been subjected to a manual or algorithmic action, as can happen to pages that do violate those guidelines. In fact, there’s nothing in a core update that targets specific pages or sites. Instead, the changes are about improving how our systems assess content overall. These changes may cause some pages that were previously under-rewarded to do better.

One way to think of how a core update operates is to imagine you made a list of the top 100 movies in 2015. A few years later in 2019, you refresh the list. It’s going to naturally change. Some new and wonderful movies that never existed before will now be candidates for inclusion. You might also reassess some films and realize they deserved a higher place on the list than they had before.

The list will change, and films previously higher on the list that move down aren’t bad. There are simply more deserving films that are coming before them.

Focus on content

As explained, pages that drop after a core update don’t have anything wrong to fix. That said, we understand that those who do less well after a core update may still feel they need to do something. We suggest focusing on ensuring you’re offering the best content you can. That’s what our algorithms seek to reward.

A starting point is to revisit the advice we’ve offered in the past on how to self-assess if you believe you’re offering quality content. We’ve updated that advice with a fresh set of questions to ask yourself about your content:

- Does the content provide original information, reporting, research or analysis?

- Does the content provide a substantial, complete or comprehensive description of the topic?

- Does the content provide insightful analysis or interesting information that is beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources and instead provide substantial additional value and originality?

- Does the headline and/or page title provide a descriptive, helpful summary of the content?

- Does the headline and/or page title avoid being exaggerated or shocking in nature?

- Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

- Would you expect to see this content in or referenced by a printed magazine, encyclopedia or book?

- Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, or background about the author or the site that publishes it (for example, through links to an author page or a site’s About page)?

- If you researched the site producing the content, would you come away with an impression that it is well trusted or widely recognized as an authority on its topic?

- Is this content written by an expert or enthusiast who demonstrably knows the topic well?

- Is the content free from easily verified factual errors?

- Would you feel comfortable trusting this content for issues relating to your money or your life?

- Is the content free from spelling or stylistic issues?

- Was the content produced well, or does it appear sloppy or hastily produced?

- Is the content mass-produced by or outsourced to a large number of creators, or spread across a large network of sites, so that individual pages or sites don’t get as much attention or care?

- Does the content have an excessive amount of ads that distract from or interfere with the main content?

- Does the content display well on mobile devices?

- Does the content provide substantial value when compared to other pages in search results?

- Does the content seem to be serving the genuine interests of visitors to the site, or does it seem to exist solely because someone is attempting to guess what might rank well in search engines?

Beyond asking yourself these questions, consider having others you trust but who are unaffiliated with your site provide an honest assessment.

Also consider an audit of the drops you may have experienced. What pages were most impacted and for what types of searches? Look closely at these to understand how they’re assessed against some of the questions above.
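The audit suggested above can be roughed out in a few lines of Python. This is a minimal sketch, not a Google tool: it assumes you have exported clicks per page for a period before and a period of the same length after the update (for example from the Search Console Performance report) and loaded them into two dicts. The function name `rank_drops` and the input shape are hypothetical.

```python
from typing import Dict, List, Tuple

Row = Tuple[str, int, int, int]


def rank_drops(before: Dict[str, int], after: Dict[str, int], top_n: int = 10) -> List[Row]:
    """Return (page, clicks_before, clicks_after, change), largest losses first.

    `before` and `after` map page URL -> clicks for two comparable periods
    (hypothetical input; how you export and load it is up to you).
    """
    rows = []
    for page in set(before) | set(after):
        b = before.get(page, 0)  # a page missing from one period counts as 0 clicks
        a = after.get(page, 0)
        rows.append((page, b, a, a - b))
    # Most negative change first; the URL breaks ties so the order is stable.
    rows.sort(key=lambda row: (row[3], row[0]))
    return rows[:top_n]


before = {"/guide": 1200, "/news": 300, "/about": 50}
after = {"/guide": 700, "/news": 320, "/about": 45}
for page, b, a, change in rank_drops(before, after):
    print(f"{page}: {b} -> {a} ({change:+d})")
```

Running the same comparison on queries instead of pages would help with the second half of the question: for what types of searches the drops happened.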

Get to know the quality rater guidelines & E-A-T

Another resource for advice on great content is to review our search quality rater guidelines. Raters are people who give us insights into whether our algorithms seem to be providing good results, a way to help confirm our changes are working well.

It’s important to understand that search raters have no control over how pages rank. Rater data is not used directly in our ranking algorithms. Rather, we use it the way a restaurant might use feedback cards from diners. The feedback helps us know if our systems seem to be working.

If you understand how raters learn to assess good content, that might help you improve your own content. In turn, you might do better in Search.

In particular, raters are trained to understand whether content has what we call strong E-A-T. That stands for Expertise, Authoritativeness and Trustworthiness. Reading the guidelines may help you assess how your content is doing from an E-A-T perspective and what improvements to consider.

Here are a few articles written by third parties who share how they’ve used the guidelines as advice to follow:

- E-A-T and SEO, from Marie Haynes

- Google Updates Quality Rater Guidelines Targeting E-A-T, Page Quality & Interstitials, from Jennifer Slegg

- Leveraging E-A-T for SEO Success, presentation from Lily Ray

- Google’s Core Algorithm Updates and The Power of User Studies: How Real Feedback From Real People Can Help Site Owners Surface Website Quality Problems (And More), from Glenn Gabe

- Why E-A-T & Core Updates Will Change Your Content Approach, from Fajr Muhammad

Recovering and more advice

A common question after a core update is how long it takes for a site to recover if it improves its content.

Broad core updates tend to happen every few months. Content that was impacted by one might not recover – assuming improvements have been made – until the next broad core update is released.

However, we’re constantly making updates to our search algorithms, including smaller core updates. We don’t announce all of these because they’re generally not widely noticeable. Still, when released, they can cause content to recover if improvements warrant.

Do keep in mind that improvements made by site owners aren’t a guarantee of recovery, nor do pages have any static or guaranteed position in our search results. If there’s more deserving content, that will continue to rank well with our systems.

It’s also important to understand that search engines like Google do not understand content the way human beings do. Instead, we look for signals we can gather about content and understand how those correlate with how humans assess relevance. How pages link to each other is one well-known signal that we use. But we use many more, which we don’t disclose to help protect the integrity of our results.

We test any broad core update before it goes live, including gathering feedback from the aforementioned search quality raters, to see whether the way we’re weighing signals seems beneficial.

Of course, no improvement we make to Search is perfect. This is why we keep updating. We take in more feedback, do more testing and keep working to improve our ranking systems. This work on our end can mean that content might recover in the future, even if a content owner makes no changes. In such situations, our continued improvements might assess such content more favorably.

We hope the guidance offered here is helpful. You’ll also find plenty of advice about good content with the resources we offer from Google Webmasters, including tools, help pages and our forums. Learn more here.

Posted by Danny Sullivan, Public Liaison for Search

Helping publishers and users get more out of visual searches on Google Images with AMP

Google Images has made a series of changes to help people explore, learn and do more through visual search. An important element of visual search is the ability for users to scan many ideas before coming to a decision, whether it’s purchasing a product, learning more about a stylish room, or finding instructions for a DIY project. Often this involves loading many web pages, which can slow down a search considerably and prevent users from completing a task.

As previewed at Google I/O, we’re launching a new AMP-powered feature in Google Images on the mobile web, Swipe to Visit, which makes it faster and easier for users to browse and visit web pages. After a Google Images user selects an image to view on a mobile device, they will get a preview of the website header, which can be easily swiped up to load the web page instantly.

Swipe to Visit uses AMP’s prerender capability to show a preview of the page displayed at the bottom of the screen. When a user swipes up on the preview, the web page is displayed instantly and the publisher receives a pageview. The speed and ease of this experience makes it more likely for users to visit a publisher’s site, while still allowing users to continue their browsing session.

Publishers who support AMP don’t need to take any additional action for their sites to appear in Swipe to Visit on Google Images. Publishers who don’t support AMP can learn more about getting started with AMP here. In the coming weeks, publishers can also view their traffic data from AMP in Google Images in Search Console’s performance report for Google Images, in a new search area named “AMP on Image result”.

We look forward to continuing to support the Google Images ecosystem with features that help users and publishers alike.

Posted by Assaf Broitman, Google Images PM

A note on unsupported rules in robots.txt

Why isn’t a code handler for other rules like crawl-delay included in the code?

The internet draft we published yesterday provides an extensible architecture for rules that are not part of the standard. This means that if a crawler wanted to support their own line like “unicorns: allowed”, they could. To demonstrate how this would look in a parser, we included a very common line, sitemap, in our open-source robots.txt parser.
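To make the extensible design more concrete, here is a minimal Python sketch of the general idea. It is not Google's open-source parser: every `key: value` line is tokenized the same way, and lines whose key the crawler does not recognize are set aside for optional handlers (such as one for `sitemap`) instead of breaking parsing. The set of known keys and the name `parse_robots_lines` are assumptions for illustration.

```python
from typing import List, Tuple

# Assumption for this sketch: the only rules this crawler acts on directly.
KNOWN_KEYS = {"user-agent", "allow", "disallow"}


def parse_robots_lines(text: str) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Split robots.txt into (known rules, extension lines), each as (key, value)."""
    known, extensions = [], []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # '#' starts a comment
        if ":" not in line:
            continue  # blank or malformed line: skip it rather than fail
        key, value = line.split(":", 1)  # split on the first colon only (URLs contain ':')
        key, value = key.strip().lower(), value.strip()
        (known if key in KNOWN_KEYS else extensions).append((key, value))
    return known, extensions


robots = """\
User-agent: *
Disallow: /private/  # keep crawlers out
Sitemap: https://www.example.com/sitemap.xml
unicorns: allowed
"""
known, extensions = parse_robots_lines(robots)
# An extension handler picks out only the lines it understands:
sitemaps = [value for key, value in extensions if key == "sitemap"]
print(sitemaps)
```

A crawler that doesn't care about `unicorns` simply never looks at that entry, which is the point of keeping unknown lines out of the core rule set.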

While open-sourcing our parser library, we analyzed the usage of robots.txt rules. In particular, we focused on rules unsupported by the internet draft, such as crawl-delay, nofollow, and noindex. Since these rules were never documented by Google, naturally, their usage in relation to Googlebot is very low. Digging further, we saw their usage was contradicted by other rules in all but 0.001% of all robots.txt files on the internet. These mistakes hurt websites’ presence in Google’s search results in ways we don’t think webmasters intended.

In the interest of maintaining a healthy ecosystem and preparing for potential future open source releases, we’re retiring all code that handles unsupported and unpublished rules (such as noindex) on September 1, 2019. For those of you who relied on the noindex indexing directive in the robots.txt file, which controls crawling, there are a number of alternative options:

- Noindex in robots meta tags: Supported both in the HTTP response headers and in HTML, the noindex directive is the most effective way to remove URLs from the index when crawling is allowed.

- 404 and 410 HTTP status codes: Both status codes mean that the page does not exist, which will drop such URLs from Google’s index once they’re crawled and processed.

- Password protection: Unless markup is used to indicate subscription or paywalled content, hiding a page behind a login will generally remove it from Google’s index.

- Disallow in robots.txt: Search engines can only index pages that they know about, so blocking the page from being crawled usually means its content won’t be indexed. While the search engine may also index a URL based on links from other pages, without seeing the content itself, we aim to make such pages less visible in the future.

- Search Console Remove URL tool: The tool is a quick and easy method to remove a URL temporarily from Google’s search results.
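The first two alternatives are server-side changes. The following minimal sketch shows them as a standard-library WSGI app; the paths `/old-page` and `/internal-search` are hypothetical, and a real site would usually configure this in its own web server or framework.

```python
# Hypothetical path sets for illustration only.
RETIRED_PATHS = {"/old-page"}         # content removed for good
NOINDEX_PATHS = {"/internal-search"}  # crawlable, but should stay out of the index


def app(environ, start_response):
    """Minimal WSGI app illustrating a 410 status and an X-Robots-Tag: noindex header."""
    path = environ.get("PATH_INFO", "/")
    if path in RETIRED_PATHS:
        # 410 Gone (404 works too): the URL drops from the index once recrawled.
        start_response("410 Gone", [("Content-Type", "text/plain; charset=utf-8")])
        return [b"This page has been removed."]
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path in NOINDEX_PATHS:
        # HTTP-header form of <meta name="robots" content="noindex">.
        # It is only seen if robots.txt allows this URL to be crawled.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"<!doctype html><title>Example</title>"]
```

To try it locally, `wsgiref.simple_server.make_server("localhost", 8000, app).serve_forever()` serves the app. Because robots.txt controls crawling, a URL that is disallowed there is never fetched, so its `noindex` header would never be seen.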

For more guidance about how to remove information from Google’s search results, visit our Help Center. If you have questions, you can find us on Twitter and in our Webmaster Community, both offline and online.

Posted by Gary

Google’s robots.txt parser is now open source

For 25 years, the Robots Exclusion Protocol (REP) was only a de-facto standard. This had frustrating implications sometimes. On one hand, for webmasters, it meant uncertainty in corner cases, like when their text editor included BOM characters in their…

Formalizing the Robots Exclusion Protocol Specification

In 1994, Martijn Koster (a webmaster himself) created the initial standard after crawlers were overwhelming his site. With more input from other webmasters, the REP was born, and it was adopted by search engines to help website owners manage their server resources more easily.

However, the REP was never turned into an official Internet standard, which means that developers have interpreted the protocol somewhat differently over the years. And since its inception, the REP hasn’t been updated to cover today’s corner cases. This is a challenging problem for website owners because the ambiguous de-facto standard made it difficult to write the rules correctly.

We wanted to help website owners and developers create amazing experiences on the internet instead of worrying about how to control crawlers. Together with the original author of the protocol, webmasters, and other search engines, we’ve documented how the REP is used on the modern web, and submitted it to the IETF.

The proposed REP draft reflects over 20 years of real-world experience of relying on robots.txt rules, used both by Googlebot and other major crawlers, as well as about half a billion websites that rely on REP. These fine-grained controls give the publisher the power to decide what they’d like to be crawled on their site and potentially shown to interested users. It doesn’t change the rules created in 1994, but rather defines essentially all undefined scenarios for robots.txt parsing and matching, and extends it for the modern web. Notably:

- Any URI-based transfer protocol can use robots.txt. For example, it’s no longer limited to HTTP and can be used for FTP or CoAP as well.

- Developers must parse at least the first 500 kibibytes of a robots.txt. Defining a maximum file size ensures that connections are not open for too long, alleviating unnecessary strain on servers.

- A new maximum caching time of 24 hours, or the cache directive value if available, gives website owners the flexibility to update their robots.txt whenever they want, while crawlers aren’t overloading websites with robots.txt requests. For example, in the case of HTTP, Cache-Control headers could be used for determining caching time.

- The specification now provisions that when a previously accessible robots.txt file becomes inaccessible due to server failures, known disallowed pages are not crawled for a reasonably long period of time.
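Two of these rules lend themselves to a short sketch. The code below is a minimal Python illustration, not a reference implementation: it keeps only the first 500 KiB of a fetched robots.txt and computes how long to reuse a cached copy. Treating a Cache-Control `max-age` as replacing the 24-hour default is one possible reading of the rule above and is an assumption; the function names are hypothetical.

```python
import re
from typing import Optional

MAX_ROBOTS_BYTES = 500 * 1024         # 500 kibibytes = 512,000 bytes
DEFAULT_CACHE_SECONDS = 24 * 60 * 60  # 24 hours


def truncate_robots(body: bytes) -> bytes:
    """Keep only the part of robots.txt that a parser is required to handle."""
    return body[:MAX_ROBOTS_BYTES]


def cache_lifetime(cache_control: Optional[str]) -> int:
    """Seconds to reuse a fetched robots.txt before fetching it again.

    Assumption: a Cache-Control max-age, when present, replaces the
    24-hour default; otherwise the default applies.
    """
    if cache_control:
        # Cache-Control directive names are case-insensitive.
        match = re.search(r"\bmax-age=(\d+)", cache_control, re.IGNORECASE)
        if match:
            return int(match.group(1))
    return DEFAULT_CACHE_SECONDS
```

Bounding both the bytes parsed and the reuse time keeps a crawler from holding connections open on huge files or refetching robots.txt on every request, which is the motivation the draft gives.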

Additionally, we’ve updated the augmented Backus–Naur form in the internet draft to better define the syntax of robots.txt, which is critical for developers to parse the lines.

RFC stands for Request for Comments, and we mean it: we uploaded the draft to IETF to get feedback from developers who care about the basic building blocks of the internet. As we work to give web creators the controls they need to tell us how much information they want to make available to Googlebot, and by extension, eligible to appear in Search, we have to make sure we get this right.

If you’d like to drop us a comment, ask us questions, or just say hi, you can find us on Twitter and in our Webmaster Community, both offline and online.

Posted by Henner Zeller, Lizzi Harvey, and Gary

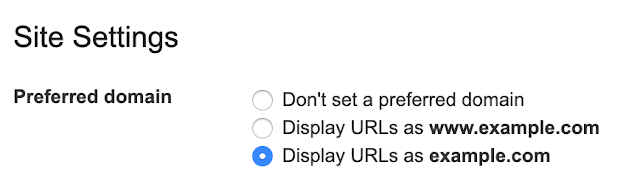

Bye Bye Preferred Domain setting

It’s common for a website to have the same content on multiple URLs. For example, it might have the same content on http://example.com/ as on https://www.example.com/index.html. To make things easier, when our systems recognize that, we’ll pick one URL as the “canonical” for Search. You can still tell us your preference in multiple ways if there’s something specific you want us to pick (see below). But if you don’t have a preference, we’ll choose the best option we find. Note that with the deprecation of the preferred domain setting, we will no longer use any existing Search Console preferred domain configuration.

You can find detailed explanations on how to tell us your preference in the Consolidate duplicate URLs help center article. Here are some of the options available to you:

- Use rel="canonical" link tag on HTML pages

- Use rel="canonical" HTTP header

- Use a sitemap

- Use 301 redirects for retired URLs
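Using the example above, here is a minimal sketch of two of these options (a 301 redirect and a rel="canonical" HTTP header), assuming https://www.example.com/ is the preferred URL. The `respond` function and its return shape are hypothetical; in practice this logic usually lives in your web server or framework configuration.

```python
from typing import List, Tuple

CANONICAL_ORIGIN = "https://www.example.com"  # assumed preferred scheme + host


def respond(scheme: str, host: str, path: str) -> Tuple[str, List[Tuple[str, str]]]:
    """Return (status, headers) for a request to scheme://host + path."""
    if f"{scheme}://{host}" != CANONICAL_ORIGIN:
        # Duplicate origin (e.g. http://example.com): redirect permanently.
        return "301 Moved Permanently", [("Location", CANONICAL_ORIGIN + path)]
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path == "/index.html":
        # Same content as "/": declare the preferred URL in a Link header.
        # The HTML equivalent is <link rel="canonical" href="https://www.example.com/">.
        headers.append(("Link", f'<{CANONICAL_ORIGIN}/>; rel="canonical"'))
    return "200 OK", headers
```

The redirect removes the duplicate outright, while the canonical header leaves both URLs reachable and only states which one you prefer.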

Send us any feedback either through Twitter or our forum.

Posted by Daniel Waisberg, Search Advocate

Webmaster Conference: an event made for you

Over the years we attended hundreds of conferences, we spoke to thousands of webmasters, and recorded hundreds of hours of videos to help web creators find information about how to perform better in Google Search results. Now we’d like to go further: h…

A video series on SEO myths for web developers

We invited members of the SEO and web developer community to join us for a new video series called “SEO mythbusting”.

In this series, we discuss various topics around SEO from a developer’s perspective, how we can work to make the “SEO black box” more transparent, and what technical SEO might look like as the web keeps evolving. We have already published a few episodes:

- Web developer’s 101

- A look at Googlebot

- Microformats and structured data

- JavaScript and SEO

We have a few more episodes for you, and we will launch the next episodes weekly on the Google Webmasters YouTube channel, so don’t forget to subscribe to stay in the loop. You can also find all published episodes in this YouTube playlist. We look forward to hearing your feedback, topic suggestions, and guest recommendations in the YouTube comments as well as on our Twitter account!

Posted by Martin Splitt, friendly web fairy & series host, WTA team

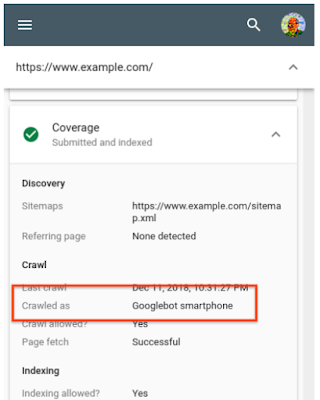

Mobile-First Indexing by default for new domains

Over the years since announcing mobile-first indexing – Google’s crawling of the web using a smartphone Googlebot – our analysis has shown that new websites are generally ready for this method of crawling. Accordingly, we’re happy to announce that mobile-first indexing will be enabled by default for all new websites (those previously unknown to Google Search) starting July 1, 2019. It’s fantastic to see that new websites are now generally showing users – and search engines – the same content on both mobile and desktop devices!

You can continue to check for mobile-first indexing of your website by using the URL Inspection Tool in Search Console. By looking at a URL on your website there, you’ll quickly see how it was last crawled and indexed. For older websites, we’ll continue monitoring and evaluating pages for their readiness for mobile-first indexing, and will notify them through Search Console once they’re seen as being ready. Since the default state for new websites will be mobile-first indexing, there’s no need to send a notification.

Using the URL Inspection Tool to check the mobile-first indexing status

Our guidance on making all websites work well for mobile-first indexing continues to be relevant, for new and existing sites. For existing websites we determine their readiness for mobile-first indexing based on parity of content (including text, images, videos, links), structured data, and other meta-data (for example, titles and descriptions, robots meta tags). We recommend double-checking these factors when a website is launched or significantly redesigned.
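A rough way to spot-check part of this parity is to compare the metadata of the desktop and mobile HTML for the same URL. This minimal sketch only compares the `<title>`, the meta description and the robots meta tag; it does not check text, images, videos, links or structured data, and how you obtain the two HTML versions (for example by fetching with a desktop and a smartphone user agent) is left as an assumption. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class HeadMetadata(HTMLParser):
    """Collect <title>, meta description and meta robots from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: Dict[str, Optional[str]] = {"title": "", "description": None, "robots": None}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (attrs.get("name") or "").lower()
            if name in ("description", "robots"):
                self.fields[name] = attrs.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.fields["title"] += data


def metadata(html: str) -> Dict[str, Optional[str]]:
    parser = HeadMetadata()
    parser.feed(html)
    parser.fields["title"] = parser.fields["title"].strip()
    return parser.fields


def parity_issues(desktop_html: str, mobile_html: str) -> List[str]:
    """Names of the metadata fields that differ between the two versions."""
    desktop, mobile = metadata(desktop_html), metadata(mobile_html)
    return [key for key in desktop if desktop[key] != mobile[key]]
```

An empty list only means these three fields match; the other factors listed above still need their own checks.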

While we continue to support responsive web design, dynamic serving, and separate mobile URLs for mobile websites, we recommend responsive web design for new websites. Because of issues and confusion we’ve seen from separate mobile URLs over the years, both from search engines and users, we recommend using a single URL for both desktop and mobile websites.

Mobile-first indexing has come a long way. We’re happy to see how the web has evolved from being focused on desktop, to becoming mobile-friendly, and now to being mostly crawlable and indexable with mobile user-agents! We realize it has taken a lot of work from your side to get there, and on behalf of our mostly-mobile users, we appreciate that. We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by John Mueller, Developer Advocate, Google Zurich

Search at Google I/O 2019

Google I/O is our yearly developer conference where we have the pleasure of announcing some exciting new Search-related features and capabilities. A good place to start is Google Search: State of the Union, which explains how to take advantage of the l…

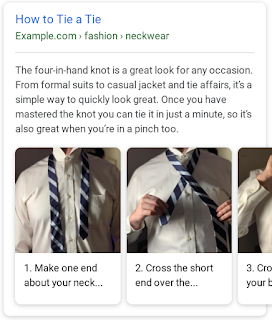

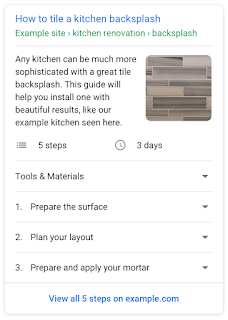

New in structured data: FAQ and How-to

In this post, we provide details to help you implement structured data on your FAQ and how-to pages in order to make your pages eligible to feature on Google Search as rich results and How-to Actions for the Assistant. We also show examples of how to monitor your search appearance with new Search Console enhancement reports.

Disclaimer: Google does not guarantee that your structured data will show up in search results, even if your page is marked up correctly. To determine whether a result gets a rich treatment, Google algorithms use a variety of additional signals to make sure that users see rich results when their content best serves the user’s needs. Learn more about structured data guidelines.

How-to on Search and the Google Assistant

How-to rich results provide users with richer previews of web results that guide users through step-by-step tasks. For example, if you provide information on how to tile a kitchen backsplash, tie a tie, or build a treehouse, you can add How-to structured data to your pages to enable the page to appear as a rich result on Search and a How-to Action for the Assistant.

Add structured data to the steps, tools, duration, and other properties to enable a How-to rich result for your content on the search page. If your page uses images or video for each step, make sure to mark up your visual content to enhance the preview and expose a more visual representation of your content to users. Learn more about the required and recommended properties you can use on your markup in the How-to developer documentation.
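As a minimal sketch, here is one way a page template might emit JSON-LD for a How-to, using the tie-a-tie example above. The property names (`name`, `totalTime`, `tool`, `step`) come from the schema.org HowTo type; which of them Google requires or recommends is defined in the How-to developer documentation, so treat this selection as an assumption. The function name `howto_script` is hypothetical.

```python
import json
from typing import List


def howto_script(name: str, total_time: str, tools: List[str], steps: List[str]) -> str:
    """Build a <script type="application/ld+json"> element for a schema.org HowTo."""
    data = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "totalTime": total_time,  # ISO 8601 duration, e.g. "PT5M" for five minutes
        "tool": [{"@type": "HowToTool", "name": tool} for tool in tools],
        "step": [{"@type": "HowToStep", "text": text} for text in steps],
    }
    # Escape "</" so text such as "</script>" cannot end the element early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(howto_script(
    "How to tie a tie",
    "PT5M",
    ["Necktie", "Mirror"],
    ["Drape the tie around your neck.", "Cross the wide end over the narrow end."],
))
```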

Your content can also start surfacing on the Assistant through new voice guided experiences. This feature lets you expand your content to new surfaces, to help users complete tasks wherever they are, and interactively progress through the steps using voice commands.

As shown in the Google Home Hub example below, the Assistant provides a conversational, hands-free experience that can help users complete a task. This is an incredibly lightweight way for web developers to expand their content to the Assistant. For more information about How-to for the Assistant, visit Build a How-to Guide Action with Markup.

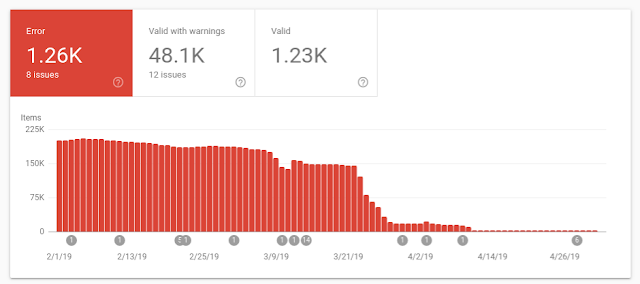

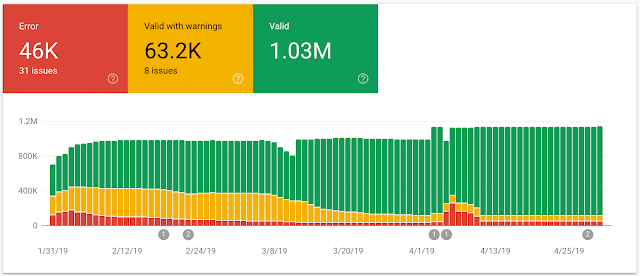

To help you monitor How-to markup issues, we launched a report in Search Console that shows all errors, warnings and valid items for pages with HowTo structured data. Learn more about how to use the report to monitor your results.


FAQ on Search and the Google Assistant

An FAQ page provides a list of frequently asked questions and answers on a particular topic. For example, an FAQ page on an e-commerce website might provide answers on shipping destinations, purchase options, return policies, and refund processes. By using FAQPage structured data, you can make your content eligible to display these questions and answers directly on Google Search and the Assistant, helping users quickly find answers to frequently asked questions.

FAQ structured data is only for official questions and answers; don’t add FAQ structured data on forums or other pages where users can submit answers to questions – in that case, use the Q&A Page markup.

You can learn more about implementation details in the FAQ developer documentation.
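Here is a minimal sketch of FAQPage markup for the e-commerce example above, generated with Python's `json` module. The nesting (`mainEntity` → `Question` → `acceptedAnswer` → `Answer`) follows the schema.org FAQPage type; the questions and answers are made-up sample content, the function name `faq_jsonld` is hypothetical, and the FAQ developer documentation remains the authority on required properties. The output would be embedded in a `<script type="application/ld+json">` element on the page.

```python
import json
from typing import List, Tuple


def faq_jsonld(pairs: List[Tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Sample content for illustration only.
print(faq_jsonld([
    ("Which countries do you ship to?", "We ship to the destinations listed on our shipping page."),
    ("How do I return an item?", "Start a return from your order history."),
]))
```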

To provide more ways for users to access your content, FAQ answers can also be surfaced on the Google Assistant. Your users can invoke your FAQ content by asking direct questions and get the answers that you marked up in your FAQ pages. For more information, visit Build an FAQ Action with Markup.

To help you monitor FAQ issues and search appearance, we also launched an FAQ report in Search Console that shows all errors, warnings and valid items related to your marked-up FAQ pages.

We would love to hear your thoughts on how FAQ or How-to structured data works for you. Send us any feedback either through Twitter or our forum.

Posted by Daniel Waisberg, Damian Biollo, Patrick Nevels, and Yaniv Loewenstein

The new evergreen Googlebot

What that means for you

Compared to the previous version, Googlebot now supports 1000+ new features, like:

- ES6 and newer JavaScript features

- IntersectionObserver for lazy-loading

- Web Components v1 APIs

You should check whether you’re transpiling or using polyfills specifically for Googlebot and, if so, evaluate whether this is still necessary. There are still some limitations, so check our troubleshooter for JavaScript-related issues and the video series on JavaScript SEO.

Any thoughts on this? Talk to us on Twitter, the webmaster forums, or join us for the online office hours.

Posted by Martin Splitt, friendly internet fairy at the Webmasters Trends Analyst team

Google I/O 2019 – What sessions should SEOs and webmasters watch?

However, you don’t have to physically attend the event to take advantage of this once-a-year opportunity: many conferences and talks are live streamed on YouTube for anyone to watch. Browse the full schedule of events, including a list of talks that we think will be interesting for webmasters to watch (all talks are in English). All the links shared below will bring you to pages with more details about each talk, and links to watch the sessions will display on the day of each event. All times are Pacific Time (California time).

- Tuesday, May 7th

  - 4pm – Building Successful Websites: Case Studies for Mature and Emerging Markets, with Aancha Bahadur, Charlie Croom, Matt Doyle, Rudra Kasturi, and Jesar Shah

- Wednesday, May 8th

  - 10.30am – Enhance Your Search and Assistant Presence with Structured Data, with Aylin Alroik and Will Leszczuk

  - 11.30am – Create App-like Experiences on Google Search and the Google Assistant, with Allen Harvey

  - 11.30am – Rapidly Building Better Web Experiences with AMP, with Adam Greenberg and Naina Raisinghani

  - 6.30pm – Unlocking New Capabilities for the Web, with Pete LePage and Thomas Steiner

- Thursday, May 9th

  - 10.30am – Google Search: State of the Union, with John Mueller and Martin Splitt

  - 1.30pm – Google Search and JavaScript Sites, with Zoe Clifford and Martin Splitt

Monitoring structured data with Search Console

This post focuses on what you can do with Search Console to monitor and make the most out of structured data for your site. In addition, we have some new features that will help you even more. Below are the new additions; read on to learn more about them.

- Unparsable structured data is a new report that aggregates structured data syntax errors.

- New enhancement reports for Sitelinks searchbox and Logo.

Monitoring overall structured data performance

Every time Search Console detects a new issue related to structured data on a website, we send an email to account owners – but if an existing issue gets worse, it won’t trigger an email, so it is still important for you to check your account periodically.

This is not something you need to do every day, but we recommend you check it once in a while to make sure everything is working as intended. If your website development has defined cycles, it might be a good practice to log in to Search Console after changes are made to the website to monitor your performance.

If you’d like to have an overall idea of all the errors for a specific structured data feature in your site, you can navigate to the Enhancements menu in the left sidebar and click a feature. You’ll find a summary of all errors and warnings, as well as the valid items.

As mentioned above, we added a new set of reports to help you understand more types of structured data on your site: Sitelinks searchbox and Logo. They are joining the existing set of reports on Recipe, Event, Job Posting and others. You can read more about the reports in the Search Console Help Center.

Here’s an example of an Enhancement report. Note that you can only see enhancements that have been detected on your pages. The report helps you with the following actions:

- Review the trends of errors, warnings and valid items: To view each status issue separately, click the colored boxes above the bar chart.

- Review warnings and errors per page: To see examples of pages which are currently affected by the issues, click a specific row below the bar chart.

Image: Enhancements report

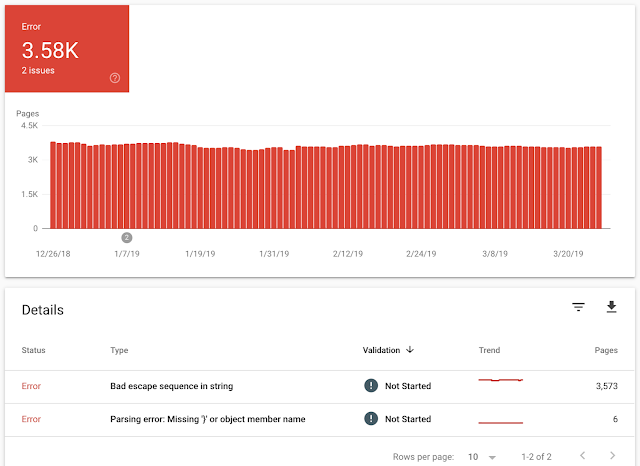

We are also happy to launch the Unparsable Structured Data report, which aggregates parsing issues such as structured data syntax errors that prevented Google from identifying the feature type. That is the reason these issues are aggregated here instead of the intended specific feature report.

Check this report to see if Google was unable to parse any of the structured data you tried to add to your site. Parsing issues could point you to lost opportunities for rich results for your site. Below is a screenshot showing what the report looks like. You can access the report directly and read more about it in our help center.

Image: Unparsable Structured Data report

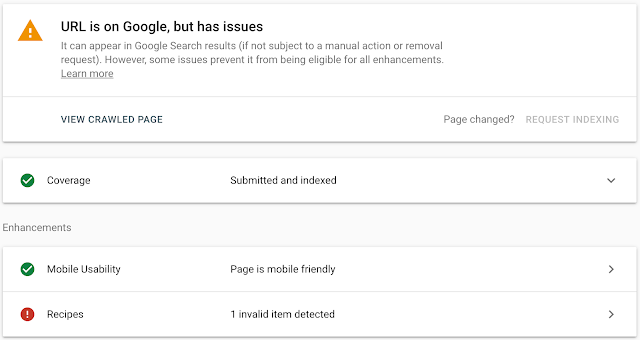
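Before waiting for the report, you could run a rough local check for one common cause of parsing issues: JSON-LD blocks that are not valid JSON. This minimal sketch uses only the standard library; it does not check Microdata or RDFa, and it does not replicate Google's parser, so a clean result is no guarantee. The class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser
from typing import List, Tuple


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[str] = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._capturing = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._capturing = False

    def handle_data(self, data):
        if self._capturing:
            self.blocks[-1] += data


def unparsable_blocks(html: str) -> List[Tuple[int, str]]:
    """Return (block index, error description) for each JSON-LD block that isn't valid JSON."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for index, block in enumerate(extractor.blocks):
        try:
            json.loads(block)
        except json.JSONDecodeError as err:
            problems.append((index, f"line {err.lineno}, column {err.colno}: {err.msg}"))
    return problems
```

For example, a trailing comma such as `{"@type": "Recipe",}` is reported, because JSON does not allow it; a syntax error like this is the kind of issue that keeps the feature type from being identified at all.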

Testing structured data on a URL level

To make sure your pages were processed correctly and are eligible for rich results, or to diagnose why some rich results are not surfacing for a specific URL, you can use the URL Inspection tool. This tool helps you understand areas of improvement at a URL level and gives you an idea of where to focus.

When you paste a URL into the search box at the top of Search Console, you can find what’s working properly and warnings or errors related to your structured data in the enhancements section, as seen below for Recipes.

Image: URL Inspection tool

In the screenshot above, there is an error related to Recipes. If you click Recipes, details about the error are displayed, and you can click the small chart icon to the right of the error to learn more about it.

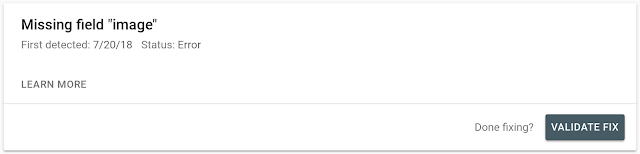

Once you understand and fix the error, you can click Validate Fix (see screenshot below) so that Google can start validating whether the issue is indeed fixed. When you click the Validate Fix button, Google immediately runs several tests. If your pages don’t pass these tests, Search Console notifies you right away. Otherwise, Search Console reprocesses the rest of the affected pages.

Image: Structured data error detail

We would love to hear your feedback on how Search Console has helped you and how it can help you even more with structured data. Send us feedback through Twitter or the Webmaster forum.

Posted by Daniel Waisberg, Search Advocate & Na’ama Zohary, Search Console team

Enriching Search Results Through Structured Data

We’ve worked hard to provide you with tools to understand how your websites are shown in Google Search results and whether there are issues you can fix. To give a complete overview of structured data, we decided to do a series exploring it. This post provides a quick intro and discusses some best practices; future posts will focus on how to use Search Console to succeed with structured data.

What is structured data?

Structured data is a common way of providing information about a page and its content – we recommend using the schema.org vocabulary for doing so. Google supports three different formats of in-page markup: JSON-LD (recommended), Microdata, and RDFa. Different search features require different kinds of structured data – you can learn more about these in our search gallery. Our developer documentation has more details on the basics of structured data.
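To make the recommended JSON-LD format concrete, here is a minimal Python sketch that serializes a schema.org object into the `<script type="application/ld+json">` element a page would embed. The `jsonld_script` helper and the Article values are illustrative assumptions, not an official tool; the developer documentation lists which properties each feature actually requires.

```python
import json


def jsonld_script(data: dict) -> str:
    """Serialize a schema.org object as a JSON-LD <script> element (hypothetical helper)."""
    # json.dumps guarantees valid JSON; rewriting "</" as the JSON escape "<\/"
    # keeps a string value such as "</script>" from closing the element early.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Illustrative values only: a minimal Article using the schema.org vocabulary.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Enriching Search Results Through Structured Data",
    "author": {"@type": "Person", "name": "Daniel Waisberg"},
}

print(jsonld_script(article))
```

Generating the markup from data, rather than hand-writing it, is one way to avoid the syntax errors that make structured data unparsable.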

Structured data helps Google’s systems understand your content more accurately, which is better for users because they get more relevant results. If you implement structured data, your pages may become eligible to be shown with an enhanced appearance in Google Search results.

Disclaimer: Google does not guarantee that your structured data will show up in search results, even if your page is marked up correctly. Using structured data enables a feature to be present; it does not guarantee that it will be present. Learn more about structured data guidelines.

Sites that use structured data see results

Over the years, we’ve seen growing adoption of structured data across the ecosystem. In general, rich results help users better understand how your pages are relevant to their searches, which can translate into success for websites. Here are some results showcased in our case studies gallery:

- Eventbrite leveraged event structured data and saw a 100% increase in the typical year-over-year growth of traffic from search.

- Jobrapido integrated with the job experience on Google Search and saw a 115% increase in organic traffic, a 270% increase in new user registrations from organic traffic, and a 15% lower bounce rate for Google visitors to job pages.

- Rakuten used the recipe search experience and saw a 2.7X increase in traffic from search engines and a 1.5X increase in session duration.

How can you use structured data?

There are a few ways your site could benefit from structured data. Below we discuss some examples grouped by different types of goals: increase brand awareness, highlight content, and highlight product information.

1. Increase brand awareness

One thing you can do to promote your brand with structured data is to take advantage of features such as Logo, Local business, and Sitelinks searchbox. In addition to adding structured data, you should verify your site for the Knowledge Panel and claim your business on Google My Business. Here is an example of a Knowledge Panel with a Logo.
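As a rough illustration of the Logo and Sitelinks searchbox features, the sketch below builds the two schema.org objects they are based on: an `Organization` with a `logo`, and a `WebSite` whose `SearchAction` points at the site’s own search page. The domain and the `/search?q=` URL pattern are hypothetical; check the feature documentation for the exact requirements before relying on this shape.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain

# Logo: an Organization with the site URL and the URL of a logo image.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "url": f"{SITE}/",
    "logo": f"{SITE}/images/logo.png",  # hypothetical image path
}

# Sitelinks searchbox: a WebSite whose SearchAction describes the site's own
# search URL. {search_term_string} is the placeholder for the user's query;
# the doubled braces are f-string escapes that produce single braces.
website = {
    "@context": "https://schema.org",
    "@type": "WebSite",
    "url": f"{SITE}/",
    "potentialAction": {
        "@type": "SearchAction",
        "target": f"{SITE}/search?q={{search_term_string}}",
        "query-input": "required name=search_term_string",
    },
}

for item in (organization, website):
    print('<script type="application/ld+json">')
    print(json.dumps(item, indent=2))
    print("</script>")
```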

2. Highlight content

If you publish content on the web, there are a number of features that can help promote your content and attract more users, depending on your industry, such as Article, Breadcrumb, Event, Job, Q&A, Recipe, Review and others. Here is an example of a recipe rich result.
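Breadcrumb is one of the simpler content features to mark up. The minimal sketch below assumes a `BreadcrumbList` whose `ListItem` entries carry a 1-based `position`, a `name`, and an `item` URL; the `breadcrumb_list` helper and the example trail are hypothetical.

```python
import json


def breadcrumb_list(trail: list[tuple[str, str]]) -> dict:
    """Build a schema.org BreadcrumbList from (name, url) pairs, root first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            # Positions are 1-based and follow the order of the trail.
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail for a dessert recipe page.
crumbs = breadcrumb_list([
    ("Recipes", "https://www.example.com/recipes"),
    ("Desserts", "https://www.example.com/recipes/desserts"),
])
print(json.dumps(crumbs, indent=2))
```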

3. Highlight product information

If you sell merchandise, you could add product structured data to your page, including price, availability, and review ratings. Here is how your product might show for a relevant search.
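A minimal sketch of product markup covering the three properties mentioned above: price and availability via a nested `Offer`, and review ratings via an `AggregateRating`. The helper name, the product, and its numbers are hypothetical, and the Product documentation may require or recommend further properties (such as an image).

```python
import json


def product_jsonld(name: str, price: str, currency: str, in_stock: bool,
                   rating: float, review_count: int) -> dict:
    """Hypothetical helper: a schema.org Product with an Offer and an AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,             # a string avoids float formatting surprises
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Illustrative values only.
pan = product_jsonld("Cast-iron pancake pan", "24.99", "USD", True, 4.6, 87)
print(json.dumps(pan, indent=2))
```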

Try it and let us know

Now that you understand the importance of structured data, try our codelab to learn how to add it to your pages. Stay tuned to learn more about structured data; in the coming posts we’ll discuss how to use Search Console to better analyze your efforts.

We would love to hear your thoughts and stories on how structured data works for you; send us any feedback either through Twitter or our forum.

Posted by Daniel Waisberg, Search Advocate

Instant-loading AMP pages from your own domain

Today we are rolling out support in Google Search’s AMP web results (also known as “blue links”) to link to signed exchanges, an emerging new feature of the web enabled by the IETF web packaging specification. Signed exchanges enable displaying the publisher’s domain when content is instantly loaded via Google Search. This is available in browsers that support the necessary web platform feature—as of the time of writing, Google Chrome—and availability will expand to include other browsers as they gain support (e.g. the upcoming version of Microsoft Edge).

Background on AMP’s instant loading

One of AMP’s biggest user benefits has been the unique ability to instantly load AMP web pages that users click on in Google Search. Near-instant loading works by requesting content ahead of time, balancing the likelihood of a user clicking on a result with device and network constraints–and doing it in a privacy-sensitive way.

We believe that privacy-preserving instant loading of web content is a transformative user experience, but to accomplish it we had to make trade-offs; namely, the URLs displayed in browser address bars begin with google.com/amp, as a consequence of being shown in the Google AMP Viewer, rather than displaying the publisher’s domain. We heard both user and publisher feedback about this, and last year we identified a web platform innovation that shows the content’s original URL while still retaining AMP’s instant loading.

Introducing signed exchanges

A signed exchange is a file format, defined in the web packaging specification, that allows the browser to trust a document as if it belongs to your origin. This allows you to use first-party cookies and storage to customize content and simplify analytics integration. Your page appears under your URL instead of the google.com/amp URL.

Google Search links to signed exchanges when the publisher, browser, and Search experience context all support it. As a publisher, you will need to publish the signed exchange version of the content in addition to the non-signed exchange version. Learn more about how Google Search supports signed exchanges.
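Publishing both versions means the server has to pick one per request. The sketch below shows one way that content negotiation could look, assuming the server serves the signed exchange only when the request’s `Accept` header explicitly lists `application/signed-exchange` with `v=b3` and a non-zero q-value. The file names and helpers are hypothetical; the amp.dev guide documents the exact conditions and response headers (for example, a `Vary` header so caches keep the variants apart) that a real deployment needs.

```python
SXG_MEDIA_TYPE = "application/signed-exchange"


def parse_accept(header: str) -> list[tuple[str, dict[str, str]]]:
    """Split an Accept header into (media type, parameters) pairs."""
    entries = []
    for part in header.split(","):
        pieces = [piece.strip() for piece in part.split(";")]
        media_type = pieces[0].lower()
        if not media_type:
            continue
        params = {}
        for piece in pieces[1:]:
            if "=" in piece:
                key, value = piece.split("=", 1)
                params[key.strip().lower()] = value.strip()
        entries.append((media_type, params))
    return entries


def wants_signed_exchange(accept: str) -> bool:
    """True if the client explicitly accepts signed exchange v=b3 with q > 0.

    A bare */* wildcard deliberately does not count as accepting a signed exchange.
    """
    for media_type, params in parse_accept(accept):
        if media_type == SXG_MEDIA_TYPE and params.get("v") == "b3":
            try:
                quality = float(params.get("q", "1"))
            except ValueError:
                quality = 0.0
            if quality > 0:
                return True
    return False


def choose_variant(accept: str) -> tuple[str, str]:
    """Return (hypothetical file to serve, Content-Type) for a request."""
    if wants_signed_exchange(accept):
        return "article.sxg", "application/signed-exchange;v=b3"
    return "article.html", "text/html; charset=utf-8"


print(choose_variant("text/html,application/signed-exchange;v=b3;q=0.9"))
print(choose_variant("text/html,application/xhtml+xml,*/*;q=0.8"))
```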

Getting started with signed exchanges

Many publishers have already begun to publish signed exchanges since the developer preview opened up last fall. To implement signed exchanges in your own serving infrastructure, follow the guide “Serve AMP using Signed Exchanges” available at amp.dev.

If you use a CDN provider, ask them if they can provide AMP signed exchanges. Cloudflare has recently announced that it is offering signed exchanges to all of its customers free of charge.

Check out our resources like the webmaster community or get in touch with members of the AMP Project with any questions. You can also provide feedback on the signed exchange specification.

Posted by Devin Mullins and Greg Rogers

Search Console reporting for your site’s Discover performance data

Discover is a popular way for users to stay up-to-date on all their favorite topics, even when they’re not searching. To provide publishers and sites visibility into their Discover traffic, we’re adding a new report in Google Search Console to share relevant statistics and help answer questions such as:

- How often is my site shown in users’ Discover feeds? How much traffic does it get?

- Which pieces of content perform well in Discover?

- How does my content perform differently in Discover compared to traditional search results?

A quick reminder: What is Discover?

Discover is a feature within Google Search that helps users stay up-to-date on all their favorite topics, without needing a query. Users get to their Discover experience in the Google app, on the Google.com mobile homepage, and by swiping right from the home screen on Pixel phones. It has grown significantly since launching in 2017 and now helps more than 800M monthly active users get inspired and explore new information by surfacing articles, videos, and other content on topics they care most about. Users can follow topics directly or let Google know if they’d like to see more or less of a specific topic. In addition, Discover isn’t limited to what’s new. It surfaces the best of the web regardless of publication date, from recipes and human interest stories to fashion videos and more. Here is our guide on how you can optimize your site for Discover.

Discover in Search Console

The new Discover report is shown to websites that have accumulated meaningful visibility in Discover, with data going back to March 2019. We hope this report helps you think about how you might optimize your content strategy to help users discover engaging information, both new and evergreen.

For questions or comments on the report, feel free to drop by our webmaster help forums, or contact us through our other channels.

Posted by Michael Huzman, Ariel Kroszynski
