We updated our job posting guidelines

Last year, we launched job search on Google to connect more people with jobs. When you provide Job Posting structured data, it helps drive more relevant traffic to your page by connecting job seekers with your content. To ensure that job seekers are getting the best possible experience, it’s important to follow our Job Posting guidelines.

We’ve recently made some changes to our Job Posting guidelines to help improve the job seeker experience.

- Remove expired jobs

- Place structured data on the job’s detail page

- Make sure all job details are present in the job description

Remove expired jobs

When job seekers put in effort to find a job and apply, it can be very discouraging to discover that the job that they wanted is no longer available. Sometimes, job seekers only discover that the job posting is expired after deciding to apply for the job. Removing expired jobs from your site may drive more traffic because job seekers are more confident when jobs that they visit on your site are still open for application. For more information on how to remove a job posting, see Remove a job posting.
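For sites that build job pages from a database, the expiry check can live in the page handler. The following Python sketch assumes each job record has a hypothetical `valid_through` timestamp and `filled` flag. Serving `410 Gone` for closed jobs is one way to take a page down; see Remove a job posting for the options Google actually supports.

```python
from datetime import datetime, timezone
from typing import Optional


def job_page_status(valid_through: Optional[datetime], filled: bool,
                    now: Optional[datetime] = None) -> int:
    """Return the HTTP status a job detail page should serve (hypothetical helper).

    Assumption: a filled or expired job is taken down with 410 Gone,
    and a job that is still open is served normally with 200 OK.
    """
    now = now or datetime.now(timezone.utc)
    if filled:
        return 410  # position filled: the posting should disappear
    if valid_through is not None and valid_through <= now:
        return 410  # expiry time has passed
    return 200      # still open for applications
```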

Place structured data on the job’s detail page

Job seekers find it confusing when they land on a list of jobs instead of the specific job’s detail page. To fix this, put structured data on the most detailed leaf page possible. Don’t add structured data to pages intended to present a list of jobs (for example, search result pages); add it only to the most specific page describing a single job with its relevant details.
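One way to enforce this rule is to decide in the template layer whether a page describes exactly one job. In this minimal Python sketch, `jobposting_script` is a hypothetical helper that takes the list of jobs a page renders and emits JobPosting JSON-LD only when there is exactly one; list and search-result pages get no markup.

```python
import json
from typing import List, Optional


def jobposting_script(jobs_on_page: List[dict]) -> Optional[str]:
    """Return a JSON-LD <script> tag only for a single-job detail page."""
    if len(jobs_on_page) != 1:
        return None  # list pages, search results, empty pages: no JobPosting markup
    data = {"@context": "https://schema.org", "@type": "JobPosting", **jobs_on_page[0]}
    # "<\/" is a valid JSON escape and stops "</script>" in a field from closing the tag.
    body = json.dumps(data).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"
```

Escaping `</` keeps a job description that happens to contain `</script>` from ending the script element early.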

Make sure all job details are present in the job description

We’ve also noticed that some sites include information in the JobPosting structured data that is not present anywhere in the job posting. Job seekers are confused when the job details they see in Google Search don’t match the job description page. Make sure that the information in the JobPosting structured data always matches what’s on the job posting page. Here are some examples:

- If you add salary information to the structured data, then also add it to the job posting. Both salary figures should match.

- The location in the structured data should match the location in the job posting.
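A simple way to keep the two in sync is to render the visible job details and the JobPosting markup from the same record, so a salary or location change cannot update one without the other. This Python sketch uses schema.org's `baseSalary` and `jobLocation` properties; the `Job` class and `render_job` are hypothetical, and a real posting also needs the other required properties (such as `description`, `datePosted`, and `hiringOrganization`) listed in the Job Posting developer documentation.

```python
import html
import json
from dataclasses import dataclass


@dataclass
class Job:  # hypothetical record: the single source of truth for page and markup
    title: str
    city: str
    region: str
    country: str
    salary: float
    currency: str
    unit: str  # e.g. "YEAR" or "HOUR"


def render_job(job: Job) -> str:
    """Render visible details and JobPosting JSON-LD from the same record."""
    structured = {
        "@context": "https://schema.org",
        "@type": "JobPosting",
        "title": job.title,
        "jobLocation": {
            "@type": "Place",
            "address": {
                "@type": "PostalAddress",
                "addressLocality": job.city,
                "addressRegion": job.region,
                "addressCountry": job.country,
            },
        },
        "baseSalary": {
            "@type": "MonetaryAmount",
            "currency": job.currency,
            "value": {"@type": "QuantitativeValue", "value": job.salary, "unitText": job.unit},
        },
    }
    # The visible text reads the same fields, so salary and location always match.
    visible = (
        f"<h1>{html.escape(job.title)}</h1>"
        f"<p>Location: {html.escape(job.city)}, {html.escape(job.region)}, {html.escape(job.country)}</p>"
        f"<p>Salary: {job.salary:,.0f} {html.escape(job.currency)} per {job.unit.lower()}</p>"
    )
    markup = json.dumps(structured).replace("</", "<\\/")
    return visible + f'<script type="application/ld+json">{markup}</script>'
```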

Providing structured data content that is consistent with the content of the job posting pages not only helps job seekers find the exact job that they were looking for, but may also drive more relevant traffic to your job postings and therefore increase the chances of finding the right candidates for your jobs.

If your site violates the Job Posting guidelines (including the guidelines in this blog post), we may take manual action against your site and it may not be eligible for display in the jobs experience on Google Search. You can submit a reconsideration request to let us know that you have fixed the problem(s) identified in the manual action notification. If your request is approved, the manual action will be removed from your site or page.

For more information, visit our Job Posting developer documentation and our JobPosting FAQ.

Posted by Anouar Bendahou, Trust & Safety Search Team

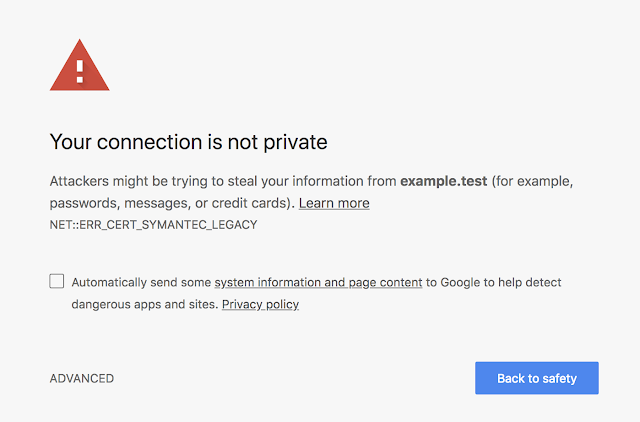

Distrust of the Symantec PKI: Immediate action needed by site operators

We previously announced plans to deprecate Chrome’s trust in the Symantec certificate authority (including Symantec-owned brands like Thawte, VeriSign, Equifax, GeoTrust, and RapidSSL). This post outlines how site operators can determine if they’re affected by this deprecation, and if so, what needs to be done and by when. Failure to replace these certificates will result in site breakage in upcoming versions of major browsers, including Chrome.

Chrome 66

If your site is using an SSL/TLS certificate from Symantec that was issued before June 1, 2016, it will stop functioning in Chrome 66, which could already be impacting your users.

If you are uncertain about whether your site is using such a certificate, you can preview these changes in Chrome Canary to see if your site is affected. If connecting to your site displays a certificate error or a warning in DevTools as shown below, you’ll need to replace your certificate. You can get a new certificate from any trusted CA, including DigiCert, which recently acquired Symantec’s CA business.

An example of a certificate error that Chrome 66 users might see if you are using a Legacy Symantec SSL/TLS certificate that was issued before June 1, 2016.

Chrome 66 has already been released to the Canary and Dev channels, so affected sites are already failing for users of these Chrome channels. If affected sites do not replace their certificates by March 15, 2018, Chrome Beta users will begin experiencing the failures as well. You are strongly encouraged to replace your certificate as soon as possible if your site is currently showing an error in Chrome Canary.

Chrome 70

Starting in Chrome 70, all remaining Symantec SSL/TLS certificates will stop working, resulting in a certificate error like the one shown above. To check if your certificate will be affected, visit your site in Chrome today and open up DevTools. You’ll see a message in the console telling you if you need to replace your certificate.

The DevTools message you will see if you need to replace your certificate before Chrome 70.

If you see this message in DevTools, you’ll want to replace your certificate as soon as possible. If the certificates are not replaced, users will begin seeing certificate errors on your site as early as July 20, 2018. The first Chrome 70 Beta release will be around September 13, 2018.
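Operators with many hosts may want a rough first pass before checking each one in Chrome Canary or DevTools. This Python sketch encodes the two cutoffs from this post. It assumes the issuer's organization name is a usable proxy for the Symantec-owned brands listed above, which is only a heuristic (Chrome decides based on the certificate chain), so treat any result as a prompt to check in the browser, not as a verdict. It does not cover the Chrome 65 case for certificates issued after December 1, 2017.

```python
import ssl
from datetime import date, datetime, timezone
from typing import Optional

# Brand names from this post. Chrome's real decision is based on the
# certificate chain, so matching issuer names is only a heuristic.
SYMANTEC_BRANDS = ("symantec", "thawte", "verisign", "equifax", "geotrust", "rapidssl")


def chrome_distrust_version(issuer_org: str, not_before: date) -> Optional[int]:
    """Chrome version that stops trusting the certificate, or None if it looks unaffected."""
    if not any(brand in issuer_org.lower() for brand in SYMANTEC_BRANDS):
        return None
    if not_before < date(2016, 6, 1):
        return 66  # issued before June 1, 2016
    return 70      # all remaining Legacy Symantec certificates


def distrust_from_peercert(cert: dict) -> Optional[int]:
    """Apply the check to the dict returned by ssl.SSLSocket.getpeercert()."""
    # "issuer" is a tuple of RDNs, each a tuple of (field, value) pairs.
    issuer = dict(pair for rdn in cert["issuer"] for pair in rdn)
    seconds = ssl.cert_time_to_seconds(cert["notBefore"])
    issued = datetime.fromtimestamp(seconds, tz=timezone.utc).date()
    return chrome_distrust_version(issuer.get("organizationName", ""), issued)
```

`distrust_from_peercert` expects the dictionary that Python's `ssl` module returns from `getpeercert()` on a connection whose certificate was verified (for an unverified connection that dictionary is empty).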

Expected Chrome Release Timeline

The table below shows the First Canary, First Beta and Stable Release for Chrome 66 and 70. The first impact from a given release will coincide with the First Canary, reaching a steadily widening audience as the release hits Beta and then ultimately Stable. Site operators are strongly encouraged to make the necessary changes to their sites before the First Canary release for Chrome 66 and 70, and no later than the corresponding Beta release dates.

| Release | First Canary | First Beta | Stable Release |
|---|---|---|---|
| Chrome 66 | January 20, 2018 | ~ March 15, 2018 | ~ April 17, 2018 |
| Chrome 70 | ~ July 20, 2018 | ~ September 13, 2018 | ~ October 16, 2018 |
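For a monitoring or ops script, the release timeline can be kept as data and queried by date. A minimal Python sketch; the helper names are hypothetical, the dates are copied from the release table in this post, most of them are approximate, and the Chromium Development Calendar takes precedence if schedules change.

```python
from datetime import date
from typing import List

# First release dates per channel, copied from the table ("~" dates are approximate).
TIMELINE = {
    66: {"Canary": date(2018, 1, 20), "Beta": date(2018, 3, 15), "Stable": date(2018, 4, 17)},
    70: {"Canary": date(2018, 7, 20), "Beta": date(2018, 9, 13), "Stable": date(2018, 10, 16)},
}


def channels_affected(version: int, today: date) -> List[str]:
    """Channels whose first release of `version` has shipped by `today`."""
    return [channel for channel, first in TIMELINE[version].items() if first <= today]


def days_until_beta(version: int, today: date) -> int:
    """Days left before `version` reaches Beta (negative once it has)."""
    return (TIMELINE[version]["Beta"] - today).days
```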

For information about the release timeline for a particular version of Chrome, you can also refer to the Chromium Development Calendar, which will be updated should release schedules change.

In order to address the needs of certain enterprise users, Chrome will also implement an Enterprise Policy that allows disabling the Legacy Symantec PKI distrust starting with Chrome 66. As of January 1, 2019, this policy will no longer be available and the Legacy Symantec PKI will be distrusted for all users.

Special Mention: Chrome 65

As noted in the previous announcement, SSL/TLS certificates from the Legacy Symantec PKI issued after December 1, 2017 are no longer trusted. This should not affect most site operators, as obtaining such certificates requires entering into a special agreement with DigiCert. Accessing a site serving such a certificate will fail and the request will be blocked as of Chrome 65. To avoid such errors, ensure that such certificates are only served to legacy devices and not to browsers such as Chrome.

Posted by Devon O’Brien, Ryan Sleevi, Emily Stark, Chrome security team

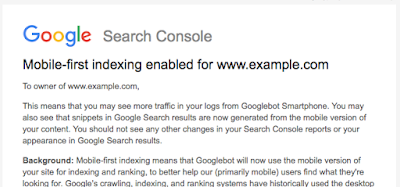

Rolling out mobile-first indexing

Today we’re announcing that after a year and a half of careful experimentation and testing, we’ve started migrating sites that follow the best practices for mobile-first indexing.

To recap, our crawling, indexing, and ranking systems have typically used the desktop version of a page’s content, which may cause issues for mobile searchers when that version is vastly different from the mobile version. Mobile-first indexing means that we’ll use the mobile version of the page for indexing and ranking, to better help our – primarily mobile – users find what they’re looking for.

We continue to have one single index that we use for serving search results. We do not have a “mobile-first index” that’s separate from our main index. Historically, the desktop version was indexed, but increasingly, we will be using the mobile versions of content.

We are notifying sites that are migrating to mobile-first indexing via Search Console. Site owners will see a significantly increased crawl rate from Smartphone Googlebot. Additionally, Google will show the mobile version of pages in Search results and Google cached pages.

To understand more about how we determine the mobile content from a site, see our developer documentation. It covers how sites using responsive web design or dynamic serving are generally set for mobile-first indexing. For sites that have AMP and non-AMP pages, Google will prefer to index the mobile version of the non-AMP page.
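Dynamic serving returns different HTML from the same URL depending on the user agent. The Python sketch below is a hypothetical handler, not a recommended implementation: its `"Mobile" in user_agent` test is a crude illustration, and the `Vary: User-Agent` header is the standard HTTP way of saying that the response differs by user agent. Because mobile-first indexing uses the mobile version, the mobile variant here carries the same primary content as the desktop one.

```python
from typing import Dict, Tuple

# Hypothetical variants served from one URL; the mobile one keeps the main content.
MOBILE_HTML = "<html><body><h1>Widgets</h1><p>Full product description.</p></body></html>"
DESKTOP_HTML = ("<html><body><h1>Widgets</h1><p>Full product description.</p>"
                "<aside>Related widgets</aside></body></html>")


def serve(user_agent: str) -> Tuple[str, Dict[str, str]]:
    """Dynamic serving: pick the HTML variant for this user agent."""
    # Crude heuristic for illustration; real sites use a maintained UA parser.
    is_mobile = "Mobile" in user_agent
    body = MOBILE_HTML if is_mobile else DESKTOP_HTML
    # Vary: User-Agent tells caches and crawlers that the body depends on the UA.
    headers = {"Content-Type": "text/html; charset=utf-8", "Vary": "User-Agent"}
    return body, headers
```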

Sites that are not in this initial wave don’t need to panic. Mobile-first indexing is about how we gather content, not about how content is ranked. Content gathered by mobile-first indexing has no ranking advantage over mobile content that’s not yet gathered this way or desktop content. Moreover, if you only have desktop content, you will continue to be represented in our index.

Having said that, we continue to encourage webmasters to make their content mobile-friendly. We do evaluate all content in our index, whether it is desktop or mobile, to determine how mobile-friendly it is. Since 2015, this measure has been able to help mobile-friendly content perform better for those who are searching on mobile. Relatedly, we recently announced that beginning in July 2018, content that is slow-loading may perform less well for both desktop and mobile searchers.

To recap:

- Mobile-first indexing is rolling out more broadly. Being indexed this way has no ranking advantage and operates independently from our mobile-friendly assessment.

- Having mobile-friendly content is still helpful for those looking at ways to perform better in mobile search results.

- Having fast-loading content is still helpful for those looking at ways to perform better for mobile and desktop users.

- As always, ranking uses many factors. We may show content to users that’s not mobile-friendly or that is slow loading if our many other signals determine it is the most relevant content to show.

We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by Fan Zhang, Software Engineer

Introducing the Webmaster Video Series, now in Hindi

A few months ago, we launched the SEO Snippets video series, where the Google team answered some of the webmaster and SEO questions that we regularly see on the Webmaster Central Help Forum. We are now launching a similar series in Hindi, called the SEO Snippets in Hindi.

From deciding what language to create content in (Hindi vs. Hinglish) to duplicate content, we’re answering the most frequently asked questions on the Hindi Webmaster forum and the India Webmaster community on Google+, in Hindi.

Check out the links shared in the videos to get more helpful webmaster information, drop by our help forum and subscribe to our YouTube channel for more tips and insights!

Posted by Syed Malik, Google Search Outreach

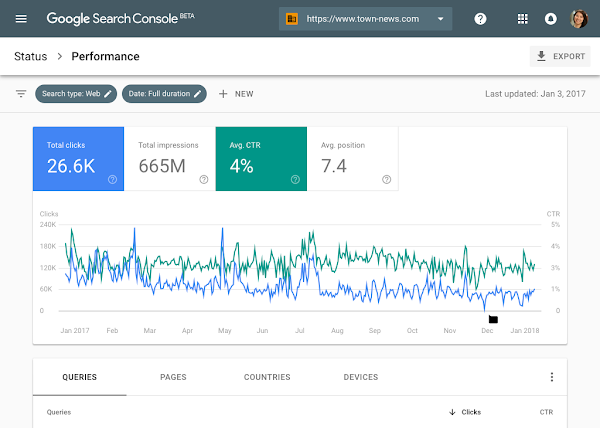

How listening to our users helped us build a better Search Console

We’ve used 3 main communication channels to hear what our users are saying:

- Help forum Top Contributors – Top Contributors in our help forums have been very helpful in bringing up topics seen in the forums. They communicate regularly with Google’s Search teams, and help the large community of Search Console users.

- Open feedback – We analyzed open feedback comments about classic Search Console and identified the top requests coming in. Open feedback can be sent via the ‘Submit feedback’ button in Search Console. This open feedback gave us more context around one of the top requests of recent years: more than 90 days of data in the Search Analytics (Performance) report. We learned of the need to compare to a similar period in the previous year, which supported our decision to include 16 months of data.

- Search Console panel – Last year we created a new communication channel by enlisting a group of four hundred randomly selected Search Console users, representing websites of all sizes. The panel members took part in almost every design iteration we had throughout the year, from explorations of new concepts through surveys, interviews and usability tests. The Search Console panel members have been providing valuable feedback which helped us test our assumptions and improve designs.

In one of these rounds, we tested the new suggested design for the Performance report. Specifically, we wanted to see whether it was clear how to use the ‘compare’ and ‘filter’ functionalities. To create an experience that felt as real as possible, we used a high-fidelity prototype connected to real data. The prototype allowed study participants to freely interact with the user interface before a single line of production code had been written.

In this study we learned that the ‘compare’ functionality was often overlooked. We consequently changed the design with ‘filter’ and ‘compare’ appearing in a unified dialogue box, triggered when the ‘Add new’ chip is clicked. We continue to test this design and others to optimize its usability and usefulness.

We incorporated user feedback not only in practical design details, but also in architectural decisions. For example, user feedback led us to make major changes in the product’s core information architecture, influencing the navigation and product structure of the new Search Console. The error and coverage reports were originally separate, which could lead to multiple views of the same error. As a result of user feedback, we unified error and coverage reporting into one holistic view.

As the launch date grew closer, we performed several larger-scale experiments. We A/B tested some of the new Search Console reports against the existing reports with 30,000 users. We tracked issue fix rates to verify that the new Search Console drives better results, and sent out follow-up surveys to learn about users’ experience. This most recent feedback confirmed that export functionality was not a nice-to-have but a requirement for many users, and helped us tune detailed help pages in the initial release.

We are happy to announce that the new Search Console is now available to all sites. Whether it is through Search Console’s feedback button or through the user panel, we truly value a collaborative design process, where all of our users can help us build the best product.

Try out the new Search Console.

We’re not finished yet! Which feature would you love to see in the next iteration of Search Console? Let us know below.

Posted by the Search Console UX team

Launching SEO Audit category in Lighthouse Chrome extension

Lighthouse is an open-source, automated auditing tool for improving the quality of web pages. It provides a well-lit path for improving the quality of sites by allowing developers to run audits for performance, accessibility, progressive web app compatibility and more. Basically, it “keeps you from crashing into the rocks”, hence the name Lighthouse.

The SEO audit category within Lighthouse enables developers and webmasters to run a basic SEO health-check for any web page that identifies potential areas for improvement. Lighthouse runs locally in your Chrome browser, enabling you to run the SEO audits on pages in a staging environment as well as on live pages, public pages and pages that require authentication.

Bringing SEO best practices to you

The current list of SEO audits is not exhaustive, nor does it make any SEO guarantees for Google web search or other search engines. The current list of audits was designed to validate and reflect the SEO basics that every site should get right, and it provides detailed guidance to developers and SEO practitioners of all skill levels. In the future, we hope to add more audits, and more in-depth ones, along with further guidance. Let us know if you have suggestions for specific audits you’d like to see!
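To give a sense of what a basic check involves, here is a Python sketch of four head-level checks in the spirit of these audits: a `<title>`, a meta description, a viewport meta tag, and a robots `noindex`. It is not Lighthouse's implementation or its audit list, and the names are hypothetical; Lighthouse runs against the page as loaded in Chrome, which a static HTML parse like this cannot reproduce.

```python
from html.parser import HTMLParser
from typing import Dict, List


class _HeadScanner(HTMLParser):
    """Collects the <title> text and named <meta> tags from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta: Dict[str, str] = {}  # lowercased meta name -> content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def basic_seo_issues(html_text: str) -> List[str]:
    """Return human-readable problems found by a few basic head checks."""
    scanner = _HeadScanner()
    scanner.feed(html_text)
    issues = []
    if not scanner.title.strip():
        issues.append("missing <title>")
    if not scanner.meta.get("description", "").strip():
        issues.append("missing meta description")
    if "viewport" not in scanner.meta:
        issues.append("missing viewport meta tag")
    if "noindex" in scanner.meta.get("robots", "").lower():
        issues.append("page blocked from indexing (robots noindex)")
    return issues
```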

How to use it

Currently there are two ways to run these audits.

Using the Lighthouse Chrome extension:

- Install the Lighthouse Chrome Extension

- Click on the Lighthouse icon in the extension bar

- Select the Options menu, tick “SEO” and click OK, then Generate report

Using Chrome Developer Tools:

- Open Chrome Developer Tools

- Go to Audits

- Click Perform an audit

- Tick the “SEO” checkbox and click Run Audit.

The current Lighthouse Chrome extension contains an initial set of SEO audits which we’re planning to extend and enhance in the future. Once we’re confident of its functionality, we’ll make the audits available by default in the stable release of Chrome Developer Tools.

We hope you find this functionality useful for your current and future projects. If these basic SEO tips are totally new to you and you find yourself interested in this area, make sure to read our complete SEO Starter Guide! Leave your feedback and suggestions in the comments section below, on GitHub or on our Webmaster forum.

Happy auditing!

Posted by Valentyn, Webmaster Outreach Strategist.

Using page speed in mobile search ranking

People want to be able to find answers to their questions as fast as possible — studies show that people really care about the speed of a page. Although speed has been used in ranking for some time, that signal was focused on desktop searches. Today we’re announcing that starting in July 2018, page speed will be a ranking factor for mobile searches.

The “Speed Update,” as we’re calling it, will only affect pages that deliver the slowest experience to users and will only affect a small percentage of queries. It applies the same standard to all pages, regardless of the technology used to build the page. The intent of the search query is still a very strong signal, so a slow page may still rank highly if it has great, relevant content.

We encourage developers to think broadly about how performance affects a user’s experience of their page and to consider a variety of user experience metrics. Although there is no tool that directly indicates whether a page is affected by this new ranking factor, here are some resources that can be used to evaluate a page’s performance.

- Chrome User Experience Report, a public dataset of key user experience metrics for popular destinations on the web, as experienced by Chrome users under real-world conditions

- Lighthouse, an automated tool and a part of Chrome Developer Tools for auditing the quality (performance, accessibility, and more) of web pages

- PageSpeed Insights, a tool that indicates how well a page performs on the Chrome UX Report and suggests performance optimizations

As always, if you have any questions or feedback, please visit our webmaster forums.

Posted by Zhiheng Wang and Doantam Phan

Real-world data in PageSpeed Insights

PageSpeed Insights provides information about how well a page adheres to a set of best practices. In the past, these recommendations were presented without the context of how fast the page performed in the real world, which made it hard to understand when it was appropriate to apply these optimizations. Today, we’re announcing that PageSpeed Insights will use data from the Chrome User Experience Report to make better recommendations for developers, and that the optimization score has been tuned to align more closely with real-world data.

The PSI report now has several different elements:

- The Speed score categorizes a page as being Fast, Average, or Slow. This is determined by looking at the median value of two metrics: First Contentful Paint (FCP) and DOM Content Loaded (DCL). If both metrics are in the top one-third of their category, the page is considered fast.

- The Optimization score categorizes a page as being Good, Medium, or Low by estimating its performance headroom. The calculation assumes that a developer wants to keep the same appearance and functionality of the page.

- The Page Load Distributions section presents how this page’s FCP and DCL events are distributed in the data set. These events are categorized as Fast (top third), Average (middle third), and Slow (bottom third) by comparing to all events in the Chrome User Experience Report.

- The Page Stats section describes the round trips required to load the page’s render-blocking resources and the total bytes used by the page, and compares them to the median number of round trips and bytes used in the dataset. It can indicate whether the page might be faster if the developer modified the appearance and functionality of the page.

- Optimization Suggestions is a list of best practices that could be applied to this page. If the page is fast, these suggestions are hidden by default, as the page is already in the top third of all pages in the data set.
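The Speed score rule described above can be written as a small function. This Python 3.8+ sketch assumes you have FCP and DCL samples (in milliseconds) for the page and for a reference dataset, and derives the one-third cut points with `statistics.quantiles`. The post only spells out when a page is Fast; treating a page as Slow when either median falls in the slowest third is an assumption, and PSI itself takes its distributions from the Chrome User Experience Report rather than computing them like this.

```python
from statistics import median, quantiles
from typing import List, Sequence


def tertile_bounds(all_values: Sequence[float]) -> List[float]:
    """Two cut points splitting the dataset into thirds (lower times are faster)."""
    return quantiles(all_values, n=3)


def bucket(value: float, bounds: Sequence[float]) -> str:
    """Place one value in the Fast, Average, or Slow third of the dataset."""
    if value <= bounds[0]:
        return "Fast"
    if value <= bounds[1]:
        return "Average"
    return "Slow"


def speed_score(page_fcp: Sequence[float], page_dcl: Sequence[float],
                all_fcp: Sequence[float], all_dcl: Sequence[float]) -> str:
    """Categorize a page from the medians of its FCP and DCL samples."""
    fcp = bucket(median(page_fcp), tertile_bounds(all_fcp))
    dcl = bucket(median(page_dcl), tertile_bounds(all_dcl))
    if fcp == dcl == "Fast":
        return "Fast"  # both metrics in the top third, as the post describes
    if "Slow" in (fcp, dcl):
        return "Slow"  # assumption: either metric in the slowest third
    return "Average"
```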

For more details on these changes, see About PageSpeed Insights. As always, if you have any questions or feedback, please visit our forums and please remember to include the URL that is being evaluated.

Posted by Mushan Yang (杨沐杉) and Xiangyu Luo (罗翔宇), Software Engineers

Introducing the new Search Console

A few months ago we released a beta version of a new Search Console experience to a limited number of users. We are now starting to release this beta version to all users of Search Console, so that everyone can explore this simplified process of optimizing a website’s presence on Google Search. The functionality will include Search performance, Index Coverage, AMP status, and Job posting reports. We will send a message once your site is ready in the new Search Console.

We started by adding some of the most popular functionality in the new Search Console (which can now be used in your day-to-day flow of addressing these topics). We are not done yet, so over the course of the year the new Search Console (beta) will continue to add functionality from the classic Search Console. Until the new Search Console is complete, both versions will live side-by-side and will be easily interconnected via links in the navigation bar, so you can use both.

The new Search Console was rebuilt from the ground up by surfacing the most actionable insights and creating an interaction model that guides you through the process of fixing any pending issues. We’ve also added the ability to share reports within your own organization to simplify internal collaboration.

Search Performance: with 16 months of data!

If you’ve been a fan of Search Analytics, you’ll love the new Search Performance report. Over the years, users have been consistent in asking us for more data in Search Analytics. With the new report, you’ll have 16 months of data, to make analyzing longer-term trends easier and enable year-over-year comparisons. In the near future, this data will also be available via the Search Console API.
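Year-over-year comparisons need a matching earlier window that still falls inside the 16 months of data. A minimal Python sketch, assuming you pick the comparison windows yourself (for example before querying the data through the Search Console API once it is available there); the helper names are hypothetical, and shifting by 52 weeks rather than a calendar year is a design choice, not something the report requires.

```python
import calendar
from datetime import date, timedelta
from typing import Tuple

DateRange = Tuple[date, date]


def months_back(d: date, months: int) -> date:
    """Same day `months` calendar months earlier, clamped to the month's length."""
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def year_over_year(end: date, days: int) -> Tuple[DateRange, DateRange]:
    """Current window ending at `end` and the matching window 52 weeks earlier.

    364 days (not 365) keeps weekdays aligned, which matters for traffic
    with a weekly pattern.
    """
    start = end - timedelta(days=days - 1)
    shift = timedelta(days=364)
    return (start, end), (start - shift, end - shift)


def fits_in_retention(window: DateRange, today: date, months: int = 16) -> bool:
    """True if the window starts within the last `months` months."""
    return window[0] >= months_back(today, months)
```

Aligning weekdays means a 28-day window always compares four Mondays with four Mondays, so weekend dips do not distort the comparison.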

Index Coverage: a comprehensive view on Google’s indexing

The updated Index Coverage report gives you insight into the indexing of URLs from your website. It shows correctly indexed URLs, warnings about potential issues, and reasons why Google isn’t indexing some URLs. The report is built on our new Issue tracking functionality that alerts you when new issues are detected and helps you monitor their fix.

So how does that work?

- When you drill down into a specific issue, you will see a sample of URLs from your site. Clicking on error URLs brings up the page details with links to diagnostic tools that help you understand the source of the problem.

- Fixing Search issues often involves multiple teams within a company. Giving the right people access to information about the current status, or about issues that have come up, is critical to improving an implementation quickly. Now, within most reports in the new Search Console, you can do that with the share button at the top of the report, which creates a shareable link to the report. Once things are resolved, you can disable sharing just as easily.

- The new Search Console can also help you confirm that you’ve resolved an issue, and help us to update our index accordingly. To do this, select a flagged issue, and click validate fix. Google will then crawl and reprocess the affected URLs with a higher priority, helping your site to get back on track faster than ever.

- The Index Coverage report works best for sites that submit sitemap files. Sitemap files are a great way to let search engines know about new and updated URLs. Once you’ve submitted a sitemap file, you can now use the sitemap filter over the Index Coverage data, so that you’re able to focus on an exact list of URLs.
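Since the sitemap filter narrows the report to exactly the URLs you list, it helps to generate the sitemap from the same source as your pages. A minimal Python sketch of the sitemaps.org format using only the standard library; the URLs are placeholders, and a site with more than 50,000 URLs would need to split them across several sitemap files.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[Tuple[str, date]]) -> str:
    """Return a sitemap XML document listing each URL with its last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" as "&amp;"
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2018-01-09
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```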

Search Enhancements: improve your AMP and Job Postings pages

The new Search Console is also aimed at helping you implement Search Enhancements such as AMP and Job Postings (more to come). These reports provide details about the specific errors and warnings that Google identified for these topics. In addition to the functionality described in the Index Coverage report, we augmented the reports with two extra features:

- The first feature is aimed at providing faster feedback in the process of fixing an issue. This is achieved by running several instantaneous tests once you click the validate fix button. If your pages don’t pass these tests, we provide you with an immediate notification; otherwise, we go ahead and reprocess the rest of the affected pages.

- The second new feature is aimed at providing positive feedback during the fix process by expanding the validation log with a list of URLs that were identified as fixed (in addition to URLs that failed the validation or might still be pending).

Similar to the AMP report, the new Search Console provides a Job postings report. If you have job listings on your website, you may be eligible to have them shown directly through Google for Jobs (currently only available in certain locations).

Feedback welcome

We couldn’t have gotten so far without the ongoing feedback from our diligent trusted testers (we plan to share more on how their feedback helped us dramatically improve Search Console). However, your continued feedback is critical for us: if there’s something you find confusing or wrong, or if there’s something you really like, please let us know through the feedback feature in the sidebar. Also note that the mobile experience in the new Search Console is still a work in progress.

We want to end this post by sharing an encouraging response we got from a user who has been testing the new Search Console recently:

“The UX of new Search Console is clean and well laid out, everything is where we expect it to be. I can even kick-off validation of my fixes and get email notifications with the result. It’s been a massive help in fixing up some pesky AMP errors and warnings that were affecting pages on our site. On top of all this, the Search Analytics report now extends to 16 months of data which is a total game changer for us” – Noah Szubski, Chief Product Officer, DailyMail.com

Are there any other tools that would make your life as a webmaster easier? Let us know in the comments here, and feel free to jump into our webmaster help forums to discuss your ideas with others!

Posted by John Mueller, Ofir Roval and Hillel Maoz

Introducing the new Webmaster Video Series

Google has a broad range of resources to help you better understand your website and improve its performance. This Webmaster Central Blog, the Help Center, the Webmaster forum, and the recently released Search Engine Optimization (SEO) Starter Guide a…

Introducing Rich Results & the Rich Results Testing Tool

Over the years, the number of ways you can choose to highlight your website’s content in Search has grown dramatically. In the past, we’ve called these rich snippets, rich cards, or enriched results. Going forward, to simplify the terminology, our documentation will use the name “rich results” for all of them. Additionally, we’re introducing a new rich results testing tool to make diagnosing your pages’ structured data easier.

The new testing tool focuses on the structured data types that are eligible to be shown as rich results. It lets you test all data sources on your pages, such as JSON-LD (which we recommend), Microdata, or RDFa. The new tool more accurately reflects how the page will appear in Search and handles structured data in dynamically loaded content better. It currently supports tests for Recipes, Jobs, Movies, and Courses; this is just a first step, and we plan to expand coverage over time.
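
Since the tool reads JSON-LD (the format recommended above), here is a minimal sketch of how a page might emit it, using Python’s standard `json` module. The helper name `recipe_jsonld` and the sample values are hypothetical; the property names come from schema.org’s `Recipe` type, but check the current Recipe guide for which properties are required or recommended before relying on this.

```python
import json


def recipe_jsonld(name, image_url, ingredients, instructions):
    """Build a schema.org Recipe object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image_url],
        "recipeIngredient": list(ingredients),
        # Each instruction becomes a HowToStep with its own text.
        "recipeInstructions": [
            {"@type": "HowToStep", "text": step} for step in instructions
        ],
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" as "<\/" (a valid JSON escape) so a value containing
    # "</script>" cannot close the script element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical example values.
print(recipe_jsonld(
    "Party Coffee Cake",
    "https://example.com/photos/coffee-cake.jpg",
    ["2 cups flour", "1 cup sugar"],
    ["Preheat the oven to 175 °C.", "Mix and bake for 45 minutes."],
))
```

Escaping `</` keeps the markup safe to embed in HTML while still parsing to the same JSON values.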

Testing a page is easy: open the testing tool, enter a URL, and review the output. If there are issues, the tool highlights the invalid code in the page source. If you’re working with others on this page, the share icon at the bottom right lets you share the results quickly. You can also use the preview button to view all the different rich results the page is eligible for. Finally, once you’re happy with the result, use Submit To Google to fetch and index the page for Search.
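
Alongside the testing tool, a quick local check can catch JSON syntax errors in JSON-LD blocks before you publish. The sketch below uses only the standard library (`html.parser`, `json`) and assumes the markup is present in the served HTML rather than injected by JavaScript; the class and function names are illustrative. It checks syntax only, not whether the data qualifies for any rich result; the testing tool remains the authority on that.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        # Script content may arrive in several chunks, so buffer it.
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def extract_jsonld(html):
    """Return (parsed_objects, errors) for the JSON-LD blocks in an HTML string."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    parsed, errors = [], []
    for i, raw in enumerate(parser.blocks):
        try:
            parsed.append(json.loads(raw))
        except json.JSONDecodeError as exc:
            errors.append(f"block {i}: {exc.msg} at line {exc.lineno}, column {exc.colno}")
    return parsed, errors
```

Reporting the block index and position mirrors what the testing tool does when it highlights invalid code, so you can find the broken block in the page source.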

Want to get started with rich results (formerly rich snippets)? Check out our guides for marking up your content. Feel free to drop by our Webmaster Help forums if you have any questions or get stuck; the awesome experts there can often help resolve issues and give you tips in no time!

Posted by Shachar Pooyae, Software Engineer

#NoHacked 3.0: Fixing common hack cases

So far on #NoHacked, we have shared some tips on detection and prevention. Now that you are able to detect hack attacks, we would like to introduce some common hacking techniques and guides on how to fix them!

- Fixing the Cloaked Keywords and Links Hack

The cloaked keywords and links hack automatically creates many pages with nonsensical sentences, links, and images. These pages sometimes contain basic template elements from the original site, so at first glance, the pages might look like normal parts of the target site until you read the content. In this type of attack, hackers usually use cloaking techniques to hide the malicious content and make the injected page appear as part of the original site or as a 404 error page.

- Fixing the Gibberish Hack

The gibberish hack automatically creates many pages with nonsensical sentences filled with keywords on the target site. Hackers do this so the hacked pages show up in Google Search. Then, when people try to visit these pages, they’re redirected to an unrelated page, such as a porn site.

- Fixing the Japanese Keywords Hack

The Japanese keywords hack typically creates new pages with Japanese text on the target site in randomly generated directory names. These pages are monetized using affiliate links to stores selling fake brand-name merchandise and are then shown in Google Search. Sometimes the hackers’ accounts are added to Search Console as site owners.
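
The fix guides go into detail; as a rough triage aid, the sketch below covers two of the symptoms described above. `flag_japanese_pages` flags pages whose text is mostly Japanese or CJK characters on a site that shouldn’t have any, and `links_only_for_googlebot` compares the links served to a Googlebot user agent with those served to a browser user agent. Both are hypothetical helpers, the 0.2 threshold is an arbitrary assumption, and `fetch` is a callable you supply (for example, a wrapper around `urllib.request` that sets the User-Agent header). Only user-agent-based cloaking shows up this way; hacks that cloak by IP address or referrer won’t.

```python
import re
from typing import Callable, Dict, List

# Hiragana, Katakana, and CJK Unified Ideographs. Ideographs are shared with
# Chinese, so this is a rough signal, not a language detector.
JAPANESE_CHARS = re.compile(r"[\u3040-\u309f\u30a0-\u30ff\u4e00-\u9fff]")

# Naive href extraction; good enough for comparing two versions of a page.
HREF = re.compile(r"""href\s*=\s*["']([^"']+)["']""", re.IGNORECASE)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def looks_japanese(text: str, threshold: float = 0.2) -> bool:
    """True if at least `threshold` of the non-whitespace characters are Japanese/CJK."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return False
    hits = sum(1 for c in chars if JAPANESE_CHARS.match(c))
    return hits / len(chars) >= threshold


def flag_japanese_pages(pages: Dict[str, str]) -> List[str]:
    """Return the URLs (sorted) whose text looks Japanese."""
    return sorted(url for url, text in pages.items() if looks_japanese(text))


def links_only_for_googlebot(url: str, fetch: Callable[[str, str], str]) -> List[str]:
    """Links present when fetched as Googlebot but absent for a browser user agent."""
    bot_links = set(HREF.findall(fetch(url, GOOGLEBOT_UA)))
    user_links = set(HREF.findall(fetch(url, BROWSER_UA)))
    return sorted(bot_links - user_links)
```

Comparing link sets rather than whole responses avoids false alarms from harmless per-request differences such as timestamps or session tokens.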

Lastly, after you clean your site and fix the problem, make sure to file a reconsideration request to have our teams review your site.

If you have any questions, post them on our Webmaster Help Forums!

Getting your site ready for mobile-first indexing

To recap, currently our crawling, indexing, and ranking systems typically look at the desktop version of a page’s content, which may cause issues for mobile searchers when that version is vastly different from the mobile version. Mobile-first indexing means that we’ll use the mobile version of the content for indexing and ranking, to better help our – primarily mobile – users find what they’re looking for. Webmasters will see significantly increased crawling by Smartphone Googlebot, and the snippets in the results, as well as the content on the Google cache pages, will be from the mobile version of the pages.
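
One way to watch for the crawl shift described above is to count Googlebot requests in your access logs by crawler type. This sketch assumes the common “combined” log format (user agent as the last quoted field) and relies on Smartphone Googlebot’s user agent containing “Mobile” while desktop Googlebot’s does not. The function names are hypothetical, and user-agent strings can be spoofed, so verify important findings with a reverse DNS lookup as described in Google’s documentation on verifying Googlebot.

```python
import re
from collections import Counter
from typing import Iterable, Optional

# The user agent is the last double-quoted field in the "combined" log format.
UA_FIELD = re.compile(r'"([^"]*)"\s*$')


def classify_googlebot(user_agent: str) -> Optional[str]:
    """Label a user agent as Googlebot 'smartphone' or 'desktop', else None."""
    # "Googlebot/" matches the main crawler but not variants like "Googlebot-Image/1.0".
    if "Googlebot/" not in user_agent:
        return None
    return "smartphone" if "Mobile" in user_agent else "desktop"


def googlebot_crawl_mix(log_lines: Iterable[str]) -> Counter:
    """Count Googlebot requests per crawler type across access-log lines."""
    counts = Counter()
    for line in log_lines:
        match = UA_FIELD.search(line)
        if match:
            label = classify_googlebot(match.group(1))
            if label:
                counts[label] += 1
    return counts
```

Tracking this ratio over time shows when the smartphone crawler starts to dominate for your site.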

As we said, sites that use responsive web design or correctly implemented dynamic serving (where the mobile version includes all of the desktop content and markup) generally don’t have to do anything. Here are some extra tips that help ensure a site is ready for mobile-first indexing:

- Make sure the mobile version of the site also has the important, high-quality content. This includes text, images (with alt-attributes), and videos – in the usual crawlable and indexable formats.

- Structured data is important for indexing and for search features that users love: it should be on both the mobile and desktop versions of the site. On the mobile pages, make sure URLs within the structured data point to the mobile versions.

- Metadata should be present on both versions of the site. It provides hints about the content on a page for indexing and serving. For example, make sure that titles and meta descriptions are equivalent across both versions of all pages on the site.

- No changes are necessary for interlinking with separate mobile URLs (m.-dot sites). For sites using separate mobile URLs, keep the existing link rel=canonical and link rel=alternate elements between these versions.

- Check hreflang links on separate mobile URLs. When using link rel=hreflang elements for internationalization, link between mobile and desktop URLs separately. Your mobile URLs’ hreflang should point to the other language/region versions on other mobile URLs, and similarly link desktop with other desktop URLs using hreflang link elements there.

- Ensure the servers hosting the site have enough capacity to handle a potentially increased crawl rate. This doesn’t affect sites that use responsive web design or dynamic serving, only sites where the mobile version is on a separate host, such as m.example.com.
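
For sites with separate mobile URLs, the annotations mentioned above can be generated rather than maintained by hand. This minimal sketch assumes a hypothetical two-language site where each language has a desktop host and an m.-dot host sharing the same paths. Desktop pages get a `rel="alternate"` link with a media query pointing to the mobile URL, mobile pages get a `rel="canonical"` link back to the desktop URL, and hreflang links stay within the same family (mobile to mobile, desktop to desktop). The `max-width: 640px` media query mirrors the example in Google’s separate-URLs documentation; use the breakpoint your site actually serves.

```python
from html import escape

# Hypothetical site layout: hreflang code -> (desktop base URL, mobile base URL).
SITES = {
    "en": ("https://www.example.com", "https://m.example.com"),
    "de": ("https://www.example.de", "https://m.example.de"),
}
MOBILE_MEDIA = "only screen and (max-width: 640px)"


def link(rel, href, **attrs):
    """Render a <link> element with HTML-escaped attribute values."""
    extra = "".join(f' {name}="{escape(value)}"' for name, value in attrs.items())
    return f'<link rel="{rel}"{extra} href="{escape(href)}">'


def head_links(path, lang, mobile, sites=SITES):
    """Return the <link> tags for `path` in language `lang`, mobile or desktop."""
    desktop_base, mobile_base = sites[lang]
    tags = []
    if mobile:
        # Mobile page: canonical points back to the desktop equivalent.
        tags.append(link("canonical", desktop_base + path))
    else:
        # Desktop page: alternate points to the mobile equivalent.
        tags.append(link("alternate", mobile_base + path, media=MOBILE_MEDIA))
    # hreflang stays within one family and includes a self-reference.
    for code, (d_base, m_base) in sites.items():
        tags.append(link("alternate", (m_base if mobile else d_base) + path, hreflang=code))
    return tags


print("\n".join(head_links("/shoes", "en", mobile=False)))
```

Generating both families from one table keeps the desktop and mobile annotations from drifting apart as pages are added.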

We will evaluate each site’s readiness for mobile-first indexing independently, based on the above criteria, and transition sites once they are ready. This process has already started for a handful of sites and is being closely monitored by the search team.
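
Google evaluates readiness on its side, but you can run a rough self-check of some of the criteria above. This sketch, using Python’s `html.parser`, compares the title, meta description, and number of JSON-LD blocks between the desktop and mobile HTML of one page. An exact comparison is stricter than “equivalent,” so treat differences as prompts to look closer, not as failures; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadSummary(HTMLParser):
    """Record the title, meta description, and JSON-LD block count of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.jsonld_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.description = (a.get("content") or "").strip()
        elif tag == "script" and (a.get("type") or "").lower() == "application/ld+json":
            self.jsonld_count += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def summarize(html):
    parser = HeadSummary()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.description,
        "jsonld_blocks": parser.jsonld_count,
    }


def parity_report(desktop_html, mobile_html):
    """List the fields that differ between the desktop and mobile versions."""
    d, m = summarize(desktop_html), summarize(mobile_html)
    return [f"{key}: desktop={d[key]!r} mobile={m[key]!r}" for key in d if d[key] != m[key]]
```

An empty report means these three signals match; it says nothing about body content, images, or the other criteria.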

We continue to be cautious with rolling out mobile-first indexing. We believe taking this slowly will help webmasters get their sites ready for mobile users, and because of that, we currently don’t have a timeline for when it’s going to be completed. If you have any questions, drop by our Webmaster forums or our public events.

Posted by Gary