Mobile-First indexing, structured data, images, and your site

It’s been two years since we started working on “mobile-first indexing” – crawling the web with smartphone Googlebot, similar to how most users access it. We’ve seen websites across the world embrace the mobile web, making fantastic websites that work on all kinds of devices. There’s still a lot to do, but today, we’re happy to announce that we now use mobile-first indexing for over half of the pages shown in search results globally.

Checking for mobile-first indexing

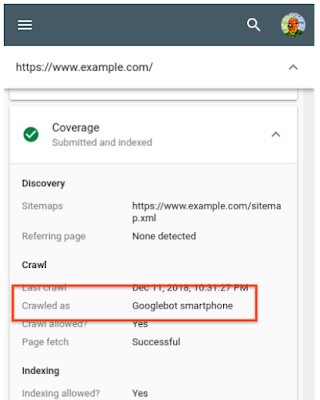

In general, we move sites to mobile-first indexing when our tests assure us that they’re ready. When we move sites over, we notify the site owner through a message in Search Console. It’s possible to confirm this by checking the server logs, where a majority of the requests should be from Googlebot Smartphone. Even easier, the URL inspection tool allows a site owner to check how a URL from the site (it’s usually enough to check the homepage) was last crawled and indexed.
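As a rough way to run the server-log check described above, the following Python sketch counts Googlebot requests by crawler type. It assumes access logs in the common "combined" format, where the user agent is the last quoted field. It classifies requests by the user-agent string alone and treats any Googlebot user agent containing "Mobile" as the smartphone crawler. The function names are illustrative. User agents can be spoofed, so a real audit should also verify requests through a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter
from typing import Iterable, Optional

GOOGLEBOT_TOKEN = re.compile(r"Googlebot/\d")

def classify_googlebot(user_agent: str) -> Optional[str]:
    """Return 'smartphone', 'desktop', or None for a non-Googlebot user agent."""
    if not GOOGLEBOT_TOKEN.search(user_agent):
        return None
    # Heuristic: the smartphone crawler's user agent includes a "Mobile" token.
    return "smartphone" if "Mobile" in user_agent else "desktop"

def count_googlebot_requests(log_lines: Iterable[str]) -> Counter:
    """Tally Googlebot requests by crawler type in combined-format access logs."""
    counts = Counter()
    for line in log_lines:
        # In the combined log format, the user agent is the last quoted field.
        quoted = re.findall(r'"([^"]*)"', line)
        kind = classify_googlebot(quoted[-1]) if quoted else None
        if kind:
            counts[kind] += 1
    return counts
```

If `counts["smartphone"]` clearly outweighs `counts["desktop"]` over a few days of logs, that is consistent with the site having been moved to mobile-first indexing.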

If your site uses responsive design techniques, you should be all set! For sites that aren’t using responsive web design, we’ve seen two kinds of issues come up more frequently in our evaluations:

Missing structured data on mobile pages

Structured data helps us better understand the content on your pages, and allows us to highlight your pages in fancy ways in the search results. If you use structured data on the desktop versions of your pages, you should have the same structured data on the mobile versions of the pages. This is important because with mobile-first indexing, we’ll only use the mobile version of your page for indexing, so any structured data that appears only on the desktop version will be missed.

Testing your pages in this regard can be tricky. We suggest testing for structured data in general, and then comparing that to the mobile version of the page. For the mobile version, check the source code when you simulate a mobile device, or use the HTML generated with the mobile-friendly testing tool. Note that a page does not need to be mobile-friendly in order to be considered for mobile-first indexing.
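The comparison step above can be partly automated. The following Python sketch assumes your structured data is embedded as JSON-LD in `<script type="application/ld+json">` elements (microdata, RDFa, and `@graph` containers are not handled). It lists the top-level schema.org types that appear in the desktop HTML but not in the mobile HTML. The helper names are hypothetical, and the result is only a first pass; the structured data testing tools remain the authoritative check.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> element."""
    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buf))
            self._in_jsonld = False

def structured_data_types(html: str) -> set:
    """Return the set of top-level @type values found in JSON-LD blocks."""
    parser = JsonLdExtractor()
    parser.feed(html)
    types = set()
    for block in parser.blocks:
        data = json.loads(block)
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            value = item.get("@type")
            if isinstance(value, list):
                types.update(value)
            elif value:
                types.add(value)
    return types

def missing_on_mobile(desktop_html: str, mobile_html: str) -> set:
    """Types present in the desktop HTML but absent from the mobile HTML."""
    return structured_data_types(desktop_html) - structured_data_types(mobile_html)
```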

Missing alt-text for images on mobile pages

Alt-attributes on images (“alt-text”) are a great way to describe images to users with screen-readers (which are used on mobile too!), and to search engine crawlers. Without alt-text, it’s a lot harder for Google Images to understand the context of the images that you use on your pages.

Check the source code of the mobile version of representative pages of your website. As above, you can see the source of the mobile version either by using the browser to simulate a mobile device, or by using the Mobile-Friendly test to check the Googlebot-rendered version. Search that source for “img” tags, and double-check that your page provides appropriate alt-attributes for any images that you want to be findable in Google Images.

For example, that might look like this:

With alt-text (good!): `<img src="cute-puppies.png" alt="A photo of cute puppies on a blanket">`

Without alt-text: `<img src="sad-puppies.png">`
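Searching the mobile source by hand gets tedious across many pages. The Python sketch below lists the `src` of every `<img>` whose alt attribute is missing or blank, using only the standard-library HTML parser. The function name is illustrative. An empty `alt=""` is a legitimate choice for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Record the src of every <img> whose alt attribute is absent or blank."""
    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags are routed here too by HTMLParser.
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))

def images_without_alt(html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing
```

Run against the two example tags above, it would flag only `sad-puppies.png`.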

It’s fantastic to see so many great websites that work well on mobile! We’re looking forward to being able to index more and more of the web using mobile-first indexing, helping more users to search the web in the same way that they access it: with a smartphone. We’ll continue to monitor and evaluate this change carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by John Mueller, wearer of many socks, Google Switzerland

Why & how to secure your website with the HTTPS protocol

You can find the whole session, about one hour long, in this video:

- What HTTPS encryption is, and why it is important to protect your visitors and yourself

- How HTTPS enables a more modern web

- What the usual complaints about HTTPS are, and whether they are still true today:

  - “But HTTPS certificates cost so much money!”

  - “But switching to HTTPS will destroy my SEO!”

  - “But ‘mixed content’ is such a headache!”

  - “But my ad revenue will get destroyed!”

  - “But HTTPS is sooooo sloooow!”

- Some practical advice for running the migration, aggregated from:

  - The “site move with URL changes” documentation

  - General advice on which HTTPS specifications to choose (HSTS, encryption key strength, etc.)
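Mixed content means an HTTPS page that still loads subresources over plain HTTP. As a first pass before migrating, the Python sketch below scans a page’s HTML for subresource URLs that start with `http://`. The tag/attribute list is an illustrative assumption, not exhaustive: it ignores CSS `url()` references, `srcset`, and resources injected by JavaScript. It also flags every `<link href>`, which includes stylesheets as well as non-subresource links such as canonicals that you will want to update anyway.

```python
from html.parser import HTMLParser

# Illustrative (not exhaustive) set of tag/attribute pairs that load subresources.
RESOURCE_ATTRS = {
    ("img", "src"), ("script", "src"), ("link", "href"), ("iframe", "src"),
    ("source", "src"), ("video", "src"), ("audio", "src"),
}

class MixedContentFinder(HTMLParser):
    """Collect (tag, url) pairs for resources referenced over plain HTTP."""
    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in RESOURCE_ATTRS and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))

def find_mixed_content(html: str) -> list:
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```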

Introducing the Indexing API and structured data for livestreams

Over the past few years, it’s become easier than ever to stream live videos online, from celebrity updates to special events. But it’s not always easy for people to determine which videos are live and know when to tune in. Today, we’re introducing new …

Rich Results expands for Question & Answer pages

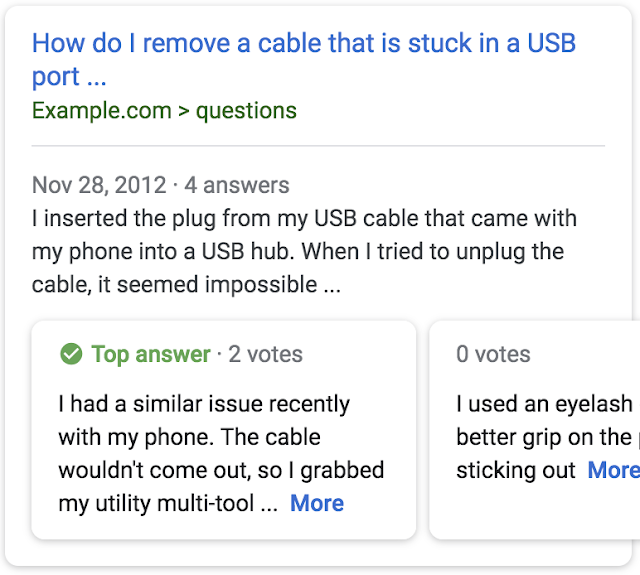

Frequently, the information users are looking for is on sites where people ask and answer each other’s questions. Popular social news sites, expert forums, and help and support message boards are all examples of this pattern.

A screenshot of an example search result for a page titled “Why do touchscreens sometimes register a touch when …” with a preview of the top answers from the page.

In order to help users better identify which search results may give the best information about their question, we have developed a new rich result type for question and answer sites. Search results for eligible Q&A pages display a preview of the top answers. This new presentation helps site owners reach the right users for their content and helps users get the relevant information about their questions faster.


To be eligible for this feature, add Q&A structured data to your pages with Q&A content. Be sure to use the Structured Data Testing Tool to see if your page is eligible and to preview the appearance in search results. You can also check out Search Console to see aggregate stats and markup error examples. The Performance report also tells you which queries show your Q&A Rich Result in Search results, and how these change over time.
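To illustrate what Q&A markup can look like, the Python sketch below builds a schema.org `QAPage` whose `mainEntity` is a `Question` with an `acceptedAnswer` and `suggestedAnswer` list, and serializes it as JSON-LD. The property names come from schema.org; which of them Google requires or recommends for this rich result is not stated here, so check the Q&A guidelines and validate the output with the Structured Data Testing Tool. The function names and sample question are hypothetical.

```python
import json

def qa_page_jsonld(question, details, answers, accepted_index=None):
    """Build QAPage markup; answers is a list of (text, upvote_count) tuples."""
    answer_items = [
        {"@type": "Answer", "text": text, "upvoteCount": upvotes}
        for text, upvotes in answers
    ]
    main_entity = {
        "@type": "Question",
        "name": question,
        "text": details,
        "answerCount": len(answer_items),
    }
    others = answer_items
    if accepted_index is not None:
        main_entity["acceptedAnswer"] = answer_items[accepted_index]
        others = answer_items[:accepted_index] + answer_items[accepted_index + 1:]
    if others:
        main_entity["suggestedAnswer"] = others
    return {"@context": "https://schema.org", "@type": "QAPage", "mainEntity": main_entity}

def to_script_tag(data):
    """Serialize for embedding; escaping "</" stops user text from closing the tag."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"

# Hypothetical usage:
markup = qa_page_jsonld(
    "How do I reset my router?", "It keeps dropping the connection.",
    [("Unplug it for 30 seconds.", 12), ("Call your ISP.", 3)], accepted_index=0,
)
print(to_script_tag(markup))
```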

If you have any questions, ask us in the Webmaster Help Forum or reach out on Twitter!

Posted by Kayla Hanson, Software Engineer

PageSpeed Insights, now powered by Lighthouse

At Google, we know that speed matters and we provide a variety of tools to help everyone understand the performance of a page or site. Historically, these tools have used different analysis engines. Unfortunately, this caused some confusion because the…

Notifying users of unclear subscription pages

Unclear mobile subscriptions

Clearer billing information for Chrome users

- Is the billing information visible and obvious to users? For example, omitting subscription information from the subscription page, or hiding it, is a bad start, because users should have access to this information when agreeing to subscribe.

- Can customers easily see the costs they’re going to incur before accepting the terms? For example, displaying the billing information in grey characters on a grey background, which makes it hard to read, is not considered good user practice.

- Is the fee structure easily understandable? For example, the formula presented to explain how the cost of the service will be determined should be as simple and straightforward as possible.
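The grey-on-grey example is about text contrast. The post does not say how Chrome evaluates readability, so as one rough, assumption-labeled yardstick, the Python sketch below computes the WCAG 2.x contrast ratio between a text color and its background. WCAG’s AA level asks for at least 4.5:1 for normal-size text. This is a general accessibility measure, not the check Chrome uses.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(rgb) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(foreground, background) -> float:
    """WCAG contrast ratio: 1.0 for identical colors, up to 21.0 for black on white."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)

# Hypothetical check: grey billing text on a slightly lighter grey background.
print(contrast_ratio((150, 150, 150), (170, 170, 170)) >= 4.5)
```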

If your billing service takes users through a clearly visible and understandable billing process as described in our best practices, you don’t need to make any changes. Also, the new warning in Chrome doesn’t impact your website’s ranking in Google Search.

Introducing reCAPTCHA v3: the new way to stop bots

A Frictionless User Experience

Over the last decade, reCAPTCHA has continuously evolved its technology. In reCAPTCHA v1, every user was asked to pass a challenge by reading distorted text and typing into a box. To improve both user experience and security, we introduced reCAPTCHA v2 and began to use many other signals to determine whether a request came from a human or bot. This enabled reCAPTCHA challenges to move from a dominant to a secondary role in detecting abuse, letting about half of users pass with a single click. Now with reCAPTCHA v3, we are fundamentally changing how sites can test for human vs. bot activities by returning a score to tell you how suspicious an interaction is and eliminating the need to interrupt users with challenges at all. reCAPTCHA v3 runs adaptive risk analysis in the background to alert you of suspicious traffic while letting your human users enjoy a frictionless experience on your site.

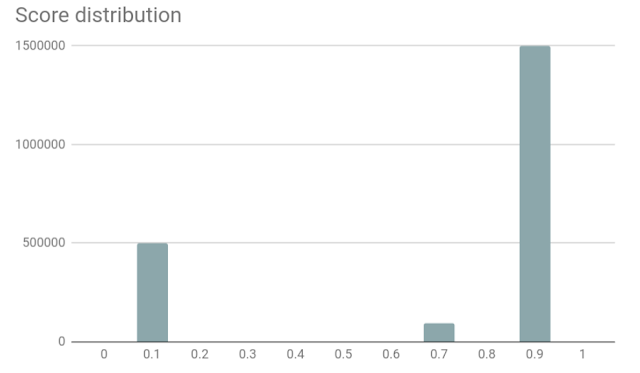

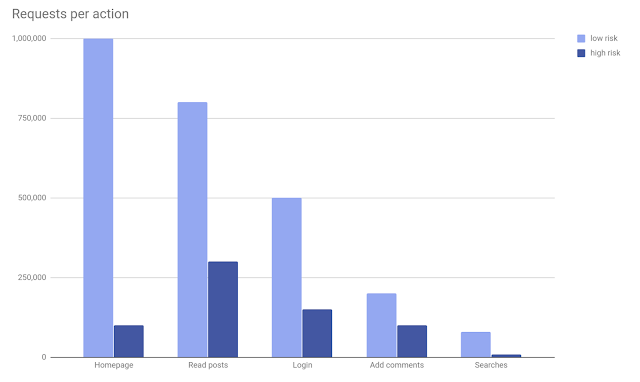

More Accurate Bot Detection with “Actions”

In reCAPTCHA v3, we are introducing a new concept called “Action”—a tag that you can use to define the key steps of your user journey and enable reCAPTCHA to run its risk analysis in context. Since reCAPTCHA v3 doesn’t interrupt users, we recommend adding reCAPTCHA v3 to multiple pages. In this way, the reCAPTCHA adaptive risk analysis engine can identify the pattern of attackers more accurately by looking at the activities across different pages on your website. In the reCAPTCHA admin console, you can get a full overview of reCAPTCHA score distribution and a breakdown for the stats of the top 10 actions on your site, to help you identify which exact pages are being targeted by bots and how suspicious the traffic was on those pages.
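The admin console already shows per-action statistics. If you also want them in your own logs, the Python sketch below groups stored verification results by action. It assumes you keep the decoded JSON responses from reCAPTCHA’s server-side `siteverify` endpoint, which include `success`, `score`, and `action` fields. The 0.5 "low score" cutoff and the action names in the usage are arbitrary, hypothetical choices.

```python
from collections import defaultdict
from statistics import mean

def summarize_by_action(verifications, low_score=0.5):
    """Per action: request count, mean score, and share of scores below low_score."""
    scores = defaultdict(list)
    for result in verifications:
        # Failed verifications carry no usable score, so they are skipped here.
        if result.get("success"):
            scores[result.get("action", "(none)")].append(result["score"])
    return {
        action: {
            "count": len(values),
            "mean_score": mean(values),
            "low_share": sum(s < low_score for s in values) / len(values),
        }
        for action, values in scores.items()
    }
```

An action whose `low_share` is much higher than the others is a hint that the corresponding page is being targeted.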

Fighting Bots Your Way

Another big benefit that you’ll get from reCAPTCHA v3 is the flexibility to prevent spam and abuse in the way that best fits your website. Previously, the reCAPTCHA system mostly decided when and what CAPTCHAs to serve to users, leaving you with limited influence over your website’s user experience. Now, reCAPTCHA v3 will provide you with a score that tells you how suspicious an interaction is. There are three potential ways you can use the score. First, you can set a threshold that determines when a user is let through or when further verification needs to be done, for example, using two-factor authentication and phone verification. Second, you can combine the score with your own signals that reCAPTCHA can’t access—such as user profiles or transaction histories. Third, you can use the reCAPTCHA score as one of the signals to train your machine learning model to fight abuse. By providing you with these new ways to customize the actions that occur for different types of traffic, this new version lets you protect your site against bots and improve your user experience based on your website’s specific needs.
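The first two options above, a threshold plus your own signals, can be combined in a few lines. The Python sketch below takes one decoded `siteverify` response and returns "allow", "challenge" (meaning further verification, such as two-factor authentication), or "reject". The default threshold of 0.5, the 0.2 relaxation for a "trusted" user, and the `trusted_user` flag itself are hypothetical stand-ins for your site’s own policy and signals.

```python
def decide(verification, expected_action, threshold=0.5, trusted_user=False):
    """Return 'allow', 'challenge', or 'reject' for one siteverify response."""
    # Reject tokens that failed verification or were issued for a different action.
    if not verification.get("success") or verification.get("action") != expected_action:
        return "reject"
    score = verification.get("score", 0.0)
    # Hypothetical site-specific signal: relax the threshold for known-good users.
    effective_threshold = threshold - 0.2 if trusted_user else threshold
    return "allow" if score >= effective_threshold else "challenge"
```

Checking `action` against the action you expected for that page guards against a token generated on one page being replayed on another.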

In short, reCAPTCHA v3 helps to protect your sites without user friction and gives you more power to decide what to do in risky situations. As always, we are working every day to stay ahead of attackers and keep the Internet easy and safe to use (except for bots).

Ready to get started with reCAPTCHA v3? Visit our developer site for more details.

Posted by Wei Liu, Google Product Manager

Google is introducing its Product Experts Program!

Google’s Top Contributors and Rising Stars are some of the most active and helpful members of our product forums. With over 100 members globally just for the Webmaster Forums (1,000 members if you count all product forums), this community of experts helps thousands of people every year by sharing their knowledge and helping others get the most out of Google products.

Today, we’re excited to announce that we’re rebranding and relaunching the Top Contributor program as Google’s Product Experts program! Same community of experts, shiny new brand.

Over the following days, we’ll be updating our badges in the forums so you can recognize who our most passionate and dedicated Product Experts are:

Silver Product Expert: Newer members who are developing their product knowledge

Gold Product Expert: Trusted members who are knowledgeable and active contributors

Platinum Product Expert: Seasoned members who contribute beyond providing help through mentoring, creating content, and more

Product Expert Alumni: Past members who are no longer active, but were previously recognized for their helpfulness

More information about the new badges and names.

These Product Experts are users who are passionate about Google products and enjoy helping other users. They also help us by giving feedback on the tools we all use, like Search Console, and by surfacing questions they think Google should answer better. Obtaining feedback from our users is one of Google’s core values, and Product Experts often have a great understanding of what affects a lot of our users. For example, here is a blog post detailing how Product Expert feedback about Search Console was used to build the new version of the tool.

Visit the new Product Experts program website to learn how to become a Product Expert yourself, and come join us on our Webmaster forums. We’d love to hear from you!

Written by Vincent Courson, Search Outreach team

The new Search Console is graduating out of Beta 🎓

Today we mark an important milestone in Search Console’s history: we are graduating the new Search Console out of beta! With this graduation we are also launching the Manual Actions report and adding a “Test Live” capability to the recently launched URL inspection tool. These join a stream of reports and features we launched in the new Search Console over the past few months.

Our journey to the new Search Console

We launched the new Search Console at the beginning of the year. Since then we have been busy hearing and responding to your feedback, adding new features such as the URL Inspection Tool, and migrating key reports and features. Here’s what the new Search Console gives you:

More data:

- Get an accurate view of your website content using the Index Coverage report.

- Review your Search Analytics data going back 16 months in the Performance report.

- See information on links pointing to your site and within your site using the Links report.

- Retrieve crawling, indexing, and serving information for any URL directly from the Google index using the URL Inspection Tool.

Better alerting and new “fixed it” flows:

- Get automatic alerts and see a listing of pages affected by Crawling, Indexing, AMP, Mobile Usability, Recipes, or Job posting issues.

- Reports now show the HTML code where we think a fix is necessary (if applicable).

- Share information quickly with the relevant people in your organization to drive the fix.

- Notify Google when you’ve fixed an issue. We will review your pages, validate whether the issue is fixed, and return a detailed log of the validation findings.

Simplified sitemaps and account settings management:

- Let Google know how your site is structured by submitting sitemaps.

- Submit individual URLs for indexing (see below).

- Add new sites to your account, invite and manage users.
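Submitting a sitemap assumes you have one. As a minimal sketch, the Python code below generates a sitemap in the sitemaps.org 0.9 XML format with the standard library. `lastmod` is optional and given here as a plain `YYYY-MM-DD` date. The function name and example URLs are hypothetical. Submission itself happens in Search Console and is not shown.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """entries: iterable of (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in URLs automatically.
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

print(build_sitemap([("https://example.com/", "2018-10-01"),
                     ("https://example.com/about", None)]))
```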

Out of Beta

While the old Search Console still has some features that are not yet available in the new one, we believe that the most common use cases are supported, in an improved way, in the new Search Console. When an equivalent feature exists in both old and new Search Console, our messages will point users to the new version. We’ll also add a reminder link in the old report. After a reasonable period, we will remove the old report.

Read more about how to migrate from the old to the new Search Console, including a list of improved reports and how to perform common tasks, in our help center.

Manual Actions and Security Issues alerts

To ensure that you don’t miss any critical alerts for your site, active manual actions and security issues will be shown directly on the Overview page in the new console. In addition, the Manual Actions report has gotten a fresher look in the new Search Console. From there, you can review the details for any pending Manual Action and, if needed, file a reconsideration request.

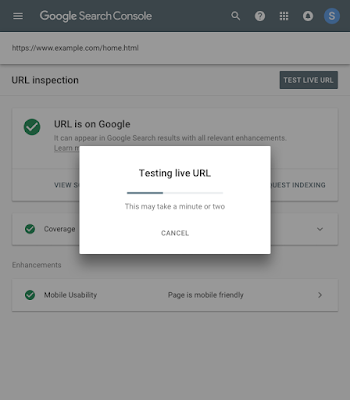

URL Inspection – Live mode and request indexing

The URL inspection tool that we launched a few months ago now enables you to run the inspection on the live version of the page. This is useful for debugging and fixing issues in a page or confirming whether a reported issue still exists in a page. If the issue is fixed on the live version of the page, you can ask Google to recrawl and index the page.

We’re not finished yet!

Your feedback is important to us! As we evolve Search Console, your feedback helps us to tune our efforts. You can still switch between the old and new products easily, so any missing functionality you need is just a few clicks away. We will continue working on moving more reports and tools as well as adding exciting new capabilities to the new Search Console.

Posted by Hillel Maoz and Yinnon Haviv, Engineering Leads, Search Console team

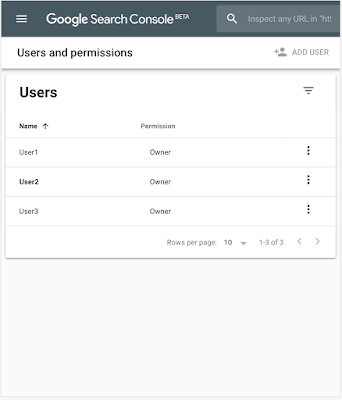

Collaboration and user management in the new Search Console

As part of our reinvention of Search Console, we have been rethinking the models of facilitating cooperation and accountability for our users. We decided to redesign the product around cooperative team usage and transparency of action history. The new Search Console will gradually provide better history tracking to show who performed which significant property-affecting modifications, such as changing a setting, validating an issue or submitting a new sitemap. In that spirit we also plan to enable all users to see critical site messages.

New features

- User management is now an integral part of Search Console.

- The new Search Console enables you to share a read-only view of many reports, including Index coverage, AMP, and Mobile Usability. Learn more.

- A new user management interface that enables all users to see and (if appropriate) manage user roles for all property users.

New Role definition

- In order to provide a simpler permission model, we are planning to limit the “restricted” user role to read-only status. While being able to see all information, read-only users will no longer be able to perform any state-changing actions, including starting a fix validation or sharing an issue.

Best practices

As a reminder, here are some best practices for managing user permissions in Search Console:

- Grant users only the permission level that they need to do their work. See the permissions descriptions.

- If you need to share an issue details report, click the Share link on that page.

- Revoke permissions from users who no longer work on a property.

- When removing a previous verified owner, be sure to remove all verification tokens for that user.

- Regularly audit and update the user permissions using the Users & Permissions page in new Search Console.

User feedback

As part of our Beta exploration, we released visibility of the user management interface to all user roles. Some users reached out to request more time to prepare for the updated user management model, including the ability of restricted and full users to easily see a list of other collaborators on the site. We’ve taken that feedback and will hold off on that part of the launch. Stay tuned for more updates relating to collaboration tools and changes on our permission models.

As always, we love to hear feedback from our users. Feel free to use the feedback form within Search Console, and we welcome your discussions in our help forums as well!

Posted by John Mueller, Google Switzerland

Links, Mobile Usability, and site management in the new Search Console

More features are coming to the new Search Console. This time we’ve focused on importing existing popular features from the old Search Console to the new product.

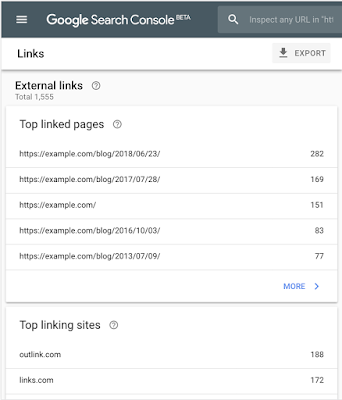

Links Report

Search Console users value the ability to see links to and within their site, as Google Search sees them. Today, we are rolling out the new Links report, which combines the functionality of the “Links to your site” and “Internal Links” reports in the old Search Console. We hope you find this useful!

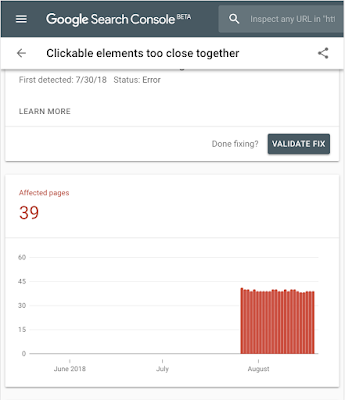

Mobile Usability report

Mobile Usability is an important priority for all site owners. In order to help site owners with fixing mobile usability issues, we launched the Mobile Usability report on the new Search Console. Issue names are the same as in the old report but we now allow users to submit a validation and reindexing request when an issue is fixed, similar to other reports in the new Search Console.

Site and user management

To make the new Search Console feel more like home, we’ve added the ability to add and verify new sites, and manage your property’s users and permissions, directly in new Search Console using our newly added settings page.

Keep sending feedback

As always, we would love to get your feedback through the tools directly and our help forums so please share and let us know how we’re doing.

Posted by Ariel Kroszynski and Roman Kecher – Search Console engineers

Hey Google, what’s the latest news?

Since launching the Google Assistant in 2016, we have seen users ask questions about everything from weather to recipes and news. In order to fulfill news queries with results people can count on, we collaborated on a new schema.org structured data spe…

An update to referral source URLs for Google Images

Every day, hundreds of millions of people use Google Images to visually discover and explore content on the web. Whether it be finding ideas for your next baking project, or visual instructions on how to fix a flat tire, exploring image results can so…

How we fought webspam – Webspam Report 2017

We always want to make sure that when you use Google Search to find information, you get the highest quality results. But, we are aware of many bad actors who are trying to manipulate search ranking and profit from it, which is at odds with our core mission: to organize the world’s information and make it universally accessible and useful. Over the years, we’ve devoted a huge effort toward combating abuse and spam on Search. Here’s a look at how we fought abuse in 2017.

We call these various types of abuse that violate the webmaster guidelines “spam.” Our evaluation indicated that for many years, less than 1 percent of the search results that users visited were spammy. In the last couple of years, we’ve managed to reduce this further by half.

Google webspam trends and how we fought webspam in 2017

Another abuse vector is the manipulation of links, which are one of the foundational ranking signals for Search. In 2017 we doubled down on our efforts to remove unnatural links via ranking improvements and scalable manual actions. We observed a year-over-year reduction in spam links of almost half.

Working with users and webmasters for a better web

We also actively work with webmasters to maintain the health of the web ecosystem. Last year, we sent 45 million messages to registered website owners via Search Console letting them know about issues we identified with their websites. More than 6 million of these messages were related to manual actions, providing transparency to webmasters so they understand why their sites got manual actions and how to resolve the issue.

Last year, we released a beta version of a new Search Console to a limited number of users and afterwards, to all users of Search Console. We listened to what matters most to the users, and started with popular functionalities such as Search performance, Index Coverage and others. These can help webmasters optimize their websites’ Google Search presence more easily.

Through enhanced Safe Browsing protections, we continue to protect more users from bad actors online. In the last year, we made significant improvements to our Safe Browsing protection, such as broadening our protection of macOS devices, enabling predictive phishing protection in Chrome, cracking down on mobile unwanted software, and significantly improving our ability to protect users from deceptive Chrome extension installation.

We have a multitude of channels to engage directly with webmasters. We have dedicated team members who meet with webmasters regularly both online and in person. We conducted more than 250 online office hours, online events and offline events around the world in more than 60 cities, to audiences totaling over 220,000 website owners, webmasters and digital marketers. In addition, our official support forum has answered a high volume of questions in many languages. Last year, the forum had 63,000 threads generating over 280,000 contributing posts by 100+ Top Contributors globally. For more details, see this post. Apart from the forums, blogs and the SEO starter guide, the Google Webmaster YouTube channel is another place to find tips and insights. We launched a new SEO snippets video series that offers short, to-the-point answers to specific questions. Be sure to subscribe to the channel!

Despite all these improvements, we know we’re not done yet. We’re relentless in our pursuit of an abuse-free user experience, and will keep improving our collaboration with the ecosystem to make it happen.

Introducing the Indexing API for job posting URLs

Last June we launched a job search experience that has since connected tens of millions of job seekers around the world with relevant job opportunities from third party providers across the web. Timely indexing of new job content is critical because m…

New URL inspection tool & more in Search Console

A few months ago, we introduced the new Search Console. Here are some updates on how it’s progressing.

Welcome “URL inspection” tool

One of our most common user requests in Search Console is for more details on how Google Search sees a specific URL. We listened, and today we’ve started launching a new tool, “URL inspection,” to provide these details so Search becomes more transparent. The URL Inspection tool provides detailed crawl, index, and serving information about your pages, directly from the Google index.

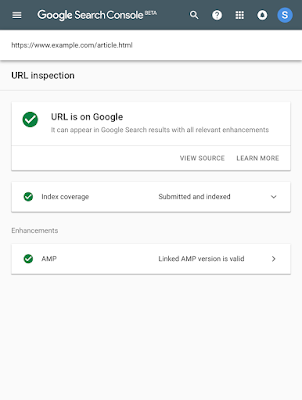

Enter a URL that you own to learn the last crawl date and status, any crawling or indexing errors, and the canonical URL for that page. If the page was successfully indexed, you can see information and status about any enhancements we found on the page, such as linked AMP version or rich results like Recipes and Jobs.

URL is indexed with valid AMP enhancement

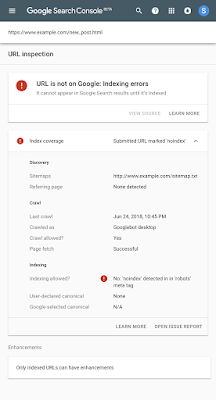

If a page isn’t indexed, you can learn why. The new report includes information about noindex robots meta tags and Google’s canonical URL for the page.

URL is not indexed due to ‘noindex’ meta tag in the HTML
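Before reaching for the tool, you can check your own pages for the most common blocker shown above. The Python sketch below reports whether a page carries a `noindex` (or `none`, which implies `noindex`) directive in a `robots` or `googlebot` meta tag, or in an `X-Robots-Tag` response header passed in as a string. It is a simplification: it ignores user-agent-prefixed header values such as `googlebot: noindex`, and the function names are illustrative.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            for directive in (attributes.get("content") or "").split(","):
                self.directives.add(directive.strip().lower())

def is_noindex(html, x_robots_tag=None):
    """True if the HTML or the X-Robots-Tag header value carries noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = {d.strip().lower() for d in (x_robots_tag or "").split(",")}
    return bool({"noindex", "none"} & (parser.directives | header))
```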

A single click can take you to the issue report showing all other pages affected by the same issue to help you track down and fix common bugs.

We hope that the URL Inspection tool will help you debug issues with new or existing pages in the Google Index. We began rolling it out today; it will become available to all users in the coming weeks.

More exciting updates

In addition to the launch of URL inspection, we have a few more features and reports we recently launched to the new Search Console:

- Sixteen months of traffic data: The Search Analytics API now returns 16 months of data, just like the Performance report.

- Recipe report: The Recipe report helps you fix structured data issues affecting Recipe rich results. Use our task-oriented interface to test and validate your fixes; we will keep you informed of your progress using messages.

- New Search Appearance filters in Search Analytics: The performance report now gives you more visibility on new search appearance results, including Web Light and Google Play Instant results.
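To ask the Search Analytics API for the full 16-month window, you need a start date 16 months before your end date. The Python sketch below computes that date and builds a request body using the `startDate`, `endDate`, `dimensions`, and `rowLimit` fields of the API’s query method. The default dimension and row limit are arbitrary choices. Exactly how far back data is available on a given day, and how you authenticate and send the request, are not covered here.

```python
import calendar
from datetime import date

def months_back(d: date, months: int) -> date:
    """Shift a date back by whole months, clamping to the end of shorter months."""
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)

def sixteen_month_query(end: date, dimensions=("query",), row_limit=1000) -> dict:
    """Request body for a Search Analytics query spanning 16 months up to `end`."""
    return {
        "startDate": months_back(end, 16).isoformat(),
        "endDate": end.isoformat(),
        "dimensions": list(dimensions),
        "rowLimit": row_limit,
    }
```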

Thank you for your feedback

We are constantly reading your feedback, conducting surveys, and monitoring usage statistics of the new Search Console. We are happy to see so many of you using the new issue validation flow in Index Coverage and the AMP report. We notice that issues tend to get fixed quicker when you use these tools. We also see that you appreciate the updates on the validation process that we provide by email or on the validation details page.

We want to thank everyone who provided feedback: it has helped us improve our flows and fix bugs on our side.

More to come

The new Search Console is still in beta, but it’s adding features and reports every month. Please keep sharing your feedback through the various channels and let us know how we’re doing.

Posted by Roman Kecher and Sion Schori – Search Console engineers

Google Search at I/O 2018

What we did at I/O

The event was a wonderful way to meet many great people from various communities across the globe, exchange ideas, and gather feedback. Besides many great web sessions, codelabs, and office hours, we shared a few things with the community in two sessions specific to Search:

- Deliver search-friendly JavaScript-powered websites with John Mueller and Tom Greenaway

- Build a successful web presence with Google Search with Mariya Moeva and John Mueller

The sessions included the launch of JavaScript error reporting in the Mobile-Friendly Test tool, dynamic rendering (we will discuss this in more detail in a future post), and an explanation of how content management systems (CMSs) can use the Indexing and Search Console APIs to provide users with insights. For example, Wix lets its users submit their homepage to the index and see it in Search results instantly, and Squarespace created a Google Search keywords report to help webmasters understand what prospective users search for.

During the event, we also presented the new Search Console in the Sandbox area for people to try and were happy to get a lot of positive feedback, from people being excited about the AMP Status report to others exploring how to improve their content for Search.

Hands-on codelabs, case studies and more

We presented the Structured Data Codelab that walks you through adding and testing structured data. We were really happy to see that it ended up being one of the top 20 codelabs by completions at I/O. If you want to learn more about the benefits of using Structured Data, check out our case studies.

During the in-person office hours we saw a lot of interest around HTTPS, mobile-first indexing, AMP, and many other topics. The in-person Office Hours were a wonderful addition to our monthly Webmaster Office Hours hangout. The questions and comments will help us adjust our documentation and tools by making them clearer and easier to use for everyone.

Highlights and key takeaways

We also repeated a few key points that web developers should have an eye on when building websites, such as:

- Indexing and rendering don’t happen at the same time. We may defer the rendering to a later point in time.

- Make sure the content you want in Search has metadata, correct HTTP statuses, and the intended canonical tag.

- Hash-based routing (URLs with “#”) should be deprecated in favour of the JavaScript History API in Single Page Apps.

- Links should have an href attribute pointing to a URL, so Googlebot can follow the links properly.
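The last two points can be spot-checked in your rendered HTML. The Python sketch below flags `<a>` elements with no `href`, with a `javascript:` URL, or with a hash-based route. Treating only `#/` and `#!` prefixes as routes is an assumption; a plain `#section` fragment is an ordinary in-page anchor and is not flagged. The function name is illustrative.

```python
from html.parser import HTMLParser

class LinkAuditor(HTMLParser):
    """Flag <a> elements whose target a crawler may not be able to follow."""
    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = (dict(attrs).get("href") or "").strip()
        if not href:
            self.problems.append(("missing href", href))
        elif href.lower().startswith("javascript:"):
            self.problems.append(("javascript: URL", href))
        elif href.startswith(("#/", "#!")):
            # Assumed convention for single-page-app routes kept in the fragment.
            self.problems.append(("hash-based route", href))

def audit_links(html: str) -> list:
    auditor = LinkAuditor()
    auditor.feed(html)
    return auditor.problems
```

A link such as `<a href="/products/shoes">`, served by a route built on the History API, passes the check.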

Make sure to watch this talk for more on indexing, dynamic rendering and troubleshooting your site. If you want to learn more about what to do as a CMS developer or theme author, or about Structured Data, watch this talk.

We were excited to meet some of you at I/O as well as the global I/O extended events and share the latest developments in Search. To stay in touch, join the Webmaster Forum or follow us on Twitter, Google+, and YouTube.

Posted by Martin Splitt, Webmaster Trends Analyst

Our goal: helping webmasters and content creators

- Google Web Fundamentals: Provides technical guidance on building a modern website that takes advantage of open web standards.

- Google Search developer documentation: Describes how Google crawls and indexes a website. Includes authoritative guidance on building a site that is optimized for Google Search.

- Search Console Help Center: Provides detailed information on how to use and take advantage of Search Console, the best way for a website owner to understand how Google sees their site.

- The Search Engine Optimization (SEO) Starter Guide: Provides a complete overview of the basics of SEO according to our recommended best practices.

- Google webmaster guidelines: Describes policies and practices that may lead to a site being removed entirely from the Google index or otherwise affected by an algorithmic or manual spam action that can negatively affect their Search appearance.

- Google Webmasters YouTube Channel

Google I/O 2018 – What sessions should SEOs and Webmasters watch live?

However, you don’t have to physically attend the event to take advantage of this once-a-year opportunity: many conferences and talks are live streamed on YouTube for anyone to watch. You will find the full-event schedule here.

Send your recipes to the Google Assistant

Last year, we launched Google Home with recipe guidance, providing users with step-by-step instructions for cooking recipes. With more people using Google Home every day, we’re publishing new guidelines so your recipes can support this voice guided ex…