User experience improvements with page speed in mobile search

To help users find the answers to their questions faster, we included page speed as a ranking factor for mobile searches in 2018. Since then, we’ve observed improvements on many pages across the web. We want to recognize the performance improvements webmasters have made over the past year. A few highlights:

- For the slowest one-third of traffic, we saw user-centric performance metrics improve by 15% to 20% in 2018. As a comparison, no improvement was seen in 2017.

- We observed improvements across the whole web ecosystem. On a per-country basis, more than 95% of countries had improved speeds.

- When a page is slow to load, users are more likely to abandon the navigation. Thanks to these speed improvements, we’ve observed a 20% reduction in abandonment rate for navigations initiated from Search, a metric that site owners can now also measure via the Network Error Logging API available in Chrome.

- In 2018, developers ran over a billion PageSpeed Insights audits to identify performance optimization opportunities for over 200 million unique URLs.
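For site owners who want to collect this kind of data themselves, Network Error Logging is switched on with two HTTP response headers, Report-To and NEL, served over HTTPS. The sketch below only builds the two header values. The group name, the reporting endpoint URL, and the 30-day max_age are placeholder assumptions, not values from this post.

```javascript
// Build the two response headers that opt a site into Network Error Logging.
// The group name and endpoint URL are hypothetical placeholders.
function buildNelHeaders(endpointUrl, maxAgeSeconds) {
  const group = 'network-errors';
  const reportTo = {
    group: group,
    max_age: maxAgeSeconds,
    endpoints: [{ url: endpointUrl }],
  };
  const nel = {
    report_to: group, // must match the Report-To group name
    max_age: maxAgeSeconds,
  };
  return {
    'Report-To': JSON.stringify(reportTo),
    'NEL': JSON.stringify(nel),
  };
}

const nelHeaders = buildNelHeaders('https://example.com/nel-reports', 30 * 24 * 60 * 60);
console.log(nelHeaders);
```

A server would attach both values to its responses; collecting and analyzing the reports that browsers send to the endpoint is a separate step not shown here.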

Great work and thank you! We encourage all webmasters to optimize their sites’ user experience. If you’re unsure how your pages are performing, the following tools and documents can be useful:

- PageSpeed Insights provides page analysis and optimization recommendations.

- Google Chrome User Experience Report provides the user experience metrics for how real-world Chrome users experience popular destinations on the web.

- Documentation on performance on Web Fundamentals.
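PageSpeed Insights can also be queried programmatically. As a rough sketch, the helper below builds a request URL for the PageSpeed Insights API; it assumes the v5 runPagespeed endpoint and its url and strategy query parameters, and the page address is a placeholder.

```javascript
// Build a request URL for the PageSpeed Insights API (v5 endpoint assumed).
function buildPsiRequestUrl(pageUrl, strategy) {
  const endpoint = new URL('https://www.googleapis.com/pagespeedonline/v5/runPagespeed');
  endpoint.searchParams.set('url', pageUrl);       // page to audit
  endpoint.searchParams.set('strategy', strategy); // 'mobile' or 'desktop'
  return endpoint.toString();
}

const psiUrl = buildPsiRequestUrl('https://example.com/', 'mobile');
console.log(psiUrl);
```

Fetching that URL returns a JSON report; the sketch stops at building the URL so that it runs without network access.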

For any questions, feel free to drop by our help forums (like the webmaster community) to chat with other experts.

Posted by Genqing Wu and Doantam Phan

How to discover & suggest Google-selected canonical URLs for your pages

Sometimes a web page can be reached by using more than one URL. In such cases, Google tries to determine the best URL to display in search and to use in other ways. We call this the “canonical URL.” There are ways site owners can help us better determine what should be the canonical URLs for their content.

If you suspect we’ve not selected the best canonical URL for your content, you can check by entering your page’s address into the URL Inspection tool within Search Console. It will show you the Google-selected canonical. If you believe there’s a better canonical that should be used, follow the steps on our duplicate URLs help page on how to suggest a preferred choice for consideration.
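One widely documented way to suggest a preferred URL is a rel="canonical" annotation, either as a link element in the page head or as an HTTP Link header. The sketch below generates both forms; the helper names and the example URL are made up for illustration, and Google treats the annotation as a suggestion rather than a directive.

```javascript
// Escape characters that are unsafe inside an HTML attribute value.
function escapeAttribute(value) {
  return value
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Render a rel="canonical" link element for the <head> of a duplicate page.
function canonicalLinkTag(preferredUrl) {
  return '<link rel="canonical" href="' + escapeAttribute(preferredUrl) + '">';
}

// Equivalent HTTP header value, useful for non-HTML files such as PDFs.
function canonicalLinkHeader(preferredUrl) {
  return '<' + preferredUrl + '>; rel="canonical"';
}

const preferred = 'https://www.example.com/dresses/green-dress';
console.log(canonicalLinkTag(preferred));
console.log('Link: ' + canonicalLinkHeader(preferred));
```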

Please be aware that if you search using the site: or inurl: commands, you will be shown URLs on the domain you specified, even if they aren’t the Google-selected canonicals. This happens because we’re fulfilling the exact request entered. Behind the scenes, we still use the Google-selected canonical, including when people see pages without using the site: or inurl: commands.

We’ve also changed the URL Inspection tool so that it will display any Google-selected canonical for a URL, not just those for properties you manage in Search Console. With this change, we’re also retiring the info: command. This was an alternative way of discovering canonicals. It was relatively underused, and the URL Inspection tool provides a more comprehensive solution to help publishers with URLs.

Posted by John Mueller, Google Switzerland

This year in Search Spam – Webspam report 2018

Google webspam trends and how we fought webspam in 2018

Working with users, webmasters and developers for a better web

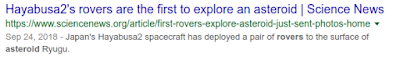

Help Google Search know the best date for your web page

Sometimes, Google shows dates next to listings in its search results. In this post, we’ll answer some commonly asked questions webmasters have about how these dates are determined and provide some best practices to help improve their accuracy.

How dates are determined

Google shows the date of a page when its automated systems determine that it would be relevant to do so, such as for pages that can be time-sensitive, including news content:

Google determines a date using a variety of factors, including but not limited to: any prominent date listed on the page itself or dates provided by the publisher through structured markup.

Google doesn’t depend on one single factor because all of them can be prone to issues. Publishers may not always provide a clear visible date. Sometimes, structured data may be lacking or may not be adjusted to the correct time zone. That’s why our systems look at several factors to come up with what we consider to be our best estimate of when a page was published or significantly updated.

How to specify a date on a page

To help Google to pick the right date, site owners and publishers should:

- Show a clear date: Show a visible date prominently on the page.

- Use structured data: Use the datePublished and dateModified schema with the correct time zone designator for AMP or non-AMP pages. When using structured data, make sure to use the ISO 8601 format for dates.
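To make the structured data advice concrete, here is a minimal sketch that formats dates in ISO 8601 with an explicit UTC offset and places them in JSON-LD markup. The NewsArticle type, the headline, the dates, and the helper names are illustrative assumptions; a real page would use its own values and any other properties it needs.

```javascript
// Format a Date as ISO 8601 with an explicit UTC offset, e.g. 2019-03-04T09:30:00+01:00.
function toIso8601WithOffset(date, offsetMinutes) {
  const pad = (n) => String(n).padStart(2, '0');
  // Shift the instant by the offset, then read the UTC fields as local wall-clock time.
  const local = new Date(date.getTime() + offsetMinutes * 60 * 1000);
  const sign = offsetMinutes < 0 ? '-' : '+';
  const abs = Math.abs(offsetMinutes);
  return (
    local.getUTCFullYear() + '-' + pad(local.getUTCMonth() + 1) + '-' + pad(local.getUTCDate()) +
    'T' + pad(local.getUTCHours()) + ':' + pad(local.getUTCMinutes()) + ':' + pad(local.getUTCSeconds()) +
    sign + pad(Math.floor(abs / 60)) + ':' + pad(abs % 60)
  );
}

// Build a JSON-LD object carrying both dates (hypothetical article data).
function articleJsonLd(headline, published, modified, offsetMinutes) {
  return {
    '@context': 'https://schema.org',
    '@type': 'NewsArticle',
    headline: headline,
    datePublished: toIso8601WithOffset(published, offsetMinutes),
    dateModified: toIso8601WithOffset(modified, offsetMinutes),
  };
}

const markup = articleJsonLd(
  'Example headline',
  new Date(Date.UTC(2019, 2, 4, 8, 30, 0)),
  new Date(Date.UTC(2019, 2, 5, 14, 0, 0)),
  60 // the site publishes in UTC+01:00
);
console.log('<script type="application/ld+json">' + JSON.stringify(markup, null, 2) + '</script>');
```

Passing the offset explicitly keeps the output independent of the server's own time zone setting, which is one way to avoid the time zone mismatches described above.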

Guidelines specific to Google News

Google News requires clearly showing both the date and the time that content was published or updated. Structured data alone is not enough, though it is recommended to use in addition to a visible date and time. Date and time should be positioned between the headline and the article text. For more guidance, also see our help page about article dates.

If an article has been substantially changed, it can make sense to give it a fresh date and time. However, don’t artificially freshen a story without adding significant information or some other compelling reason for the freshening. Also, do not create a very slightly updated story from one previously published, then delete the old story and redirect to the new one. That’s against our article URLs guidelines.

More best practices for dates on web pages

In addition to the most important requirements listed above, here are additional best practices to help Google determine the best date to consider showing for a web page:

- Show when a page has been updated: If you update a page significantly, also update the visible date (and time, if you display that). If desired, you can show two dates: when a page was originally published and when it was updated. Just do so in a way that’s visually clear to your readers. If showing both dates, it’s also highly recommended to use datePublished and dateModified for AMP or non-AMP pages to make it easier for algorithms to recognize.

- Use the right time zone: If specifying a time, make sure to provide the correct time zone, taking into account daylight saving time as appropriate.

- Be consistent in usage: Within a page, make sure to use exactly the same date (and, potentially, time) in structured data as well as in the visible part of the page. Make sure to use the same time zone if you specify one on the page.

- Don’t use future dates or dates related to what a page is about: Always use a date for when a page itself was published or updated, not a date linked to something like an event that the page is writing about, especially for events or other subjects that happen in the future (you may use Event markup separately, if appropriate).

- Follow Google’s structured data guidelines: While Google doesn’t guarantee that a date (or structured data in general) specified on a page will be used, following our structured data guidelines does help our algorithms to have it available in a machine-readable way.

- Troubleshoot by minimizing other dates on the page: If you’ve followed the best practices above and find incorrect dates are being selected, consider if you can remove or minimize other dates that may appear on the page, such as those that might be next to related stories.
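Some of the practices above can be checked automatically before publishing. The following sketch flags dates that cannot be parsed, times that lack a time zone designator, and dates in the future. The function name and the returned messages are hypothetical, and this is not how Google evaluates dates; it is only a pre-publish sanity check.

```javascript
// Return a list of human-readable issues for a page's structured-data dates.
function checkStructuredDates(dates, now) {
  const issues = [];
  const hasZone = /(Z|[+-]\d{2}:\d{2})$/; // trailing "Z" or an offset such as +01:00
  for (const key of ['datePublished', 'dateModified']) {
    const value = dates[key];
    if (value === undefined) continue;
    if (Number.isNaN(Date.parse(value))) {
      issues.push(key + ' is not a parseable date');
      continue;
    }
    if (value.includes('T') && !hasZone.test(value)) {
      issues.push(key + ' has a time but no time zone designator');
    }
    if (Date.parse(value) > now.getTime()) {
      issues.push(key + ' is in the future');
    }
  }
  return issues;
}

const dateIssues = checkStructuredDates(
  { datePublished: '2019-03-04T09:30:00', dateModified: '2019-04-01T10:00:00+01:00' },
  new Date('2019-03-10T00:00:00Z')
);
console.log(dateIssues);
```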

We hope these guidelines help to make it easier to specify the right date on your website’s pages! For questions or comments on this, or other structured data topics, feel free to drop by our webmaster help forums.

Posted by John Mueller, Developer Advocate, Zurich

Introducing a new JavaScript SEO video series

We made a new video series on JavaScript SEO that benefits both web developers and SEOs. In the series, we want to help you make discoverable web apps with JavaScript. JavaScript is popular because it allows developers to build more engaging web applications. JavaScript f…

Announcing domain-wide data in Search Console

Google recommends verifying all versions of a website — http, https, www, and non-www — in order to get the most comprehensive view of your site in Google Search Console. Unfortunately, many separate listings can make it hard for webmasters to understand the full picture of how Google “sees” their domain as a whole. To make this easier, today we’re announcing “domain properties” in Search Console, a way of verifying and seeing the data from Google Search for a whole domain.

Domain properties show data for all URLs under the domain name, including all protocols, subdomains, and paths. They give you a complete view of your website across Search Console, reducing the need to manually combine data. So regardless of whether you use m-dot URLs for mobile pages, or are (finally) getting the migration to HTTPS set up, Search Console will be able to help with a complete view of your site’s data with regards to how Google Search sees it.
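As an illustration of that scope, the sketch below decides whether a given URL would fall under a domain property by comparing hostnames only, ignoring protocol and path. The function name and the matching rule are an approximation written for this example, not Search Console's actual implementation.

```javascript
// Decide whether a URL falls under a domain property such as "example.com".
// Protocol and path are ignored; only the hostname and its subdomains matter.
function isInDomainProperty(url, domain) {
  const host = new URL(url).hostname.toLowerCase();
  const root = domain.toLowerCase();
  return host === root || host.endsWith('.' + root);
}

console.log(isInDomainProperty('http://example.com/', 'example.com'));
console.log(isInDomainProperty('https://m.example.com/page', 'example.com'));
console.log(isInDomainProperty('https://notexample.com/', 'example.com'));
```

Requiring a leading dot before the domain keeps look-alike hosts such as notexample.com out of the property.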

If you already have DNS verification set up, Search Console will automatically create new domain properties for you over the next few weeks, with data over all reports. Otherwise, to add a new domain property, go to the property selector, add a new domain property, and use DNS verification. We recommend using domain properties where possible going forward.

Domain properties were built based on your feedback; thank you again for everything you’ve sent our way over the years! We hope this makes it easier to manage your site, and to get a complete overview without having to manually combine data. Should you have any questions, feel free to drop by our help forums, or leave us a comment on Twitter. And as always, you can also use the feedback feature built into Search Console.

Posted by Erez Bixon, Search Console Team

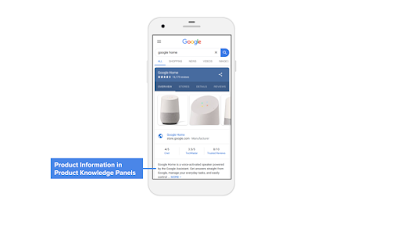

Help customers discover your products on Google

People come to Google to discover new brands and products throughout their shopping journey. On Search and Google Images, shoppers are provided with rich snippets like product description, ratings, and price to help guide purchase decisions.

Connecting potential customers with up-to-date and accurate product information is key to successful shopping journeys on Google, so today, we’re introducing new ways for merchants to provide this information to improve results for shoppers.

- Search Console

Many retailers and brands add structured data markup to their websites to ensure Google understands the products they sell. A new report for ‘Products’ is now available in Search Console for sites that use schema.org structured data markup to annotate product information. The report allows you to see any pending issues for markup on your site. Once an issue is fixed, you can use the report to validate if your issues were resolved by re-crawling your affected pages. Learn more about the rich result status reports.
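For readers who have not yet added product markup, the sketch below shows roughly what schema.org Product structured data can look like when generated as JSON-LD. The product values and the helper name are invented for illustration, and which properties are required for a given rich result should be checked against the structured data documentation.

```javascript
// Build schema.org Product markup as a JSON-LD object (all values are made up).
function productJsonLd(product) {
  return {
    '@context': 'https://schema.org',
    '@type': 'Product',
    name: product.name,
    description: product.description,
    image: product.imageUrl,
    aggregateRating: {
      '@type': 'AggregateRating',
      ratingValue: product.ratingValue,
      reviewCount: product.reviewCount,
    },
    offers: {
      '@type': 'Offer',
      price: product.price,
      priceCurrency: product.currency,
      availability: 'https://schema.org/InStock',
    },
  };
}

const productMarkup = productJsonLd({
  name: 'Example anvil',
  description: 'A sturdy example product.',
  imageUrl: 'https://example.com/photos/anvil.jpg',
  ratingValue: '4.4',
  reviewCount: '89',
  price: '119.99',
  currency: 'USD',
});
console.log('<script type="application/ld+json">' + JSON.stringify(productMarkup, null, 2) + '</script>');
```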

- Merchant Center

While structured data markup helps Google properly display your product information when we crawl your site, we are expanding capabilities for all retailers to directly provide up-to-date product information to Google in real time. Product data feeds uploaded to Google Merchant Center will now be eligible for display in results on surfaces like Search and Google Images. This product information will be ranked based only on relevance to users’ queries, and no payment is required or accepted for eligibility. We’re starting with the expansion in the US, and support for other countries will be announced later in the year.

Get started

You don’t need a Google Ads campaign to participate. If you don’t have an existing account and sell your products in the US, create a Merchant Center account and upload a product data feed.

- Manufacturer Center

We’re also rolling out new features to improve your brand’s visibility and help customers find your products on Google by providing authoritative and up-to-date product information through Google Manufacturer Center. This information includes product description, variants, and rich content, such as high-quality images and videos that can show on the product’s knowledge panel.

These solutions give you multiple options to better reach and inform potential customers about your products as they shop across Google.

If you have any questions, be sure to post in our forum.

Posted by Bernhard Schindlholzer, Product Manager for Google Merchant Tools

Consolidating your website traffic on canonical URLs

In Search Console, the Performance report currently credits all page metrics to the exact URL that the user is referred to by Google Search. Although this provides very specific data, it makes property management more difficult; for example: if your s…

Dynamic Rendering with Rendertron

Which sites should consider dynamic rendering?

How does dynamic rendering work?

- Take a look at a sample web app

- Set up a small express.js server to serve the web app

- Install and configure Rendertron as a middleware for dynamic rendering

The sample web app

Cute cat images in a grid and a button to show more – this web app truly has it all!

Here is the JavaScript:

const apiUrl = 'https://api.thecatapi.com/v1/images/search?limit=50';

The mobile-friendly test shows that the page is mobile-friendly, but the screenshot is missing all the cats! The headline and button appear but none of the cat pictures are there.

Set up the server

Deploy a Rendertron instance

Rendertron runs a server that takes a URL and returns static HTML for the URL by using headless Chromium. We’ll follow the recommendation from the Rendertron project and use Google Cloud Platform.
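In practice, asking Rendertron for the static HTML of a page means requesting its render endpoint with the page's address appended. The helper below sketches how such a request URL can be put together. The host name is a placeholder, and percent-encoding the target URL is a choice made for this sketch, so check the Rendertron README for the exact form your version expects.

```javascript
// Build the URL that asks a Rendertron instance to render a given page.
// The Rendertron host used below is a hypothetical placeholder.
function rendertronRenderUrl(rendertronBase, pageUrl) {
  const base = rendertronBase.endsWith('/') ? rendertronBase : rendertronBase + '/';
  return base + 'render/' + encodeURIComponent(pageUrl);
}

const renderUrl = rendertronRenderUrl(
  'https://rendertron.example.com',
  'https://example.com/cats?page=2'
);
console.log(renderUrl);
```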

Figure: The form to create a new Google Cloud Platform project.

- Create a new project in the Google Cloud console. Take note of the “Project ID” below the input field.

- Install the Google Cloud SDK as described in the documentation and log in.

- Clone the Rendertron repository from GitHub with:

git clone https://github.com/GoogleChrome/rendertron.git

cd rendertron

- Run the following commands to install dependencies and build Rendertron on your computer:

npm install && npm run build

- Enable Rendertron’s cache by creating a new file called config.json in the rendertron directory with the following content:

{ "datastoreCache": true }

- Run the following command from the rendertron directory. Substitute YOUR_PROJECT_ID with your project ID from step 1.

gcloud app deploy app.yaml --project YOUR_PROJECT_ID

- Select a region of your choice and confirm the deployment. Wait for it to finish.

- Enter the URL YOUR_PROJECT_ID.appspot.com in your browser (substitute YOUR_PROJECT_ID with your actual project ID from step 1). You should see Rendertron’s interface with an input field and a few buttons.

Figure: Rendertron’s UI after deploying to Google Cloud Platform.

Add Rendertron to the server

Configure the bot list
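The details of this step are not preserved here, so the following is only a rough sketch of the general idea: keep a list of crawler user-agent substrings and test each incoming request's User-Agent header against it. The list contents and the function name are assumptions made for illustration, not the configuration from the original tutorial.

```javascript
// Hypothetical list of crawler user-agent substrings; extend it for your own needs.
const BOT_USER_AGENTS = ['googlebot', 'bingbot', 'twitterbot', 'facebookexternalhit', 'linkedinbot'];

// One case-insensitive pattern that matches any entry in the list.
const BOT_UA_PATTERN = new RegExp(BOT_USER_AGENTS.join('|'), 'i');

// Decide whether a request should get the pre-rendered version of a page.
function shouldPrerender(userAgent) {
  return typeof userAgent === 'string' && BOT_UA_PATTERN.test(userAgent);
}

console.log(shouldPrerender('Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'));
console.log(shouldPrerender('Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 Chrome/72.0 Safari/537.36'));
```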

Add the middleware

Testing our setup

Conclusion

Focusing on the new Search Console

Over the last year, the new Search Console has been growing and growing, with the goal of making it easier for site owners to focus on the important tasks. For us, focus means being able to put all our work into the new Search Console, being committed to the users, and with that, being able to turn off some of the older, perhaps already-improved, aspects of the old Search Console. This gives us space to further build out the new Search Console, adding and improving features over time.

Here are some of the upcoming changes in Search Console that we’re planning on making towards the end of March 2019:

Crawl errors in the new Index Coverage report

One of the more common pieces of feedback we received was that the list of crawl errors in Search Console was not actionable when it came to setting priorities (it’s normal that Google crawls URLs which don’t exist; it’s not something that needs to be fixed on the website). By shifting the focus to issues and patterns used for site indexing, we believe that site owners will be able to find and fix issues much faster (and when issues are fixed, you can request reprocessing quickly too). With this, we’re going to remove the old Crawl Errors report – for desktop, smartphone, and site-wide errors. We’ll continue to improve the way issues are recognized and flagged, so if there’s something that would help you, please submit feedback in the tools.

Along with the Crawl Errors report, we’re also deprecating the crawl errors API that’s based on the same internal systems. At the moment, we don’t have a replacement for this API. We’ll inform API users of this change directly.

Sitemaps data in Index Coverage

As we move forward with the new Search Console, we’re turning the old sitemaps report off. The new sitemaps report has most of the functionality of the old report, and we’re aiming to bring the rest of the information – specifically for images & video – to the new reports over time. Moreover, to track URLs submitted in sitemap files, within the Index Coverage report you can select and filter using your sitemap files. This makes it easier to focus on URLs that you care about.
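For context, a sitemap file is a short XML document listing the URLs you want tracked. The sketch below generates a minimal one following the sitemaps.org protocol; the URLs, dates, and helper names are placeholders, and image or video extensions are not covered.

```javascript
// Escape the five characters that are special in XML text.
function escapeXml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build a minimal sitemap from entries of the form { loc, lastmod? }.
function buildSitemap(entries) {
  const urls = entries.map((entry) =>
    '  <url>\n' +
    '    <loc>' + escapeXml(entry.loc) + '</loc>\n' +
    (entry.lastmod ? '    <lastmod>' + escapeXml(entry.lastmod) + '</lastmod>\n' : '') +
    '  </url>\n'
  );
  return '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    urls.join('') +
    '</urlset>\n';
}

const sitemapXml = buildSitemap([
  { loc: 'https://example.com/', lastmod: '2019-03-01' },
  { loc: 'https://example.com/search?q=cats&page=2' },
]);
console.log(sitemapXml);
```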

Using the URL inspection tool to fetch as Google

The new URL inspection tool offers many ways to check and review URLs on your website. It provides both a look into the current indexing and a live check of URLs that you’ve recently changed. In the meantime, this tool shows even more information on URLs, such as the HTTP headers, page resources, the JavaScript console log, and a screenshot of the page. From there, you can also submit pages for re-processing, to have them added or updated in our search results as quickly as possible.

User-management is now in settings

We’ve improved the user management interface and decreased clutter from the tool by merging it with the Settings section of the new Search Console. This replaces the user-management features in the old Search Console.

Structured data dashboard to dedicated reports per vertical

To help you implement Rich Results for your site, we added several reports to the new Search Console last year. These include Jobs, Recipes, Events and Q&A. We are committed to adding more reports like these to the new Search Console. When Google encounters a syntax error parsing Structured Data on a page, it will also be reported in aggregate to make sure you don’t miss anything critical.

Other Structured Data types that are not supported with Rich Results features will not be reported in Search Console anymore. We hope this reduces distraction from non-critical issues and helps you to focus on fixing problems which could be visible in Search.
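Syntax errors of this kind can often be caught before a page ships. As a rough local check, the sketch below pulls JSON-LD blocks out of an HTML string and reports the ones that fail to parse. It relies on a simple regular expression rather than a real HTML parser, and the function name and sample markup are made up for this example.

```javascript
// Find JSON-LD script blocks in an HTML string and report which ones fail to parse.
function findJsonLdSyntaxErrors(html) {
  const pattern = /<script[^>]*type=["']application\/ld\+json["'][^>]*>([\s\S]*?)<\/script>/gi;
  const errors = [];
  let match;
  let index = 0;
  while ((match = pattern.exec(html)) !== null) {
    try {
      JSON.parse(match[1]);
    } catch (err) {
      errors.push({ block: index, message: err.message });
    }
    index += 1;
  }
  return errors;
}

const samplePage =
  '<script type="application/ld+json">{"@type": "Recipe", "name": "Pie"}</script>' +
  '<script type="application/ld+json">{"@type": "Event", "name": }</script>';
const syntaxErrors = findJsonLdSyntaxErrors(samplePage);
console.log(syntaxErrors);
```

This only detects invalid JSON; whether valid markup qualifies for a Rich Result is a separate question that the Search Console reports answer.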

Letting go of some old features

With the focus on features that we believe are critical to site owners, we’ve had to make a hard decision to drop some features in Search Console. In particular:

HTML suggestions – finding short and duplicated titles can be useful for site owners, but Google’s algorithms have gotten better at showing and improving titles over the years. We still believe this is something useful for sites to look into, and there are some really good tools that help you to crawl your website to extract titles & descriptions too.

Property Sets – while they’re loved by some site owners, the small number of users makes it hard to justify maintaining this feature. However, we did learn that users need a more comprehensive view of their website, so we will soon add the option of managing a Search Console account over an entire domain (regardless of URL scheme and subdomains). Stay tuned!

Android Apps – most of the relevant functionality has been moved to the Firebase console over the years.

Blocked resources – we added this functionality several years back to help sites unblock CSS and JavaScript files for mobile-friendliness. Since then, these issues have become much less common, usage of this tool has dropped significantly, and you can find blocked resources directly in the URL inspection tool.

Please send us feedback!

We realize some of these changes will affect your workflows, so we want to let you know about them as early as possible. If any aspects are unclear, or would ideally work differently for your use case, please send us your feedback directly in the new Search Console. For more detailed feedback, please use our help forums, and feel free to include screenshots and ideas. In the long run, we believe the new Search Console will make things much easier and help you focus on the issues affecting your site and the opportunities available to it in Search.

We’re looking forward to an exciting year!

Posted by Hillel Maoz, Search Console Team

Ways to succeed in Google News

General advice

There is a lot of helpful information in the Google News Publisher Help Center. Be sure to read the material there, in particular the content and technical guidelines.

Headlines and dates

- Present clear headlines: Google News looks at a variety of signals to determine the headline of an article, including your HTML title tag and the most prominent text on the page. Review our headline tips.

- Provide accurate times and dates: Google News tries to determine the time and date to display for an article in a variety of ways. You can help ensure we get it right by using the following methods:

- Show one clear date and time: As per our date guidelines, show a clear, visible date and time between the headline and the article text. Prevent other dates from appearing on the page whenever possible, such as for related stories.

- Use structured data: Use the datePublished and dateModified schema and use the correct time zone designator for AMP or non-AMP pages.

- Avoid artificially freshening stories: If an article has been substantially changed, it can make sense to give it a fresh date and time. However, don’t artificially freshen a story without adding significant information or some other compelling reason for the freshening. Also, do not create a very slightly updated story from one previously published, then delete the old story and redirect to the new one. That’s against our article URLs guidelines.
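As an illustration of the structured data tip above, here is a minimal sketch of how a publishing system might emit datePublished and dateModified with an explicit time zone designator. It assumes the markup is delivered as schema.org NewsArticle JSON-LD; the function name, headline, and dates are hypothetical and not part of any Google tooling.

```python
import json
from datetime import datetime, timedelta, timezone


def news_article_jsonld(headline, published, modified):
    """Build schema.org NewsArticle markup with time-zone-aware dates."""
    for stamp in (published, modified):
        # A naive datetime would serialize without a time zone designator.
        if stamp.tzinfo is None or stamp.utcoffset() is None:
            raise ValueError("dates must carry an explicit time zone")
    if modified < published:
        raise ValueError("dateModified cannot precede datePublished")
    return {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        # isoformat() on an aware datetime appends the UTC offset.
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }


# Hypothetical article published at 08:00 and updated at 09:20, UTC-8.
pacific = timezone(timedelta(hours=-8))
markup = news_article_jsonld(
    "Example headline",
    datetime(2019, 1, 15, 8, 0, tzinfo=pacific),
    datetime(2019, 1, 15, 9, 20, tzinfo=pacific),
)
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```

Rejecting naive datetimes up front is a design choice in this sketch: it makes a missing time zone fail loudly instead of silently producing an ambiguous timestamp.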

Duplicate content

Google News seeks to reward independent, original journalistic content by giving credit to the originating publisher, as both users and publishers would prefer. This means we try not to allow duplicate content—which includes scraped, rewritten, or republished material—to perform better than the original content. In line with this, these are guidelines publishers should follow:

- Block scraped content: Scraping commonly refers to taking material from another site, often on an automated basis. Sites that scrape content must block scraped content from Google News.

- Block rewritten content: Rewriting refers to taking material from another site, then rewriting that material so that it is not identical. Sites that rewrite content in a way that provides no substantial or clear added value must block that rewritten content from Google News. This includes, but is not limited to, rewrites that make only very slight changes or those that make many word replacements but still keep the original article’s overall meaning.

- Block or consider canonical for republished content: Republishing refers to when a publisher has permission from another publisher or author to republish an original work, such as material from wire services or in partnership with other publications.

Publishers that allow others to republish content can help ensure that their original versions perform better in Google News by asking those republishing to block or make use of canonical.

Google News also encourages those that republish material to consider proactively blocking such content or making use of the canonical, so that we can better identify the original content and credit it appropriately.

- Avoid duplicate content: If you operate a network of news sites that share content, the advice above about republishing is applicable to your network. Select what you consider to be the original article and consider blocking duplicates or making use of the canonical to point to the original.
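The two options mentioned above for republished or duplicated articles can be sketched as follows. This is only an illustration: it assumes the republishing site controls its page head, that "canonical" means a rel="canonical" link element pointing at the original article, and that blocking is done with a robots meta tag addressed to the Googlebot-News user agent. The post itself does not name a specific blocking mechanism, and the function name and URL are hypothetical.

```python
from html import escape


def republished_head_tags(original_url=None, block_from_news=False):
    """Return head tags a republisher might add to a duplicate article."""
    tags = []
    if original_url:
        # Point search engines at the original publisher's version.
        href = escape(original_url, quote=True)
        tags.append('<link rel="canonical" href="%s">' % href)
    if block_from_news:
        # Assumption: a noindex directive aimed at the Google News crawler.
        tags.append('<meta name="Googlebot-News" content="noindex">')
    return tags


# Hypothetical wire story republished with permission.
for tag in republished_head_tags("https://example.com/original-story?id=1&ref=wire"):
    print(tag)
```

A site would normally choose one of the two options per article; the function accepts both arguments only to keep the sketch short.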

Transparency

- Be transparent: Visitors to your site want to trust and understand who publishes it and to know about the people who wrote its articles. That’s why our content guidelines stress that articles should have clear bylines, information about authors, and contact information for the publication.

- Don’t be deceptive: Our content policies do not allow sites or accounts that impersonate any person or organization, or that misrepresent or conceal their ownership or primary purpose. We do not allow sites or accounts that engage in coordinated activity to mislead users. This includes, but isn’t limited to, sites or accounts that misrepresent or conceal their country of origin or that direct content at users in another country under false premises.

More tips

- Avoid taking part in link schemes: Don’t participate in link schemes, which can include large-scale article marketing programs or selling links that pass PageRank. Review our page on link schemes for more information.

- Use structured data for rich presentation: Publishers using either AMP or non-AMP pages can make use of structured data to optimize their content for rich results or carousel-like presentations.

- Protect your users and their data: Consider securing every page of your website with HTTPS to protect the integrity and confidentiality of the data users exchange on your site. You can find more useful tips in our best practices on how to implement HTTPS.

Here’s to a great 2019!

We hope these tips help publishers succeed in Google News over the coming year. For those who have more questions about Google News, we are unable to provide one-to-one support. However, we do monitor our newly revamped Google News Publisher Forum and try to provide guidance on questions that might help a number of publishers all at once. The forum is also a great resource where publishers share tips and advice with each other.

Posted by Danny Sullivan, Public Liaison for Search

An update on the Google Webmaster Central blog comments

For every train there’s a passenger, but it turns out comments are not our train. Over the years we read thousands of comments we’ve received on our blog posts on the Google Webmaster Central blog. Sometimes they were extremely thoughtful, other times t…

2018, celebrating our global Webmaster community

| Gold Webmaster Product Experts at this year’s global summit in Sunnyvale. |