Googlebot will soon speak HTTP/2

Quick summary: Starting November 2020, Googlebot will start crawling some sites over HTTP/2. Ever since mainstream browsers started supporting the next major revision of HTTP, HTTP/2 or h2 for short, web professionals asked us whether Googlebo…

Sharing what we learned on the first Virtual Webmaster Unconference

The first Virtual Webmaster Unconference successfully took place on August 26th and, as promised, we’d like to share the main findings and conclusions here.

How did the event go?

As communicated before, this event was a pilot, in which we wanted to test a) if there was an appetite for a very different type of event, and b) whether the community would actively engage in the discussions.

To the first question: we were overwhelmed by the interest in participating; it definitely exceeded our expectations and gives us fuel to try out future iterations. Although many people were frustrated not to receive an invitation, we purposefully kept the event small. This brings us to our second point: smaller venues are what allow discussions to happen comfortably. Larger audiences are perfect for more conventional conferences, with keynotes and panels. The Virtual Webmaster Unconference, however, was created to hear the attendees’ voices. And we did.

What did we learn in the sessions?

In total, there were 17 sessions. We divided them into two blocks: half of them ran in parallel in block 1, the other half in block 2. There were many good discussions: some teams took on suggestions from the community to improve their products and features, while others used the session to bounce ideas off attendees and share knowledge.

What were the biggest realizations for our internal teams?

Core Web Vitals came up several times during the sessions. The teams realized that these metrics still feel rather new to users, and that people are still getting used to them. Although Google has provided resources on them, many users still find them hard to understand and would like additional Google help docs aimed at less technical users. The Discover session also shared its most recent documentation update.

The findability of Google help docs was also a concern. Attendees mentioned that it should be easier to find the official Search docs in a more centralized way, especially for beginners who aren’t always sure what to search for.

The Search Console brainstorming session produced great feedback on which features work very well (like the monthly performance emails) and which don’t work as well for Search Console users (such as messaging cadence).

The Site Kit for WordPress session showed that users are confused by the data discrepancies they see between Analytics and Search Console. The Structured Data team realized that they still need to clear up some confusion between the Rich Results Test and the Structured Data Testing Tool.

The e-commerce session concluded that there is a lot of concern about the heavy competition small businesses face in the online retail space. To get an edge over large retailers and marketplaces, e-commerce stores could focus their efforts on a single niche, thus driving all their ranking signals towards that specific topic. Small shops also have the opportunity to add unique value by providing expertise, for example by creating informative content on product-related topics, which increases their relevance and trustworthiness for both their audience and Google.

What are the main technical findings for attendees?

The JavaScript Issues session concluded that third-party script creep is an issue for developers. In the Fun with Scripts! session, attendees saw how scripts can take data sets and turn them into actionable insights. Resources shared included: Codelabs, the best place to learn something quickly; Data Studio, if you’re interested in Apps Script or building your own connector; and, as a starting point for inspiration, https://developers.google.com/apps-script/guides/videos

Some myths were also busted…

There were sessions that busted some popular beliefs. For example, there is no inherent ranking advantage from mobile-first indexing, and making a site technically better doesn’t mean that it’s actually better, since the content is key.

The Ads and SEO Mythbusting session was able to bust the following false statements:

1) Ads that run on Google Ads rank higher, and sites that run Google Ads rank better (False)

2) Ads from other companies that cause low dwell time or a high bounce rate lower your ranking (False)

3) Having ads or no ads on your site affects SEO (False)

What can you expect in the future?

As we mentioned previously, the event was met with overwhelmingly positive responses from the community – we see there is a need and a format to make meaningful conversations between Googlers and the community happen, so we’re happy to say: We will repeat this in the future!

Based on the feedback we got from you all, we are currently exploring how we will run future events in terms of time zones, languages and frequency. We’ve learned a lot from the pilot event and we’re using these learnings to make the future Virtual Webmaster Unconference even more accessible and enjoyable.

On top of working on the next editions of this event format, we heard your voice and we will have more information about an online Webmaster Conference (the usual format) very soon, as well as other topics. In order to stay informed, make sure you follow us on Twitter, YouTube and this Blog so that you don’t miss any updates on future events or other news.

Thanks again for your fantastic support!

Posted by Aurora Morales & Martin Splitt, your Virtual Webmaster Unconference team

Make the licensing information for your images visible on Google Images

For the last few years, we’ve collaborated with the image licensing industry to raise awareness of licensing requirements for content found through Google Images. In 2018, we began supporting IPTC Image Rights metadata; in February 2020 we announced a new metadata framework through Schema.org and IPTC for licensable images. Since then, we’ve seen widespread adoption of this new standard by websites, image platforms and agencies of all sizes. Today, we’re launching new features on Google Images which will highlight licensing information for images, and make it easier for users to understand how to use images responsibly.

What is it?

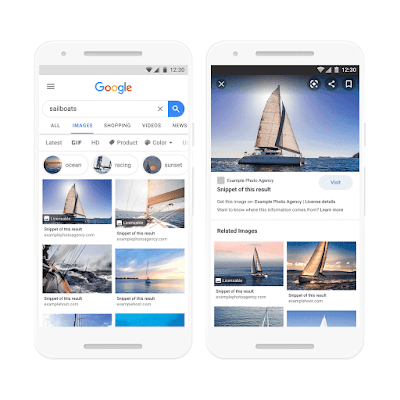

Images that include licensing information will be labeled with a “Licensable” badge on the results page. When a user opens the image viewer (the window that appears when they select an image), we will show a link to the license details and/or terms page provided by the content owner or licensor. If available, we’ll also show an additional link that directs users to a page from the content owner or licensor where the user can acquire the image.

Left: Google Images results page with the Licensable badge

Right: Image viewer showing a licensable image, with the new “Get the image on” and “License details” fields

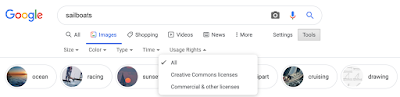

We’re also making it easier to find images with licensing metadata. We’ve enhanced the usage rights drop-down menu in Google Images to support filtering for Creative Commons licenses, as well as those that have commercial or other licenses.

Updated Usage Rights filter

What are the benefits to image licensors?

- As noted earlier, if licensing metadata is provided from the image licensor, then the licensable badge, license details page and image acquisition page will be surfaced in the images viewer, making it easier for users to purchase or license the image from the licensor

- If an image resides on a page that isn’t set up to let a user acquire it (e.g. a portfolio, article, or gallery page), image licensors can link to a new URL from Google Images which takes the user directly to the page where they can purchase or license the image

- For image licensors, the metadata can also be applied by publishers who have purchased your images, so your licensing details stay visible with your images when your customers use them. (This requires your customers not to remove or alter the IPTC metadata that you provide them.)
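The post doesn’t include markup samples. As a minimal sketch, assuming the schema.org `ImageObject` properties `license` (the license details page) and `acquireLicensePage` (the page where a user can acquire the image) described in Google’s image license metadata documentation, a page could describe one image as JSON-LD like this. The helper name and URLs are hypothetical; the IPTC route embeds equivalent fields in the image file itself instead.

```python
import json
from typing import Optional


def image_license_jsonld(content_url: str, license_url: str,
                         acquire_url: Optional[str] = None) -> str:
    """Return a JSON-LD <script> block describing one licensable image.

    Hypothetical helper; property names follow schema.org ImageObject.
    """
    data = {
        "@context": "https://schema.org/",
        "@type": "ImageObject",
        "contentUrl": content_url,  # the image file itself
        "license": license_url,     # page with the license details/terms
    }
    if acquire_url is not None:
        # Optional: page where the user can purchase or license the image.
        data["acquireLicensePage"] = acquire_url
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


print(image_license_jsonld(
    "https://example.com/photos/harbor.jpg",
    "https://example.com/license",
    "https://example.com/buy/harbor",
))
```

Leaving out `acquire_url` produces markup with only the license details link, which matches the post’s “and/or” wording for the two links.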

We believe this is a step towards helping people better understand the nature of the content they’re looking at on Google Images and how they can use it responsibly.

How do I participate?

To learn more about these features, how you can implement them and troubleshoot issues, visit the Google developer help page and our common FAQs page.

To provide feedback on these features, please use the feedback tools available on the developer page for the licensable images features or the Google Webmaster Forum, and stay tuned for upcoming virtual office hours where we will review common questions.

What do image licensors say about these features?

“A collaboration between Google and CEPIC, which started some four years ago, has ensured that authors and rights holders are identified on Google Images. Now, the last link of the chain, determining which images are licensable, has been implemented thanks to our fruitful collaboration with Google. We are thrilled at the window of opportunities that are opening up for photography agencies and the wider image industry due to this collaboration. Thanks, Google.”

– Alfonso Gutierrez, President of CEPIC

“As a result of a multi-year collaboration between IPTC and Google, when an image containing embedded IPTC Photo Metadata is re-used on a popular website, Google Images will now direct an interested user back to the supplier of the image,” said Michael Steidl, Lead of the IPTC Photo Metadata Working Group. “This is a huge benefit for image suppliers and an incentive to add IPTC metadata to image files.”

– Michael Steidl, Lead of the IPTC Photo Metadata Working Group

“Google’s licensable image features are a great step forward in making it easier for users to quickly identify and license visual content. Google has worked closely with DMLA and its members during the features’ development, sharing tools and details while simultaneously gathering feedback and addressing our members’ questions or concerns. We look forward to continuing this collaboration as the features deploy globally.”

– Leslie Hughes, President of the Digital Media Licensing Association

“We live in a dynamic and changing media landscape where imagery is an integral component of online storytelling and communication for more and more people. This means that it is crucial that people understand the importance of licensing their images from proper sources for their own protection, and to ensure the investment required to create these images continues. We are hopeful Google’s approach will bring more visibility to the intrinsic value of licensed images and the rights required to use them.”

– Ken Mainardis, SVP, Content, Getty Images & iStock by Getty Images

“With Google’s licensable images features, users can now find high-quality images on Google Images and more easily navigate to purchase or license images in accordance with the image copyright. This is a significant milestone for the professional photography industry, in that it’s now easier for users to identify images that they can acquire safely and responsibly. EyeEm was founded on the idea that technology will revolutionise the way companies find and buy images. Hence, we were thrilled to participate in Google’s licensable images project from the very beginning, and are now more than excited to see these features being released.”

– Ramzi Rizk, Co-founder, EyeEm

“As the world’s largest network of professional providers and users of digital images, we at picturemaxx welcome Google’s licensable images features. For our customers as creators and rights managers, not only is the visibility in a search engine very important, but also the display of copyright and licensing information. To take advantage of this feature, picturemaxx will be making it possible for customers to provide their images for Google Images in the near future. The developments are already under way.”

– Marcin Czyzewski, CTO, picturemaxx

“Google has consulted and collaborated closely with Alamy and other key figures in the photo industry on this project. Licensable tags will reduce confusion for consumers and help inform the wider public of the value of high quality creative and editorial images.”

– James Hall, Product Director, Alamy

“Google Images’ new features help both image creators and image consumers by bringing visibility to how creators’ content can be licensed properly. We are pleased to have worked closely with Google on this feature, by advocating for protections that result in fair compensation for our global community of over 1 million contributors. In developing this feature, Google has clearly demonstrated its commitment to supporting the content creation ecosystem.”

– Paul Brennan, VP of Content Operations, Shutterstock

“Google Images’ new licensable images features will provide expanded options for creative teams to discover unique content. By establishing Google Images as a reliable way to identify licensable content, Google will drive discovery opportunities for all agencies and independent photographers, creating an efficient process to quickly find and acquire the most relevant, licensable content.”

– Andrew Fingerman, CEO of PhotoShelter

Posted by Francois Spies, Product Manager, Google Images

Options for retailers to control how their crawled product information appears on Google

Earlier this year Google launched a new way for shoppers to find clothes, shoes and other retail products on Search in the U.S. and recently announced that free retail listings are coming to product knowledge panels on Google Search. These new types of experiences on Google Search, along with the global availability of rich results for products, enable retailers to make information about their products visible to millions of Google users, for free.

The best way for retailers and brands to participate in this experience is by annotating the product information on their websites using schema.org markup or by submitting this information directly to Google Merchant Center. Retailers can refer to our documentation to learn more about showing products for free on surfaces across Google or adding schema.org markup to a website.
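To make the schema.org option concrete, here is a minimal sketch that emits `Product` markup with a nested `Offer` as JSON-LD. The helper name, the sample values, and the choice of fields are assumptions for illustration; Google’s product structured data documentation lists which properties are required or recommended.

```python
import json


def product_jsonld(name: str, description: str, image_url: str,
                   price: float, currency: str, in_stock: bool = True) -> str:
    """Return a JSON-LD <script> block for one product (hypothetical helper)."""
    data = {
        "@context": "https://schema.org/",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": [image_url],
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",    # price as a plain decimal string
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


print(product_jsonld("Trail Running Shoe", "Lightweight shoe for rocky trails.",
                     "https://example.com/img/shoe.jpg", 89.5, "USD"))
```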

While the processes above are the best way to ensure that product information will appear in this Search experience, Google may also include content that has not been marked up using schema.org or submitted through Merchant Center when the content has been crawled and is related to retail. Google does this to ensure that users see a wide variety of products from a broad group of retailers when they search for information on Google.

While we believe that this approach positively benefits the retail ecosystem, we recognize that some retailers may prefer to control how their product information appears in this experience. This can be done by using existing mechanisms for Google Search, as covered below.

Controlling your preview preferences

There are a number of ways that retailers can control what data is displayed on Google. These are consistent with changes announced last year that allow website owners, and retailers in particular, to specify which information from their website can be shown as a preview on Google. This is done through a set of robots meta tags and an HTML attribute.

Here are some ways you can implement these controls to limit your products and product data from being displayed on Google:

“nosnippet” robots meta tag

With this meta tag, you can specify that no snippet should be shown for this page in search results. It completely removes the textual, image and rich snippet for this page on Google and removes the page from any free listing experience.

“max-snippet:[number]” robots meta tag

This meta tag allows you to specify the maximum length, in characters, of a text snippet for your page in Google’s search results. If the structured data (e.g. product name, description, price, availability) is longer than the maximum snippet length, the page will be removed from any free listing experience.

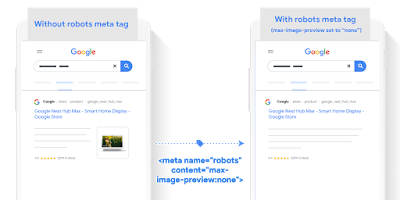

“max-image-preview:[setting]” robots meta tag

This meta tag allows you to specify a maximum size of image preview to be shown for images on this page, using either “none”, “standard”, or “large”.

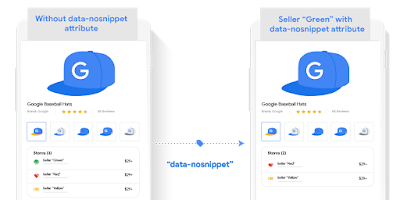
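The robots meta tag directives above can be combined in a single tag, separated by commas. The following minimal sketch assembles such a tag and rejects `max-image-preview` values other than the three listed above. The function name is hypothetical; accepting `-1` (no limit) and `0` (no snippet) for `max-snippet` follows Google’s robots meta tag documentation.

```python
from typing import Optional

IMAGE_PREVIEW_SETTINGS = {"none", "standard", "large"}


def robots_meta(nosnippet: bool = False,
                max_snippet: Optional[int] = None,
                max_image_preview: Optional[str] = None) -> str:
    """Build a robots meta tag from snippet-related directives (hypothetical helper)."""
    directives = []
    if nosnippet:
        directives.append("nosnippet")
    if max_snippet is not None:
        # Length in characters; -1 means no limit and 0 means no snippet.
        if max_snippet < -1:
            raise ValueError("max_snippet must be -1 or greater")
        directives.append(f"max-snippet:{max_snippet}")
    if max_image_preview is not None:
        if max_image_preview not in IMAGE_PREVIEW_SETTINGS:
            raise ValueError(f"unsupported max-image-preview setting: {max_image_preview!r}")
        directives.append(f"max-image-preview:{max_image_preview}")
    if not directives:
        raise ValueError("at least one directive is required")
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


# Limit text snippets to 50 characters and image previews to the standard size.
print(robots_meta(max_snippet=50, max_image_preview="standard"))
```

The resulting tag goes in the page’s `<head>`. Remember that these directives only take effect for product data once any schema.org markup carrying the same data has been removed.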

“data-nosnippet” HTML attribute

This attribute allows you to specify a section of your webpage that should not be included in a snippet preview on Google. When it is applied to the relevant offer attributes (price, availability, ratings, image), it removes the textual, image and rich snippet for this page on Google and removes the listing from any free listing experiences.
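As a sketch of the attribute in use, the hypothetical template helper below renders an offer block and, when asked, marks it with `data-nosnippet`. Google’s documentation describes the attribute on `span`, `div` and `section` elements. As noted below, if the same price and availability also appear in schema.org markup on the page, that markup has to be removed before the attribute takes effect.

```python
from html import escape


def offer_html(price: str, availability: str, hide_from_snippets: bool = False) -> str:
    """Render price and availability; optionally exclude them from snippets.

    Hypothetical template helper. data-nosnippet is a boolean attribute,
    so it is written without a value.
    """
    attr = " data-nosnippet" if hide_from_snippets else ""
    return (
        f'<div class="offer"{attr}>'
        f'<span class="price">{escape(price)}</span> '
        f'<span class="availability">{escape(availability)}</span>'
        "</div>"
    )


print(offer_html("$19.99", "In stock", hide_from_snippets=True))
```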

Additional notes on these preferences:

- The above preferences do not apply to information supplied via schema.org markup on the page itself. The schema.org markup needs to be removed first, before these opt-out mechanisms can become active.

- The opt-out preferences do not apply to product data submitted through Google Merchant Center, which offers specific mechanisms to opt products out of appearing on surfaces across Google.

Using mechanisms like nosnippet and data-nosnippet only affects the display of data and eligibility for certain experiences. Display restrictions don’t affect the ranking of these pages in Search. However, excluding some parts of the product data from display may prevent the product from being shown in rich results and other product results on Google.

We hope these options make it easier for you to maximize the value you get from Search and achieve your business goals. These options are available to retailers worldwide and will operate the same for results we display globally. For more information, check out our developer documentation on meta tags.

Should you have any questions, feel free to reach out to us, or drop by our webmaster help forums.

Posted by Bernhard Schindlholzer, Product Manager

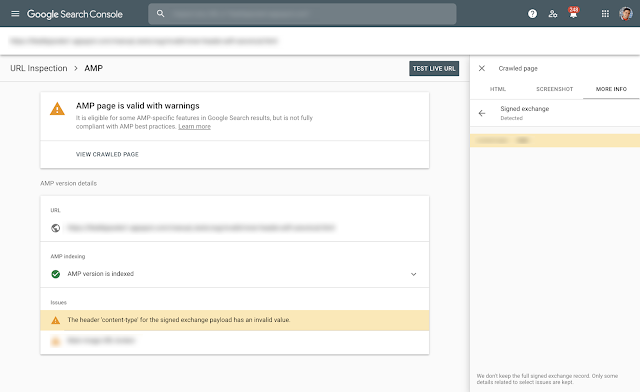

Identify and fix AMP Signed Exchange errors in Search Console

Signed Exchanges (SXG) is a subset of the emerging family of specifications called Web Packaging, which enables publishers to safely make their content portable, while still keeping the publisher’s integrity and attribution. In 2019, Google Search started linking to signed AMP pages served from Google’s cache when available. This feature allows the content to be prefetched without loss of privacy, while attributing the content to the right origin.

Today we are happy to announce that sites implementing SXG for their AMP pages can now find out whether any issues are preventing Google from serving the SXG version of their pages using the Google AMP Cache.

Use the AMP report to check if your site has any SXG related issues – look for issues with ‘signed exchange’ in their name. We will also send emails to alert you of new issues as we detect them.

To help with debugging and to verify that a specific page is served using SXG, you can inspect a URL with the URL Inspection Tool and check whether any SXG-related issues appear in the AMP section of the analysis.

You can diagnose issues affecting the indexed version of the page or use the “live” option which will check validity for the live version currently served by your site.
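Search Console runs its own checks, but for a quick local spot check you can request a page the way an SXG-aware client would and look at the response’s media type. The minimal sketch below assumes the `application/signed-exchange;v=b3` media type used by current SXG deployments. Some setups (for example, servers using AMP Packager) may require additional request headers before they serve the signed version, so a `False` result is not by itself proof of an error. The function names are hypothetical, and `fetch_is_sxg` needs network access.

```python
import urllib.request

# Accept header preferring signed exchanges, falling back to anything else.
SXG_ACCEPT = "application/signed-exchange;v=b3;q=0.9,*/*;q=0.8"


def parse_media_type(header: str):
    """Split a Content-Type value into (lowercased type, parameter dict)."""
    parts = [p.strip() for p in header.split(";")]
    media_type = parts[0].lower()
    params = {}
    for part in parts[1:]:
        if "=" in part:
            key, value = part.split("=", 1)
            params[key.strip().lower()] = value.strip().strip('"')
    return media_type, params


def is_signed_exchange(content_type: str) -> bool:
    """True if the Content-Type announces a v=b3 signed exchange."""
    media_type, params = parse_media_type(content_type)
    return media_type == "application/signed-exchange" and params.get("v") == "b3"


def fetch_is_sxg(url: str, timeout: float = 10.0) -> bool:
    """Request `url` preferring SXG and report whether SXG came back."""
    req = urllib.request.Request(url, headers={"Accept": SXG_ACCEPT})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return is_signed_exchange(resp.headers.get("Content-Type", ""))
```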

To learn more about the types of SXG issues we can report on, check out this Help Center Article on SXG issues. If you have any questions, feel free to ask in the Webmasters community or reach out via the Google Webmasters Twitter handle.

Posted by Amir Rachum, Search Console Software Engineer & Jeffrey Jose, Google Search Product Manager.

Join our first Virtual Webmaster Unconference

While the in-person Webmaster Conference events are still on hold, we continue to share insights and information with you in the Webmaster Conference Lightning Talks series on our YouTube channel. But we understand that you might be missing the connection during live events, so we’d like to invite you to join a new event format: the first Virtual Webmaster Unconference, on August 26th, at 8AM PDT!

What is the Virtual Webmaster Unconference?

Because we want you to actively participate in the event, this is neither a normal Webmaster Conference nor a typical online conference. This event isn’t just for you – it’s your event. In particular, the word “Unconference” means that you get to choose which sessions you want to attend and become an active part of. You will shape the event by taking part in discussions, feedback sessions and similar formats that need your input.

It’s your chance to collaborate with other webmasters, SEOs, developers, digital marketers, publishers and Google product teams, such as Search Console and Google Search, and help us to deliver more value to you and the community.

How does it work?

We have reopened registration for a few more spots in the event. If you see “registration is closed”, the spots have already filled up. We may run more events in the future, so keep an eye on our Twitter feed and this blog.

As part of the registration process, we will ask you to select two sessions you would like to participate in. Only the sessions that receive the most votes will be scheduled to take place on the event day, so make sure you pick your favorite ones before August 19th!

As we have limited spots, we might have to select attendees based on background and demographics to get a good mix of perspectives in the event. We will let you know by August 20th if your registration is confirmed. Once your registration is confirmed, you will receive the invitation for the Google Meet call on August 26th with all the other participants, the MC and the session leads. You can expect to actively participate in the sessions you’re interested in via voice and/or video call through Google Meet. Please note that the sessions will not be recorded; we will publish a blog post with some of the top learnings after the event.

We have very interesting proposals lined up for you to vote on, as well as other fun surprises. Save your spot before August 19th and join the first ever Virtual Webmaster Unconference!

Posted by Martin Splitt, Search Relations Team

Rich Results & Search Console Webmaster Conference Lightning Talk

A few weeks ago we held another Webmaster Conference Lightning Talk, this time about Rich Results and Search Console. During the talk we hosted a live chat and a lot of viewers asked questions – we tried to answer all we could, but our typing skills didn’t match the challenge… so we thought we’d follow up on the questions posed in this blog post.

If you missed the video, you can watch it below: it discusses how to get started with rich results and use Search Console to optimize your search appearance in Google Search.

Rich Results & Search Console FAQs

Will a site rank higher against competitors if it implements structured data?

Structured data by itself is not a generic ranking factor. However, it can help Google to understand what the page is about, which can make it easier for us to show it where relevant and make it eligible for additional search experiences.

Which structured data would you recommend to use in Ecommerce category pages?

You don’t need to mark up the products on a category page; a Product should only be marked up when it is the primary element on a page.

How much content should be included in my structured data? Can there be too much?

There is no limit to how much structured data you can implement on your pages, but make sure you’re sticking to the general guidelines. For example, the marked-up content should always be visible to users and representative of the main content of the page.

What exactly are FAQ clicks and impressions based on?

A Frequently Asked Question (FAQ) page contains a list of questions and answers pertaining to a particular topic. Properly marked up FAQ pages may be eligible to have a rich result on Search and an Action on the Google Assistant, which can help site owners reach the right users. These rich results include snippets with frequently asked questions, allowing users to expand and collapse answers to them. Every time such a result appears in Search results for a user, it is counted as an impression in Search Console, and if the user clicks to visit the website, it is counted as a click. Clicks to expand and collapse the search result are not counted as clicks in Search Console, as they do not lead the user to the website. You can check impressions and clicks on your FAQ rich results using the ‘Search appearance’ tab in the Search Performance report.
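For reference, FAQ rich results come from `FAQPage` markup, in which each question is a `Question` with an `acceptedAnswer` of type `Answer`. The minimal sketch below builds that JSON-LD from question/answer pairs; the helper name and sample text are hypothetical, and the same questions and answers should also be visible on the page itself.

```python
import json


def faq_page_jsonld(qa_pairs) -> str:
    """Return FAQPage JSON-LD for a list of (question, answer) pairs (hypothetical helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)


print(faq_page_jsonld([
    ("Do you ship internationally?", "Yes, to most countries in Europe."),
    ("Can I return an item?", "Returns are accepted within 30 days."),
]))
```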

Will Google show rich results for reviews made by the review host site?

Reviews must not be written or provided by the business or content provider. According to our review snippets guidelines, “Ratings must be sourced directly from users”; publishing reviews written by the business itself is against the guidelines and might trigger a Manual Action.

There are schema types that are not used by Google, why should we use them?

Google supports a number of schema types, but other search engines can use different types to surface rich results, so you might want to implement those types for them.

Why do rich results that previously appeared in Search sometimes disappear?

The Google algorithm tailors search results to create what it thinks is the best search experience for each user, depending on many variables, including search history, location, and device type. In some cases, it may determine that one feature is more appropriate than another, or even that a plain blue link is best. Check the Rich Results Status report: if you don’t see a drop in the number of valid items or a spike in errors, your implementation should be fine.

How can I verify my dynamically generated structured data?

The safest way to check your structured data implementation is to inspect the URL on Search Console. This will provide information about Google’s indexed version of a specific page. You can also use the Rich Results Test public tool to get a verdict. If you don’t see the structured data through those tools, your markup is not valid.
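As a complement to those tools (not a replacement, since they also check Google’s feature requirements), a quick local check can pull every JSON-LD block out of an HTML document and flag blocks that aren’t valid JSON. For dynamically generated markup, feed it rendered HTML (after JavaScript has run) rather than the raw server response. The class and function names are hypothetical; only the standard library’s `html.parser` and `json` are used.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the contents of <script type="application/ld+json"> elements."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buf = []
        self.blocks = []  # successfully parsed JSON-LD values
        self.errors = []  # raw text of blocks that are not valid JSON

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)  # script content may arrive in chunks

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            raw = "".join(self._buf)
            try:
                self.blocks.append(json.loads(raw))
            except json.JSONDecodeError:
                self.errors.append(raw)
            self._in_jsonld = False


def extract_jsonld(html: str):
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.blocks, parser.errors
```

A non-empty `errors` list points at markup that no tool will be able to read; an empty one only means the JSON itself parses.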

How can I add structured data in WordPress?

There are a number of WordPress plugins available that can help with adding structured data. Also check your theme settings; your theme might support some types of markup as well.

With the deprecation of the Structured Data Testing Tool, will the Rich Results Test support structured data that is not supported by Google Search?

The Rich Results Test supports all structured data that triggers a rich result on Google Search, and as Google creates new experiences for more structured data types we’ll add support for them in this test. While we prepare to deprecate the Structured Data Testing Tool, we’ll be investigating how a generic tool can be supported outside of Google.

Stay Tuned!

If you missed our previous lightning talks, check the WMConf Lightning Talk playlist. Also make sure to subscribe to our YouTube channel for more videos to come! We definitely recommend joining the premieres on YouTube to participate in the live chat and Q&A session for each episode!

Posted by Daniel Waisberg, Search Advocate

Search Console API announcements

Over the past year, we have been working to upgrade the infrastructure of the Search Console API, and we are happy to let you know that we’re almost there. You might have already noticed some minor changes, but in general, our goal was to make the migration as invisible as possible. This change will help Google to improve the performance of the API as demand grows.

Note that if you’re not querying the API yourself you don’t need to take action. If you are querying the API for your own data or if you maintain a tool which uses that data (e.g. a WordPress plugin), read on. Below is a summary of the changes:

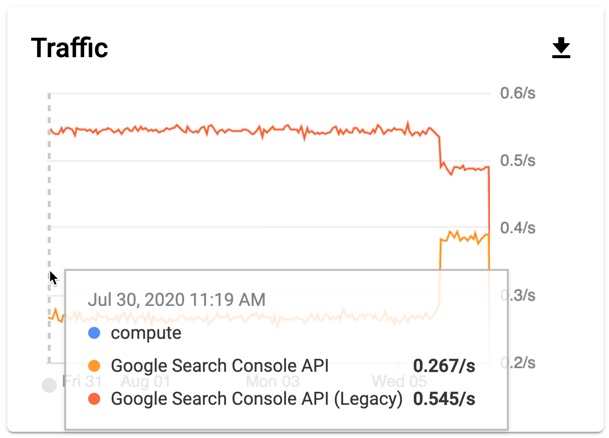

- Changes on Google Cloud Platform dashboard: you’ll see a drop in the old API usage report and an increase in the new one.

- API key restriction changes: if you have previously set API key restrictions, you might need to change them.

- Discovery document changes: if you’re querying the API using a third-party API library or querying the Webmasters Discovery Document directly, you will need to update it by the end of the year.

Please note that other than these changes, the API is backward compatible and there are currently no changes in scope or functionality.

Google Cloud Platform (GCP) changes

In the Google Cloud Console dashboards, you will notice a traffic drop for the legacy API and an increase in calls to the new one. This is the same API, just under the new name.

Image: Search Console API changes in Google Cloud Console

You can monitor your API usage on the new Google Search Console API page.

API key restriction changes

As mentioned in the introduction, these instructions are important only if you query the data yourself or provide a tool that does that for your users.

To check whether you have an API restriction active on your API key, follow these steps on the credentials page and make sure the Search Console API is not restricted. If you have added an API restriction for your API keys, you will need to take action by August 31.

In order to allow your API calls to be migrated automatically to the new API infrastructure, you need to make sure the Google Search Console API is not restricted.

- If your API restrictions are set to “Don’t restrict key” you’re all set.

- If your API restrictions are set to “Restrict key”, the Search Console API should be checked as shown in the image below.

Image: Google Search Console API restrictions setting

Discovery document changes

If you’re querying the Search Console API using an external API library, or querying the Webmasters API discovery document directly, you will need to take action, as we’ll drop support for the Webmasters discovery document. Our current plan is to support it until December 31, 2020, but we’ll provide more details and guidance in the coming months.
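If you call the API through Google’s Python client library, the switch is mostly a matter of building the service from the new discovery document name. The sketch below assumes the new name is `searchconsole` version `v1` (replacing `webmasters` version `v3`) and that the Search Analytics request body is unchanged, consistent with the note above that the API is backward compatible. Only the request-body helper runs as written; the client calls are shown as comments because they need the `google-api-python-client` package and credentials. The helper name is hypothetical.

```python
from datetime import date, timedelta


def search_analytics_body(end: date, days: int = 28,
                          dimensions=("query",), row_limit: int = 1000) -> dict:
    """Build a Search Analytics query body covering `days` days ending on `end`."""
    start = end - timedelta(days=days - 1)  # inclusive date range
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": list(dimensions),
        "rowLimit": row_limit,
    }


body = search_analytics_body(date(2020, 8, 31))
print(body)

# Assumed client usage (requires google-api-python-client and OAuth credentials):
#
#   from googleapiclient.discovery import build
#   # Before: service = build("webmasters", "v3", credentials=creds)
#   service = build("searchconsole", "v1", credentials=creds)
#   response = service.searchanalytics().query(
#       siteUrl="https://www.example.com/", body=body).execute()
```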

If you have any questions, feel free to ask in the Webmasters community or reach out via the Google Webmasters Twitter handle.

Posted by Nati Yosephian, Search Console Software Engineer

Search Off The Record podcast – behind the scenes with Search Relations

As the Search Relations team at Google, we’re here to help webmasters be successful with their websites in Google Search. We write on this blog, create and maintain the Google Search documentation, produce videos on our YouTube channel – and now we’re …

Prepare for mobile-first indexing (with a little extra time)

Mobile-first indexing has been an ongoing effort of Google for several years. We’ve enabled mobile-first indexing for most currently crawled sites, and enabled it by default for all new sites. Our initial plan was to enable mobile-first indexing for all sites in Search in September 2020. We realize that in these uncertain times it’s not always easy to focus on work as usual, so we’ve decided to extend the timeframe to the end of March 2021. At that time, we’re planning on switching our indexing over to mobile-first indexing.

For the sites that are not yet ready for mobile-first indexing, we’ve already mentioned some issues blocking your sites in previous blog posts. Now that we’ve done more testing and evaluation, we have seen a few more issues that are worth mentioning to better prepare your sites.

Make sure Googlebot can see your content

In mobile-first indexing, we will only get the information of your site from the mobile version, so make sure Googlebot can see the full content and all resources there. Here are some things to pay attention to:

Robots meta tags on mobile version

You should use the same robots meta tags on the mobile version as on the desktop version. If you use different ones on the mobile version (such as noindex or nofollow), Google may fail to index your page or follow its links once your site is enabled for mobile-first indexing.
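One way to catch a mismatch before the switch is to compare the robots meta directives of both versions. The sketch below assumes you have the HTML of the desktop and mobile versions of the same page as strings; the function names are hypothetical. It only looks at `<meta name="robots">`, so a `googlebot`-specific meta tag or an `X-Robots-Tag` HTTP header would need separate handling.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attributes without a value (e.g. <meta name>) arrive as None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() != "robots":
            return
        # Directives are comma-separated, e.g. content="noindex, nofollow".
        for directive in attr.get("content", "").split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def robots_mismatch(desktop_html, mobile_html):
    """Return (directives only on desktop, directives only on mobile)."""
    desktop = robots_directives(desktop_html)
    mobile = robots_directives(mobile_html)
    return desktop - mobile, mobile - desktop


only_desktop, only_mobile = robots_mismatch(
    '<meta name="robots" content="index, follow">',
    '<meta name="robots" content="noindex, nofollow">',
)
print(sorted(only_desktop), sorted(only_mobile))
# ['follow', 'index'] ['nofollow', 'noindex']
```

Two empty sets mean the versions agree; anything in the second set is a directive that only the mobile version sends.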

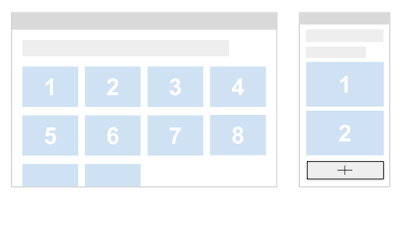

Lazy-loading on mobile version

Lazy-loading is more common on mobile than on desktop, especially for loading images and videos. We recommend following lazy-loading best practices. In particular, avoid lazy-loading your primary content based on user interactions (like swiping, clicking, or typing), because Googlebot won’t trigger these user interactions.

For example, if your page has 10 primary images on the desktop version, and the mobile version only has 2 of them, with the other 8 images loaded from the server only when the user clicks the “+” button:

(Caption: desktop version with 10 thumbnails / mobile version with 2 thumbnails)

In this case, Googlebot won't click the button to load the 8 images, so Google won't see those images. As a result, they won't be indexed or shown in Google Images. Follow Google's lazy-loading best practices and lazy-load content automatically based on its visibility in the viewport.
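A rough way to spot this situation is to list the desktop images that never appear in the mobile HTML. This is a minimal sketch, assuming you have saved the rendered HTML of both versions (images that only load after a click won't be in it, because Googlebot doesn't click). The helper names are hypothetical, and because it compares exact URLs, it also flags images that mobile serves under a different URL.

```python
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Collects the src of every <img> present in the HTML."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(src)


def image_srcs(html):
    collector = ImageCollector()
    collector.feed(html)
    return collector.srcs


def missing_on_mobile(desktop_html, mobile_html):
    """Desktop image URLs that do not appear anywhere in the mobile HTML."""
    mobile = set(image_srcs(mobile_html))
    return [src for src in image_srcs(desktop_html) if src not in mobile]


# The example from the text: 10 images on desktop, 2 on mobile plus a "+" button.
desktop = "".join(f'<img src="/img/{i}.jpg">' for i in range(1, 11))
mobile = '<img src="/img/1.jpg"><img src="/img/2.jpg"><button>+</button>'
print(len(missing_on_mobile(desktop, mobile)))  # 8
```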

Be aware of what you block

Some resources have different URLs on the mobile version than on the desktop version, and sometimes they are served from different hosts. If you want Google to crawl these URLs, make sure you're not disallowing them in your robots.txt file (including the robots.txt file of any separate host, since each host has its own).

For example, blocking the URLs of .css files will prevent Googlebot from rendering your pages correctly, which can harm the ranking of your pages in Search. Similarly, blocking the URLs of images will make these images disappear from Google Images.
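To check whether mobile resources are blocked, you can run their URLs against the host's robots.txt rules. This sketch uses Python's standard `urllib.robotparser` with a hypothetical robots.txt and hypothetical `m.example.com` URLs. Note that this parser does simple prefix matching and does not implement Google's `*` and `$` wildcards or longest-match precedence, so treat its answers as approximate for complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a mobile host that serves CSS and images.
ROBOTS_TXT = """\
User-agent: *
Disallow: /static/css/
Disallow: /images/
"""


def blocked_for_googlebot(robots_txt, urls):
    """Return the URLs that these rules disallow for the Googlebot user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


resources = [
    "https://m.example.com/static/css/site.css",
    "https://m.example.com/images/puppies.jpg",
    "https://m.example.com/js/app.js",
]
print(blocked_for_googlebot(ROBOTS_TXT, resources))
# ['https://m.example.com/static/css/site.css', 'https://m.example.com/images/puppies.jpg']
```

Here both the stylesheet and the image would be blocked, which is exactly the rendering and Google Images problem described above.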

Make sure primary content is the same on desktop and mobile

If your mobile version has less content than your desktop version, consider updating it so that its primary content (the content you want to rank with, or the reason users come to your site) is equivalent. Only the content shown on the mobile version will be used for indexing and ranking in Search. If you intentionally keep less content on the mobile version, your site may lose some traffic when Google enables mobile-first indexing for it, since Google won't be able to get the full information anymore.

Use the same clear and meaningful headings on your mobile version as on the desktop version. Missing meaningful headings may negatively affect your page’s visibility in Search, because we might not be able to fully understand the page.

For example, if your desktop version has the following tag for the heading of the page:

<h1>Photos of cute puppies on a blanket</h1>

Your mobile version should also use the same heading tag with the same words for it, rather than using headings like:

<h1>Photos</h1> (not clear and meaningful)

<div>Photos of cute puppies on a blanket</div> (not using a heading tag)
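The two bad examples above can be caught by comparing heading elements, tag and text together. This is a minimal sketch with hypothetical function names; it normalizes whitespace but otherwise requires the mobile heading text to match the desktop text exactly, which may be stricter than you need.

```python
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Collects (tag, text) pairs for h1 to h6 elements."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # heading tag we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._current, self._text = tag, []

    def handle_data(self, data):
        if self._current:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.headings.append((tag, text))
            self._current = None


def headings(html):
    parser = HeadingParser()
    parser.feed(html)
    return parser.headings


def missing_headings(desktop_html, mobile_html):
    """Desktop headings (same tag and text) that the mobile version lacks."""
    mobile = set(headings(mobile_html))
    return [h for h in headings(desktop_html) if h not in mobile]


desktop = "<h1>Photos of cute puppies on a blanket</h1>"
print(missing_headings(desktop, "<h1>Photos</h1>"))
# [('h1', 'Photos of cute puppies on a blanket')]
print(missing_headings(desktop, "<div>Photos of cute puppies on a blanket</div>"))
# [('h1', 'Photos of cute puppies on a blanket')]
```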

Check your images and videos

Make sure the images and videos on your mobile version follow image best practices and video best practices. In particular, we recommend that you perform the following checks:

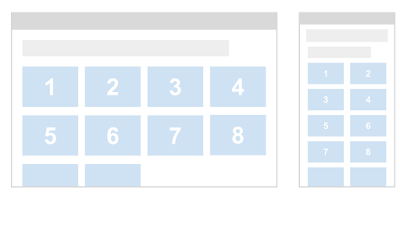

Image quality

Don’t use images that are too small or have a low resolution on the mobile version. Small or low-quality images might not be selected for inclusion in Google Images, or shown as favorably when indexed.

For example, suppose your page has 10 primary images on the desktop version, all normal, good-quality images. On the mobile version, a bad practice is to use very small thumbnails for these images so that they all fit on the smaller screen:

(Caption: desktop version with normal thumbnails / mobile version with tiny thumbnails)

In this case, these thumbnails may be considered “low quality” by Google because they are too small and in a low resolution.

Alt attributes for images

As previously mentioned, using less meaningful alt attributes might negatively affect how your images are shown in Google Images.

For example, this is a good practice:

<img src="dogs.jpg" alt="A photo of cute puppies on a blanket"> (meaningful alt text)

The following are bad practices:

<img src="dogs.jpg" alt> (empty alt text)

<img src="dogs.jpg" alt="Photo"> (alt text not meaningful)
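A quick audit can flag images like the two bad examples. In this sketch, the `GENERIC_ALT` word list is a hypothetical choice, not a Google rule. Also keep in mind that an empty alt is the correct choice for purely decorative images, so treat the results as items to review rather than errors.

```python
from html.parser import HTMLParser

# Hypothetical list of alt texts this sketch treats as "not meaningful".
GENERIC_ALT = {"photo", "image", "picture", "img"}


class AltAuditor(HTMLParser):
    """Records (src, reason) for <img> tags with missing, empty, or generic alt."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src", "")
        alt = (attr.get("alt") or "").strip()  # a bare `alt` arrives as None
        if "alt" not in attr:
            self.problems.append((src, "missing alt"))
        elif not alt:
            self.problems.append((src, "empty alt"))
        elif alt.lower() in GENERIC_ALT:
            self.problems.append((src, "generic alt"))


def audit_alt_text(html):
    auditor = AltAuditor()
    auditor.feed(html)
    return auditor.problems


page = """
<img src="dogs.jpg" alt="A photo of cute puppies on a blanket">
<img src="dogs.jpg" alt>
<img src="dogs.jpg" alt="Photo">
"""
for src, reason in audit_alt_text(page):
    print(src, reason)
# dogs.jpg empty alt
# dogs.jpg generic alt
```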

Different image URLs between desktop and mobile version

If your site uses different image URLs for the desktop and mobile version, you may see a temporary traffic loss from Google Images while your site transitions to mobile-first indexing. This is because the image URLs on the mobile version are new to the Google indexing system, and it takes some time for the new image URLs to be understood appropriately. To minimize a temporary traffic loss from search, review whether you can retain the image URLs used by desktop.

Video markup

If your desktop version uses schema.org’s VideoObject structured data to describe videos, make sure the mobile version also includes the VideoObject, with equivalent information provided. Otherwise our video indexing systems may have trouble getting enough information about your videos, resulting in them not being shown as visibly in Search.
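To confirm the mobile markup is equivalent, you can extract the VideoObject JSON-LD from both versions and compare their properties. This is a minimal sketch under several assumptions: videos are matched by their `name` property, only top-level JSON-LD objects are read (`@graph` wrappers and list-valued `@type` are not handled), and the sample markup is hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects parsed objects from <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld, self._buffer = True, []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            data = json.loads("".join(self._buffer))
            # A block may hold a single object or a list of objects.
            self.items.extend(data if isinstance(data, list) else [data])


def video_objects(html):
    parser = JsonLdParser()
    parser.feed(html)
    return [item for item in parser.items if item.get("@type") == "VideoObject"]


def video_property_gaps(desktop_html, mobile_html):
    """Map each desktop video name to the properties its mobile counterpart lacks."""
    mobile_by_name = {v.get("name"): v for v in video_objects(mobile_html)}
    gaps = {}
    for video in video_objects(desktop_html):
        counterpart = mobile_by_name.get(video.get("name"), {})
        missing = sorted(set(video) - set(counterpart))
        if missing:
            gaps[video.get("name")] = missing
    return gaps


desktop = """<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "VideoObject",
 "name": "Puppy bath time", "description": "Two puppies get a bath.",
 "thumbnailUrl": "https://example.com/bath.jpg", "uploadDate": "2020-03-01"}
</script>"""
mobile = """<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "VideoObject", "name": "Puppy bath time"}
</script>"""
print(video_property_gaps(desktop, mobile))
# {'Puppy bath time': ['description', 'thumbnailUrl', 'uploadDate']}
```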

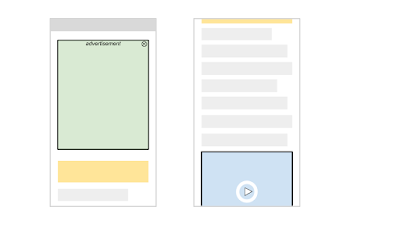

Video and image placement

Make sure to position videos and images in an easy-to-find location on the mobile version of your pages. Poorly placed videos or images can hurt the user experience on mobile devices, which may lead Google to show them less visibly in Search.

For example, assume you have a video embedded in your content in an easy-to-find location on desktop:

On mobile, you place an ad near the top of the page that takes up a large part of the screen. This can push your video far down the page, requiring users to scroll a lot to find it:

(Caption: video on mobile is much less visible to users)

In this case, the page might not be deemed a useful video landing page by our algorithms, resulting in the video not being shown in Search.

You can find more information and more best practices in our developer guide for mobile-first indexing.

Mobile-first indexing has come a long way. It’s great to see how the web has evolved from desktop to mobile, and how webmasters have helped to allow crawling and indexing to match how users interact with the web! We appreciate all your work over the years, which has helped to make this transition fairly smooth. We’ll continue to monitor and evaluate these changes carefully. If you have any questions, please drop by our Webmaster forums or our public events.

Posted by Yingxi Wu, Google Mobile-First Indexing team

How spam reports are used at Google

Thanks to our users, we receive hundreds of spam reports every day. While many of the spam reports lead to manual actions, they represent a small fraction of the manual actions we issue. Most of the manual actions come from the work our internal teams regularly do to detect spam and improve search results. Today we’re updating our Help Center articles to better reflect this approach: we use spam reports only to improve our spam detection algorithms.

Spam reports play a significant role: they help us understand where our automated spam detection systems may be missing coverage. Most of the time, it’s much more impactful for us to fix an underlying issue with our automated detection systems than it is to take manual action on a single URL or site.

In theory, if our automated systems were perfect, we would catch all spam and not need reporting systems at all. The reality is that while our spam detection systems work well, there’s always room for improvement, and spam reporting is a crucial resource to help us with that. Spam reports in aggregate form help us analyze trends and patterns in spammy content to improve our algorithms.

Overall, one of the best approaches to keeping spam out of Search is to rely on high-quality content created by the web community and our ability to surface it through ranking. You can learn more about our approach to improving Search and generating great results at our How Search Works site. Content owners and creators can also learn how to create high-quality content to be successful in Search through our Google Webmasters resources. Our spam detection systems work with our regular ranking systems, and spam reports help us continue to improve both, so we very much appreciate them.

If you have any questions or comments, please let us know on Twitter.

Posted by Gary

How we fought Search spam on Google – Webspam Report 2019

Evaluating page experience for a better web

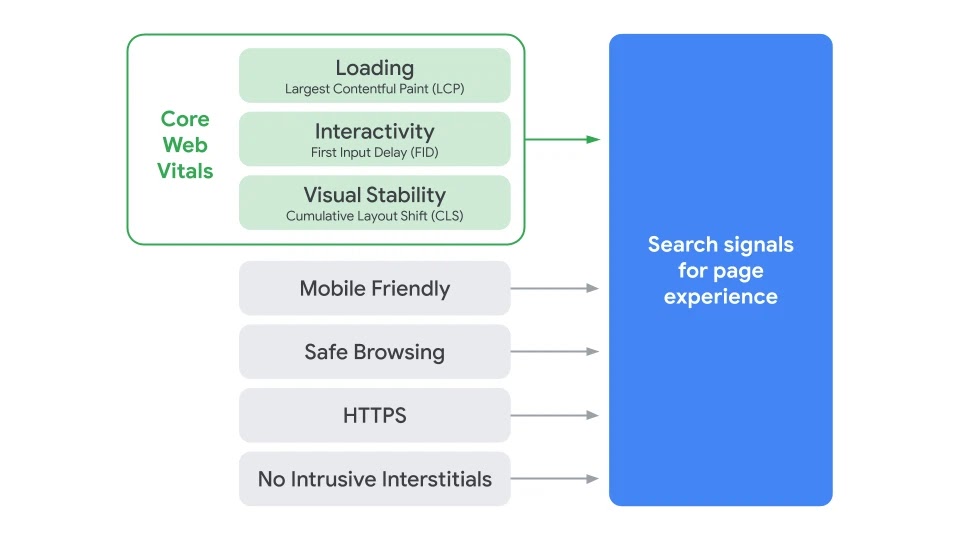

Through both internal studies and industry research, users show they prefer sites with a great page experience. In recent years, Search has added a variety of user experience criteria, such as how quickly pages load and mobile-friendliness, as factors for ranking results. Earlier this month, the Chrome team announced Core Web Vitals, a set of metrics related to speed, responsiveness and visual stability, to help site owners measure user experience on the web.

Today, we’re building on this work and providing an early look at an upcoming Search ranking change that incorporates these page experience metrics. We will introduce a new signal that combines Core Web Vitals with our existing signals for page experience to provide a holistic picture of the quality of a user’s experience on a web page.

As part of this update, we’ll also incorporate the page experience metrics into our ranking criteria for the Top Stories feature in Search on mobile, and remove the AMP requirement from Top Stories eligibility. Google continues to support AMP, and will continue to link to AMP pages when available. We’ve also updated our developer tools to help site owners optimize their page experience.

A note on timing: We recognize many site owners are rightfully placing their focus on responding to the effects of COVID-19. The ranking changes described in this post will not happen before next year, and we will provide at least six months' notice before they're rolled out. We're providing the tools now to get you started (and because site owners have consistently asked to know about ranking changes as early as possible), but there is no immediate need to take action.

About page experience

The page experience signal measures aspects of how users perceive the experience of interacting with a web page. Optimizing for these factors makes the web more delightful for users across all web browsers and surfaces, and helps sites evolve towards user expectations on mobile. We believe this will contribute to business success on the web as users grow more engaged and can transact with less friction.

Core Web Vitals are a set of real-world, user-centered metrics that quantify key aspects of the user experience. They measure dimensions of web usability such as load time, interactivity, and the stability of content as it loads (so you don’t accidentally tap that button when it shifts under your finger – how annoying!).
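This post doesn't list thresholds, but when Core Web Vitals were announced in 2020 the Chrome team published "good" and "poor" boundaries on web.dev (LCP in seconds, FID in milliseconds, CLS unitless), assessed at the 75th percentile of page loads. The sketch below buckets a set of field measurements against those published values. It uses a simple nearest-rank percentile as an approximation, the thresholds may change over time, and it only illustrates the published guidance; it is not how Search computes the page experience signal.

```python
import math

# (good upper bound, poor lower bound) as published on web.dev in 2020.
THRESHOLDS = {
    "LCP": (2.5, 4.0),  # Largest Contentful Paint, seconds
    "FID": (100, 300),  # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def percentile_75(samples):
    """75th percentile using the nearest-rank method (an approximation)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def rate(metric, samples):
    """Bucket the 75th-percentile value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    value = percentile_75(samples)
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("LCP", [1.8, 2.1, 2.4, 3.9]))  # good (p75 = 2.4 s)
print(rate("CLS", [0.05, 0.12, 0.30, 0.02]))  # needs improvement (p75 = 0.12)
```

Using the 75th percentile rather than the average means a page only rates "good" when most visits, not just typical ones, meet the threshold.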

We’re combining the signals derived from Core Web Vitals with our existing Search signals for page experience, including mobile-friendliness, safe-browsing, HTTPS-security, and intrusive interstitial guidelines, to provide a holistic picture of page experience. Because we continue to work on identifying and measuring aspects of page experience, we plan to incorporate more page experience signals on a yearly basis to both further align with evolving user expectations and increase the aspects of user experience that we can measure.

Page experience ranking

Great page experiences enable people to get more done and engage more deeply; in contrast, a bad page experience could stand in the way of a person being able to find the valuable information on a page. By adding page experience to the hundreds of signals that Google considers when ranking search results, we aim to help people more easily access the information and web pages they’re looking for, and support site owners in providing an experience users enjoy.

For some developers, understanding how their sites measure on the Core Web Vitals—and addressing noted issues—will require some work. To help out, we’ve updated popular developer tools such as Lighthouse and PageSpeed Insights to surface Core Web Vitals information and recommendations, and Google Search Console provides a dedicated report to help site owners quickly identify opportunities for improvement. We’re also working with external tool developers to bring Core Web Vitals into their offerings.

While all of the components of page experience are important, we will prioritize pages with the best information overall, even if some aspects of page experience are subpar. A good page experience doesn’t override having great, relevant content. However, in cases where there are multiple pages that have similar content, page experience becomes much more important for visibility in Search.

Page experience and the mobile Top Stories feature

The mobile Top Stories feature is a premier fresh content experience in Search that currently emphasizes AMP results, which have been optimized to exhibit a good page experience. Over the past several years, Top Stories has inspired new thinking about the ways we could promote better page experiences across the web.

When we roll out the page experience ranking update, we will also update the eligibility criteria for the Top Stories experience. AMP will no longer be necessary for stories to be featured in Top Stories on mobile; it will be open to any page. Alongside this change, page experience will become a ranking factor in Top Stories, in addition to the many factors assessed. As before, pages must meet the Google News content policies to be eligible. Site owners who currently publish pages as AMP, or with an AMP version, will see no change in behavior – the AMP version will be what’s linked from Top Stories.

Summary

We believe user engagement will improve as experiences on the web get better — and that by incorporating these new signals into Search, we’ll help make the web better for everyone. We hope that sharing our roadmap for the page experience updates and launching supporting tools ahead of time will help the diverse ecosystem of web creators, developers, and businesses to improve and deliver more delightful user experiences.

Frequently asked questions about JavaScript and links

We get lots of questions every day: in our Webmaster office hours, at conferences, in the webmaster forum, and on Twitter. One of the more frequent themes among these questions is links, especially links generated through JavaScript.

In our Webmaster Conference Lightning Talks video series, we recently addressed the most frequently asked questions on links and JavaScript:

Note: This video has subtitles available in many languages, too.

During the live premiere, we also had a Q&A session with a few additional questions from the community and decided to publish those questions and our answers along with some other frequently asked questions around the topic of links and JavaScript.

What kinds of links can Googlebot discover?

Googlebot parses the HTML of a page, looking for links to discover the URLs of related pages to crawl. To discover these pages, you need to make your links actual HTML links, as described in the webmaster guidelines on links.
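To illustrate what "actual HTML links" means, here is a simplified link extractor, not Googlebot's parser: only `<a>` elements with an `href` attribute yield a URL. A click handler on a `<span>`, or an `<a>` with only an `onclick`, gives a parser nothing to follow. The `goTo` function in the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects href values from <a> elements."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def extract_links(html):
    extractor = LinkExtractor()
    extractor.feed(html)
    return extractor.hrefs


page = """
<a href="/products/shoes">Shoes</a>
<span onclick="goTo('/products/hats')">Hats</span>
<a onclick="goTo('/products/bags')">Bags</a>
"""
print(extract_links(page))  # ['/products/shoes']
```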

What kind of URLs are okay for Googlebot?

Googlebot extracts the URLs from the href attribute of your links and then enqueues them for crawling. This means that the URL needs to be resolvable or, simply put, it should work when entered into a browser's address bar. See the webmaster guidelines on links for more information.
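A rough approximation of "works in the address bar" is: resolve the href against the page's URL, then keep it only if the result is an `http` or `https` URL. This sketch uses the standard `urllib.parse` functions; the rule itself is an illustration, not Googlebot's actual logic.

```python
from urllib.parse import urljoin, urlsplit


def crawlable_url(page_url, href):
    """Resolve href against page_url; return None if it isn't an http(s) URL."""
    absolute = urljoin(page_url, href)  # relative paths resolve; other schemes pass through
    if urlsplit(absolute).scheme not in ("http", "https"):
        return None
    return absolute


base = "https://example.com/shop/"
print(crawlable_url(base, "shoes"))  # https://example.com/shop/shoes
print(crawlable_url(base, "/about"))  # https://example.com/about
print(crawlable_url(base, "javascript:goTo('hats')"))  # None
```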

Is it okay to use JavaScript to create and inject links?

As long as these links meet the criteria in our webmaster guidelines, as outlined above, yes.

When Googlebot renders a page, it executes JavaScript and then discovers the links generated by JavaScript, too. It's worth mentioning that link discovery can happen twice: before and after JavaScript is executed. Having your links in the initial server response therefore allows Googlebot to discover them a bit faster.
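To see which links depend on the second, post-JavaScript pass, you can diff the links in the raw server response against those in the rendered HTML. This sketch assumes you have saved both versions yet (for example, the rendered DOM serialized from a headless browser); the sample markup and helper names are hypothetical.

```python
from html.parser import HTMLParser


class _Hrefs(HTMLParser):
    """Collects the set of href values on <a> elements."""

    def __init__(self):
        super().__init__()
        self.found = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.found.add(href)


def hrefs(html):
    parser = _Hrefs()
    parser.feed(html)
    return parser.found


def links_only_after_rendering(initial_html, rendered_html):
    """Links present in the rendered HTML but not in the initial response."""
    return sorted(hrefs(rendered_html) - hrefs(initial_html))


initial = '<nav><a href="/">Home</a></nav><div id="app"></div>'
rendered = ('<nav><a href="/">Home</a></nav>'
            '<div id="app"><a href="/products">Products</a>'
            '<a href="/blog">Blog</a></div>')
print(links_only_after_rendering(initial, rendered))  # ['/blog', '/products']
```

Links in this list can still be discovered, just later; moving important ones into the server response lets them be found in the first pass.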

Does Googlebot understand fragment URLs?

Fragment URLs, also known as “hash URLs”, are technically fine, but might not work the way you expect with Googlebot.

Fragments are supposed to be used to address a piece of content within the page and when used for this purpose, fragments are absolutely fine.

Sometimes developers decide to use fragments with JavaScript to load different content than what is on the page without the fragment. That is not what fragments are meant for and won’t work with Googlebot. See the JavaScript SEO guide on how the History API can be used instead.
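The underlying reason is that the fragment is handled on the client: per RFC 3986 it is not part of the HTTP request, so every "hash URL" of a page requests the same document from the server. Python's `urllib.parse.urldefrag` shows this split; the URLs are hypothetical.

```python
from urllib.parse import urldefrag

# Hypothetical single-page-app URLs that use the fragment to select content.
hash_urls = ["https://example.com/#/products", "https://example.com/#/blog"]
# The same views addressed with History API-style paths.
path_urls = ["https://example.com/products", "https://example.com/blog"]


def distinct_documents(urls):
    """Number of distinct URLs actually requested once fragments are dropped."""
    return len({urldefrag(url).url for url in urls})


fetched, fragment = urldefrag(hash_urls[0])
print(fetched, fragment)  # https://example.com/ /products
print(distinct_documents(hash_urls))  # 1
print(distinct_documents(path_urls))  # 2
```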

Does Googlebot still use the AJAX crawling scheme?

The AJAX crawling scheme has long been deprecated. Do not rely on it for your pages.

Instead, we recommend using the History API and migrating your web apps to URLs that do not rely on fragments to load different content.

Stay tuned for more Webmaster Conference Lightning Talks

This post was inspired by the first installment of the Webmaster Conference Lightning Talks, but make sure to subscribe to our YouTube channel for more videos to come! We definitely recommend joining the premieres on YouTube to participate in the live chat and Q&A session for each episode!

If you'd like to see more Webmaster Conference Lightning Talks, check out the video Google Monetized Policies and subscribe to our channel to stay tuned for the next one!

Join the webmaster community in the upcoming video premieres and in the YouTube comments!

Posted by Martin Splitt, Googlebot whisperer at the Google Search Relations team

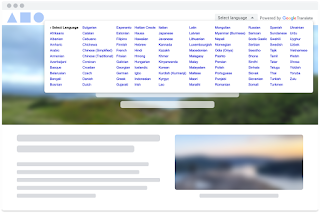

Google Translate’s Website Translator – available for non-commercial use

In this time of a global pandemic, webmasters across the world—from government officials to health organizations—are frequently updating their websites with the latest information meant to help fight the spread of COVID-19 and provide access to resources. However, they often lack the time or funding to translate this content into multiple languages, which can prevent valuable information from reaching a diverse set of readers. Additionally, some content may only be available via a file, e.g. a .pdf or .doc, which requires additional steps to translate.

To help these webmasters reach more users, we’re reopening access to the Google Translate Website Translator—a widget that translates web page content into 100+ different languages. It uses our latest machine translation technology, is easy to integrate, and is free of charge. To start using the Website Translator widget, sign up here.

Please note that usage will be restricted to government, non-profit, and/or non-commercial websites (e.g. academic institutions) that focus on COVID-19 response. For all other websites, we recommend using the Google Cloud Translation API.

Google Translate also offers both webmasters and their readers a way to translate documents hosted on a website. For example, if you need to translate this PDF file into Spanish, go to translate.google.com, enter the file's URL into the left-hand textbox, and then choose "Spanish" as the target language on the right. The link shown in the right-hand textbox will take you to the translated version of the PDF file. The following file formats are supported: .doc, .docx, .odf, .pdf, .ppt, .pptx, .ps, .rtf, .txt, .xls, and .xlsx.

Finally, it’s very important to note that while we continuously look for ways to improve the quality of our translations, they may not be perfect – so please use your best judgement when reading any content translated via Google Translate.

Posted by Xinxing Gu, Product Manager/Mountain View, CA, and Michal Lahav, User Experience Research/Seattle, WA

New reports for Guided Recipes on Assistant in Search Console

Over the past two years, Google Assistant has helped users around the world cook yummy recipes and discover new favorites. During this time, website owners have had to wait for Google to reprocess their web pages before they could see their updates on the Assistant. Along the way, we've heard many of you say you want an easier and faster way to know how the Assistant will guide users through a recipe.

We are happy to announce support for Guided Recipes in Search Console as well as in the Rich Results Test tool. This will allow you to instantly validate the markup for individual recipes, as well as discover any issues with all of the recipes on your site.
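For reference, a recipe's markup is schema.org `Recipe` structured data, usually embedded as JSON-LD. Because the Assistant guides users through the recipe's instructions, this sketch marks each step as a separate schema.org `HowToStep`. The recipe and the property set are hypothetical and minimal; Google's Recipe structured data documentation is the authority on which properties Guided Recipes require. The printed snippet is the kind of code you could paste into the Rich Results Test.

```python
import json

# Hypothetical recipe; not an authoritative list of required properties.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Simple pancakes",
    "image": ["https://example.com/photos/pancakes.jpg"],
    "recipeIngredient": ["1 cup flour", "1 cup milk", "1 egg"],
    "recipeInstructions": [
        # One HowToStep per step keeps each instruction separately addressable.
        {"@type": "HowToStep", "text": "Whisk the flour, milk, and egg together."},
        {"@type": "HowToStep", "text": "Pour the batter into a hot pan."},
        {"@type": "HowToStep", "text": "Flip once bubbles form, then cook until golden."},
    ],
}

# Wrap the JSON-LD in the script tag that goes into the page's HTML.
snippet = '<script type="application/ld+json">\n{}\n</script>'.format(
    json.dumps(recipe, indent=2)
)
print(snippet)
```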

Guided Recipes Enhancement report

In Search Console, you can now view a Rich Result Status Report with the errors, warnings, and fully valid pages found among your site’s recipes. It also includes a check box to show trends on search impressions, which can help in understanding the impact of your rich results appearances.

In addition, if you find an issue and fix it, you can use the report to notify Google that your page has changed and should be recrawled.

(Caption: Guided Recipes Enhancement report)

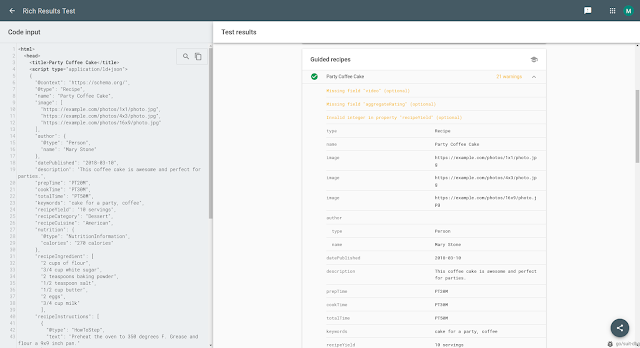

Guided Recipes in Rich Results Test

Add Guided Recipe structured data to a page and submit its URL – or just test a code snippet within the Rich Results Test tool. The test results show any errors or suggestions for your structured data as can be seen in the screenshot below.

(Caption: Guided Recipes in Rich Results Test)

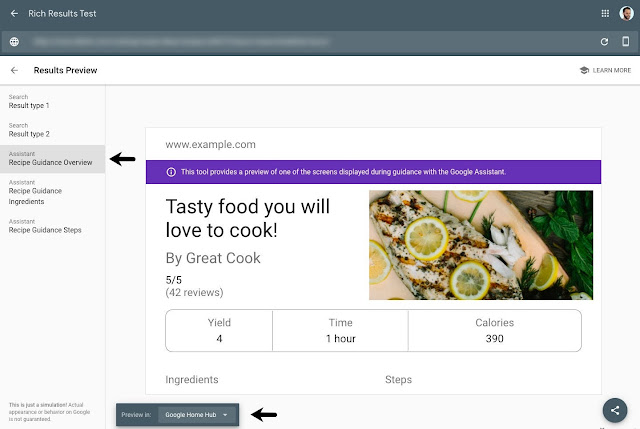

You can also use the Preview tool in the Rich Results Test to see how Assistant guidance for your recipe can appear on a smart display. You can find any issues with your markup before publishing it.

(Caption: Guided Recipes preview in the Rich Results Test)

Let us know if you have any comments, questions or feedback either through the Webmasters help community or Twitter.

Posted by Earl J. Wagner, Software Engineer and Moshe Samet, Search Console Product Manager

Reintroducing a community for Polish & Turkish Site Owners

Google Webmaster Help forums are a great place for website owners to help each other, engage in friendly discussion, and get input from awesome Product Experts. We currently have forums operating in 12 languages.

We're happy to announce the re-opening of the Polish and Turkish webmaster communities with support from a global team of Community Specialists dedicated to helping support Product Experts. If you speak Turkish or Polish, we'd love to have you drop by the new forums yourself. Perhaps there's a question or a challenge you can help with as well!

Current Webmaster Product Experts are welcome to join and keep their status in the new communities as well. If you have previously contributed and would like to start contributing again, you can start posting again, and feel free to ask others in the community if you have any questions.

We look forward to seeing you there!

Posted by Aaseesh Marina, Webmaster Product Support Manager

We're bringing back a community for site owners from Poland and Turkey

The Google Webmaster Help forums are a place where site owners can help each other, join discussions, and learn from the tips of Product Experts. Our forums currently operate in 12 languages.

We're glad that, with the help of a global team of Community Specialists dedicated to supporting Product Experts, we can bring back the Polish- and Turkish-language webmaster communities. If you speak either of these languages, drop by our new forums. Maybe you'll find a problem there that you can help solve?

We invite current Google Webmaster Product Experts to join the new communities and keep their existing status. If you'd like, you can start posting again. If you have any questions, ask other members of the community.

See you there!

Posted by Aaseesh Marina, Webmaster Product Support Manager

New reports for Special Announcements in Search Console

Last month we introduced a new way for sites to highlight COVID-19 announcements on Google Search. Initially, we're using this information to highlight announcements in Google Search from health and government agency sites, to cover important updates like school closures or stay-at-home directives.

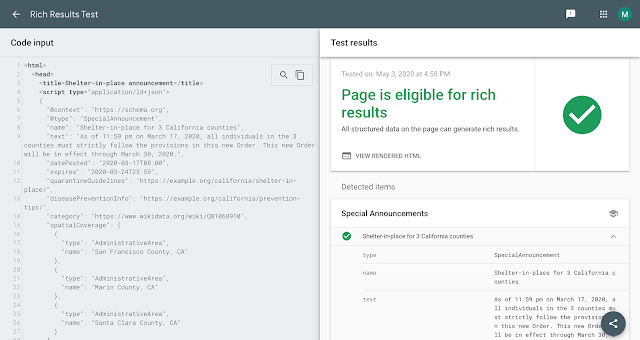

Today we are announcing support for SpecialAnnouncement in Google Search Console, including new reports to help you find any issues with your implementation and monitor how this rich result type is performing. In addition, the Rich Results Test now supports this markup, so you can review your existing URLs or debug your markup code before moving it to production.
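Before reaching for the Rich Results Test, a quick local sanity check can catch malformed JSON or an obviously incomplete object. In this sketch, the `EXPECTED` property list is a hypothetical minimum chosen for illustration (the names are real schema.org properties, but Google's SpecialAnnouncement documentation decides what is actually required), and the check does not replace the Rich Results Test.

```python
import json

# Hypothetical minimal property list for this sketch only.
EXPECTED = ("name", "text", "datePosted")


def special_announcement_issues(jsonld_text):
    """Return human-readable issues found in one JSON-LD object."""
    try:
        data = json.loads(jsonld_text)
    except json.JSONDecodeError as err:
        return [f"invalid JSON: {err.msg}"]
    if not isinstance(data, dict):
        return ["expected a single JSON object"]
    if data.get("@type") != "SpecialAnnouncement":
        return [f"unexpected @type: {data.get('@type')!r}"]
    return [f"missing property: {name}" for name in EXPECTED if name not in data]


markup = """{
  "@context": "https://schema.org",
  "@type": "SpecialAnnouncement",
  "name": "Schools closed until further notice",
  "datePosted": "2020-03-17"
}"""
print(special_announcement_issues(markup))  # ['missing property: text']
```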

Special Announcements Enhancement report

A new report is now available in Search Console for sites that have implemented SpecialAnnouncement structured data. The report allows you to see errors, warnings, and valid pages for markup implemented on your site.

In addition, if you fix an issue, you can use the report to validate it, which will trigger a process where Google recrawls your affected pages. Learn more about the Rich result status reports.

(Caption: Special Announcements Enhancement report)

Special Announcements appearance in Performance report

The Search Console Performance report now also shows the performance of your SpecialAnnouncement marked-up pages on Google Search. This means you can check the impressions, clicks, and CTR of your special announcement pages and see how they are trending across any of the available dimensions. Learn more about the Search appearance tab in the performance report.

(Caption: Special Announcements appearance in Performance report)

Special Announcements in Rich Results Test

After adding SpecialAnnouncement structured data to your pages, you can test them using the Rich Results Test tool. If you haven’t published the markup on your site yet, you can also upload a piece of code to check the markup. The test shows any errors or suggestions for your structured data.

(Caption: Special Announcements in Rich Results Test)

These new tools should make it easier to understand how your marked-up SpecialAnnouncement pages perform on Search and to identify and fix issues.

If you have any questions, check out the Google Webmasters community.

Posted by Daniel Waisberg, Search Advocate & Moshe Samet, Search Console PM.