PES@Home 2020: Google’s first virtual summit for Product Experts

More than ever, users are relying on Google products to do their jobs, educate their kids, and stay in touch with loved ones. Our Google Product Experts (PEs) play a vital role in supporting these users in community forums, like the Webmaster Help Community, across many languages.

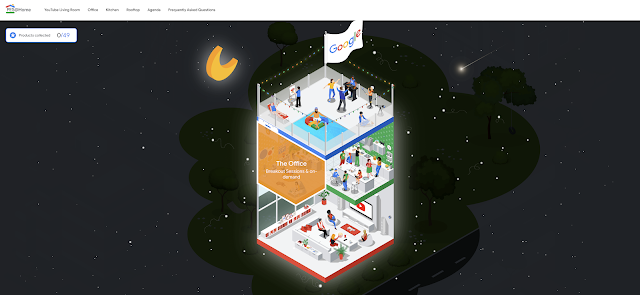

For several years now, we have been inviting our most advanced PEs from around the world to a bi-annual, 3-day PE Summit. These events provide an opportunity not only to share the latest product updates with PEs, but also to acknowledge and celebrate their tireless contributions to helping our users. As the world was going into lockdown in March, we quickly decided that we didn’t want to miss out on this celebration. So the summit team shifted focus and started planning an all-virtual event, which came to be called PES@Home.

Through the house-themed virtual event platform, PEs participated in over 120 sessions in the “office”, got a chance to engage with each other and Googlers in the “kitchen”, had fun on the “rooftop” learning more about magic or mixology, and, hopefully, came out feeling reconnected and re-energized. In addition to a large number of general talks, Webmaster PEs were able to attend and ask questions during eight product-specific breakout sessions with Search product managers and engineers, which covered topics like page experience, Web Stories, crawling, Image Search, and free shopping listings.

We are truly overwhelmed and grateful for how Webmaster PEs continue to grow the community, connection, and engagement during such a strange time. As a testament to the helpful spirit of the Webmaster community, we were thrilled to present the “Silver Lining Award”, which recognizes someone who demonstrates a sense of humour and emphasizes the positive side of every situation, to one of our own Webmaster PEs.

On behalf of the countless people asking product questions in the forums, we’d like to thank the patient, knowledgeable, helpful, and friendly Webmaster Product Experts, who help make the web shine when it comes to presence on Search.

If you want to read more about the summit, check out this summary from a Webmaster PE’s point of view.

Posted by Antje Weisser, Product Support Manager

Best practices for Black Friday and Cyber Monday pages

The end-of-year holiday season is a peak time for many merchants, with special sales events such as Black Friday and Cyber Monday. As a merchant, you can help Google highlight your sales events by providing landing pages with relevant content and hi…

The Search Console Training lives on

In November 2019 we announced the Search Console Training YouTube series and started publishing videos regularly. The goal of the series was to create updated video content to be used alongside Search documentation, for example in the Help Center and on the Developers site.

The wonderful Google Developer Studio team (the engine behind those videos!) put together this fun blooper reel for the first wave of videos that we recorded in the Google London studio.

So far we’ve published twelve episodes in the series, each focusing on a different part of the tool. We’ve seen that it’s helping lots of people learn how to use Search Console, so we decided to continue recording videos… at home! Please bear with the trucks, ambulances, neighbors, passing clouds, and of course the doorbell. ¯\_(ツ)_/¯

In addition to the location change, we’re also changing the scope of the new videos. Instead of focusing on one report at a time, we’ll discuss how Search Console can help YOUR business. Each episode will focus on types of websites, like ecommerce, and job roles, like developers.

To hear about new videos as soon as they’re published, subscribe to our YouTube channel, and feel free to leave feedback on Twitter.

Stay tuned!

Daniel Waisberg, Search Advocate

New Schema.org support for retailer shipping data

Quick summary: Starting today, we support shippingDetails schema.org markup as an alternative way for retailers to be eligible for shipping details in Google Search results.

Since June 2020, retailers have been able to list their products across different Google surfaces for free, including on Google Search. We are committed to supporting ways for the ecosystem to better connect with users who come to Google looking for the best products, brands, and retailers, by investing both in more robust tooling in Google Merchant Center and in new kinds of schema.org options.

Shipping details, including cost and expected delivery times, are often a key consideration for users making purchase decisions. In our own studies, we’ve heard that users abandon shopping checkouts because of unforeseen or uncertain shipping costs. This is why we will often show shipping cost information in certain result types, including on free listings on Google Search (currently in the US, in English only).

Retailers have always been able to configure shipping settings in Google Merchant Center in order to display this information in listings. Starting today, we also support the shippingDetails schema.org markup type for retailers who don’t have active Merchant Center accounts with product feeds.

If you’re a retailer interested in this new markup, check out our documentation to get started.
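
As a rough illustration, here is a minimal Python sketch that builds JSON-LD for a product whose Offer carries shippingDetails, using the schema.org types OfferShippingDetails, MonetaryAmount, DefinedRegion, ShippingDeliveryTime, and QuantitativeValue. The helper names (build_product_jsonld, to_script_tag) and all product values are hypothetical. The sketch is not a complete list of the properties Google requires or recommends for shopping results. The documentation is the authority on that.

```python
import json


def build_product_jsonld(name, price, currency, ship_cost, country,
                         handling_days, transit_days):
    """Return a schema.org Product dict whose Offer carries shippingDetails.

    Hypothetical helper. handling_days and transit_days are (min, max)
    tuples, expressed in days.
    """
    def day_range(min_days, max_days):
        # A QuantitativeValue with unitCode "DAY" expresses a range of days.
        return {
            "@type": "QuantitativeValue",
            "minValue": min_days,
            "maxValue": max_days,
            "unitCode": "DAY",
        }

    shipping = {
        "@type": "OfferShippingDetails",
        # What the shopper pays for shipping.
        "shippingRate": {
            "@type": "MonetaryAmount",
            "value": ship_cost,
            "currency": currency,
        },
        # Where this rate applies.
        "shippingDestination": {
            "@type": "DefinedRegion",
            "addressCountry": country,
        },
        # Time to prepare the order plus time in transit.
        "deliveryTime": {
            "@type": "ShippingDeliveryTime",
            "handlingTime": day_range(*handling_days),
            "transitTime": day_range(*transit_days),
        },
    }
    return {
        "@context": "https://schema.org/",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "shippingDetails": shipping,
        },
    }


def to_script_tag(data):
    """Serialize data as a JSON-LD <script> element for the page HTML."""
    body = json.dumps(data, indent=2)
    # Escape "</" so a value such as a product name cannot close the
    # <script> element early. "\/" is a valid JSON escape for "/".
    body = body.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    product = build_product_jsonld(
        name="Example Widget", price="19.99", currency="USD",
        ship_cost="4.95", country="US",
        handling_days=(0, 1), transit_days=(2, 5),
    )
    print(to_script_tag(product))
```

Generating the markup from the same data that renders the visible price and shipping text helps the markup stay consistent with what shoppers actually see on the page.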

Posted by Kyle Kelly, Shopping Product Manager

New open source robots.txt projects

Last year we released the robots.txt parser and matcher that we use in our production systems to the open source world. Since then, we’ve seen people build new tools with it, contribute to the open source library (effectively improving our production s…
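
The open source parser itself is a C++ library. Purely to illustrate what a robots.txt matcher does, here is a simplified Python sketch, not Google's code, of longest-match rule evaluation as described in the Robots Exclusion Protocol. The most specific (longest) matching rule wins, Allow wins a tie with Disallow, the * wildcard matches any sequence of characters, and a trailing $ anchors the end of the path. The function names are hypothetical, and the sketch skips details a production library handles, such as percent-encoding normalization and size limits.

```python
import re


def _pattern_to_regex(path_pattern):
    # '*' matches any sequence of characters; a trailing '$' anchors the end.
    anchored = path_pattern.endswith("$")
    if anchored:
        path_pattern = path_pattern[:-1]
    body = ".*".join(re.escape(part) for part in path_pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def parse_rules(robots_txt, user_agent):
    """Return the (directive, pattern) rules that apply to user_agent.

    Groups naming the agent (case-insensitively) are used. Otherwise the
    '*' group is used. Repeated groups for the same agent are merged.
    """
    groups = {}
    current_agents = []
    in_rules = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = (s.strip() for s in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                current_agents = []
                in_rules = False
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif key in ("allow", "disallow"):
            in_rules = True
            for agent in current_agents:
                groups[agent].append((key, value))
    return groups.get(user_agent.lower(), groups.get("*", []))


def is_allowed(rules, path):
    """Longest matching pattern wins, Allow wins ties, no match means allowed."""
    best_len = -1
    best_allow = True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value matches nothing
        if _pattern_to_regex(pattern).match(path):  # match() anchors at start
            allow = directive == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and allow):
                best_len = len(pattern)
                best_allow = allow
    return best_allow
```

For example, with "Disallow: /private/" and "Allow: /private/public-page.html" in the same group, the path /private/public-page.html is allowed because the Allow pattern is longer, while /private/secret.html stays disallowed.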

Googlebot will soon speak HTTP/2

Quick summary: Starting November 2020, Googlebot will start crawling some sites over HTTP/2.
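
Over HTTPS, client and server agree on HTTP/2 during the TLS handshake through ALPN (Application-Layer Protocol Negotiation). The following minimal Python sketch uses only the standard library's ssl and socket modules to show which protocol a server selects when a client offers "h2" and "http/1.1". The function names are hypothetical. A result of "h2" only shows that the server can speak HTTP/2 over TLS. It does not indicate whether Googlebot will crawl that particular site over HTTP/2, since the announcement says this applies to some sites.

```python
import socket
import ssl


def build_context():
    """TLS client context that offers HTTP/2 first, then HTTP/1.1, via ALPN."""
    ctx = ssl.create_default_context()  # verifies certificates and hostnames
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host, port=443, timeout=5.0):
    """Return the ALPN protocol the server selected, or None if none was."""
    ctx = build_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

For example, negotiated_protocol("www.example.com") returns "h2" if that server agrees to HTTP/2, "http/1.1" if it picks HTTP/1.1, or None if it does not negotiate a protocol via ALPN.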