SEO 2014

We’re at the start of 2014.

SEO is finished.

Well, what we had come to know as the practical execution of “whitehat SEO” is finished. Google has defined it out of existence. Research keyword. Write page targeting keyword. Place links with that keyword in the link. Google cares not for this approach.

SEO, as a concept, is now an integral part of digital marketing. To do SEO in 2014 – Google-compliant, whitehat SEO – digital marketers must seamlessly integrate search strategy into other aspects of digital marketing. It’s a more complicated business than traditional SEO, but offers a more varied and interesting challenge, too.

Here are a few things to think about for 2014.

1. Focus On Brand

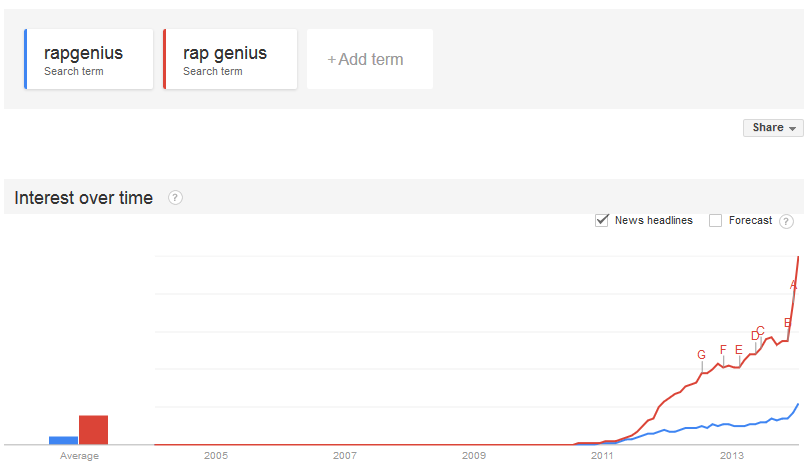

Big brands not only get a free pass, they can get extra promotion. By being banned. Take a look at Rap Genius. Aggressive link-building strategy leads to de-indexing. A big mea culpa follows and what happens? Not only do they get reinstated, they’ve earned themselves a wave of legitimate links.

Now that’s genius.

Google would look deficient if they didn’t show that site where visitors expect to find it; enough people know the brand that leaving it out would make Google look bad.

Expedia’s link profile was similarly outed for appearing to be at odds with Google’s published standards. Could a no-name site pass a manual inspection if it used aggressive linking? Unlikely.

What this shows is that if you have a brand important enough that Google would look deficient by excluding it, you will have advantages that no-name sites don’t enjoy. You are more likely to pass manual inspections, and probably more likely to get penalties lifted.

What is a brand?

In terms of search, it’s a site that visitors can find with a brand search. Just how much search volume you require is open to debate, but you don’t need to be a big brand like Apple, TripAdvisor or Microsoft. Rap Genius aren’t. Ask “would Google look deficient if this site didn’t show up?” You can usually answer that by looking for evidence of search volume on the site’s name.

In advertising, brands have been used to secure a unique identity. That identity is associated with a product or service by the customer. Search used to be about matching a keyword term. But as keyword areas become saturated, and Google returns fewer purely keyword-focused pages anyway, developing a unique identity is a good way forward.

If you haven’t already, put some work into developing a cohesive, unique brand. If you have a brand, think about generating more awareness. This may mean spending more on brand-related advertising than you’ve allocated in previous years. The success metric is an increase in brand searches, i.e. searches for the name of the site.

2. Be Everywhere

The idea of a stand-alone site is becoming redundant. In 2014, you need to be everywhere your potential visitors reside. If your potential visitors are spending all day on Facebook or YouTube, that’s where you need to be, too. It’s less about them coming to you, which is the traditional search metaphor, and a lot more about you going to them.

You draw visitors back to your site, of course, but look at every platform and other site as a potential extension of your own site. Pages or content you place on those platforms are yet another front door to your site, and can be found in Google searches. If you’re not where your potential visitors are, you can be sure your competitors will be, especially if they’re investing in social media strategies.

A reminder to see all channels as potential places to be found.

Mix in cross-channel marketing with remarketing and consumers get the perception that your brand is bigger. Google ran the following display ad before they broadly enabled retargeting ads. Retargeting only further increases that lift in brand searches.

3. Advertise Everywhere

Are you finding it difficult to rank in the top ten in some areas? Consider advertising with AdWords and on the sites that already hold those positions. Do some brand advertising on them to raise awareness and generate brand searches. An advert placed on a site that offers a complementary good or service might be cheaper than the expense and effort needed to rank. It might also help insulate you from Google’s whims.

The same goes for guest posts and content placement, although you need to be a little careful, as Google can take a dim view of them. The safest approach is to make sure the content you place is unique, valuable and useful in its own right. Ask yourself whether the content would be equally at home on your own site if you were hosting it for someone else. If so, it’s likely okay.

4. Valuable Content

Google does an okay job of identifying good content. It could do better. They’ve lost their way a bit in terms of encouraging production of good content. It’s getting harder and harder to make the numbers work in order to cover the production cost.

However, it remains Google’s mission to provide the user with answers the visitor deems relevant and useful. The utility of Google relies on it. Any strategy that is aligned with providing genuine visitor utility will align with Google’s long-term goals.

Review your content creation strategies. Content that is of low utility is unlikely to prosper. While it’s still a good idea to use keyword research as a guide to content creation, it’s a better idea to focus on topic areas and creating engagement through high utility. What utility is the user expecting from your chosen topic area? If it’s rap lyrics for song X, then only the rap lyrics for song X will do. If it is plans for a garden, then only plans for a garden will do. See being “relevant” as “providing utility”, not keyword matching.

Go back to the basic principle of classifying each search term as Navigational, Informational, or Transactional. Once you know which type a keyword is, make sure the content offers the utility expected of that type. Be careful when dealing with informational queries that Google could answer in its Knowledge Graph. If your pages deal with established facts that anyone else can state, then you have no differentiation, and that type of information is more likely to end up in Google’s Knowledge Graph. Instead, go deep on informational queries. Expand the information. Associate it with other information. Incorporate opinion.
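To make the three-way split concrete, here is a minimal Python sketch of a rule-based classifier you might run over your own keyword list before deciding what utility each page has to deliver. The cue-word lists and the `classify_query` function are hypothetical and purely illustrative; this is not how Google classifies queries, just a rough first pass.

```python
import re
from collections.abc import Iterable

# Hypothetical cue lists for illustration only. Search engines lean on click and
# query-log signals, not short keyword lists like these.
NAVIGATIONAL_CUES = ("login", "sign in", "homepage", "official site", "www")
TRANSACTIONAL_CUES = ("buy", "price", "prices", "cheap", "coupon", "order", "discount", "for sale")


def _has_cue(query: str, cues: Iterable[str]) -> bool:
    """True if any cue appears in the query as a whole word or phrase."""
    return any(re.search(r"\b" + re.escape(cue) + r"\b", query) for cue in cues)


def classify_query(query: str, brand_names: Iterable[str] = ()) -> str:
    """Label a query as 'navigational', 'transactional' or 'informational'."""
    q = query.lower().strip()
    # A known brand name or an explicit "take me to the site" cue suggests navigation.
    if _has_cue(q, (b.lower() for b in brand_names)) or _has_cue(q, NAVIGATIONAL_CUES):
        return "navigational"
    # Purchase-intent words suggest a transactional query.
    if _has_cue(q, TRANSACTIONAL_CUES):
        return "transactional"
    # Everything else falls into the broadest bucket.
    return "informational"


for query in ("rap genius", "buy garden shed", "garden plans for small yards"):
    print(query, "->", classify_query(query, brand_names=["Rap Genius"]))
```

Queries the rules can’t place default to informational, so that bucket is the one worth reviewing by hand.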

BTW, Bill has some interesting reading on the methods by which Google might be identifying different types of queries.

Methods, systems, and apparatus, including computer program products, for identifying navigational resources for queries. In an aspect, a candidate query in a query sequence is selected, and a revised query subsequent to the candidate query in the query sequence is selected. If a quality score for the revised query is greater than a quality score threshold and a navigation score for the revised query is greater than a navigation score threshold, then a navigational resource for the revised query is identified and associated with the candidate query. The association specifies the navigational resource as being relevant to the candidate query in a search operation.
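The abstract above reads like a simple two-threshold rule. Here is a minimal Python sketch of that logic under stated assumptions: the `Query` fields, the threshold values and the example scores are hypothetical, because the quoted text doesn’t say how the quality or navigation scores are computed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Query:
    text: str
    quality_score: float                # hypothetical: how good the results for this query are
    navigation_score: float             # hypothetical: how navigational the query looks
    top_resource: Optional[str] = None  # hypothetical: the URL searchers end up on


def navigational_resource_for(
    sequence: list[Query],
    candidate_index: int,
    quality_threshold: float = 0.7,     # hypothetical value
    navigation_threshold: float = 0.8,  # hypothetical value
) -> Optional[str]:
    """Return the resource to associate with the candidate query, or None."""
    # Only queries issued after the candidate count as revisions of it.
    for revised in sequence[candidate_index + 1:]:
        if (revised.quality_score > quality_threshold
                and revised.navigation_score > navigation_threshold):
            return revised.top_resource
    return None


session = [
    Query("lyrics site with annotations", 0.4, 0.2),
    Query("rap genius", 0.9, 0.95, "https://rapgenius.com/"),
]
print(navigational_resource_for(session, 0))
```

This checks every later query in the session and takes the first one that clears both thresholds; the abstract only says “a revised query subsequent to the candidate query”, so that choice is also an assumption.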

5. Solve Real Problems

This is a follow-on from “ensuring you provide content with utility”. Go beyond keyword and topical relevance. Ask “what problem is the user trying to solve?” Is it an entertainment problem? A “How To” problem? What would their ideal solution look like? What would a great solution look like?

There is no shortcut to determining what a user finds most useful. You must understand the user. This understanding can be gleaned from interviews, questionnaires, third party research, chat sessions, and monitoring discussion forums and social channels. Forget about the keyword for the time being. Get inside a visitor’s head. What is their problem? Write a page addressing that problem by providing a solution.

6. Maximise Engagement

Google are watching for the click back to the SERP, the action of a visitor who clicked through to a site and didn’t find anything useful for their query. Relevance in terms of subject match is now a given; utility is what gets judged.

Big blocks of dense text, even if relevant, can be off-putting. Add images and videos to pages that have low engagement and see if this fixes the problem. Where appropriate, make sure the user takes an action of some kind. Encourage the user to click deeper into the site following an obvious, well placed link. Perhaps they watch a video. Answer a question. Click a button. Anything that isn’t an immediate click back.
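Site owners can’t see Google’s SERP click-backs directly, but a quick single-page visit in your own analytics is a rough proxy for one. Here is a minimal Python sketch that ranks landing pages by that proxy so you know where to try images, video or a clearer next step first. The record format and the 10-second threshold are hypothetical assumptions, not Google’s definition of a click-back.

```python
from collections import defaultdict

# Hypothetical threshold: a visit shorter than this with no further pageview
# is treated as a "quick exit" (a proxy for a click back to the SERP).
QUICK_EXIT_SECONDS = 10


def quick_exit_rates(visits):
    """Return {landing_page: share of visits that left quickly without going deeper}.

    Each visit is a hypothetical (landing_page, seconds_on_page, viewed_another_page) tuple.
    """
    totals = defaultdict(int)
    quick = defaultdict(int)
    for page, seconds, went_deeper in visits:
        totals[page] += 1
        if seconds < QUICK_EXIT_SECONDS and not went_deeper:
            quick[page] += 1
    return {page: quick[page] / totals[page] for page in totals}


visits = [
    ("/garden-plans", 4, False),
    ("/garden-plans", 95, True),
    ("/garden-plans", 6, False),
    ("/shed-prices", 120, True),
]
# Worst pages first: these are the candidates for added media or a clearer call to action.
for page, rate in sorted(quick_exit_rates(visits).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {rate:.0%} quick exits")
```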

If you’ve focused on utility, and on genuinely solving a user’s problem rather than just matching a keyword, then your engagement metrics should beat those of the guy who is still chasing keywords and matching only on relevance to a keyword term.

7. Think Like A PPCer

Treat every click as if you were paying for it directly. Once that visitor has arrived, what is the one thing you want them to do next? Is it obvious what they have to do next? Always think about how to engage that visitor once they land. Get them to take an action, where possible.

8. Think Like A Conversion Optimizer

Conversion optimizers try to reduce the bounce rate by re-crafting the page so it meets the user’s needs. They do this by split testing different designs, phrases, copy and other elements on the page.
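A split test only tells you something if the difference between versions is bigger than chance. One common way to check that (a standard statistical technique, not something the post prescribes) is a two-proportion z-test. The sketch below uses only Python’s standard library; the conversion counts in the usage line are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value), using the pooled-proportion standard error.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both versions converted at 0% or 100%: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: version A converts 200 of 5,000 visitors, version B 260 of 5,000.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the new version’s lift is unlikely to be noise; with low traffic, expect to run the test for a long time before that happens.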

It’s pretty difficult to test these things in SEO, but it’s good to keep this process in mind. What pages of your site are working well and which pages aren’t? Is it anything to do with different designs or element placement? What happens if you change things around? What do the three top ranking sites in your niche look like? If their link patterns are similar to yours, what is it about those sites that might lead to higher engagement and relevancy scores?

9. Rock Solid Strategic Marketing Advantage

SEO is really hard to do on generic me-too sites. It’s hard to get links. It’s hard to get anyone to talk about them. People don’t share them with their friends. These sites don’t generate brand searches. The SEO option for these sites is typically what Google would describe as blackhat, namely link buying.

Look for a marketing angle. Find a story to tell. Find something unique and remarkable about the offering. The less clearly a site articulates its point of differentiation, the harder it is to get value from organic search while staying within the guidelines.

10. Links

There’s a reason Google hammer links: they work. Otherwise, surely Google wouldn’t make such a big deal about them.

Links count. It doesn’t matter if they are no-follow, scripted, within social networks, or wherever, they are still paths down which people travel. It comes back to a clear point of differentiation, genuine utility and a coherent brand. It’s a lot easier, and safer, to link build when you’ve got all the other bases covered first.

Did @mattcutts Endorse Rap Genius Link Spam?

On TWIG Matt Cutts spoke about the importance of defunding spammers & breaking their spirits.

If you want to stop spam, the most straight forward way to do it is to deny people money because they care about the money and that should be their end goal. But if you really want to stop spam, it is a little bit mean, but what you want to do, is break their spirits. You want to make them frustrated and angry. There are parts of Google’s algorithms specifically designed to frustrate spammers and mystify them and make them frustrated. And some of the stuff we do gives people a hint their site is going to drop and then a week or two later their site actually does drop so they get a little bit more frustrated. And so hopefully, and we’ve seen this happen, people step away from the dark side and say “you know, that was so much pain and anguish and frustration, let’s just stay on the high road from now on” some of the stuff I like best is when people say “you know this SEO stuff is too unpredictable, I’m just going to write some apps. I’m going to go off and do something productive for society.” And that’s great because all that energy is channeled at something good.

What was less covered was that in the same video Matt Cutts made it sound like anything beyond information architecture, duplicate content cleanup & clean URLs was quickly approaching scamming – especially anything to do with links. So over time more and more behaviors get reclassified as black hat spam as Google gains greater control over the ecosystem.

there’s the kind of SEO that is better architecture, cleaner URLs, not duplicate content … that’s just like making sure your resume doesn’t have any typos on it. that’s just clever stuff. and then there’s the type of SEO that is sort of cheating. trying to get a lot of bad backlinks or scamming, and that’s more like lying on your resume. when you get caught sometimes there’s repercussions. and it definitely helps to personalize because now anywhere you search for plumbers there’s local results and they are not the same across the world. we’ve done a diligent job of trying to crack down on black hat spam. so we had an algorithm named Penguin that launched that kind of had a really big impact. we had a more recent launch just a few months ago. and if you go and patrol the black hat SEO forums where the guys talk about the techniques that work, now it’s more people trying to sell other people scams rather than just trading tips. a lot of the life has gone out of those forums. and even the smaller networks that they’re trying to promote “oh buy my Anglo Rank or whatever” we’re in the process of tackling a lot of those link networks as well. the good part is if you want to create a real site you don’t have to worry as much about these bad guys jumping ahead of you. the playing ground is a lot more level now. Panda was for low quality. Penguin was for spam – actual cheating.

The Matt Cutts BDSM School of SEO

As part of the ongoing campaign to “break their spirits” we get increasing obfuscation, greater time delays between certain algorithmic updates, algorithmic features built explicitly with the goal of frustrating people, greater brand bias, and more outrageous selective enforcement of the guidelines.

Those who were hit by either Panda or Penguin in some cases took a year or more to recover. Far more common is no recovery, ever. How long, and how much, do you invest in a dying project when the recovery timeline is unknown?

You Don’t Get to Fascism Without 2-Tier Enforcement

While success in and of itself may make one a “spammer” to the biased eyes of a search engineer (especially if you are not VC funded nor part of a large corporation), many who are considered “spammers” self-regulate in a way that makes them far more conservative than the alleged “clean” sites.

Pretend you are Ask.com and watch yourself get slaughtered without warning.

Build a big brand & you will have advanced notification & free customer support inside the GooglePlex:

In my experience with large brand penalties, (ie, LARGE global brands) Google have reached out in advance of the ban every single time. – Martin Macdonald

Launching a Viral Linkspam Sitemap Campaign

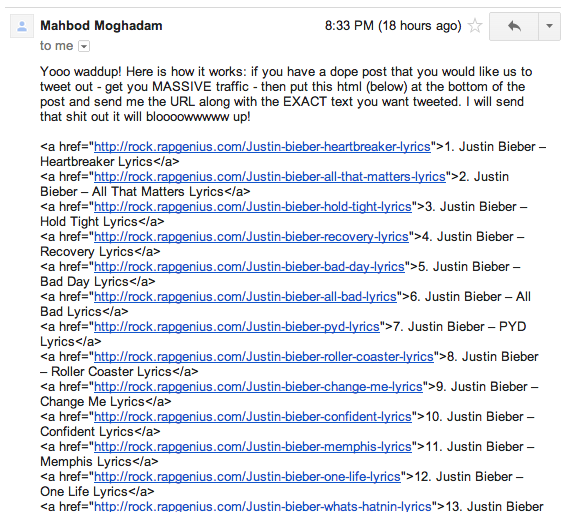

When RapGenius was penalized, the reason they were penalized is they were broadly and openly and publicly soliciting to promote bloggers who would dump a list of keyword rich deeplinks into their blog posts. They were basically turning boatloads of blogs into mini-sitemaps for popular new song albums.

Remember reading dozens (hundreds?) of blog posts last year about how guest posts are spam & Google should kill them? Well these posts from RapGenius were like a guest post on steroids. The post “buyer” didn’t have to pay a single cent for the content, didn’t care at all about relevancy, AND a sitemap full of keyword rich deep linking spam was included in EACH AND EVERY post.

Most “spammers” would never attempt such a campaign because they would view it as being far too spammy. They would have a zero percent chance of recovery as Google effectively deletes their site from the web.

And while RG is quick to distance itself from scraper sites, for almost the entirety of their history virtually none of the lyrics posted on their site were even licensed.

In the past I’ve mentioned that Google is known to time the news cycle. It comes as no surprise that on a Saturday, barely a week after the penalty, Google restored RapGenius’s rankings.

How to Gain Over 400% More Links, While Allegedly Losing

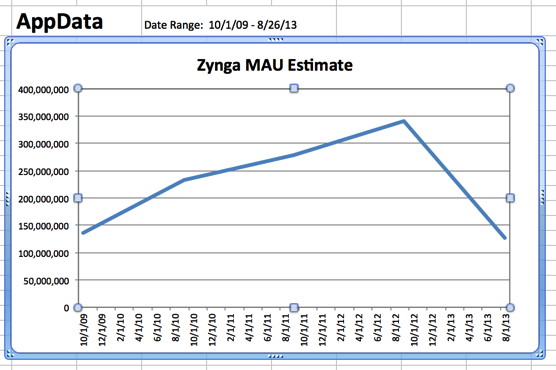

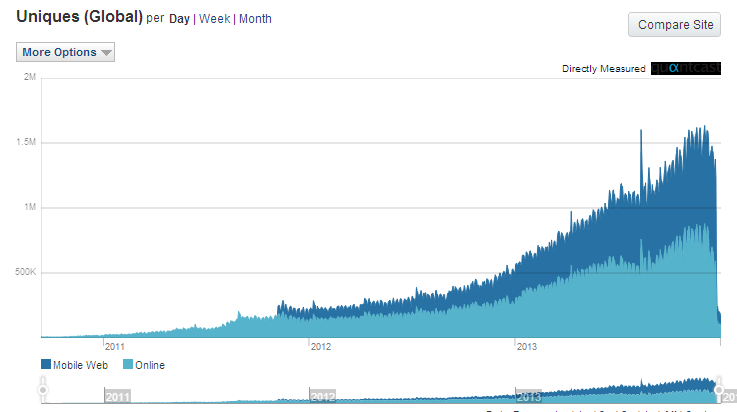

While the following graph may look scary in isolation, if you know the penalty is only a week or two then there’s virtually no downside.

Since being penalized, RapGenius has gained links from over 1,000* domains:

- December 25th: 129

- December 26th: 85

- December 27th: 87

- December 28th: 54

- December 29th: 61

- December 30th: 105

- December 31st: 182

- January 1st: 142

- January 2nd: 112

- January 3rd: 122

These add up to 1,079, and RapGenius has built only 11,930 unique linking domains in its lifetime. They grew about 10% in 10 days!
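The arithmetic behind that claim is easy to check. Here is a minimal Python sketch using the daily Ahrefs counts listed above; it assumes the 11,930 lifetime total already includes the 1,079 new domains, so growth is measured against the pre-penalty base.

```python
# Daily counts of new referring domains, December 25th to January 3rd (Ahrefs, as listed above).
daily_new_domains = [129, 85, 87, 54, 61, 105, 182, 142, 112, 122]
lifetime_domains = 11_930  # assumption: this total already includes the new domains

gained = sum(daily_new_domains)     # 1,079
before = lifetime_domains - gained  # linking domains before the penalty: 10,851
growth = gained / before            # growth relative to the pre-penalty base

print(f"gained {gained:,} domains, up {growth:.1%} in {len(daily_new_domains)} days")
# prints: gained 1,079 domains, up 9.9% in 10 days
```

Measured against the full 11,930 instead, the new domains are about 9.0% of the lifetime total, so “about 10%” holds either way.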

On every single day the number of new referring domains VASTLY exceeded the number of referring domains that disappeared. And many of these new referring domains are the mainstream media and tech press sites, which are both vastly over-represented in importance/authority on the link graph. They not only gained far more links than they lost, but they also gained far higher quality links that will be nearly impossible for their (less spammy) competitors to duplicate.

They not only got links, but the press coverage acted as a branded advertising campaign for RapGenius.

Here are some quotes from RapGenius on their quick recovery:

- “we owe a big thanks to Google for being fair and transparent and allowing us back onto their results pages” <– Not the least bit true. RapGenius was not treated fairly; rather, they were given a free ride compared to the death sentence hundreds of thousands of small businesses have been handed over the past couple of years.

- “On guest posts, we appended lists of song links (often tracklists of popular new albums) that were sometimes completely unrelated to the music that was the subject of the post.” <– and yet others are afraid of writing relevant, on-topic posts due to Google’s ramped-up fearmongering campaigns

- “we compiled a list of 100 “potentially problematic domains”” <– so their initial list of domains to inspect was less than 10% the number of links they gained while being penalized

- “Generally Google doesn’t hold you responsible for unnatural inbound links outside of your control” <– another lie

- “of the 286 potentially problematic URLs that we manually identified, 217 (more than 75 percent!) have already had all unnatural links purged.” <– even the “all in” removal of pages was less than 25% of the number of unique linking domains generated during the penalty period

And Google allowed the above bullshit during a period when they were sending out messages telling other people WHO DID THINGS FAR LESS EGREGIOUS that they are required to remove more links & Google won’t even look at their review requests for at least a couple weeks – A TIME PERIOD GREATER THAN THE ENTIRE TIME RAPGENIUS WAS PENALIZED FOR.

Failed reconsideration requests are now coming with this email that tells site owners they must remove more links: pic.twitter.com/tiyXtPvY32 (Marie Haynes, @Marie_Haynes, January 2, 2014)

In Conclusion…

If you tell people what works and why you are a spammer with no morals. But if you are VC funded, Matt Cutts has made it clear that you should spam the crap out of Google. Just make sure you hire a PR firm to trump up press coverage of the “unexpected” event & then have a faux apology saved in advance. So long as you lie to others and spread Google’s propaganda you are behaving in an ethical white hat manner.

Google & @mattcutts didn’t ACTUALLY care about Rap Genius’ link scheme, they just didn’t want to miss a propaganda opportunity. (Ben Cook, @Skitzzo, January 4, 2014)

Notes

* These stats are from Ahrefs. A few of these links may have been in place before the penalty and only recently crawled. However, it is also worth mentioning that all third-party link databases are limited in size and refresh rate by how much they can afford to spend on crawling, so there are likely hundreds more links which Ahrefs has not yet crawled. One should also note that the story is still ongoing and they keep generating more links every day. By the time the story is done spreading they are likely to see roughly a 30% growth in unique linking domains in about 6 weeks.

Gray Hat Search Engineering

Almost anyone in internet marketing who has spent a couple of months in the game has seen some “shocking” case study where changing the color of a button increased sales 183% or such. In many cases such gains only happen when the original site had no focus on conversion at all.

Google, on the other hand, has billions of daily searches and is constantly testing ways to increase yield:

The company was considering adding another sponsored link to its search results, and they were going to do a 30-day A/B test to see what the resulting change would be. As it turns out, the change brought massive returns. Advertising revenues from those users who saw more ads doubled in the first 30 days.

…

By the end of the second month, 80 percent of the people in the cohort that was being served an extra ad had started using search engines other than Google as their primary search engine.

One of the reasons traditional media outlets struggle with the web is the perception that ads and content must be separated. When they had regional monopolies they could make large demands to advertisers – sort of like how Google may increase branded CPCs on AdWords by 500% if you add sitelinks. You not only pay more for clicks that you were getting for free, but you also pay more for the other paid clicks you were getting cheaper in the past.

That’s how monopolies work – according to Eric Schmidt they are immune from market forces.

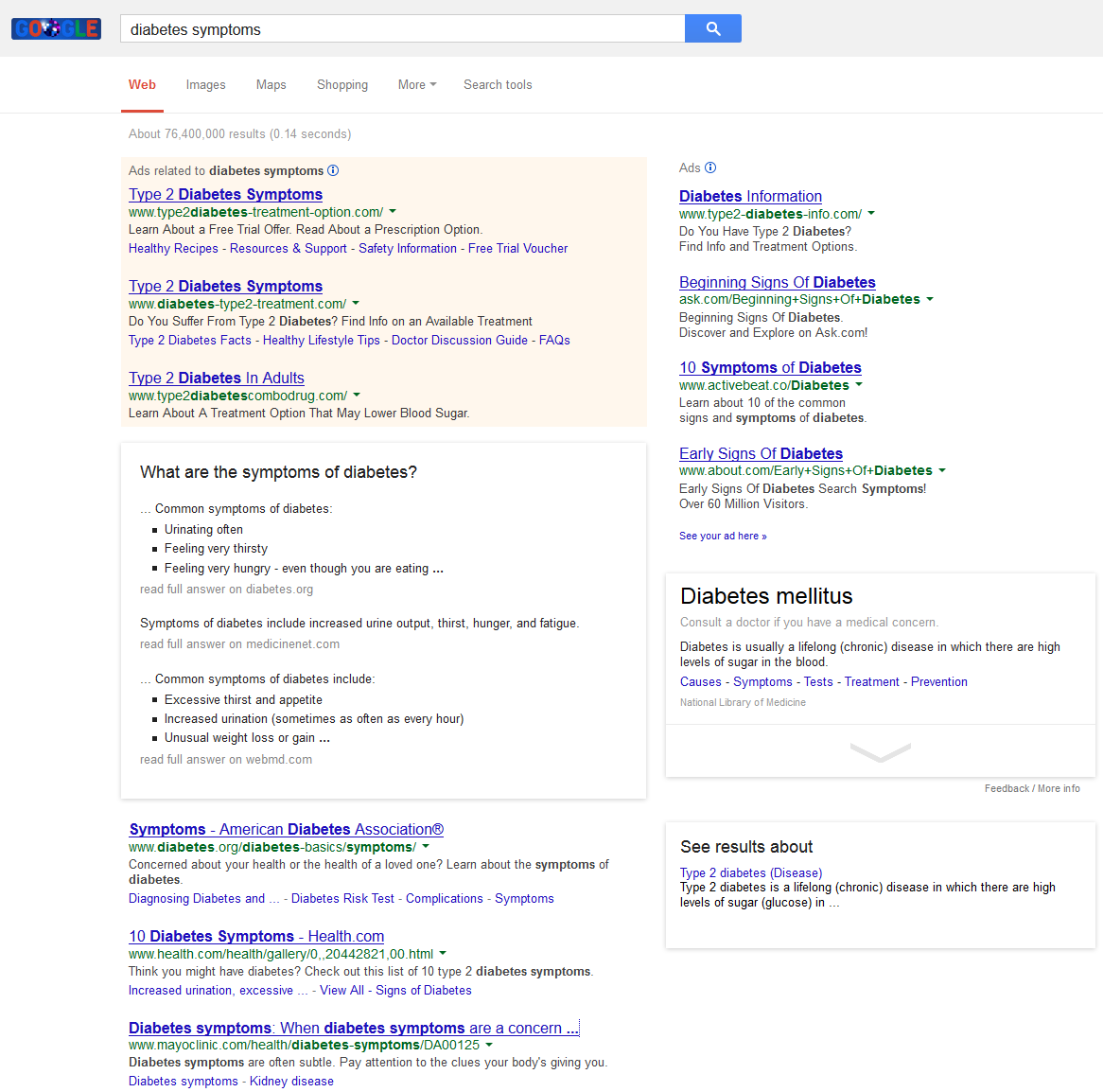

Search itself is the original “native ad.” The blend confuses many searchers as the background colors fade into white.

Google tests colors & can control the flow of traffic based not only on result displacement, but also the link colors.

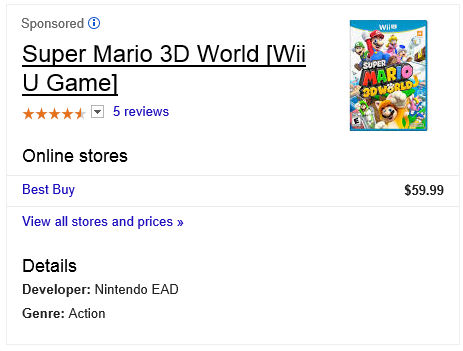

It was reported last month that Google tested adding ads to the knowledge graph. The advertisement link is blue, while the ad disclosure is to the far right out of view & gray.

I was searching for a video game yesterday & noticed that now the entire Knowledge Graph unit itself is becoming an ad unit. Once again, gray disclosure & blue ad links.

Where Google gets paid for the link, the link is blue.

Where Google scrapes third party content & shows excerpts, the link is gray.

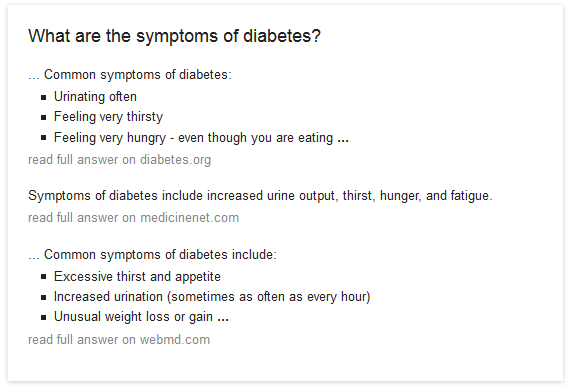

The primary goal of such a knowledge block is result displacement – shifting more clicks to the ads and away from the organic results.

When those blocks appear in the search results, even when Google manages to rank the Mayo Clinic highly, it’s below the fold.

What’s so bad about this practice in health?

- Context Matters: Many issues have overlapping symptoms where a quick glance at a few out-of-context symptoms causes a person to misdiagnose themselves. Flu-like symptoms from a few months ago turned out to be an indication of a kidney stone. That level of nuance will *never* be in the knowledge graph. Google’s remote rater documents discuss your money or your life (YMYL) topics & talk up the importance of knowing exactly who is behind content, but when they use gray font on the source link for their scrape job they are doing just the opposite.

- Hidden Costs: Many of the heavily advertised solutions appearing above the knowledge graph have hidden costs yet to be discovered. You can’t find a pharmaceutical company worth tens of billions of dollars that hasn’t pleaded guilty to numerous felonies associated with deceptive marketing and/or massaging research.

- Artificially Driving Up Prices: in-patent drugs often cost 100x as much as the associated generic drugs, so the affordable solutions are priced out of the ad auctions, where the price of a click can vastly exceed the profit from selling a generic prescription drug.

Where’s the business model for publishers when they have real editorial cost & must fact check and regularly update their content, their content is good enough to be featured front & center on Google, but attribution is nearly invisible (and thus traffic flow is cut off)? As the knowledge graph expands, what does that publishing business model look like in the future?

Does the knowledge graph eventually contain sponsored self-assessment medical quizzes? How far does this cancer spread?

Where do you place your chips?

Google believes it can ultimately fulfil people’s data needs by sending results directly to microchips implanted into its users’ brains.

Quickly Reversing the Fat Webmaster Curse

Long story short, -38 pounds in about 2 months or so. Felt great the entire time and felt way more focused day to day. Maybe you don’t have a lot of weight to lose but this whole approach can significantly help you cognitively.

In fact, the diet piece was originally formed for cognitive enhancements rather than weight loss.

Before I get into this post I just want to explicitly state that I am not a doctor, medical professional, medical researcher, or any type of medical/health anything. This is not advice, I am just sharing my experience.

You should consult a healthcare professional before doing any of this stuff.

Unhealthy Work Habits

The work habits associated with being an online marketer lend themselves to packing on some weight. Many of us are in front of a screen for large chunks of the day while also being able to take breaks whenever and wherever we want.

Sometimes those two things add up to a rather sedentary lifestyle with poor eating habits (I’m leaving travel out at the moment but that doesn’t help either).

In addition to the mechanics of our jobs being an issue we also tend to work longer/odd hours because all we really need to get a chunk of our work done is a computer or simply access to the internet. If you take all of those things and add them into the large amount of opportunity that exists on the web you have the perfect recipe for unhealthy, stressful work habits.

These habits tend to carry over into offline areas as well. Think about the things we touch or access every day:

- Computers

- Tablets

- Smartphones

- Search Engines

- Online Tools

- Instant Messaging

- Social Networks

What do many of these have in common? Instant “something”. Instant communication, results, gratification, and on and on. This is what we live in every day. We expect and probably prefer fast, instant, and quick. With that mindset, who has time to cook a healthy meal 3x per day on a regular basis? Some do, for sure. However, much like the office work environment this environment can be one that translates into lots of unhealthy habits and poor health.

I got to the point where I was about 40 pounds overweight with poor physicals and lackluster lipid profiles (high cholesterol, blood pressure, etc). I tried many things, many times but what ultimately turned the corner for me were 3 different investments.

Investment #1 – Standup/Sitdown Desk

Sitting down all day is no bueno. I bought a really high quality standup desk with an electrical motor so I can periodically sit down for a few minutes in between longer periods of standing.

It has a nice, wide table component and is quite sturdy. It also allows for different height adjustments via a simple up and down control:

A couple of tips here:

- Wear comfy shoes

- Take periodic breaks (I do so hourly) to go walk around the house or office or yard

- I also like to look away from the screen every 20-30 minutes or so; sometimes I get eyestrain, but I bought these glasses from Gunnar and they’ve relieved those symptoms

Investment #2 – Treaddesk

The reason I didn’t buy the full-on treadmill desk is because I wanted a bigger desk with more options. I bought the Treaddesk, which is essentially the bottom part of the treadmill, and I move it around during the week based on my workflow needs:

They have packages available as well (see the above referenced link).

I have a second, much cheaper standup desk that I hacked together from IKEA:

This desk acts as a holder for my coffee stuff but also allows me to put my laptop on it (which is paired with an external keyboard and trackpad) in case I want to do some lighter work (I have a hard time doing deeper work when doing the treadmill part while working).

I move the Treaddesk back and forth sometimes, but mostly it stays with this IKEA desk. If I have a week where the work is not as deeply analytical and more administrative then I’ll walk at a lower speed on the main computer for a longer period of time.

I tend to walk about 5-7 miles a day on this thing, usually in a block of time where I do that lighter-type work (Quickbooks, cobbling reports together, email triage, very light research/writing, reading, and so on).

Investment #3 – Bulletproof Coffee and the Bulletproof Diet

I’m a big fan of Joe Rogan in general, and I enjoy his podcast. I heard about Dave Asprey on the JRE podcast so I eventually ended up on his site, bulletproofexec.com. I purchased some private coaching with the relevant products and I was off to the races.

I did my own research on some of the stuff and came away confident that “good” fat had been erroneously hated on for years. I highly encourage you to conduct your own research based on your own personal situation, again this is not advice.

I really wanted to drop about 50 pounds so I went all in with Bulletproof Intermittent Fasting. A few quick points:

- I felt great the entire time

- In rare moments where I was hungry at night I just had a tablespoon of organic honey

- I certainly felt a cognitive benefit

- I was never hungry

- I was much more patient with things

- I felt way more focused

So yeah, butter in the coffee and a mostly meat/veggie diet. I cheated from time to time, certainly over the holiday. I lost 38 pounds in slightly over 60 days. Here’s a before and after:

Fat Eric

Not So Fat Eric

I kept this post kind of short and to the point because my desire is not to argue or fight about whether carbs are good or bad, whether fat is good or bad, whether X is right, or whether Y is wrong. This is what worked for me and I was amazed by it, totally amazed by the outcome.

I also do things like cycling and martial arts but I’ve been doing those for a while, along with running, and while I’ve lost weight I’ve never had it melt away like this.

I’ve stopped the fasting portion and none of the weight has piled back on. Lipid tests have been very positive as well, best in years.

Even if you don’t have a ton of weight to lose, seriously think about the standup desk and treadmill.

Happy New Year!

My Must Have Tools of 2014

There are a lot of tools in the SEO space (sorry, couldn’t resist :D) and over the years we’ve seen tools fall into 2 broad categories. Tools that aim to do just about everything and tools that focus on one discipline of online marketing.

As we contin…

Should Venture Backed Startups Engage in Spammy SEO?

Here’s a recent video of the founders of RapGenius talking at TechCrunch disrupt.

Oops, wrong video. Here’s the right one. Same difference.

Recently a thread on Hacker News highlighted a blog post which pointed out how RapGenius was engaging in reciprocal promotional arrangements where they would promote blogs on their Facebook or Twitter accounts if those bloggers would post a laundry list of keyword rich deeplinks to RapGenius.

Matt Cutts quickly chimed in on Hacker News “we’re investigating this now.”

A friend of mine and I were chatting yesterday about what would happen. My prediction was that absolutely nothing would happen to RapGenius, they would issue a faux apology, they would put no effort into cleaning up the existing links, and the apology alone would be sufficient evidence of good faith that the issue dies there.

Today RapGenius published a mea culpa in which they ultimately defended their own spam by complaining about how spammy other lyrics websites are. The self-serving jackasses went so far as to include this in their post: “With limited tools (Open Site Explorer), we found some suspicious backlinks to some of our competitors”.

It’s one thing to complain in private about dealing with a frustrating area, but it’s another thing to publicly throw your direct competitors under the bus with a table of link types and paint them as black hat spammers.

Google can’t afford to penalize Rap Genius, because if they do, Google Ventures will lose deal flow on the startups Google co-invests in.

In the past, some of Google’s other investments were in companies that were pretty overtly spamming. RetailMeNot held multiple giveaways where, if you embedded a spammy sidebar set of deeplinks to their various pages, they gave you a free t-shirt:

Google’s behavior on such arrangements has usually been to hit the smaller players while looking the other way on the bigger site on the other end of the transaction.

That free t-shirt for links post was from 2010, the same year that Google invested in RetailMeNot. They ran those promotions multiple times, and for long enough that they ran out of t-shirts! Now that RTM is a publicly traded billion-dollar company which Google already endorsed by investing in it, there’s a zero percent chance of them getting penalized.

To recap, if you are VC-backed you can spam away, wait until you are outed, and, when outed, reply with a combined “we didn’t know” and “our competitors are spammers” deflection.

For the sake of clarity, let’s compare that string of events (spam, warning but no penalty, no effort needed to clean up, insincere mea culpa) to how websites are treated when not VC-backed. For smaller sites it is “shoot on sight” first and ask questions later, perhaps coupled with a friendly recommendation to start over.

Here’s a post from today highlighting a quote from Google’s John Mueller:

My personal & direct recommendation here would be to treat this site as a learning experience from a technical point of view, and then to find something that you’re absolutely passionate & knowledgeable about and create a website for that instead.

Growth hack inbound content marketing, but just don’t call it SEO.

Growth hacking = using 2005-era spam tactics. http://t.co/5ISCPmMEkp cc @samfbiddle @nitashatiku

— Max Woolf (@minimaxir) December 23, 2013

What’s worse is that, with the new fearmongering disavow promotion, not only are some folks being penalized for the efforts of others, but some are being penalized for links that were in place BEFORE Google even launched as a company.

Google wants me to disavow links that existed back when backrub was foreplay and not an algo. Hubris much?

— Cygnus SEO (@CygnusSEO) December 21, 2013

Given that money allegedly shouldn’t impact rankings, it’s sad to note that, as everything that is effective gets labeled as spam, capital and connections are the key SEO innovations in the current Google ecosystem.

Beware Of SEO Truthiness

When SEO started, many people routinely used black-box testing to try and figure out which pages the search engines rewarded.

Black box testing is terminology used in IT. It’s a style of testing that doesn’t assume knowledge of the internal workings of a machine or computer program. Rather, you can only test how the system responds to inputs.

So, for many years, SEO was about trying things out and watching how the search engine responded. If rankings went up, SEOs assumed correlation meant causation, so they did a lot more of whatever it was they thought was responsible for the boost. If the trick was repeatable, they could draw some firmer conclusions about causation, at least until the search engine introduced some new algorithmic code and sent everyone back to their black-box testing again.

Well, it sent some people back to testing. Some SEOs don’t do much, if any, testing of their own, and so rely on the strategies articulated by other people. As a result, the SEO echo chamber can be a pretty misleading place, as “truthiness”, and a lot of false information, gets repeated far and wide until it’s considered gospel. One example of truthiness is that paid placement will hurt you. Well, it may, but not having it may hurt you more, because it all really... depends.

Another problem is that SEO testing can seldom be conclusive, because you can’t be sure of the state of the thing you’re testing. The thing you’re testing may not be constant. For example, you throw up some more links and your rankings rise, but the rise could be due to other factors, such as a new engagement algorithm that Google implemented in the middle of your testing without you knowing about it.

It used to be a lot easier to conduct this testing. Updates were periodic, so between updates you could reasonably assume the algorithms were static, and cause and effect were more obvious than they are today. Danny Sullivan gave a good overview of search history at Moz earlier in the year:

That history shows why SEO testing is getting harder. There are a lot more variables to isolate than there used to be. The search engines have also been clever. A good way to thwart SEO black-box testing is to keep moving the target: continuously roll out code changes and don’t tell people you’re doing it. Or send people on a wild goose chase by arm-waving about a subtle code change made over here, when the real change has been made over there.

That’s the state of play in 2013.

However... (Ranting Time :)

Some SEO punditry is bordering on the ridiculous!

I’m not going to link to one particular article I’ve seen recently, as, ironically, that would mean rewarding them for spreading FUD. Also, calling out people isn’t really the point. Suffice to say, the advice was about specifics, such as how many links you can “safely” get from one type of site, that sort of thing….

The problem comes when we can easily find evidence to the contrary. In this case, a quick look through the SERPs and you’ll find evidence of top ranking sites that have more than X links from Site Type Y, so this suggests….what? Perhaps these sites are being “unsafe”, whatever that means. A lot of SEO punditry is well meaning, and often a rewording of Google’s official recommendations, but can lead people up the garden path if evidence in the wild suggests otherwise.

If one term defined SEO in 2013, it is surely “link paranoia”.

What’s Happening In The Wild

When it comes to what actually works, there are few hard and fast rules regarding links. Look at the backlink profiles for top ranked sites across various categories and you’ll see one thing that is constant….

Nothing is constant.

Some sites have links coming from obviously automated campaigns, and it seemingly doesn’t affect their rankings. Other sites have credible link patterns, and rank nowhere. What counts? What doesn’t? What other factors are in play? We can only really get a better picture by asking questions.

Google allegedly took out a few major link networks over the weekend. Anglo Rank came in for special mention from Matt Cutts.

So, why are Google making a point of taking out link networks if link networks don’t work? Well, it’s because link networks work. How do we know? Look at the backlink profiles in any SERP area where there is a lot of money to be made and the area isn’t overly corporate, i.e., not dominated by major brands, and it won’t be long before you spot aggressive link networks, and few “legitimate” links, in the backlink profiles.

Sure, you wouldn’t want aggressive link networks pointing at brand sites, as there are better approaches brand sites can take when it comes to digital marketing, but such evidence makes a mockery of the tips some people are freely handing out. Are such tips the result of conjecture, repeating Google’s recommendations, or actual testing in the wild? Either the link networks work, or they don’t help but don’t hurt rankings either, or these sites shouldn’t be ranking.

There’s a good reason some of those tips are free, I guess.

Risk Management

Really, it’s a question of risk.

Could these sites get hit eventually? Maybe. However, those using a “disposable domain” approach will do anything that works as far as linking goes, as their main risk is not being ranked. Being penalised is an occupational hazard, not game-over. These sites will continue so long as Google’s algorithmic treatment rewards them with higher ranking.

If your domain is crucial to your brand, then you might choose to stay away from SEO entirely, depending on how you define “SEO”. A lot of digital marketing isn’t really SEO in the traditional sense, i.e., optimizing hard against an algorithm in order to gain higher rankings; a lot of digital marketing is based on optimization for people, treating SEO as a side benefit. There’s nothing wrong with this, of course; it’s a great approach for many sites, and something we advocate. Most sites end up somewhere along that continuum, but no matter where you are on that scale, there’s always a marketing risk to be managed, with “non-performance” perhaps being a risk that is often glossed over.

So, if there’s a take-away, it’s this: check out what actually happens in the wild, and then evaluate your risk before emulating it. When pundits suggest a rule, check to see if you can spot times it appears to work, and perhaps more interestingly, when it doesn’t. It’s in those areas of personal inquiry and testing where gems of SEO insight are found.

SEO has always been a mix of art and science. You can test, but only so far. The art part is dealing with the unknown past the testing point. Performing that art well is to know how to pick truthiness from reality.

And that takes experience.

But mainly a little fact checking :)

SEO Discussions That Need to Die

Sometimes the SEO industry feels like one huge Groundhog Day. No matter how many times you discuss the same old topics with people, these issues keep popping back into blogs and social media streams with almost regular periodicity. And every time they do, only the authors are new; the arguments and counter-arguments are all the same.

Due to this sad situation, I have decided to make a short list of such issues/discussions. If it inspires even one of you to refrain from starting or engaging in such a debate, then it was worth writing.

So here are SEO’s most annoying discussion topics, in no particular order:

Blackhat vs. Whitehat

This topic has been chewed over so many times, yet people still jump into it with both feet, with a righteous feeling that their argument, and no one else’s, is going to change someone’s mind. This discussion becomes particularly tiresome when people start claiming the moral high ground because they use one approach over the other. Let’s face it once and for all: there are no generally moral (white) and generally immoral (black) SEO tactics.

This is where people usually pull out the argument about harming clients’ sites, an argument that is usually moot. Firstly, there is a heated debate about what is even considered whitehat and what blackhat. The definition of these two concepts is highly fluid and changes over time. One of the main reasons for this fluidity is Google moving the goal posts all the time. What was once considered a purely whitehat technique, highly recommended by all the SEOs (PR submissions, directories, guest posts, etc.), may as of tomorrow become “blackhat”, “immoral” and what not. Also, some people consider “blackhat” anything that dares not to adhere to Google Webmaster Guidelines, as if they were carved on stone tablets by some angry deity.

Just to illustrate how absurd this concept is, imagine some other company, eBay say, creates a list of rules, one of which is that anyone who wants to sell an item on its site is prohibited from also trying to sell it on Gumtree or Craigslist. How many of you would willingly reduce the number of people your product reaches because some other commercial entity is trying to prevent competition? If you are not making money off search, Google is, and vice versa.

It is not about morals, and it is not about criminal negligence toward your clients. It is about taking risks, and as long as you are being truthful with your clients and yourself, and aware of all the risks involved in undertaking this or some other activity, no one has the right to pontificate about the “morality” of a competing marketing strategy. If it is not for you, don’t do it, but you can’t both decide that the risk is too high for you and pseudo-criminalize those who are willing to take that risk.

The same goes for “blackhatters” pointing and laughing at “whitehatters”. Some people do not enjoy rebuilding their business every 2 million comment spam links. That is OK. Maybe they will not climb the ranks as fast as your sites do, but maybe when they get there, they will stay there longer? These are two different and completely legitimate strategies. In fact, every ecosystem has representatives of these two strategies: the “r strategy” prefers quantity over quality, while the “K strategy” puts more investment into a smaller number of offspring.

You don’t see elephants calling mice immoral, do you?

Rank Checking is Useless/Wrong/Misleading

This one has been going around for years and keeps rearing its ugly head every once in a while, particularly after Google forces another SaaS provider to give up part of its services, either because it checks rankings itself or because it buys ranking data from a third-party provider. Then we get all the holier-than-thou folks mounting their soapboxes and preaching fire and brimstone at SEOs who report rankings as the main or even only KPI. So firstly, again, just like with black vs. white hat, horses for courses. If you think your way of reporting to clients is the best, stick with it and preach it positively, as in “this is what I do and the clients like it”, but stop telling other people what to do!

More importantly, the vast majority of these arguments are based on a totally imaginary situation in which SEOs use rankings as their only or main KPI. In all of my 12 years in SEO, I have never seen any marketer worth their salt report “increase in rankings for 1000s of keywords”. As far back as 2002, I remember people writing reports to clients with a separate chapter for keywords that had been defined as optimization targets and for which the client’s site reached top rankings without any significant increase in traffic or conversions. Those keywords were then dropped from the marketing plan altogether.

It really isn’t a big leap to understand that ranking isn’t important if it doesn’t result in increased conversions in the end. I am not going to argue here why I do think reporting and monitoring rankings is important. The point is that if you need to make your argument against a straw man, you should probably rethink whether you have a good argument at all.

PageRank is Dead/it Doesn’t Matter

Another strawman argument. Show me a linkbuilder who today thinks that getting links based solely on toolbar PageRank is going to get them to rank, and I will show you a guy who has probably not engaged in active SEO since 2002. And no small amount of irony can be found in the fact that the same people who decry the use of PageRank, the closest thing to an actual Google ranking factor they can see, freely use proprietary metrics created by other marketing companies and treat them as a perfectly reliable proxy for esoteric concepts which even Google finds hard to define, such as relevance and authority. Furthermore, all other things being equal, show me the SEO who will pass on a PR6 link for the sake of a PR3 one.

Blogging on “How Does XXX Google Update Change Your SEO” – 5 Seconds After it is Announced

Matt hasn’t even turned off his video camera to switch his t-shirt for the next Webmaster Central video, and there are already dozens of blog posts discussing in the most intricate detail how the new algorithm update/penalty/infrastructure change/random-monochromatic-animal will impact everyone’s daily routine and how we should all run for the hills.

Best-case scenario, these prolific writers only know the name of the update, and they are already suggesting strategies on how to avoid being slapped or, even better, how to get out of the doghouse. This was painfully obvious in the early days of Panda, when people were writing up their “experiences” of recovering from the algorithm update even before the second update was rolled out, making any testimony of recovery, in the worst case, a lie or (given a massive benefit of the doubt) a misinterpretation of ranking changes (rank checking, anyone?).

Put down your feather and your ink bottle, skippy, wait for the dust to settle and, unless you have a human source who was involved in the development or implementation of the algorithm, just sit tight and observe for the first week or two. After that you can write up those observations and it will be considered legitimate, even interesting, reporting on the new algorithm, but anything earlier than that will paint you as a clueless pageview chaser, looking to ride the wave of interest with blog posts that often close with “we will probably not even know what the XXX update is all about until we give it some time to get implemented”. Captain Obvious to the rescue.

Adwords Can Help Your Organic Rankings

This one is like the mythological Hydra: you cut one head off, and two new ones spring up. This question has been answered so many times by so many people, both from within the search engines and from the SEO community, that if you are addressing it today, I suspect you are actually trying to avoid talking about something else and are using this topic as a smoke screen. Yes, I am looking at you, Google Webmaster Central videos. Is that *really* the most interesting question you found on your pile? What, no one asked about <not provided>, or about social signals, or about the role authorship plays in non-personalized rankings, or whether it flows through links, or a million other questions that are much more relevant, interesting and, more importantly, still unanswered?

Infographics/Directories/Commenting/Forum Profile Links Don’t Work

This is very similar to the blackhat/whitehat argument, and it is usually supported by a statement that looks something like “what, do you think that Google, with hundreds of PhDs, hasn’t already discounted that in their algorithm?”. This is a typical “argument from incredulity” by a person who glorifies postgraduate degrees as a litmus test of intelligence and ingenuity. My claim is that these people have neither looked at the backlink profiles of many sites in many competitive niches, nor do they know many people doing or holding a PhD. They greatly underrate the former and overrate the latter.

A link is a link is a link and the only difference is between link profiles and percentages that each type of link occupies in a specific link profile. Funnily enough, the same people who claim that X type of links don’t work are the same people who will ask for link removal from totally legitimate, authoritative sources who gave them a totally organic, earned link. Go figure.

“But Matt/John/Moultano/anyone-with-a-brother-in-law-who-has-once-visited-Mountain-View said…”

Hello. Did you order “not provided will be maximum 10% of your referral data”? Or did you have “I would be surprised if there was a PR update this year”? How about “You should never use nofollow on-site links that you don’t want crawled. But it won’t hurt you. Unless something.”?

People keep thinking that people at Google sit around all day long, thinking about how they can help SEOs do their job. How can you build your business based on advice given out by an entity that is actively trying to keep visitors from coming to your site? Can you imagine that happening in any other business environment? Can you imagine Nike’s marketing department going for a one-day training session at Adidas HQ to learn how to sell Nike sneakers better?

Repeat after me: THEY ARE NOT YOUR FRIENDS. Use your own head. Even better, use your own experience. Test. Believe your own eyes.

We Didn’t Need Keyword Data Anyway

This is my absolute favourite. People who, as of yesterday, were basing their reporting, link building, landing page optimization, ranking reports, conversion rate optimization and just about every other aspect of their online campaigns on referring keywords all of a sudden felt the need to tell the world how they never thought keywords were an important metric. That’s right, buster, we are so much better off flying blind, doing iteration upon iteration of derived data based on past trends, future trends, landing pages, third-party data, etc.

It is ok every once in a while to say “crap, Google has really shafted us with this one, this is seriously going to affect the way I track progress”. Nothing bad will happen if you do. You will not lose face over it. Yes there were other metrics that were ALSO useful for different aspects of SEO but it is not as if when driving a car and your brakes die on you, you say “pfffftt stopping is for losers anyway, who wants to stop the car when you can enjoy the ride, I never really used those brakes in the past anyway. What really matters in the car is that your headlights are working”.

Does this mean we can’t do SEO anymore? Of course not. Adaptability is one of the top required traits of an SEO and we will adapt to this situation as we did to all the others in the past. But don’t bullshit yourself and everyone else that 100% <not provided> didn’t hurt you.

Responses to SEO is Dead Stories

It is crystal clear why the “SEO is dead” stories themselves deserve to die a slow and painful death. I am talking here about the hordes of SEOs who rise to the occasion every freaking time some fifth-rate journalist decides to poke the SEO industry through the cage bars, and who set out to convince the journalist, nay, prove to them, that SEO is not only not dying but is alive and kicking and bigger than ever. And I am not innocent of this myself; I have also dignified this idiotic topic with a response (albeit a short one), but how many times can we rise to the same occasion and repeat the same points? What original angle can you give to this story after 16 years of responding to the same old claims? And if you can’t give an original angle, how in the world are you increasing our collective knowledge by rewarming and serving the same old dish that wasn’t very good the first time it was served? Don’t you have rankings to check instead?

There is No #10.

But that’s what everyone does, writes a “Top 10 ways…” article, where they will force the examples until they get to a linkbaity number. No one wants to read a “Top 13…” or a “Top 23…” article. This needs to die too. Write what you have to say. Not what you think will get most traction. Marketing is makeup, but the face needs to be pretty before you apply it. Unless you like putting lipstick on pigs.

Branko Rihtman has been optimizing sites for search engines since 2001 for clients and own web properties in a variety of competitive niches. Over that time, Branko realized the importance of properly done research and experimentation and started publishing findings and experiments at SEO Scientist, with some additional updates at @neyne. He currently consults a number of international clients, helping them improve their organic traffic and conversions while questioning old approaches to SEO and trying some new ones.

Value Based SEO Strategy

One approach to search marketing is to treat the search traffic as a side-effect of a digital marketing strategy. I’m sure Google would love SEOs to think this way, although possibly not when it comes to PPC! Even if you’re taking a more direct, rankings-driven approach, the engagement and relevancy scores that come from delivering what the customer values should serve you well, too.

In this article, we’ll look at a content strategy based on value based marketing. Many of these concepts may be familiar, but bundled together, they provide an alternative search provider model to one based on technical quick fixes and rank. If you want to broaden the value of your SEO offering beyond that first click, and get a few ideas on talking about value, then this post is for you.

In any case, the days of being able to rank well without providing value beyond the click are numbered. Search is becoming more about providing meaning to visitors and less about providing keyword relevance to search engines.

What Is Value Based Marketing?

Value based marketing is customer, as opposed to search engine, centric. In Values Based Marketing For Bottom Line Success, the authors focus on five areas:

- Discover and quantify your customers’ wants and needs

- Commit to the most important things that will impact your customers

- Create customer value that is meaningful and understandable

- Assess how you did at creating true customer value

- Improve your value package to keep your customers coming back

Customers compare your offer against those of competitors, and divide the benefits by the cost to arrive at value. Marketing determines and communicates that value.
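The comparison described above can be written as a simple ratio. This is a minimal sketch under stated assumptions: the symbols V, B and C and the example scores are hypothetical, not taken from the book, and real customers rarely put an explicit number on perceived benefit.

```latex
% Perceived value V: perceived benefits B divided by cost C (hypothetical notation)
V = \frac{B}{C}

% Hypothetical example: offer A (B = 80, C = 40) vs. offer B (B = 90, C = 60)
V_A = \frac{80}{40} = 2.0, \qquad V_B = \frac{90}{60} = 1.5
```

On these made-up numbers, offer A comes out ahead even though offer B delivers more total benefit, because the customer weighs benefit against cost.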

This is the step beyond keyword matching. When we use keyword matching, we’re trying to determine intent. We’re doing a little demographic breakdown. This next step is to find out what the customer values. If we give the customer what they value, they’re more likely to engage and less likely to click back.

What Does The Customer Value?

A key question of marketing is “which customers does this business serve”? Seems like an obvious question, but it can be difficult to answer. Does a gym serve people who want to get fit? Yes, but then all gyms do that, so how would they be differentiated?

Obviously, a gym serves people who live in a certain area. So, if our gym is in Manhattan, our customer becomes “someone who wants to get fit in Manhattan”. Perhaps our gym is upmarket and expensive. So, our customer becomes “people who want to get fit in Manhattan and be pampered and are prepared to pay more for it”. And so on, and so on. They’re really questions and statements about the value proposition as perceived by the customer, and then delivered by the business.

So, value based marketing is about delivering value to a customer. This syncs with Google’s proclaimed goal in search, which is to put users first by delivering results they deem to have value, and not just pages that match a keyword term. Keywords need to be seen in a wider context, and that context is pretty difficult to establish if you’re standing outside the search engine looking in, so thinking in terms of concepts related to the value proposition might be a good way to go.

Value Based SEO Strategy

The common SEO approach, for many years, has started with keywords. It should start with customers and the business.

The first question is “who is the target market?” The next is what that market values.

Relate what they value to the business. What is the value proposition of the business? Is it aligned? What would make a customer value this business offering over those of competitors? It might be price. It might be convenience. It’s probably a mix of various things, but be sure to nail down the specific value propositions.

Then think of some customer questions around these value propositions. What would be the likely customer objections to buying this product? What would be points that need clarifying? How does this offer differ from other similar offers? What is better about this product or service? What are the perceived problems in this industry? What are the perceived problems with this product or service? What is difficult or confusing about it? What could go wrong with it? What risks are involved? What aspects have turned off previous customers? What complaints did they make?

Make a list of such questions. These are your article topics.

You can glean this information by either interviewing customers or the business owner. Each of these questions, and accompanying answer, becomes an article topic on your site, although not necessarily in Q&A format. The idea is to create a list of topics as a basis for articles that address specific points, and objections, relating to the value proposition.

For example, buying SEO services is a risk. Customers want to know if the money they spend is going to give them a return. So, a valuable article might be a case study on how the company provided return on spend in the past, and the process by which it will achieve similar results in future. Another example might be a buyer concerned about the reliability of a make of car. A page dedicated to reliability comparisons, and another page outlining the customer care after-sale plan would provide value. Note how these articles aren’t keyword driven, but value driven.

Ever come across a FAQ that isn’t really a FAQ? Dreamed-up questions? They’re frustrating, and of little value if the information doesn’t directly relate to the value we seek. Information should be relevant and specific so when people land on the site, there’s more chance they will perceive value, at least in terms of addressing the questions already on their mind.

Compare this approach with generic copy around a keyword term. A page talking about “SEO” in response to the keyword term “SEO” might closely match a keyword term, so that’s a relevance match, but unless it’s tied into providing customers the value they seek, it’s probably not of much use. Finding relevance matches is no longer a problem for users. Finding value matches often is. Even if you’re keyword focused, adding these articles provides semantic variation that may capture keyword searches that don’t appear in keyword tools.

Keyword relevance was a strategy devised at a time when information was less readily available and search engines weren’t as powerful. Finding something relevant was more hit and miss than it is today. These days, there are likely thousands, if not millions, of pages that meet relevance criteria in terms of keyword matching, so the next step is to meet value criteria. A page that provides value is less likely to earn a click back and more likely to create engagement than one that is merely on-topic.

The Value Chain

Deliver value. Once people perceive value, then we have to deliver it. Marketing, and SEO in particular, used to be about getting people over the threshold. Today, businesses have to work harder to differentiate themselves and a sound way of doing this is to deliver on promises made.

So the value is in the experience. Why do we return to Amazon? It’s likely due to the end-to-end experience in terms of delivering value. Any online e-commerce store can deliver relevance. Where competition is fierce, Google is selective.

In the long term, delivering value should drive down the cost of marketing as the site is more likely to enjoy repeat custom. As Google pushes more and more results beneath the fold, the cost of acquisition is increasing, so we need to treat each click like gold.

Monitor value. Does the firm keep delivering value? To the same level? Because people talk. They talk on Twitter and Facebook and the rest. We want them talking in a good way, but even if they talk in a negative way, it can still be useful. Their complaints can be used as topics for articles. They can be used to monitor value, refine the offer and correct problems as they arise. Those social signals, whilst not a guaranteed ranking boost, are still signals. We need to adopt strategies whereby we listen to all the signals, so as to better understand our customers and provide more value. So long as Google is also trying to determine what users value, we can hopefully enjoy a search traffic boost as a welcome side-effect.

Not sounding like SEO? Well, it’s not optimizing for search engines, but for people. If Google is to provide value, then it needs to ensure results are not just relevant, but offer genuine value to end users. Do Google do this? In many cases, not yet, but all their rhetoric and technical changes suggest that providing value is at the ideological heart of what they do. So the search results will most likely, in time, reflect the value people seek, and not just relevance.

In technical terms, this provides some interesting further reading:

Today, signals such as keyword co-occurrence, user behavior, and previous searches do in fact inform context around search queries, which impact the SERP landscape. Note I didn’t say the signals “impact rankings,” even though rank changes can, in some cases, be involved. That’s because there’s a difference. Google can make a change to the SERP landscape to impact 90 percent of queries and not actually cause any noticeable impact on rankings.

The way to get the context right, and get positive user behaviour signals, and align with their previous searches, is to first understand what people value.

Creating an Experience for Your Product

In a recent post I talked about the benefits of productizing your business model along with some functional ways to achieve productization.

A product, in and of itself, is really only half of what you are selling to your clients. The other half of the equation is the “experience”.

It sounds a bit “fluffy”, but in my career as a service provider, and in my purchasing history as a consumer, the experience matters. I would even go so far as to say that in some very noticeable cases the experience can outweigh the product itself (to some extent, anyway).

These halves, the product and the experience, can cut both ways.

Sometimes a product is so good that the experience can be average, or even below average, and the provider will still do well. Other times the experience is so fantastic that an otherwise average or above-average product is elevated to what can be priced as a premium product or service.

Let’s get a few obvious variables out of the way first. It is understood that:

- Experience matters more to some people than others

- Experience matters more in certain industries than others

- The actual product matters more to some

- The actual product matters more in some industries

If we stipulate that the four scenarios mentioned above are true, which they are, it still doesn’t change the basic premise that you are probably leaving revenue and growth on the table if you settle on one side or the other.

While it’s true that you can be successful even if your product-to-experience ratio is like a seesaw heavily weighted to one side, it is also true that you would probably be more successful if you made each as good as it could be.

Defining Where Product Meets Experience

I’ll lay out a couple of examples here to help illustrate the point:

- The “Big Four” in the link research tools space: Ahrefs, Link Research Tools, Majestic, and Open Site Explorer

- The two better-known “tool/reporting suites” outside of much more expensive enterprise toolkits: Raven and Moz

In my experience, Ahrefs has been the best combination of product and experience, especially lately. Their dataset continues to grow and recent UI changes have made it even easier to use. Exports are super fast and I’ve had quick and useful interactions with their support staff. Perhaps it isn’t a coincidence that, among the groups of folks I interact with and follow online, Ahrefs comes up in conversation more often than not.

To me, Majestic and Link Research Tools are examples of where the product is really, really strong (copious amounts of data across many segments) but the UI/UX is not quite as good as the others. I realize some of this is subjective but in other comparisons online this seems to be a prevailing theme.

Open Site Explorer has a fantastic UI/UX, but the data can be a bit behind the others and getting data out (exporting) is a bit more of a chore than point, click, download. Over time, OSE seems to have had a rougher road than the other tools I mentioned when it comes to growing its data and the frequency of its updates.

In the case of the two more popular reporting and research suites, Moz and Raven, Raven has really caught up with (if not surpassed) Moz in terms of UI/UX. Raven pulls in data from multiple sources, including Moz, and has quite a few more features than Moz, which are also easier to get to and cross-reference.

Moz may not be interested in getting into some of the other pieces of the online marketing puzzle that Raven is into, but I think it’s still a valid comparison given the very similar basic purpose of each tool suite.

Assessing Your Current Position

When assessing or reassessing your products and offerings, a lot of it goes back to targeting the right market.

- Is the market big enough to warrant investment into a product?

- How many different segments of a given market do you need to appeal to?

- Where’s the balance between feature bloat (think Zoho CRM) versus “good enough” functionality with an eye towards an incredible UX (think Highrise CRM)?

If the market isn’t big enough and you have to go outside your initial target, how will that affect the balance between the functionality of your product and the experience for your users, customers, or clients?

If you are providing SEO services, your “functionality” might be how easy it is to understand the reports you provide and their relationship(s) to a client’s profitability or goals (or both). Your “experience” is likely a combination of things:

- The graphical presentation of your documents

- The language used in your reports and other interactions with the client

- The consistency of your “brand” across the web

- The consistency of your brand presentation (website, invoices, reports, etc.)

- Client ability to access reports and information quickly without having to ask you for it

- Consistency of your information delivery (are you always on time, late, or erratic with due dates, meetings, etc.?)

When you break down what you think is your “product” and “experience”, you’ll likely find that it is pretty simple to develop a plan to improve both, rather than repeating the vague “let’s do great things” company line that no one really understands but everyone nods along to.

Example of Experience in Action

In just about every Consumer Reports survey, Apple comes out on top for customer satisfaction. Apple, whether you like their products/“culture” or not, creates a fairly reliable, if expensive, end-to-end experience. This is doubly true if you live near an Apple store.

If you look at laptop failure rates, Apple is generally in the middle of the pack. There are other things that go into the Apple experience (using the OS and such), but part of the reason people are willing to pay that premium is their support options and ability to fix bugs fairly quickly.

To tie this into our industry, I think Moz is a good parallel example here. Their design is generally heralded as being quite pleasant and it’s pretty easy to use their tools; there isn’t a steep learning curve to using most of their products.

I think their product presentation is top notch, even though I generally prefer some of their competitors’ products. They are pretty active on social media and their support is generally very good.

So, in the case of Moz, it’s pretty clear that people are willing to pay for less robust data, or at least fewer features and options, partly (or wholly) due to their product experience and product presentation.

Redesigning Your Experience

You might already have some of these, but it’s worthwhile to revisit a very basic style guide (excluding audience development):

- Consistent logo and colors

- Fonts

- Vocabulary and Language Style (the tone of your brand, is it My Brand or MyBrand or myBrand, etc)

Some Additional Resources

Here are some visual/text-based resources that I have found helpful during my own redefining process:

- How to quantify user experience

- Krug’s Rocket Surgery Made Easy

- Don’t Make Me Think Revisited

- Lynda.com: Developing a Style Guide

- A free course from the Wharton School at the University of Pennsylvania on branding and marketing

These are some of the tools you might want to use to help in this process:

- Running copy through Word for readability scores: Office 2013

- A Windows tool that can help improve your writing: StyleWriter

- A Mac tool to help with graphics and charts: OmniGraffle

- A Windows tool to help with charts and graphics: SmartDraw

- A cloud-based presentation tool that helps the less artistically inclined (like me): Prezi

- Online proposal software: Proposable

- A text expander for Mac that comes in handy for consistent “messaging”: TextExpander

- A Windows alternative that syncs with TextExpander: Breevy

Historical Revisionism

A stopped clock is right two times a day.

There’s some amusing historical revisionism going on in the world of SEO punditry right now, which got me thinking about the history of SEO. I’d like to talk about some common themes of this historical revision, which go along the lines of “what I predicted all those years ago came true, what a visionary I am!” No naming names, as I don’t mean this to be anything personal (the same theme has popped up in a number of places); I’m just making some observations :)

See if you agree…

Divided We Fall

The SEO world has never been united. There are no industry standards and qualifications like you’d find for doctors, lawyers or builders. If you say you’re an SEO, then you’re an SEO.

Part of the reason for the lack of industry standards is that the search engines never came to the party. Sure, they talked at conferences, and still do. They offered webmasters helpful guidelines. They participated in search engine discussion forums. But this was mainly to do with risk management. Keep your friends close, and your enemies closer.

In all these years, you won’t find one example of a representative from a major search engine saying “Hey, let’s all get together and form an SEO standard. It will help promote and legitimize the industry!”

No, it has always been decrees from on high. “Don’t do this, don’t do that, and here are some things we’d like you to do”. Webmasters don’t get a say in it. They either do what the search engines say, or they go against them, but make no mistake, there was never any partnership, and the search engines didn’t seek one.

This didn’t stop some SEOs seeing it as a form of quasi-partnership, however.

Hey Partner

Some SEOs chose to align themselves with search engines and do their bidding. If the search engine reps said “do this”, they did it. If the search engines said “don’t do this”, they’d wrap themselves up in convoluted rhetorical knots pretending not to do it. This still goes on, of course.

In the early 2000s, it turned, curiously, into a question of morality. There was “Ethical SEO”, although quite what it had to do with ethics remains unclear. Really, it was another way of saying “someone who follows the SEO guidelines”, presuming that whatever the search engines decree must be ethical, objectively good and have nothing to do with self-interest. It’s strange how people kid themselves, sometimes.

What was even funnier was that the search engine guidelines were kept deliberately vague and open to interpretation, which, of course, led to a lot of heated debate. Some people were “good” and some people were “bad”, even though the distinction was never clear. Sometimes it came down to where on the page someone puts a link. Or how many times someone repeats a keyword. And in what color.

It got funnier still when the search engines moved the goal posts, as they are prone to do. What was previously good – using ten keywords per page – suddenly became the height of evil, but using three was “good” and so all the arguments about who was good and who wasn’t could start afresh. It was the pot calling the kettle black, and I’m sure the search engines delighted in having the enemy warring amongst themselves over such trivial concerns. As far as the search engines were concerned, none of them were desirable, unless they became paying customers, or led paying customers to their door. Or perhaps that curious Google+ business.

It’s hard to keep up, sometimes.

Playing By The Rules

There’s nothing wrong with playing by the rules. It would have been nice to think there was a partnership, and so long as you followed the guidelines, high rankings would naturally follow, the bad actors would be relegated, and everyone would be happy.

But this has always been a fiction. A distortion of the environment SEOs were actually operating in.

Jason Calacanis, never one to miss an opportunity for controversy, fired some heat seekers at Google during his WebmasterWorld keynote address recently…