Google Mobilepocalypse Update

A day after the alleged major update, I thought it would make sense to highlight where we are at in the cycle.

Yesterday Google suggested their fear messaging caused 4.7% of webmasters to move over to mobile friendly design since the update was origin…

Consensus Bias

The Truth About Subjective Truths

A few months ago there was an article in New Scientist about Google’s research paper on potentially ranking sites based on how factual their content is. The idea is generally and genuinely absurd.

- You can’t copyright facts, which means that if this were a primary ranking signal & people focused on it, they would be optimizing their sites to be scraped-n-displaced into the knowledge graph. Some people may sugarcoat the knowledge graph and rich answers as an opportunity, but it is Google outsourcing the cost of editorial labor while reaping the rewards.

.@mattcutts I think I have spotted one, Matt. Note the similarities in the content text: pic.twitter.com/uHux3rK57f— dan barker (@danbarker) February 27, 2014

- If Google is going to scrape, displace & monetize data sets, then the only ways to really profit are:

  - focus on creating the types of content which can’t be easily scraped-n-displaced, or

  - create proprietary metrics of your own, such that if they scrape them (and don’t cheat by hiding the source), they are marketing you

- In some areas (especially religion and politics) certain facts are verboten & people prefer things which confirm their pre-existing beliefs. End-user usage data turns comfortable false facts into a “relevancy” signal, and personalization reinforces it.

- In some areas well known “facts” are sponsored falsehoods. In other areas some things slip through the cracks.

- In some areas Google changes what is considered fact based on where you are located.

How Google keeps everyone happy pic.twitter.com/KmBBzpfzdf— Amazing Maps (@Amazing_Maps) March 31, 2015

- Those who have enough money can create their own facts. It might mean painting the perception of a landscape, hiring thousands of low-wage workers to manipulate public perception on key issues and new technologies, or using more sophisticated forms of social network analysis and manipulation to shape public perceptions.

- The previously mentioned links were governmental efforts. However, such strategies are more common in the commercial market. Consider how Google has sponsored academic conferences while explicitly telling the organizers to hide the sponsorship as part of their lobbying efforts.

- Then there is the blurry area where government and commerce fuse, like when Google put about a half-dozen home team players in key governmental positions during the FTC investigation of Google. Google claimed lobbying was disgusting until they experienced the ROI firsthand.

- In some areas “facts” are backward-looking views of the market which are framed, distorted & intentionally incomplete. There was a significant gap between internal voices and external messaging in the run-up to the recent financial crisis. Even large & generally trustworthy organizations have some serious skeletons in their closets.

@mattcutts I wonder, what sort of impact does http://t.co/vdg3ARGSz2 have on their E-A-T? expertise +1, authority +1, trustworthiness -_?— aaron wall (@aaronwall) April 6, 2015

- In other areas the inconvenient facts get washed away over time by money.

For a search engine to be driven primarily by groupthink (see unity100’s posts here) is the death of diversity.

Less Diversity, More Consolidation

The problem is rarely attributed to Google, but as ecosystem diversity has declined (and entire segments of the ecosystem have become unprofitable to serve), more people are writing things like: “The market for helping small businesses maintain a home online isn’t one with growing profits – or, for the most part, any profits. It’s one that’s heading for a bloody period of consolidation.”

As companies grow in power, that power gets monetized. If you can manipulate people without appearing to do so, you can make a lot of money.

If you don’t think Google wants to disrupt you out of a job, you’ve been asleep at the wheel for the past decade— Michael Gray (@graywolf) March 13, 2015

We Just Listen to the Data (Ish)

As Google sucks up more data, aggregates intent, and scrapes-n-displaces the ecosystem, they get air cover for some of their gray-area behaviors by claiming things are driven by the data & putting the user first.

Those “data” and altruism claims from Google recently fell flat on their face when the Wall Street Journal published a number of articles about a leaked FTC document.

- How Google Skewed Search Results

- Inside the U.S. Antitrust Probe of Google

- Key quotes from the document from the WSJ & more from Danny Sullivan

- The PDF document is located here.

That PDF has all sorts of goodies in it about things like blocking competition, signing a low-margin deal with AOL to keep monopoly market share (while also noting the general philosophy outside of a few key deals was to squeeze down on partners), scraping content and ratings from competing sites, Google force-inserting itself in certain verticals any time select competitors ranked in the organic result set, etc.

As damning as the above evidence is, more will soon be brought to light as the EU ramps up its formal statement of objections, since Google is less politically connected in Europe than they are in the United States:

“On Nov. 6, 2012, the night of Mr. Obama’s re-election, Mr. Schmidt was personally overseeing a voter-turnout software system for Mr. Obama. A few weeks later, Ms. Shelton and a senior antitrust lawyer at Google went to the White House to meet with one of Mr. Obama’s technology advisers. … By the end of the month, the FTC had decided not to file an antitrust lawsuit against the company, according to the agency’s internal emails.”

What is wild about the above leaked FTC document is that it goes to great lengths to show an anti-competitive pattern of conduct toward the larger players in the ecosystem. Even if you ignore the distasteful political aspects of the FTC non-decision, the other potential out was:

“The distinction between harm to competitors and harm to competition is an important one: according to the modern interpretation of antitrust law, even if a business hurts individual competitors, it isn’t seen as breaking antitrust law unless it has also hurt the competitive process—that is, that it has taken actions that, for instance, raised prices or reduced choices, over all, for consumers.” – Vauhini Vara

Part of the reason the data set was incomplete on that front was that, for the most part, only larger ecosystem players were consulted. Google engineers have gone on record stating they aim to break people’s spirits in a game of psychological warfare. If that doesn’t hinder consumer choice, what does?

@aaronwall rofl. Feed the dragon Honestly these G investigations need solid long term SEOs to testify as well as brands.— Rishi Lakhani (@rishil) April 2, 2015

When the EU published its statement of objections, Google’s response showed charts with the growth of Amazon and eBay as proof of a healthy ecosystem.

The market has been consolidated down into a few big winners which are still growing, but that in and of itself indicates neither a healthy nor a neutral overall ecosystem.

The long tail of smaller e-commerce sites which have been scrubbed from the search results is nowhere to be seen in such charts / graphs / metrics.

The other obvious “untruth” hidden in the above Google chart is that Google’s aggregate metrics cannot possibly include all product searches on Google.com. They only count some subset of them which click through a second vertical ad type, while ignoring Google’s broader impact via the combination of product listing ads (PLAs) along with text-based AdWords ads and the knowledge graph, or even the recently rolled out rich product answer results.

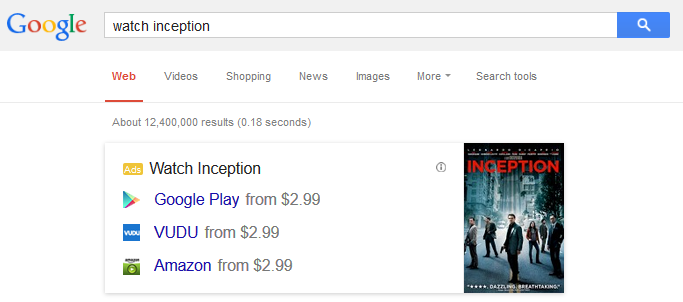

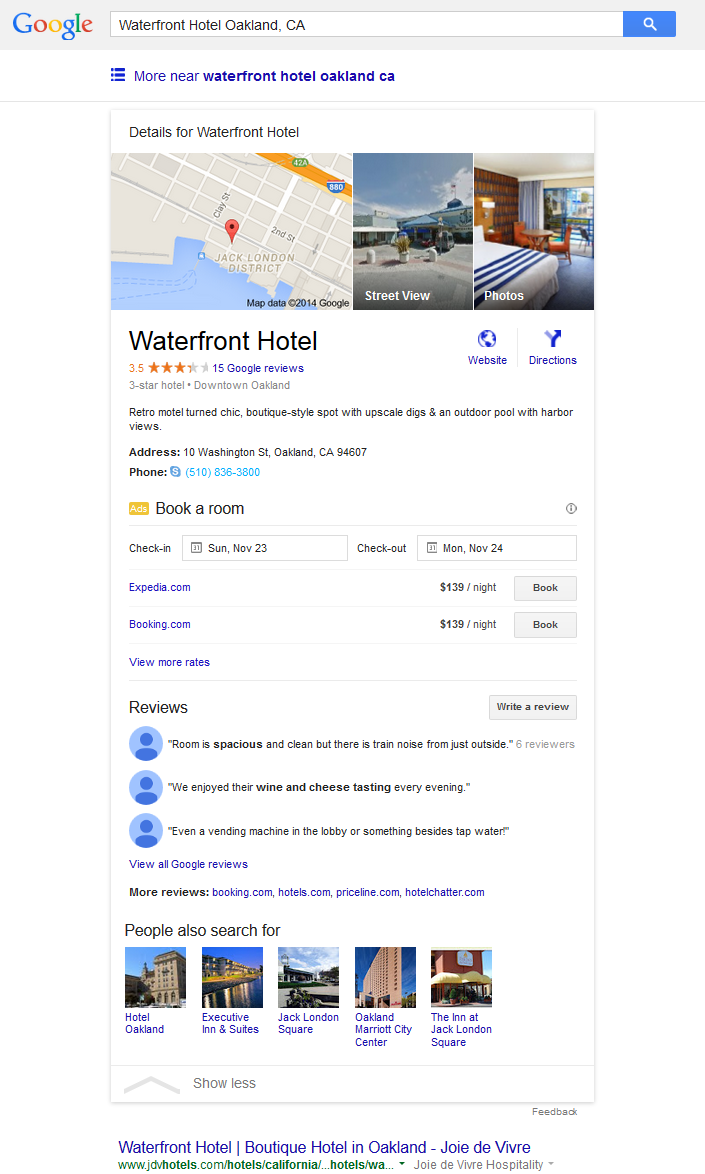

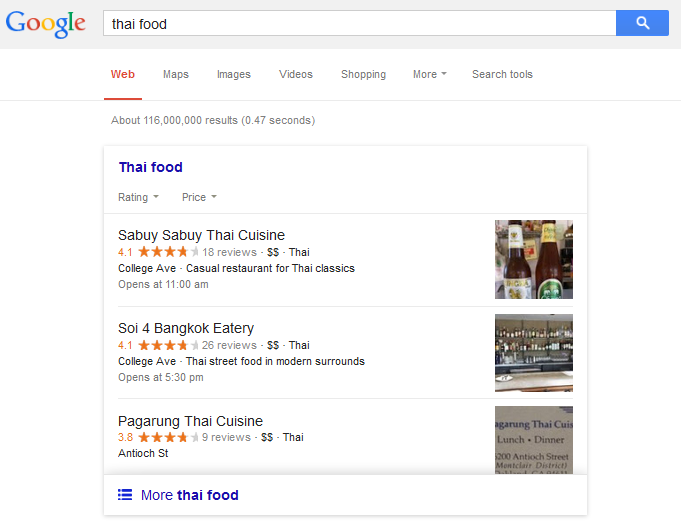

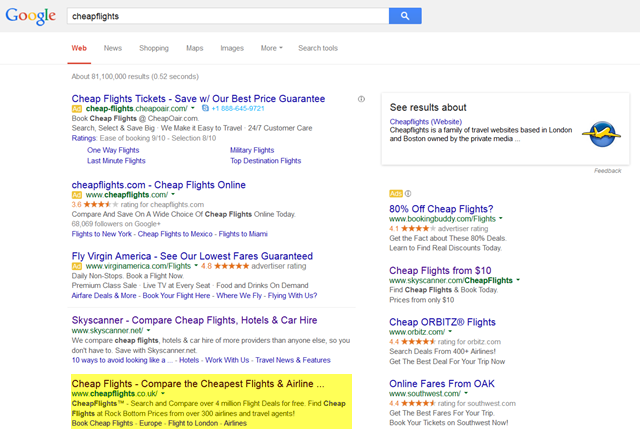

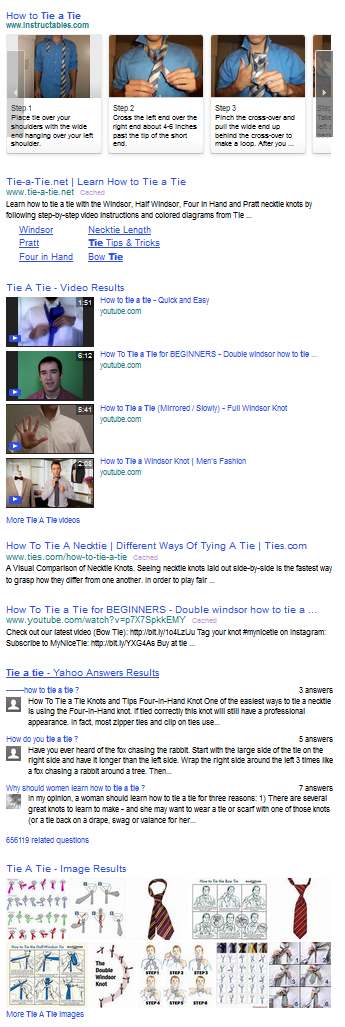

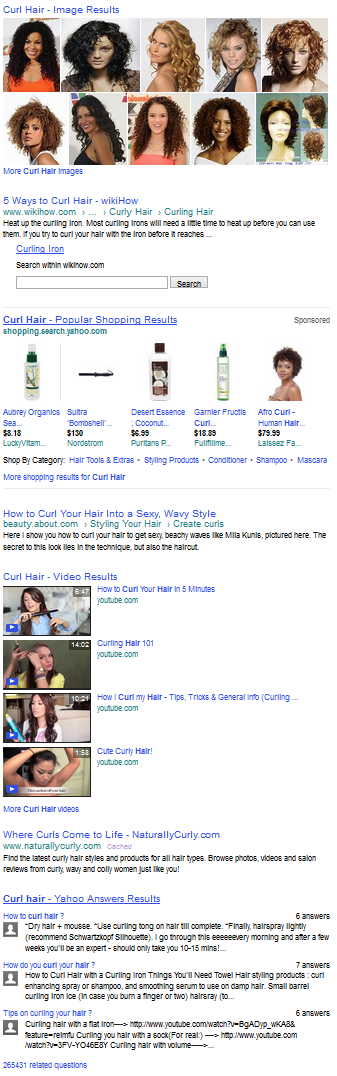

Who could look at the following search result (during antitrust competitive review, no less) and say “yeah, that looks totally reasonable”?

Google has allegedly spent the last couple of years removing “visual clutter” from the search results & yet they manage to produce SERPs looking like that – so long as the eye candy leads to clicks monetized directly by Google or other Google-hosted pages.

The Search Results Become a Closed App Store

Search was an integral piece of the web which (in the past) put small companies on a level playing field with larger players.

That it no longer is.

WOW. RT @aimclear: 89% of domains that ranked over the last 7 years are now invisible, #SEO extinction. SRSLY, @marcustober #SEJSummit— Jonah Stein (@Jonahstein) April 15, 2015

“What kind of a system do you have when existing, large players are given a head start and other advantages over insurgents? I don’t know. But I do know it’s not the Internet.” – Dave Pell

The above quote was about app stores, but it certainly parallels a rater system which enforces the broken window fallacy against smaller players while looking the other way on larger players, unless they are in a specific vertical Google itself decides to enter.

“That actually proves my point that they use Raters to rate search results. aka: it *is* operated manually in many (how high?) cases. There is a growing body of consensus that a major portion of Googles current “algo” consists of thousands of raters that score results for ranking purposes. The “algorithm” by machine, on the majority of results seen by a high percentage of people, is almost non-existent.” … “what is being implied by the FTC is that Googles criteria was: GoogleBot +10 all Yelp content (strip mine all Yelp reviews to build their database). GoogleSerps -10 all yelp content (downgrade them in the rankings and claim they aren’t showing serps in serps). That is anticompetitive criteria that was manually set.” – Brett Tabke

The remote rater guides were even more explicitly anti-competitive than what was detailed in the FTC report. For instance, they required hotel affiliate sites to be rated as spam even if they were helpful, for no reason other than their being affiliate sites.

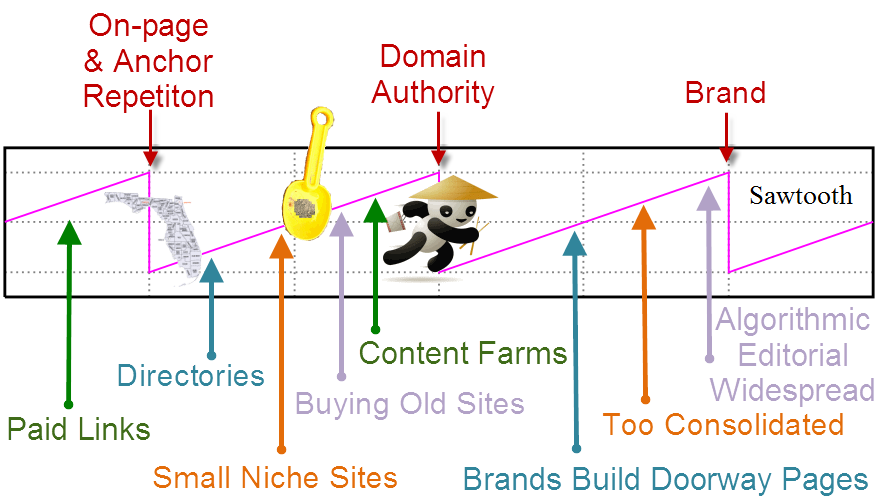

Is Brand the Answer?

About 3 years ago I wrote a blog post about how branding plays into SEO & why it might peak. As much as I have been accused of having a cynical view, the biggest problem with my post was that it was naively optimistic. I presumed Google’s consolidation of markets would end up leading Google to alter their ranking approach when they were unable to overcome the established consensus bias which was subsidizing their competitors. The problem with my presumption was that Google’s reliance on “data” was a chimera. When convenient (and profitable), data is discarded on an as-needed basis.

Or, put another way, the visual layout of the search result page trumps the underlying ranking algorithms.

Google has still heavily disintermediated brand value, but they did it via vertical search, larger AdWords ad units & allowing competitive bidding on trademark terms.

If Not Illegal, then Scraping is Certainly Morally Deplorable…

As Google scraped Yelp & TripAdvisor reviews & gave them an ultimatum, Google was also scraping Amazon sales rank data and using it to power Google Shopping product rankings.

Around this same time, Google pushed through a black PR smear job against Bing for committing a similar but lesser offense against Google on rare, made-up long-tail searches which were not used by the general public.

While Google was outright stealing third-party content and putting it front & center on core keyword searches, they had to use “about 100 ‘synthetic queries’—queries that you would never expect a user to type” to smear Bing, & even many of these queries did not show the alleged signal.

Here are some representative views of that incident:

- “We look forward to competing with genuinely new search algorithms out there—algorithms built on core innovation, and not on recycled search results from a competitor. So to all the users out there looking for the most authentic, relevant search results, we encourage you to come directly to Google. And to those who have asked what we want out of all this, the answer is simple: we’d like for this practice to stop.” – Google’s Amit Singhal

- “It’s cheating to me because we work incredibly hard and have done so for years but they just get there based on our hard work. I don’t know how else to call it but plain and simple cheating. Another analogy is that it’s like running a marathon and carrying someone else on your back, who jumps off just before the finish line.” – Amit Singhal, more explicitly.

- “One comment that I’ve heard is that “it’s whiny for Google to complain about this.” I agree that’s a risk, but at the same time I think it’s important to go on the record about this.” – Matt Cutts

- “I’ve got some sympathy for Google’s view that Bing is doing something it shouldn’t.” – Danny Sullivan

What is so crazy about the above quotes is that Google engineers knew at the time what Google itself was doing with its own scraping. I mentioned that contrast shortly after the above PR fiasco happened:

when popular vertical websites (that have invested a decade and millions of Dollars into building a community) complain about Google disintermediating them by scraping their reviews, Google responds by telling those webmasters to go pound sand & that if they don’t want Google scraping them then they should just block Googlebot & kill their search rankings

Learning the Rules of the Road

If you get the sense that “the rules” are arbitrary, hypocritical & selectively enforced – you may be on to something:

- “The bizrate/nextag/epinions pages are decently good results. They are usually well-format[t]ed, rarely broken, load quickly and usually on-topic. Raters tend to like them” … which is why … “Google repeatedly changed the instructions for raters until raters assessed Google’s services favorably”

- and while clamping down on those services (“business models to avoid”) … “Google elected to show its product search OneBox ‘regardless of the quality’ of that result and despite ‘pretty terribly embarrassing failures’”

- and since Google knew their offerings were vastly inferior, “most of us on geo [Google Local] think we won’t win unless we can inject a lot more of local directly into google results” … thus they added “a ‘concurring sites’ signal to bias ourselves toward triggering [display of a Google local service] when a local-oriented aggregator site (i.e. Citysearch) shows up in the web results”

Google’s justification for not being transparent is that “spammers” would take advantage of transparency to put inferior results front and center – the exact same thing Google does when it benefits the bottom line!

At the same time that Google hard-codes the self-promotion of their own vertical offerings, they may choose to ban competing business models through “quality” score updates and other similar changes:

The following types of websites are likely to merit low landing page quality scores and may be difficult to advertise affordably. In addition, it’s important for advertisers of these types of websites to adhere to our landing page quality guidelines regarding unique content.

- eBook sites that show frequent ads

- ‘Get rich quick’ sites

- Comparison shopping sites

- Travel aggregators

- Affiliates that don’t comply with our affiliate guidelines

The anti-competitive conspiracy theory is no longer conspiracy, nor theory.

Key points highlighted by the European Commission:

- Google systematically positions and prominently displays its comparison shopping service in its general search results pages, irrespective of its merits. This conduct started in 2008.

- Google does not apply to its own comparison shopping service the system of penalties, which it applies to other comparison shopping services on the basis of defined parameters, and which can lead to the lowering of the rank in which they appear in Google’s general search results pages.

- Froogle, Google’s first comparison shopping service, did not benefit from any favourable treatment, and performed poorly.

- As a result of Google’s systematic favouring of its subsequent comparison shopping services “Google Product Search” and “Google Shopping”, both experienced higher rates of growth, to the detriment of rival comparison shopping services.

- Google’s conduct has a negative impact on consumers and innovation. It means that users do not necessarily see the most relevant comparison shopping results in response to their queries, and that incentives to innovate from rivals are lowered as they know that however good their product, they will not benefit from the same prominence as Google’s product.

Overcoming Consensus Bias

Consensus bias is set to an absurdly high level to block out competition, slow innovation, and make the search ecosystem easier to police. This acts as a tax on newer and lesser-known players and a subsidy toward larger players.

Eventually that subsidy would be a problem for Google if the algorithm were the only thing that mattered. However, if the entire result set itself can be displaced, then that subsidy doesn’t really matter, as it can be retracted overnight.

Whenever Google has a competing offering ready, they put it up top even if they are embarrassed by it and 100% certain it is a vastly inferior option to other options in the marketplace.

That is how Google reinforces consensus bias, then manages to overcome it.

How do you overcome consensus bias?

Consensus Bias

The Truth About Subjective Truths

A few months ago there was an article in New Scientist about Google’s research paper on potentially ranking sites based on how factual their content is. The idea is generally and genuinely absurd.

- You can’t copyright facts, which means that if this were a primary ranking signal & people focused on it then they would be optimizing their site to be scraped-n-displaced into the knowledge graph. Some people may sugar coat the knowledge graph and rich answers as opportunity, but it is Google outsourcing the cost of editorial labor while reaping the rewards.

.@mattcutts I think I have spotted one, Matt. Note the similarities in the content text: pic.twitter.com/uHux3rK57f— dan barker (@danbarker) February 27, 2014

- If Google is going to scrape, displace & monetize data sets, then the only ways to really profit are:

- focus on creating the types of content which can’t be easily scraped-n-displaced, or

- create proprietary metrics of your own, such that if they scrape them (and don’t cheat by hiding the source) they are marketing you

- In some areas (especially religion and politics) certain facts are verboten & people prefer things which provide confirmation bias of their pre-existing beliefs. End user usage data creates a “relevancy” signal out of comfortable false facts and personalization reinforces it.

- In some areas well known “facts” are sponsored falsehoods. In other areas some things slip through the cracks.

- In some areas Google changes what is considered fact based on where you are located.

How Google keeps everyone happy pic.twitter.com/KmBBzpfzdf— Amazing Maps (@Amazing_Maps) March 31, 2015

- Those who have enough money can create their own facts. It might be painting the perception of a landscape, hiring thousands of low waged workers to manipulate public perception on key issues and new technologies, or more sophisticated forms of social network analysis and manipulation to manipulate public perceptions.

- The previously mentioned links were governmental efforts. However such strategies are more common in the commercial market. Consider how Google has sponsored academic conferences while explicitly telling the people who put them on to hide the sponsorship as part of their lobbying efforts.

- Then there is the blurry area where government and commerce fuse, like when Google put about a half-dozen home team players in key governmental positions during the FTC investigation of Google. Google claimed lobbying was disgusting until they experienced the ROI firsthand.

- In some areas “facts” are backward looking views of the market which are framed, distorted & intentionally incomplete. There was a significant gap between internal voices and external messaging in the run up to the recent financial crisis. Even large & generally trustworthy organizations have some serious skeletons in their closets.

@mattcutts I wonder, what sort of impact does http://t.co/vdg3ARGSz2 have on their E-A-T? expertise +1, authority +1, trustworthiness -_?— aaron wall (@aaronwall) April 6, 2015

- In other areas the inconvenient facts get washed away over time by money.

For a search engine to be driven primarily by group think (see unity100’s posts here) is the death of diversity.

Less Diversity, More Consolidation

The problem is rarely attributed to Google, but as ecosystem diversity has declined (and entire segments of the ecosystem are unprofitable to service), more people are writing things like: “The market for helping small businesses maintain a home online isn’t one with growing profits – or, for the most part, any profits. It’s one that’s heading for a bloody period of consolidation.”

As companies grow in power the power gets monetized. If you can manipulate people without appearing to do so you can make a lot of money.

If you don’t think Google wants to disrupt you out of a job, you’ve been asleep at the wheel for the past decade— Michael Gray (@graywolf) March 13, 2015

We Just Listen to the Data (Ish)

As Google sucks up more data, aggregates intent, and scrapes-n-displaces the ecosystem they get air cover for some of their gray area behaviors by claiming things are driven by the data & putting the user first.

Those “data” and altruism claims from Google recently fell flat on their face when the Wall Street Journal published a number of articles about a leaked FTC document.

- How Google Skewed Search Results

- Inside the U.S. Antitrust Probe of Google

- Key quotes from the document from the WSJ & more from Danny Sullivan

- The PDF document is located here.

That PDF has all sorts of goodies in it about things like blocking competition, signing a low margin deal with AOL to keep monopoly marketshare (while also noting the general philosophy outside of a few key deals was to squeeze down on partners), scraping content and ratings from competing sites, Google force inserting itself in certain verticals anytime select competitors ranked in the organic result set, etc.

As damning as the above evidence is, more will soon be brought to light as the EU ramps up their formal statement of objection, as Google is less politically connected in Europe than they are in the United States:

“On Nov. 6, 2012, the night of Mr. Obama’s re-election, Mr. Schmidt was personally overseeing a voter-turnout software system for Mr. Obama. A few weeks later, Ms. Shelton and a senior antitrust lawyer at Google went to the White House to meet with one of Mr. Obama’s technology advisers. … By the end of the month, the FTC had decided not to file an antitrust lawsuit against the company, according to the agency’s internal emails.”

What is wild about the above leaked FTC document is it goes to great lengths to show an anti-competitive pattern of conduct toward the larger players in the ecosystem. Even if you ignore the distasteful political aspects of the FTC non-decision, the other potential out was:

“The distinction between harm to competitors and harm to competition is an important one: according to the modern interpretation of antitrust law, even if a business hurts individual competitors, it isn’t seen as breaking antitrust law unless it has also hurt the competitive process—that is, that it has taken actions that, for instance, raised prices or reduced choices, over all, for consumers.” – Vauhini Vara

Part of the reason the data set was incomplete on that front was for the most part only larger ecosystem players were consulted. Google engineers have went on record stating they aim to break people’s spirits in a game of psychological warfare. If that doesn’t hinder consumer choice, what does?

@aaronwall rofl. Feed the dragon Honestly these G investigations need solid long term SEOs to testify as well as brands.— Rishi Lakhani (@rishil) April 2, 2015

When the EU published their statement of objections Google’s response showed charts with the growth of Amazon and eBay as proof of a healthy ecosystem.

The market has been consolidated down into a few big winners which are still growing, but that in and of itself does not indicate a healthy nor neutral overall ecosystem.

The long tail of smaller e-commerce sites which have been scrubbed from the search results is nowhere to be seen in such charts / graphs / metrics.

The other obvious “untruth” hidden in the above Google chart is there is no way product searches on Google.com are included in Google’s aggregate metrics. They are only counting some subset of them which click through a second vertical ad type while ignoring Google’s broader impact via the combination of PLAs along with text-based AdWords ads and the knowledge graph, or even the recently rolled out rich product answer results.

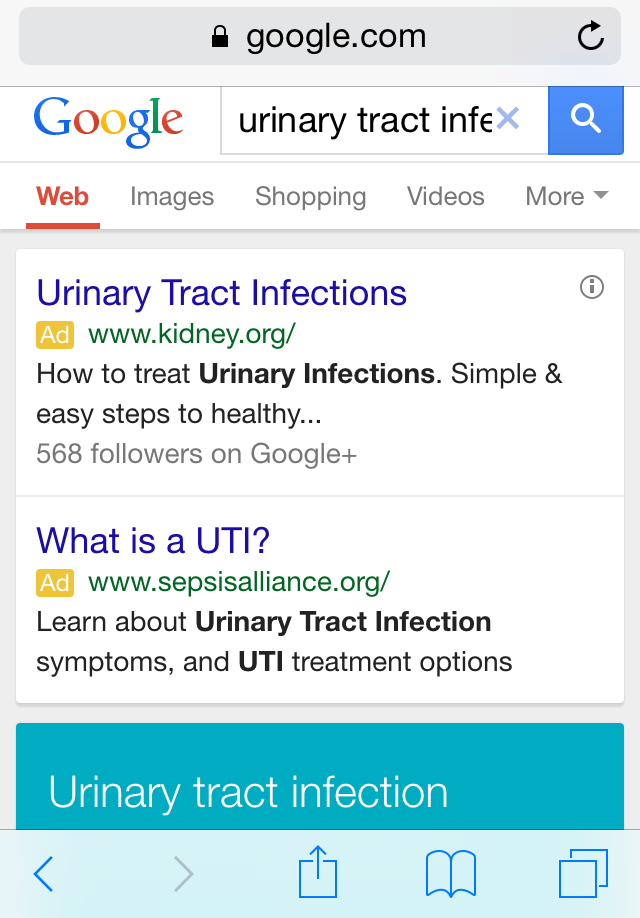

Who could look at the following search result (during anti-trust competitive review no less) and say “yeah, that looks totally reasonable?”

Google has allegedly spent the last couple years removing “visual clutter” from the search results & yet they manage to product SERPs looking like that – so long as the eye candy leads to clicks monetized directly by Google or other Google hosted pages.

The Search Results Become a Closed App Store

Search was an integral piece of the web which (in the past) put small companies on a level playing field with larger players.

That it no longer is.

WOW. RT @aimclear: 89% of domains that ranked over the last 7 years are now invisible, #SEO extinction. SRSLY, @marcustober #SEJSummit— Jonah Stein (@Jonahstein) April 15, 2015

“What kind of a system do you have when existing, large players are given a head start and other advantages over insurgents? I don’t know. But I do know it’s not the Internet.” – Dave Pell

The above quote was about app stores, but it certainly parallels a rater system which enforces the broken window fallacy against smaller players while looking the other way on larger players, unless they are in a specific vertical Google itself decides to enter.

“That actually proves my point that they use Raters to rate search results. aka: it *is* operated manually in many (how high?) cases. There is a growing body of consensus that a major portion of Googles current “algo” consists of thousands of raters that score results for ranking purposes. The “algorithm” by machine, on the majority of results seen by a high percentage of people, is almost non-existent.” … “what is being implied by the FTC is that Googles criteria was: GoogleBot +10 all Yelp content (strip mine all Yelp reviews to build their database). GoogleSerps -10 all yelp content (downgrade them in the rankings and claim they aren’t showing serps in serps). That is anticompetitive criteria that was manually set.” – Brett Tabke

The remote rater guides were even more explicitly anti-competitive than what was detailed in the FTC report. For instance, requiring hotel affiliate sites rated as spam even if they are helpful, for no reason other than being affiliate sites.

Is Brand the Answer?

About 3 years ago I wrote a blog post about how branding plays into SEO & why it might peak. As much as I have been accused of having a cynical view, the biggest problem with my post was it was naively optimistic. I presumed Google’s consolidation of markets would end up leading Google to alter their ranking approach when they were unable to overcome the established consensus bias which was subsidizing their competitors. The problem with my presumption is Google’s reliance on “data” was a chimera. When convenient (and profitable) data is discarded on an as need basis.

Or, put another way, the visual layout of the search result page trumps the underlying ranking algorithms.

Google has still highly disintermediated brand value, but they did it via vertical search, larger AdWords ad units & allowing competitive bidding on trademark terms.

If Not Illegal, then Scraping is Certainly Morally Deplorable…

As Google scraped Yelp & TripAdvisor reviews & gave them an ultimatum, Google was also scraping Amazon sales rank data and using it to power Google Shopping product rankings.

Around this same time Google pushed through a black PR smear job of Bing for doing a similar, lesser offense to Google on rare, made-up longtail searches which were not used by the general public.

While Google was outright stealing third party content and putting it front & center on core keyword searches, they had to use “about 100 “synthetic queries”—queries that you would never expect a user to type” to smear Bing & even numerous of these queries did not show the alleged signal.

Here are some representative views of that incident:

- “We look forward to competing with genuinely new search algorithms out there—algorithms built on core innovation, and not on recycled search results from a competitor. So to all the users out there looking for the most authentic, relevant search results, we encourage you to come directly to Google. And to those who have asked what we want out of all this, the answer is simple: we’d like for this practice to stop.” – Google’s Amit Singhal

- “It’s cheating to me because we work incredibly hard and have done so for years but they just get there based on our hard work. I don’t know how else to call it but plain and simple cheating. Another analogy is that it’s like running a marathon and carrying someone else on your back, who jumps off just before the finish line.” Amit Singhal, more explicitly.

- “One comment that I’ve heard is that “it’s whiny for Google to complain about this.” I agree that’s a risk, but at the same time I think it’s important to go on the record about this.” – Matt Cutts

- “I’ve got some sympathy for Google’s view that Bing is doing something it shouldn’t.” – Danny Sullivan

What is so crazy about the above quotes is Google engineers knew at the time what Google was doing with Google’s scraping. I mentioned that contrast shortly after the above PR fiasco happened:

when popular vertical websites (that have invested a decade and millions of Dollars into building a community) complain about Google disintermediating them by scraping their reviews, Google responds by telling those webmasters to go pound sand & that if they don’t want Google scraping them then they should just block Googlebot & kill their search rankings

Learning the Rules of the Road

If you get a sense “the rules” are arbitrary, hypocritical & selectively enforced – you may be on to something:

- “The bizrate/nextag/epinions pages are decently good results. They are usually well-format[t]ed, rarely broken, load quickly and usually on-topic. Raters tend to like them” … which is why … “Google repeatedly changed the instructions for raters until raters assessed Google’s services favorably”

- and while claimping down on those services (“business models to avoid“) … “Google elected to show its product search OneBox “regardless of the quality” of that result and despite “pretty terribly embarrassing failures” ”

- and since Google knew their offerings were vastly inferior, “most of us on geo [Google Local] think we won’t win unless we can inject a lot more of local directly into google results” … thus they added “a ‘concurring sites’ signal to bias ourselves toward triggering [display of a Google local service] when a local-oriented aggregator site (i.e. Citysearch) shows up in the web results”

Google’s justification for not being transparent is that “spammers” would take advantage of transparency to put inferior results front and center, which is the exact same thing Google does when it benefits the bottom line!

Around the same time Google hard-codes the self-promotion of their own vertical offerings, they may choose to ban competing business models through “quality” score updates and other similar changes:

The following types of websites are likely to merit low landing page quality scores and may be difficult to advertise affordably. In addition, it’s important for advertisers of these types of websites to adhere to our landing page quality guidelines regarding unique content.

- eBook sites that show frequent ads

- ‘Get rich quick’ sites

- Comparison shopping sites

- Travel aggregators

- Affiliates that don’t comply with our affiliate guidelines

The anti-competitive conspiracy theory is no longer conspiracy, nor theory.

Key points highlighted by the European Commission:

- Google systematically positions and prominently displays its comparison shopping service in its general search results pages, irrespective of its merits. This conduct started in 2008.

- Google does not apply to its own comparison shopping service the system of penalties, which it applies to other comparison shopping services on the basis of defined parameters, and which can lead to the lowering of the rank in which they appear in Google’s general search results pages.

- Froogle, Google’s first comparison shopping service, did not benefit from any favourable treatment, and performed poorly.

- As a result of Google’s systematic favouring of its subsequent comparison shopping services “Google Product Search” and “Google Shopping”, both experienced higher rates of growth, to the detriment of rival comparison shopping services.

- Google’s conduct has a negative impact on consumers and innovation. It means that users do not necessarily see the most relevant comparison shopping results in response to their queries, and that incentives to innovate from rivals are lowered as they know that however good their product, they will not benefit from the same prominence as Google’s product.

Overcoming Consensus Bias

Consensus bias is set to an absurdly high level to block out competition, slow innovation, and make the search ecosystem easier to police. This acts as a tax on newer and lesser-known players and a subsidy toward larger players.

Eventually that subsidy would become a problem for Google if the algorithm were the only thing that mattered. However, if the entire result set can itself be displaced, then the subsidy doesn’t really matter, as it can be retracted overnight.

Whenever Google has a competing offering ready, they put it up top even if they are embarrassed by it and 100% certain it is a vastly inferior option to other options in the marketplace.

That is how Google reinforces, then manages to overcome consensus bias.

How do you overcome consensus bias?

Designing for Privacy

Information is a commodity. Corporations are passing around consumer behavioral profiles like brokers with stocks, and the vast majority of the American public is none the wiser about this market’s scope. Very few people actually check the permissions portion of the Google Play store page before downloading a new app, and who has time to pore over the tedious 48-page monstrosity that is the iTunes terms and conditions contract?

With the advent of wearables, ubiquitous computing, and widespread mobile usage, the individual’s market share of their own information is shrinking at an alarming rate. In response, a growing (and vocal) group of consumers is voicing its concerns about the effective end of privacy online. And guess what? It’s up to designers to address those concerns in meaningful ways that answer this consumer demand.

But how can such a Sisyphean feat be managed? In a world that demands personalized service at the cost of privacy, how can you create and manage a product that strikes the right balance between the two?

That’s a million dollar question, so let’s break it into more affordable chunks.

Transparency

The big problem with informed consent is the information. It’s your responsibility to be up front with your users as to what exactly they’re trading you in return for your product/service. Not just the cash flow, but the data stream as well. Where’s it going? What’s it being used for?

99.99% of all smartphone apps ask for permission to modify and delete the contents of a phone’s data storage. 99.9999% of the time that doesn’t mean it’s going to copy and paste contact info, photos, or personal correspondences. But that .0001% is mighty worrisome.

Let your users know exactly what you’re asking from them, and what you’ll do with their data. Advertise the fact that you’re not sharing it with corporate interests to line your pockets. And if you are, well, stop that. It’s annoying and you’re ruining the future.

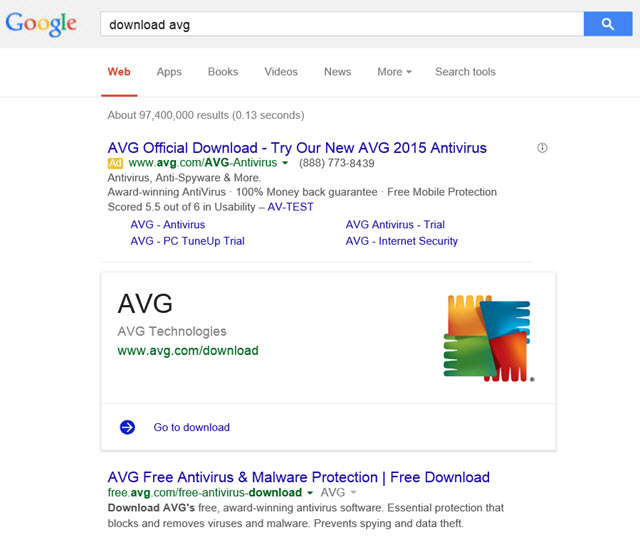

How can you advertise the key points of your privacy policies? Well, you could take a cue from noted online retailer Zappos.com. Their “PROTECTING YOUR PERSONAL INFORMATION” page serves as a decent template for transparency.

They have clearly defined policies about what they will and won’t do to safeguard shopper information. For one, they promise never to “rent, sell or share” user data with anyone, and immediately below, they link to their privacy policy, which weighs in a bit heavy at over 2500 words but is still dwarfed by other, more convoluted policies.

They also describe their efforts to safeguard user data from malicious hacking threats through the use of SSL tech and firewalls. Then they have an FAQ addressing commonly expressed security concerns. Finally, they have a 24/7 contact line to assure users of personal attention to their privacy queries.

Now it should be noted that this is a template for good transparency practices, and not precisely a great example of it. The content and intention are there, so what’s missing?

Good UX.

The fine print is indeed a little too fine, the text is a bit too dense (at least where the actual privacy policy is concerned), and the page itself is buried within the fat footer on the main page.

So who does a better job?

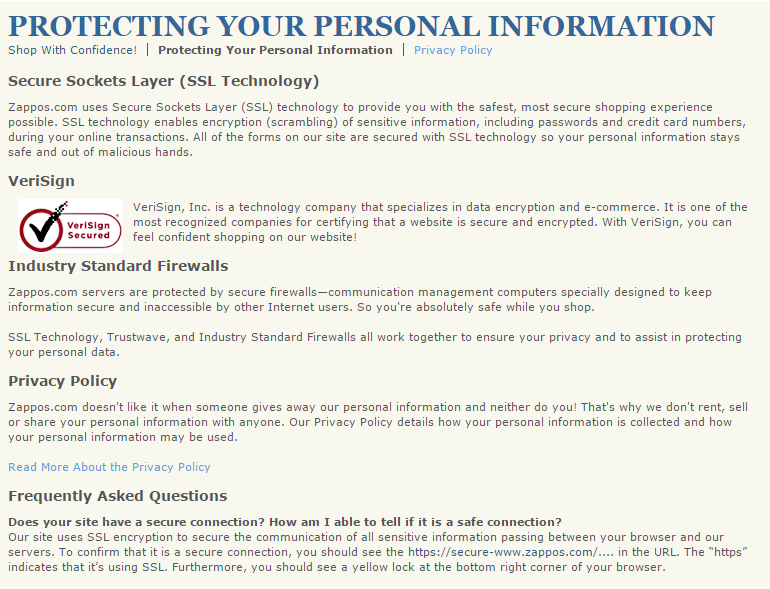

CodePen has actually produced an attractively progressive solution.

As you can see, CodePen has taken the time to produce two different versions of their ToS. A typical, lengthy bit of legalese on the left, and an easily readable layman’s version on the right. Providing these as a side by side comparison shows user appreciation and an emphasis on providing a positive UX.

This is all well and good for the traditional web browsing environment, but most of the problems with privacy these days stem from mobile usage. Let’s take a look at how mobile applications are taking advantage of the lag between common knowledge and current technology to make a profit off of private data.

Mobile Permissions

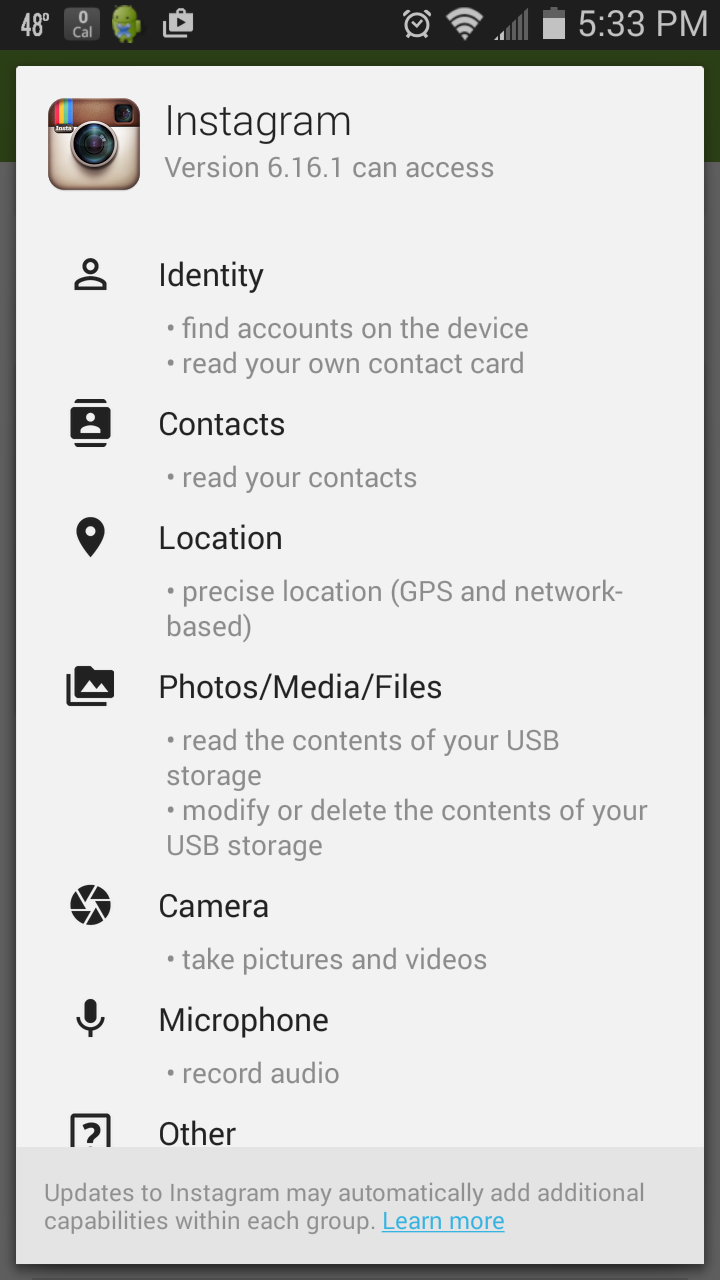

In the mobile space, the Google Play store does a decent job of letting users know what permissions they’re granting when they download an app, with its “Permission details” tab:

As you can see, Instagram is awfully nosy, but that’s no surprise. Instagram has come under fire for its privacy policies before. What’s perhaps more surprising is the sheer ubiquity of invasive data gathering in the mobile space. Compare Instagram’s permissions to another popular application you might have added to your smartphone’s repertoire:

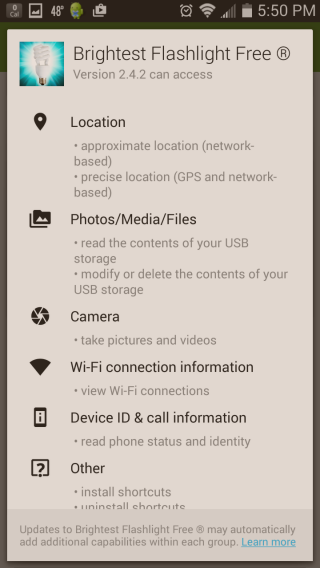

Why, pray tell, does a flashlight have any need for your location, photos/media/files, device ID and/or call information? I’ll give you a clue: it doesn’t.

“Brightest Flashlight Free” scoops up personal data and sells it to advertisers. The developer was actually sued in 2013 for having a poorly written privacy policy, one that did not disclose the app’s malicious intention to sell user data.

Now the policy is up to date, but the insidious data gathering and selling continues. Unfortunately, it isn’t the only flashlight application to engage in the same sort of dirty data tactics. The fact is, you have to do a surprising amount of research to find any application that doesn’t grab a bit more data than advertised, especially when the global market for mobile-user data approaches $10 billion.

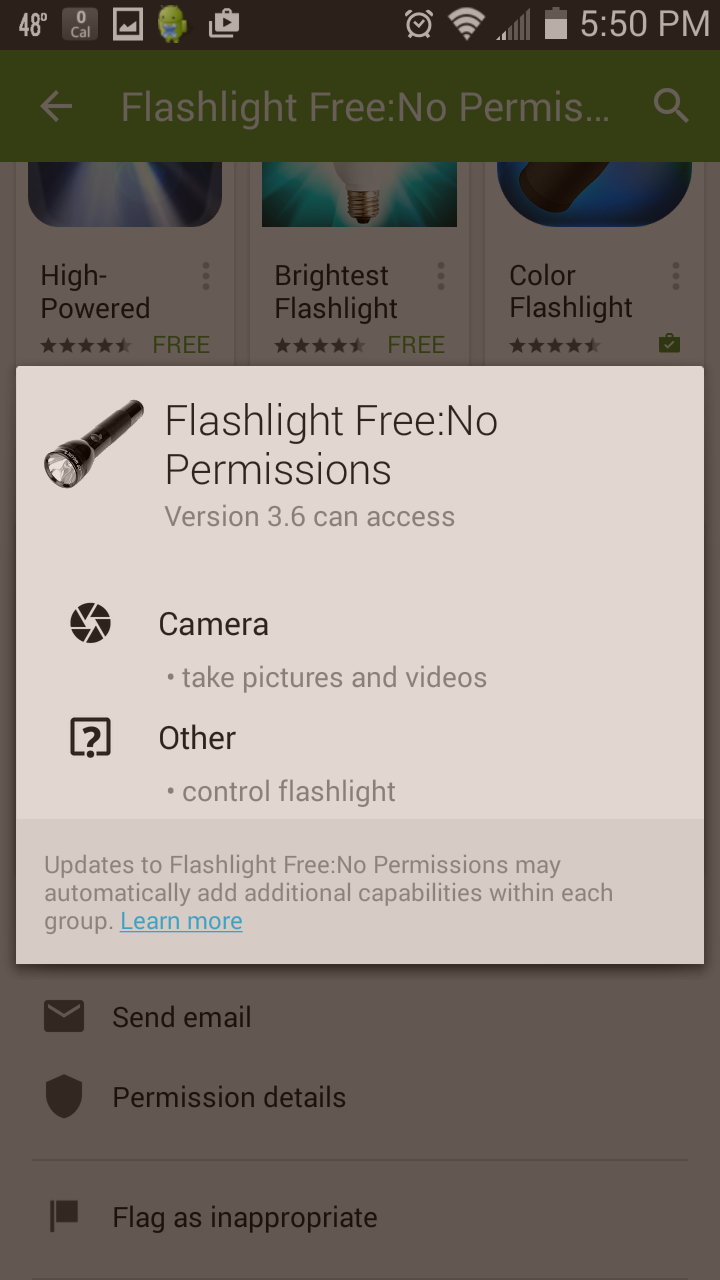

For your peace of mind, there is at least one example of an aptly named flashlight application which doesn’t sell your personal info to the highest bidder.

But don’t get too enthusiastic just yet. This is just one application. How many do you have downloaded on your smartphone? Chances are pretty good that you’re harboring a corporate spy on your mobile device.

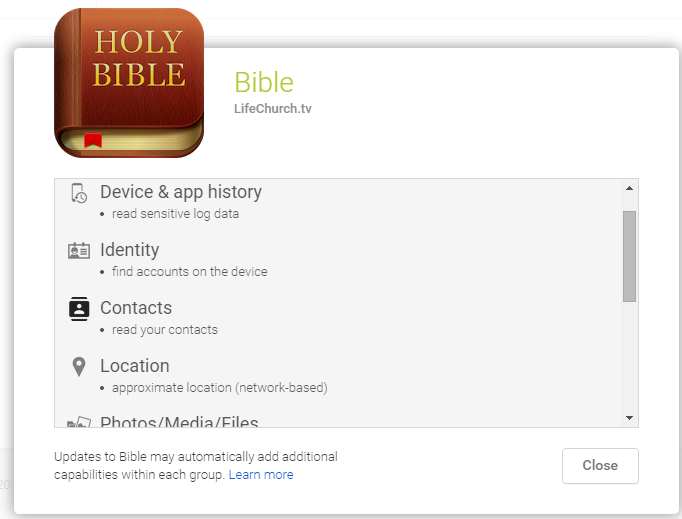

Hell, even the Holy Bible takes your data:

Is nothing sacred? To the app developer’s credit, they’ve stated publicly that they’ll never sell user data to third-party interests, but it’s still a wake-up call.

Privacy and UX

What, then, are some UX-friendly solutions? Designers are forced to strike a balance. Apps need data to run more efficiently and to better serve users, yet users aren’t comfortable with the wholesale data permissions required by most applications. What kind of design patterns can bring in a bit of harmony?

First and foremost, it’s important to be utilitarian in your data gathering. Offering informed consent is important, letting your users know what permissions they’re granting and why, but doing so in performant user flows is paramount.

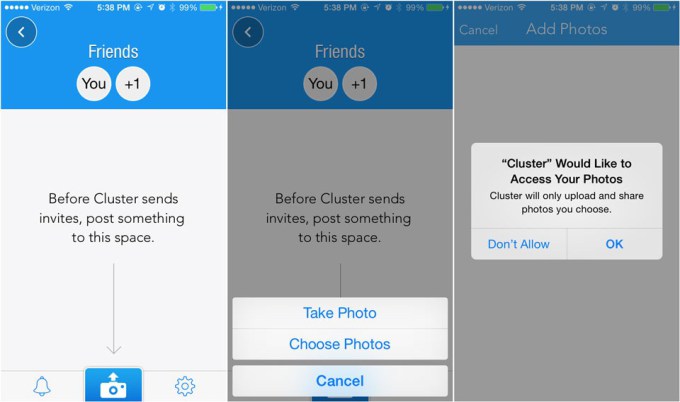

For example, iOS is at least one up on Android with its “dynamic permissions.” This means iOS users can grant or deny individual permissions in-app, rather than having to decide all or nothing upon installation as with Android apps.

http://techcrunch.com/2014/04/04/the-right-way-to-ask-users-for-ios-permissions/

Note how the Cluster application prompts users to give access to their photos as they’re interacting with the application, and reassures them of exactly what the app will do. The user is fully informed, and offers consent as a result of being asked for a certain level of trust.

All of this happens while the user is working toward a goal within the app. This effectively pushes permission grant rates toward 100%, because the developers have created a sense of comfort with the application’s inner workings. That’s what designing for privacy is all about: gradually introducing users to the concept of shared data, and never taking undue advantage of an uninformed user.
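The ask-in-context pattern can be sketched as a tiny state machine. This is a hypothetical JavaScript illustration, not Cluster’s code or any iOS or Android API: `createPermissionFlow` and the `requestSystemPermission` callback are names invented for the example. The app shows its own explanation first and only triggers the operating system’s dialog once the user opts in, so a “not now” doesn’t burn the system prompt (on iOS the system dialog for a given permission is generally shown only once).

```javascript
// Hypothetical two-step ("pre-permission") flow. The OS dialog is passed in
// as a callback because each platform exposes it differently.
function createPermissionFlow(requestSystemPermission) {
  // States: "idle" -> "explaining" -> "granted" | "denied" | "deferred"
  let state = "idle";
  return {
    get state() {
      return state;
    },
    // The user starts a task that needs the data (e.g. taps "add photos"),
    // so the app shows its own explanation screen first.
    startTask() {
      if (state === "idle" || state === "deferred") state = "explaining";
      return state;
    },
    // The user accepted the app's explanation: only now is the OS asked.
    accept() {
      if (state !== "explaining") return state;
      state = requestSystemPermission() ? "granted" : "denied";
      return state;
    },
    // The user chose "not now": the OS prompt has not been used up.
    decline() {
      if (state === "explaining") state = "deferred";
      return state;
    },
  };
}

// Example: the system prompt fires only after the user opts in.
let systemPrompts = 0;
const flow = createPermissionFlow(() => {
  systemPrompts += 1;
  return true; // simulate the user tapping "Allow" in the OS dialog
});
flow.startTask(); // "explaining"
flow.decline(); // "deferred"; systemPrompts is still 0
flow.startTask(); // asked again later, in context
flow.accept(); // "granted"; systemPrompts is now 1
```

The design choice is that declining the app’s own explanation costs nothing, whereas a denial in the system dialog usually means the user has to dig into device settings to reverse it.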

Of course, this is just one facet of the privacy/UX conversation. Informing a user of what they’re allowing is important, but reassuring them that their data is secure is even more so.

Safeguarding User Data

Asking a user to trust your brand is essential to a modern business model; you’re trying to engender a trust-based relationship with all of your visitors, after all. The real trick, however, is convincing users that their data is safe in your hands; in other words, that it won’t be sold to or stolen by 3rd parties, be they legitimate corporations or malicious hackers.

We touched on this earlier with the Zappos example. Zappos reassures its shoppers with SSL, firewalls, and a personalized promise never to share or sell data. All of which should be adopted as industry standards and blatantly advertised to assuage privacy concerns.

Building these safeguards into your service/application/website/what-have-you is extremely important. To gain consumer trust is to first provide transparency in your own practices, and then to protect your users from the wolves at the gate.

Fortunately, data protection is a booming business with a myriad of effective solutions currently in play. Here are just a few of the popular cloud-based options:

Whatever security solutions you choose, the priorities remain the same. Build trust, and more importantly: actually deserve whatever trust you build.

It hardly needs to be stated, but the real key to a future where personal privacy still exists is to actually be better people: the kind that can be trusted to hold sensitive data.

Is such a future still possible? Let us know what you think in the comment section.

Kyle Sanders is a member of SEOBook and founder of Complete Web Resources, an Austin-based SEO and digital marketing agency.

Google Mobile Search Result Highlights

Google recently added highlights at the bottom of various sections of their mobile search results. The highlights appear on ads, organic results, and other various vertical search insertion types. The colors vary arbitrarily by section and are pattern…

Responsive Design for Mobile SEO

Why Is Mobile So Important?

If you look just at your revenue numbers as a publisher, it is easy to believe mobile is of limited importance. In our last post I mentioned an AdAge article highlighting how the New York Times was getting over half its traffic from mobile, while mobile accounted for only about 10% of its online ad revenues.

Large ad networks (Google, Bing, Facebook, Twitter, Yahoo!, etc.) can monetize mobile *much* better than other publishers can because they aggressively blend the ads right into the social stream or search results, causing them to have a much higher CTR than ads on the desktop. Bing recently confirmed the same trend RKG has highlighted about Google’s mobile ad clicks:

While mobile continues to be an area of rapid and steady growth, we are pleased to report that the Yahoo Bing Network’s search and click volumes from smart phone users have more than doubled year-over-year. Click volumes have generally outpaced growth in search volume

Those ad networks want other publishers to make their sites mobile friendly for a couple reasons…

- If the downstream sites are mobile friendly, then users are more likely to go back to the central ad / search / social networks more often & be more willing to click out on the ads from them.

- If mobile is emphasized in importance, then those who are critical of the value of the channel may eat some of the blame for its relatively poor performance, particularly if they haven’t spent resources optimizing user experience on the channel.

Further Elevating the Importance of Mobile

Modern Love, by Banksy. @SachinKalbag pic.twitter.com/Xzcxnkmmnx— Anand Ranganathan (@ARangarajan1972) November 29, 2014

Google has hinted at the importance of having a mobile friendly design: labeling mobile friendly sites in the results, testing labels for slow sites & offering tools to test how mobile friendly a site design is.

Today Google put out an APB warning they are going to increase the importance of mobile friendly website design:

Starting April 21, we will be expanding our use of mobile-friendliness as a ranking signal. This change will affect mobile searches in all languages worldwide and will have a significant impact in our search results.

In the past Google would hint that they were working to clean up link spam or content farms or website hacking and so on. In some cases announcing such efforts was done to try to discourage investment in the associated strategies, but it is quite rare that Google pre-announces an algorithmic shift which they state will be significant & they put an exact date on it.

I wouldn’t recommend waiting until the last day to implement the design changes, as it will take Google time to re-crawl your site & recognize if the design is mobile friendly.

Those who ignore the warning might be in for significant pain.

Some sites which were hit by Panda saw a devastating 50% to 70% decline in search traffic, but given how small mobile phone screen sizes are, even ranking just a couple spots lower could cause an 80% or 90% decline in mobile search traffic.

Another related issue referenced in the above post was tying in-app content to mobile search personalization:

Starting today, we will begin to use information from indexed apps as a factor in ranking for signed-in users who have the app installed. As a result, we may now surface content from indexed apps more prominently in search. To find out how to implement App Indexing, which allows us to surface this information in search results, have a look at our step-by-step guide on the developer site.

Google also announced today they are extending AdWords-styled ads to their Google Play search results, so they now have a direct economic incentive to allow app activity to bleed into their organic ranking factors.

m. Versus Responsive Design

Some sites have a separate m. version for mobile visitors, while other sites keep consistent URLs & employ responsive design. With the m. approach, on the regular version of the site (say, www.seobook.com) a webmaster adds an alternate reference to the mobile version in the head section of the document:

<link rel="alternate" media="only screen and (max-width: 640px)" href="http://m.seobook.com/">

…and then on the mobile version, they would rel=canonical it back to the desktop version, like so…

<link rel="canonical" href="http://www.seobook.com/">

With the above sort of code in place, Google would rank the full version of the site on desktop searches & the m. version in mobile search results.
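Because each desktop page needs an annotation pointing to its mobile twin, and each mobile page needs one pointing back, the pairing is easy to generate. A minimal JavaScript sketch, assuming (hypothetically) that the m. site mirrors the desktop URL paths one-to-one; `mobileAnnotations` and `toMobileUrl` are illustrative helpers, and the 640px breakpoint simply mirrors the example tag above:

```javascript
// Builds the two-way annotations for a separate m. site: the desktop page
// points at its mobile twin with rel="alternate", and the mobile page
// points back with rel="canonical". URLs are assumed to be already safe
// for use inside an HTML attribute.
function mobileAnnotations(desktopUrl, mobileUrl, breakpointPx = 640) {
  const media = `only screen and (max-width: ${breakpointPx}px)`;
  return {
    desktopHead: `<link rel="alternate" media="${media}" href="${mobileUrl}">`,
    mobileHead: `<link rel="canonical" href="${desktopUrl}">`,
  };
}

// Assumes the m. site uses the same paths as the desktop site.
function toMobileUrl(desktopUrl, mobileHost) {
  const url = new URL(desktopUrl);
  url.hostname = mobileHost;
  return url.toString();
}

const desktop = "http://www.seobook.com/";
const tags = mobileAnnotations(desktop, toMobileUrl(desktop, "m.seobook.com"));
console.log(tags.desktopHead); // goes in the <head> of the desktop page
console.log(tags.mobileHead); // goes in the <head> of the mobile page
```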

3 or 4 years ago it was a toss-up as to which of these 2 options would win, but over time it appears the responsive design option is more likely to win out.

Here are a couple reasons responsive is likely to win out as a better solution:

- If people share a mobile-friendly m. URL on Twitter, Facebook or other social networks, then when someone on a desktop computer clicks on the shared m. version of the page (with fewer ad units & less content), the publisher is providing a worse user experience & losing out on the incremental monetization they would have achieved with the additional ad units.

- While some search engines and social networks might be good at consolidating the performance of the same piece of content across multiple URL versions, some of them will periodically mess it up. That in turn will lead in some cases to lower rankings in search results or lower virality of content on social networks.

- Over time phablets have increasingly blurred the line between phones and tablets. Some crossover devices have high pixel density screens which appear large in terms of pixel count but are not physically large, making it easy for users to misclick on the interface.

- When Bing gave their best practices for mobile, they stated: “Ideally, there shouldn’t be a difference between the “mobile-friendly” URL and the “desktop” URL: the site would automatically adjust to the device — content, layout, and all.” In that post Bing shows some examples of m. versions of sites ranking in their mobile search results, however for smaller & lesser known sites they may not rank the m. version the way they do for Yelp or Wikipedia, which means that even if you optimize the m. version of the site to a great degree, that isn’t the version all mobile searchers will see. Back in 2012 Bing also stated their preference for a single version of a URL, in part based on lowering crawl traffic & consolidation of ranking signals.

In addition to responsive web design & separate mobile friendly URLs, a third configuration option is dynamic serving, where the server detects the user-agent and returns different HTML from the same URL, sending a Vary: User-Agent HTTP header to signal that the response changes by device.
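A rough sketch of dynamic serving in Node.js-flavored JavaScript: the same URL returns mobile or desktop HTML depending on the request’s User-Agent, and the response always carries `Vary: User-Agent`. The regex and the `dynamicResponse` / `startServer` names are assumptions for illustration; real device detection is considerably messier than one pattern.

```javascript
const http = require("http");

// Crude placeholder check, not a complete device detector.
const MOBILE_UA = /Mobi|Android|iPhone|iPod/i;

// Same URL, different HTML: pick a template from the User-Agent and always
// send Vary: User-Agent so caches and crawlers know the response differs.
function dynamicResponse(userAgent, templates) {
  const isMobile = MOBILE_UA.test(userAgent || "");
  return {
    headers: {
      "Content-Type": "text/html; charset=utf-8",
      Vary: "User-Agent",
    },
    body: isMobile ? templates.mobile : templates.desktop,
  };
}

// Minimal server wiring; not started here. Example:
// startServer(8080, { mobile: "<p>mobile</p>", desktop: "<p>desktop</p>" });
function startServer(port, templates) {
  return http
    .createServer((req, res) => {
      const { headers, body } = dynamicResponse(req.headers["user-agent"], templates);
      res.writeHead(200, headers);
      res.end(body);
    })
    .listen(port);
}
```

Leaving out the Vary header is the classic mistake here: a shared cache could then hand the mobile HTML to desktop visitors, or vice versa.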

Solutions for Quickly Implementing Responsive Design

New Theme / Design

If your site hasn’t been updated in years you might be surprised at what you find available on sites like ThemeForest for quite reasonable prices. Many of the options are responsive out of the gate & look good with a day or two of customization. Theme subscription services like WooThemes and Elegant Themes also have responsive options.

Child Themes

Some of the default Wordpress themes are responsive. Creating a child theme is quite easy. The popular Thesis and Studiopress frameworks also offer responsive skins.

PSD to HTML & HTML to Responsive HTML

Eeek! … 11% Of Americans Think #HTML Is A Sexually Transmitted Disease http://t.co/np0irmI1DW via @broderick— L2Code HTML (@L2CodeHTML) January 10, 2015

Some of the PSD to HTML conversion services like PSD 2 HTML, HTML Slicemate & XHTML Chop offer responsive design conversion of existing HTML sites in as little as a day or two, though you will likely need to make at least a few minor changes when you put the designs live to compensate for things like third-party ad units.

If you have an existing Wordpress theme, you might want to see if you can zip it up and send it to them, or else they may build your new theme as a child theme of Twenty Fifteen or similar. If you are struggling to get them to convert your Wordpress theme over, another option would be to have them do a static HTML file conversion (instead of a Wordpress conversion) and then feed that through a theme creation tool like Themespress.

Other Things to Look Out For

Third Party Plug-ins & Ad Code Gotchas

Google allows webmasters to alter the ad calls on their responsive AdSense ad units to show different sized ad units to different screen sizes & skip showing some ad units on smaller screens. Google’s AdSense documentation includes a code example along these lines:

<style type="text/css">
/* default: 320x50 banner */
.adslot_1 { display:inline-block; width: 320px; height: 50px; }
/* hide the unit entirely on screens 400px wide or narrower */
@media (max-width: 400px) { .adslot_1 { display: none; } }
@media (min-width:500px) { .adslot_1 { width: 468px; height: 60px; } }
@media (min-width:800px) { .adslot_1 { width: 728px; height: 90px; } }
</style>
<ins class="adsbygoogle adslot_1"
     data-ad-client="ca-pub-1234"
     data-ad-slot="5678"></ins>
<script async src="//pagead2.googlesyndication.com/pagead/js/adsbygoogle.js"></script>
<script>(adsbygoogle = window.adsbygoogle || []).push({});</script>
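To see which rule wins at a given screen width, the media queries above can be modeled in a few lines of JavaScript. This is only an illustrative sketch (`adslotSize` is a hypothetical helper, not part of AdSense); widths are in CSS pixels, which is what media queries test, not raw device pixels.

```javascript
// Models the .adslot_1 CSS: later matching @media rules override earlier
// ones, as in the stylesheet's cascade.
function adslotSize(viewportWidth) {
  let size = { visible: true, width: 320, height: 50 }; // base rule
  if (viewportWidth <= 400) size = { ...size, visible: false }; // (max-width: 400px)
  if (viewportWidth >= 500) size = { ...size, width: 468, height: 60 }; // (min-width: 500px)
  if (viewportWidth >= 800) size = { ...size, width: 728, height: 90 }; // (min-width: 800px)
  return size;
}

for (const w of [360, 450, 600, 1024]) {
  const s = adslotSize(w);
  console.log(w, s.visible ? `${s.width}x${s.height}` : "hidden");
}
```

Running it shows phones up to 400px wide get no ad, widths from 401px to 499px keep the 320x50 default, and the larger units take over at 500px and 800px.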

For other ads that may not have a “mobile friendly” option, you could use CSS either to hide the ad unit (display: none) or to cap its width and let it overflow, using code like either of the following:

hide it:

@media only screen and (max-width: ___px) {
  .bannerad {
    display: none;
  }
}

overflow it:

@media only screen and (max-width: ___px) {
  .ad-unit {
    max-width: ___px;
    overflow: scroll;
  }
}

Before Putting Your New Responsive Site Live…

Back up your old site before putting the new site live.

For static HTML sites or sites with PHP or SHTML includes & such…

- Download a copy of your existing site to local.

- Rename that folder to something like sitename.com-OLDVERSION

- Upload the sitename.com-OLDVERSION folder to your server. If anything goes drastically wrong during your conversion process, you can rename the new site design folder to something like sitename.com-HOSED and then rename the sitename.com-OLDVERSION folder back to sitename.com to quickly restore the site.

- Download your site to local again.

- Ensure your new site design uses a different CSS folder or CSS filename, so that the old and new versions of the design can be live at the same time while you are editing the site.

- Create a test file with the responsive design on your site & test that page until things work well enough.

- Once that page works well enough, test changing your homepage over to the new design & then save and upload it to verify it works properly. In addition to using your cell phone you could see how it looks on a variety of devices via the mobile browser testing emulation tool in Chrome, or a wide array of third party tools like: MobileTest.me, iPadPeek, Mobile Phone Emulator, Browshot, Matt Kersley’s responsive web design testing tool, BrowserStack, Cross Browser Testing, & the W3C mobileOK Checker. Paid services like Keynote offer manual testing rather than emulation on a wide variety of devices. There is also paid downloadable desktop emulation software like Multi-browser view.

- Once you have the general “what needs to change in each file” down, use find & replace to bulk edit the remaining files and make them responsive.

- Use a tool like FileZilla to quickly bulk upload the files.

- Look through key pages and if there are only a few minor errors then fix them and re-upload them. If things are majorly screwed up then revert to the old design being live and schedule a do over on the upgrade.

- If you have a decently high traffic site, it might make sense to schedule the above process for late Friday night or an off hour on the weekend, such that if anything goes astray you have fewer people seeing the errors while you frantically rush to fix them. :)
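The find & replace step in the checklist above can be scripted against your local copy of the site. A hypothetical Node.js sketch: `applyReplacements` and `bulkReplace` are made-up helpers, and the file extensions and example rules are assumptions to adjust for your own site. It does literal (non-regex) replacement, so run it once on a fresh local download rather than repeatedly.

```javascript
const fs = require("fs");
const path = require("path");

// Apply literal find/replace pairs to a string; empty "find" strings are skipped.
function applyReplacements(text, rules) {
  return rules.reduce((out, [find, replace]) => {
    if (!find) return out;
    return out.split(find).join(replace);
  }, text);
}

// Recursively rewrite matching files under dir. Returns the files that changed.
function bulkReplace(dir, rules, extensions = [".html", ".htm", ".shtml", ".php"]) {
  const changed = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      changed.push(...bulkReplace(full, rules, extensions));
    } else if (extensions.includes(path.extname(entry.name).toLowerCase())) {
      const before = fs.readFileSync(full, "utf8");
      const after = applyReplacements(before, rules);
      if (after !== before) {
        fs.writeFileSync(full, after, "utf8");
        changed.push(full);
      }
    }
  }
  return changed;
}

// Example rules (hypothetical paths): swap in the new stylesheet and add the
// viewport meta tag responsive layouts rely on.
// bulkReplace("./sitename.com", [
//   ["/css/style.css", "/css/responsive.css"],
//   ["<head>", '<head>\n<meta name="viewport" content="width=device-width, initial-scale=1">'],
// ]);
```

The returned list of changed files doubles as the list of pages to upload and spot-check.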

If you have little faith in the above test-it-live “methodology” & would prefer a slower & lower-stress approach, you could create a test site on another domain name for testing purposes. Just be sure to block crawlers with a Disallow rule in the robots.txt file, or password protect access to the site, while testing. When you get things worked out on it, make sure your internal links are referencing the correct domain name, and that you have removed any block via robots.txt or password protection.
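The final checks for a test-domain build (internal links pointing at the right domain, no leftover robots.txt block) can be partly automated. A hypothetical JavaScript sketch: `launchBlockers`, the `pages` map of URL to HTML, and the `test.example.com` host are illustrative assumptions. It is regex-based, so treat it as a quick sanity check rather than a full crawler.

```javascript
// Flags a site-wide robots.txt block and links that still point at the test host.
function launchBlockers(robotsTxt, pages, testHost) {
  const problems = [];
  // A bare "Disallow: /" blocks the whole site for the matching user-agents.
  if (/^\s*Disallow:\s*\/\s*$/im.test(robotsTxt)) {
    problems.push("robots.txt still contains 'Disallow: /'");
  }
  // Escape the host so its dots match literally.
  const escapedHost = testHost.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  const hrefToTest = new RegExp(`href=["'](?:https?:)?//${escapedHost}[/"']`, "i");
  for (const [url, html] of Object.entries(pages)) {
    if (hrefToTest.test(html)) problems.push(`${url} links to ${testHost}`);
  }
  return problems;
}

const issues = launchBlockers(
  "User-agent: *\nDisallow: /\n",
  { "/": '<a href="http://test.example.com/about/">About</a>' },
  "test.example.com"
);
console.log(issues); // both problems are reported
```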

For a site with a CMS the above process is basically the same, except that you may need to create the backup differently. If you are uploading a Wordpress or Drupal theme, change its name at least slightly so you can keep the old and new designs installed at the same time and quickly switch back to the old design if you need to.

If you have a mixed site with Wordpress & static files or such then it might make sense to test changing the static files first, get those to work well & then create a Wordpress theme after that.

You Can’t Copyright Facts

The Facts of Life

When Google introduced the knowledge graph one of their underlying messages behind it was “you can’t copyright facts.”

Facts are like domain names or links or pictures or anything else in terms of being a layer of information which can be highly valued or devalued through commoditization.

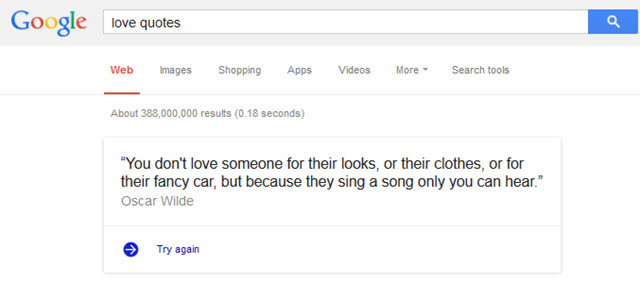

When you search for love quotes, Google pulls one into their site & then provides another “try again” link.

Since quotes mostly come from third parties, they are not owned by BrainyQuotes and other similar sites. But here is the thing: if the sites which pay to organize and verify such collections have their economics sufficiently undermined, then they go away & Google isn’t able to pull them into the search results either.

The same is true with song lyrics. If you are one of the few sites paying to license the lyrics & then Google puts lyrics above the search results, then the economics which justified the investment in licensing might not back out & you will likely go bankrupt. That bankruptcy wouldn’t be the result of being a spammer trying to work an angle, but rather because you had a higher cost structure from trying to do things the right way.

Never trust a corporation to do a librarian’s job.

What’s Behind Door Number One?

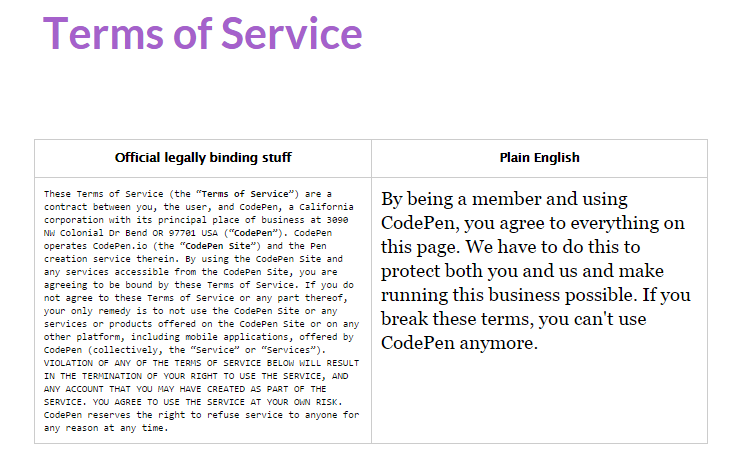

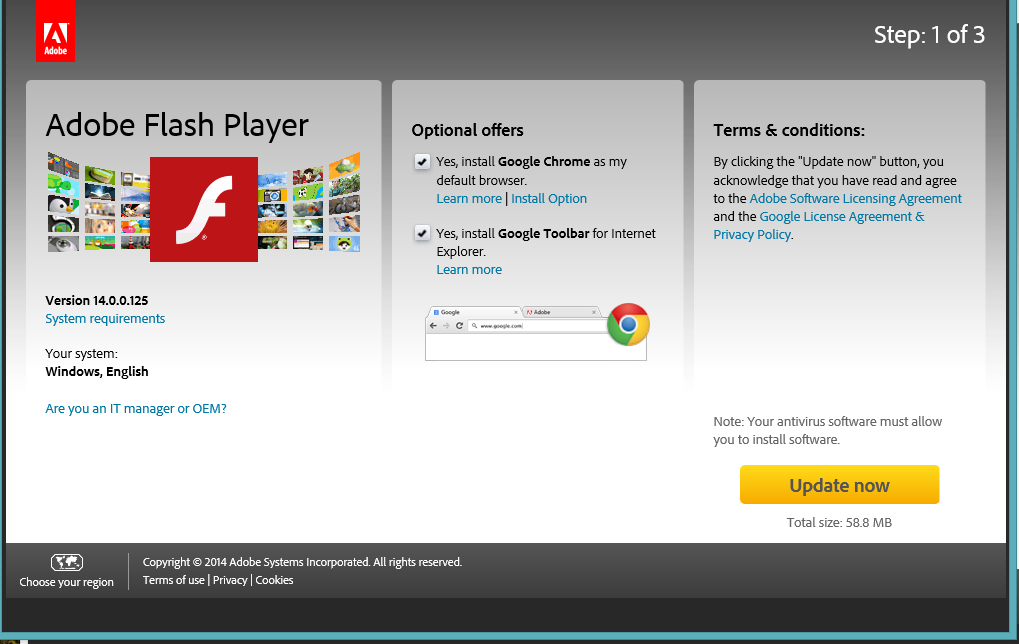

Google has also done the above quote-like “action item” types of onebox listings in other areas, like software downloads.

Where there are multiple versions of the software available, Google is arbitrarily selecting the download page, even though a software publisher might have a parallel SAAS option or other complex funnels based on a person’s location or status as a student or such.

Mix in Google allowing advertisers to advertise bundled adware, and it becomes quite easy for Google to gum up the sales process and undermine existing brand equity by sending users to the wrong location. Here’s a blog post from Malwarebytes referencing:

- their software being advertised on their brand term in Google via AdWords ads, engaging in trademark infringement and bundled with adware.

- numerous user complaints they received about the bundleware

- required legal actions they took to take the bundler offline

Brands are forced to buy their own brand equity before, during & after the purchase, or Google partners with parasites to monetize the brand equity:

The company used this cash to build more business, spending more than $1 million through at least seven separate advertising accounts with Google.

…

The ads themselves said things like “McAfee Support – Call +1-855-[redacted US phone number]” and pointed to domains like mcafee-support.pccare247.com.

…

One PCCare247 ad account with Google produced 71.7 million impressions; another generated 12.4 million more. According to records obtained by the FTC, these combined campaigns generated 1.5 million clicks

Since Google requires Chrome extensions to be installed from its own website, it is hard (for anyone other than Google) to monetize them, which in turn makes it appealing for developers to sell their add-ons to malware bundlers. Android apps in the Google Play store are yet another “open” malware ecosystem.

FACT: This Isn’t About Facts

Google started the knowledge graph & onebox listings on some utterly banal topics which were easy for a computer to get right, though their ambitions vastly exceed that starting point. They started there because it was low-risk and easy.

When Google’s evolving search technology was recently covered on Medium by Steven Levy he shared that today the Knowledge Graph appears on roughly 25% of search queries and that…

Google is also trying to figure out how to deliver more complex results — to go beyond quick facts and deliver more subjective, fuzzier associations. “People aren’t interested in just facts,” she says. “They are interested in subjective things like whether or not the television shows are well-written. Things that could really help take the Knowledge Graph to the next level.”

Even as the people who routinely shill for Google parrot the “you can’t copyright facts” mantra, Google is telling you they have every intent of expanding far beyond it. “I see search as the interface to all computing,” says Singhal.

Even if You Have Copyright…

What makes the “you can’t copyright facts” line so particularly disingenuous is Google’s support of piracy when they purchased YouTube:

cofounder Jawed Karim favored “lax” copyright policy to make YouTube “huge” and hence “an excellent acquisition target.” YouTube at one point added a “report copyrighted content” button to let users report infringements, but removed the button when it realized how many users were reporting unauthorized videos. Meanwhile, YouTube managers intentionally retained infringing videos they knew were on the site, remarking “we should KEEP …. comedy clips (Conan, Leno, etc.) [and] music videos” despite having licenses for none of these. (In an email rebuke, cofounder Steve Chen admonished: “Jawed, please stop putting stolen videos on the site. We’re going to have a tough time defending the fact that we’re not liable for the copyrighted material on the site because we didn’t put it up when one of the co-founders is blatantly stealing content from other sites and trying to get everyone to see it.”)

To some, the separation of branding makes YouTube distinct and separate from Google search, but that wasn’t so much the case when many sites lost their video thumbnails and YouTube saw larger thumbnails on many of their listings in Google. In the above Steven Levy article he wrote: “one of the highest ranked general categories was a desire to know “how to” perform certain tasks. So Google made it easier to surface how-to videos from YouTube and other sources, featuring them more prominently in search.”

Altruism vs Disruption for the Sake of it

Whenever Google implements a new feature, they can choose not to monetize it so as to claim they are benevolent and doing it for users without commercial interest. But they made that same “unmonetized & for users” claim about their shopping search vertical until the day it went paid. Google claimed paid inclusion was evil right up until the day it claimed paid inclusion was a necessity to improve user experience.

There was literally no transition period.

Many of the “informational” knowledge block listings contain affiliate links pointing into Google Play or other sites. Those affiliate ads were only labeled as advertisements after the FTC complained about inconsistent ad labeling in search results.

Health is Wealth

Google recently went big on the knowledge graph by jumping head first into the health vertical:

starting in the next few days, when you ask Google about common health conditions, you’ll start getting relevant medical facts right up front from the Knowledge Graph. We’ll show you typical symptoms and treatments, as well as details on how common the condition is—whether it’s critical, if it’s contagious, what ages it affects, and more. For some conditions you’ll also see high-quality illustrations from licensed medical illustrators. Once you get this basic info from Google, you should find it easier to do more research on other sites around the web, or know what questions to ask your doctor.

Google’s links to the Mayo Clinic in their knowledge graph are, once again, rendered in a light gray font.

In case you didn’t find enough background in Google’s announcement article, Greg Sterling shared more of Google’s views here. A couple notable quotes from Greg…

Cynics might say that Google is moving into yet another vertical content area and usurping third-party publishers. I don’t believe this is the case. Google isn’t going to be monetizing these queries; it appears to be genuinely motivated by a desire to show higher-quality health information and educate users accordingly.

- Google doesn’t need to directly monetize it to impact the economics of the industry. If they shift a greater share of clicks through AdWords then that will increase competition and ad prices in that category while lowering investment in SEO.

- If this is done out of benevolence, it will appear *above* the AdWords ads on the search results — unlike almost every type of onebox or knowledge graph result Google offers.

- If it is fair for him to label everyone who disagrees with his thesis as a cynic then it is of course fair for those “cynics” to label Greg Sterling as a shill.

Google told me that it hopes this initiative will help motivate the improvement of health content across the internet.

By defunding and displacing something they don’t improve its quality. Rather they force the associated entities to cut their costs to try to make the numbers work.

If their traffic drops and they don’t do more with less, then…

- their margins will fall

- their growth will slow (or they may even shrink)

- their stock price will tank

- management will get fired & replaced and/or they will get taken private by private equity investors and/or they will need to make some “bet the company” moves to find growth elsewhere (and hope Google doesn’t enter that parallel area anytime soon)

When the numbers don’t work, publishers need to cut back or cut corners.

Things get monetized directly, monetized indirectly, or they disappear.

Some of the more hated aspects of online publishing (headline bait, idiotic correlations out of context, pagination, slideshows, popups, fly-in ad units, autoplay videos, full page ad wraps, huge ads eating most of the above-the-fold real estate, integration of terrible native ad units promoting junk offers with shocking headline bait, content scraping answer farms, blending unvetted user generated content with house editorial, partnering with content farms to create subdomains on trusted blue chip sites, using Narrative Science or Automated Insights to auto-generate content, etc.) are not done because online publishers want to be jackasses, but because it is hard to make the numbers work in a competitive environment.

Publishers who faced an “oh crap” moment when they saw print dollars turn into digital dimes are now going through round two as those digital dimes turn into mobile pennies:

At The New York Times, for instance, more than half its digital audience comes from mobile, yet just 10% of its digital-ad revenue is attributed to these devices.
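To put the “mobile pennies” point in per-reader terms, here is a rough back-of-the-envelope sketch using the two New York Times figures above. It assumes mobile is exactly 50% of the digital audience; the quote only says “more than half,” so the real gap is wider than this estimate. The function name is purely illustrative.

```python
# Rough revenue-per-reader comparison using the NYT figures quoted above.
# Assumption: mobile is exactly 50% of the digital audience. The source only
# says "more than half", so the true per-reader gap is larger than computed here.
mobile_audience_share = 0.50
mobile_revenue_share = 0.10


def revenue_per_user_ratio(audience_share: float, revenue_share: float) -> float:
    """Return mobile digital-ad revenue per reader divided by non-mobile revenue per reader."""
    mobile_per_user = revenue_share / audience_share
    other_per_user = (1 - revenue_share) / (1 - audience_share)
    return mobile_per_user / other_per_user


ratio = revenue_per_user_ratio(mobile_audience_share, mobile_revenue_share)
print(f"A mobile reader is worth about {ratio:.2f}x a non-mobile reader")
```

Under that 50% assumption a mobile reader brings in roughly one ninth of the ad revenue of a non-mobile reader; any audience share above half pushes the ratio lower still.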

If we lose some diversity in news it isn’t great, though it isn’t the end of the world. But what makes health such an important area is that it is literally a matter of life & death.

Its importance & the amount of money flowing through the market ensure there is heavy investment in misinforming the general population. The corruption is so bad that some people (who should know better) fault science instead.

@johnandrews @aaronwall it must be nice to say, you know what we’re keeping that traffic for ourselves, and nobody says a damn thing— Michael Gray (@graywolf) February 10, 2015

… and, only those who hate free speech, democracy & the country could possibly have anything negative to say about it. :D

Not to worry though. Any user trust built through the health knowledge graph can be monetized through a variety of other fantastic benevolent offers.

Once again, Google puts the user first.

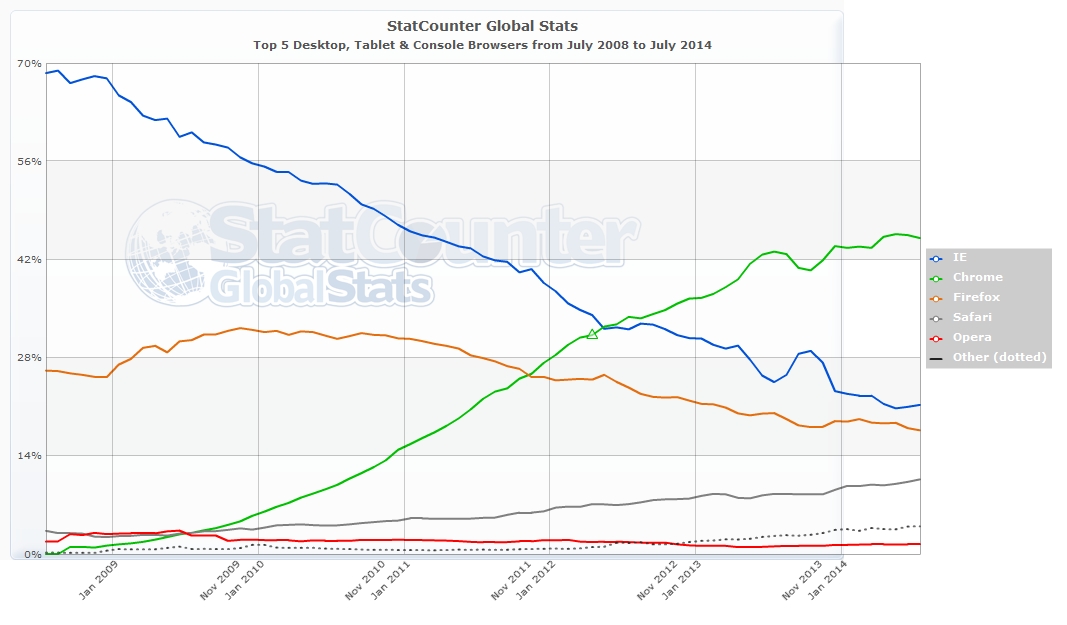

Mozilla Firefox Dumps Google in Favor of Yahoo! Search

Firefox users conduct over 100 billion searches per year & starting in December Yahoo! will be the default search choice in the US, under a new 5-year agreement.

Google has been the Firefox global search default since 2004. Our agreement came up for renewal this year, and we took this as an opportunity to review our competitive strategy and explore our options.

In evaluating our search partnerships, our primary consideration was to ensure our strategy aligned with our values of choice and independence, and positions us to innovate and advance our mission in ways that best serve our users and the Web. In the end, each of the partnership options available to us had strong, improved economic terms reflecting the significant value that Firefox brings to the ecosystem. But one strategy stood out from the rest.

In Russia they’ll default to Yandex & in China they’ll default to Baidu.

One weird thing about the announcement is that it makes no mention of Europe, where Google’s dominance is far greater. I wonder whether there was a quiet deal with Google in Europe, whether Mozilla still doesn’t have its European strategy in place, or what that strategy is.

Google paid Firefox roughly $300 million per year for the default search placement. Yahoo!’s annual search revenue is on the order of $1.8 billion per year, so if they are paying anywhere near $300 million a year, Yahoo! has to presume they will get at least a few percentage points of search market share lift for the deal to pay for itself.
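The break-even logic can be made concrete with the two figures above. This is a minimal sketch that assumes Yahoo! pays the full ~$300 million Google reportedly paid and that the added traffic monetizes at Yahoo!’s existing rate; it ignores the revenue share with Microsoft and any other costs, so the real hurdle is higher. The function name is illustrative only.

```python
# Break-even arithmetic for the Mozilla deal, using the figures quoted above.
# Assumptions: Yahoo! pays the full ~$300M/yr, its search revenue is ~$1.8B/yr,
# and new traffic monetizes at the existing rate. The Bing revenue share and
# other costs are ignored, so this understates the required lift.
annual_payment = 300e6
annual_search_revenue = 1.8e9


def required_revenue_lift(payment: float, revenue: float) -> float:
    """Fractional growth in search revenue needed just to cover the payment."""
    return payment / revenue


lift = required_revenue_lift(annual_payment, annual_search_revenue)
print(f"Search revenue must grow ~{lift:.1%} just to cover the fee")
```

Roughly a one-sixth jump in search revenue is needed before the deal breaks even, which is why Yahoo! would need a meaningful market share gain rather than a rounding error.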

It also makes sense that Yahoo! would be a more natural partner for Mozilla than Bing. If Mozilla partnered with Bing they would risk developer blowback from pent-up rage about Microsoft’s anti-competitive Internet Explorer business practices from 10 or 15 years ago.

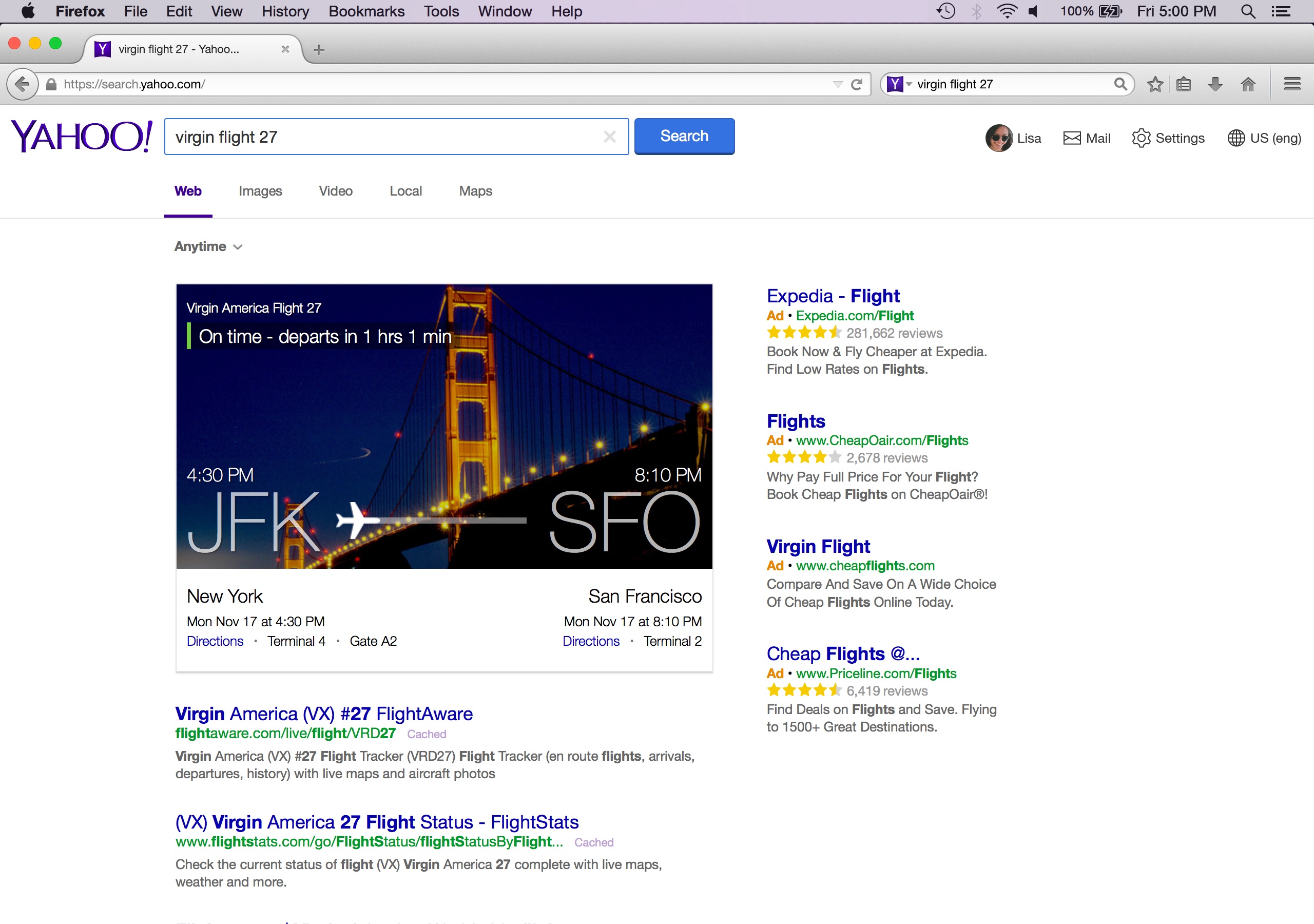

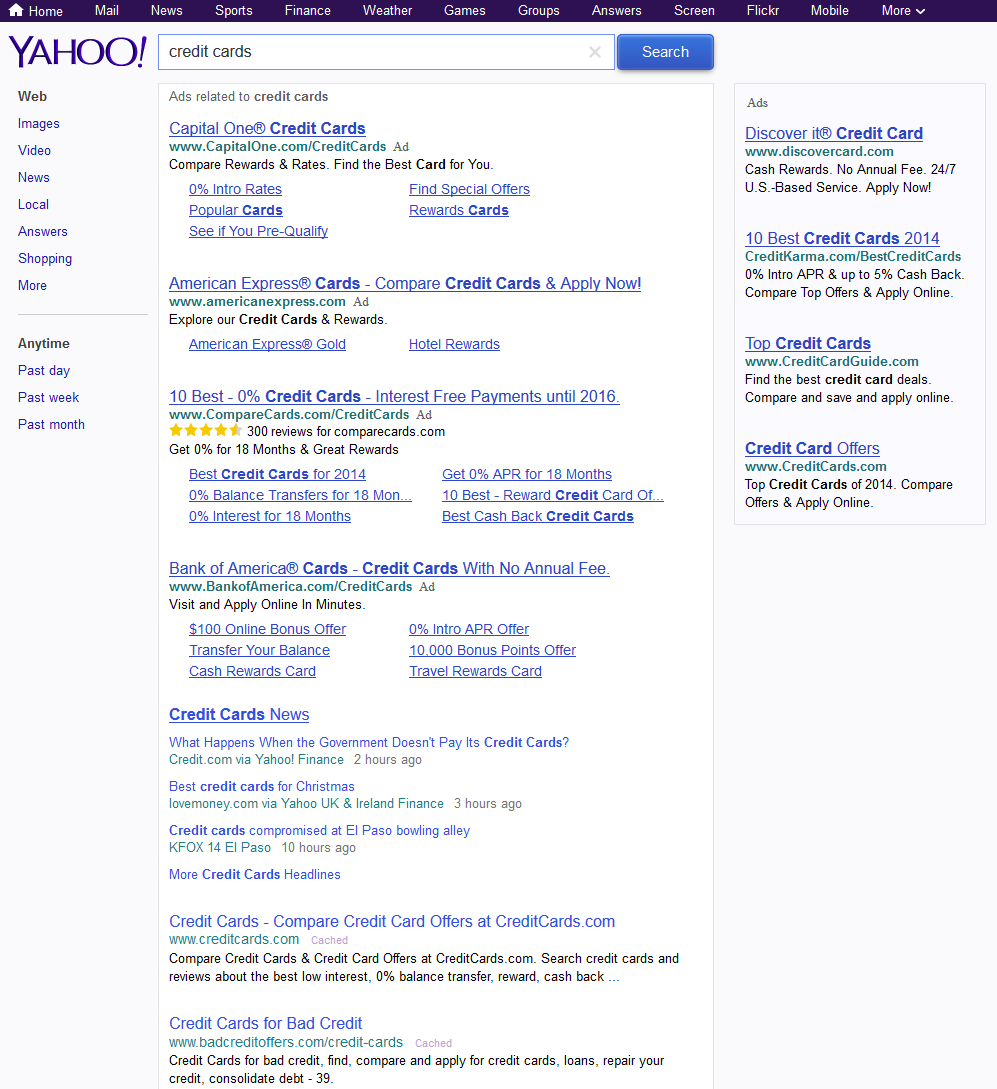

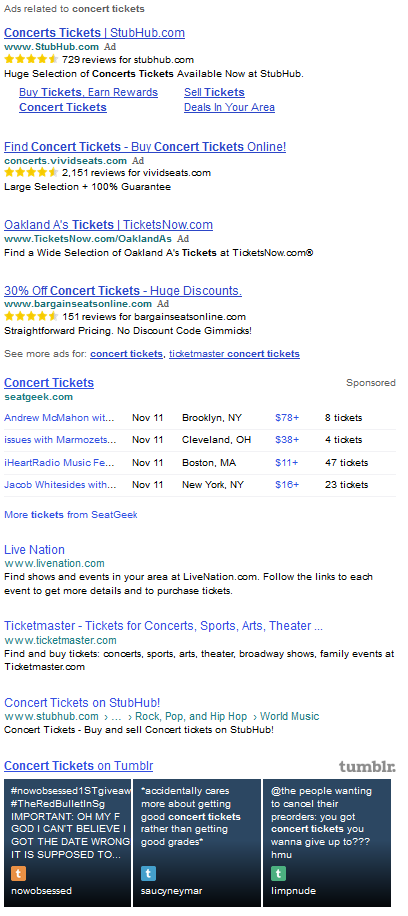

It is also worth mentioning our recent post about how Yahoo! boosts search RPM by using about half a dozen different tricks to favor paid search results while blending them in with the organic results.

| | Yahoo Ads | Yahoo Organic Results |
|---|---|---|
| Placement | top of the page | below the ads |
| Background color | none / totally blended | none |
| Ad label | small gray text to right of advertiser URL | n/a |
| Sitelinks | often 5 or 6 | usually none, unless branded query |
| Extensions | star ratings, etc. | typically none |
| Keyword bolding | on for title, description, URL & sitelinks | off |
| Underlines | ad title & sitelinks, URL on scroll over | off |
| Click target | entire background of ad area is clickable | only the listing title is clickable |

That said, the revenue-juicing tricks above weren’t present in the screenshot Mozilla shared of the clean new Yahoo! search layout they will offer Firefox users.

It shows red ad labels to the left of the ads and bolding on both the ads & organics.

Here is Marissa Mayer’s take:

At Yahoo, we believe deeply in search – it’s an area of investment and opportunity for us. It’s also a key growth area for us – we’ve now seen 11 consecutive quarters of growth in our search revenue on an ex-TAC basis. This partnership helps to expand our reach in search and gives us an opportunity to work even more closely with Mozilla to find ways to innovate in search, communications, and digital content. I’m also excited about the long-term framework we developed with Mozilla for future product integrations and expansion into international markets.

Our teams worked closely with Mozilla to build a clean, modern, and immersive search experience that will launch first to Firefox’s U.S. users in December and then to all Yahoo users in early 2015.

Even if Microsoft is only getting a slice of the revenues, this makes the Bing organic & ad ecosystem stronger while hurting Google. (Unless of course this is step 1 before Marissa finds a way to nix the Bing deal and partner back up with Google on search.) Yahoo! already has a partnership to run Google contextual ads. A potential Yahoo! Google search partnership was blocked back in 2008. Yahoo! also syndicates Bing search ads in a contextual format to other sites through Media.net, and has their Gemini Stream Ads product, which powers some of their search ads on mobile devices and, on content sites, serves as a native ad alternative to Outbrain and Taboola. When they syndicate the native ads to other sites, the ads are called Yahoo! Recommends.

Both Amazon and eBay have recently defected (at least partially) from the Google ad ecosystem. Amazon has also been pushing to extend their ad network out to other sites.

Greg Sterling worries this might be a revenue risk for Firefox: “there may be some monetary risk for Firefox in leaving Google.” Missing from that perspective:

- How much less Google paid Mozilla before a competitive bid from Microsoft lifted the most recent contract

- If Bing goes away, Google will drastically claw back the revenue share offered to other search partners.

- Google takes 45% from YouTube publishers

- Google took over a half-decade (and a lawsuit) to even share what their AdSense revenue share was

- look at eHow’s stock performance

- While Google’s search ad revenue has grown about 20% per year, their partner ad network revenues have stagnated as their traffic acquisition costs as a percent of revenue have dropped

The good thing about all the Google defections is that the more networks there are, the more opportunities there are to find one which works well / is a good fit for whatever you are selling, particularly as Google adds various force-purchased junk to their ad network – be it mobile “Enhanced” campaigns or destroying exact match keyword targeting.

Peak Google? Not Even Close

Search vs Native Ads

Google owns search, but are they a one trick pony?

A couple weeks ago Ben Thompson published an interesting article suggesting Google may follow IBM and Microsoft in peaking, perhaps with native ads becoming more dominant than online search ads.

According to Forrester, in a couple of years digital ad spend will overtake TV ad spend. In spite of the rise of sponsored content, native advertising isn’t even broken out as a category.

Part of the issue with native advertising is that some of it is hard to categorize. Some of it is obvious, but falls into multiple categories, like video ads on YouTube. Some of it is obvious, but relatively new & thus lacking in scale. Amazon is extending their payment services & Prime shipping deals to third party sites of brands like AllSaints & listing inventory from those sites on Amazon.com, selling them traffic on a CPC basis. Does that count as native advertising? What about a ticket broker or hotel booking site syndicating their inventory to a meta search site?

And while native is not broken out, Google already offers native ad management features in DoubleClick and has partnered with some of the more well known players like BuzzFeed.

The Penny Gap’s Impact on Search

Time intends to test paywalls on all of its major titles next year & they are working with third parties to integrate affiliate ads on sites like People.com.

The second link in the above sentence goes to an article which is behind a paywall. On Twitter I often link to WSJ articles which are behind a paywall. Any important information behind a paywall may quickly spread beyond it, but typically a competing free site which (re)reports on whatever is behind the paywall is shared more, spreads further on social, generates more additional coverage on forums and discussion sites like Hacker News, gets highlighted on aggregators like TechMeme, gets more links, ranks higher, and becomes the default/canonical source of the story.

Part of the rub of the penny gap is that the cost of the friction vastly exceeds the financial cost. Those who can route attention around the payment can typically make more by tracking and monetizing user behavior than they could by charging users incrementally a cent here and a nickel there.

Well known franchises are forced to offer a free version or they eventually cede their market position.

There are sites which do roll up subscriptions to a variety of sites at once, but some of them which had stub articles requiring payment to access, like Highbeam Research, got torched by Panda. If the barrier to entry to get to the content is too high, the engagement metrics are likely to be terrible & a penalty ensues. Even a general registration wall is too high of a barrier to entry for some sites. Google demands that whatever content is shown to them be visible to end users & if there is a mismatch that is considered cloaking, unless the mismatch is due to monetizing by using Google’s content locking consumer surveys.