Internet Wayback Machine Adds Historical TextDiff

The Wayback Machine has a cool new feature for looking at the historical changes of a web page.

The color scale shows how much a page has changed since it was last cached & you can select between any two documents to see how a page has changed ove…

Dofollow, Nofollow, Sponsored, UGC

A Change to Nofollow

Last month Google announced they were going to change how they treat nofollow, moving it from a directive toward a hint. As part of that they also announced the release of parallel attributes: rel="sponsored" for sponsored links & rel="ugc" for user generated content in areas like forums & blog comments.

Why not completely ignore such links, as had been the case with nofollow? Links contain valuable information that can help us improve search, such as how the words within links describe content they point at. Looking at all the links we encounter can also help us better understand unnatural linking patterns. By shifting to a hint model, we no longer lose this important information, while still allowing site owners to indicate that some links shouldn’t be given the weight of a first-party endorsement.
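For anyone auditing their own markup, here is a minimal sketch of how links might be bucketed by these rel values, using Python's standard `html.parser`. The `RelLinkCollector` class and the sample markup are hypothetical illustrations, and this says nothing about how Google itself processes the hints.

```python
from html.parser import HTMLParser

# rel tokens named in the announcement; links with none of them count as "followed".
HINT_TOKENS = ("sponsored", "ugc", "nofollow")


class RelLinkCollector(HTMLParser):
    """Hypothetical helper: groups hrefs by the rel hint tokens on each <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = {token: [] for token in HINT_TOKENS}
        self.links["followed"] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel is a space-separated token list, so one link can carry several hints.
        tokens = (attr_map.get("rel") or "").lower().split()
        matched = [t for t in HINT_TOKENS if t in tokens]
        for token in matched or ["followed"]:
            self.links[token].append(href)


# Made-up sample markup for illustration only.
sample_html = """
<a href="https://example.com/editorial">editorial</a>
<a rel="sponsored" href="https://example.com/ad">ad</a>
<a rel="ugc nofollow" href="https://example.com/comment">comment</a>
"""

collector = RelLinkCollector()
collector.feed(sample_html)
print(collector.links)
```

A link carrying more than one value, like the comment link above, lands in each matching bucket.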

In many emerging markets the mobile web is effectively the entire web. Few people create HTML links on the mobile web outside of social networks, where links are typically nofollow by default. This reduces the link signal available to Google, which leaves them either tracking what people do directly and/or shifting how the nofollow attribute is treated.

Google shifting how nofollow is treated is a blanket admission that Penguin & other elements of “the war on links” were perhaps a bit too effective and have started to take valuable signals away from Google.

Google has suggested the shift in how nofollow is treated will not lead to any additional blog comment spam. When they announced nofollow they suggested it would lower blog comment spam. Blog comment spam remains a growth market long after the gravity of the web has shifted away from blogs onto social networks.

Changing how nofollow is treated only makes any sort of external link analysis that much harder. Those who specialize in link audits (yuck!) have historically ignored nofollow links, but now that is one more set of things to look through. And the good news for professional link auditors is that this increases the price they can charge clients for the service.

Some nefarious types will notice when competitors get penalized & then fire up Xrummer to help promote the penalized site, ensuring that the link auditor bankrupts the competing business even faster than Google.

Links, Engagement, or Something Else…

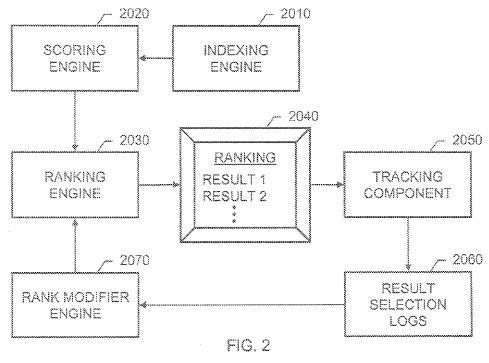

When Google was launched they didn’t own Chrome or Android. They were not yet pervasively spying on billions of people:

If, like most people, you thought Google stopped tracking your location once you turned off Location History in your account settings, you were wrong. According to an AP investigation published Monday, even if you disable Location History, the search giant still tracks you every time you open Google Maps, get certain automatic weather updates, or search for things in your browser.

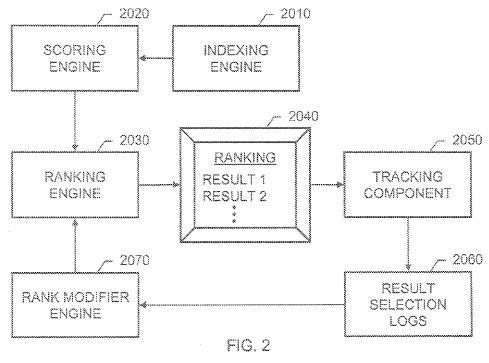

Thus Google had to rely on external signals as their primary ranking factor:

The reason that PageRank is interesting is that there are many cases where simple citation counting does not correspond to our common sense notion of importance. For example, if a web page has a link on the Yahoo home page, it may be just one link but it is a very important one. This page should be ranked higher than many pages with more links but from obscure places. PageRank is an attempt to see how good an approximation to “importance” can be obtained just from the link structure. … The definition of PageRank above has another intuitive basis in random walks on graphs. The simplified version corresponds to the standing probability distribution of a random walk on the graph of the Web. Intuitively, this can be thought of as modeling the behavior of a “random surfer”.
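To make the "random surfer" idea concrete, here is a rough sketch of PageRank computed by power iteration on a tiny made-up link graph. The damping factor of 0.85, the handling of pages with no outbound links, and the `toy_web` graph are assumptions for illustration; the quoted passage describes the simplified version, and none of this reflects how Google ranks pages today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power iteration over a dict mapping page -> list of pages it links to.

    Every link target must also appear as a key in `links`.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Each page first receives the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page: spread its rank evenly across all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical toy web: every other page links to "hub".
toy_web = {
    "hub": ["a"],
    "a": ["hub"],
    "b": ["hub"],
    "c": ["hub", "a"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the page with the most inbound links ends up with the highest score, and the single page it links to comes in close behind, since a link from an important page passes along much of that importance.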

Google’s reliance on links turned links into a commodity, which led to all sorts of fearmongering, manual penalties, nofollow and the Penguin update.

As Google collected more usage data those who overly focused on links often ended up scoring an own goal, creating sites which would not rank.

Google no longer invests heavily in fearmongering because it is no longer needed. Search is so complex most people can’t figure it out.

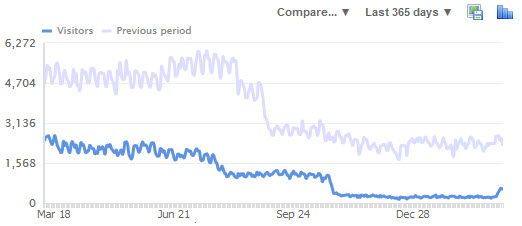

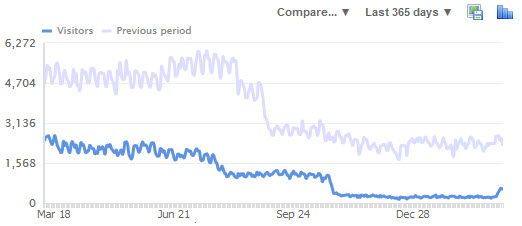

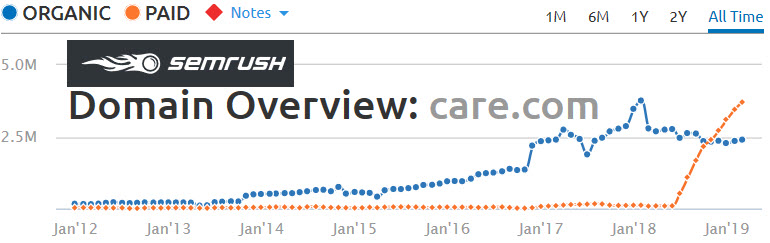

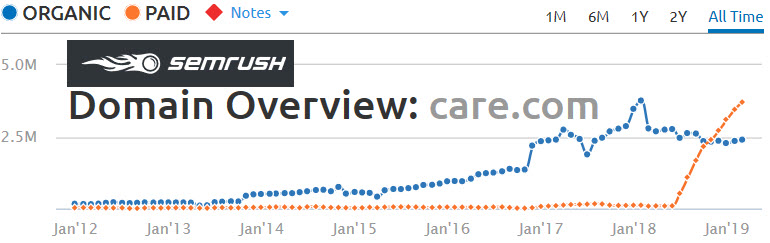

Many SEOs have reduced their link building efforts as Google dialed up weighting on user engagement metrics, though it appears the tide may now be heading in the other direction. Some sites which had decent engagement metrics but little in the way of link building slid on the update late last month.

As much as Google desires relevancy in the short term, they also prefer a system complex enough to external onlookers that reverse engineering feels impossible. If they discourage investment in SEO they increase AdWords growth while gaining greater control over algorithmic relevancy.

Google will soon collect even more usage data by routing Chrome users through their DNS service: “Google isn’t actually forcing Chrome users to only use Google’s DNS service, and so it is not centralizing the data. Google is instead configuring Chrome to use DoH connections by default if a user’s DNS service supports it.”

If traffic is routed through Google that is akin to them hosting the page in terms of being able to track many aspects of user behavior. It is akin to AMP or YouTube in terms of being able to track users and normalize relative engagement metrics.

Once Google is hosting the end-to-end user experience they can create a near infinite number of ranking signals given their advancement in computing power: “We developed a new 54-qubit processor, named “Sycamore”, that is comprised of fast, high-fidelity quantum logic gates, in order to perform the benchmark testing. Our machine performed the target computation in 200 seconds, and from measurements in our experiment we determined that it would take the world’s fastest supercomputer 10,000 years to produce a similar output.”

Relying on “one simple trick to…” sorts of approaches is frequently going to come up empty.

EMDs Kicked Once Again

I was one of the early promoters of exact match domains when the broader industry did not believe in them. I was also quick to mention when I felt the algorithms had moved in the other direction.

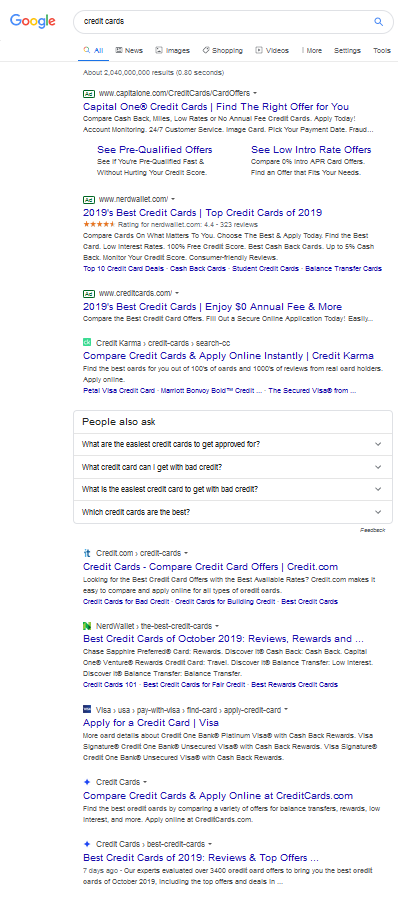

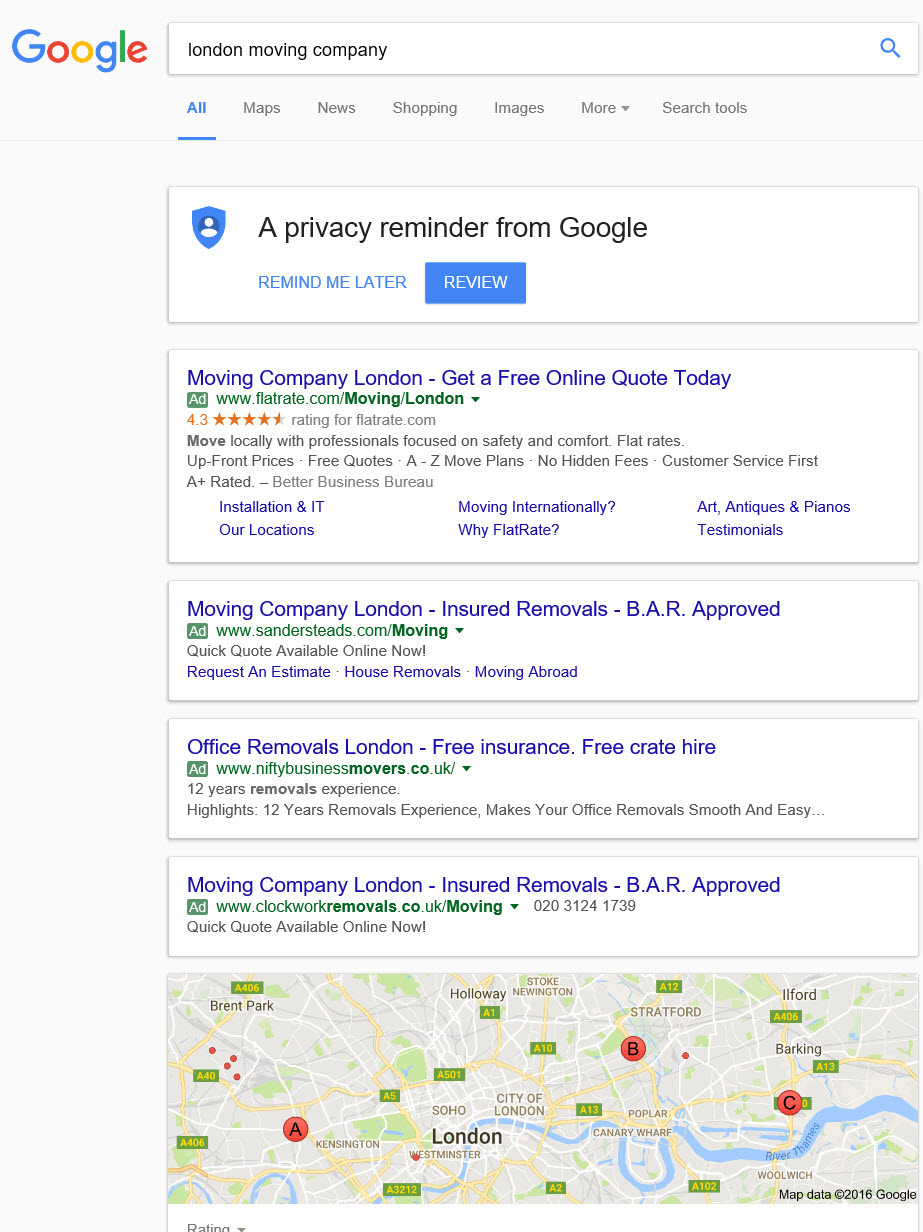

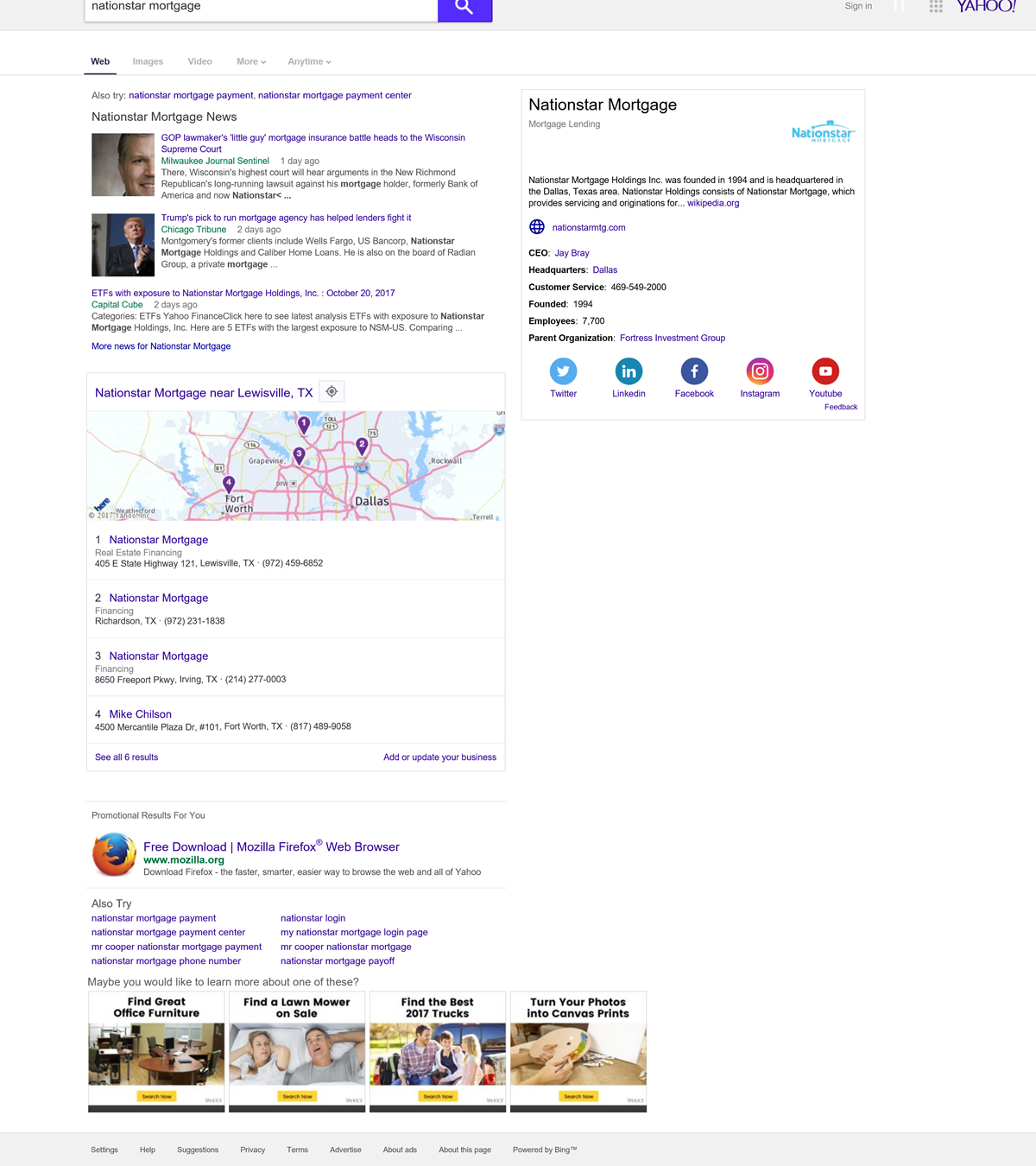

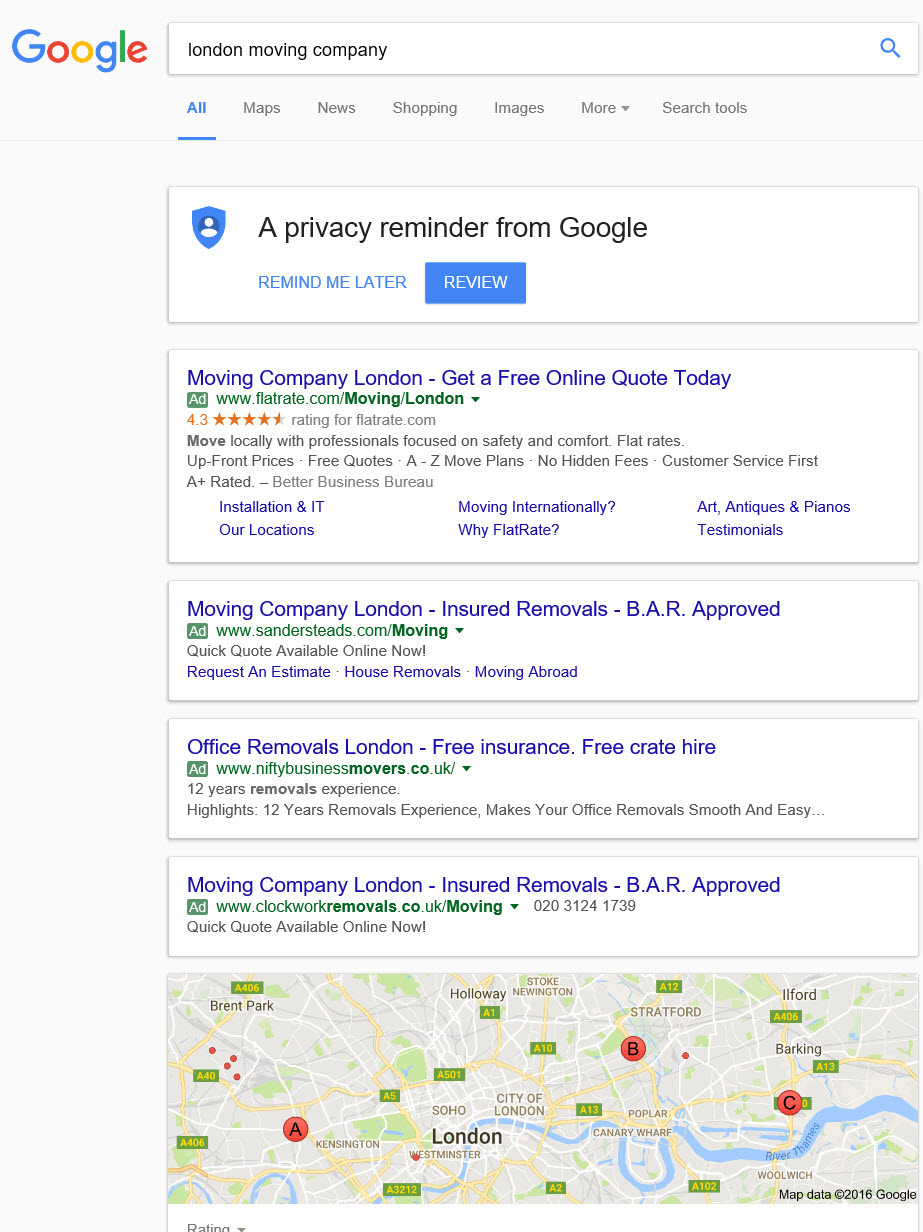

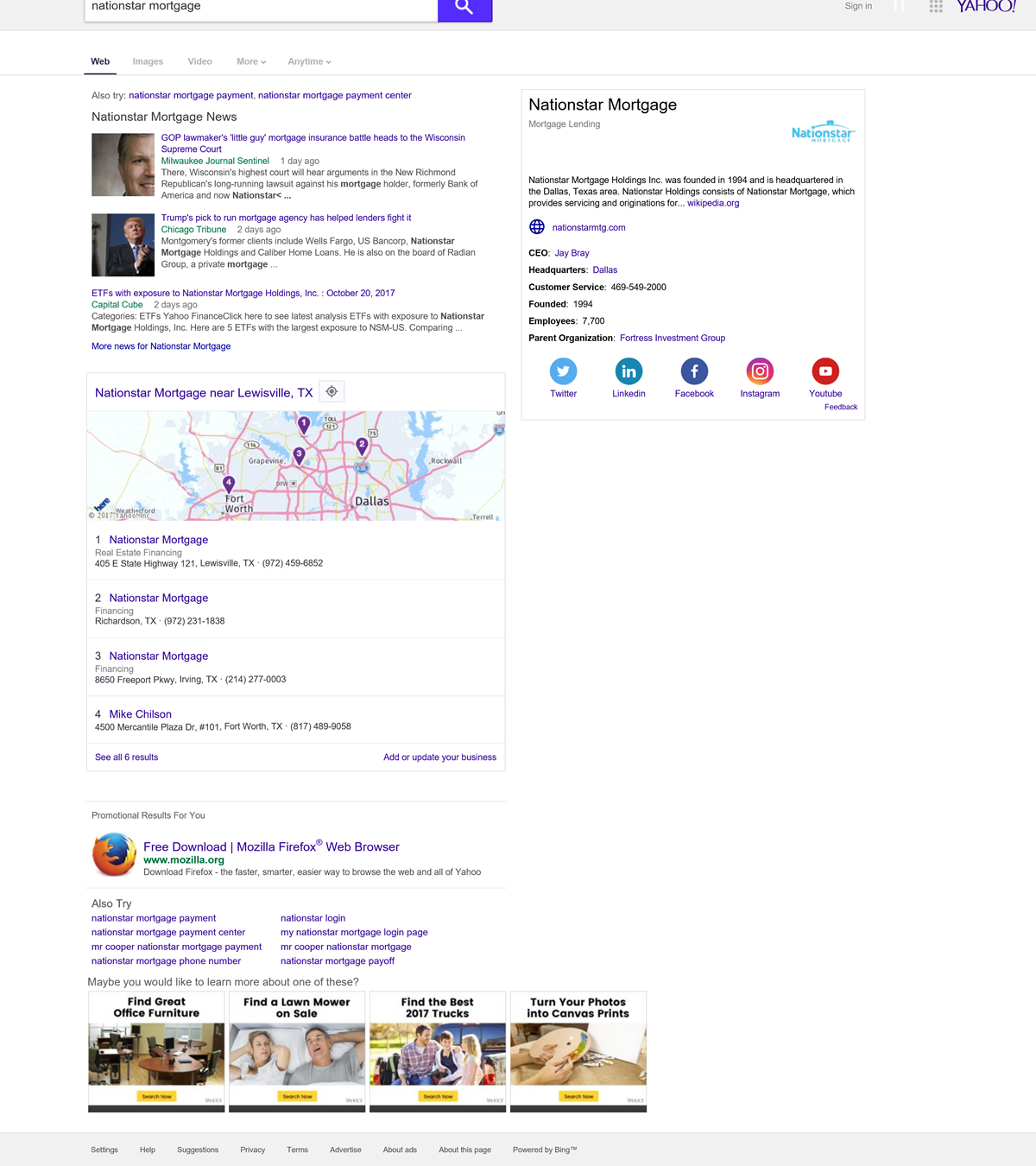

Google’s mobile layout, which they are now testing on desktop computers as well, replaces green domain names with gray words which are easy to miss. And the favicon icons sort of make the organic results look like ads. Any boost a domain name like CreditCards.ext might have garnered in the past due to matching the keyword has certainly gone away with this new layout that further depreciates the impact of exact-match domain names.

At one point in time CreditCards.com was viewed as a consumer destination. It is now viewed … below the fold.

If you have a memorable brand-oriented domain name the favicon can help offset the above impact somewhat, but matching keywords is becoming a much more precarious approach to sustaining rankings as the weight on brand awareness, user engagement & authority increase relative to the weight on anchor text.

New Keyword Tool

Our keyword tool is updated periodically. We recently updated it once more.

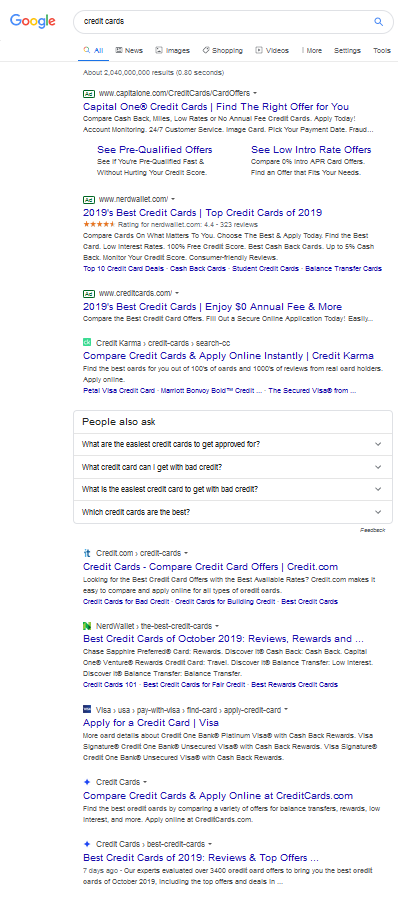

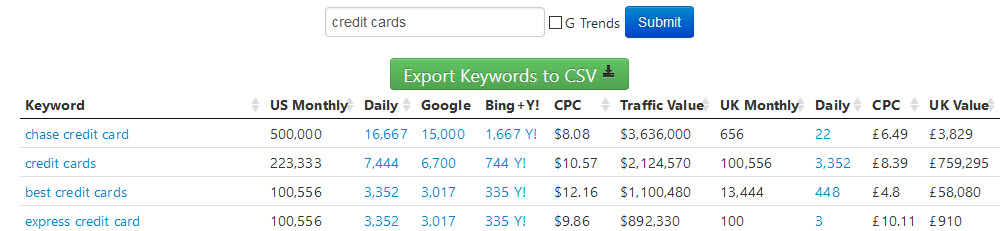

For comparison's sake, the old keyword tool looked like this:

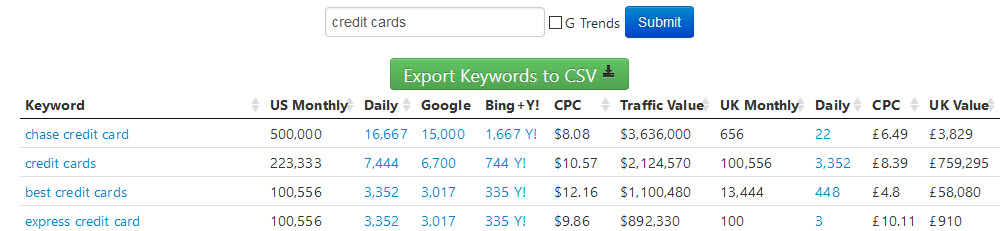

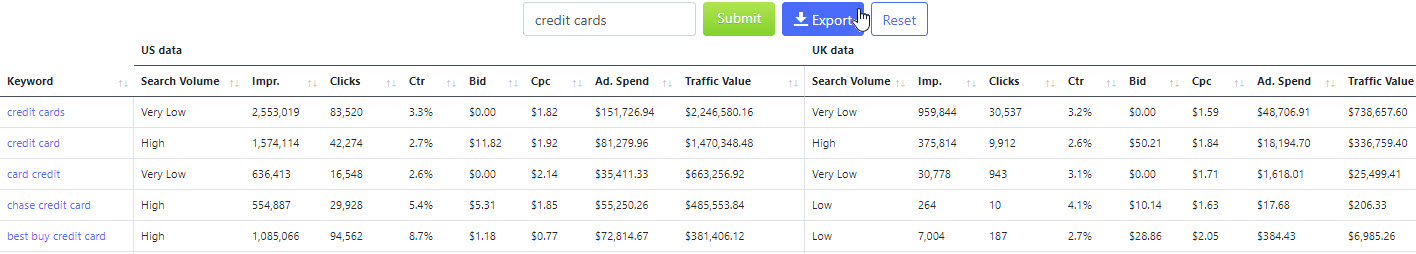

Whereas the new keyword tool looks like this:

The upsides of the new keyword tool are:

- fresher data from this year

- more granular data on ad bids vs click prices

- lists ad clickthrough rate

- more granular estimates of Google AdWords advertiser ad bids

- more emphasis on commercial oriented keywords

With the new columns of [ad spend] and [traffic value] here is how we estimate those.

- paid search ad spend: search ad clicks * CPC

- organic search traffic value: ad impressions * 0.5 * (100% – ad CTR) * CPC

The first of those two is rather self explanatory. The second is a bit more complex. It starts with the assumption that about half of all searches do not get any clicks, then it subtracts the paid clicks from the total remaining pool of clicks & multiplies that by the cost per click.
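As a worked example, the two estimates above can be sketched in a few lines of Python. The function names and the sample figures (10,000 ad impressions, a 4% ad clickthrough rate, a $2.50 CPC) are made up for illustration and are not data from the tool.

```python
def paid_search_ad_spend(ad_clicks, cpc):
    """Paid search ad spend: search ad clicks * CPC."""
    return ad_clicks * cpc


def organic_traffic_value(ad_impressions, ad_ctr, cpc, click_share=0.5):
    """Organic search traffic value: ad impressions * 0.5 * (100% - ad CTR) * CPC.

    click_share=0.5 encodes the assumption that about half of all searches
    do not get any clicks. ad_ctr is a fraction (0.04 means 4%).
    """
    return ad_impressions * click_share * (1.0 - ad_ctr) * cpc


# Hypothetical sample keyword.
ad_impressions = 10_000
ad_ctr = 0.04
cpc = 2.50

ad_clicks = ad_impressions * ad_ctr
spend = paid_search_ad_spend(ad_clicks, cpc)
value = organic_traffic_value(ad_impressions, ad_ctr, cpc)

print(f"paid search ad spend: ${spend:,.2f}")
print(f"organic search traffic value: ${value:,.2f}")
```

Because ad clickthrough rates are usually low, the organic estimate is driven almost entirely by impressions, CPC and the assumed 50% share of searches that get a click.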

The new data also has some drawbacks:

- Rather than listing search counts specifically it lists relative ranges like low, very high, etc.

- Since it tends to tilt more toward keywords with ad impressions, it may not have coverage for some longer tail informational keywords.

For any keyword where there is insufficient coverage we re-query the old keyword database for data & merge it across. You will know the data came from the new database if the first column says something like low or high, & that it came from the older database if there are specific search counts in the first column.

For a limited time we are still allowing access to both keyword tools, though we anticipate removing access to the old keyword tool in the future once we have collected plenty of feedback on the new keyword tool. Please feel free to leave your feedback in the below comments.

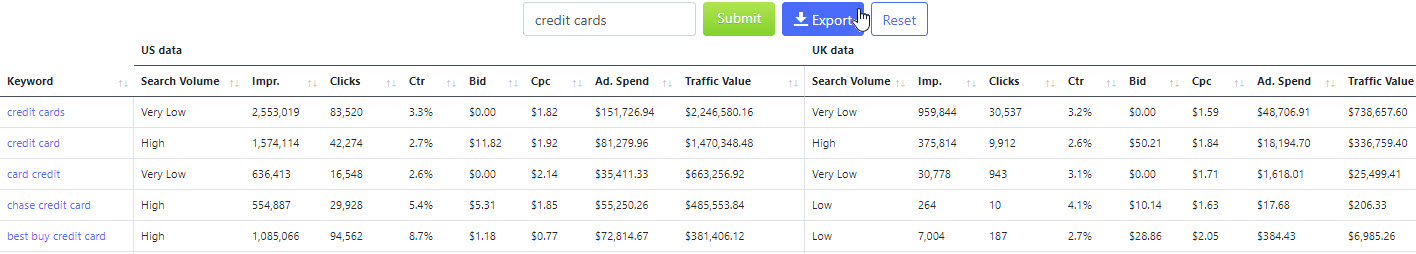

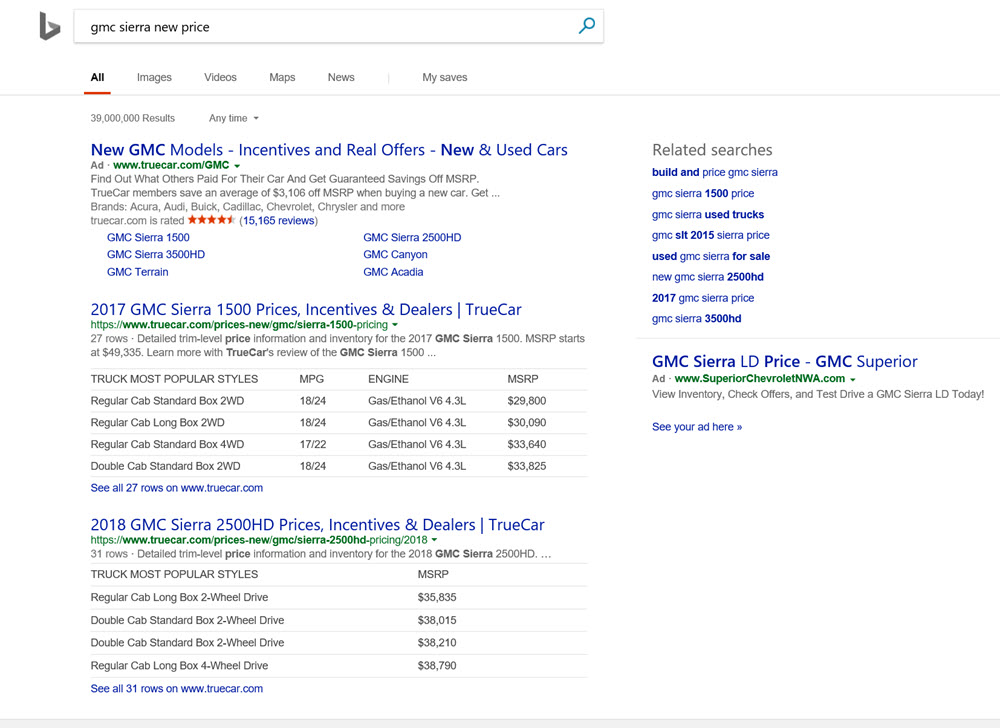

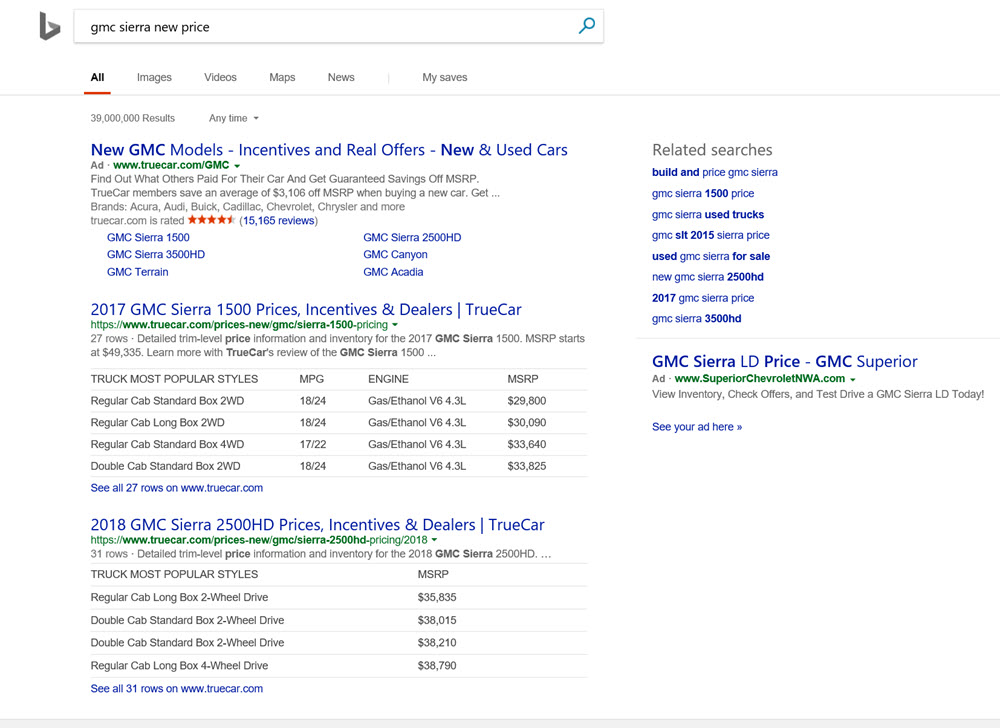

One of the cool features of the new keyword tool worth highlighting further is the difference between estimated bid prices & estimated click prices. In the following screenshot you can see how Amazon is estimated as having a much higher bid price than actual click price. That is largely because entities other than the official brand (which is being arbitraged by Google) have low keyword relevancy & thus require much higher bids to appear on competing popular trademark terms.

Historically, this difference between bid price & click price was a big source of noise on lists of the most valuable keywords.

Recently some advertisers have started complaining about the “Google shakedown” from how many brand-driven searches are simply leaving the .com part off of a web address in Chrome & then being forced to pay Google for their own pre-existing brand equity.

When Google puts 4 paid ads ahead of the first organic result for your own brand name, you’re forced to pay up if you want to be found. It’s a shakedown. It’s ransom. But at least we can have fun with it. Search for Basecamp and you may see this attached ad. pic.twitter.com/c0oYaBuahL

— Jason Fried (@jasonfried) September 3, 2019

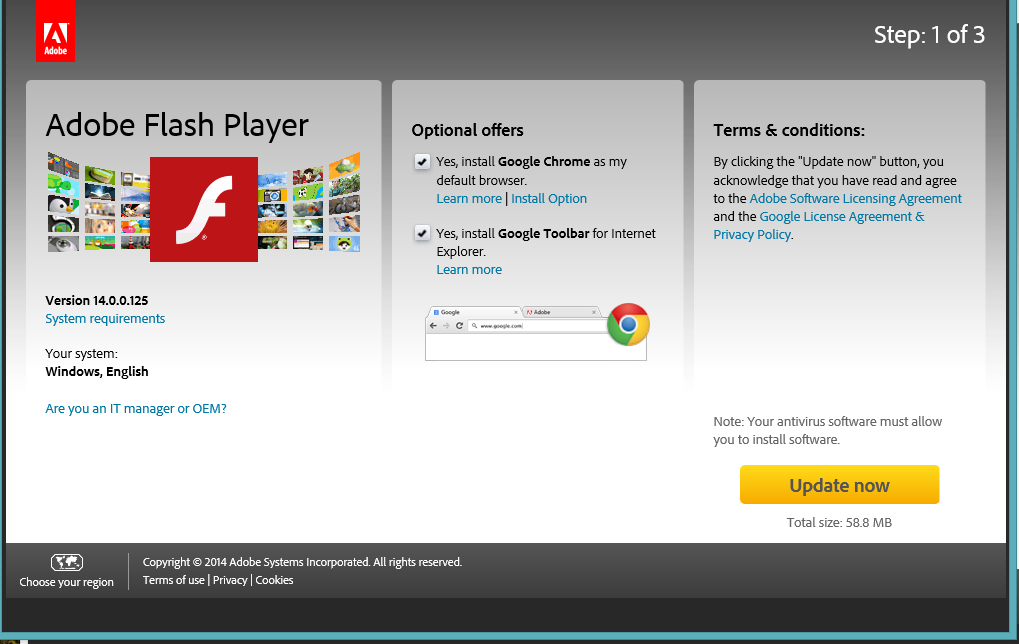

AMP’d Up for Recaptcha

Beyond search, Google controls the leading distributed ad network, the leading mobile OS, the leading web browser, the leading email client, the leading web analytics platform & the leading free video hosting site.

They win a lot.

And they take winnings from one market & leverage them into manipulating adjacent markets.

Embrace. Extend. Extinguish.

Imagine taking a universal open standard that has zero problems with it and then stripping it down to it’s most basic components and then prepending each element with your own acronym. Then spend years building and recreating what has existed for decades. That is @amphtml

— Jon Henshaw (@henshaw) April 4, 2019

AMP is an utterly unnecessary invention designed to further shift power to Google while disenfranchising publishers. From the very start it had many issues with basic things like supporting JavaScript, double counting unique users (no reason to fix broken stats if they drive adoption!), not supporting third party ad networks, not showing publisher domain names, and just generally being a useless layer of sunk cost technical overhead that provides literally no real value.

Over time they have corrected some of these catastrophic deficiencies, but if it provided real value, they wouldn’t have needed to force adoption with preferential placement in their search results. They force the bundling because AMP sucks.

Absurdity knows no bounds. Googlers suggest: “AMP isn’t another “channel” or “format” that’s somehow not the web. It’s not a SEO thing. It’s not a replacement for HTML. It’s a web component framework that can power your whole site. … We, the AMP team, want AMP to become a natural choice for modern web development of content websites, and for you to choose AMP as framework because it genuinely makes you more productive.”

Meanwhile some newspapers have about a dozen employees who work on re-formatting content for AMP:

The AMP development team now keeps track of whether AMP traffic drops suddenly, which might indicate pages are invalid, and it can react quickly.

All this adds expense, though. There are setup, development and maintenance costs associated with AMP, mostly in the form of time. After implementing AMP, the Guardian realized the project needed dedicated staff, so it created an 11-person team that works on AMP and other aspects of the site, drawing mostly from existing staff.

Feeeeeel the productivity!

Some content types (particularly user generated content) can be unpredictable & circuitous. For many years forum websites would use keywords embedded in the search referral to highlight relevant parts of the page. Keyword (not provided) largely destroyed that & then it became a competitive feature for AMP: “If the Featured Snippet links to an AMP article, Google will sometimes automatically scroll users to that section and highlight the answer in orange.”

That would perhaps be a single area where AMP was more efficient than the alternative. But it is only so because Google destroyed the alternative by stripping keyword referrers from search queries.

The power dynamics of AMP are ugly:

“I see them as part of the effort to normalise the use of the AMP Carousel, which is an anti-competitive land-grab for the web by an organisation that seems to have an insatiable appetite for consuming the web, probably ultimately to it’s own detriment. … This enables Google to continue to exist after the destination site (eg the New York Times) has been navigated to. Essentially it flips the parent-child relationship to be the other way around. … As soon as a publisher blesses a piece of content by packaging it (they have to opt in to this, but see coercion below), they totally lose control of its distribution. … I’m not that smart, so it’s surely possible to figure out other ways of making a preload possible without cutting off the content creator from the people consuming their content. … The web is open and decentralised. We spend a lot of time valuing the first of these concepts, but almost none trying to defend the second. Google knows, perhaps better than anyone, how being in control of the user is the most monetisable position, and having the deepest pockets and the most powerful platform to do so, they have very successfully inserted themselves into my relationship with millions of other websites. … In AMP, the support for paywalls is based on a recommendation that the premium content be included in the source of the page regardless of the user’s authorisation state. … These policies demonstrate contempt for others’ right to freely operate their businesses.”

After enough publishers adopted AMP, Google was able to turn their mobile app’s homepage into an interactive news feed below the search box. And inside that news feed Google gets to distribute MOAR ads while 0% of the revenue from those ads finds its way to the publishers whose content is used to make up the feed.

Appropriate appropriation. :D

Each additional layer of technical cruft is another cost center. Things that sound appealing at first blush may not be:

The way you verify your identity to Let’s Encrypt is the same as with other certificate authorities: you don’t really. You place a file somewhere on your website, and they access that file over plain HTTP to verify that you own the website. The one attack that signed certificates are meant to prevent is a man-in-the-middle attack. But if someone is able to perform a man-in-the-middle attack against your website, then he can intercept the certificate verification, too. In other words, Let’s Encrypt certificates don’t stop the one thing they’re supposed to stop. And, as always with the certificate authorities, a thousand murderous theocracies, advertising companies, and international spy organizations are allowed to impersonate you by design.

Anything that is easy to implement & widely marketed often has costs added to it in the future as the entity moves to monetize the service.

This is a private equity firm buying up multiple hosting control panels & then adjusting prices.

This is Google Maps drastically changing their API terms.

This is Facebook charging you for likes to build an audience, giving your competitors access to those likes as an addressable audience to advertise against, and then charging you once more to boost the reach of your posts.

This is Grubhub creating shadow websites on your behalf and charging you for every transaction created by the gravity of your brand.

Shivane believes GrubHub purchased her restaurant’s web domain to prevent her from building her own online presence. She also believes the company may have had a special interest in owning her name because she processes a high volume of orders. … it appears GrubHub has set up several generic, templated pages that look like real restaurant websites but in fact link only to GrubHub. These pages also display phone numbers that GrubHub controls. The calls are forwarded to the restaurant, but the platform records each one and charges the restaurant a commission fee for every order

Settling for the easiest option drives a lack of differentiation, embeds additional risk & once the dominant player has enough marketshare they’ll change the terms on you.

Small gains in short term margins for massive increases in fragility.

“Closed platforms increase the chunk size of competition & increase the cost of market entry, so people who have good ideas, it is a lot more expensive for their productivity to be monetized. They also don’t like standardization … it looks like rent seeking behaviors on top of friction” – Gabe Newell

The other big issue is that platforms which run out of growth space in their core market may break integrations with adjacent service providers, as each wants to grow by eating the other’s market.

Those who look at SaaS business models through the eyes of a seasoned investor will better understand how markets are likely to change:

“I’d argue that many of today’s anointed tech “disruptors” are doing little in the way of true disruption. … When investors used to get excited about a SAAS company, they typically would be describing a hosted multi-tenant subscription-billed piece of software that was replacing a ‘legacy’ on-premise perpetual license solution in the same target market (i.e. ERP, HCM, CRM, etc.). Today, the terms SAAS and Cloud essentially describe the business models of every single public software company.

Most platform companies are initially required to operate at low margins in order to buy growth of their category & own their category. Then when they are valued on that, they quickly need to jump across to adjacent markets to grow into the valuation:

Twilio has no choice but to climb up the application stack. This is a company whose ‘disruption’ is essentially great API documentation and gangbuster SEO spend built on top of a highly commoditized telephony aggregation API. They have won by marketing to DevOps engineers. With all the hype around them, you’d think Twilio invented the telephony API, when in reality what they did was turn it into a product company. Nobody had thought of doing this let alone that this could turn into a $17 billion company because simply put the economics don’t work. And to be clear they still don’t. But Twilio’s genius CEO clearly gets this. If the market is going to value robocalls, emergency sms notifications, on-call pages, and carrier fee passed through related revenue growth in the same way it does ‘subscription’ revenue from Atlassian or ServiceNow, then take advantage of it while it lasts.

Large platforms offering temporary subsidies to ensure they dominate their categories & companies like SoftBank spraying capital across the markets are causing massive shifts in valuations:

I also think if you look closely at what is celebrated today as innovation you often find models built on hidden subsidies. … I’d argue the very distributed nature of microservices architecture and API-first product companies means addressable market sizes and unit economics assumptions should be even more carefully scrutinized. … How hard would it be to create an Alibaba today if someone like SoftBank was raining money into such a greenfield space? Excess capital would lead to destruction and likely subpar returns. If capital was the solution, the 1.5 trillion that went into telcos in late ’90s wouldn’t have led to a massive bust. Would a Netflix be what it is today if a SoftBank was pouring billions into streaming content startups right as the experiment was starting? Obviously not. Scarcity of capital is another often underappreciated part of the disruption equation. Knowing resources are finite leads to more robust models. … This convergence is starting to manifest itself in performance. Disney is up 30% over the last 12 months while Netflix is basically flat. This may not feel like a bubble sign to most investors, but from my standpoint, it’s a clear evidence of the fact that we are approaching a something has got to give moment for the way certain businesses are valued.”

Circling back to Google’s AMP, it has a cousin called Recaptcha.

Recaptcha is another AMP-like trojan horse:

According to tech statistics website Built With, more than 650,000 websites are already using reCaptcha v3; overall, there are at least 4.5 million websites use reCaptcha, including 25% of the top 10,000 sites. Google is also now testing an enterprise version of reCaptcha v3, where Google creates a customized reCaptcha for enterprises that are looking for more granular data about users’ risk levels to protect their site algorithms from malicious users and bots. … According to two security researchers who’ve studied reCaptcha, one of the ways that Google determines whether you’re a malicious user or not is whether you already have a Google cookie installed on your browser. … To make this risk-score system work accurately, website administrators are supposed to embed reCaptcha v3 code on all of the pages of their website, not just on forms or log-in pages.

About a month ago when logging into Bing Ads I saw Recaptcha on the login page & couldn’t believe they’d give Google control at that access point. I think they got rid of that, but lots of companies are perhaps shooting themselves in the foot through a combination of over-reliance on Google infrastructure AND sloppy implementation.

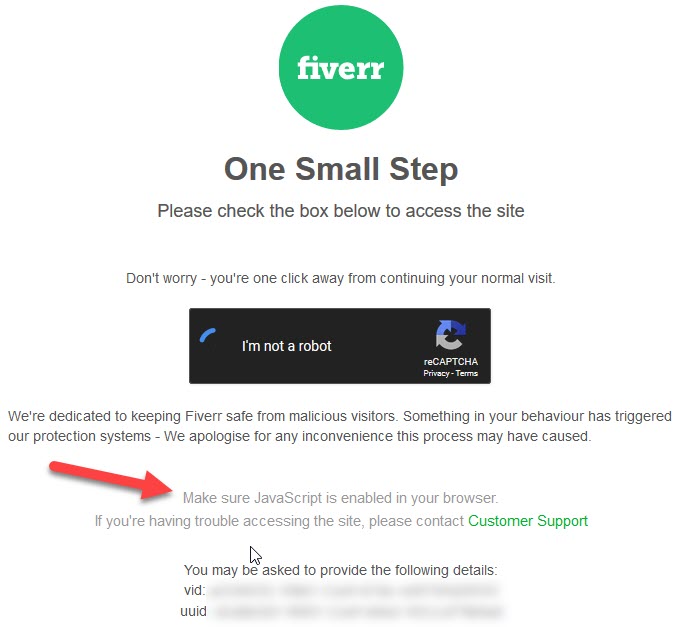

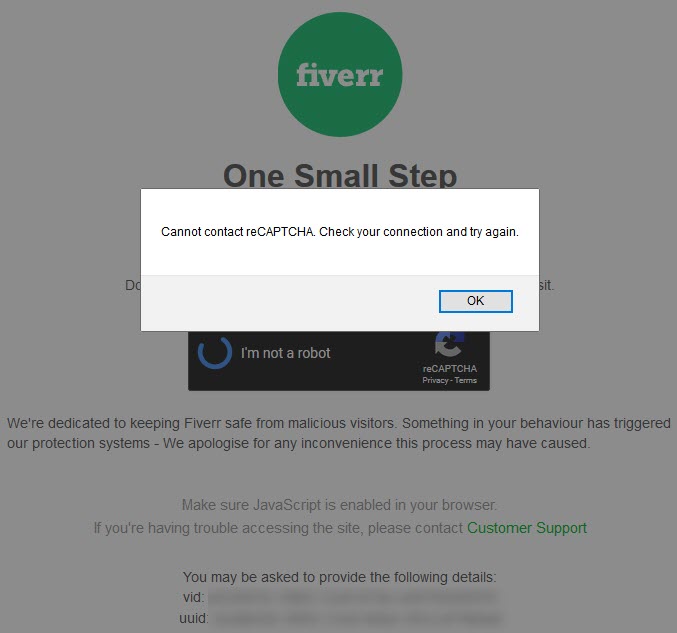

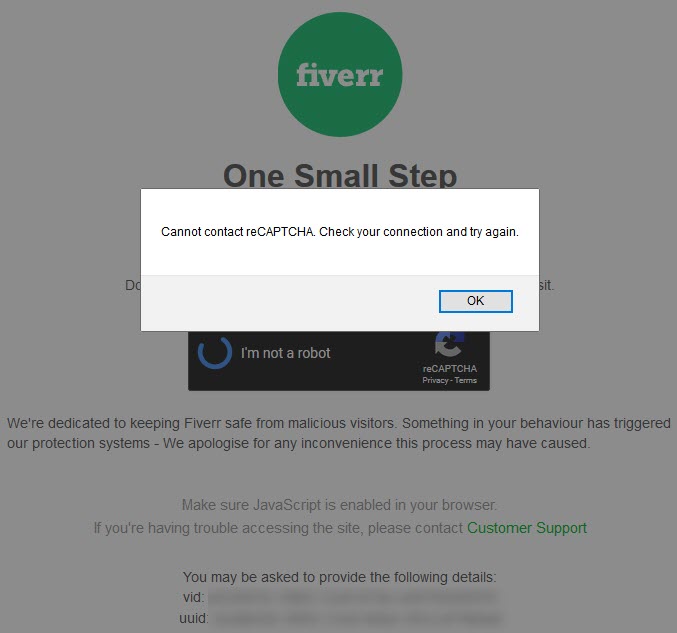

Today when making a purchase on Fiverr, after converting, I got some of this action:

Hmm. Maybe I will enable JavaScript and try again.

Oooops.

That is called snatching defeat from the jaws of victory.

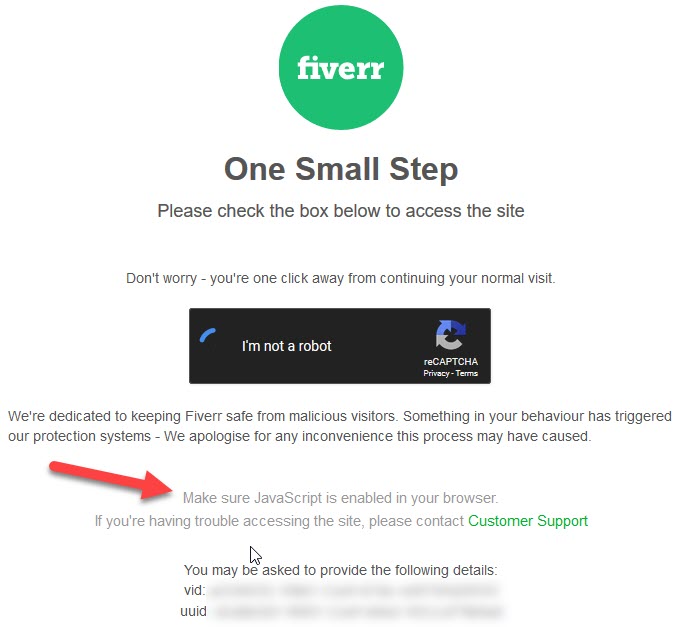

My account is many years old. My payment type on record has been used for years. I have ordered from the particular seller about a dozen times over the years. And suddenly because my web browser had JavaScript turned off I was deemed a security risk of some sort for making an utterly ordinary transaction I have already completed about a dozen times.

On AMP, JavaScript was the devil. And on desktop, not having JavaScript was the devil.

Pro tip: Ecommerce websites that see substandard conversion rates from using Recaptcha can boost their overall ecommerce revenue by buying more Google AdWords ads.

—

As more of the infrastructure stack is driven by AI software there is going to be a very real opportunity for many people to become deplatformed across the web on an utterly arbitrary basis. That tech companies like Facebook also want to create digital currencies on top of the leverage they already have only makes the proposition that much scarier.

If the tech platforms host copies of our sites, process the transactions & even create their own currencies, how will we know what level of value they are adding versus what they are extracting?

Who measures the measurer?

And when the economics turn negative, what will we do if we are hooked into an ecosystem we can’t spend additional capital to get out of when things head south?

AMP’d Up for Recaptcha

Beyond search Google controls the leading distributed ad network, the leading mobile OS, the leading web browser, the leading email client, the leading web analytics platform & the leading free video hosting site.

They win a lot.

And they take winnings from one market & leverage them into manipulating adjacent markets.

Embrace. Extend. Extinguish.

Imagine taking a universal open standard that has zero problems with it and then stripping it down to it’s most basic components and then prepending each element with your own acronym. Then spend years building and recreating what has existed for decades. That is @amphtml— Jon Henshaw (@henshaw) April 4, 2019

AMP is an utterly unnecessary invention designed to further shift power to Google while disenfranchising publishers. From the very start it had many issues with basic things like supporting JavaScript, double counting unique users (no reason to fix broken stats if they drive adoption!), not supporting third party ad networks, not showing publisher domain names, and just generally being a useless layer of sunk cost technical overhead that provides literally no real value.

Over time they have corrected some of these catastrophic deficiencies, but if it provided real value, they wouldn’t have needed to force adoption with preferential placement in their search results. They force the bundling because AMP sucks.

Absurdity knows no bounds. Googlers suggest: “AMP isn’t another “channel” or “format” that’s somehow not the web. It’s not a SEO thing. It’s not a replacement for HTML. It’s a web component framework that can power your whole site. … We, the AMP team, want AMP to become a natural choice for modern web development of content websites, and for you to choose AMP as framework because it genuinely makes you more productive.”

Meanwhile some newspapers have about a dozen employees who work on re-formatting content for AMP:

The AMP development team now keeps track of whether AMP traffic drops suddenly, which might indicate pages are invalid, and it can react quickly.

All this adds expense, though. There are setup, development and maintenance costs associated with AMP, mostly in the form of time. After implementing AMP, the Guardian realized the project needed dedicated staff, so it created an 11-person team that works on AMP and other aspects of the site, drawing mostly from existing staff.

Feeeeeel the productivity!

Some content types (particularly user generated content) can be unpredictable & circuitous. For many years forum websites would use keywords embedded in the search referrer to highlight relevant parts of the page. Keyword (not provided) largely destroyed that & then it became a competitive feature for AMP: “If the Featured Snippet links to an AMP article, Google will sometimes automatically scroll users to that section and highlight the answer in orange.”

That would perhaps be a single area where AMP was more efficient than the alternative. But it is only so because Google destroyed the alternative by stripping keyword referrers from search queries.
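For anyone who never saw the old forum trick in action, here is a minimal sketch of it in Python. It assumes the search engine still passes the query in a `q` parameter on the referrer URL (which is exactly what keyword (not provided) took away); the function names and the `<mark>` wrapper are my own illustration, not any particular forum software's code.

```python
import html
import re
from urllib.parse import parse_qs, urlparse


def search_terms_from_referrer(referrer: str) -> list[str]:
    """Return the lowercased words from the referrer's `q` parameter, or []."""
    query = parse_qs(urlparse(referrer).query)
    phrase = query.get("q", [""])[0]
    return re.findall(r"\w+", phrase.lower())


def highlight(text: str, terms: list[str]) -> str:
    """HTML-escape `text` and wrap whole-word matches of `terms` in <mark>."""
    if not terms:
        return html.escape(text)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    # With a single capture group, re.split alternates between
    # non-matching text (even indexes) and matched terms (odd indexes).
    parts = pattern.split(text)
    out = []
    for i, part in enumerate(parts):
        piece = html.escape(part)
        out.append(f"<mark>{piece}</mark>" if i % 2 else piece)
    return "".join(out)


terms = search_terms_from_referrer("https://www.google.com/search?q=fix+leaky+faucet")
print(highlight("How to fix a Leaky faucet & more", terms))
```

When the referrer arrives as a bare `https://www.google.com/` the term list comes back empty and the page renders with no highlights, which is the state most sites have been in since the keywords were stripped.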

The power dynamics of AMP are ugly:

“I see them as part of the effort to normalise the use of the AMP Carousel, which is an anti-competitive land-grab for the web by an organisation that seems to have an insatiable appetite for consuming the web, probably ultimately to it’s own detriment. … This enables Google to continue to exist after the destination site (eg the New York Times) has been navigated to. Essentially it flips the parent-child relationship to be the other way around. … As soon as a publisher blesses a piece of content by packaging it (they have to opt in to this, but see coercion below), they totally lose control of its distribution. … I’m not that smart, so it’s surely possible to figure out other ways of making a preload possible without cutting off the content creator from the people consuming their content. … The web is open and decentralised. We spend a lot of time valuing the first of these concepts, but almost none trying to defend the second. Google knows, perhaps better than anyone, how being in control of the user is the most monetisable position, and having the deepest pockets and the most powerful platform to do so, they have very successfully inserted themselves into my relationship with millions of other websites. … In AMP, the support for paywalls is based on a recommendation that the premium content be included in the source of the page regardless of the user’s authorisation state. … These policies demonstrate contempt for others’ right to freely operate their businesses.

After enough publishers adopted AMP Google was able to turn their mobile app’s homepage into an interactive news feed below the search box. And inside that news feed Google gets to distribute MOAR ads while 0% of the revenue from those ads finds its way to the publishers whose content is used to make up the feed.

Appropriate appropriation. :D

Thank you for your content!!!

Well this issue (bug?) is going to cause a sh*t storm… Google @AMPhtml not allowing people to click through to full site? You can’t see but am clicking the link in top right iOS Chrome 74.0.3729.155 pic.twitter.com/dMt5QSW9fu— Scotch.io (@scotch_io) June 11, 2019

The mainstream media is waking up to AMP being a trap, but their neck is already in it:

European and American tech, media and publishing companies, including some that originally embraced AMP, are complaining that the Google-backed technology, which loads article pages in the blink of an eye on smartphones, is cementing the search giant’s dominance on the mobile web.

Each additional layer of technical cruft is another cost center. Things that sound appealing at first blush may not be:

The way you verify your identity to Let’s Encrypt is the same as with other certificate authorities: you don’t really. You place a file somewhere on your website, and they access that file over plain HTTP to verify that you own the website. The one attack that signed certificates are meant to prevent is a man-in-the-middle attack. But if someone is able to perform a man-in-the-middle attack against your website, then he can intercept the certificate verification, too. In other words, Let’s Encrypt certificates don’t stop the one thing they’re supposed to stop. And, as always with the certificate authorities, a thousand murderous theocracies, advertising companies, and international spy organizations are allowed to impersonate you by design.
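For readers who have not set this up, the "place a file somewhere on your website" step in the quote corresponds to the ACME HTTP-01 challenge. The sketch below shows roughly what gets fetched and what the file contains, based on my reading of RFC 8555 and RFC 7638. The domain, token and key values are made-up placeholders, and a real ACME client handles all of this for you.

```python
import base64
import hashlib
import json


def jwk_thumbprint(jwk_required_members: dict) -> str:
    """SHA-256 thumbprint of a JWK, base64url encoded without padding.

    Expects only the required members for the key type
    (for an EC key: crv, kty, x, y), serialized with sorted keys
    and no whitespace.
    """
    canonical = json.dumps(jwk_required_members, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def http01_challenge(domain: str, token: str, account_jwk: dict) -> tuple[str, str]:
    """Return (URL the CA requests over plain HTTP, expected response body)."""
    url = f"http://{domain}/.well-known/acme-challenge/{token}"
    body = f"{token}.{jwk_thumbprint(account_jwk)}"
    return url, body


# Placeholder values for illustration only.
example_jwk = {"kty": "EC", "crv": "P-256", "x": "abc", "y": "def"}
url, body = http01_challenge("example.com", "tok123", example_jwk)
print(url)
print(body)
```

The URL scheme is `http://` on purpose: the validation request described in the quote happens before the site has a certificate to serve.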

Anything that is easy to implement & widely marketed often has costs added to it in the future as the entity moves to monetize the service.

This is a private equity firm buying up multiple hosting control panels & then adjusting prices.

This is Google Maps drastically changing their API terms.

This is Facebook charging you for likes to build an audience, giving your competitors access to those likes as an addressable audience to advertise against, and then charging you once more to boost the reach of your posts.

This is Grubhub creating shadow websites on your behalf and charging you for every transaction created by the gravity of your brand.

Shivane believes GrubHub purchased her restaurant’s web domain to prevent her from building her own online presence. She also believes the company may have had a special interest in owning her name because she processes a high volume of orders. … it appears GrubHub has set up several generic, templated pages that look like real restaurant websites but in fact link only to GrubHub. These pages also display phone numbers that GrubHub controls. The calls are forwarded to the restaurant, but the platform records each one and charges the restaurant a commission fee for every order

Settling for the easiest option drives a lack of differentiation, embeds additional risk & once the dominant player has enough market share they’ll change the terms on you.

Small gains in short term margins for massive increases in fragility.

“Closed platforms increase the chunk size of competition & increase the cost of market entry, so people who have good ideas, it is a lot more expensive for their productivity to be monetized. They also don’t like standardization … it looks like rent seeking behaviors on top of friction” – Gabe Newell

The other big issue is platforms that run out of growth space in their core market may break integrations with adjacent service providers as each wants to grow by eating the other’s market.

Those who look at SaaS business models through the eyes of a seasoned investor will better understand how markets are likely to change:

“I’d argue that many of today’s anointed tech “disruptors” are doing little in the way of true disruption. … When investors used to get excited about a SAAS company, they typically would be describing a hosted multi-tenant subscription-billed piece of software that was replacing a ‘legacy’ on-premise perpetual license solution in the same target market (i.e. ERP, HCM, CRM, etc.). Today, the terms SAAS and Cloud essentially describe the business models of every single public software company.

Most platform companies are initially required to operate at low margins in order to buy growth of their category & own their category. Then when they are valued on that, they quickly need to jump across to adjacent markets to grow into the valuation:

Twilio has no choice but to climb up the application stack. This is a company whose ‘disruption’ is essentially great API documentation and gangbuster SEO spend built on top of a highly commoditized telephony aggregation API. They have won by marketing to DevOps engineers. With all the hype around them, you’d think Twilio invented the telephony API, when in reality what they did was turn it into a product company. Nobody had thought of doing this let alone that this could turn into a $17 billion company because simply put the economics don’t work. And to be clear they still don’t. But Twilio’s genius CEO clearly gets this. If the market is going to value robocalls, emergency sms notifications, on-call pages, and carrier fee passed through related revenue growth in the same way it does ‘subscription’ revenue from Atlassian or ServiceNow, then take advantage of it while it lasts.

Large platforms offering temporary subsidies to ensure they dominate their categories & companies like SoftBank spraying capital across the markets are causing massive shifts in valuations:

I also think if you look closely at what is celebrated today as innovation you often find models built on hidden subsidies. … I’d argue the very distributed nature of microservices architecture and API-first product companies means addressable market sizes and unit economics assumptions should be even more carefully scrutinized. … How hard would it be to create an Alibaba today if someone like SoftBank was raining money into such a greenfield space? Excess capital would lead to destruction and likely subpar returns. If capital was the solution, the 1.5 trillion that went into telcos in late ’90s wouldn’t have led to a massive bust. Would a Netflix be what it is today if a SoftBank was pouring billions into streaming content startups right as the experiment was starting? Obviously not. Scarcity of capital is another often underappreciated part of the disruption equation. Knowing resources are finite leads to more robust models. … This convergence is starting to manifest itself in performance. Disney is up 30% over the last 12 months while Netflix is basically flat. This may not feel like a bubble sign to most investors, but from my standpoint, it’s a clear evidence of the fact that we are approaching a something has got to give moment for the way certain businesses are valued.”

Circling back to Google’s AMP, it has a cousin called Recaptcha.

Recaptcha is another AMP-like trojan horse:

According to tech statistics website Built With, more than 650,000 websites are already using reCaptcha v3; overall, there are at least 4.5 million websites use reCaptcha, including 25% of the top 10,000 sites. Google is also now testing an enterprise version of reCaptcha v3, where Google creates a customized reCaptcha for enterprises that are looking for more granular data about users’ risk levels to protect their site algorithms from malicious users and bots. … According to two security researchers who’ve studied reCaptcha, one of the ways that Google determines whether you’re a malicious user or not is whether you already have a Google cookie installed on your browser. … To make this risk-score system work accurately, website administrators are supposed to embed reCaptcha v3 code on all of the pages of their website, not just on forms or log-in pages.

About a month ago when logging into Bing Ads I saw Recaptcha on the login page & couldn’t believe they’d give Google control at that access point. I think they got rid of that, but lots of companies are perhaps shooting themselves in the foot through a combination of over-reliance on Google infrastructure AND sloppy implementation.

Today when making a purchase on Fiverr, after converting, I got some of this action:

Hmm. Maybe I will enable JavaScript and try again.

Oooops.

That is called snatching defeat from the jaws of victory.

My account is many years old. My payment type on record has been used for years. I have ordered from the particular seller about a dozen times over the years. And suddenly because my web browser had JavaScript turned off I was deemed a security risk of some sort for making an utterly ordinary transaction I have already completed about a dozen times.
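A less sloppy implementation would weigh the bot score against what the site already knows about the customer. Here is a rough sketch of that idea in Python. It assumes the site has the parsed JSON from Google's `siteverify` endpoint, which for reCaptcha v3 includes `success` and a `score` from 0.0 (likely a bot) to 1.0 (likely a person). The `Customer` fields, the thresholds and the `step_up` outcome are all hypothetical; this is not how Fiverr or anyone else actually does it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    account_age_days: int
    prior_orders_with_seller: int
    payment_method_age_days: int


def is_established(c: Customer) -> bool:
    """Hypothetical trust rule: old account, repeat buyer, seasoned payment method."""
    return (
        c.account_age_days >= 365
        and c.prior_orders_with_seller >= 3
        and c.payment_method_age_days >= 180
    )


def checkout_decision(
    verify_response: Optional[dict],
    customer: Customer,
    min_score: float = 0.5,
) -> str:
    """Return 'allow', 'step_up' (e.g. an email confirmation) or 'block'.

    `verify_response` is None when the reCaptcha script never ran,
    for example because JavaScript is disabled in the browser.
    """
    score = None
    if verify_response and verify_response.get("success"):
        score = verify_response.get("score")

    if score is not None and score >= min_score:
        return "allow"
    if is_established(customer):
        # History outweighs a missing score; a low score still gets a second look.
        return "allow" if score is None else "step_up"
    return "step_up" if score is None else "block"


regular = Customer(account_age_days=3000, prior_orders_with_seller=12,
                   payment_method_age_days=2000)
print(checkout_decision(None, regular))
```

Under these made-up rules a years-old account placing its thirteenth order with the same seller goes straight through even with JavaScript off, while a brand new account with a low score is the one that gets stopped.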

On AMP, JavaScript was the devil. And on desktop, not having JavaScript was the devil.

Pro tip: Ecommerce websites that see substandard conversion rates from using Recaptcha can boost their overall ecommerce revenue by buying more Google AdWords ads.

—

As more of the infrastructure stack is driven by AI software there is going to be a very real opportunity for many people to become deplatformed across the web on an utterly arbitrary basis. That tech companies like Facebook also want to create digital currencies on top of the leverage they already have only makes the proposition that much scarier.

If the tech platforms host copies of our sites, process the transactions & even create their own currencies, how will we know what level of value they are adding versus what they are extracting?

Who measures the measurer?

And when the economics turn negative, what will we do if we are hooked into an ecosystem we can’t spend additional capital to get out of when things head south?

The Fractured Web

Anyone can argue about the intent of a particular action & the outcome that is derived from it. But when the outcome is known, at some point the intent is inferred if the outcome is derived from a source of power & the outcome doesn’t change.

Or, put another way, if a powerful entity (government, corporation, other organization) disliked an outcome which appeared to benefit them in the short term at great lasting cost to others, they could spend resources to adjust the system.

If they don’t spend those resources (or, rather, spend them on lobbying rather than improving the ecosystem) then there is no desired change. The outcome is as desired. Change is unwanted.

Engagement is a toxic metric. Products which optimize for it become worse. People who optimize for it become less happy. It also seems to generate runaway feedback loops where most engagable people have a) worst individual experiences and then b) end up driving the product bus.— Patrick McKenzie (@patio11) April 9, 2019

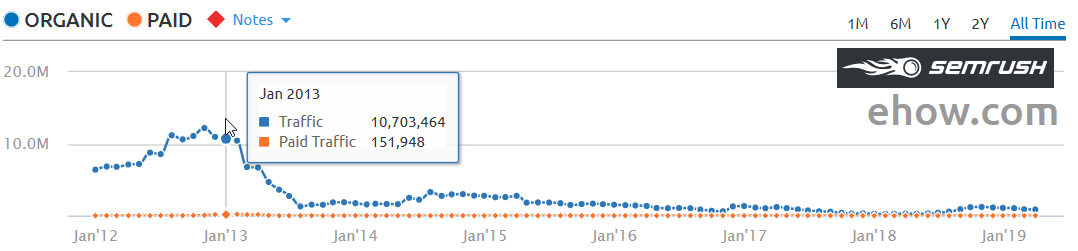

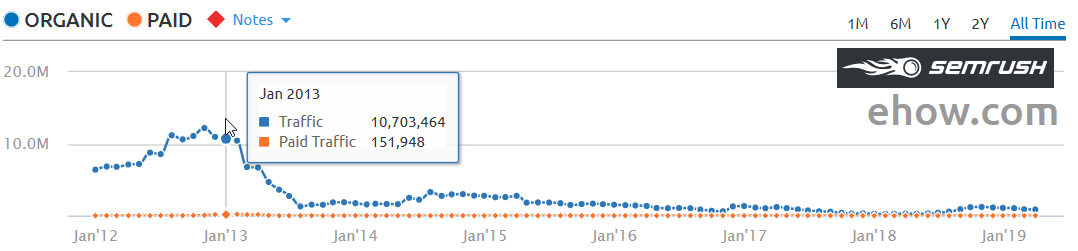

News is a stock vs flow market where the flow of recent events drives most of the traffic to articles. News that is more than a couple days old is no longer news. A news site which stops publishing news stops being a habit & quickly loses relevancy. Algorithmically an abandoned archive of old news articles doesn’t look much different than eHow, in spite of having a much higher cost structure.

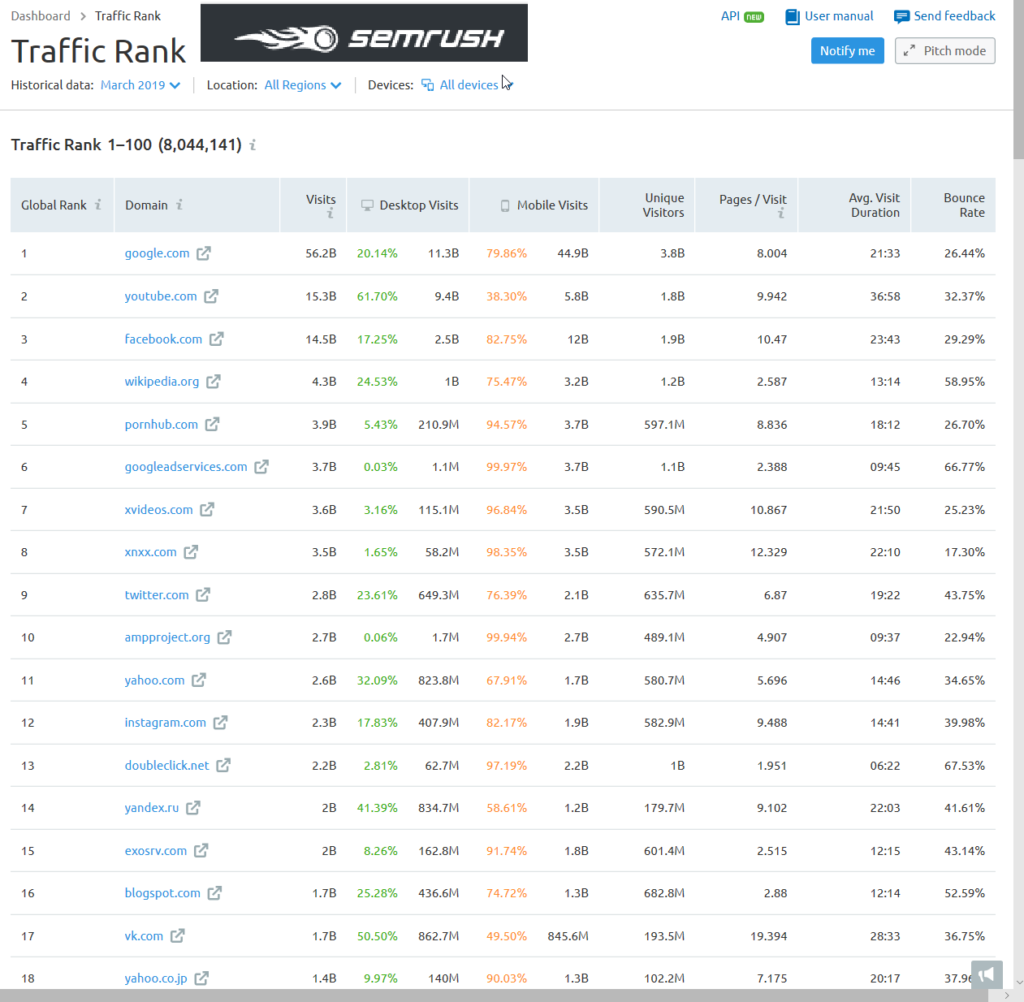

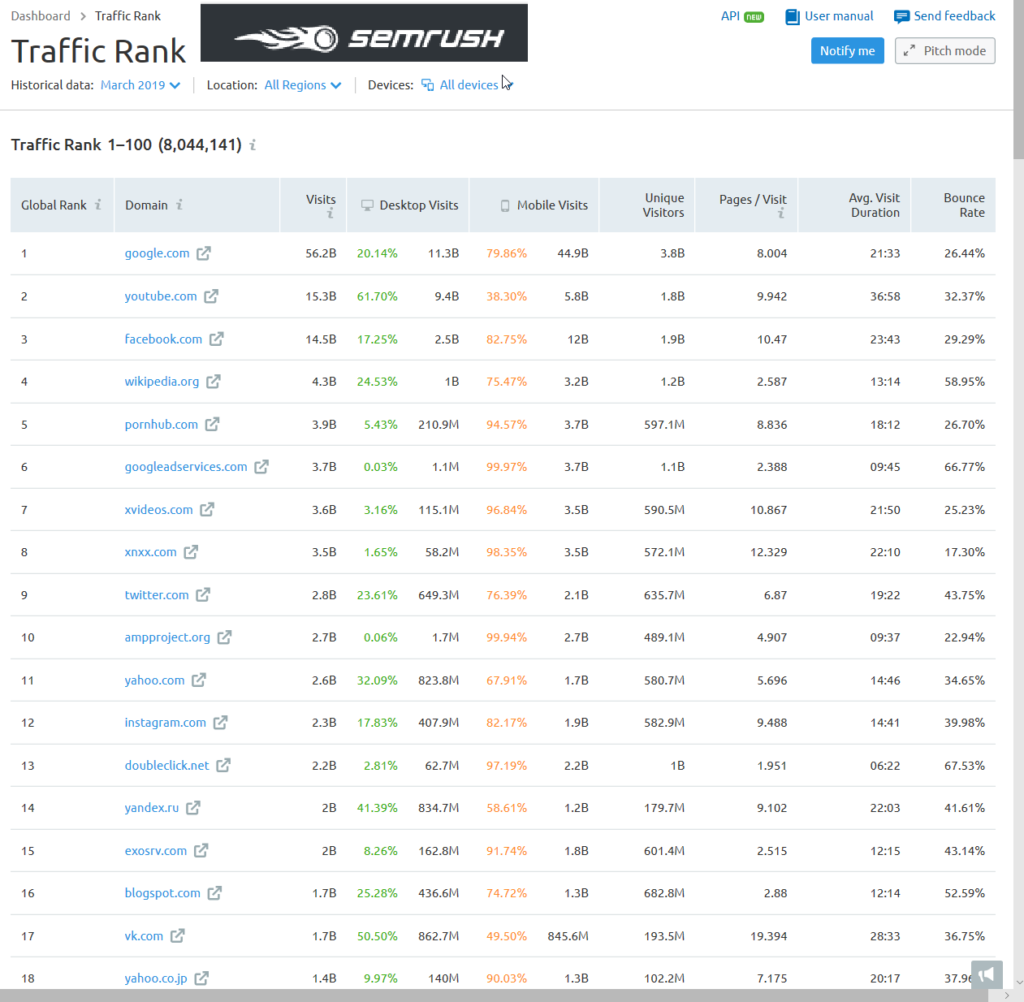

According to SEMrush’s traffic rank, ampproject.org gets more monthly visits than Yahoo.com.

That actually understates the prevalence of AMP because AMP is generally designed for mobile AND not all AMP-formatted content is displayed on ampproject.org.

Part of how AMP was able to get widespread adoption was because in the news vertical the organic search result set was displaced by an AMP block. If you were a news site either you were so differentiated that readers would scroll past the AMP block in the search results to look for you specifically, or you adopted AMP, or you were doomed.

Some news organizations like The Guardian have a team of about a dozen people reformatting their content to the duplicative & proprietary AMP format. That’s wasteful, but necessary: “In theory, adoption of AMP is voluntary. In reality, publishers that don’t want to see their search traffic evaporate have little choice. New data from publisher analytics firm Chartbeat shows just how much leverage Google has over publishers thanks to its dominant search engine.”

It seems more than a bit backward that low margin publishers are doing duplicative work to distance themselves from their own readers while improving the profit margins of monopolies. But it is what it is. And that no doubt drew the ire of many publishers across the EU.

And now there are AMP Stories to eat up even more visual real estate.

If you spent a bunch of money to create a highly differentiated piece of content, why would you prefer that high-spend flagship content appear on a third party website rather than your own?

Google & Facebook have done such a fantastic job of eating the entire pie that some are celebrating Amazon as a prospective savior to the publishing industry. That view – IMHO – is rather suspect.

Where any of the tech monopolies dominate they cram down on partners. The New York Times acquired The Wirecutter in Q4 of 2016. In Q1 of 2017 Amazon adjusted their affiliate fee schedule.

Amazon generally treats consumers well, but they have been much harder on business partners with tough pricing negotiations, counterfeit protections, forced ad buying to have a high enough product rank to be able to rank organically, ad displacement of their organic search results below the fold (even for branded search queries), learning suppliers & cutting out the partners, private label products patterned after top sellers, in some cases running pop over ads for the private label products on product level pages where brands already spent money to drive traffic to the page, etc.

They’ve made things tougher for their partners in a way that mirrors the impact Facebook & Google have had on online publishers:

“Boyce’s experience on Amazon largely echoed what happens in the offline world: competitors entered the market, pushing down prices and making it harder to make a profit. So Boyce adapted. He stopped selling basketball hoops and developed his own line of foosball tables, air hockey tables, bocce ball sets and exercise equipment. The best way to make a decent profit on Amazon was to sell something no one else had and create your own brand. … Amazon also started selling bocce ball sets that cost $15 less than Boyce’s. He says his products are higher quality, but Amazon gives prominent page space to its generic version and wins the cost-conscious shopper.”

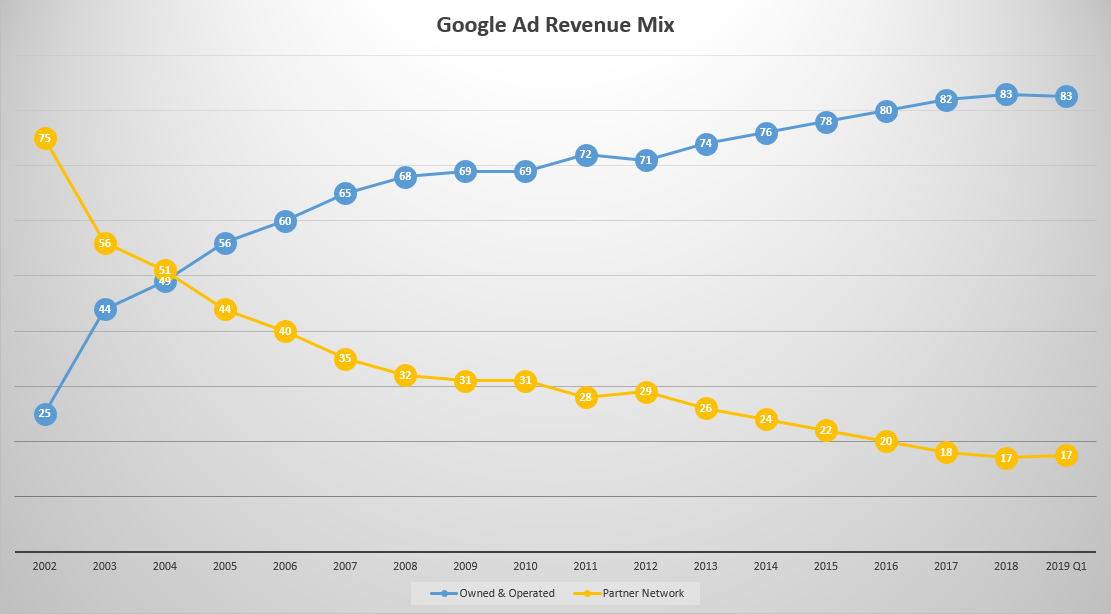

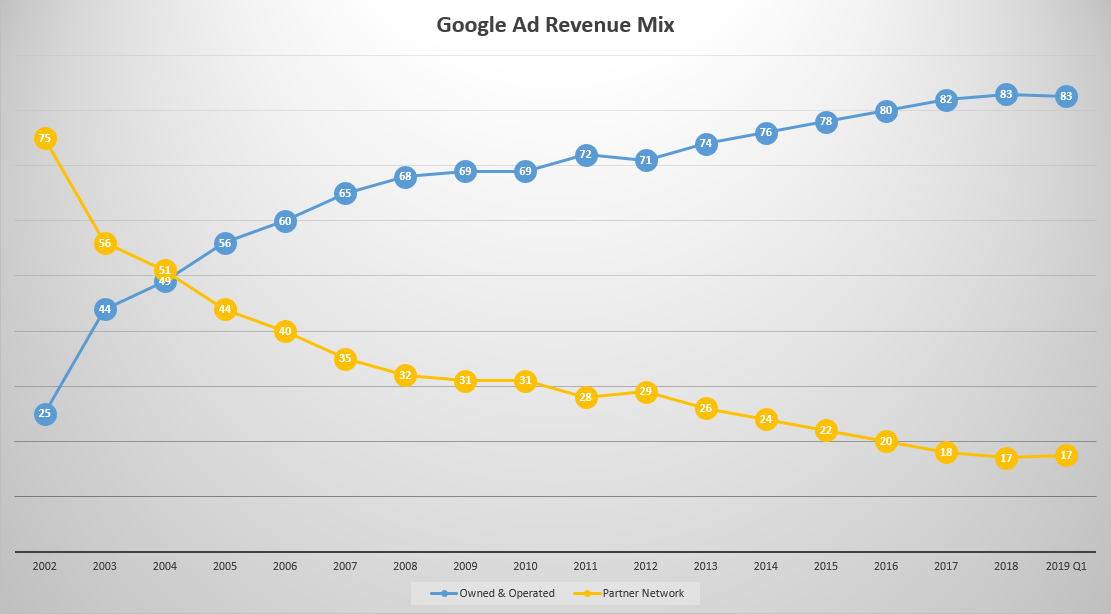

Google claims they have no idea how content publishers fare in the trade-off between themselves & the search engine, but every quarter Alphabet publishes the share of ad spend occurring on owned & operated sites versus the share spent across the broader publisher network. And in almost every quarter for over a decade straight that ratio has grown worse for publishers.

When Google tells industry about how much $ it funnels to rest of ecosystem, just show them this chart. It’s good to be the “revenue regulator” (note: G went public in 2004). pic.twitter.com/HCbCNgbzKc— Jason Kint (@jason_kint) February 5, 2019

The aggregate numbers for news publishers are worse than shown above as Google is ramping up ads in video games quite hard. They’ve partnered with Unity & promptly took away the ability to block ads from appearing in video games using the googleadsenseformobileapps.com exclusion (hello flat thumb misclicks, my name is budget & I am gone!).

They will also track video game player behavior & alter game play to maximize revenues based on machine learning tied to surveillance of the user’s account: “We’re bringing a new approach to monetization that combines ads and in-app purchases in one automated solution. Available today, new smart segmentation features in Google AdMob use machine learning to segment your players based on their likelihood to spend on in-app purchases. Ad units with smart segmentation will show ads only to users who are predicted not to spend on in-app purchases. Players who are predicted to spend will see no ads, and can simply continue playing.”

And how does the growth of ampproject.org square against the following wisdom?

If you do use a CDN, I’d recommend using a domain name of your own (eg, https://t.co/fWMc6CFPZ0), so you can move to other CDNs if you feel the need to over time, without having to do any redirects.— John (@JohnMu) April 15, 2019

Literally only yesterday did Google begin supporting instant loading of self-hosted AMP pages.

China has a different set of tech leaders than the United States. Baidu, Alibaba, Tencent (BAT) instead of Facebook, Amazon, Apple, Netflix, Google (FAANG). Chinese tech companies may have won their domestic markets in part based on superior technology or better knowledge of the local culture, though those same companies have largely gone nowhere fast in most foreign markets. A big part of winning was governmental assistance in putting a foot on the scales.

Part of the US-China trade war is about who controls the virtual “seas” upon which value flows:

it can easily be argued that the last 60 years were above all the era of the container-ship (with container-ships getting ever bigger). But will the coming decades still be the age of the container-ship? Possibly not, for the simple reason that things that have value increasingly no longer travel by ship, but instead by fiberoptic cables! … you could almost argue that ZTE and Huawei have been the “East India Company” of the current imperial cycle. Unsurprisingly, it is these very companies, charged with laying out the “new roads” along which “tomorrow’s value” will flow, that find themselves at the center of the US backlash. … if the symbol of British domination was the steamship, and the symbol of American strength was the Boeing 747, it seems increasingly clear that the question of the future will be whether tomorrow’s telecom switches and routers are produced by Huawei or Cisco. … US attempts to take down Huawei and ZTE can be seen as the existing empire’s attempt to prevent the ascent of a new imperial power. With this in mind, I could go a step further and suggest that perhaps the Huawei crisis is this century’s version of Suez crisis. No wonder markets have been falling ever since the arrest of the Huawei CFO. In time, the Suez Crisis was brought to a halt by US threats to destroy the value of sterling. Could we now witness the same for the US dollar?

China maintains Huawei is an employee-owned company. But that proposition is suspect. Broadly stealing technology is vital to the growth of the Chinese economy & they have no incentive to stop unless their leading companies pay a direct cost. Meanwhile, China is investigating Ericsson over licensing technology.

India has taken notice of the success of Chinese tech companies & thus began to promote “national champion” company policies. That, in turn, has also meant some of the Chinese-styled laws requiring localized data, antitrust inquiries, foreign ownership restrictions, requirements for platforms to not sell their own goods, promoting limits on data encryption, etc.

The secretary of India’s Telecommunications Department, Aruna Sundararajan, last week told a gathering of Indian startups in a closed-door meeting in the tech hub of Bangalore that the government will introduce a “national champion” policy “very soon” to encourage the rise of Indian companies, according to a person familiar with the matter. She said Indian policy makers had noted the success of China’s internet giants, Alibaba Group Holding Ltd. and Tencent Holdings Ltd. … Tensions began rising last year, when New Delhi decided to create a clearer set of rules for e-commerce and convened a group of local players to solicit suggestions. Amazon and Flipkart, even though they make up more than half the market, weren’t invited, according to people familiar with the matter.

Amazon vowed to invest $5 billion in India & they have done some remarkable work on logistics there. Walmart acquired Flipkart for $16 billion.

Other emerging markets also have many local ecommerce leaders like Jumia, MercadoLibre, OLX, Gumtree, Takealot, Konga, Kilimall, BidOrBuy, Tokopedia, Bukalapak, Shopee, Lazada. If you live in the US you may have never heard of *any* of those companies. And if you live in an emerging market you may have never interacted with Amazon or eBay.

It makes sense that ecommerce leadership would be more localized since it requires moving things in the physical economy, dealing with local currencies, managing inventory, shipping goods, etc. whereas information flows are just bits floating on a fiber optic cable.

If the Internet is primarily seen as a communications platform it is easy for people in some emerging markets to think Facebook is the Internet. Free communication with friends and family members is a compelling offer & as the cost of data drops web usage increases.

At the same time, the web is incredibly deflationary. Every free form of entertainment which consumes time is time that is not spent consuming something else.

Add the technological disruption to the wealth polarization that happened in the wake of the great recession, then combine that with algorithms that promote extremist views & it is clearly causing increasing conflict.

If you are a parent and you think your child has no shot at a brighter future than your own life it is easy to be full of rage.

Empathy can radicalize otherwise normal people by giving them a more polarized view of the world:

Starting around 2000, the line starts to slide. More students say it’s not their problem to help people in trouble, not their job to see the world from someone else’s perspective. By 2009, on all the standard measures, Konrath found, young people on average measure 40 percent less empathetic than my own generation … The new rule for empathy seems to be: reserve it, not for your “enemies,” but for the people you believe are hurt, or you have decided need it the most. Empathy, but just for your own team. And empathizing with the other team? That’s practically a taboo.

A complete lack of empathy could allow a psychopath to commit extreme crimes while feeling no guilt, shame or remorse. Extreme empathy can have the same sort of outcome:

“Sometimes we commit atrocities not out of a failure of empathy but rather as a direct consequence of successful, even overly successful, empathy. … They emphasized that students would learn both sides, and the atrocities committed by one side or the other were always put into context. Students learned this curriculum, but follow-up studies showed that this new generation was more polarized than the one before. … [Empathy] can be good when it leads to good action, but it can have downsides. For example, if you want the victims to say ‘thank you.’ You may even want to keep the people you help in that position of inferior victim because it can sustain your feeling of being a hero.” – Fritz Breithaupt

News feeds will be read. Villages will be razed. Lynch mobs will become commonplace.

Many people will end up murdered by algorithmically generated empathy.

As technology increases absentee ownership & financial leverage, a society led by morally agnostic algorithms is not going to become more egalitarian.

The more I think about and discuss it, the more I think WhatsApp is simultaneously the future of Facebook, and the most potentially dangerous digital tool yet created. We haven’t even begun to see the real impact yet of ubiquitous, unfettered and un-moderatable human telepathy.— Antonio García Martínez (@antoniogm) April 15, 2019

When politicians throw fuel on the fire it only gets worse:

It’s particularly odd that the government is demanding “accountability and responsibility” from a phone app when some ruling party politicians are busy spreading divisive fake news. How can the government ask WhatsApp to control mobs when those convicted of lynching Muslims have been greeted, garlanded and fed sweets by some of the most progressive and cosmopolitan members of Modi’s council of ministers?

Mark Zuckerberg won’t get caught downstream from platform blowback as he spends $20 million a year on his security.

The web is a mirror. Engagement-based algorithms reinforce our perceptions & identities.

And every important story has at least 2 sides!

The Rohingya asylum seekers are victims of their own violent Jihadist leadership that formed a militia to kill Buddhists and Hindus. Hindus are being massacred, where’s the outrage for them!? https://t.co/P3m6w4B1Po— Imam Tawhidi (@Imamofpeace) May 23, 2018

Some may “learn” vaccines don’t work. Others may learn the vaccines their own children took did not work, as it failed to protect them from the antivax content spread by Facebook & Google, absorbed by people spreading measles & Medieval diseases.

Passion drives engagement, which drives algorithmic distribution: “There’s an asymmetry of passion at work. Which is to say, there’s very little counter-content to surface because it simply doesn’t occur to regular people (or, in this case, actual medical experts) that there’s a need to produce counter-content.”

As the costs of “free” become harder to hide, social media companies which currently sell emerging markets as their next big growth area will face embedded regulatory compliance costs that will exceed any prospective revenue they could hope to generate.

The Pinterest S-1 shows almost all their growth is in emerging markets, yet almost all their revenue is inside the United States.

As governments around the world see the real-world cost of the foreign tech companies & view some of them as piggy banks, eventually the likes of Facebook or Google will pull out of a variety of markets they no longer feel are worth serving, much as Google did in mainland China with search after discovering pervasive hacking of activist Gmail accounts.

Just tried signing into Gmail from a new device. Unless I provide a phone number, there is no way to sign in and no one to call about it. Oh, and why do they say they need my phone? If you guessed “for my protection,” you would be correct. Talk about Big Brother…— Simon Mikhailovich (@S_Mikhailovich) April 16, 2019

Lower friction & lower cost information markets will face more junk fees, hurdles & even some legitimate regulations. Information markets will start to behave more like physical goods markets.

The tech companies presume they will be able to use satellites, drones & balloons to beam in Internet while avoiding messy local issues tied to real world infrastructure, but when a local wealthy player is betting against them they’ll probably end up losing those markets: “One of the biggest cheerleaders for the new rules was Reliance Jio, a fast-growing mobile phone company controlled by Mukesh Ambani, India’s richest industrialist. Mr. Ambani, an ally of Mr. Modi, has made no secret of his plans to turn Reliance Jio into an all-purpose information service that offers streaming video and music, messaging, money transfer, online shopping, and home broadband services.”

Publishers do not have “their mojo back” because the tech companies have been so good to them, but rather because the tech companies have been so aggressive that they have earned significant blowback. That blowback will in turn lead publishers to opt out of future deals, which will eventually lead more people back to the trusted brands of yesterday.

Publishers feeling guilty about taking advertorial money from the tech companies to spread their propaganda will offset its publication with opinion pieces pointing in the other direction: “This is a lobbying campaign in which buying the good opinion of news brands is clearly important. If it was about reaching a target audience, there are plenty of metrics to suggest his words would reach further – at no cost – on Facebook. Similarly, Google is upping its presence in a less obvious manner via assorted media initiatives on both sides of the Atlantic. Its more direct approach to funding journalism seems to have the desired effect of making all media organisations (and indeed many academic institutions) touched by its money slightly less questioning and critical of its motives.”

When Facebook goes down direct visits to leading news brand sites go up.

When Google penalizes a no-name me-too site almost nobody realizes it is missing. But if a big publisher opts out of the ecosystem people will notice.

The reliance on the tech platforms is largely a mirage. If enough key players were to opt out at the same time people would quickly reorient their information consumption habits.

If the platforms can change their focus overnight then why can’t publishers band together & choose to dump them?

CEO Jack Dorsey said Twitter is looking to change the focus from following specific individuals to topics of interest, acknowledging that what’s incentivized today on the platform is at odds with the goal of healthy dialogue https://t.co/31FYslbePA— Axios (@axios) April 16, 2019

In Europe there is GDPR, which aimed to protect user privacy, but ultimately acted as a tax on innovation by local startups while being a subsidy to the big online ad networks. They also have Article 11 & Article 13, which passed in spite of Google’s best efforts on the scaremongering anti-SERP tests, lobbying & propaganda fronts: “Google has sparked criticism by encouraging news publishers participating in its Digital News Initiative to lobby against proposed changes to EU copyright law at a time when the beleaguered sector is increasingly turning to the search giant for help.”

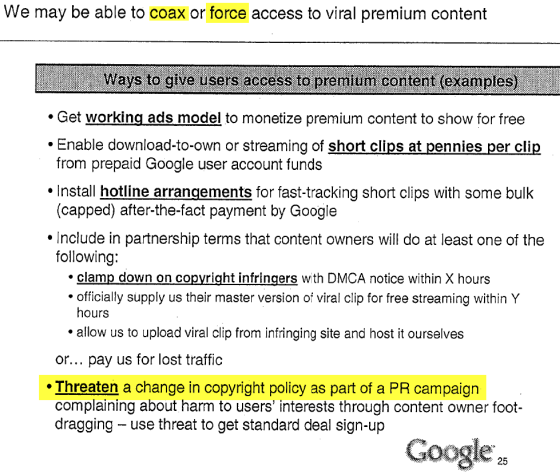

Remember the Eric Schmidt comment about how brands are how you sort out (the non-YouTube portion of) the cesspool? As it turns out, he was allegedly wrong as Google claims they have been fighting for the little guy the whole time:

Article 11 could change that principle and require online services to strike commercial deals with publishers to show hyperlinks and short snippets of news. This means that search engines, news aggregators, apps, and platforms would have to put commercial licences in place, and make decisions about which content to include on the basis of those licensing agreements and which to leave out. Effectively, companies like Google will be put in the position of picking winners and losers. … Why are large influential companies constraining how new and small publishers operate? … The proposed rules will undoubtedly hurt diversity of voices, with large publishers setting business models for the whole industry. This will not benefit all equally. … We believe the information we show should be based on quality, not on payment.

Facebook claims there is a local news problem: “Facebook Inc. has been looking to boost its local-news offerings since a 2017 survey showed most of its users were clamoring for more. It has run into a problem: There simply isn’t enough local news in vast swaths of the country. … more than one in five newspapers have closed in the past decade and a half, leaving half the counties in the nation with just one newspaper, and 200 counties with no newspaper at all.”

Google is so for the little guy that for their local news experiments they’ve partnered with a private equity backed newspaper roll up firm & another newspaper chain which did overpriced acquisitions & is trying to act like a PE firm (trying to not get eaten by the PE firm).

Does the above stock chart look in any way healthy?

Does it give off the scent of a firm that understood the impact of digital & rode it to new heights?

If you want good market-based outcomes, why not partner with journalists directly versus operating through PE chop shops?

If Patch is profitable & Google were a neutral ranking system based on quality, couldn’t Google partner with journalists directly?

Throwing a few dollars at a PE firm in some nebulous partnership sure beats the sort of regulations coming out of the EU. And the EU’s regulations (and prior link tax attempts) are in addition to the three multibillion-euro fines the European Union has levied against Alphabet for shopping search, Android & AdSense.

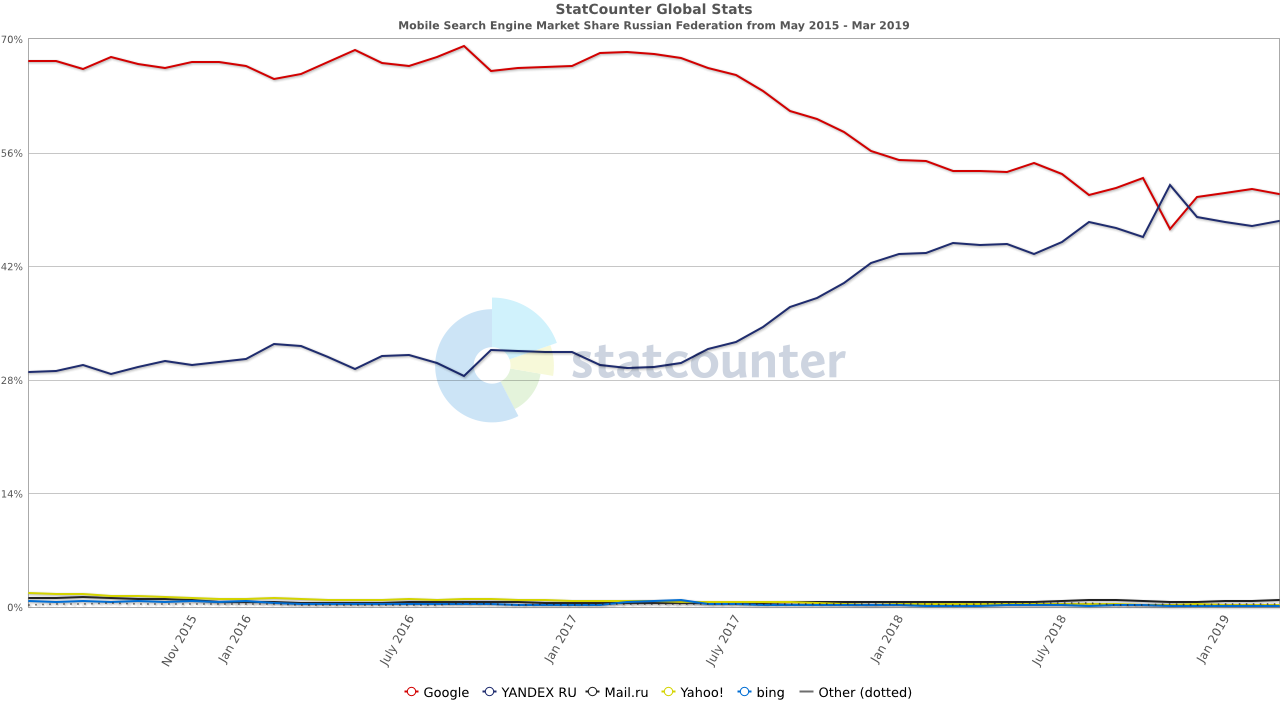

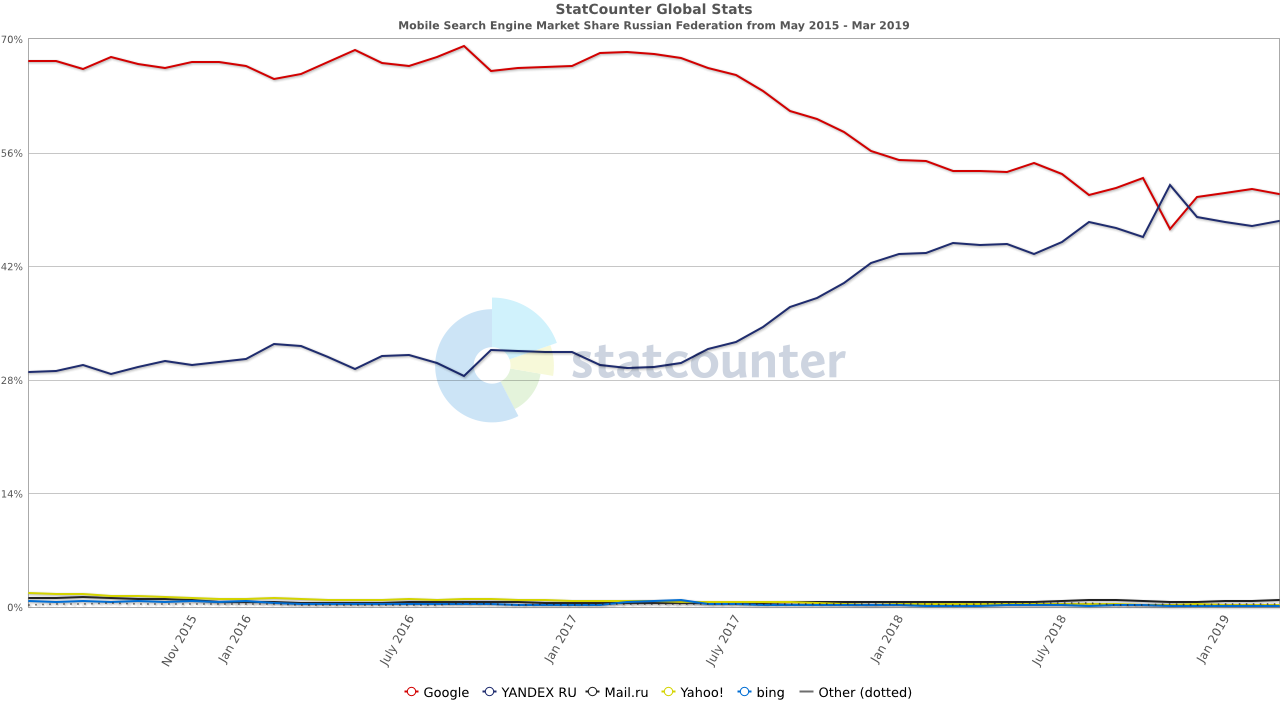

Google was also fined in Russia over Android bundling. The fine was tiny, but after consumers gained a search engine choice screen (much like Google pushed for in Europe on Microsoft years ago) Yandex’s share of mobile search grew quickly.

The UK recently published a white paper on online harms. In some ways it is a regulation just like the tech companies might offer to participants in their ecosystems:

Companies will have to fulfil their new legal duties or face the consequences and “will still need to be compliant with the overarching duty of care even where a specific code does not exist, for example assessing and responding to the risk associated with emerging harms or technology”.

If web publishers should monitor inbound links to look for anything suspicious then the big platforms sure as hell have the resources & profit margins to monitor behavior on their own websites.

Australia passed the Sharing of Abhorrent Violent Material bill which requires platforms to expeditiously remove violent videos & notify the Australian police about them.

There are other layers of fracturing going on in the web as well.

Programmatic advertising shifted revenue from publishers to adtech companies & the largest ad sellers. Ad blockers further lower the ad revenues of many publishers. If you routinely use an ad blocker, try surfing the web for a while without one & you will notice layover welcome AdSense ads on sites as you browse the web – the very type of ad they were allegedly against when promoting AMP.

There has been much more press in the past week about ad blocking as Google’s influence is being questioned as it rolls out ad blocking as a feature built into Google’s dominant Chrome web browser. https://t.co/LQmvJu9MYB— Jason Kint (@jason_kint) February 19, 2018

Tracking protection in browsers & ad blocking features built directly into browsers leave publishers more uncertain. And who even knows who visited an AMP page hosted on a third party server, particularly when things like GDPR are mixed in? Those who lack first party data may end up having to make large acquisitions to stay relevant.

Voice search & personal assistants are now ad channels.

Google Assistant Now Showing Sponsored Link Ads for Some Travel Related Queries “Similar results are delivered through both Google Home and Google Home Hub without the sponsored links.” https://t.co/jSVKKI2AYT via @bretkinsella pic.twitter.com/0sjAswy14M— Glenn Gabe (@glenngabe) April 15, 2019

App stores are removing VPNs in China, removing TikTok in India, and keeping female tracking apps in Saudi Arabia. App stores are centralized chokepoints for governments. Every centralized service is at risk of censorship. Web browsers from key state-connected players can also censor messages spread by developers on platforms like GitHub.

Microsoft’s newest Edge web browser is based on Chromium, the source of Google Chrome. While Mozilla Firefox gets most of its revenue from a search deal with Google, Google has still gone out of its way to use its services to both promote Chrome with pop overs AND break in competing web browsers:

“All of this is stuff you’re allowed to do to compete, of course. But we were still a search partner, so we’d say ‘hey what gives?’ And every time, they’d say, ‘oops. That was accidental. We’ll fix it in the next push in 2 weeks.’ Over and over. Oops. Another accident. We’ll fix it soon. We want the same things. We’re on the same team. There were dozens of oopses. Hundreds maybe?” – former Firefox VP Jonathan Nightingale

This is how it spreads. Google normalizes “web apps” that are really just Chrome apps. Then others follow. We’ve been here before, y’all. Remember IE? Browser hegemony is not a happy place. https://t.co/b29EvIty1H— DHH (@dhh) April 1, 2019

In fact, it’s alarming how much of Microsoft’s cut-off-the-air-supply playbook on browser dominance that Google is emulating. From browser-specific apps to embrace-n-extend AMP “standards”. It’s sad, but sadder still is when others follow suit.— DHH (@dhh) April 1, 2019

YouTube page load is 5x slower in Firefox and Edge than in Chrome because YouTube’s Polymer redesign relies on the deprecated Shadow DOM v0 API only implemented in Chrome. You can restore YouTube’s faster pre-Polymer design with this Firefox extension: https://t.co/F5uEn3iMLR— Chris Peterson (@cpeterso) July 24, 2018

As phone sales fall & app downloads stall a hardware company like Apple is pushing hard into services while quietly raking in utterly fantastic ad revenues from search & ads in their app store.

Part of the reason people are downloading fewer apps is so many apps require registration as soon as they are opened, or only let a user engage with them for seconds before pushing aggressive upsells. And then many apps which were formerly one-off purchases are becoming subscription plays. As traffic acquisition costs have jumped, many apps must engage in sleight of hand behaviors (free but not really, we are collecting data totally unrelated to the purpose of our app & oops we sold your data, etc.) in order to get the numbers to back out. This in turn causes app stores to slow down app reviews.

Apple acquired the news subscription service Texture & turned it into Apple News Plus. Not only is Apple keeping half the subscription revenues, but soon the service will only work for people using Apple devices, leaving nearly 100,000 other subscribers out in the cold: “if you’re part of the 30% who used Texture to get your favorite magazines digitally on Android or Windows devices, you will soon be out of luck. Only Apple iOS devices will be able to access the 300 magazines available from publishers. At the time of the sale in March 2018 to Apple, Texture had about 240,000 subscribers.”

Apple is also going to spend over half a billion dollars exclusively licensing independently developed games:

Several people involved in the project’s development say Apple is spending several million dollars each on most of the more than 100 games that have been selected to launch on Arcade, with its total budget likely to exceed $500m. The games service is expected to launch later this year. … Apple is offering developers an extra incentive if they agree for their game to only be available on Arcade, withholding their release on Google’s Play app store for Android smartphones or other subscription gaming bundles such as Microsoft’s Xbox game pass.

Verizon wants to launch a video game streaming service. It will probably be almost as successful as their Go90 OTT service was. Microsoft is pushing to make Xbox games work on Android devices. Amazon is developing a game streaming service to complement Twitch.