The recent seismic events in the Google SEO industry – namely, the landmark DOJ v. Google antitrust trial and the unprecedented content warehouse data leaks – are not disparate occurrences.

They represent a single, clarifying moment of validation for a specific, long-standing SEO philosophy. For those who have built careers on chasing algorithmic loopholes, this is a moment of reckoning.

For those who have focused on enduring principles, it is a time of irrefutable confirmation.

Since becoming a full-time SEO specialist in 2006, my approach at Hobo has been rooted in recommending strict adherence to Google’s guidelines, a focus on demonstrable quality, and the creation of sustainable, long-term value for businesses.

My thinking since Penguin in 2012 has been that what Google tells us is actually a reflection of how its algorithms work, distilled down to the best, shortest truisms it can give without naming internal processes at Google, e.g. “focus on the users and all else follows”.

The recent revelations are not a surprise; they are a public affirmation of the “white hat” methodology that SEOs like myself have long advocated.

The leaked internal documents and court testimonies did not present a new strategy; they provided validation for the professional SEO who has been implementing these techniques for years.

For too long, the SEO industry has treated Google as a black box, interpreting – and misinterpreting – what Google’s spokespeople say about how to rank in its search engine.

Now, the box is grey. While the full algorithm remains proprietary, its core architecture and, more importantly, its value systems are no longer a matter of pure speculation.

We now possess court testimony and leaked internal data that provide a blueprint for what Google truly measures and rewards. This article deconstructs that blueprint and translates it into a definitive strategy for 2025 and beyond.

The future of SEO is no longer about chasing the ghost of the next algorithm update.

It is about building defensible digital assets grounded in three newly confirmed pillars: a persistent, site-level Authority Score (Q*), a human-trained AI perception of Quality, and a demonstrable investment in Content Effort.

These revelations have created a profound strategic divergence in the industry.

Business models and agencies that relied on gaming the system through scaled, low-quality content and technical manipulation are fundamentally at risk.

Their tactics are directly at odds with Google’s confirmed internal evaluation systems.

Conversely, those who have consistently invested in brand-building, genuine expertise, and user value have been handed a durable, long-term competitive advantage.

This is not merely a tactical shift; it is an economic one that redefines what a viable and effective SEO strategy looks like.

Inside the Machine – Deconstructing Google’s Revealed Ranking Pipeline

To succeed in 2025, we must stop thinking of a single, monolithic “algorithm.”

The evidence reveals a sophisticated, multi-stage pipeline where distinct, modular systems evaluate different attributes of a page and site before a final ranking is produced.

Contrary to the narrative of an all-powerful AI, trial exhibits revealed that “Almost every signal, aside from RankBrain and DeepRank… are hand-crafted… to be analysed and adjusted by engineers”.

This deliberate engineering is done so that “if anything breaks, Google knows what to fix”.

A failure at any stage of this pipeline can nullify success at another. This section will break down the critical components of that pipeline, moving from long-held industry myths to the confirmed reality.

The paradigm shift is best summarised by contrasting the old playbook with the new, evidence-based reality.

The Two Pillars of Ranking: Quality (Q*) and Popularity (P*)

The trial revealed that Google’s ranking architecture is built upon two “fundamental top-level ranking signals”: Quality (Q*) and Popularity (P*).

These two signals determine a webpage’s final score and influence how frequently Google crawls pages to keep its index fresh. All other systems, like Navboost and Topicality, are best understood as the essential building blocks that feed into these two pillars.

The Core Systems – Topicality, Navboost, and Quality

The ranking pipeline begins with a series of evaluations. A page can be perfectly relevant but fail if it has a history of poor user satisfaction or resides on a low-authority site.

This interconnectedness means that technical SEO, user experience (UX) design, and brand-level content strategy must work in concert. A failure in one domain will negate success in another.

- Topicality (T*): This is the initial filter. Described as a “hand-crafted” system, it determines a document’s direct, fundamental relevance to the terms in a query. It also considers anchor text from incoming links to understand what other sites say a page is about. Passing this stage is the price of entry into the ranking consideration set.

- Navboost: This is Google’s powerful, data-driven system for measuring historical user satisfaction. It refines rankings based on 13 months of aggregated user interaction data, not live user behaviour. Navboost analyses critical click metrics, such as “good clicks” (successful interactions), “bad clicks” (which can indicate “pogo-sticking,” where a user clicks a result and quickly returns to the SERP), and, most importantly, “last longest clicks.” This final metric identifies the result that ultimately satisfied the user, ending their search session, and is an incredibly strong positive signal.

- Quality (Q): This is the third major component, an overarching system that assesses the trustworthiness and authority of a source. It is within this system that the crucial Q* metric operates, acting as a powerful, site-wide modifier on rankings.

These systems all work in concert with one another to identify helpful content and determine a site’s quality score over time.
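To make the Navboost click types described above more concrete, here is a minimal sketch of how a site owner might summarise their own click logs in a similar spirit. It is not Google’s implementation: the `Click` record, the 10-second dwell threshold for a “bad click”, and the rule that a “last longest click” is the final click of a session that also has the longest dwell time are all assumptions made for illustration. Only the 13-month window comes from the testimony.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

WINDOW_DAYS = 396          # roughly 13 months of history
BAD_CLICK_MAX_DWELL = 10   # seconds; hypothetical threshold for a quick return to the SERP


@dataclass
class Click:
    session_id: str
    url: str
    day: date
    dwell_seconds: int


def summarise_clicks(clicks, today):
    """Count good, bad and last-longest clicks per URL inside the window.

    Clicks are assumed to be listed in chronological order within a session.
    """
    cutoff = today - timedelta(days=WINDOW_DAYS)
    stats = {}
    sessions = {}
    for click in clicks:
        if click.day < cutoff:
            continue  # older than the aggregation window
        kind = "bad" if click.dwell_seconds < BAD_CLICK_MAX_DWELL else "good"
        stats.setdefault(click.url, Counter())[kind] += 1
        sessions.setdefault(click.session_id, []).append(click)
    for session_clicks in sessions.values():
        last = session_clicks[-1]
        longest = max(session_clicks, key=lambda c: c.dwell_seconds)
        if last is longest:
            # The click that ended the session was also the longest visit.
            stats[last.url]["last_longest"] += 1
    return stats
```

Run against your own analytics export, a summary like this can highlight pages that attract clicks but rarely end the user’s search.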

QBST – The ‘Memorisation’ of Relevance

Trial testimony also revealed another foundational system called QBST (Query-Based Salient Terms), described as a “memorisation system”.

According to testimony from Google engineer Dr Eric Lehman, “Navboost and QBST are memorisation systems that have ‘become very good at memorising little facts about the world’”.

QBST analyses a search query and “remembers” a list of specific words, phrases, and concepts – salient terms – that it expects to find on any truly relevant webpage. For example, for the query “best running shoes,” QBST has learned that a high-quality page must also include terms like “cushioning,” “stability,” “mileage,” and specific brand names.

During the initial ranking stages, pages containing these expected terms receive a higher relevance score.

A page that lacks them is considered less relevant and is less likely to proceed to the final ranking stages. This validates the long-held SEO strategy of building topically comprehensive content that naturally includes the full constellation of words and concepts that top-ranking pages share.
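As a rough way to audit a page against this idea, the sketch below checks how many expected terms a draft actually contains. It is only an illustration: the term list is something you would compile yourself (for example, from the pages that already rank for the query), and the function name `salient_term_coverage` is hypothetical. It does not reproduce how QBST works internally.

```python
import re


def salient_term_coverage(page_text, salient_terms):
    """Return (coverage ratio, missing terms) for a list of expected terms."""
    text = page_text.lower()
    missing = [
        term for term in salient_terms
        # Whole-word, case-insensitive match for each expected term.
        if not re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
    ]
    found = len(salient_terms) - len(missing)
    coverage = found / len(salient_terms) if salient_terms else 0.0
    return coverage, missing


terms = ["cushioning", "stability", "mileage"]
page = "Our guide compares cushioning and stability across ten daily trainers."
coverage, missing = salient_term_coverage(page, terms)
print(f"{coverage:.0%} covered, missing: {missing}")
```

A missing term is a prompt to ask whether the page genuinely covers that concept, not an instruction to insert the word.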

Q* – The Authority Score That Changes Everything

For years, the SEO community has debated whether Google uses a site-wide authority score akin to third-party metrics. Publicly, Google representatives have consistently avoided confirming such a concept. The trial changed everything.

Court testimony explicitly confirmed the existence of Q* (pronounced “Q-star”), an internal, largely static, and query-independent metric that functions as a site-level quality score.

According to a trial exhibit, “Q∗ (page quality (i.e., the notion of trustworthiness)) is incredibly important”. This score is engineered to assess the overall trustworthiness of a website, most often at the domain level. Its largely static nature means that if a site earns a high Q* score, it is considered a reliable source across all topics for which it might rank, granting it a persistent boost. This explains why certain authoritative domains consistently appear in search results for a wide range of queries.

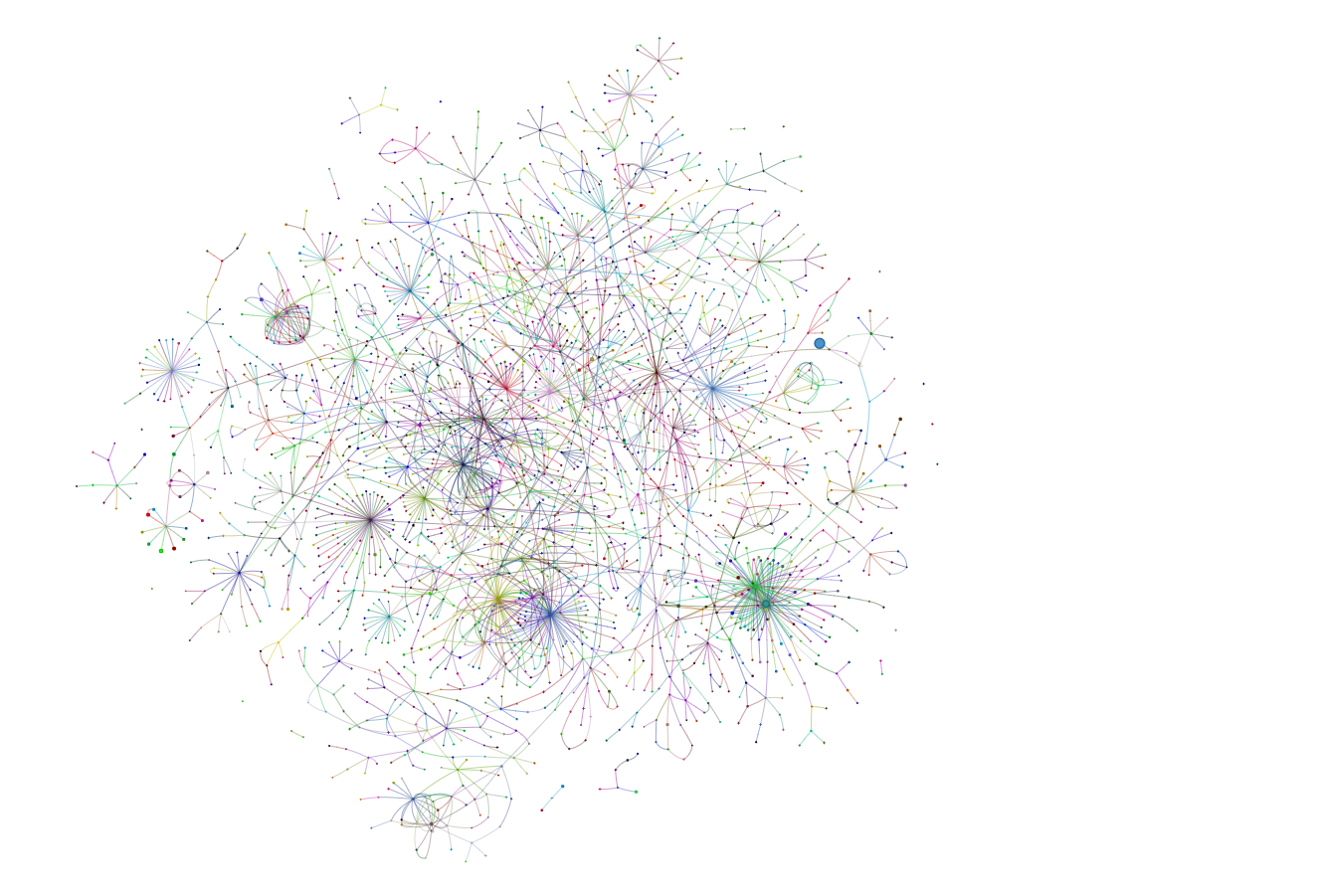

The mechanics of Q* are deeply tied to an evolved version of PageRank. Testimony revealed that “PageRank… is a single signal relating to distance from a known good source, and it is used as an input to the Quality score”.

The system uses a set of trusted “seed” sites for a given topic.

Pages and sites that are closer to these authoritative seeds within the web’s link graph receive a stronger PageRank score, which in turn is a key input into the overall Q* score.

A high Q* acts as a significant boost, while a low score can suppress an otherwise relevant page, effectively functioning as a foundational ranking gate that a site must pass to compete effectively.
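The “distance from a known good source” idea can be illustrated with a simple breadth-first search over a link graph. This is a simplified sketch, not Google’s PageRank: the real seed list is not public, the example domains are made up, and a plain hop count stands in for whatever weighting Google actually applies.

```python
from collections import deque


def distance_from_seeds(link_graph, seeds):
    """Breadth-first search: fewest link hops from any seed to each reachable site."""
    distances = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        site = queue.popleft()
        for target in link_graph.get(site, []):
            if target not in distances:
                distances[target] = distances[site] + 1
                queue.append(target)
    return distances


# Hypothetical link graph: each key links out to the sites in its list.
link_graph = {
    "seed.example": ["trade-body.example"],
    "trade-body.example": ["your-site.example"],
    "your-site.example": [],
    "isolated.example": ["your-site.example"],
}
distances = distance_from_seeds(link_graph, ["seed.example"])
print(distances)
```

In this toy graph, a link from the seed-adjacent site puts `your-site.example` two hops from the seed, while `isolated.example` is never reached at all because nothing trusted links to it.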

The Rater’s Shadow – How Human Judgment Trains the AI

Perhaps the most significant deviation from Google’s long-standing public narrative concerns the role of its human Search Quality Raters. The official line has always been that raters provide feedback to help “benchmark the quality of our results,” implying an indirect influence.

The DOJ v. Google remedial phase opinion revealed a far more direct and systemic link.

The court documents state unequivocally that scores from human quality raters are a direct and foundational training input for Google’s core AI ranking models, namely RankEmbed and its successor, RankEmbedBERT.

As one trial document noted, “The data underlying RankEmbed models is a combination of click-and-query data and scoring of web pages by human raters”.

These sophisticated models are trained on two primary data sources: search logs and the scores generated by human raters. Testimony from Google’s Vice President of Search confirmed that these rater-trained models significantly improved Google’s ability to handle complex, long-tail queries, directly contributing to the company’s “quality edge over competitors”.

The implication of this is profound. The Search Quality Rater Guidelines (QRG) are not a theoretical document for webmasters to ponder; they are the literal instruction manual used to generate the data that trains the ranking AI.

The collective judgments of thousands of raters, guided by this manual, are systematically embedded into the core of the search engine. Therefore, aligning every page of a website with the principles of the QRG is not about pleasing a hypothetical evaluator; it is about providing the correct signals to the machine learning models that determine rankings.

The Economics of Excellence: ‘Content Effort’ as a Core Business Strategy

Understanding how Google’s pipeline works is only half the battle. The other half is understanding what it values, and testimony from the DOJ trial made it clear that “Most of Google’s quality signal is derived from the webpage itself”.

The leak of Google’s internal API documentation provided the missing link with the confirmation of an attribute called contentEffort.

This isn’t just another piece of technical jargon; it is the internal terminology that validates an entire quality-first approach to content and signals a fundamental shift in the economics of SEO.

Defining ‘Content Effort’

From a business perspective, contentEffort is Google’s way of measuring the total investment in a piece of content. It goes far beyond mere keyword density.

It is a holistic assessment of the resources, expertise, and originality behind a digital asset. The core components that constitute high Content Effort include:

- Unique and useful information: Content that provides novel insights or data not readily available elsewhere.

- Original research: Proprietary studies, surveys, or data analysis that establish the publisher as a primary source.

- Original media: Custom images, diagrams, infographics, or videos created specifically for the content.

- Expert authorship: Content written by a demonstrable expert with a proven track record.

- Reputable publication: The content is published on a website with a history of producing high-effort material.

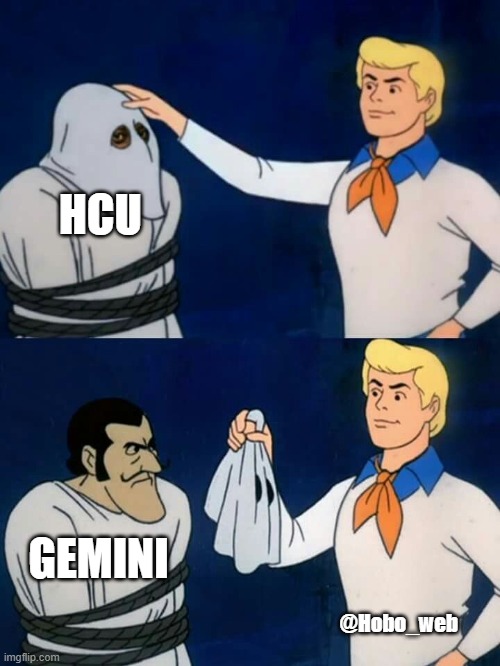

It is a reasonable inference that an AI model, presumably Gemini, is now rating how much effort went into the creation of a page (and that AI can work out how much experience an article shows when the site has proper authorship). This appears to have happened around the same time as the Helpful Content Update.

Effort as the Input, E-E-A-T as the Outcome

The concepts of Content Effort and E-E-A-T (Experience, Expertise, Authoritativeness, Trust) are intrinsically linked. The clearest way to frame the relationship is that Content Effort is the input, while E-E-A-T is the outcome.

A business cannot achieve genuine E-E-A-T without making a demonstrable investment of Content Effort.

Google’s system rewards E-E-A-T by quantifying the effort that went into producing the content. This is reflected in the Quality Rater Guidelines, which state, “If very little or no time, effort, expertise, or talent/skill has gone into creating the MC, use the Lowest quality rating”.

This connection becomes even clearer when considering the Helpful Content Update (HCU) and the underlying principle that “Helpful = Trustworthy”.

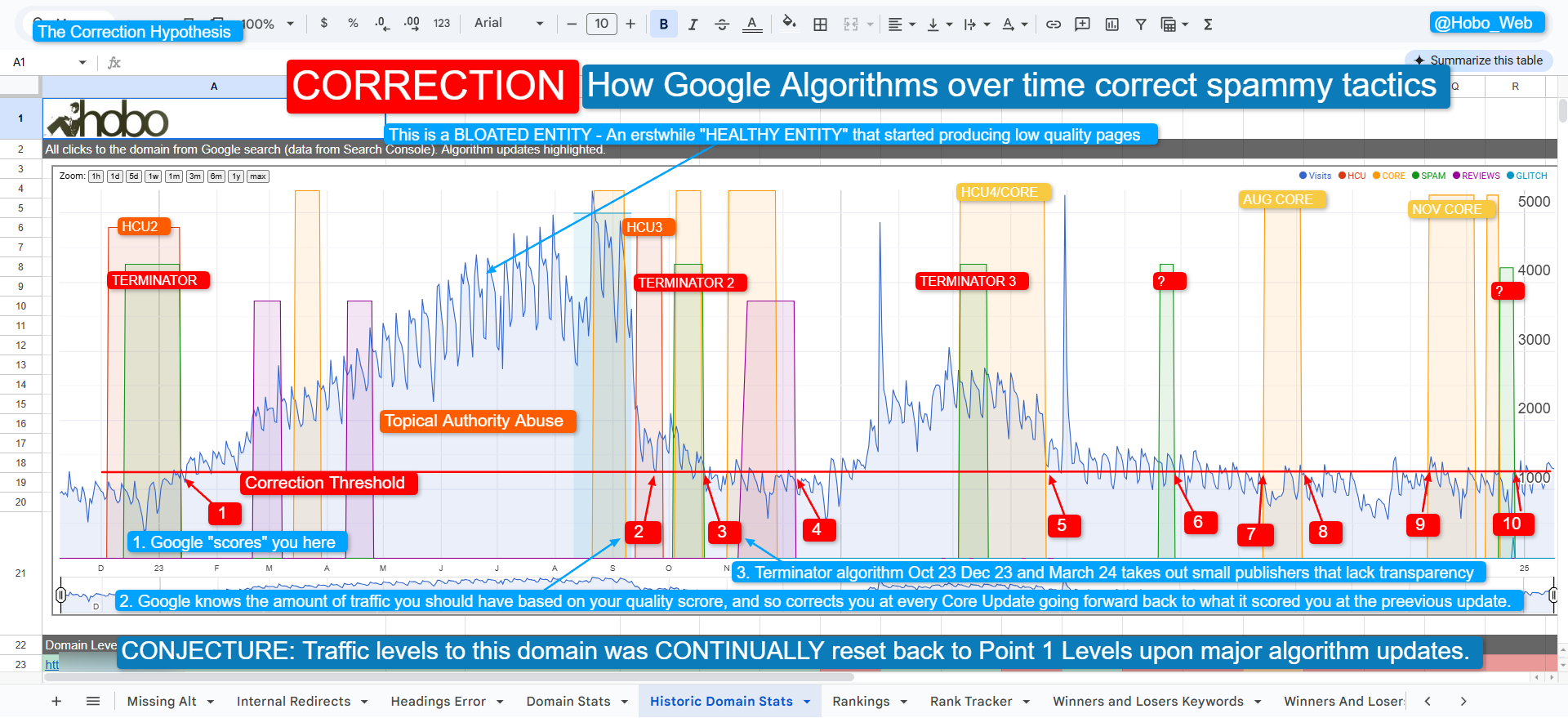

Based on my research on this topic over the last year or two, and an interest in Quality Score dating back decades (as featured in my ebooks), I believe the HCU (Helpful Content Update) is, at least in part, the algorithmic manifestation of the contentEffort signal, designed to connect helpfulness with the core signal of Trustworthiness, which Google states is the most important aspect of E-E-A-T.

My own firsthand experience guiding businesses through Google’s recent volatile updates confirms this direct link.

The HCU has been described as a system “designed to kill low-effort content and the entire industry built on providing it”.

Recovering from an HCU classification is not a simple technical fix.

As Google’s John Mueller explained, “These are not ‘recoveries’ in the sense that someone fixes a technical issue and they’re back on track – they are essentially changes in a business’s priorities”.

This system is now the primary mechanism by which Google identifies and devalues content that lacks originality, depth, and demonstrable investment. As Google’s Gary Illyes stated in 2016, “We figured that site is trying to game our systems… So we will adjust the rank. We will push the site back just to make sure that it’s not working anymore”.

Building Defensible Digital Assets in the Age of AI

The rise of generative AI has made the creation of low-effort content nearly free, flooding the web with generic, unoriginal text. In this environment, high Content Effort becomes the primary strategic moat.

AI struggles to replicate the core components of high-effort assets: it cannot conduct original research, create truly novel insights based on real-world experience, or build a trusted brand reputation.

This aligns with a long-held view from former Google CEO Eric Schmidt, who stated in 2014, “Brands are the solution, not the problem. Brands are how you sort out the cesspool”.

Examples of high-effort, defensible digital assets include a proprietary industry report based on original survey data, an interactive financial calculator that solves a complex user problem, or a definitive guide filled with custom-made diagrams and expert video tutorials.

These are not simple blog posts; they are valuable digital products that are difficult and expensive to replicate.

This reality signals a fundamental increase in the “cost of entry” for competitive search rankings.

The ROI calculation for content marketing has inverted. Previously, a common strategy was to spread investment across many cheap, low-effort articles. Now, that investment is likely to yield zero or even negative returns if it triggers a site-wide HCU classifier.

The safer, higher-return strategy is to concentrate the budget into producing a smaller number of flagship assets.

This forces a strategic reallocation of marketing resources away from content volume and towards genuine asset creation, impacting hiring (requiring true experts over generic writers), budgeting (funding research and design), and timelines (accepting longer production cycles).

The New Strategic Imperatives: A Post-Leak Playbook

The revelations from the Google leaks demand a fundamental re-evaluation of digital strategy. The era of focusing on narrow, technical SEO tactics in a vacuum is over.

The new playbook requires a holistic, integrated approach where brand marketing, user experience design, and content strategy are core components of a successful search presence. The leak provides the blueprint for four key strategic imperatives.

- Strategy 1: Brand is the Ultimate Ranking Factor: The single most important takeaway is that a strong brand is the most durable competitive advantage. The existence of siteAuthority confirms that Google measures a domain’s overall reputation. The dominance of Navboost proves that Google rewards sites that users actively seek out and trust. This necessitates a shift in marketing priorities, moving beyond a narrow focus on technical SEO to embrace broader brand-building initiatives like public relations and community engagement. As Google’s Search Liaison, Danny Sullivan, advised smaller sites: “If you’re a smaller site that feels like you haven’t really developed your brand, develop it”. A key performance indicator (KPI) should be the growth of branded search volume, as this is a direct input into the Navboost system and a clear indicator of growing siteAuthority.

- Strategy 2: User Experience is the New Core SEO: The path to authority is paved with positive user interactions. The confirmation of Navboost as a powerful re-ranking system means that user satisfaction is a direct ranking factor. Every aspect of the user experience – from fast page load speeds to intuitive navigation – is now a core SEO activity. The goal is no longer just to rank, but to be the definitive answer that ends the user’s search, generating the “last longest click” that Navboost rewards so highly.

- Strategy 3: Build Topical Authority, Not Just Keyword-Optimised Pages: A successful strategy involves developing deep and comprehensive “content hubs” or “topic clusters” that thoroughly cover a business’s core areas of expertise. This demonstrates a clear topical focus to Google’s systems and establishes the site as an authority in its niche. It also requires ruthless and regular content audits to prune or update low-quality, outdated, or off-topic pages that can dilute a site’s authority and send negative signals to Google.

- Strategy 4: Adopt a Scientist’s Mindset – Test, Don’t Just Trust: The most significant casualty of the leaks is the wider public’s unquestioning trust in Google’s public statements. That said, when you dig into Googler statements as I have (it took me over a year to put this all together in a cohesive way), you soon find that, on the whole, the advice Google spokespeople give on how to win long-term in SEO is aligned with Search Essentials, the quality rater guidelines, the API leaks, exploits and sworn testimony from the antitrust trial. The revelations demand a culture of experimentation. Businesses must treat these new insights as a powerful set of hypotheses to be tested against their own data. This means diversifying information sources and investing in analytics that measure what Google is now known to measure: user engagement, branded search growth, and performance across entire topic clusters.

The Blueprint for 2025 – A Framework for Demonstrable Authority

Black hat SEO will carry on as normal in certain highly competitive niches, by buying expired domains (for trust and authority) and lots of high-quality paid links (for Quality Score).

If the site is popular with users, it leaves a gap where purely black hat SEO can still operate (3-6 months). I think the clear trend is for Google to shrink that window over time.

But unless you are an expert black hat SEO, this is not a viable strategy for your business’s reputation.

The strategies you are reading in this guide are.

Translating these revelations into practice requires a methodical, integrated framework.

The following three pillars summarise the new reality of SEO into an actionable strategy, drawing upon the methodologies and tools I have developed at Hobo Web.

This is the blueprint for building and demonstrating authority in 2025. These pillars do not operate in isolation; they form a virtuous feedback loop where success in one area amplifies the results in the others.

Pillar I – Foundational Trust and Technical Integrity

This is the price of admission.

A site must be technically efficient and transparent for its quality signals to be properly evaluated.

A clean technical foundation improves user experience, which strengthens Navboost scores and allows Google to crawl and index high-effort content efficiently.

- Technical Excellence: A deep, technical analysis is non-negotiable. Using a tool like the Screaming Frog SEO Spider is essential for finding and fixing root-cause issues such as broken links (404s), inefficient redirect chains, and duplicate content. A clean, logical site architecture is a fundamental signal of a well-maintained, trustworthy resource.

- User Experience as a Quality Signal: Google’s Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, which replaced First Input Delay in March 2024, and Cumulative Layout Shift) are not arbitrary metrics; they are an attempt to quantify the user’s experience of a page. Site speed is a confirmed, albeit lightweight, ranking signal, but its true impact is on user behaviour. Data shows that user patience is incredibly low, with the probability of a bounce increasing dramatically with every second of delay. A fast, stable site directly contributes to positive Navboost signals.

- Transparency and Responsibility: The QRG places significant emphasis on identifying “Who is Responsible for the Website and Who Created the Content on the Page”. The guidelines are explicit: “To understand a website, start by finding out who is responsible for the website and who created the content on the page.” A site that lacks clear ownership, author biographies, and contact information is considered “disconnected” and inherently untrustworthy. This is the core of the “Disconnected Entity Hypothesis,” which posits that sites failing this fundamental transparency test are systematically devalued because they lack the trust signals Google’s systems are designed to reward. These “unhealthy entities” are targeted by Google’s corrective algorithms (the HCU, spam updates, and core updates) and are continually devalued, making it impossible to build sustainable rankings. This is precisely why tools like the Hobo EEAT Tool are critical, as they help generate the necessary policy pages and author schema to demonstrate transparency and accountability, directly addressing these quality guidelines.
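For the Core Web Vitals mentioned in the list above, a small helper can turn raw field measurements into the ratings Google publishes. The thresholds below are the ones given in Google’s Core Web Vitals documentation for Largest Contentful Paint, Interaction to Next Paint (INP, which replaced First Input Delay as a Core Web Vital in March 2024) and Cumulative Layout Shift. The function and key names are hypothetical, and where your measurements come from (for example, a field-data export) is left to you.

```python
# (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),
    "inp_ms": (200, 500),
    "cls": (0.1, 0.25),
}


def rate_metric(name, value):
    """Classify one Core Web Vitals measurement."""
    good, needs_improvement = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def rate_page(measurements):
    """Classify every metric measured for a page."""
    return {name: rate_metric(name, value) for name, value in measurements.items()}


print(rate_page({"lcp_seconds": 2.1, "inp_ms": 350, "cls": 0.3}))
```

Google assesses these metrics at the 75th percentile of real page loads, so feed the helper percentile values rather than a single lab run.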

Pillar II – The Pursuit of Verifiable Expertise

With a solid foundation in place, the focus shifts to building a high Q* score through demonstrable expertise and authority. This involves a strategic approach to content creation and link acquisition.

- Executing on Content Effort: This is the implementation of the strategy from the previous section. Every piece of content must be viewed as an investment in a digital asset. A methodical process, such as following the comprehensive Premium SEO Checklist, ensures that content meets the highest standards of helpfulness, originality, and E-E-A-T, systematically building the signals that Google’s quality systems are designed to reward.

- Strategic Link Building: The confirmation of Q* and its reliance on PageRank redefines link building. The goal is no longer about the quantity of links but about “reducing the distance from a known good source”. This means strategically acquiring links from sites that Google already considers authoritative seeds in your topic area. Each of these links directly strengthens the PageRank component of your site’s Q* score.

- Entity SEO: Google has moved from evaluating “strings” (keywords) to understanding “things” (entities). Building a recognised brand entity within Google’s Knowledge Graph is a crucial component of demonstrating authority. Practical steps to implement this include securing your presence in authoritative databases, designating an “entity home” on your website with Schema markup, building a cohesive topical content structure, highlighting authors to build E-E-A-T signals, and maintaining consistent information across the web. A strong entity profile provides Google with verifiable information that reinforces trustworthiness and directly impacts the perception of your site’s quality.
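As one possible starting point for the “entity home” step described above, the sketch below generates Organization markup in JSON-LD using schema.org properties (`name`, `url`, `sameAs`, `founder`). The company details and the `organization_jsonld` helper are placeholders for illustration; which properties matter most for your entity is a judgment call, and nothing here guarantees a Knowledge Panel.

```python
import json


def organization_jsonld(name, url, same_as, founder=None):
    """Build schema.org Organization markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Profiles on other sites that describe the same entity.
        "sameAs": same_as,
    }
    if founder:
        data["founder"] = {"@type": "Person", "name": founder}
    return json.dumps(data, indent=2)


markup = organization_jsonld(
    name="Example Ltd",
    url="https://www.example.com/about/",
    same_as=["https://www.linkedin.com/company/example"],
    founder="Jane Doe",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The printed script tag would be placed on the page you designate as the entity home, and the details should match what you publish elsewhere on the web.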

Pillar III – The Zero-Click Playbook: From Optimisation to Influence

The final pillar acknowledges that the battle for visibility is increasingly fought on the search engine results page (SERP) itself, not just for the click. The old playbook of optimising for a list of blue links is obsolete.

Thriving in the zero-click era requires a new framework that shifts the mindset from Search Engine Optimisation to Search Experience Optimisation. The goal is no longer just to rank; it is to be the answer, wherever that answer is delivered, and to build influence that transcends the click.

- On-SERP SEO: Winning on Google’s Turf: The first priority is to adapt to the new terrain. This means optimising content to capture valuable SERP real estate like Featured Snippets, “People Also Ask” boxes, and Knowledge Panels. This involves, for example, using structured data to become eligible for rich results and structuring content with clear questions and answers.

- Building a Moat: Becoming Click-Independent: This strategy focuses on creating a strong digital presence that doesn’t rely solely on organic clicks. The objective is to build brand awareness and be the definitive answer wherever the user is looking. This builds influence and authority even if it does not result in a direct website visit, making your brand synonymous with your core topics.

- Rethinking Measurement: A New Scorecard for Success: This pillar calls for a change in how success is measured. Instead of focusing solely on traffic and rankings, the new scorecard should include metrics that reflect influence and on-SERP visibility, such as growth in branded search volume and share of voice in SERP features. The future of marketing is influence.
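The two scorecard metrics named above are simple to calculate once you have the data. The sketch below is one way to define them, under stated assumptions: “share of voice” is taken here to mean the fraction of tracked SERP features in which your brand appears, and the input structures are hypothetical, so adapt them to whatever your rank-tracking tool exports.

```python
def branded_search_growth(previous, current):
    """Percentage change in branded search volume between two periods."""
    if previous == 0:
        raise ValueError("previous period volume must be non-zero")
    return (current - previous) / previous * 100


def share_of_voice(feature_owners, brand):
    """Fraction of tracked SERP features in which the brand appears."""
    if not feature_owners:
        return 0.0
    owned = sum(1 for owners in feature_owners.values() if brand in owners)
    return owned / len(feature_owners)


# Hypothetical tracking data: SERP feature -> brands shown in it.
features = {
    "featured snippet: best running shoes": ["rival.example"],
    "people also ask: how often replace shoes": ["your-brand.example"],
    "knowledge panel: your brand": ["your-brand.example"],
    "featured snippet: trail vs road shoes": ["rival.example"],
}
print(branded_search_growth(1000, 1250))
print(share_of_voice(features, "your-brand.example"))
```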

Proactive AI-Readiness: The AI SEO Framework for 2025

The revelations about Google’s internal systems provide a clear blueprint for success in traditional search.

However, the digital landscape is undergoing another profound transformation: the shift to an AI-first world where information is increasingly mediated by generative AI systems like Google’s AI Overviews.

To thrive, a modern strategy must move beyond optimising for a list of blue links and begin actively shaping the knowledge base of these answer engines. This new discipline is AI SEO: a fusion of AI and SEO.

Understanding ‘Query Fan-Out’: How Answer Engines Think

At the heart of this new AI-driven landscape is a technique called “query fan-out”.

When a user enters a query, an AI system like Google’s doesn’t just look for a single matching page. Instead, it “explodes” or “fans out” the initial prompt into a multitude of related, more specific sub-queries to fulfil the user’s underlying intent.

The AI acts as a research assistant, issuing numerous queries simultaneously to retrieve specific, semantically rich “chunks” of content from diverse, authoritative sources.

It then synthesises these chunks into a single, comprehensive answer, often presented in an AI Overview.

According to Google’s own help documentation, AI Overviews appear when their systems determine that generative AI can be “especially helpful, for example, when you want to quickly understand information from a range of sources”. This means you are no longer competing to rank for one keyword; you are competing to be the factual building block for dozens of hidden queries the AI generates on the user’s behalf.
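The fan-out process described above can be sketched as a toy pipeline: expand the query, retrieve one chunk per sub-query, then collect the chunks that would feed the final answer. This is a deliberately simplified illustration, not how Google’s systems are built. A real answer engine would generate sub-queries with a language model and retrieve by semantic similarity; here the facets are fixed by hand and retrieval is plain word overlap.

```python
def fan_out(query, facets):
    """Expand one query into sub-queries by appending hand-picked facets."""
    return [f"{query} {facet}" for facet in facets]


def retrieve(sub_query, chunks):
    """Return the chunk sharing the most words with the sub-query, or None."""
    words = set(sub_query.lower().split())

    def overlap(chunk):
        return len(words & set(chunk["text"].lower().split()))

    if not chunks:
        return None
    best = max(chunks, key=overlap)
    return best if overlap(best) > 0 else None


def gather_chunks(query, facets, chunks):
    """Collect the distinct chunks selected across all sub-queries."""
    picked = []
    for sub_query in fan_out(query, facets):
        chunk = retrieve(sub_query, chunks)
        if chunk is not None and chunk not in picked:
            picked.append(chunk)
    return picked


chunks = [
    {"source": "a.example", "text": "Cushioning matters most for long runs"},
    {"source": "b.example", "text": "Typical price ranges from 80 to 160"},
    {"source": "c.example", "text": "Unrelated gardening advice"},
]
picked = gather_chunks("running shoes", ["cushioning", "price"], chunks)
print([chunk["source"] for chunk in picked])
```

Even in this toy version, the page that wins a place in the answer is the one with a clear, self-contained chunk for a specific sub-question, not the one that merely targets the head term.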

The Canonical Source of Truth: Your Website as the Master Record

In an era of information chaos and hallucination by AI, the most critical strategic mandate is to establish a “Canonical Source of Ground Truth” – a single, authoritative digital hub that your business exclusively owns and controls.

This hub is your website. Unlike social media platforms, which are “borrowed land” subject to algorithmic whims, your website is digital real estate you own. It is the only platform structurally and philosophically suited to serve as the master record of your identity, values, and offerings.

This concept is validated by Google’s E-E-A-T framework. A website’s architecture provides unique “affordances” for demonstrating credibility: dedicated “About Us” pages, detailed author bios, and the ability to publish in-depth original research. These features are not just design choices; they are the mechanisms for proving Experience, Expertise, Authoritativeness, and, most importantly, Trust.

Optimising the Synthetic Content Data Layer (SCDL)

The “Synthetic Content Data Layer” (SCDL) is the invisible knowledge space where AI systems generate responses about your entity by piecing together fragmented information from across the web.

It is an AI-constructed “fog” of probability and understanding about your business. Left unmanaged, this layer is filled with outdated data, competitor information, or outright fabrications.

The core AI SEO strategy is to take control of this layer. This involves a systematic process:

- Audit: Use AI tools to query what the SCDL currently contains about your business, identifying inaccuracies and information gaps.

- Gather “Ground Truth”: Collect all true, internal, and authoritative information about your entity – product details, company history, expert knowledge, and unique case studies. This is information only you can provide.

- Create and Verify: Use an AI assistant to draft extensive content based only on your provided ground truth. This “synthetic content” must then be meticulously reviewed by a human expert to fact-check every detail and infuse it with real-world experience (E-E-A-T).

- Publish: Post this verified and enriched content on your website, making it the canonical source of truth for that set of facts.

This process transforms your website into a trusted knowledge base for the AI ecosystem, eliminating the AI’s need to guess and ensuring that when users ask questions, the answers are drawn from your fact-checked narrative.

This is the antidote to the “Disconnected Entity Hypothesis,” where sites that lack transparency and a clear connection to a real-world entity are devalued.

The Cyborg Technique: Human-AI Symbiosis

The scale required to manage the SCDL is immense. This is where the “Cyborg Technique” becomes essential. The principle is augmentation, not replacement. It represents a strategic fusion of human experience and machine efficiency to achieve a 10x output in marketing efforts.

In this workflow, the human expert provides the irreplaceable elements of E-E-A-T: the strategic “why,” the real-world “experience,” and the ethical “trust.” The AI assistant, guided by this expertise, provides the scale: the rapid drafting of content based on your ground truth, the tireless analysis of the SCDL, and the scaled production of factual material for your canonical source.

A practical way to implement this is through “Agentica” – specialised, pre-packaged instruction sets or prompts that temporarily transform a general LLM into an expert analyst for a specific task, such as checking topical authority.

By giving the AI a specific job title, a rulebook, and a workflow, you command it to execute expert-level analysis, bridging the gap to true AI agency.
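The job title, rulebook and workflow structure can be captured in a reusable prompt template. The sketch below is a generic illustration of that structure only: the `build_agent_prompt` helper, its wording and the example rules are hypothetical and are not the format of any published Agentica prompt.

```python
def build_agent_prompt(job_title, rules, workflow, task_input):
    """Assemble an instruction set: a role, a rulebook, and numbered workflow steps."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    step_lines = "\n".join(
        f"{number}. {step}" for number, step in enumerate(workflow, start=1)
    )
    return (
        f"You are acting as: {job_title}\n\n"
        f"Rules:\n{rule_lines}\n\n"
        f"Workflow:\n{step_lines}\n\n"
        f"Input:\n{task_input}\n"
    )


prompt = build_agent_prompt(
    job_title="Topical authority analyst",
    rules=[
        "Use only the page list provided.",
        "Flag anything you cannot verify.",
    ],
    workflow=[
        "Group the pages by topic.",
        "List topics with thin or missing coverage.",
        "Recommend which pages to update, merge, or remove.",
    ],
    task_input="(paste your list of page titles and URLs here)",
)
print(prompt)
```

The resulting text can be pasted into any general-purpose LLM, and a human expert should still review the analysis it returns.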

This is not a shortcut; it is a framework that leverages AI to do what was previously impossible due to resource limitations, allowing even a small business to build a knowledge base with the depth of a much larger organisation.

Advanced AI SEO Tactics and Operations

With a canonical source established, more advanced tactics become possible:

- Positive Inference Optimisation: The goal is to provide AI with such a deep fortress of core facts that it can accurately infer correct answers to countless long-tail questions without you having to write a page for each one.

- Disambiguation Factoids: This is a reactive online reputation management tactic. If an AI confuses your brand with another entity, you can publish a clear, simple, and verifiable statement on your website to correct the record and provide a powerful counter-signal.

To operationalise this entire framework, it is recommended to appoint an “AI Reputation Watchdog.”

This is a dedicated role or function responsible for continuously auditing what AI says about the brand, maintaining the ground truth record, and deploying these strategies to ensure the brand is represented accurately.

Conclusion: The Enduring Principles of a Validated Approach

The revelations from the DOJ v. Google trial and the content warehouse leaks have pulled back the curtain on Google’s inner workings. We now have confirmation of a multi-stage ranking pipeline, the reality of the Q* site-level authority score, the direct role of human raters in training Google’s core AI, and the internal validation of ‘Content Effort’ as a key measure of quality.

Also, the rise of generative AI demands a new, proactive strategy.

The AI SEO framework provides a path to not only succeed in traditional search but to establish and defend your brand’s narrative in the emerging world of AI-driven answer engines. The future of SEO is a decisive return to the fundamentals of good business: building a trusted brand, creating tangible value for customers, and investing in genuine, demonstrable expertise.

The choice for businesses and marketers is now clearer than ever. One path is to continue chasing shortcuts and fleeting tactics in a system now proven to be designed to devalue them. The other is to adopt a methodical, quality-first approach that aligns directly with how Google measures and rewards authority, while simultaneously building a canonical source of truth to inform the AI systems of tomorrow.

The path to sustainable, long-term success in search is no longer a guess; it is a blueprint grounded in hard evidence. The cost of content production has gone up, but for those willing to make the investment, the return on true quality has never been higher.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research. I have over 20 years of experience writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited and verified as correct by me (and is under constant development). See my AI policy.