Disclaimer: This is not official. Any article (like this) dealing with the Google Content Data Warehouse leak requires a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything for the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2025 – and that purpose is to build high-quality websites. Feedback and corrections welcome. This article was first published on 6 October 2025.

Following on from my ongoing analysis of the recent Google data leak, where we’ve already dived into crucial components like LocalWWWInfo and its signals for local SEO, I’m now turning my attention to another cornerstone of the Content Warehouse: the GoogleApi.ContentWarehouse.V1.Model.ImageData protocol buffer.

That’s right, how Google handles images in Google Search and in Google Image Search. I’m happy with this article; I think it opens up a world of verifiable image optimisation possibilities, based on ground source data – the Google Content Warehouse data leak of 2024.

This is the core data structure Google uses to store, understand, and ultimately rank the visual content that populates its search results.

By deconstructing this technical blueprint, my analysis moves beyond the conventional SEO wisdom we’ve all relied on, revealing the foundational principles that truly drive modern image search.

What my analysis of the ImageData schema reveals is a multi-layered and deeply computational process.

QUOTE: “…The Google leak, Shaun Anderson Hobo Web just released all these ranking factors about you know, image uniqueness… wonderful study…” Cyrus Shephard, 2025

It all starts with an architectural framework obsessed with managing the web’s immense visual scale and redundancy, pinpointing a single, canonical source of truth for every unique image. Upon this foundation, Google unleashes a sophisticated suite of machine learning models to achieve total semantic mastery – extracting text with OCR, identifying real-world objects and entities, and classifying an image’s genre and style.

It’s this deep semantic understanding that forms the bedrock for Google’s advanced multimodal search capabilities.

Furthermore, the schema exposes a fascinating two-pronged model for quality assessment.

On one hand, intrinsic quality is quantified through algorithmic scores for aesthetics and technical merit. On the other hand, extrinsic performance is measured by granular user engagement and click signals from the real world. This dual approach ensures that the images Google ranks are not just beautiful, but demonstrably useful to searchers.

Top 10 Insights from the Article

- Original Source Detection: Google uses a timestamp called

contentFirstCrawlTimeto identify the original source of an image across the web, giving it preference over duplicates. - Algorithmic Aesthetics: Google doesn’t just guess; it uses a Neural Image Assessment (NIMA) model to score your images on technical quality (focus, lighting) and aesthetic beauty (composition, appeal).

- Anti-Clickbait Score: A

clickMagnetScoreactively penalizes images that get a lot of clicks from irrelevant “bad queries,” directly fighting visual clickbait. - Entity Linking: Objects in images are linked directly to Google’s Knowledge Graph via

multibangKgEntities, turning a picture of a tower into a direct connection to the “Eiffel Tower” entity. - Indexing Quality Gate: Not all images make it into the index. A system internally named “Amarna” acts as a quality gate, filtering out low-quality visuals.

- Text is Fully Indexed: Multiple Optical Character Recognition (OCR) systems read and index the text within your images, making every word on an infographic or product shot searchable.

- White Backgrounds Signal Trust: A

whiteBackgroundScoreis used as a proxy for professional product photography, signaling commercial trustworthiness to Google. - Hierarchy of Duplicates: Even when images are identical, Google ranks them within a

rankInNeardupCluster, giving the top spot to the one on the more authoritative or higher-quality page. - Licensing Powered by Metadata: The “Licensable” badge you see in search results is directly populated by metadata (

imageLicenseInfo) found in the image file (IPTC) or page schema. - Context-Aware Safety Score: An image’s final SafeSearch rating isn’t just about the pixels; it’s a

finalPornScorethat fuses visual analysis with contextual signals, including the search queries it ranks for.

Commerce and content moderation aren’t bolted on; they’re core, native functions.

We see rich data structures for product and licensing information deeply integrated into the schema, turning images into potential points of sale.

At the same time, a robust, multi-model system for SafeSearch acts as a guardian, fusing pixel analysis with contextual signals to ensure brand safety.

With this article, I’ve translated these complex technical realities into a strategic framework for Search Engine Optimisation. It proves that success in image search is no longer about simple metadata tweaks.

It demands a holistic strategy that aligns on-page context with in-image semantics and entity associations.

It requires us to create visually compelling content that satisfies both Google’s algorithmic quality models and human users, to implement flawless technical data for commercial visibility, and to produce information-rich visuals ready for the next generation of AI-driven, multimodal search.

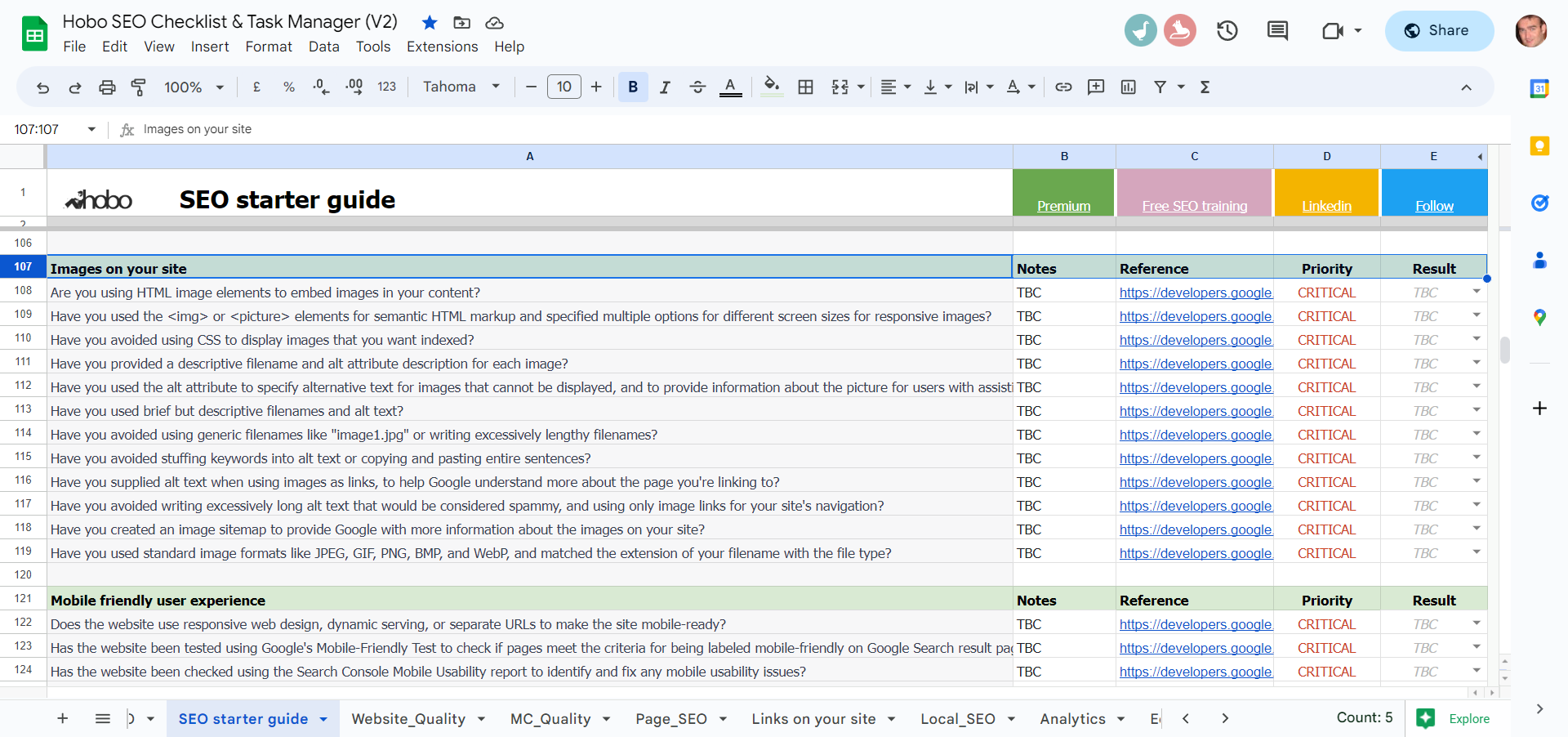

Hobo SEO Starter Guide

Table 1: Architecture & Provenance

This group of attributes relates to how Google identifies, stores, crawls, and ranks images at a foundational level, establishing a single source of truth for each visual asset.

Table 2: Semantic Understanding

These attributes detail how Google uses machine learning to comprehend the content of an image, extracting text, identifying objects, and linking them to real-world entities.

Table 3: Quality & Aesthetics

This group of attributes shows how Google algorithmically assesses an image’s quality, combining both its technical and artistic merit with real-world user engagement signals.

Table 4: Commerce & Licensing

These attributes are specifically designed to handle the commercial aspects of an image, including product information and licensing rights.

Table 5: Safety & Policy

This final group of attributes details the systems Google uses to moderate content and enforce safety policies, from SafeSearch classifiers to detectors for specific harmful content.

1. The Architectural Blueprint: How Google Indexes and Serves Images

Before an image can be understood or ranked, it must exist within Google’s vast and complex infrastructure.

The ImageDataschema provides a detailed blueprint of this architecture, revealing a system obsessed with managing redundancy, establishing provenance, and efficiently processing trillions of visual assets.

This section deconstructs the foundational attributes that govern an image’s identity and its journey through the indexing pipeline, from initial discovery by crawlers to its first evaluation by the core ranking engine.

1.1. Identity, Provenance, and Canonicity: Establishing a Single Source of Truth

The internet is rife with duplication; a single popular image can appear on millions of different URLs. Google’s first and most critical engineering challenge is to resolve this chaos into a single, manageable entity. The schema reveals a sophisticated system for this purpose, centred on the distinction between an image’s location and its identity.

The url attribute represents the straightforward, canonicalised absolute URL where an image file resides.

However, Google’s internal systems rely on more robust identifiers.

The docid is a fingerprint generated from the non-canonicalised URL, serving as a raw, initial identifier.

The crucial attribute, however, is canonicalDocid. The documentation explicitly states this is “the image docid used in image search” and that for data coming from core indexing systems like “Alexandria/Freshdocs,” it is a required field that must be populated.

This is the technical implementation of canonicalization for images. It is the key to which all other signals – from quality scores to click data – are attached.

Without a definitivecanonicalDocid, ranking signals would be fragmented across countless duplicate URLs, making coherent evaluation impossible.

The entire architecture is fundamentally designed to combat this visual content entropy, elevating the strategic importance of originality and demonstrable authority from a mere “best practice” to an architectural necessity for visibility.

This process of establishing a single source of truth is further informed by a suite of temporal attributes. firstCrawlTime and lastCrawlTime provide a history for a specific image instance at a given URL. More revealing is contentFirstCrawlTime, defined as the “earliest known crawl time among all neardups of this image.”

This is a powerful signal of provenance.

By tracking the first time the image’s content was seen anywhere on the web, Google can make a highly educated guess as to the original source.

An image whose contentFirstCrawlTime aligns closely with its publication on a high-authority domain, is far more likely to be considered the original than a copy discovered months later on a low-quality aggregator site.

The reference to “Alexandria” as a source for this data is a direct link to Google’s primary indexing system, named in recent documentation leaks. Just as the ancient Library of Alexandria sought to collect and organise all knowledge,

Google’s Alexandria system serves as the foundational repository for indexed web content, including images. ThecanonicalDocid is, in essence, the unique catalogue number for an image within this grand library.

1.2. Inside the Warehouse: The Indexing and Selection Pipeline

Discovery does not guarantee inclusion. The schema makes it clear that a selective, quality-gated process determines whether an image is worthy of being added to the main search index.

The isIndexedByImagesearchboolean flag and the corresponding noIndexReasonfield provide a definitive answer to whether an image was selected.

This proves the existence of an “Image Indexing Quality Gate.”

The corpusSelectionInfoattribute offers a clue as to how this selection is made, explicitly referencing “Amarna” as a system for corpus scoring.

While public information on Amarna is scarce, its function can be inferred from context. It appears to be an early-stage processing or scoring system that evaluates images for inclusion in various corpora.

An image that fails to meet a minimum quality or relevance threshold at this stage may be discarded, with the reason noted innoIndexReason.

This is analogous to the “Discovered – currently not indexed” status for web pages in Google Search Console, confirming that a quality threshold exists for images as well.

This aligns with the broader concept of a tiered indexing architecture, reportedly managed by a system called “SegIndexer“. It is plausible that systems like Amarna perform the initial assessment that determines not only if an image is indexed, but which tier of the index it is placed into.

Higher-quality, more authoritative images would likely be placed in a more frequently updated, higher-priority tier, analogous to the flash drive storage mentioned for the most important content.

TheindexedVerticals attribute further supports this, suggesting that images can be specifically processed and indexed for different verticals like Shopping or News, each with its own criteria and quality thresholds.

Therefore, image SEO must be a two-step process: first, ensuring the image and its context are of sufficient quality to pass the “Amarna gate,” and second, optimising its semantic and ranking signals for the main serving engine.

1.3. From Storage to SERP: The Mustang Serving Engine and Initial Ranking

Once an image is indexed and tiered, it becomes eligible for ranking by Google’s primary serving systems.

The schema provides direct evidence linking this process to an internal system named “Mustang”. The packedFullFaceInfo attribute, which encodes data about faces in an image, is explicitly noted as being packaged “for storage in mustang”.

This, combined with information from leaked documents confirming Mustang as the primary ranking system, solidifies its role in the image search pipeline.

Several attributes represent the output of this initial ranking process. The imagerank field is a straightforward, high-level score representing the image’s overall rank.

More nuanced is the rankInNeardupCluster attribute. This field, which ranks an image within its own cluster of visual duplicates, is a fascinating window into Google’s evaluation logic.

It demonstrates that even when two images are pixel-for-pixel identical, Google creates a preference hierarchy.

The image on the more authoritative domain, with a higher resolution, better surrounding context, or an earlier contentFirstCrawlTime, will receive a better rank (closer to 1) within the cluster.

This is the mechanism that allows Google to surface the original creator’s work over a copy on an aggregator site.

The imageContentQueryBoost attribute reveals another layer of sophistication, directly referencing the “pamir algorithm.”

Research into PAMIR identifies it as a machine learning algorithm designed specifically for “multimodal retrieval, such as the retrieval of images from text queries”.

It is a scalable, discriminative model that learns a ranking function to order images based on their relevance to a given text query. The inclusion of a PAMIR-derived score in theImageData schema is a critical piece of evidence.

It shows that Google’s ranking is not based solely on generic, query-agnostic signals like PageRank or image quality.

Instead, it involves query-dependent boosts calculated by sophisticated ML models that assess the specific relevance of an image to the user’s intent. This represents an early and foundational use of the multimodal principles that now power advanced systems like Gemini and MUM.

2. Semantic Mastery: Decoding the Content and Context of Visuals

For Google to effectively rank an image, it must move beyond its architectural properties and achieve a deep, human-like understanding of its content.

The ImageData schema details a formidable arsenal of technologies dedicated to this task, transforming opaque pixels into structured, machine-readable data.

This process of semantic extraction is not a single action but a multi-layered analysis that encompasses text recognition, object identification, entity linking, and genre classification. The heavy investment in multiple, overlapping systems implies that the visual content of an image is now as important, if not more so, than the surrounding text for determining relevance.

2.1. Reading the Unreadable: The Power of Optical Character Recognition (OCR)

Any text embedded within an image represents a rich source of contextual information. The schema reveals that Google employs multiple, redundant OCR systems to ensure this data is captured.

The presence of both ocrGoodoc and ocrTaser fields suggests at least two distinct OCR engines are run on images, likely with different strengths and specialisations. This turns every meme, infographic, product label, presentation slide, and screenshot into a fully indexable text document.

The ocrTextboxes attribute adds another layer of sophistication.

It doesn’t just store the extracted text; it stores the text associated with specific bounding boxes within the image.

This allows Google to understand the spatial relationship of text to other elements. For example, it can differentiate between a headline at the top of an infographic and a source citation at the bottom, or associate a product name with the specific item it’s printed on.

This capability is foundational for answering highly specific queries and makes the visual design of information-dense images a direct SEO consideration. Clear, high-contrast, and logically placed text within an image is more likely to be accurately parsed and utilised for ranking.

2.2. From Pixels to Entities: Object, Face, and Concept Recognition

Beyond text, Google performs a comprehensive analysis to identify the objects, people, and concepts depicted in an image.

This suite of attributes reveals that Google’s approach is not just recognition, but relational knowledge graphing. The system is designed to understand not just what is in an image, but how those objects and entities relate to each other and to the broader world of information.

The process begins with imageRegions, which identifies discrete objects and assigns them labels within bounding boxes.

This is the base layer of object recognition. The deepTags attribute, often used for shopping images, provides more granular and commercially-oriented classifications, such as “long-sleeve shirt” or “leather handbag.”

The true power of this system is unlocked by multibangKgEntities.

This field links the recognised objects to specific entities within Google’s massive Knowledge Graph.

An image containing a depiction of the Eiffel Tower is not merely tagged with the string “tower”; it is annotated with a direct link to the unique Knowledge Graph entity for the Eiffel Tower.

This transforms the image from a simple collection of pixels into a node in an “interconnected web of knowledge”.

This is the technical underpinning of entity-based SEO. An image containing clear, identifiable entities that are contextually relevant to the page’s primary topic directly feeds and reinforces Google’s understanding of that topic, allowing the image and the page to rank for a much broader set of conceptual and semantic queries.

2.3. Classifying Intent and Genre: Is it a Photo, Clipart, or Line Art?

User intent in image search is often tied to the type of visual required.

A user searching for “business growth chart” likely wants a graphic or line art, not a photograph of a stockbroker.

The schema includes specific classifiers to address this need. The photoDetectorScore, clipartDetectorScore, and lineartDetectorScore attributes each provide a confidence score for an image belonging to one of these fundamental genres.

This classification allows Google to pre-filter results and serve a more relevant set of images that match the user’s implicit intent. The presence of associated ...Version fields for each of these detectors indicates that these are active areas of development, with the underlying machine learning models being continuously trained and updated.

For content creators, this means that the stylistic choice of an image is a direct ranking consideration.

Creating a photograph when a user is looking for an illustration may result in the image being filtered out, regardless of its other qualities.

2.4. The Rise of Multimodal Understanding

The combination of OCR, entity recognition, and genre classification detailed in the ImageData schema provides the rich, structured data necessary to power Google’s most advanced AI initiatives. This schema is the source of truth that enables multimodal models like MUM and Gemini to perform complex, cross-modal tasks.

ImageData proto for the shirt already contains the necessary structured information: imageRegions identifies it as a shirt, deepTags might classify the pattern as “paisley,” and colorScore quantifies its colour profile. The MUM algorithm can then use this structured data to formulate a new search for ties that match those attributes.

TheImageData proto is the critical bridge that translates unstructured pixels into the structured knowledge that these advanced AI systems require to function.

This signals a future where the most valuable images are those that are information-rich, containing multiple, clearly-depicted, and machine-readable concepts.

3. The Quality Equation: Gauging Aesthetics, Engagement, and Trust

Relevance alone is insufficient for high rankings in Google Images.

The ImageData schema reveals a sophisticated and multi-faceted system for evaluating an image’s “quality.”

This evaluation is not a single score but a composite of signals that measure an image’s intrinsic visual appeal, its real-world performance with users, and various technical proxies for professionalism and trustworthiness.

This analysis demonstrates that Google has effectively bifurcated the concept of “image quality” into two distinct, measurable paths: intrinsic aesthetic quality and extrinsic user-perceived quality. A successful image must excel in both.

3.1. Algorithmic Aesthetics: NIMA and the Quantification of Beauty

Historically, aesthetic quality was considered a subjective domain, impossible for a machine to evaluate. Google’s Neural Image Assessment (NIMA) framework represents a direct challenge to this notion, and its outputs are stored directly in the ImageData schema.

NIMA is a deep convolutional neural network (CNN) trained not to assign a simple high/low score, but to predict the distribution of human opinion scores on a scale of 1 to 10. This allows it to capture the nuance of human perception, including the degree of consensus among raters.

The schema contains two distinct NIMA-related fields: nimaVq and nimaAva. Based on Google’s research, these likely correspond to two different aspects of quality. nimaVq likely represents the “technical quality” score, measuring objective, pixel-level attributes like sharpness, lighting, exposure, and the absence of noise or compression artefacts.

nimaAva, referencing the Aesthetic Visual Analysis (AVA) dataset used to train the model, likely represents the “aesthetic” score, which captures more subjective characteristics like composition, colour harmony, and emotional impact.

The inclusion of these scores is a paradigm shift for image SEO. It confirms that Google is algorithmically assessing the artistic and technical merit of photographs.

Images that are out of focus, poorly lit, or awkwardly composed will receive lower NIMA scores, directly impacting their quality evaluation.

The schema also contains fields like styleAestheticsScore and deepImageEngagingness, suggesting that NIMA is part of a broader, evolving suite of models dedicated to quantifying visual appeal. This means that investing in professional photography and high-quality graphic design is no longer just a matter of branding; it is a direct input into Google’s ranking systems.

3.2. The User as the Ultimate Arbiter: Click and Engagement Signals

While algorithmic aesthetics can predict potential quality, Google relies on real-world user behaviour as the ultimate measure of an image’s success. The schema contains a rich set of attributes dedicated to capturing user engagement signals, which are noted as being sensitive “Search CPS Personal Data.”

The imageQualityClickSignalsfield is a general container for this data.

More specific are h2i (hovers-to-impressions) and h2c (hovers-to-clicks). These ratios likely measure the performance of an image’s thumbnail in the search results.

A high h2i ratio would indicate that when a user’s mouse hovers over the thumbnail, it is compelling enough to generate a larger impression.

A high h2c ratio would indicate that the larger impression successfully convinces the user to click through to the source page. Together, these metrics provide a granular view of an image’s ability to attract attention and satisfy intent in a competitive SERP environment. This system is the image-centric equivalent of NavBoost, the powerful click-based re-ranking system used in web search.

Crucially, not all clicks are considered equal.

The clickMagnetScore is defined as a score indicating how likely an image is “considered as a click magnet based on clicks received from bad queries.”

This is a direct algorithmic countermeasure to visual clickbait. An image with a shocking or ambiguous thumbnail might generate a high click-through rate, but if those clicks come from irrelevant queries and result in users immediately bouncing back to the SERP, this negative signal will be captured.

This proves that Google’s system is sophisticated enough to differentiate between a relevant, satisfying click and a misleading one. The strategic implication is clear: the goal is not to attract any click, but to attract the right click from a satisfied user, as the wrong ones are being measured and may actively harm an image’s long-term ranking potential.

3.3. Proxies for Trust and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

While E-E-A-T is a framework for content on a page, not a direct property of an image, the schema contains several technical attributes that serve as powerful proxies for the visual aspects of trust and professionalism.

The whiteBackgroundScore is a prime example. This classifier identifies images that are likely objects isolated on a clean, white background.

This style is the hallmark of professional product photography used by reputable e-commerce sites. A high score in this field is a strong signal of commercial intent and trustworthiness. It helps Google distinguish a polished product shot from a casual photo of the same item.

The isVisible attribute is a simple but fundamental signal. It distinguishes between an image that is inlined on a page (typically via an <img> tag) and one that is merely linked to. An inlined image is an integral part of the page’s content, whereas a linked image is not.

This basic distinction helps Google understand the publisher’s intent.

A more technical but significant signal is codomainStrength.

This measures the confidence that an image is hosted on a “companion domain,” such as a dedicated Content Delivery Network (CDN). Well-structured, professional websites often serve their images from a CDN for performance reasons.

A high codomainStrength value is therefore a technical fingerprint of a sophisticated and well-maintained web property, contributing to its perceived authoritativeness. A site composed of low-resolution, non-inlined images served from disparate, low-reputation domains will score poorly on these proxies, visually undermining its E-E-A-T profile.

4. The Commerce Engine: Images in a Commercial Context

Google Images is not merely a repository of pictures; it is a powerful engine for commercial discovery and transaction.

The ImageData schema reveals that commerce is not an ancillary feature but a core, native function, with deeply integrated data structures designed to surface products, manage licensing rights, and leverage embedded metadata. This architecture transforms any relevant image into a potential point of sale or a monetisable asset.

4.1. Shoppable Surfaces: Integrating Products into Visual Search

The ambition to make the visual web shoppable is evident in the complexity of the shoppingProductInformationattribute. This is not a simple “isProduct” flag but a comprehensive, nested protocol buffer designed to store all the data necessary to construct a rich product listing.

It includes fields for product details, pricing, availability, and seller information. This is the backend data structure that powers the merchant listing experiences seen in Google Search, Google Images, and Google Lens.

The existence of such a detailed structure within the core ImageData model signifies that from the moment an image is indexed, it is evaluated for its commercial potential. E-commerce SEOs can directly influence the population of this field through the meticulous implementation of Product and Merchant Listing structured data on their websites.

When Google’s crawlers process a page with valid product schema, the data is extracted and used to populate theshoppingProductInformation proto in the Content Warehouse, creating a direct pipeline from on-page markup to enhanced visibility in commercial search results.

Complementing this direct product information is the featuredImagePropattribute, which contains an inspiration_score. This score indicates “how well an image is related to products, or how inspirational it is.” This suggests a separate system, likely used in more discovery-oriented surfaces like Google Discover or style-based searches, that identifies images that may not be direct product shots but are contextually relevant to a commercial journey.

An image of a well-decorated living room, for example, could receive a high inspiration_score and be linked to the shoppable products (sofa, lamp, rug) depicted within it.

4.2. Rights, Royalties, and Responsibility: Managing Image Licensing

For photographers, artists, and stock photo agencies, asserting and monetising image rights is a critical business function. The ImageData schema provides a direct, machine-readable link between an image creator’s metadata and their ability to do so through Google’s search features.

The imageLicenseInfofield is a structured container for storing the specific license details of an image.

This field is the direct driver of the “Licensable” badge that appears on images in the search results. This badge signals to users that licensing information is available and provides a direct link for them to acquire the image legally.

ThelicensedWebImagesOptInState attribute further allows webmasters to control how their images are used in Google products, such as in large previews, providing an additional layer of rights management.

This system creates a clear and powerful incentive for creators to provide accurate metadata.

The primary methods for populating the imageLicenseInfo field are through the use of ImageObjectstructured data on the webpage or by embedding IPTC photo metadata directly into the image file. When Google processes an image with this metadata, it populates the corresponding fields in theImageData proto.

The Mustang serving engine then reads this field and, if the data is valid, displays the “Licensable” badge. This creates a complete, end-to-end system where providing structured metadata results in a tangible commercial benefit, closing the loop between content creation and monetisation.

4.3. The Power of Embedded Metadata

Google’s data collection is not limited to on-page signals or its own analysis. The schema confirms a deep investment in extracting metadata embedded directly within image files. The presence of two distinct fields, embeddedMetadata (for standard EXIF/IPTC data) and the “more comprehensive” extendedExif, indicates an ongoing effort to parse and utilise this rich data source.

This embedded data can include a wealth of information that directly supports E-E-A-T and provenance signals: creator name, copyright notices, creation date, and even GPS location data. This information, coming from the file itself, is considered a strong, first-party signal.

For example, if the creator field in the IPTC data matches the author of the article in which the image is embedded, it creates a powerful signal of authenticity and expertise. This confirms that a robust workflow for embedding complete and accurate IPTC metadata is not just good practice for asset management but is a direct way to provide Google with valuable, trust-building information about an image’s origin and ownership.

5. The Guardian: Policy, Safety, and Content Moderation

Operating a search engine at the scale of Google carries an immense responsibility to protect users and brands from harmful, unsafe, or inappropriate content.

The ImageData schema provides a look inside the sophisticated, multi-layered defence system Google has built to police the visual web.

This system demonstrates a continuous “arms race” in content moderation, driven by advancements in machine learning, and reveals that an image’s safety rating is not static but is a dynamic score influenced by a fusion of visual analysis and contextual data.

5.1. The SafeSearch Spectrum: A Multi-Model Approach

Google’s approach to SafeSearch is not monolithic. The schema reveals the existence of multiple, overlapping scoring systems, reflecting an evolution in technology and a defence-in-depth strategy. Fields like adaboostImageFeaturePornare explicitly marked as deprecated, showing a clear progression from older machine learning techniques.

The modern approach is anchored by brainPornScores. This attribute, named after the Google Brain deep learning project, stores a set of scores for various sensitive categories, including “porn, csai, violence, medical, and spoof.”

This demonstrates that SafeSearch classification extends far beyond adult content to a broader spectrum of brand safety concerns. This score is based on a direct analysis of the “image pixels,” using powerful computer vision models to identify potentially problematic content.

However, pixel analysis alone is not the final word. The schema also contains finalPornScore, which is described as a more holistic score based on a wider range of “image-level features (like content score, referrer statistics, navboost queries, etc.).”

The documentation provides a crucial instruction: “if available prefer final_porn_score as it should be more precise.” This reveals that Google’s ultimate safety classification is a “fusion system.” It combines the initial computer vision analysis from brainPornScores with contextual and user behaviour data.

The “navboost queries” signal is particularly telling; it means that the types of queries for which an image ranks and receives clicks can influence its safety rating.

A completely innocuous image could potentially be flagged as unsafe if it is consistently embedded on problematic websites or starts ranking for inappropriate queries. This makes off-page context and query associations critical components of maintaining a positive brand safety profile.

5.2. Identifying Unwanted and Harmful Content

Beyond the granular classifications required for SafeSearch filtering, Google also has mechanisms for identifying content that should be removed from the index entirely. The isUnwantedContentboolean flag serves as a general-purpose field to mark an image for exclusion from the search index. This is likely used for content that violates Google’s core policies, such as spam or malware.

The hateLogoDetectionattribute demonstrates a more targeted and proactive approach to content moderation. This field stores the output of a classifier from the “VSS logo_recognition module” specifically trained to identify hate symbols.

The existence of such a specialised detector shows that Google actively develops and deploys models to combat specific categories of harmful content, rather than relying solely on general-purpose classifiers.

This reflects a commitment to addressing nuanced and evolving safety challenges on the web, protecting both users and the integrity of the search results. For publishers, this underscores the non-static nature of content policies; as Google’s detection capabilities improve and new threats emerge, the definition of acceptable content evolves, requiring ongoing vigilance to ensure compliance.

6. Strategic Synthesis: An Actionable Framework for Modern Image SEO

The deep analysis of the ImageData schema necessitates a fundamental evolution in the strategic approach to image optimisation. Tactical checklists focused on filenames and alt text are no longer sufficient.

A modern, data-informed strategy must be holistic, acknowledging that Google perceives images through a complex lens of architectural provenance, semantic understanding, quantified quality, commercial intent, and safety protocols. This concluding section synthesises the report’s findings into a cohesive, actionable framework for professionals seeking to achieve sustained visibility and success in image search

6.1. The Multi-Factor Model for Image Relevance: Beyond Alt Text

The schema makes it clear that image relevance is determined by a triad of interconnected factors. A successful strategy must optimise for all three pillars:

- On-Page Context: This is the domain of traditional image SEO. It includes descriptive filenames, keyword-rich alt text, relevant captions, and ensuring the image is placed near topically-aligned text on the page. These signals provide essential hints to Google about the image’s subject matter and are crucial for website accessibility.

- In-Image Semantics: This is the new frontier. As revealed by the OCR, object recognition, and entity detection attributes, Google’s primary source of understanding is now the image’s pixels. The strategy must therefore shift to optimising the content within the image. This means creating infographics with clear, legible text for OCR; using photographs that contain distinct, easily identifiable objects and entities; and ensuring the primary subject is prominent and unambiguous.

- Entity Association: The most advanced pillar is the image’s connection to the Knowledge Graph via the

multibangKgEntitiesfield. The goal is to create visuals that act as a bridge between your content and established real-world entities. For a page about electric vehicles, an image featuring a clear shot of a “Tesla Model 3” (a specific entity) is far more powerful than a generic picture of a car, as it directly reinforces the page’s topical authority within the Knowledge Graph.

An optimal strategy ensures these three pillars are in perfect alignment.

For an article reviewing the “iPhone 15 Pro,” the ideal image would be an original, high-quality photograph (Pillar 2) clearly showing the device (Entity, Pillar 3), embedded on the page with the alt text “iPhone 15 Pro in titanium finish” (Pillar 1).

6.2. Cultivating Algorithmic Favour: Optimising for Quality and Engagement

Google’s bifurcated quality model requires a two-pronged optimisation approach. It is not enough for an image to be aesthetically pleasing if no one clicks on it, and a clickbait image that disappoints users will be penalised.

- Optimise for Intrinsic Quality (NIMA): Invest in professional photography and graphic design. Pay close attention to technical fundamentals like lighting, focus, and composition. For product photography, adhere to best practices like using clean backgrounds, which is measured by the

whiteBackgroundScore. The goal is to create images that would be rated highly by a human judge, as thenimaVqandnimaAvascores are designed to be an algorithmic proxy for that judgment. - Optimise for Extrinsic Quality (User Clicks): An image’s thumbnail is its advertisement in the SERP. A/B test different crops, compositions, and aspect ratios to identify which versions generate the highest click-through rates from relevant queries. Monitor performance to maximise positive signals like

h2c(hovers-to-clicks) while actively avoiding strategies that could trigger a highclickMagnetScore. This creates a feedback loop where you produce aesthetically strong assets and then refine their presentation to maximise user engagement.

6.3. Maximising Commercial and Monetisable Visibility

For e-commerce businesses and content creators, the ImageData schema provides a direct blueprint for driving commercial outcomes.

- For E-commerce: The flawless implementation of

ProductandMerchantListingstructured data is non-negotiable. This is the direct mechanism for populating the richshoppingProductInformationproto in the Content Warehouse, which in turn enables eligibility for shoppable rich results across Google’s surfaces. Treat structured data for images with the same priority as for the product page itself. - For Content Licensors: Establish a rigorous, automated workflow for embedding comprehensive IPTC metadata into every image file before it is uploaded. Specifically, ensure the “Web Statement of Rights” and “Licensor URL” fields are correctly populated. This is the most direct and scalable way to populate the

imageLicenseInfofield, earn the “Licensable” badge, and drive qualified traffic to your licensing or sales pages.

6.4. Future-Proofing for a Multimodal, AI-Driven World

The ImageData schema is not a static blueprint; it is the foundation upon which the future of search is being built. The rich, structured data it contains is the fuel for advanced multimodal AI like MUM and Gemini, which are designed to answer complex, conversational, and cross-format queries.

The strategic imperative is to stop thinking of images as page decorations and start treating them as dense packets of structured data. Future-proof visual assets are those that provide answers and context. This includes:

- Information-Rich Graphics: Create charts, diagrams, and infographics with clear, machine-readable text and data that can be extracted via OCR.

- Entity-Dense Photography: Produce photographs that depict multiple, contextually relevant entities in a clear relationship with one another.

- Contextual Video Content: As Google’s video analysis capabilities grow, providing video content that visually demonstrates processes or concepts will become increasingly important.

By creating visual content that is not just keyword-relevant but conceptually and factually dense, you are not just optimising for today’s search engine; you are providing the structured data that will be essential for visibility in the more intelligent, conversational, and multimodal search engines of tomorrow.

Read next

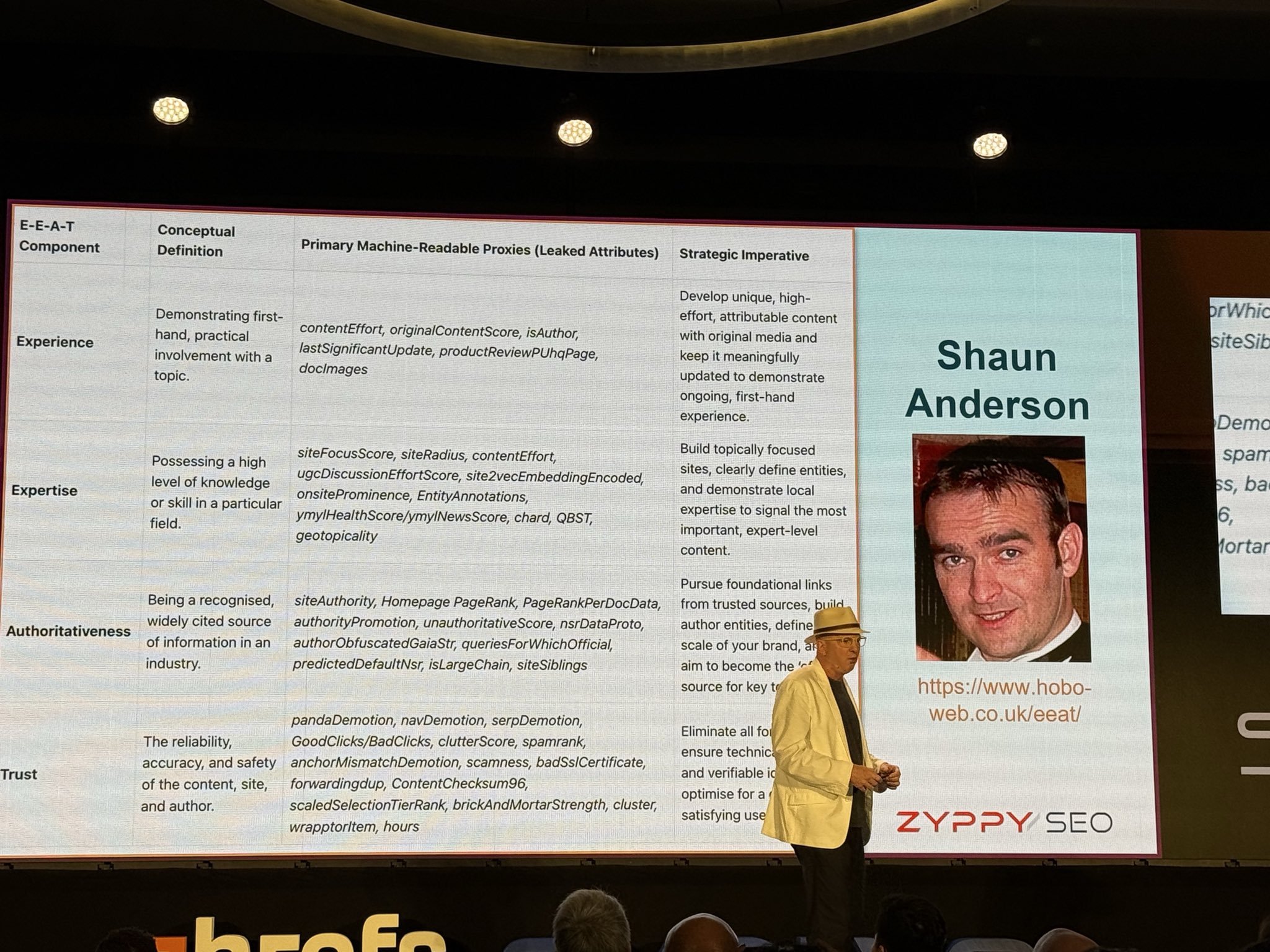

Read my article that Cyrus Shephard so gracefully highlighted at AHREF Evolve 2025 conference: E-E-A-T Decoded: Google’s Experience, Expertise, Authoritativeness, and Trust.

The fastest way to contact me is through X (formerly Twitter). This is the only channel I have notifications turned on for. If I didn’t do that, it would be impossible to operate. I endeavour to view all emails by the end of the day, UK time. LinkedIn is checked every few days. Please note that Facebook messages are checked much less frequently. I also have a Bluesky account.

You can also contact me directly by email.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research (and ChatGPT 5 for image generation). I have over 20 years writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited, and verified as correct by me (and is under constant development). See my AI policy.