This is a preview of Chapter 5 from my new ebook – Strategic SEO 2025 – a PDF which is available to download for free here.

Perhaps the most impactful revelation from the DOJ Vs Google Antitrust trial was the detailed exposition of the Navboost system.

This system provides the crucial ‘C’ (Clicks) signal for the T* score.

For decades, the search engine optimisation (SEO) community has debated the role of user clicks in ranking, with Google’s public statements often being evasive or dismissive.

The trial testimony, particularly from Google VP Pandu Nayak, ended this debate.

Navboost was confirmed to be “one of the important signals” that Google uses to refine and prioritise search results based on a massive, historical repository of user interaction data.

I. Introduction: Redefining Relevance Through Observed User Interaction

The precise mechanisms governing Google’s search rankings have been a subject of intense speculation, reverse engineering, and strategic analysis. The information retrieval paradigm has been understood through the public-facing pillars of content analysis and link-based authority.

However, recent disclosures from legal proceedings and Google leaks have provided unprecedented clarity on a long-standing, foundational component of Google’s ranking systems: Navboost.

Perhaps the most impactful revelation from the trial was the detailed exposition of the Navboost system.

Since forever, the search engine optimisation (SEO) community has debated the role of user clicks in ranking, with Google’s public statements often being evasive or dismissive. The trial testimony, particularly from Google VP Pandu Nayak, ended this debate.

Navboost was confirmed to be “one of the important signals” that Google uses to refine and prioritise search results based on a massive, historical repository of user interaction data.

Navboost is not a new algorithm but a mature, deeply integrated system that embodies a fundamental paradigm shift in how search relevance is determined.

It represents the move from systems that primarily infer relevance through proxies – such as keyword density (Term Frequency-Inverse Document Frequency, or TF-IDF) and backlink authority (PageRank) – to a system that directly observes and measures relevance through the lens of aggregated user interaction and satisfaction signals.

Testimony and documentation have characterised Navboost as one of Google’s “strongest” and “most important” ranking signals, underscoring the critical necessity for the SEO and digital marketing communities to develop a granular understanding of its mechanics.

II. The Genesis of Navboost: A Historical and Foundational Analysis

The system is not a recent innovation but a veteran component of Google’s ranking architecture, with roots extending back nearly two decades.

Its conceptual framework, laid out in a 2004 patent, reveals that a user-centric feedback loop was part of Google’s strategic vision long before it became a public topic of discussion.

2.1 A Timeline of Revelation

The public’s understanding of Navboost has evolved through a series of key disclosures, moving from obscure legal mentions to detailed technical revelations.

- Origins (c. 2005): During the 2023 antitrust trial, Pandu Nayak confirmed that the Navboost system dates back to approximately 2005. This timeline establishes Navboost as a mature and deeply integrated system, co-evolving with many of Google’s other core ranking technologies for nearly two decades. Its longevity implies that the models powering it are trained on an immense historical dataset of user interactions, giving it a stable and deeply nuanced understanding of query-level user satisfaction.

- Early Public Mentions (2012): The first known public mention of the “NavBoost” name appeared in footnotes of a 2012 Federal Trade Commission (FTC) case against Google. In this case, Google representatives testified under oath that user signals influence rankings. This early disclosure, though not widely publicised at the time, demonstrates that knowledge of the system and its function has existed within legal and regulatory circles for over a decade, long before the recent trial brought it to mainstream attention. “ In addition, click data (the website links on which a user actually clicks) is important for evaluating the quality of the search results page. As Google’s former chief of search quality Udi Manber testified: “The ranking itself is affected by the click data. If we discover that, for a particular query, hypothetically, 80 percent of people click on Result No. 2 and only 10 percent click on Result No. 1, after a while we figure out, well, probably Result 2 is the one people want. So we’ll switch it.”

- Modern Confirmation (2023-2024): The modern, detailed understanding of Navboost is a direct result of two pivotal events. First, the extensive testimony during the 2023 U.S. DOJ antitrust trial provided high-level confirmation of the system’s existence, its importance, and its basic function of using historical click data. Second, the May 2024 leak of internal Google Search API documentation offered an unprecedented, granular view into the system’s mechanics, including the specific types of click signals it processes and its relationship with other ranking modules. Together, these two sources form the evidentiary foundation for the current, in-depth analysis of the Navboost system.

2.2 The Foundational Blueprint: Patent US8595225B1

Strong evidence suggests that the conceptual blueprint for Navboost is detailed in Google patent US8595225B1, titled “Systems and methods for correlating document topicality and popularity,” which was filed in 2004.

The patent was co-authored by prominent Google engineers, including Amit Singhal, and its filing date precedes Navboost’s operational launch by approximately one year, making it a compelling candidate for the system’s foundational design. An analysis of the patent’s core mechanisms reveals a system that is functionally identical to Navboost as described in modern disclosures.

The development of this patent in 2004, a period when PageRank was the dominant and celebrated model of Google’s innovation, is particularly telling. It indicates that Google’s core engineering team was pursuing a dual-track approach to search ranking from very early on.

While PageRank leveraged the web’s explicit link structure as a proxy for authority, this patent laid the groundwork for a parallel system that would leverage implicit user feedback as a proxy for satisfaction and relevance.

This suggests a strategic foresight – an early recognition that link-based authority was an incomplete and potentially manipulable signal. The system described in the patent was designed to serve as a necessary check and balance, a “human feedback loop” to validate or correct the rankings produced by purely link-based algorithms.

Navboost, therefore, was not an evolutionary afterthought but a core component of Google’s original grand design for a robust and defensible search engine.

- Core Mechanism 1: User Navigational Patterns as a Proxy for Clicks: The patent’s text does not frequently use the explicit term “click.” Instead, it refers to “user navigational patterns,” “documents visited by users,” and user “selections” of documents. In the context of a search engine results page (SERP), these phrases are functional equivalents of a click. A user “visits” or “selects” a document from a list of search results by clicking on its corresponding link. The patent’s framework is built entirely on analysing these user choices to infer document quality and relevance.

- Core Mechanism 2: Popularity Scores: The patent explicitly describes a method for assigning “popularity scores” to documents based on the frequency and patterns of these user interactions. This mechanism is a direct precursor to Navboost’s function. During the antitrust trial, Google executive Eric Lehman confirmed that Navboost assigns relative scores to documents based on user interaction data, validating this core concept from the patent. The patent outlines how these scores are computed by analysing which documents users choose to interact with, establishing a direct link between user behaviour and a quantifiable ranking signal.

- Core Mechanism 3: Topicality and Correlation: A crucial element of the patent is its method for mapping documents to specific topics and then calculating “per-topic popularity information”. This involves correlating the popularity data (derived from user interactions) with the topics associated with each document. This process perfectly mirrors Navboost’s primary objective: to refine search results for a specific query (a topic) by promoting the documents that have proven most popular (most satisfying to users) for that same query in the past. It is a system designed not for general, site-wide popularity, but for query-specific, contextual popularity.

In synthesis, the 2004 patent outlines a dynamic, user-responsive system that ranks documents by correlating their topical relevance with their demonstrated popularity, as measured by user navigational patterns.

This is, in essence, a precise and accurate abstract description of the Navboost system’s core function, providing a clear window into the foundational principles that have guided its development for nearly two decades.

2.3 The “Made Up Crap” Debate: A History of Public Denial and Private Reality

The definitive confirmation of Navboost’s role resolves a long-standing and often contentious debate within the SEO community, a history that now appears layered with irony.

For years, Google representatives publicly and repeatedly denied that user click data was a significant, direct ranking factor. Googlers like Gary Illyes often described clicks as a “very noisy signal” and, in a particularly blunt 2019 dismissal of theories around dwell time and click-through rates (CTR), stated:

“Dwell time, CTR, whatever Fishkin’s new theory is, those are generally made up crap.”

This official stance flew in the face of public experiments, most famously conducted by Rand Fishkin, which demonstrated that a sudden burst of clicks from a large audience could cause a page to temporarily shoot up in the rankings. While Google attributed these results to other correlated factors like social mentions and search volume, long-time proponents of user signals, such as A.J. Kohn, consistently argued that “implicit user feedback” was a core component, citing Google’s own patents as evidence.

The recent revelations have vindicated these long-held theories. The profound irony lies in Illyes’s choice of words.

The leaked documentation reveals that the core module responsible for processing these very click signals is internally named “Craps.”

Whether this was a deliberate, meta-textual joke or a striking coincidence, it perfectly encapsulates the historical dynamic between Google’s public relations and its internal engineering reality: the very “crap” being publicly derided was, in fact, a named and critical component of the ranking architecture.

III. The Architectural Framework: How Navboost Operates Within Google Search

Navboost is not a monolithic ranking algorithm but a specialised component within a complex, multi-stage pipeline. Its architecture is designed for stability, contextual relevance, and resistance to manipulation.

3.1 Position in the Ranking Pipeline: A Re-Ranking and Culling System

The primary function of Navboost within the overall ranking pipeline is to act as a powerful, user-behaviour-driven filter.

It does not function as an initial retrieval system but as a re-ranking and refinement system that operates on a pre-filtered set of candidate documents. This was explicitly confirmed by Pandu Nayak, who stated, “Remember, you get Navboost only after they’re retrieved in the first place“.

This means that other core Google algorithms first assemble a large pool of potentially relevant documents from Google’s index.

According to Pandu Nayak’s testimony, Navboost is then used to dramatically reduce this set from tens of thousands down to a few hundred. This much smaller, higher-quality set of documents is then passed on to more computationally expensive and nuanced machine learning systems for final ranking.

This architectural choice reveals a sophisticated, multi-layered approach to ranking.

Navboost acts as a powerful “wisdom of the crowds” filter.

It does not discover new content; it validates the relevance and quality of content that has already passed an initial algorithmic check.

A key limitation acknowledged in the testimony is that Navboost can only influence the ranking of documents that have already accumulated click data; it cannot help rank brand-new pages or those in niches with very low search volume.

3.2 The 13-Month Memory Window

Navboost’s models are informed by a vast archive of historical click data.

The system operates on a vast time horizon, storing and analysing 13 months of user interaction data to inform its signals. This extended timeframe allows it to look beyond short-term fluctuations and identify persistent, long-term patterns of user satisfaction, effectively using the collective wisdom of billions of past searches to guide future rankings.

- Stability and Seasonality: A 13-month timeframe is long enough to capture and account for seasonal trends in user behaviour. For example, it can learn that queries related to “holiday shopping” have different user satisfaction patterns in November than in May. This prevents the system from being overly influenced by short-term fluctuations and provides a stable, long-term view of document performance.

- Resistance to Manipulation: The long memory window makes the system more resilient to short-term manipulation attempts. A sudden, artificial spike in clicks on a particular result will have a diluted effect when averaged over 13 months of legitimate user data, making it more difficult and costly for bad actors to significantly influence rankings through such methods.

- Recency Tuning: Prior to 2017, the system retained data for a longer period of 18 months. The reduction to 13 months suggests a deliberate tuning of the system to give slightly more weight to more recent user behaviour patterns while still maintaining long-term stability.

Furthermore, for queries where long-term historical data is irrelevant, such as breaking news or rapidly emerging trends, a much faster version of the system exists.

This component, related to a system called “Instant Glue,” operates on a 24-hour data window with a latency of approximately 10 minutes, allowing Google to adapt rankings to real-time events.

3.3 Data Segmentation and Context: The “Slicing” and “Glue” Sub-Systems

To provide contextually relevant results, Navboost employs several sub-systems that segment and analyse user interaction data.

- Slicing: The system segments, or “slices,” its vast repository of click data by critical contextual factors, most notably the user’s geographic location and device type (e.g., mobile or desktop). This allows Navboost to prioritise results that have performed well for users in a similar situation, for example, boosting a local business’s website for mobile users in a specific city. This nuanced, context-aware approach is fundamental to Navboost’s ability to refine and improve the relevance of Google’s search results.

- Glue: This is a related, more real-time system that works alongside Navboost. The “Glue” system specifically monitors user interactions with non-traditional SERP features like knowledge panels, image carousels, and featured snippets. By analysing signals such as hovers and scrolls on these elements, Glue helps Google determine which features to display and how to rank them, especially for fresh or trending queries where historical click data may be sparse.

IV. The Signal Economy: A Granular Examination of User Clicks

The fuel for the Navboost engine is user interaction data.

However, Navboost’s analysis is highly nuanced, moving beyond a simple click count to classify different types of user interactions.

Leaked documents and testimony point to several key click metrics that create a sophisticated “signal economy,” allowing the system to distinguish between fleeting curiosity and genuine fulfilment of search intent.

4.1 The Spectrum of Clicks: From Dissatisfaction to Ultimate Success

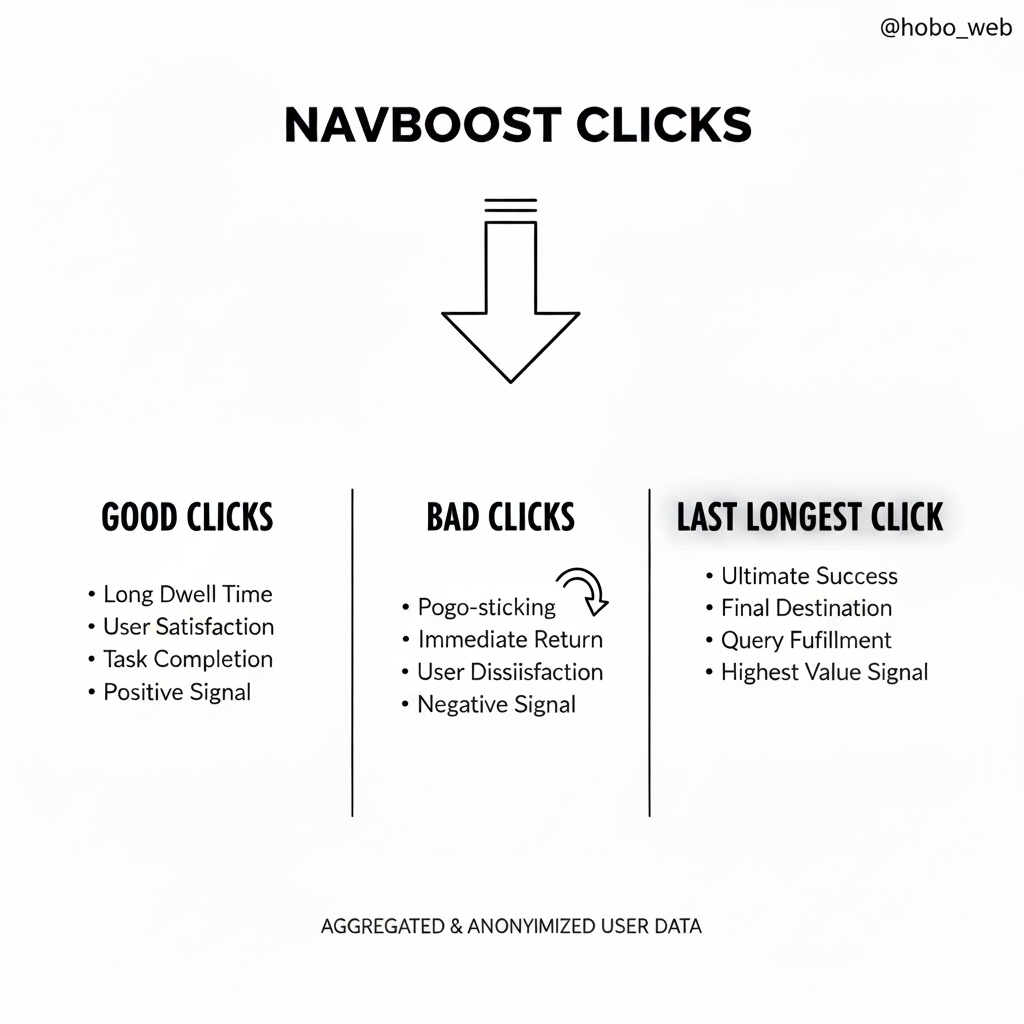

Navboost classifies user clicks along a spectrum that reflects varying degrees of success in satisfying the user’s query. This qualitative distinction is central to its function.

- Good Clicks vs. Bad Clicks: The system distinguishes between positive and negative interactions. A “bad click” is probably a “pogo-stick” event, where a user clicks a result and then immediately returns to the SERP, signalling dissatisfaction. A “good click,” conversely, indicates that the user’s need was met, typically registered when a user clicks on a result and remains on the destination page for a significant period.

- Last Longest Click: This metric appears to be of particular importance. It identifies the final result a user clicks on in a search session and dwells on for a significant period. This interaction is interpreted as the ultimate signal of a successfully completed search task, making the page that received the “last longest click” a highly valuable result for that query context.

4.2 Signal Normalisation: The Concept of ‘Squashed’ vs. ‘Unsquashed’ Clicks

The API documentation introduces a more technical and nuanced layer of signal processing with the terms “unsquashed clicks” and “unsquashed last longest clicks”.

These terms point to a sophisticated mechanism for signal normalisation designed to maintain the integrity of the ranking system.

The concept of “squashing” in this context refers to a data processing technique that prevents a single, overwhelmingly large signal from dominating an entire dataset.

A Google patent related to scoring local search results explicitly explains that “squashing” prevents one large signal from dominating, ensuring no single click signal can manipulate rankings.

In statistical analysis and large-scale data processing, squashing functions (such as logarithmic functions or applying a ceiling value) are used to compress the range of data points, reducing the impact of extreme outliers.

Applying this concept to Navboost, the distinction can be interpreted as follows:

- Unsquashed Click: This likely represents the raw, unprocessed signal of a user interaction. It is the direct measure of a click or a long click before any normalisation has been applied. In its raw form, this signal could have a very high initial weight.

- Squashed Click: This is the same signal after it has been passed through a normalisation or “squashing” function. This process would cap or reduce the influence of any single click or burst of clicks, ensuring that the long-term, 13-month historical data remains stable and representative of broad user consensus.

This distinction reveals a critical defence mechanism within Navboost.

It is designed to protect the system from statistical anomalies, such as a piece of content going viral for reasons unrelated to its relevance for a specific query, or from adversarial attacks like click fraud.

By squashing signals, Google ensures that the “wisdom of the crowds” is not hijacked by a “tyranny of the outlier,” thereby preserving the reliability of its user-behaviour-based rankings.

4.3 The ‘Craps’ System and Signal Integration

The leaked documentation provides a crucial piece of the architectural puzzle by stating that Navboost provides “click and impression signals for Craps,” which is described as a ranking system.

The “Craps” module, specifically the QualityNavboostCrapsCrapsData protocol buffer, is the data structure that captures, aggregates, and transports these user interaction signals.

The Craps system likely functions as the integration point where the various signals – bad clicks, good clicks, last longest clicks, and their squashed/unsquashed variants – are aggregated and weighted to compute a final score or ranking adjustment for a given document.

The name, likely a piece of internal Google humour, may allude to the probabilistic nature of the task: predicting user satisfaction and ranking outcomes based on a complex set of weighted, interacting signals, much like the probabilities in the dice game of the same name.

4.4 Beyond the URL: The ‘Topical Neighbourhood’ Effect

A critical attribute within the Craps data structure, patternLevel, confirms that a URL’s performance is not evaluated in isolation.

The system aggregates click signals at multiple levels: the specific URL, the host and path (directory), and the parent domain.

This means that user satisfaction signals from many individual pages are rolled up to create an aggregate performance score for entire sections of a website.

This “topical neighbourhood” effect directly implies that a site’s architecture is a critical lever for managing and concentrating ranking signals, creating a reputation for sections of a site that can influence how new content in those areas is initially perceived.

V. The Extended Ecosystem: Navboost, Glue, and the Whole-Page SERP

The Navboost system, while powerful, is not the sole arbiter of user interaction signals at Google. It operates as part of a larger, more comprehensive ecosystem designed to optimise the entire search engine results page.

The key counterpart to Navboost is a system known as “Glue,” which extends the same principles of user-behaviour analysis beyond traditional web results to encompass every feature on the SERP.

5.1 Defining the Division of Labour

The distinction between Navboost and Glue is one of scope and focus, as clarified by Pandu Nayak’s testimony.

- Navboost’s Focus: The primary responsibility of Navboost is to analyse user interactions with and refine the rankings of the traditional, organic web results – the “ten blue links”. It is the system dedicated to determining the most satisfying web documents for a given query.

- Glue’s Focus: Glue, by contrast, is the broader system responsible for managing and ranking all other features that appear on the SERP. This includes a wide array of rich results and interactive elements, such as Knowledge Panels, featured snippets, image packs, video carousels, and “People Also Ask” boxes. Nayak described Glue as “another name for Navboost that includes all of the other features on the page”.

This division of labour reveals a modular yet holistic approach to SERP optimisation. Google does not just rank a list of documents; it constructs and ranks a complete user experience.

Navboost is the specialist module for perfecting the list of web pages, while Glue is the generalist module that orchestrates the entire ensemble of rich features around them.

5.2 Glue: The “Super Query Log” for the Entire SERP

The Glue system functions as a comprehensive data aggregation platform, described in court documents as a “super query log” or a “giant table” that captures a wide range of information about queries and user interactions.

Its capabilities extend beyond the simple click tracking associated with Navboost.

- Unified Metric for Diverse Interactions: Glue is designed to aggregate a diverse set of user interactions, including not only clicks but also hovers, scrolls, and swipes. It creates a common, unified metric from these varied signals, allowing it to compare the performance of vastly different SERP elements. For example, it can compare the engagement generated by a user clicking a blue link against the engagement from a user scrolling through an image carousel or hovering over a Knowledge Panel.

- Triggering and Ranking SERP Features: This unified engagement metric is mission-critical for determining both whether a specific SERP feature should be triggered for a query and, if so, where on the page it should be ranked. If users consistently and heavily engage with video results for a particular type of query, the Glue system will learn to trigger and rank the video carousel more prominently for similar queries in the future.

- Powering Whole-Page Ranking: The data from Glue is a critical input for a more advanced system known as “Whole-Page Ranking” (internally codenamed Tetris and later Tangram). This system’s objective is to optimise the layout of the entire SERP, treating it as a single, cohesive unit. It arranges web results and rich features in a configuration predicted to generate the highest overall user engagement and satisfaction.

- Real-Time Adaptability with “Instant Glue”: Similar to Navboost’s faster counterpart, a real-time version called “Instant Glue” operates on a 24-hour window with a latency of approximately 10 minutes. This enables the system to rapidly adjust the SERP layout in response to breaking news or fast-moving trends, for example, by promoting a “Top Stories” feature when user interactions signal a surge in news-related intent.

The existence and function of the Glue system have profound implications for understanding search visibility. It demonstrates that a website’s organic performance is not determined in a vacuum.

Web pages are not merely competing against other web pages for a top position. Instead, they are competing for user attention against every other element on the SERP – videos, images, answer boxes, and more.

If a rich feature, as measured by Glue, proves to be more engaging and satisfying for a particular query, it may be ranked higher than traditional web results, pushing them further down the page, regardless of their individual Navboost scores. This transforms the landscape of SEO from a “ten-blue-link” competition into a holistic, multi-format, whole-page engagement battle.

5.3 Complementary Signals: Firefly and On-Site Prominence

While the Craps module provides the granular, per-query measure of user satisfaction, other systems provide broader context.

- Firefly: The QualityCopiaFireflySiteSignal model operates at a much higher level, containing aggregated, site-level signals that offer a holistic view of a website’s overall quality, authority, and content production cadence. This site-wide reputation score is directly attached to every individual page, meaning a site with strong Firefly signals may receive a higher baseline level of trust, giving its new pages a better starting position before they have had the chance to accumulate their own significant Craps data.

- On-Site Prominence: The connection between user engagement and authority extends beyond the SERP. Another data model contains an attribute called onsiteProminence, which measures a document’s importance within its own site. This score is calculated by simulating traffic flow from the homepage and from “high craps click pages.” This creates a powerful feedback loop: pages that perform well with users (generating high goodClicks and lastLongestClicks) are not only rewarded in search results but are also identified as internal authority hubs, further solidifying their importance.

VI. Privacy and Anonymity in Aggregated Click Data

The operation of a system like Navboost, which analyses billions of user interactions daily, inherently raises significant questions about user privacy.

The potential for such a massive dataset to reveal sensitive information about individuals is substantial, as historical incidents like the 2006 AOL search log release have demonstrated.

However, the available evidence suggests that privacy preservation is not an afterthought but a core architectural principle of the system, designed to decouple the analytical value of a click from the identity of the user who made it.

6.1 Google’s Stated Approach: Aggregation and Anonymisation

Across its public-facing documentation, Google consistently states that the user interaction data it employs for ranking purposes is “aggregated and anonymised”. This serves as the foundational layer of its privacy strategy.

Aggregation ensures that the system analyses broad patterns and trends across large groups of users, rather than the behaviour of specific individuals.

Anonymisation involves stripping the data of personally identifiable information (PII), preventing a direct link between a search history and a known person.

6.2 The “Voters and Votes” Analogy

The internal terminology revealed in the leaked API documents provides a deeper understanding of the system’s privacy-centric design. One document describes a Navboost module as being focused on “click signals representing users as voters. Their clicks are then stored as their votes”.

This “voter” and “vote” analogy is highly powerful.

The cornerstone of modern democratic voting systems is the principle of the secret ballot, which ensures the anonymity of the individual voter while guaranteeing that the aggregate tally of votes is accurate and verifiable.

The use of this specific metaphor strongly implies that the Navboost architecture is designed with a similar goal: to preserve the anonymity of the “voter” (the user) while accurately counting their “vote” (the click signal).

This suggests a system designed to make individual clickstreams non-attributable from the outset, a far more robust privacy protection than simply retroactively scrubbing PII from logs. The concept of “voter tokens” used in anonymous digital voting systems provides a technical parallel for how such a system could be implemented, where a one-time, unlinkable token authenticates an action without revealing the actor’s identity.

6.3 Technical Frameworks for Privacy Preservation

While the specific implementation details are proprietary, the principles described align with established technical frameworks for privacy-preserving data mining (PPDM). These methods allow for large-scale statistical analysis while offering mathematical guarantees about individual privacy.

- Differential Privacy: This is a rigorous mathematical standard for data analysis used by numerous major technology companies, including Google and Apple. The core principle of differential privacy is to add a carefully calibrated amount of statistical “noise” to a dataset before analysis. This noise is small enough that it does not significantly alter the aggregate statistical results (e.g., the overall click rate for a URL) but large enough that the presence or absence of any single individual’s data in the dataset has a negligible effect on the final output. This provides a formal guarantee that an observer of the output cannot determine whether any specific individual’s data was included in the computation. This framework is a highly plausible mechanism for how Google can process Navboost’s aggregated click data in a privacy-preserving manner.

- k-Anonymity and Obfuscation: Other established PPDM techniques provide additional context for how large-scale user data can be sanitised. k-anonymity is a property of a dataset that ensures any individual is indistinguishable from at least k-1 other individuals within the data, typically achieved through generalisation (e.g., replacing an exact age with an age range). Obfuscation techniques involve altering data to hide sensitive information while preserving its utility for analysis. These principles, combined with anonymous routing channels like Tor, form a broad toolkit of privacy-enhancing technologies (PETs) that can be used to build systems like Navboost.

The combination of Google’s public statements, the “voter” analogy from internal documents, and the existence of mature privacy-preserving frameworks like differential privacy strongly suggests that Navboost is not simply analysing raw, user-identified click logs. Instead, the system probably ingests click data that has already passed through a sophisticated privacy-preserving layer.

The architecture is designed to be interested in what was clicked for a given query and in what context (the location/device “slice”), but to be deliberately and architecturally ignorant of who performed the click.

This design choice is crucial, allowing Google to leverage its massive scale advantage in user data without creating an equally massive and untenable privacy liability.

VII. Comparative Analysis: The Evolution from Inferred to Observed Relevance

The emergence of Navboost as a primary ranking signal represents a pivotal stage in the evolution of search engine technology. It marks a transition from ranking models based on inferred relevance to a model that incorporates directly observed relevance.

To appreciate the significance of this shift, it is necessary to compare Navboost with the foundational algorithms that preceded it.

These systems are not obsolete but rather form the underlying layers upon which Navboost’s user-centric validation is built, working in concert to deliver high-quality, authoritative results.

7.1 Pre-Navboost Era: Content and Links as Proxies for Relevance and Trust

Early information retrieval systems relied on signals derived from the content of documents and the structure of the web itself.

- TF-IDF (Term Frequency-Inverse Document Frequency): This is a classical statistical model that establishes foundational relevance by evaluating how important a query’s words are to the text of a document. It operates on two principles:

- Term Frequency (TF): The more frequently a query term appears in a document, the more likely that document is to be relevant to that term.

- Inverse Document Frequency (IDF): Terms that are common across many documents (e.g., “the,” “is”) are less informative and are down-weighted, while rarer, more specific terms are boosted in importance.

In essence, this model answers the question: “How statistically important are the query words within the text of this document?” Its primary limitation is its lack of any concept of quality or authority. - PageRank: PageRank was Google’s groundbreaking innovation that addressed the quality limitation by introducing a model for trust and authority. It uses the web’s hyperlink structure as a massive, peer-review system, where a link from one page to another acts as a “vote” of authority. Votes from pages that are themselves important carry more weight. PageRank answers the question: “How authoritative is this document, based on the quantity and quality of other documents that link to it?” While revolutionary, PageRank is an imperfect proxy for relevance, as high authority does not always equate to high user satisfaction for a specific query.

7.2 The Navboost Paradigm: User Satisfaction as the Ultimate Arbiter

Navboost introduces a third, and arguably most decisive, layer to the ranking process. It moves beyond analysing the static properties of the web to analyse the dynamic interactions of users with the search results.

- A New Foundational Question: Navboost is designed to answer a fundamentally different question: “How satisfying do real users find this document when they are searching for this specific query?”. It uses aggregated click behaviour – long clicks, short clicks,and last longest clicks – as a direct measure of user satisfaction. It is likely based on a COEC (Clicks Over Expected Clicks) model, where it compares the actual number of clicks a result gets to the expected number of clicks for that specific ranking position. A result in the fourth position that gets significantly more clicks than an average fourth-position result would receive a ranking boost.

- A Synergistic, Three-Part Model for Quality: In essence, these three Google systems work in concert to deliver high-quality results. This aligns with the philosophy echoed throughout the trial: Google wants trustworthy content to rank at the top. Witnesses underscored that the search engine deliberately prioritises authoritative sources in rankings. Nayak explained that Google’s “page quality signals” are “tremendously important” because the goal is to “surface authoritative, reliable search results” for users. In the same vein, Dr. Lehman testified that “our goal is to show – when someone issues a query – to give them information that’s relevant and from authoritative, reputable sources.”

The complete ranking process can be understood as a three-stage model where T establishes relevance, Q assesses trust, and Navboost refines the results based on user satisfaction:- Relevance (T): Systems based on principles like TF-IDF establish the initial pool of candidate documents by identifying those that are textually relevant to the query.

- Trust (Q): Systems like PageRank assess the trust and authority of these documents, prioritising those from reputable and established sources.

- Satisfaction (Navboost): Navboost acts as the final validation layer. It takes the list of relevant, trustworthy documents and refines the results based on which ones have historically proven to be the most satisfying to users, as demonstrated by their collective click patterns.

This process represents the “closing of the loop” in search ranking.

The first two stages make predictions about what users might find relevant and trustworthy.

Navboost, in contrast, is a “closed-loop” feedback system that directly measures what users actually do find satisfying and feeds that information back into the model to continuously improve future rankings.

It is an adaptive, self-correcting mechanism that uses real human behaviour as the ultimate arbiter of quality.

VIII. Limitations and Systemic Challenges of a Click-Based Model

While Navboost represents a significant advancement in measuring user satisfaction, a ranking system heavily reliant on user interaction signals is not without its own inherent limitations and vulnerabilities.

Google’s awareness of these challenges is evident in the complex ecosystem of supplementary algorithms and checks that surround Navboost, indicating that the final search ranking is a product of a negotiated equilibrium rather than the output of a single, infallible system.

8.1 The Risk of Popularity Bias and Clickbait

A system that rewards clicks, even with the nuance of measuring post-click behaviour, is inherently susceptible to promoting content with high superficial appeal over content with substantive value.

This creates a risk of elevating “clickbait” – pages with sensationalist or misleading titles and descriptions designed to attract a click but which fail to deliver on their promise.

Google’s primary defence against this within the Navboost framework is the qualitative distinction between different types of clicks.

The system’s emphasis on “good clicks” (long dwell time) and the “last longest click” serves as a direct countermeasure to clickbait, which typically generates a high volume of “bad clicks” (pogo-sticking) as users quickly become dissatisfied and return to the SERP.

However, the tension between what is enticing to click and what is truly satisfying remains a persistent challenge.

8.2 Ranking Inertia and Lack of Diversity

One of the most significant systemic drawbacks of a behaviour-based ranking model is its tendency to create a self-reinforcing feedback loop, leading to ranking inertia. The process unfolds as follows:

- Documents ranked in the top positions receive the vast majority of user visibility and, consequently, the vast majority of clicks.

- By receiving more clicks, these top-ranking documents accumulate more positive Navboost data (assuming they are reasonably satisfying to users).

- This accumulation of positive data reinforces their high ranking, making it even more difficult for documents lower down the page to compete.

This phenomenon, sometimes referred to as “grandfathering,” can stifle diversity in the search results.

A new, potentially superior piece of content may struggle to ever gain the visibility necessary to accumulate the click data required to challenge the established incumbents, even if it would be more satisfying to users.

The system can, over time, favour pages that are “good enough” and have a historical advantage over newer pages that might be “better.”

8.3 The Need for Corrective Systems (“Twiddlers”)

The inherent flaws and potential failure modes of Navboost necessitate the existence of other, corrective algorithms.

Documents from the antitrust trial revealed that Google employs a class of mini-algorithms, referred to internally as “twiddlers,” which act as a “police force” to fine-tune the SERPs and correct for undesirable outcomes.

These twiddlers can address situations where Navboost’s user-centric model might produce problematic results, such as promoting low-quality or inappropriate content (e.g., pornography for an ambiguous query) or demoting official, authoritative pages that may not be as “engaging” as other content.

The existence of these corrective systems demonstrates that Navboost is not given absolute authority. It operates within a broader framework of checks and balances designed to ensure overall search quality, safety, and relevance.

8.4 The Cold Start Problem

Navboost is fundamentally a data-driven system; it requires a sufficient volume of historical user interaction data to function effectively.

This presents a “cold start” problem for new web pages, new websites, or queries that have never been searched before. In these scenarios, there is no Navboost data to inform the rankings.

Consequently, for such cases, Google must rely entirely on its traditional, “open-loop” ranking signals derived from content analysis (like TF-IDF) and link-based authority (like PageRank).

A page must first achieve some initial visibility through these traditional means to begin accumulating the click data necessary for Navboost to evaluate its performance.

8.5 Vulnerability to Adversarial Manipulation

Any system based on a measurable metric becomes a target for manipulation.

Despite architectural defences like the 13-month data window and signal “squashing,” Navboost remains vulnerable to adversarial attacks. The system does employ pre-emptive quality controls, such as the (redacted) attribute, which assigns a prior probability of a click being bad based on (REDACTED), down-weighting clicks from low-quality sources. However, sophisticated actors can still employ techniques such as (REDACTED).

Detecting and filtering this invalid traffic is a continuous and complex challenge, representing an ongoing arms race between the search engine and those seeking to exploit its algorithms.

The limitations of Navboost ultimately reveal a deeper truth about Google’s ranking philosophy.

There is no single, perfect ranking paradigm. The true strength of Google’s search engine lies not in any one algorithm, but in its creation of a complex, internally adversarial ecosystem of algorithms.

Navboost’s user feedback acts as a check on PageRank’s link authority, while rule-based and machine-learning “twiddlers” act as a check on Navboost’s potential biases.

The final ranking that a user sees is not the output of a single system but the negotiated, dynamic equilibrium of these multiple, often competing, algorithmic philosophies. This architectural complexity is Google’s primary defence against manipulation and its core strategy for achieving robust and reliable search quality.

IX. Strategic Imperatives: Navigating a Navboost-Influenced SEO Landscape

The public confirmation and detailed understanding of Navboost fundamentally recalibrate the strategic priorities for search engine optimisation.

It validates a holistic, user-centric approach, transforming abstract concepts like “user experience” and “search intent” into tangible inputs for a primary ranking system. Navigating this landscape requires a strategic shift from purely technical optimisation to a deep focus on creating satisfying and engaging user journeys.

9.1 User Experience (UX) is a Direct Ranking Factor

The mechanics of Navboost elevate user experience – page experience – from a correlated best practice to a direct and measurable ranking factor.

Elements that contribute to a positive on-page experience are the very same elements that generate the “good click” and “last longest click” signals that Navboost is designed to reward. Therefore, a comprehensive SEO strategy must prioritise core UX principles:

- Page Speed: Slow-loading pages are a primary cause of user frustration and lead directly to “bad clicks” or pogo-sticking. Optimising for speed is crucial to retaining users long enough to engage with the content.

- Mobile-First Optimisation: With Navboost maintaining a separate data “slice” for mobile, a seamless and responsive mobile experience is non-negotiable. A clunky or difficult-to-use mobile site will be penalised by the mobile-specific user behaviour data it generates.

- Intuitive Navigation and Site Architecture: A clear, logical site structure allows users to easily find the information they need and explore related content, increasing session duration and pages per session – both positive engagement signals.

9.2 Content Strategy Must Prioritise Satisfying Intent

In a Navboost-driven world, the ultimate goal of content creation is to be the “last longest click” for a user’s query. This requires a profound shift in focus from simply matching keywords to comprehensively satisfying the underlying search intent.

Content must be crafted to be the definitive answer, encouraging long dwell times and eliminating any need for the user to return to the SERP. This means creating content that is not only relevant but also high-quality, comprehensive, well-structured, and engaging.

The strategic objective is to end the user’s search journey on your page, thereby generating the most powerful positive signal Navboost measures.

9.3 Optimising for the Click (CTR Optimisation)

A page cannot generate positive post-click signals if it is never clicked in the first place. Therefore, winning the initial click on the SERP is a critical prerequisite for success in the Navboost system.

This places a renewed and now empirically validated importance on SERP presentation and CTR optimisation. Key tactics include:

- Crafting Compelling Title Tags and Meta Descriptions: These elements are a website’s “advertisement” on the SERP. They must be written not just for keyword relevance but also to be compelling and enticing to a human user, clearly communicating the value of the page and encouraging a click over competitors’ listings.

- Utilising Schema Markup: Implementing structured data to generate rich snippets (e.g., reviews, FAQs, product information) makes a search result more visually prominent and informative, which can significantly increase its CTR.

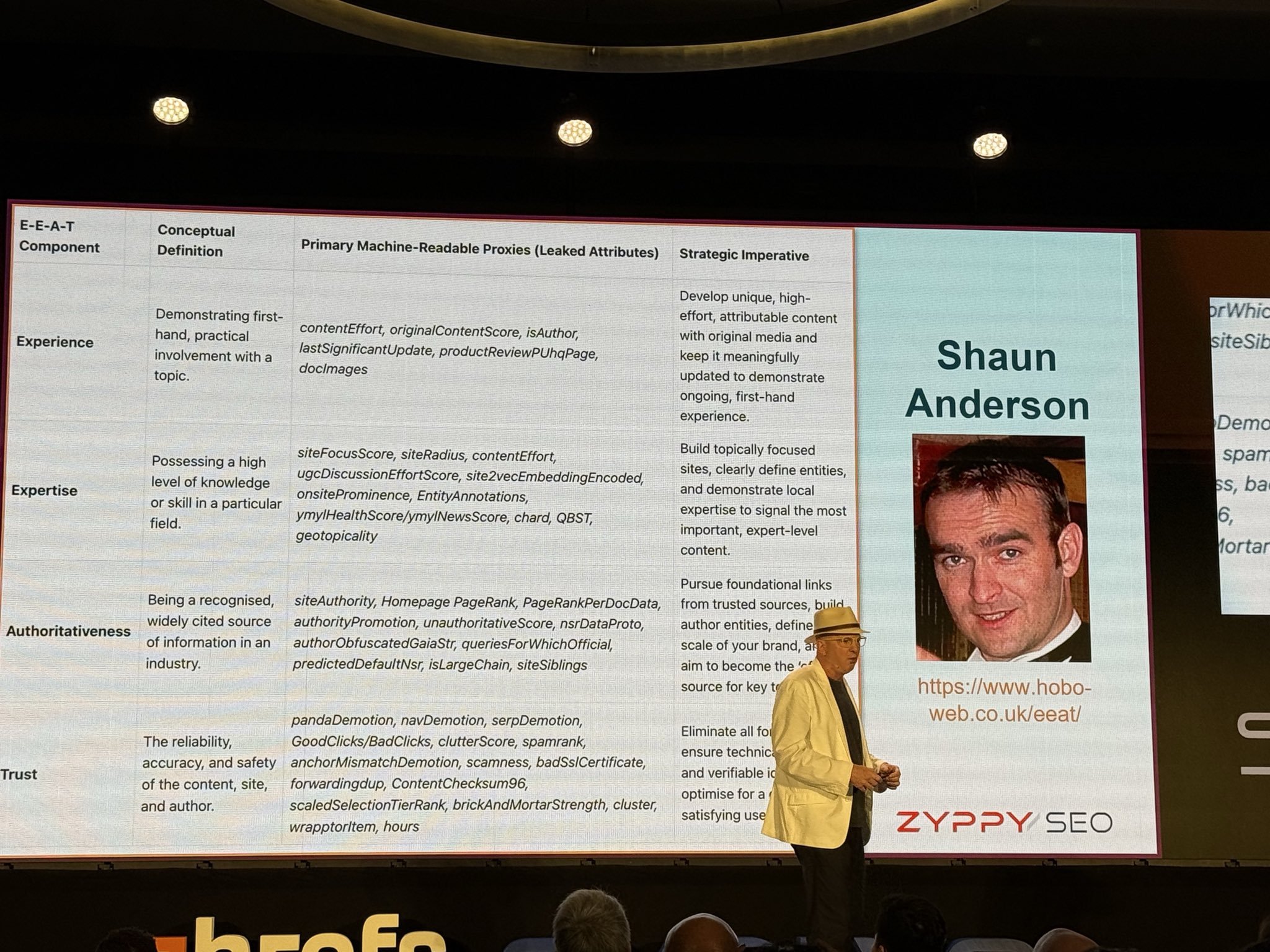

9.4 The Amplifying Role of Brand and E-E-A-T

Navboost acts as an amplifier for the value of brand recognition and established authority. Every search is an “aided awareness test,” where users are more likely to click on brands they recognise and trust.

This creates a powerful feedback loop: familiar brands get more clicks, which the Navboost system interprets as a positive signal, boosting their rank and leading to even more visibility. SEOs should therefore focus on a “multi-search user-journey strategy,” creating content for top-of-funnel queries to build brand exposure, making users more likely to click on that brand later for a commercial query.

- Navboost as a Validation of E-E-A-T: Content that demonstrates high levels of Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) is inherently more likely to satisfy users. A trustworthy, expert-led article will encourage longer dwell times and build user confidence, directly contributing to positive Navboost signals. In this sense, Navboost functions as a large-scale, user-driven validation system for a site’s E-E-A-T claims.

9.5 Architecting for Signal Concentration

The patternLevel attribute reveals that site architecture is a powerful tool for signal management.

By aggregating click data at the directory and subdomain levels, the Craps system creates “topical neighbourhoods” with distinct performance reputations.

Strategists should architect their sites to group high-quality, topically aligned content into logical subdirectories to concentrate positive user engagement signals and build authority for entire sections of a website.

9.6 Navboost in Specialised Search (Video & Image)

While Navboost itself is primarily focused on traditional web results, its underlying principles of user engagement analysis are applied across all of Google’s search verticals, managed by its partner system, Glue.

- Video Search: For video platforms like YouTube, the ranking algorithms rely on a parallel set of user engagement signals. Metrics such as watch time, average view duration, audience retention, and the CTR on video thumbnails serve the same function as Navboost’s click signals. A video that users click on and watch for a longer duration is deemed more satisfying and is promoted by the algorithm. Social signals like likes, comments, and shares further reinforce this.

- Image Search: For image search, user engagement is also a key factor. While the specific signals are less detailed in the available documentation, it is logical to assume that metrics like the CTR from the image results page to the host website are significant. Furthermore, the user’s subsequent behaviour on that landing page – whether they quickly bounce back or stay and engage – is likely a powerful signal of the image’s relevance and the quality of its context. Therefore, optimising for image search involves not only technical factors like descriptive alt text and filenames but also ensuring the image is placed on a high-quality, fast-loading page that provides a good user experience.

Ultimately, the confirmation of Navboost’s central role solidifies a new mandate for SEO.

The discipline of SEO is no longer solely about technical compliance with a machine’s checklist. It has matured into a human-centric practice focused on deeply understanding and satisfying the end-user.

The most sustainable and effective long-term SEO strategy is to create the best possible digital experience and the most valuable, satisfying content, because it is the users themselves – through their billions of collective clicks – who are now a confirmed, primary force in shaping the landscape of search results.

X. Conclusion

The body of evidence drawn from judicial testimonies and leaked internal documentation provides a clear and detailed portrait of Navboost, confirming its status as a cornerstone of Google’s modern ranking apparatus.

This analysis has deconstructed the system, revealing it to be a sophisticated, mature, and deeply integrated behavioural analysis engine that has been refining search results for nearly two decades.

Its function marks a definitive evolutionary step in information retrieval, shifting the locus of authority from algorithmic proxies like links and keywords to the directly observed, aggregated behaviour of users.

The revelations confirm a synergistic, three-part model for delivering high-quality search results.

- First, foundational systems establish relevance, identifying documents that textually match a user’s query.

- Second, other algorithms assess trust, prioritising content from authoritative and reputable sources, a philosophy that Google’s witnesses repeatedly underscored.

- Finally, Navboost provides the crucial third layer of user satisfaction, acting as a powerful re-ranking filter. It takes the pool of relevant and trustworthy results and refines them based on which ones have historically proven most satisfying to users, as measured by a nuanced economy of click signals.

Navboost operates not in isolation but as part of a broader ecosystem alongside its counterpart, Glue, which applies the same user-centric principles to every feature on the SERP.

This dual system powers a “Whole-Page Ranking” philosophy, where the entire search results page is optimised as a single, cohesive user experience.

This reality reframes the competitive landscape, where traditional web pages must vie for user attention not just against each other, but against an entire suite of potentially more engaging rich features.

The architectural design, particularly the “voters and votes” analogy, strongly suggests that robust, privacy-preserving mechanisms are a foundational element, allowing Google to leverage vast behavioural data without compromising individual user anonymity.

This system of checks and balances is a recurring theme; Navboost itself is checked by other corrective algorithms (“twiddlers”) that guard against its inherent limitations, such as popularity bias and ranking inertia.

The final SERP is therefore not the product of a single algorithm but a negotiated equilibrium between multiple systems representing different ranking philosophies.

For digital strategists, marketers, and SEO professionals, the implications are profound and unequivocal.

The long-debated question of whether user behaviour directly impacts rankings has been answered.

The path to sustainable organic visibility is no longer a purely technical exercise but a fundamentally human-centric one.

Success in a Navboost-influenced search environment is contingent on an obsessive focus on the end-user: creating fast, intuitive, and accessible digital experiences; producing content that comprehensively satisfies search intent; and earning user trust through brand strength and demonstrated expertise.

In essence, Google has deputised its user base as the ultimate quality raters. The most effective SEO strategy is, and will continue to be, to create the best possible answer and the most satisfying experience for the human on the other side of the screen.

This philosophy was echoed throughout the trial: Google wants trustworthy content (e.g. official sites, established experts, high-quality publishers) to rank at the top, rather than sketchy or unverified pages, even if the latter are more crudely optimised for a keyword.

Key Takeaways

In essence, these three Google systems work in concert to deliver high-quality results: T establishes relevance, Q assesses trust, and Navboost refines the results based on user satisfaction.

Read next

Read my article that Cyrus Shephard so gracefully highlighted at AHREF Evolve 2025 conference: E-E-A-T Decoded: Google’s Experience, Expertise, Authoritativeness, and Trust.

The fastest way to contact me is through X (formerly Twitter). This is the only channel I have notifications turned on for. If I didn’t do that, it would be impossible to operate. I endeavour to view all emails by the end of the day, UK time. LinkedIn is checked every few days. Please note that Facebook messages are checked much less frequently. I also have a Bluesky account.

You can also contact me directly by email.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research (and ChatGPT 5 for image generation). I have over 20 years writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited, and verified as correct by me (and is under constant development). See my AI policy.

XI. References

- https://www.topoftheresults.com/the-google-navboost-leak-that-validated-ctr-manipulation-techniques/

- ((https://www.researchgate.net/publication/221079847_NewPR-Combining_TFIDF_with_Pagerank))

- https://www.kopp-online-marketing.com/how-google-evaluates-e-e-a-t-80-signals-for-e-e-a-t

- https://www.searchenginejournal.com/category/seo/google-patents/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC4643068/

- https://www.searchenginewatch.com/2014/01/30/building-a-crap-seo-content-strategy/

- https://developers.google.com/search/docs/appearance/google-images

- https://www.researchgate.net/publication/220211626_Preserving_user’s_privacy_in_web_search_engines

- https://stackoverflow.com/questions/26999930/git-merge-to-squash-or-not-to-squash

- https://www.evalueserve.com/blog/preserving-privacy-during-data-aggregation-and-annotation/

- https://www.makingdatamistakes.com/your-team-needs-a-default-glue-system-for-knowledge-use-notion/

- https://news.ycombinator.com/item?id=11407289

- https://softwareengineering.stackexchange.com/questions/263164/why-squash-git-commits-for-pull-requests

- https://www.searchenginejournal.com/googles-trust-ranking-patent-shows-how-user-behavior-is-a-signal/550203/

- https://medium.com/@flaviocopes/git-squashing-vs-not-squashing-af5009f47d9e

- https://www.outerboxdesign.com/articles/seo/googles-navboost-algorithm-highlight-from-leaked-google-search-api/

- https://digitalshiftmedia.com/marketing-term/navboost/

- ((https://patents.google.com/patent/US20180011854A1/en))

- ((https://research.tudelft.nl/files/238586943/Privacy-Preserving_Tools_and_Technologies_Government_Adoption_and_Challenges.pdf))

- https://www.indjcst.com/archiver/archives/user_behaviour_analysis_and_its_impact_on_content_ranking_in_q_and_a_platforms.pdf

- https://academic.oup.com/jrsssb/advance-article/doi/10.1093/jrsssb/qkaf048/8230325?searchresult=1

- https://www.searchenginejournal.com/navboost-patent/506975/

- ((https://www.reddit.com/r/SEO/comments/1manmve/what_do_you_all_think_about_these_google_patents/))

- https://searchengineland.com/how-google-search-ranking-works-pandu-nayak-435395

- https://www.kopp-online-marketing.com/patents-papers

- https://www.amazon.science/blog/from-structured-search-to-learning-to-rank-and-retrieve

- https://www.usenix.org/conference/usenixsecurity24/presentation/palazzo

- https://developers.google.com/search/docs/fundamentals/seo-starter-guide

- (((((https://www.researchgate.net/publication/220451745_Likelihood-Based_Data_Squashing_A_Modeling_Approach_to_Instance_Construction)))))

- https://crises-deim.urv.cat/webCrises/publications/isijcr/CastellaViejo09-ComCom.pdf

- https://www.qualaroo.com/blog/3-click-rule/

- https://www.agencyjet.com/blog/how-do-search-engines-categorise-each-piece-of-content

- https://kinsta.com/blog/google-patents-seo-ranking-factors/

- https://support.google.com/webmasters/answer/7042828?hl=en

- https://www.searchextension.in/blog/major-google-algorithm-updates-explained

- https://docs.aws.amazon.com/glue/latest/dg/what-is-glue.html

- https://central.yourtext.guru/navboost-algorithm/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC4236847/

- https://bendyourmarketing.com/blog/navboost-what-it-is-and-how-google-uses-it/

- https://rankmath.com/seo-glossary/navboost/

- https://searchengineland.com/navboost-user-trust-ux-445240

- https://developers.google.com/search/docs/fundamentals/how-search-works

- https://victorious.com/blog/navboost/

- ((((https://www.cs.princeton.edu/courses/archive/spr04/cos598B/bib/DuMouchel.pdf))))

- https://serpact.com/search-engine-ranking-models-ultimate-guide/

- https://www.privacyguides.org/articles/2025/09/30/differential-privacy/

- https://seranking.com/blog/navboost/

- https://marketbrew.ai/a/probabilistic-graphical-model-seo

- (((((https://www.quora.com/What-are-long-and-short-clicks-in-SEO)))))

- https://www.google.com/intl/en_us/search/howsearchworks/how-search-works/ranking-results

- https://www.popsci.com/technology/google-ai-overview-wrong/

- https://medium.com/data-science/learning-to-rank-a-complete-guide-to-ranking-using-machine-learning-4c9688d370d4

- https://www.searchenginejournal.com/google-algorithm-history/

- https://transistordigital.com/blog/what-is-googles-navboost-algorithm/

- https://pageoneformula.com/how-ux-signals-influence-search-engine-rankings/

- (((((https://www.visitphilly.com/wp-content/uploads/2018/11/9-YouTube-Video-Optimization-VP.pdf)))))

- (((((https://www.apple.com/privacy/docs/Differential_Privacy_Overview.pdf)))))

- https://nightwatch.io/blog/user-behaviour-metrics/

- https://devrix.com/tutorial/navboost/

- https://ppc.land/google-ordered-to-share-glue-data-system-in-landmark-antitrust-ruling/

- https://www.zipy.ai/blog/user-behavior-analytics-challenges

- https://www.reddit.com/r/Craps/comments/kk0scy/does_anyone_happen_to_know_of_a_statistical_model/

- https://arxiv.org/pdf/2509.22965

- https://www.teleprompter.com/blog/whats-a-good-click-through-rate-on-youtube

- ((https://en.wikipedia.org/wiki/Differential_privacy))

- https://www.switcherstudio.com/blog/video-click-through-rate

- (((((https://en.wikipedia.org/wiki/Ranking_(information_retrieval)))))

- https://en.wikipedia.org/wiki/Craps

- ((((https://patents.google.com/patent/US20230306782A1/en))))

- https://cloud.google.com/bigquery/docs/differential-privacy

- https://www.hyperlinker.ai/en/seo/navboost-google

- https://spideraf.com/articles/a-guide-to-click-fraud-and-how-to-prevent-it

- https://en.wikipedia.org/wiki/Ostracism

- https://databox.com/improve-youtube-ctr

- https://support.google.com/youtube/answer/7628154?hl=en

- ((((https://www.researchgate.net/figure/oting-Token-Card-Usage_fig9_249998668))))

- https://stackoverflow.com/questions/14940569/combining-tf-idf-cosine-similarity-with-pagerank

- https://blog.hootsuite.com/youtube-algorithm/

- https://www.everywheremarketer.com/blog/youtube-ranking-optimization

- https://www.blindfiveyearold.com/short-clicks-versus-long-clicks

- https://www.ynotyoumedia.com/blog/challenges-and-opportunities-in-cross-platform-user-behavior-tracking-and-analysis

- https://www.collinsdictionary.com/us/dictionary/english/craps

- https://milvus.io/ai-quick-reference/how-do-you-handle-large-datasets-in-data-analytics

- https://help.klaviyo.com/hc/en-us/articles/115005085427

- https://www.wikihow.com/Play-Craps

- https://searchengineland.com/guide/what-is-seo

- https://www.klipfolio.com/resources/kpi-examples/email-marketing/unique-clicks

- https://replug.io/blog/unique-clicks

- https://keywordseverywhere.com/blog/voice-search-stats/

- https://help.linktr.ee/en/articles/5434178-understanding-your-insights

- https://dashthis.com/kpi-examples/unique-clicks/

- https://www.singlegrain.com/seo/click-probability/#:~:text=Google%20ranks%20content%20based%20on%20click%20probability%2C%20using%20machine%20learning,searches%20and%20high%20interaction%20rates.

- https://www.sendx.io/blog/unique-clicks-vs-total-clicks