Get a Technical SEO audit for your website

For more than 20 years, I have been immersed in the practice of Google SEO (Search Engine Optimisation), a field often shrouded in jargon and perceived as a dark art. I’ve worked freelance, worked in agencies, directed agencies, worked on thousands of websites, written many SEO tutorials, written a beginner’s guide to SEO, run an SEO blog for 20 years and built my own SEO audit and reporting tools.

From my perspective as a Technical SEO specialist, I can tell you that technical SEO is not magic.

It is the fundamental, and often unseen, engineering that underpins digital success in 2025.

It is the process of ensuring a clear, unimpeded dialogue between your website and the search engine crawlers, primarily Googlebot.

Think of it as ensuring your digital storefront has clear signage, doors, and a logical layout so that your most important customer – the search engine – can enter, understand what you sell, and confidently recommend it to others.

My work, spanning thousands of websites from small businesses to large enterprises with millions of pages, has taught me one immutable truth: without a solid technical foundation, even the most brilliant content, the most creative marketing campaigns, and the largest advertising budgets will fail to achieve their potential.

You can have the best product in the world, but if the door to your shop is locked or sticks, no one will ever buy it.

Technical SEO is the practice of finding and unlocking all those doors.

Symptoms of a Deeper Malaise

Businesses rarely come to me when things are going well. They come when they are in pain.

These symptoms often point to an underlying technical sickness, and they manifest in ways that directly impact the bottom line.

Over my career, I’ve seen these scenarios play out time and time again.

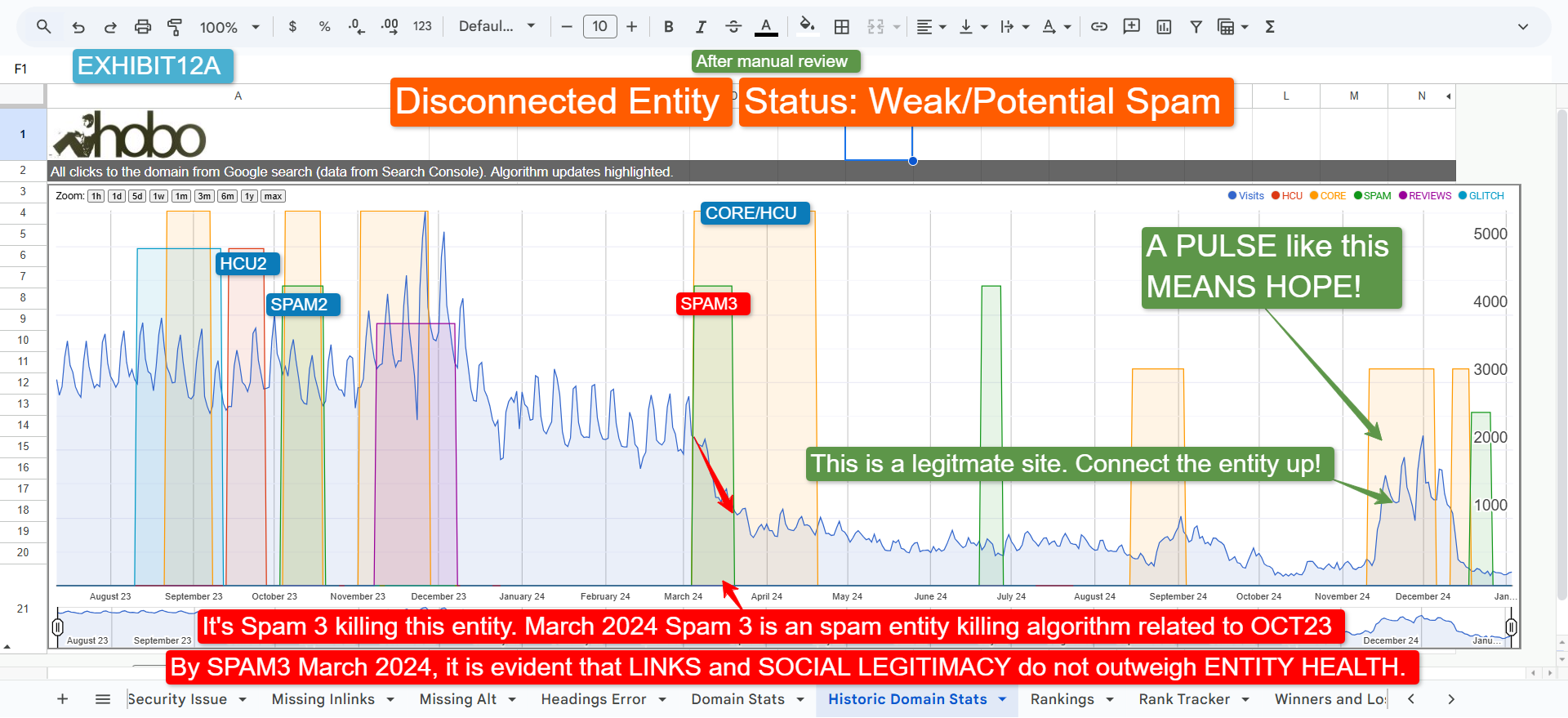

- Sudden & Catastrophic Traffic Drops: A business owner or marketing director wakes up one morning to find their organic traffic, the lifeblood of their lead generation or sales, has plummeted by 30%, 50%, or even more. This is rarely an accident. It is almost always the consequence of a Google Core or Spam Update algorithmically demoting the site for quality issues that have been allowed to fester or spread for months or even years. The penalty is the symptom; the disease is poor site quality.

- The “Invisible” Website: A company invests heavily in launching a new product line or a new section of their website. Weeks go by, and it generates no organic traffic. A quick search on Google reveals the pages are not even in the index. This points to critical, foundational errors in crawling and indexing instructions – perhaps a misconfigured robots.txt file, an incorrectly applied noindex tag, or a web of confusing canonicalization signals that leave Googlebot utterly lost.

- Crawl Budget Wastage on Low-Quality Pages: This is a silent killer of SEO performance, especially for large e-commerce sites or enterprise-level publishers. Google allocates a finite amount of resources – a “crawl budget” – to exploring any given website. When a site’s architecture is flawed, Googlebot can spend the majority of its time crawling thousands of low-value, parameterised URLs generated by faceted navigation, or getting lost in endless loops of duplicate tag and archive pages. Googlebot sometimes never gets to the important, money-making pages, which languish uncrawled and unranked.

- Poor Page Experience & High Bounce Rates: A potential customer clicks on a search result, only to be met with a page that takes an eternity to load. When it finally appears, the layout jarringly shifts as ads and images pop in, causing them to misclick. Frustrated, they hit the back button. These are no longer just user experience (UX) problems; they are direct, measurable ranking factors via Google’s Core Web Vitals. A slow site can be a low-quality site in Google’s eyes, and a widely cited statistic suggests that 40% of users will abandon a page that takes longer than three seconds to load.

- The Agony of SEO Agency Roulette: I see this constantly in forums and hear it from new clients. A business has spent tens of thousands of pounds on an SEO agency over a year with absolutely no return on investment. This often happens because the agency focuses on superficial vanity metrics or link-building schemes while ignoring the crumbling technical foundation of the site. This not only wastes money but also breeds a deep and understandable distrust in the entire SEO industry.
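To see how quickly faceted navigation multiplies URLs, consider a single category page whose filters each generate a crawlable URL. The facet names and value counts below are hypothetical, and the sketch assumes each filter is either unset or set to exactly one value; sites that allow multi-select filters, sort options or re-ordered parameters produce far more.

```python
from math import prod

# Hypothetical facets for one category page: facet name -> number of values.
# These numbers are illustrative assumptions, not data from a real site.
FACETS = {"colour": 10, "size": 8, "brand": 20, "price_band": 5}


def filtered_url_count(facets: dict[str, int]) -> int:
    """Count distinct filtered URLs for one category page.

    Assumes each facet is either unset or set to exactly one value, so each
    facet contributes (values + 1) options. Subtracting 1 removes the
    unfiltered page itself. Parameter re-ordering, multi-select and sort
    options would multiply this figure further.
    """
    return prod(n + 1 for n in facets.values()) - 1


if __name__ == "__main__":
    print(filtered_url_count(FACETS))  # 12473
```

At 12,473 filtered URLs per category, 100 categories would expose more than 1.2 million URLs to Googlebot, almost all of them near-duplicates of the category pages themselves.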

These are not just technical SEO errors; they are business problems with severe financial consequences.

The challenge is that the cause and effect are often separated by time.

A developer might introduce a critical error during a site migration, but its catastrophic effect on traffic might not be felt until the next Google Core Update months later.

This delayed-reaction nature is precisely why a one-off audit is insufficient.

What is required is a system of continuous monitoring to detect these issues as they are introduced, preventing the catastrophe before it happens.

| Technical SEO Pain Point | Technical Explanation | Direct Business Impact |
| --- | --- | --- |
| Index Bloat / Crawl Budget Waste | Search engines are crawling and indexing a large number of low-value, thin, or duplicate URLs (e.g., from faceted navigation, tags, archives). | Wasted crawl budget, dilution of ranking signals across too many pages, potential for algorithmic demotion due to low site-wide quality, leading to reduced visibility for revenue-generating pages. |
| Improper Canonicalization | The site sends conflicting signals about which version of a duplicate or similar page is the “master” version, using rel="canonical" tags incorrectly or not at all. | Diluted link equity, keyword cannibalisation (where multiple pages compete for the same term), wasted crawl budget, and Google potentially indexing the wrong URL, leading to lower rankings for key pages. |
| Poor Core Web Vitals (LCP, INP, CLS) | The site provides a poor user experience due to slow loading of the main content (LCP), high interactivity latency (INP), and unexpected layout shifts (CLS). | Negative ranking signal, higher bounce rates, lower user engagement, decreased conversion rates, and lost customers due to frustration. |
| Critical Crawl Errors (4xx/5xx) | Googlebot frequently encounters “Page Not Found” (404) errors on internal links or “Server Error” (5xx) responses when trying to access the site. | Wasted crawl budget, poor user experience from broken links, and server errors preventing Google from accessing and indexing content, leading to pages being dropped from search results. |
| Misconfigured robots.txt File | The robots.txt file incorrectly blocks Googlebot from crawling important pages, or worse, critical resources like CSS and JavaScript files. | Prevents pages from being indexed and ranked. If CSS/JS are blocked, Google cannot render the page correctly, leading to it being misinterpreted as low-quality or mobile-unfriendly. |
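One way to spot index bloat and canonicalisation problems in crawl data is to normalise each URL and group the variants that collapse to the same address. This sketch assumes that the listed tracking and sort parameters (a hypothetical set; every site’s list differs) never change page content, so any URL differing only by them is a duplicate that should declare the clean URL as its canonical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of parameters assumed NOT to change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "source", "sort", "sessionid"}


def normalise(url: str) -> str:
    """Lower-case the host, drop ignored parameters and the fragment,
    and sort the remaining parameters so their order does not matter."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Return only the normalised URLs that more than one crawled URL maps to."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalise(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


crawled = [
    "https://example.com/product",
    "https://example.com/product?source=email",
    "https://Example.com/product?utm_source=news&utm_medium=email",
    "https://example.com/product?colour=red",
]
for canonical, variants in duplicate_groups(crawled).items():
    print(canonical, "<-", len(variants), "variants")
```

Here the three tracking variants collapse onto https://example.com/product, while the colour filter survives as a separate URL. In a real audit, you would then check that each variant’s rel="canonical" tag points at the clean URL.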

A Framework for Sustainable SEO Success

My Philosophy: “White Hat” as Pragmatism, Not Dogma

My professional philosophy, honed over more than two decades in this industry, is simple and unwavering: a strict, uncompromising adherence to Google’s publicly available Webmaster Guidelines (now published as Google Search Essentials).

This is not a moral position or an ideological one.

It is a deeply pragmatic business strategy. I manage my clients’ websites in exactly the same way I manage my own properties: by following the rules Google itself provides.

Let’s be clear about the fundamental mechanics of search.

Google’s primary business objective is to provide the most relevant, helpful, and high-quality answer to a user’s query.

My primary objective as an SEO professional is to help my clients’ websites be the best answer.

Our goals are, therefore, perfectly and permanently aligned.

Any strategy that deviates from this alignment is, by definition, a short-term tactic destined for failure, because Google invests heavily in removing low-quality sites from its index.

This approach, often labelled “white hat SEO,” stands in stark contrast to “black hat” or “grey hat” techniques.

Those methods are fundamentally about trying to trick, manipulate, or exploit perceived loopholes in the algorithm.

This is a fool’s errand for any legitimate business.

Every major Google algorithm update is a step forward in its ability to understand users’ preferences and content, evaluate quality, and identify and penalise such manipulation.

Exploiting loopholes is a reactive, defensive, and ultimately losing game, if history is anything to go by (see the Penguin update, for instance).

Building a high-quality website that aligns with Google’s goals is a proactive, offensive strategy, and the only sustainable one for your business’s primary website.

The “Why” Behind the Guidelines

To effectively follow Google’s guidelines, one must understand the principles that underpin them.

They are not arbitrary rules; they are a reflection of what Google believes constitutes a good user experience.

The core tenets are:

- Focus on the User: Create your website and its content for humans first, and search engines second. If you provide a genuinely valuable, satisfying experience for your target audience, you have already won half the battle.

- Demonstrate Quality: Quality is not a subjective feeling; it is a set of signals that can be measured and demonstrated. This is where concepts like E-E-A-T (Experience, Expertise, Authoritativeness, Trust) become paramount. Google wants to rank sites that are credible and trustworthy, especially for topics that can impact a person’s health, happiness, or finances.

- Be Transparent: Do not hide text from users, cloak content (showing one thing to Googlebot and another to users), or use sneaky redirects to send users to a place they didn’t intend to go. Be honest and clear about who you are, what your site does, and how you operate.

This philosophy removes the “gamble” that so many business owners feel they are taking with SEO.

By systematically aligning a website with Google’s stated goals for quality and user experience, you make it resilient to the whims of any single algorithm update.

You are not trying to force a low-quality site into the rankings; you are building a site that Google wants to rank, as opposed to a site using outdated SEO techniques that Google is – every day – eliminating from its index.

The Foundation of My Tools

Every tool, checklist, and piece of software I have created is a direct operationalisation of this philosophy.

They are not built to find clever tricks.

They are built to systematically implement Google’s own rules at scale.

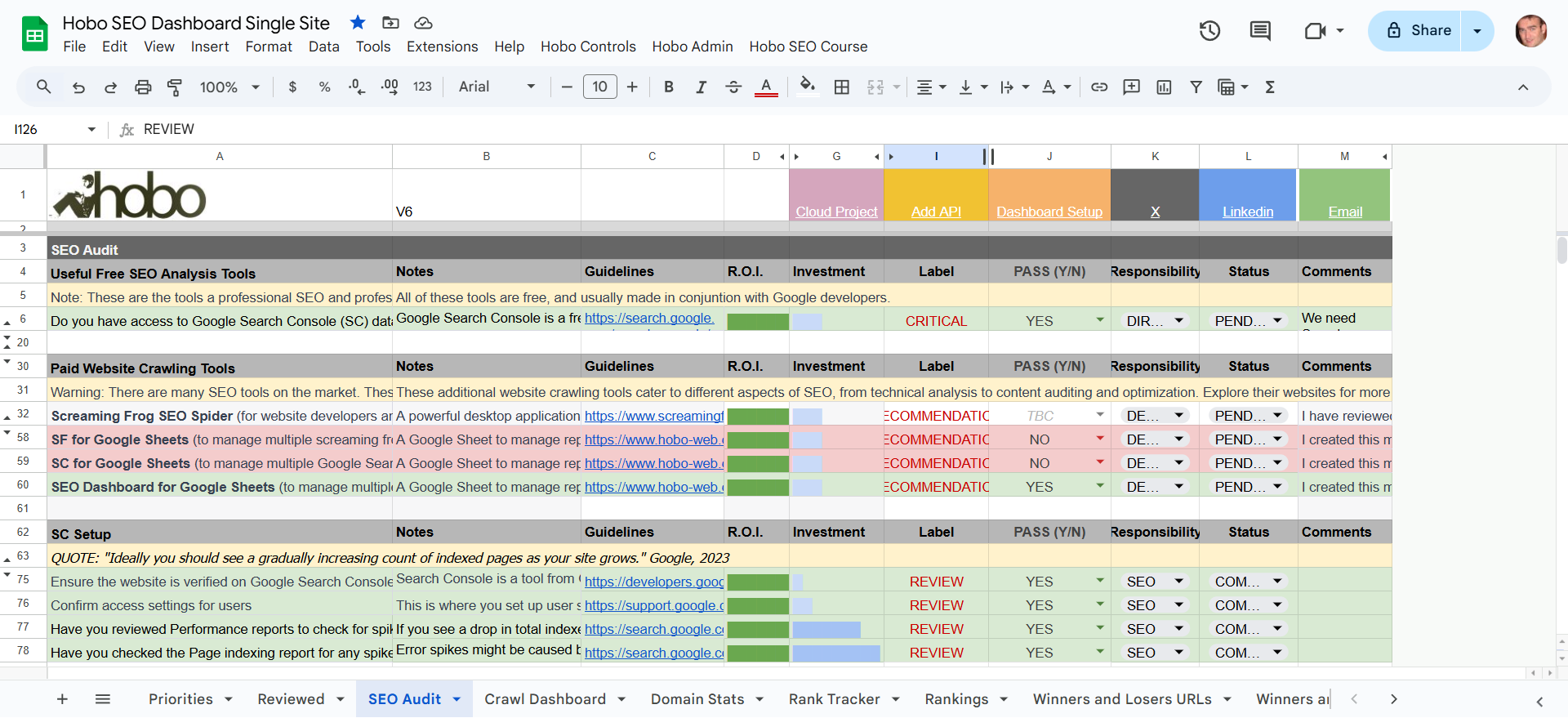

- The Hobo Premium SEO Checklist is not a list of my personal opinions. It is a 1500+ point distillation of Google’s own recommendations, curated and prioritised from their official documentation. It is an end-to-end blueprint for aligning a website with the guidelines.

- The Hobo EEAT Tool is designed specifically to address the “Demonstrate Quality” principle. It provides a systematic process for building the on-site trust signals – site ownership transparency, author bios, transparent contact information, clear policies – that Google’s own Search Quality Rater Guidelines explicitly ask for. A full Hobo EEAT review of your website is part of the SEO Audit.

- The Hobo SEO Dashboard is the monitoring and reporting engine built to track a site’s health against these very guidelines over time. It automates the process of checking for compliance and, in some cases, alerts you when you deviate from best practice.

My entire methodology is built on these first principles.

Instead of chasing algorithm fluctuations, it focuses on building a technically sound, high-quality, and trustworthy website that will be inherently resilient to future updates.

It is a proactive, user-focused strategy for long-term success, not a reactive scramble for short-term gains.

The Anatomy of a Hobo Technical SEO Audit: A Meticulous Review

Introduction: More Than a Crawl

A Hobo SEO audit is a comprehensive review process, combining manual and automated checks, designed to uncover the “why” behind a site’s performance issues.

It is far more than a simple automated crawl and a data dump.

The goal is to produce a continually updated prioritised list of actionable tasks that will deliver the insight needed to increase qualified visitor numbers from Google.

This entire process is meticulously structured and guided by the Hobo SEO Audit Checklist, a comprehensive framework covering hundreds of individual checks.

You cannot fix what you do not fundamentally understand, and this process is designed to build that deep understanding.

Phase 1: Initial Diagnosis & Setup (The First Look)

The audit begins with data acquisition. The single most important source of truth is Google Search Console (GSC), as it provides data directly from Google about how it sees and interacts with your site.

Access to GSC is non-negotiable for a professional audit.

The first step is a manual review of the most critical GSC reports. This is the triage stage, designed to identify immediate, life-threatening emergencies.

- Manual Actions Report: The very first check is to see if Google has applied a manual penalty to the site. This is a rare but catastrophic event that requires immediate and specific remediation.

- Page Indexing Report: I look for sudden spikes in errors, particularly 5xx server errors, redirect errors, or a sharp increase in pages being excluded by a noindex tag. These patterns can indicate recent server problems or a flawed code deployment that is actively harming the site’s indexation.

- Page Experience & Core Web Vitals Reports: A quick review of these reports provides an immediate snapshot of user experience issues. A high percentage of “Poor” URLs is a major red flag that directly impacts ranking and requires urgent attention from developers.
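Google’s documented Core Web Vitals thresholds are: LCP good at 2.5 seconds or less and poor above 4 seconds; INP good at 200 ms or less and poor above 500 ms; CLS good at 0.1 or less and poor above 0.25, each assessed at the 75th percentile of page loads. The sketch below applies those thresholds to field values and, like Search Console, rates a URL by its worst metric. The input dictionary shape is an assumption for illustration, not the format of the Search Console report or any API.

```python
# Thresholds from Google's Core Web Vitals documentation:
# metric -> (upper bound for "Good", upper bound for "Needs improvement").
# LCP and INP are in milliseconds; CLS is unitless.
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}
RANK = {"Good": 0, "Needs improvement": 1, "Poor": 2}


def rate(metric: str, p75_value: float) -> str:
    """Rate one metric's 75th-percentile field value."""
    good, needs_improvement = THRESHOLDS[metric]
    if p75_value <= good:
        return "Good"
    if p75_value <= needs_improvement:
        return "Needs improvement"
    return "Poor"


def rate_url(p75_values: dict[str, float]) -> str:
    """Overall status is the worst individual metric rating."""
    return max((rate(m, v) for m, v in p75_values.items()), key=RANK.__getitem__)


print(rate_url({"LCP": 2100, "INP": 180, "CLS": 0.05}))  # Good
print(rate_url({"LCP": 3200, "INP": 150, "CLS": 0.31}))  # Poor
```

A single layout-shift problem is enough to turn an otherwise fast page “Poor”, which is why the CLS column deserves as much attention as load speed.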

Phase 2: Crawling & Indexing Analysis (The Blueprint of the Site)

With the initial triage complete, the next phase is to create a complete blueprint of the website as a search engine sees it.

For this, a professional desktop crawling tool like the Screaming Frog SEO Spider is essential.

My own Hobo SEO Dashboard is designed to integrate seamlessly with and process data from these crawls.

- Robots.txt & Sitemap Review: The analysis starts with the robots.txt file, the very first file Googlebot requests. I check for Disallow directives that may be inadvertently blocking access to critical sections of the site or, just as importantly, essential resource files like CSS and JavaScript. Without these, Google cannot render the page correctly and may misinterpret it as a low-quality, broken page. I then cross-reference the robots.txt file with the submitted XML sitemaps to identify conflicts, such as URLs that are included in a sitemap but blocked from crawling—a contradictory signal to Google.

- Indexation & Crawl Budget Analysis: The crawl data is used to identify “index bloat.” This is the proliferation of thousands of low-value pages that offer little unique content, such as paginated archives, tag pages, or URLs with tracking parameters. This bloat wastes Google’s finite crawl budget and dilutes the site’s overall authority or “link equity” across too many useless pages. The solution involves a strategic combination of directives: using the noindex tag to keep low-value pages out of the index, and the rel="canonical" tag to consolidate similar pages to a single authoritative version.
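The robots.txt and sitemap cross-check described above can be sketched with Python’s standard library. The robots.txt rules, sitemap and URLs are illustrative assumptions. Note that `urllib.robotparser` applies the first matching rule and does not understand the `*` and `$` path wildcards, whereas Google applies the most specific matching rule, so treat this as a first pass rather than a faithful reproduction of Googlebot’s behaviour.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Illustrative inputs; in practice these would be fetched from the live site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /assets/
"""

SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products/widget</loc></url>
  <url><loc>https://example.com/private/offer</loc></url>
</urlset>
"""

RESOURCE_URLS = [
    "https://example.com/assets/site.css",
    "https://example.com/assets/app.js",
]

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Extract every <loc> from a standard XML sitemap <urlset>."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def blocked(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A URL listed in the sitemap but blocked from crawling is a contradictory signal.
print("Sitemap conflicts:", blocked(ROBOTS_TXT, sitemap_urls(SITEMAP_XML)))
# Blocked CSS/JS can stop Google rendering the page as users see it.
print("Blocked resources:", blocked(ROBOTS_TXT, RESOURCE_URLS))
```

The first line reports the private offer page, which the sitemap asks Google to index while robots.txt forbids crawling it; the second reports both asset files.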

Phase 3: On-Page Technical Fundamentals (The Nuts and Bolts)

This phase delves into the technical details of individual pages, ensuring they are correctly optimised and free from errors that would hinder their performance.

- Canonicalization: This is one of the most powerful and frequently misunderstood concepts in technical SEO. A proper audit involves checking every indexable URL to ensure it has a correctly implemented rel="canonical" tag. This tag signals to Google that even if multiple URLs lead to similar content (e.g., example.com/product and example.com/product?source=email), there is one single “master” or “canonical” version that should receive all ranking signals. Getting this right prevents keyword cannibalisation and ensures that the authority from all inbound links is consolidated to the correct page.

- Structured Data: Modern SEO is about more than just a blue link. It’s about earning enhanced visibility in the search results through Rich Results such as product star ratings and event listings (FAQ rich results, by contrast, have been limited to well-known, authoritative government and health websites since 2023). This is achieved through Schema.org structured data. The audit involves using Google’s Rich Results Test to validate all existing structured data for errors and warnings. Furthermore, it involves identifying new opportunities to apply structured data to content that is currently not being enhanced, providing a clear competitive advantage in the SERPs.

- URL Architecture & Internal Linking: The structure of a website is a powerful signal to Google about the relative importance of its pages. The audit analyses the site’s structure to identify pages that are buried too deep within the site (high crawl depth), requiring too many clicks to reach from the homepage. We also analyse the internal linking patterns, ensuring that key pages are linked to frequently using descriptive, keyword-rich anchor text. A logical, shallow site structure with a clear hierarchy helps both users and search engines navigate and understand the relationships between different pieces of content.
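Crawl depth is usually measured as the minimum number of clicks from the homepage, which makes it a breadth-first search over the internal link graph. This sketch assumes a hypothetical link graph already extracted from a crawl (source page to list of linked pages) and an illustrative threshold of three clicks; neither is a figure from Google.

```python
from collections import deque

# Hypothetical internal link graph from a crawl: page -> pages it links to.
LINKS = {
    "/": ["/category/", "/about/"],
    "/category/": ["/category/page-2/"],
    "/category/page-2/": ["/category/page-3/"],
    "/category/page-3/": ["/products/blue-widget/"],
    "/about/": ["/"],
    "/products/blue-widget/": [],
}


def crawl_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search: the first time a page is reached is its shortest click path."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


MAX_DEPTH = 3  # illustrative threshold, not a Google rule
depths = crawl_depths(LINKS)
too_deep = sorted(page for page, depth in depths.items() if depth > MAX_DEPTH)
print(too_deep)  # ['/products/blue-widget/']
```

The product page sits four clicks deep because it is only reachable through paginated archives; a single link from the category page would bring it to depth two. Pages that appear in the crawl but never appear in the result have no internal path from the homepage at all, which flags them as orphans.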

The Engine Room: The Hobo SEO Dashboard

Introduction: Your Autonomous SEO Reporting Robot

After years of performing these manual audits and wrestling with disparate data sources, I built the tool I always wanted: the Hobo SEO Dashboard.

This is not another expensive, cloud-based SaaS platform.

It is a private, secure, and autonomous SEO reporting system that lives entirely within your own Google Sheets and Google Drive environment.

This is a deliberate architectural choice, designed to give you maximum power, privacy, and control over your own data.

It is your own robot that runs on Google Sheets, autonomously preparing your reports, analysing them, and prioritising your SEO tasks.

Core Functionality & Integration

The dashboard’s power lies in its ability to automatically synthesise data from the three most critical sources for any technical audit, creating a single, unified view of your site’s health.

- Data Synthesis: The dashboard acts as a central hub, automatically pulling data via API from Google Search Console and Google Analytics, and integrating it with raw crawl data from Screaming Frog files that you store in your Google Drive. This eliminates hours of manual, error-prone data wrangling and allows you to focus on analysis, not data collection.

- Automated Audit Reporting: The system can be configured with scheduled triggers to run entirely on its own. It can be set to cycle through every website you manage in your Search Console account, automatically fetch the latest Screaming Frog crawl file from Google Drive, process that data against the logic of the Hobo Premium SEO Checklist, and generate a sophisticated, prioritised SEO audit report. It can even be configured to automatically email these beautiful, client-ready reports on a schedule you set.

- “Winners and Losers” Reporting: This is one of the dashboard’s most powerful features. The system automatically fetches performance data from GSC and compares different time periods, allowing you to see at a glance which URLs and keywords have gained or lost the most visibility. This is invaluable for algorithm impact analysis. When a Google Core Update rolls out, you can run a report comparing the two weeks before the update to the two weeks after. The dashboard will instantly show you which pages were rewarded (“winners”) and which were penalised (“losers”), providing immediate, actionable insight into what the algorithm change valued or devalued on your site.

- Continuous Monitoring: The dashboard is designed for ongoing health monitoring, not just a one-off report. Its scheduled triggers constantly watch for critical changes. It monitors Core Web Vitals, crawl errors, page indexation counts, and performance drops, and can be configured to send alerts when significant issues arise. This transforms your SEO from a reactive, fire-fighting exercise into a proactive, preventative process.
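At its core, a “winners and losers” comparison is a per-URL difference between two equal-length periods, such as the two weeks before and after a core update. This sketch assumes the clicks for each period have already been exported from Search Console into simple URL-to-clicks dictionaries (the figures are hypothetical); it illustrates the comparison and is not the dashboard’s actual code.

```python
def winners_and_losers(before: dict[str, int], after: dict[str, int], top: int = 3):
    """Rank URLs by change in clicks between two periods.

    URLs missing from one period are treated as having 0 clicks there,
    so pages that appeared or vanished entirely are still reported.
    Ties are broken by URL so the output is deterministic.
    """
    urls = set(before) | set(after)
    deltas = {url: after.get(url, 0) - before.get(url, 0) for url in urls}
    ranked = sorted(deltas.items(), key=lambda item: (item[1], item[0]))
    losers = [item for item in ranked[:top] if item[1] < 0]
    winners = [item for item in reversed(ranked[-top:]) if item[1] > 0]
    return winners, losers


# Hypothetical clicks for the two weeks before and after an update.
before = {"/guide/": 1200, "/pricing/": 400, "/blog/old-post/": 300, "/contact/": 50}
after = {"/guide/": 700, "/pricing/": 520, "/blog/old-post/": 310, "/new-tool/": 90}

winners, losers = winners_and_losers(before, after)
print("Winners:", winners)
print("Losers:", losers)
```

Running the same comparison keyed by query instead of by URL gives the keyword view of the same update.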

The Hobo Advantage: Privacy, Cost, and Control

The decision to build the dashboard within the Google ecosystem was driven by a desire to solve the primary frustrations I saw with traditional SEO tooling.

- Privacy & Security: With cloud-based SaaS tools, you are uploading your most sensitive business data – your website performance, traffic, keywords, and crawl data – to a third-party server. With the Hobo SEO Dashboard, all of that data remains within the secure confines of your own Google account. We never see it. This is a critical advantage for any organisation concerned with data privacy and security.

- Cost-Effectiveness: The enterprise SEO software market is dominated by tools that charge thousands of pounds per month in recurring subscription fees. The Hobo SEO Dashboard is offered as a one-time purchase, with no subscription fees and a lifetime access guarantee. It leverages the power of Google’s largely free APIs to provide enterprise-level reporting and analysis at a fraction of the cost, democratising access to professional-grade tools.

- Control & Customisation: Because the entire system is built in Google Sheets, it is highly customisable. Unlike the rigid, locked-down interfaces of SaaS platforms, you have the power to modify reports, add your own data tabs, build custom charts, and integrate the output into any existing workflow. You own the tool, and you can shape it to your exact needs.

This represents a philosophical shift in SEO tooling.

It moves away from a model of dependency on expensive, closed-box platforms toward an empowered, ownership-based model. It argues that what professionals need is not another monthly subscription, but a powerful, private, and customisable engine to process their own data and generate their own insights.

The Blueprint for Excellence: The Hobo Premium SEO Checklist

Introduction: From Chaos to Control

If the Hobo SEO Dashboard is the engine, the Hobo Premium SEO Checklist is the blueprint.

It is far more than a simple list of tasks; it is a comprehensive project management framework designed to bring order to the chaos of SEO.

Testimonials have called it an “end-to-end blueprint for building and running a Tier 1 TechSEO agency”.

Its purpose is to take the vast, complex, and often contradictory world of SEO advice and distil it into a single, actionable, and prioritised system based entirely on Google’s own documentation.

It is designed to help any website manager, developer, or business owner get a firm grip on their SEO.

Structure and Scope

The authority of the checklist comes directly from its source material.

It is a meticulously curated and organised collection of Google’s own recommendations.

- 1500+ Google-Sourced Checks: The checklist contains over 1500 individual checks, tips, and guidelines drawn directly from Google’s official webmaster documentation, help files, and public statements. It is not based on my opinion or industry speculation; it is based on the rules of the game as defined by the referee, interpreted through my 20-plus years of SEO experience.

- Comprehensive Coverage: The checklist is exhaustive in its scope, covering every facet of modern SEO. It includes dedicated sections for initial GSC setup, technical crawl management, canonicalization, international SEO, JavaScript SEO, mobile optimisation, e-commerce best practices, Core Web Vitals, and in-depth website quality checks. It leaves no critical element overlooked.

- A Living Document: SEO is a constantly evolving field. A static checklist would become obsolete within months. That is why the Premium SEO Checklist is a living document, constantly updated to reflect the very latest guides and changes from Google. Purchasers receive these updates for life, ensuring they always have a current and relevant framework to work from.

How to Use the Checklist in an Audit

The checklist provides the structure for a systematic and thorough audit, transforming a potentially overwhelming task into a manageable process.

- Systematic Evaluation: An auditor works through the spreadsheet tab by tab, evaluating the website against each individual point. This methodical approach ensures that no stone is left unturned and that every potential issue, from the most critical to the most minor, is considered.

- Task Prioritisation: One of the greatest challenges in SEO is moving from a long list of “issues” to a prioritised action plan. The checklist’s structure inherently helps with this – indeed, the entire system is based around prioritisation of SEO tasks. It allows an auditor to distinguish between a critical, high-priority error (e.g., a robots.txt file blocking the entire site from being crawled) and a lower-priority recommendation (e.g., optimising the alt text on a minor, non-critical image).

- A Tool for Teams: The checklist acts as a shared language and a single source of truth for diverse teams. It ensures that developers, content creators, UX designers, and marketers are all working from the same blueprint, with the same goals, to improve the site’s overall quality and performance.

The primary value of this checklist is that it dramatically reduces the cognitive load for the SEO professional.

The field is plagued by information overload and a constant stream of new tactics and opinions.

The checklist provides a structured, authoritative path through that noise. It offloads the task of “remembering everything” from the professional’s brain into a reliable, external system.

This frees up their mental energy to focus on what truly matters: analysing the findings, understanding their business implications, and developing a winning strategy.

It helps them transition from a technician to a strategist.

Mastering E-E-A-T: Building Trust in the Age of AI

Why E-E-A-T is Non-Negotiable

In recent years, Google has placed an enormous emphasis on a framework it uses to assess the quality and credibility of a webpage and its creator: E-E-A-T, which stands for Experience, Expertise, Authoritativeness, and Trust. With the explosive rise of AI-generated content, demonstrating real, first-hand human experience has become more critical than ever. Google added the first ‘E’, for Experience, to its Search Quality Rater Guidelines in December 2022, and it is precisely the quality that low-quality, AI-created content cannot supply, as an AI cannot have real-world experience.

This is not an abstract concept. Failure to adequately demonstrate E-E-A-T is one of the primary reasons that legitimate websites lose significant traffic during Google’s Helpful Content and Core algorithm updates.

If Google cannot verify that you are a legitimate, trustworthy entity with real experience in your field, it will not rank your content in the long term.

In fact, it may use your content to train its own AI systems while simultaneously demoting your site.

Moving E-E-A-T from Concept to Action

The problem with most advice on E-E-A-T is that it is too vague. Recommendations like “be an expert” or “build a high-quality site” are not actionable.

My approach is to make E-E-A-T concrete, auditable, and implementable. This is achieved through two dedicated resources.

- The Hobo E-E-A-T Checklist: Included as part of the premium package, this is a 300+ point checklist that goes far beyond generic advice. It provides a granular, step-by-step process for auditing a site for the specific signals of trust, transparency, and authority that Google’s human Quality Raters are trained to look for.

- The Hobo EEAT Tool: This is the implementation arm of the strategy. It is a tool, running in Google Sheets and publishing to Google Docs, that helps you generate the necessary documentation to prove your E-E-A-T. It creates drafts for comprehensive About Us pages, detailed author bio pages, transparent contact pages, and all the various policy documents (Privacy, Terms & Conditions, etc.) required by both local laws and Google’s guidelines. It tells you exactly what to create and where to put it on your site.

The fundamental strategy here is to create documentary evidence and demonstrate E-E-A-T properly.

In a world where content can be faked by AI, the only durable defence is to provide a rich, verifiable trail of evidence that proves your experience, your expertise, and the legitimacy of your business.

The Hobo EEAT Tool is designed to build this evidence trail systematically and at scale.

It reframes E-E-A-T from a vague content strategy into a concrete documentation and transparency strategy—a far more robust and defensible approach for the future.

Beyond the Audit: Continuous Monitoring for Strategic Growth

The SEO Audit is the Beginning, Not the End

A technical SEO audit, no matter how thorough, is ultimately a snapshot in time.

A website is not a static brochure; it is a living, breathing entity that is constantly changing.

New content is added, code is updated, and designs are refreshed.

Each of these changes carries the risk of introducing new technical errors, and Google can respond to any change you make to your site.

Therefore, the real value is not in the one-off audit report, but in establishing an ongoing process of continuous monitoring and improvement. The audit provides the baseline and the initial action plan; the ongoing process ensures the health of the site is maintained and enhanced over time.

The Hobo Ecosystem for Ongoing Management

The entire Hobo suite of tools is designed to facilitate this transition from a one-time fix to a continuous improvement cycle.

- Track Progress: After implementing the fixes identified in the initial audit, the Hobo SEO Dashboard is used to monitor their impact. Did fixing the site’s canonicalization issues lead to a rankings increase for key commercial pages? The “Winners and Losers” report will provide a clear, data-driven answer by comparing performance before and after the fix was implemented.

- Proactive Alerting: The dashboard’s autonomous, scheduled reporting acts as an early warning system. Imagine a developer accidentally pushes an update that adds a noindex tag to your entire blog. A weekly check of the dashboard’s Page Indexing report would immediately flag the sudden drop in indexed pages, allowing you to catch and fix this catastrophic error in days, not months. It helps you catch problems before they have a chance to impact revenue.

- Adapting to Change: The SEO landscape is in a state of perpetual flux. My commitment is to ensure the Hobo tools and checklists evolve in lockstep with Google. As new guidelines are released and new best practices emerge, the Premium SEO Checklist and the logic within the dashboard are updated, ensuring that users always have a framework that is current, relevant, and effective.
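The accidental-noindex scenario in the Proactive Alerting point comes down to comparing the latest count of indexed pages against a recent baseline and raising an alert when the drop exceeds a threshold. The weekly counts and the 20% threshold below are hypothetical; in practice the counts would come from Search Console’s Page Indexing report, and the threshold should be tuned to how much a given site normally fluctuates.

```python
from statistics import median
from typing import Optional


def indexing_alert(weekly_indexed: list[int], max_drop: float = 0.20) -> Optional[str]:
    """Compare the latest weekly indexed-page count with the median of the
    preceding weeks; return an alert message if it fell by more than max_drop."""
    if len(weekly_indexed) < 2:
        return None  # not enough history to form a baseline
    *history, latest = weekly_indexed
    baseline = median(history)  # median resists a single odd week in the history
    if baseline == 0:
        return None
    drop = (baseline - latest) / baseline
    if drop > max_drop:
        return f"Indexed pages fell {drop:.0%} (from a baseline of {baseline:,.0f} to {latest:,})"
    return None


# Hypothetical weekly counts: a deploy adds noindex to the blog in the final week.
print(indexing_alert([4210, 4185, 4230, 4205, 4190, 2950]))
```

This prints an alert that indexed pages fell 30%, from a baseline of 4,205 to 2,950, a week after the deploy rather than months later.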

From Technical Debt to Technical Equity

I want to leave you with a final thought on how to frame this work within your organisation.

For years, many websites have accumulated “technical SEO debt” – a collection of quick fixes, outdated technology, and ignored best practices that make the site slow, insecure, and difficult for search engines to understand. This is what the Hobo Technical SEO audit aims to rectify.

Investing in a proper technical SEO audit and establishing a process for continuous monitoring is not an expense; it is a strategic investment in paying down that debt.

More than that, it is an investment in building “technical equity.”

By creating a fast, secure, accessible, high-quality, and trustworthy website, you are building a durable digital asset.

This asset will be more resilient to algorithmic shifts, provide a better experience for your users, and serve as a powerful engine for sustainable, long-term organic growth.

The Hobo suite of tools provides the most private, cost-effective, and comprehensive framework available to build and maintain that invaluable asset.


Disclosure: Hobo Web uses generative AI when specifically writing about our own experiences, ideas, stories, concepts, tools, tool documentation or research. Our tool of choice in this process is Google Gemini Pro 2.5 Deep Research. This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was verified as correct. Edited and checked by Shaun Anderson, creator of Hobo SEO Dashboard and Hobo Socialbot, primary content creator at Hobo and founder of the Hobo Web site in 2006. See our AI policy.