On 27 May 2024, the Search Engine Optimisation (SEO) industry was irrevocably altered by the analysis and publication of thousands of pages of internal Google documentation.

These documents, allegedly from Google’s Content Warehouse API, were inadvertently published to a public GitHub repository by an automated bot, yoshi-code-bot, on or around 13 March 2024, and remained publicly accessible until 7 May 2024.

This exposure provided a rare and detailed view into the architecture of Google’s data storage and retrieval systems.

Section 1: The Content Warehouse API Leak

1.1. Context and Confirmation

The scale of the documentation is immense, spanning over 2,500 pages and detailing 2,596 distinct modules that contain 14,014 attributes or “features”. These attributes represent the specific types of data Google collects and stores about web documents, sites, links, and user interactions.

The authenticity of these documents has been corroborated by multiple former Google employees who, upon review, confirmed they possess “all the hallmarks of an internal Google API”. Subsequently, a Google spokesperson officially confirmed the legitimacy of the documents, stating, “We would caution against making inaccurate assumptions about Search based on out-of-context, outdated, or incomplete information”.

The significance of the leak is further underscored by its correlation with testimony from the U.S. Department of Justice (DOJ) antitrust trial, where key internal systems like NavBoost – also detailed in the leak – were described under oath. Despite Google’s caveat, the combined insights from the documentation and trial testimony offer the most significant look into Google’s internal systems to date, providing an invaluable resource for deconstructing the mechanics of search ranking.

1.2. The Ranking Pipeline: From Mustang to Twiddlers

The leaked documentation, combined with DOJ trial testimony, clarifies that “the Google algorithm” is not a monolithic entity but a complex, multi-stage processing pipeline.

Understanding this architecture is critical for contextualising where and how signals related to product reviews are applied. The key stages of this pipeline can be summarised as follows:

- Primary Scoring (Mustang): The initial phase of ranking is handled by a system identified as Mustang. This is described as the primary scoring, ranking, and serving system, responsible for the first-pass evaluation of documents retrieved from the index to create a provisional set of results. This system must have access to a core set of quality signals to perform its function efficiently.

- Re-ranking (Twiddlers): Following the initial scoring by Mustang, the results undergo a series of adjustments by re-ranking functions known as Twiddlers. These are specialised C++ code objects that can modify the information retrieval (IR) score of a document or change its rank based on a wide range of signals not necessarily used in the initial scoring phase. The leak references several types of Twiddlers, including FreshnessTwiddler for boosting newer content and, significantly, QualityBoost, which enhances rankings based on quality signals. This suggests a dedicated mechanism for refining rankings based on a deeper assessment of content quality after the initial retrieval.

- User Signal Feedback (NavBoost): A third critical layer of ranking modification comes from the NavBoost system. Confirmed in both the leak and the DOJ trial, NavBoost utilises click-driven metrics from user behaviour to boost, demote, or otherwise adjust search rankings. The system analyses various click metrics, such as goodClicks, badClicks, and lastLongestClicks, which serve as powerful proxies for user satisfaction and content relevance. This creates a continuous feedback loop where real-world user engagement validates or challenges the algorithmic quality assessments made in the earlier stages.

This architecture reveals a sophisticated, multi-layered evaluation process. A product review page is not assigned a single, static quality score. Instead, it undergoes an initial quality assessment during primary scoring by Mustang. Its rank can then be further adjusted by a QualityBoost twiddler based on more nuanced quality signals.

Finally, its position in the search engine results page (SERP) is continuously reinforced or weakened by NavBoost based on the observable behaviour of actual users. This is a dynamic system where intrinsic quality and extrinsic user validation work in concert to determine final ranking.
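To make the ordering of these stages concrete, here is a minimal Python sketch. The stage names come from the leak and the DOJ testimony, but the scoring formulas, the weights and the signal names (relevance, quality, review_promote, good_clicks, bad_clicks) are hypothetical. Real Twiddlers can also reorder results directly rather than only multiplying a score.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical model of the three stages described above. Stage names come from
# the leak and DOJ testimony; every score, weight and formula here is invented.

@dataclass
class Doc:
    url: str
    ir_score: float = 0.0                      # provisional score from primary scoring
    signals: Dict[str, float] = field(default_factory=dict)

Twiddler = Callable[[Doc], float]              # returns a multiplier for ir_score

def mustang_score(doc: Doc) -> float:
    # Assumed stand-in for first-pass scoring: relevance times a quality prior.
    return doc.signals.get("relevance", 0.0) * doc.signals.get("quality", 0.5)

def quality_boost(doc: Doc) -> float:
    # Assumed QualityBoost-like twiddler: up to +20% for a strong review signal.
    return 1.0 + 0.2 * doc.signals.get("review_promote", 0.0)

def navboost(doc: Doc) -> float:
    # Assumed NavBoost-like adjustment from the share of good clicks (0.8x to 1.2x).
    good = doc.signals.get("good_clicks", 0.0)
    bad = doc.signals.get("bad_clicks", 0.0)
    total = good + bad
    return 1.0 if total == 0 else 0.8 + 0.4 * (good / total)

def rank(docs: List[Doc], twiddlers: List[Twiddler]) -> List[Doc]:
    for doc in docs:
        doc.ir_score = mustang_score(doc)      # stage 1: first-pass scoring
        for twiddle in twiddlers:              # stages 2 and 3: re-ranking
            doc.ir_score *= twiddle(doc)
    return sorted(docs, key=lambda d: d.ir_score, reverse=True)

a = Doc("a", signals={"relevance": 1.0, "quality": 0.6, "review_promote": 1.0,
                      "good_clicks": 90, "bad_clicks": 10})
b = Doc("b", signals={"relevance": 1.0, "quality": 0.7,
                      "good_clicks": 20, "bad_clicks": 80})
print([d.url for d in rank([a, b], [quality_boost, navboost])])  # ['a', 'b']
```

In this toy run, page b starts ahead after first-pass scoring (0.7 vs 0.6) but finishes behind once the quality twiddler and the click feedback are applied, which is the dynamic described above.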

Section 2: The CompressedQualitySignals Module: A Deep Dive into Quality Evaluation

2.1. The Role and Significance of the Module

Central to the discussion of quality evaluation is the CompressedQualitySignals module.

The documentation describes this module’s purpose as containing “per doc signals that are compressed and included in Mustang and TeraGoogle”. This description is profoundly significant for two reasons. First, it directly connects the signals within this module to Mustang, the primary ranking system, confirming their use in the initial scoring process. Second, it links them to TeraGoogle, a core indexing system, indicating these signals are stored alongside the foundational data for a given document.

The documentation further includes a critical warning for Google’s own engineers: “CAREFUL: For TeraGoogle, this data resides in very limited serving memory (Flash storage) for a huge number of documents”. This technical constraint provides a powerful indicator of the importance of the signals contained within this module.

2.2. Attributes Contained Within the Module

The CompressedQualitySignals module serves as a repository for a wide range of critical quality assessments. In addition to the product review attributes that are the focus of this report, the module also contains foundational signals such as siteAuthority, unauthoritativeScore, lowQuality, and anchorMismatchDemotion.

The inclusion of product review signals alongside these other fundamental metrics demonstrates that Google considers the quality of review content to be a core component of its overall page and site evaluation.

The explicit warning about the use of high-speed Flash storage is not a trivial detail. In any large-scale data architecture, the fastest and most expensive storage (like Flash memory) is reserved for data that must be accessed with minimal latency.

The documentation states these signals are used in “preliminary scoring,” the very first stage of the ranking process where potentially billions of documents must be filtered and scored in milliseconds. For a signal to be included in this performance-critical storage tier, it must be deemed absolutely essential for the initial, high-speed filtering of search results.

Therefore, the presence of product review attributes within the CompressedQualitySignals module elevates their status from minor data points to foundational signals that are integral to the core functioning of the search ranking pipeline.
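The storage argument can be illustrated with some back-of-the-envelope arithmetic. This sketch assumes the six product review attributes are stored as fixed-width records and uses a hypothetical index of one billion documents; the leak says the signals are “compressed” but does not document the actual encoding.

```python
import struct

# Assumption: six per-document product review attributes, each either a 16-bit
# integer (a "times 1000 and floored" confidence fits in 0..1000) or a 64-bit float.
# The real encoding used by Google is not documented in the leak.
INT16_RECORD = "<6H"    # six unsigned 16-bit integers, little-endian, no padding
FLOAT64_RECORD = "<6d"  # six double-precision floats

def footprint_gb(num_docs: int, record_format: str) -> float:
    """Total storage in gigabytes (10**9 bytes) for num_docs fixed-size records."""
    return num_docs * struct.calcsize(record_format) / 1e9

docs = 1_000_000_000  # hypothetical index size, for illustration only
print(struct.calcsize(INT16_RECORD), struct.calcsize(FLOAT64_RECORD))  # 12 48
print(footprint_gb(docs, INT16_RECORD), footprint_gb(docs, FLOAT64_RECORD))  # 12.0 48.0
```

Storing the same six values as 16-bit integers rather than 64-bit floats cuts the footprint by a factor of four, which matters when the data must sit in scarce Flash memory.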

Section 3: Granular Analysis of Leaked Product Review Attributes

3.1. Identifying the Core Attributes

Within the high-priority CompressedQualitySignals module, the documentation explicitly defines a suite of attributes directly related to the evaluation of product reviews:

- productReviewPReviewPage: A score representing “The possibility of a page being a review page.”
- productReviewPUhqPage: A score representing “The possibility of a page being a high-quality review page.”
- productReviewPPromotePage: A confidence score for promoting a specific review page.
- productReviewPDemotePage: A confidence score for demoting a specific review page.
- productReviewPPromoteSite: A confidence score for promoting an entire site based on its reviews.
- productReviewPDemoteSite: A confidence score for demoting an entire site based on its reviews.

These attributes provide concrete evidence of a dedicated, multi-stage system for algorithmically classifying, assessing, and acting upon the quality of review content.

3.2. Deconstructing “Confidences”: A Machine Learning Perspective

The technical description for the promotion and demotion attributes includes the note: “Product review demotion/promotion confidences. (Times 1000 and floored)”. The term “confidence” is specific terminology in the field of machine learning.

A confidence score is a probabilistic output, typically a value between 0 and 1 (or 0 and 100), generated by a classifier model. It represents the model’s certainty that a given input belongs to a particular class. For example, a productReviewPPromotePage confidence of 950 (after being multiplied by 1000) would indicate the model is 95% certain that the page is a high-quality review deserving of a promotion.

The parenthetical note “(Times 1000 and floored)” refers to a common engineering optimisation where a floating-point number (e.g., 0.95) is converted into an integer (e.g., 950) to save storage space and reduce computational overhead during processing.
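A minimal Python sketch of the “times 1000 and floored” step, assuming the model emits a probability between 0 and 1. The helper names are hypothetical; only the factor of 1000 and the flooring come from the leaked description.

```python
import math

# Hypothetical helpers illustrating the "(Times 1000 and floored)" note.
# The factor of 1000 comes from the leaked description; everything else is assumed.
SCALE = 1000

def encode_confidence(p: float) -> int:
    """Convert a classifier probability in [0, 1] to a compact integer."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return math.floor(p * SCALE)

def decode_confidence(stored: int) -> float:
    """Recover an approximate probability (precision lost to flooring is gone)."""
    return stored / SCALE

print(encode_confidence(0.75))    # 750
print(encode_confidence(0.9999))  # 999 (the fractional part is discarded)
print(decode_confidence(950))     # 0.95
```

Flooring always rounds down, so a stored value can understate the original confidence by less than 0.001; at this scale that loss of precision is negligible compared with the storage saved.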

This technical detail confirms that Google is not using a simple binary flag (i.e., “good review” or “bad review”). Instead, a sophisticated machine learning model is analysing review pages against a multitude of features to produce a nuanced, granular score representing the probability that a page or site is “promotion-worthy” or “demotion-worthy.”

3.3. The Four-Quadrant System: Page vs. Site Evaluation

The full suite of attributes reveals a comprehensive four-quadrant system for review evaluation, allowing for nuanced adjustments at both the page and site level. This structure aligns perfectly with Google’s public guidance, which states that while the Reviews System is primarily page-focused, a site-wide signal can be applied if a “substantial amount” of content is found to be low-quality.

- Page-Level Actions (...PromotePage, ...DemotePage): Google can reward individual, high-quality review pages with a targeted promotion via the productReviewPPromotePage signal. Conversely, it can penalise a single poor-quality review with the productReviewPDemotePage signal without impacting the rest of the domain.

- Site-Level Actions (...PromoteSite, ...DemoteSite): The system aggregates these page-level assessments. A site that consistently produces excellent reviews may trigger the productReviewPPromoteSite signal, receiving a domain-wide boost. In contrast, a site that accumulates a critical mass of unhelpful review content risks triggering the productReviewPDemoteSite classifier, which can suppress the visibility of the entire domain, including its high-quality pages.

This creates a symmetrical risk and reward structure. While creating exceptional “hero” content is beneficial for page-level boosts, the more critical long-term strategy is to maintain a high quality bar across all review content to earn a site-wide promotion and, crucially, to avoid the catastrophic impact of a site-wide demotion.
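The asymmetry can be sketched by treating each of the four confidences as a trigger for a rank multiplier. This is a hypothetical illustration only: the 700 threshold (on the 0 to 1000 scale) and the multipliers are invented, and the leak does not say how, or whether, the four signals are combined.

```python
# Hypothetical combination of the four leaked confidences (each 0..1000 after the
# "times 1000 and floored" step). Threshold and multiplier values are invented.
THRESHOLD = 700

def review_multiplier(promote_page: int, demote_page: int,
                      promote_site: int, demote_site: int) -> float:
    m = 1.0
    if promote_site >= THRESHOLD:
        m *= 1.05   # assumed small domain-wide lift
    if demote_site >= THRESHOLD:
        m *= 0.7    # assumed domain-wide suppression, applied to every page
    if promote_page >= THRESHOLD:
        m *= 1.15   # assumed targeted page-level promotion
    if demote_page >= THRESHOLD:
        m *= 0.85   # assumed targeted page-level demotion
    return m

# A strong page on a site flagged for demotion still loses ground overall.
print(round(review_multiplier(900, 0, 0, 800), 3))  # 0.805
```

Under these assumed numbers, an excellent page on a site flagged for site-wide demotion still ends up below its baseline, which is why the site-level quadrant carries the greater long-term risk.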

3.4. A Multi-Tiered Classification Framework

The evaluation process is not a single judgment but a multi-stage pipeline that first classifies content before deciding on an action.

- Step 1: Foundational Classification (productReviewPReviewPage): This attribute represents the initial classification step. Before any quality judgement is made, a model first calculates the probability that a given document is a review page at all. This score is essential for triggering the entire review-specific evaluation pipeline.

- Step 2: Quality Tiering (productReviewPUhqPage): This attribute signifies a more advanced layer of classification. Described as “The possibility of a page being a high-quality review page,” it shows that Google has a specific classifier trained to identify “UHQ” (presumably Ultra High Quality) content. A page must likely pass a high confidence threshold for this attribute to be considered for the strongest promotions.

These classification scores serve as primary inputs for the models that ultimately calculate the promotion and demotion confidences, creating a sophisticated workflow that moves from broad identification to granular quality assessment.
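The two-step flow can be expressed as a simple gate. This sketch assumes both classification scores share the same 0 to 1000 scale as the promotion and demotion confidences; the threshold values and the returned labels are hypothetical.

```python
from typing import Optional

# Hypothetical gating logic for the two classification tiers. The attribute names
# are from the leak; the thresholds, scale and ordering of checks are assumptions.
REVIEW_PAGE_MIN = 500   # below this, the review pipeline is never triggered
UHQ_MIN = 800           # required before the strongest promotions are considered

def review_tier(p_review_page: int, p_uhq_page: int) -> Optional[str]:
    """Return the evaluation path for a page, or None if it is not a review page."""
    if p_review_page < REVIEW_PAGE_MIN:
        return None                          # step 1: not classified as a review page
    if p_uhq_page >= UHQ_MIN:
        return "eligible_for_strong_promotion"
    return "standard_review_evaluation"      # step 2: a review page, but not UHQ

print(review_tier(300, 950))  # None
print(review_tier(900, 950))  # eligible_for_strong_promotion
print(review_tier(900, 400))  # standard_review_evaluation
```

Note that a high UHQ score is ignored entirely when the page is not first classified as a review, mirroring the “broad identification first” ordering described above.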

3.5. Connecting Attributes to Broader Systems

These promotion and demotion confidence scores do not exist in a vacuum; they are designed to be consumed by other systems within the ranking pipeline.

- The productReviewPPromotePage score is a likely input for the QualityBoost twiddler. As a re-ranking function that “enhances quality signals,” QualityBoost is the logical mechanism to apply the promotion recommended by the confidence score, adjusting a high-quality review’s rank upward after the initial scoring from Mustang.

- The productReviewPDemoteSite score can be read as a modern, more targeted evolution of the principles established by the Panda algorithm update in 2011. Panda was designed to algorithmically assess site-level quality and reduce the rankings of low-quality sites as a whole. The principles of Panda continue to influence modern algorithms, and the productReviewPDemoteSite attribute can be understood as a direct descendant, applying a similar site-level quality assessment specifically to the product review vertical.

Table 1: Leaked Attributes Relevant to Product Review Evaluation

The following table consolidates the most critical attributes from the Content Warehouse API leak that pertain to the evaluation of product reviews, providing their source module, technical description, and an expert interpretation of their likely function within the ranking ecosystem.

Section 4: The Ecosystem of Trust: Correlating Review Signals with Author and Site Authority

The productReviewPPromotePage and productReviewPDemoteSite confidence scores are not calculated based on a single factor. They are the output of a machine learning model that ingests a wide array of signals to determine quality. The leak provides strong evidence for several key inputs into this model, revealing an interconnected ecosystem of trust signals.

4.1. siteAuthority as a Foundational Multiplier

For years, Google representatives publicly denied the existence of a “domain authority” metric. The leak unequivocally confirms a feature named siteAuthority exists within the critical CompressedQualitySignals module.

This attribute likely serves as a foundational signal in the review evaluation model. A high confidence score for productReviewPPromotePage on a domain with a low siteAuthority score would logically carry less weight than the same score on a domain with a high siteAuthority. The overall authority of the site provides essential context for the credibility of its individual review pages.
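A minimal sketch of the multiplier idea, assuming both values are normalised to the range 0 to 1. Neither siteAuthority’s real scale nor the way it interacts with review confidences is documented in the leak; simple multiplication is one plausible choice, not a confirmed formula.

```python
# Hypothetical weighting: the same page-level promotion confidence counts for more
# on a high-authority domain. Both inputs are assumed to be normalised to [0, 1].
def weighted_promotion(promote_page_conf: float, site_authority: float) -> float:
    if not (0.0 <= promote_page_conf <= 1.0 and 0.0 <= site_authority <= 1.0):
        raise ValueError("inputs must be normalised to [0, 1]")
    return promote_page_conf * site_authority

low_authority = weighted_promotion(0.9, 0.2)   # same review, weaker domain
high_authority = weighted_promotion(0.9, 0.8)  # same review, stronger domain
print(low_authority < high_authority)  # True
```

With identical page-level confidence, the page on the higher-authority domain receives four times the weighted score in this example.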

4.2. The Author Entity: From isAuthor to authorReputationScore

The concept of E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) is not merely a guideline for human raters; it is being algorithmically quantified. The leak reveals that Google explicitly stores author information and has a feature to determine if an entity mentioned on a page is also the author of that page (isAuthor). Furthermore, modules such as WebrefMentionRatings contain an authorReputationScore, a direct measure of an author’s authority.

These author-entity signals are almost certainly primary inputs into the product review quality model. A review written by an author with a high authorReputationScore has a significantly higher probability of being classified as “promotion-worthy” than an anonymous review or one from an unknown entity.

4.3. Content-Level Features: Originality and Effort

Google’s public guidelines for the Reviews System heavily penalise “thin content that simply summarises a range of products” and reward “in-depth analysis and original research”.

The leak reveals the mechanical underpinnings of this evaluation through attributes like OriginalContentScore and, most notably, contentEffort.

The contentEffort attribute, found within the QualityNsrPQData module, is described as an “LLM-based effort estimation for article pages”, providing a direct, algorithmic measure of the human labour and resources invested in creating a piece of content. Together with OriginalContentScore, it gives Google a quantitative handle on both the originality of a review and the work behind it.

A low OriginalContentScore would be a strong feature indicating that a review is derivative, making the page a prime candidate for demotion and, where the pattern repeats across the site, a contributor to a high productReviewPDemoteSite confidence score.

4.4. Foundational Relevance: The T* Topicality System

Before any quality assessment can occur, a document must first be deemed relevant to a user’s query.

Testimony from the US DOJ v. Google antitrust trial revealed that this fundamental, query-dependent relevance is computed by a formal, engineered system designated as T* (Topicality). This system serves as a “base score” that answers the foundational question: How relevant is this document to the specific terms used in this search query?

The T* score is composed of three core signals, internally referred to as the “ABC signals”:

- A – Anchors: This signal is derived from the anchor text of hyperlinks pointing to the target document. Testimony from Google’s Vice President of Search, Pandu Nayak, confirmed that anchor text provides “a very valuable clue in deciding what the target page is relevant to.” This confirms the enduring importance of what other websites say about a page as a powerful signal of its topic.

- B – Body: This is the most traditional information retrieval signal, based on the presence and prominence of the query terms within the text content of the document itself. Nayak testified that “the most basic and in some ways the most important signal is the words on the page,” emphasising that what a document “says about itself” is “actually kind of crucial” for establishing its topicality.

- C – Clicks: This signal was one of the most significant confirmations from the trial, derived directly from aggregated user behaviour. Specifically, it incorporates metrics like “how long a user stayed at a particular linked page before bouncing back to the SERP” (dwell time). The inclusion of a direct user engagement metric at this foundational level of relevance scoring underscores the centrality of user feedback to Google’s core ranking logic.

However, testimony also revealed Google’s caution against using raw click data as a proxy for quality. An internal evaluation found that “people tend to click on lower-quality, less-authoritative content” disproportionately.

As one witness warned, “If we were guided too much by clicks, our results would be of a lower quality than we’re targeting.” This indicates that while user behaviour is a crucial input for relevance, it is carefully balanced against other quality and authority signals to prevent the promotion of clickbait over genuinely trustworthy content.
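One way to picture the “ABC” composition, and the caution about clicks, is a weighted sum in which the click signal is capped. The weights, the 0 to 1 normalisation and the cap are assumptions made for illustration; the testimony describes the three inputs, not a formula.

```python
# Hypothetical T*-style topicality score from the "ABC" signals (each in [0, 1]).
# Weights and the click cap are invented; the cap illustrates the testimony that
# being guided too much by clicks would pull results toward lower quality.
WEIGHTS = {"anchors": 0.3, "body": 0.5, "clicks": 0.2}
CLICK_CAP = 0.6  # clicks can help relevance, but only up to a ceiling

def topicality(anchors: float, body: float, clicks: float) -> float:
    capped_clicks = min(clicks, CLICK_CAP)
    return (WEIGHTS["anchors"] * anchors
            + WEIGHTS["body"] * body
            + WEIGHTS["clicks"] * capped_clicks)

# A clickbait page with weak anchor and body relevance vs a well-matched page.
clickbait = topicality(anchors=0.2, body=0.3, clicks=1.0)
relevant = topicality(anchors=0.7, body=0.9, clicks=0.5)
print(clickbait < relevant)  # True
```

Here the clickbait page attracts far more clicks, but because the click contribution is capped and lightly weighted, it cannot overcome weak anchor and body relevance (0.33 vs 0.76).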

4.5. Topical Authority as a Trust Signal: siteFocusScore and siteRadius

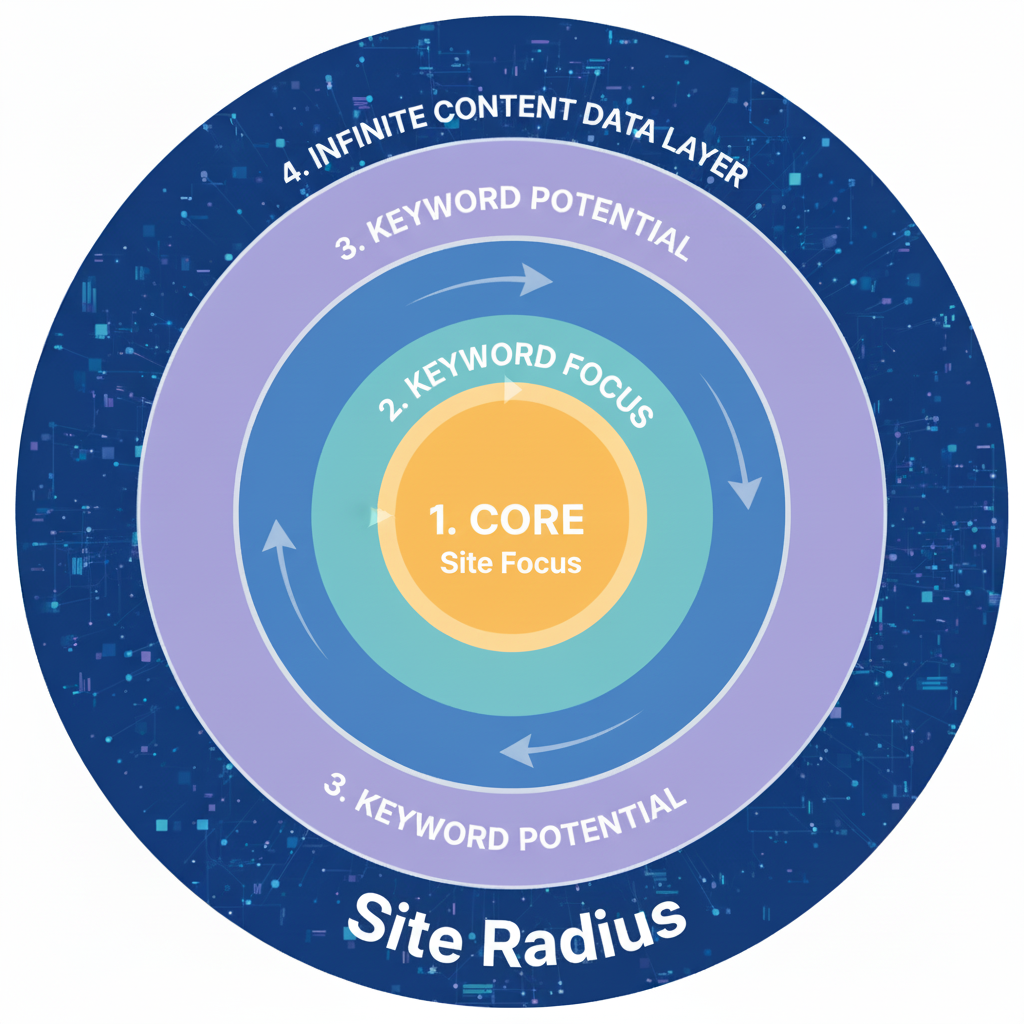

The credibility of a review is also influenced by its context. The leak details attributes like siteFocusScore, which measures how dedicated a site is to a single topic, and siteRadius, which measures how far a specific page’s topic deviates from the site’s central theme.

A product review for a high-performance graphics card published on a website with a high siteFocusScore for computer hardware is inherently more trustworthy than the same review appearing on a general lifestyle blog.

This topical alignment – or Topical Authority as some SEOs define it – is a powerful signal of expertise and authority, and it is likely another key feature used by the review quality model.
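The two concepts can be approximated with topic embeddings. This sketch is an assumption throughout: it treats the site as the centroid of its pages’ vectors, defines siteRadius as a page’s cosine distance from that centroid, and defines siteFocusScore as the average similarity of the site’s pages to it. The three-dimensional “topic” vectors are toy data.

```python
import math
from typing import List, Sequence

# Hypothetical definitions; Google's actual formulas are not in the leak.
def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def centroid(vectors: List[Sequence[float]]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def site_radius(page: Sequence[float], site_pages: List[Sequence[float]]) -> float:
    """Assumed siteRadius: cosine distance of a page from the site's centroid."""
    return 1.0 - cosine(page, centroid(site_pages))

def site_focus(site_pages: List[Sequence[float]]) -> float:
    """Assumed siteFocusScore: mean similarity of the site's pages to its centroid."""
    c = centroid(site_pages)
    return sum(cosine(p, c) for p in site_pages) / len(site_pages)

# Toy topic vectors: [hardware, lifestyle, food].
hardware_site = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0], [1.0, 0.0, 0.0]]
lifestyle_blog = [[0.1, 0.5, 0.4], [0.0, 0.2, 0.8], [0.9, 0.1, 0.0]]
gpu_review = [0.95, 0.05, 0.0]

print(site_focus(hardware_site) > site_focus(lifestyle_blog))  # True
print(site_radius(gpu_review, hardware_site)
      < site_radius(gpu_review, lifestyle_blog))  # True
```

The same graphics card review sits close to the centre of the hardware site but far from the centre of the lifestyle blog, which is the intuition behind the example in the text.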

4.6. Site-Wide Health and Consistency Signals

Beyond topicality, the leak points to attributes that assess the overall user experience and quality consistency of a site.

The clutterScore, for instance, is a site-level signal that penalises sites with a large number of distracting or annoying resources, such as aggressive ads or pop-ups that obscure the main content. This aligns directly with the Search Quality Rater Guidelines, which instruct human raters to assign low ratings to pages where the user experience is obstructed.

Furthermore, the siteQualityStddev attribute measures the standard deviation of page quality ratings across an entire site. The existence of this metric implies that Google values consistency; a site with uniformly high-quality pages may be scored more favourably than a site with a volatile mix of excellent and very poor pages, reinforcing the need for site-wide quality control.
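A short illustration of why a standard deviation captures consistency: two hypothetical sites with the same average page quality can differ sharply in spread. The scores are invented, and the choice of population standard deviation is an assumption; the leak names siteQualityStddev without defining its inputs.

```python
import statistics

# Hypothetical page-quality scores (0..1) for two sites with the same mean (0.70).
consistent_site = [0.70, 0.72, 0.68, 0.71, 0.69]
volatile_site = [0.95, 0.40, 0.98, 0.35, 0.82]

for name, scores in [("consistent", consistent_site), ("volatile", volatile_site)]:
    mean = statistics.mean(scores)
    spread = statistics.pstdev(scores)  # population standard deviation
    print(f"{name}: mean={mean:.2f} stddev={spread:.3f}")
```

An average alone would rate both sites identically; only the spread reveals that the second site mixes excellent pages with very poor ones.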

Section 5: Bridging the Gap: Reconciling Leaked Data with Google’s Public Doctrine

5.1. E-E-A-T and the Reviews System: From Guideline to Attribute

The leak demonstrates that concepts like E-E-A-T and the principles of the Reviews System are not abstract ideals but quantifiable engineering problems.

The public guidelines describe the desired outcome – a helpful, trustworthy, expert-written review – while the leaked attributes describe the signals Google’s systems use to algorithmically measure a page’s progress toward that outcome.

Each component of these frameworks can be mapped to specific, measurable attributes that serve as inputs to a machine learning model:

- Experience & Expertise: Google’s advice to “demonstrate you are an expert” is measured via attributes like isAuthor and authorReputationScore. The contentEffort score, which is likely a core technical component of the Helpful Content Update (HCU), also serves as a powerful proxy for expertise.

- Authoritativeness: This is captured by site-level signals like siteAuthority and siteFocusScore.

- Trustworthiness: This is assessed through a variety of signals, including a site quality score known as Q-Star (Q*), confirmed in recent DOJ testimony, as well as attributes like OriginalContentScore. Ultimately, these algorithmic assessments are validated by real-world user behaviour data from NavBoost (goodClicks vs. badClicks).

- Original Research & Evidence: The guideline to “provide evidence such as visuals,” “share quantitative measurements,” and “cover comparable things” directly maps to increasing the OriginalContentScore and contentEffort score, distinguishing the content from “thin” summaries that can be easily replicated.

The productReviewPPromotePage and productReviewPDemoteSite confidence scores are the direct outputs of this complex calculation – an algorithmic assessment of a review’s overall quality and helpfulness.

5.2. The “Helpful Content” Philosophy vs. Site-Level Demotion

Similarly, the principles of Google’s Helpful Content system, which was designed to demote sites with a high proportion of unhelpful, “search engine-first” content, are reflected in the leaked attributes.

The productReviewPDemoteSite attribute is the tangible, algorithmic manifestation of the Helpful Content system’s site-wide classifier, applied specifically to the product review vertical. A site that consistently publishes low-effort, summary-style reviews is creating unhelpful content, and this attribute is the mechanism that applies the corresponding site-wide ranking demotion.

5.3. Discrepancies and Clarifications

The most significant discrepancy highlighted by the leak is the chasm between Google’s public downplaying of specific technical ranking factors and the internal documentation of over 14,000 of them.

However, the leak does not necessarily invalidate Google’s public guidance. Instead, it supplies the technical “how” behind the public “what”: the guidelines describe the desired outcome, and the leaked attributes describe how Google’s systems measure a page’s progress toward it. The guidance and the mechanics are two sides of the same coin.

Section 6: The Algorithmic Manifestation of Google’s Reviews and Helpful Content Updates

6.1. A History of Quality-Focused Updates

Beginning with the first Product Reviews Update on 8 April 2021, Google initiated a series of algorithm updates collectively known as the “Reviews System.”

This initial rollout was followed by several more, including updates on 1 December 2021; 23 March 2022; 27 July 2022; 20 September 2022; 21 February 2023; 12 April 2023; and 8 November 2023.

According to Google’s documentation, the “reviews system aims to better reward high-quality reviews, which is content that provides insightful analysis and original research and is written by experts or enthusiasts who know the topic well.”

The system’s goal is to “ensure that people see reviews that share in-depth research, rather than thin content that simply summarises a bunch of products, services or other things.”

In parallel, August 2022 saw the introduction of the broader “Helpful Content System” and the Helpful Content Update; the system was later incorporated into Google’s core ranking systems with the March 2024 core update. The leaked attributes provide a direct look at the machinery built to achieve these goals.

6.2. From Public Guideline to Leaked Attribute

The public guidelines for the Reviews System ask creators to produce content that provides “insightful analysis and original research” and is “written by experts or enthusiasts who know the topic well.” The Content Warehouse API leak reveals how these qualitative concepts are translated into quantifiable signals:

- “Insightful analysis and original research” is algorithmically measured by attributes like contentEffort, which uses an LLM to estimate the human labour invested in a page, and OriginalContentScore, which quantifies uniqueness.

- “Written by experts or enthusiasts” is quantified through author-entity signals like isAuthor and authorReputationScore, which algorithmically assess the credibility of the content’s creator.

- “Provide evidence…such as visuals” and first-hand experience are direct ways to increase the OriginalContentScore and contentEffort score, distinguishing a review from a simple summary.

6.3. The Page vs. Site Dichotomy Confirmed

Crucially, Google’s documentation for the Reviews System states that “the reviews system primarily evaluates review content on a page-level basis. However, for sites that have a substantial amount of review content, any content within a site might be evaluated by the system.” This two-tiered evaluation is perfectly mirrored in the leaked attributes.

The productReviewPPromotePage attribute represents the page-level promotion for individual high-quality reviews, while the productReviewPDemoteSite attribute is the algorithmic implementation of the site-wide signal, applied when a critical mass of low-quality review content is detected.

6.4. Convergence with the Helpful Content System (HCS)

The philosophy and mechanics of the Reviews System’s site-wide signal are a direct parallel to the broader Helpful Content System.

The HCS was introduced as a site-wide classifier designed to identify and demote sites with a high proportion of unhelpful, “search engine-first” content. The productReviewPDemoteSite attribute can be understood as the tangible, algorithmic manifestation of the HCS philosophy, but specifically calibrated and applied to the product review vertical.

This demonstrates a consistent, site-level approach to quality control across Google’s ranking systems, where systemic low-quality content is penalised at the domain level.

Section 7: Mapping Public Guidance to Leaked Attributes

The following table maps the qualitative questions from Google’s public guidance on “how to write high-quality reviews” to the specific, measurable attributes revealed in the Content Warehouse API leak.

This provides a direct translation from Google’s advice to its algorithmic implementation (although naturally, my own logical inference was used in places).

Section 8: Strategic Imperatives for Review and Affiliate Site SEO

The insights gleaned from the Content Warehouse API leak necessitate a recalibration of SEO strategies for any site heavily reliant on product reviews or affiliate content.

The following strategic imperatives are derived directly from the analysis of the leaked attributes and their function within the broader ranking ecosystem.

8.1. The Future is Entity-First: Investing in Author and Brand

The confirmation of attributes like siteAuthority, isAuthor, and authorReputationScore signals a definitive shift toward an entity-centric evaluation model. Optimisation must now extend beyond the page to the entities responsible for the content.

- Actionable Strategy: Prioritise the development of author and brand entities. For authors, this involves creating comprehensive author biography pages, ensuring consistent bylines across all content, and actively seeking mentions and links to these author profiles from other reputable sites to build the authorReputationScore. For brands, this means executing strategies that encourage branded search queries and build real-world authority to positively influence the siteAuthority score.

8.2. Weaponising Originality: Moving Beyond “Thin Affiliate” Content

The existence of an OriginalContentScore and the demotion signals for low-quality reviews represent a direct assault on “thin affiliate” content. The experience of specialised review sites like HouseFresh, which reported a 91% drop in search traffic after being outranked by large media publications (though I think they have since recovered somewhat), serves as a stark warning that expertise alone may not be enough if the content is not perceived as uniquely valuable.

- Actionable Strategy: Invest heavily in creating unique assets that cannot be easily replicated. This includes proprietary testing data, high-quality original photography and videography of products in use, and in-depth quantitative analysis. These elements directly increase the “effort score” and OriginalContentScore, providing the strongest possible defence against being classified as low-quality and triggering a demotion.

8.3. Managing the Site-Wide Quality Threshold

The productReviewPDemoteSite attribute confirms that low-quality review pages are not merely underperforming assets; they are an active liability that can suppress the performance of an entire domain.

- Actionable Strategy: Implement a rigorous and recurring content auditing process. SEOs must be prepared to aggressively prune, de-index, or substantially overhaul outdated, thin, or low-value review content. The primary goal is to keep the overall proportion of unhelpful content on the site below the algorithmic threshold that triggers the site-wide demotion signal.
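A content audit can start from a simple script like the one below. It is a hypothetical helper: the quality scores are whatever your own team assigns during review (not a Google metric), and both the 0.5 cut-off and the 20% site threshold are invented, because Google has not published any threshold for the site-wide signal.

```python
from typing import Dict, List, Tuple

# Hypothetical audit helper; the scores, cut-off and threshold are assumptions.
UNHELPFUL_CUTOFF = 0.5   # pages scored below this by your own review are flagged
SITE_THRESHOLD = 0.20    # invented stand-in for an unpublished site-wide threshold

def audit(pages: Dict[str, float]) -> Tuple[float, List[str]]:
    """Return the share of unhelpful pages and the URLs to prune or overhaul, worst first."""
    flagged = sorted((url for url, q in pages.items() if q < UNHELPFUL_CUTOFF),
                     key=lambda url: pages[url])
    return len(flagged) / len(pages), flagged

pages = {"/best-laptops": 0.85, "/laptop-x-review": 0.90, "/top-10-mice": 0.30,
         "/keyboard-roundup": 0.45, "/monitor-review": 0.75}
share, to_fix = audit(pages)
print(f"{share:.0%} unhelpful; over threshold: {share > SITE_THRESHOLD}; fix: {to_fix}")
```

Sorting the flagged URLs worst-first gives a natural order for pruning, de-indexing or rewriting.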

8.4. Optimising for User Engagement as a Quality Proxy

The NavBoost system and its reliance on click metrics (goodClicks, lastLongestClicks) function as a real-world, large-scale user testing panel that validates algorithmic quality assessments. A page that algorithms deem high-quality but users reject will not maintain its rankings.

- Actionable Strategy: Fully integrate Conversion Rate Optimisation (CRO) and User Experience (UX) principles into the SEO workflow. Content should be structured for maximum readability and engagement. This includes using clear headings, tables of contents for long articles, compelling visuals, and interactive elements to increase user dwell time and minimise “pogo-sticking” (users returning to the SERP to choose another result). Generating positive NavBoost signals is essential for reinforcing and sustaining high rankings.
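To make the click vocabulary concrete, here is a sketch that labels the clicks in a single search session. The leak names goodClicks, badClicks and lastLongestClicks but does not define them; the dwell-time cut-offs, the return-to-SERP rule and the reading of “last longest click” as the session’s final click that was not abandoned are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical click labelling; the 30 s and 120 s cut-offs are invented.
SHORT_DWELL_S = 30
LONG_DWELL_S = 120

@dataclass
class Click:
    url: str
    dwell_seconds: float
    returned_to_serp: bool   # did the user come back and pick another result?

def summarise(session: List[Click]) -> Dict[str, object]:
    good = [c.url for c in session
            if not c.returned_to_serp or c.dwell_seconds >= LONG_DWELL_S]
    bad = [c.url for c in session
           if c.returned_to_serp and c.dwell_seconds < SHORT_DWELL_S]
    # Assumed reading of lastLongestClicks: the final click, if the user stayed.
    last = session[-1].url if session and not session[-1].returned_to_serp else None
    return {"goodClicks": good, "badClicks": bad, "lastLongestClick": last}

# A pogo-sticking visit to a thin review, then a satisfied visit to an in-depth one.
session = [Click("thin-review.example", 8, True),
           Click("in-depth-review.example", 240, False)]
print(summarise(session))
```

In this toy session the thin review earns a bad click and the in-depth review both a good click and the final, satisfying click, which is the pattern the strategy above aims to produce.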

8.5. Technical SEO for Review Sites Post-Leak

While the core revelations are strategic, technical execution remains critical for ensuring that Google’s systems can correctly interpret the signals being sent.

- Actionable Strategy: Maintain impeccable technical hygiene for all review-related signals. This includes ensuring date consistency across the on-page byline, the URL structure, and structured data to send clear signals to the bylineDate, syntacticDate, and semanticDate attributes. Furthermore, robust and valid implementation of Review and AggregateRating schema is crucial for clearly communicating a page’s purpose to Google’s classifiers, allowing them to apply the correct evaluation models in the first place.
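As a practical illustration, the sketch below builds Review and AggregateRating markup as JSON-LD and checks that the byline date, the URL path and the structured data agree. The schema.org types and properties are real; the product, author, dates and the consistency rule are hypothetical, and how these sources map onto bylineDate, syntacticDate and semanticDate is an inference, not something the leak spells out.

```python
import json
from datetime import date

# Hypothetical page data; the schema.org types (Product, Review, Rating,
# AggregateRating, Person) are real, the values are invented.
byline_date = date(2025, 3, 14)                   # date shown in the visible byline
url_path = "/reviews/2025/03/acme-air-purifier/"  # hypothetical URL with year/month

review_jsonld = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Acme Air Purifier",
    "review": {
        "@type": "Review",
        "author": {"@type": "Person", "name": "Jane Doe"},
        "datePublished": byline_date.isoformat(),
        "reviewRating": {"@type": "Rating", "ratingValue": 4.5, "bestRating": 5},
    },
    "aggregateRating": {"@type": "AggregateRating", "ratingValue": 4.3, "reviewCount": 128},
}

def dates_consistent(byline: date, path: str, jsonld: dict) -> bool:
    """Check that the URL's year/month and the structured-data date match the byline."""
    in_url = f"/{byline.year}/{byline.month:02d}/" in path
    in_schema = jsonld["review"]["datePublished"] == byline.isoformat()
    return in_url and in_schema

print(json.dumps(review_jsonld, indent=2))
print(dates_consistent(byline_date, url_path, review_jsonld))  # True
```

A check like this can run in a build or publishing pipeline so that an edited byline never drifts out of sync with the URL or the markup.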

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research. I have over 20 years’ experience writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited and verified as correct by me (and is under constant development). See my AI policy.

Disclaimer: Any article (like this) dealing with the Google Content Data Warehouse leak is going to use a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything from the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2025.