Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research (and ChatGPT 5 for image generation). I have over 20 years writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited, and verified as correct by me (and is under constant development). See my AI policy.

Disclaimer: This is not official. It is theory. Any article (like this) dealing with the Google Content Data Warehouse leak requires a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything for the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2025 – and that purpose is to build high-quality websites. Feedback and corrections welcome.

There’s a reason Google tells you to “Avoid repeated or boilerplate text in elements,” explaining that “It’s important to have distinct text that describes the content of the page”.

They’ve also explicitly advised us to “Make it clear which text is the main title for the page,” and to ensure it “stands out as being the most prominent on the page (for example, using a larger font, putting the title text in the first element)”.

The systems and attributes revealed in the Google Content Warehouse leak are not a new set of rules; they are the technical enforcement mechanisms for the very principles Google has been transparent about all along.

Top Ten Takeaways

- Google’s “Algorithm” is a Myth: The ranking process is not a single algorithm but a suite of interconnected, specialised systems like Goldmine (for titles), Radish (for featured snippets), and NavBoost (for user behaviour re-ranking).

- Your <title> Tag is Just a Suggestion: Google’s “Goldmine” system treats your HTML title as just one candidate among many, scoring it against alternatives sourced from your headings (<h1>), internal links, and body content.

- User Behaviour is the Ultimate Arbiter: The “NavBoost” system is a powerful ranking factor that analyses 13 months of user click data (goodClicks, badClicks, lastLongestClicks) to promote or demote pages. A poor user experience directly impacts rankings.

- Publisher Input is Inherently Distrusted: Google’s systems are designed to actively source and test alternatives for the content you provide, from titles to meta descriptions.

- AI Acts as a Quality Editor: Systems like BlockBERT and SnippetBrain perform deep semantic analysis, evaluating content for linguistic quality and coherence, not just keyword matching.

- Technical Precision is Non-Negotiable: The systems include specific penalty flags for common SEO mistakes, such as keyword stuffing (dupTokens), boilerplate text (goldmineHasBoilerplateInTitle), and elements that are too long (isTruncated).

- “Signal Coherence” is the Core Strategy: The most effective way to influence the system is to ensure your title, H1, URL, and introductory content all send a consistent, harmonised message about the page’s topic.

- Visual Prominence is a Measured Signal: The existence of an avgTermWeight attribute, which measures the average font size of terms, is concrete proof that making your headings and key phrases visually stand out is a quantifiable signal.

- The Systems Create a Feedback Loop: A low-quality title selected by Goldmine can lead to poor user clicks, which feeds negative data into the powerful NavBoost system, which can then demote your page’s core ranking.

- Satisfying Users is the Only Viable Strategy: The complexity and interconnectedness of these systems make them impossible to “trick.” The only sustainable, long-term strategy is to focus entirely on creating the best and most satisfying experience for the user.

Introduction: A Rare Glimpse Inside the Black Box

For over two decades, the inner workings of Google’s ranking systems have been a black box, understood only through observation, testing, and interpretation of public guidelines. That changed in 2024.

The accidental leak of internal documentation for Google’s Content Warehouse API provided a once-in-a-generation look at the engineering blueprints of search. This was not another algorithm update to be reverse-engineered from the outside; it was a look at the system’s architecture from the inside.

While analysing this trove of data, a previously unknown system came into focus, a system codenamed “Goldmine”.

There is virtually no public news or official documentation about this engine; its existence and function were revealed only through the complex data structures in those leaked files.

I first discovered mentions of it while reviewing the leaked content warehouse document in 2024. I wondered what it was at the time, but it wasn’t immediately obvious. It wasn’t until recent investigations into more obvious systems that I stumbled across Goldmine again, and I thought… what is that?

My article is an investigative deconstruction of the Goldmine system.

Based on a deep analysis of the leaked documentation, it will define what Goldmine is, explain its multi-stage evaluation process, and translate that technical knowledge into a new strategic framework for professional SEO.

Section 1: Defining Goldmine: Google’s Universal Quality Judge

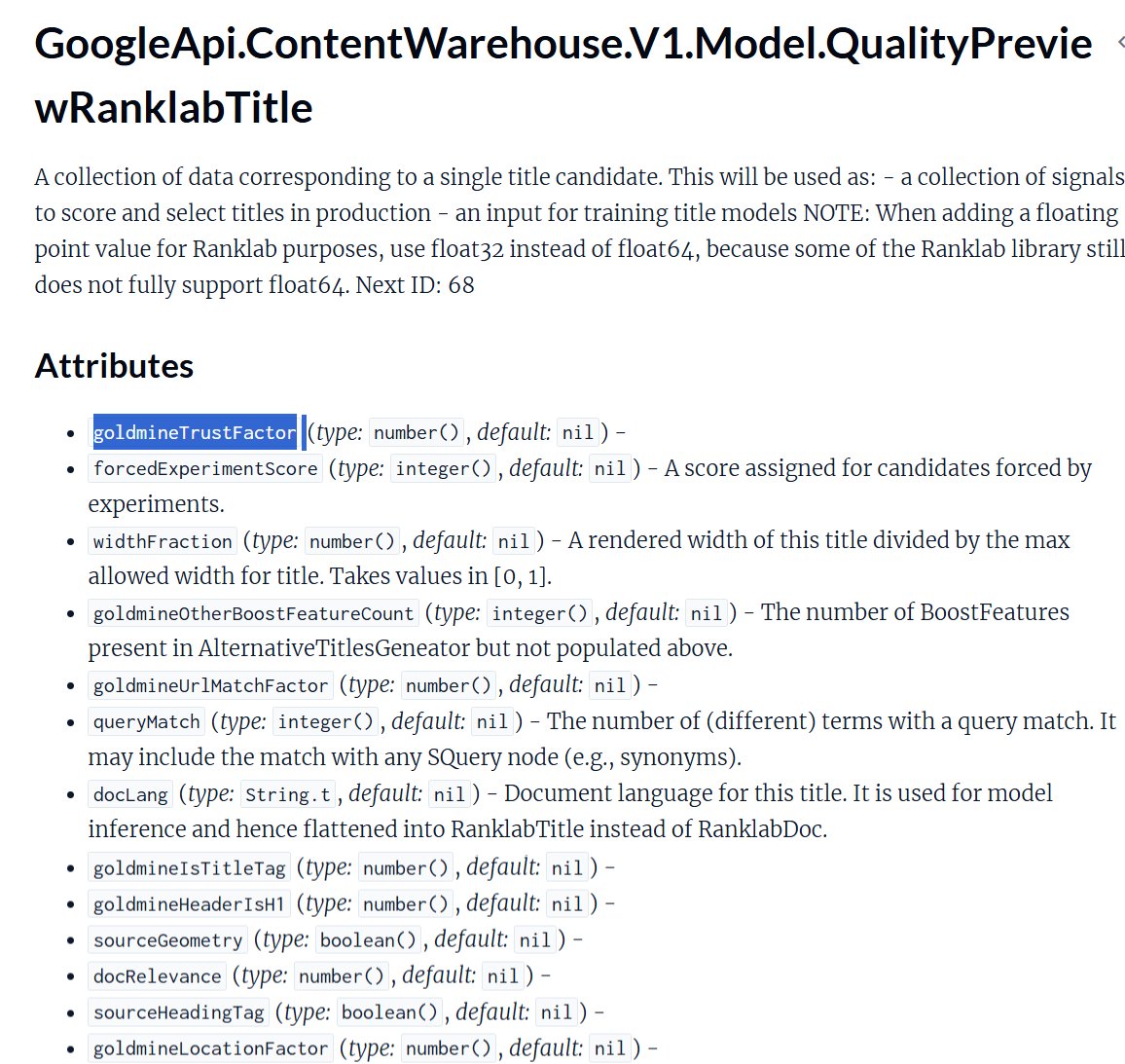

At its core, the Goldmine system is a sophisticated, component-based scoring engine.

The technical documentation suggests its internal name is AlternativeTitlesAnnotator, and its primary function is to ingest a collection of text candidates for a SERP element – such as a page title – and compute a quantitative score of its quality.

This process is built on a foundational philosophy: the signal provided by a website publisher, such as the text within a <title> tag, is not inherently trustworthy.

It is treated as just one candidate among many.

To find the “best” element to display, the system must create a competitive environment where the publisher’s suggestion is tested against alternatives extracted from the page itself and from across the web.

However, a deeper analysis of the system’s components reveals a much broader purpose.

The factors Goldmine uses to score a title are not all title-specific.

Attributes like goldmineBodyFactor (measuring relevance to the page’s content), goldmineUrlMatchFactor (measuring alignment with the URL), and goldmineTrustFactor (measuring trustworthiness) are generic quality signals that could just as easily be applied to scoring a descriptive snippet, an image’s alt text, or a product description.

This modular design is not theoretical.

The existence of a parallel module named QualityPreviewRanklabSnippet confirms it.

This parallel system reveals the exact same evaluation pattern, using its own set of specialised systems to perform a similar multi-stage evaluation for the descriptive text shown on the SERP. Analysis of these systems shows they are codenamed Muppet, which can pull text from anywhere on a page for a snippet; SnippetBrain, which is responsible for rewriting titles and snippets; and Radish, which is connected to the generation of Featured Snippets.

The QualityPreviewSnippetRadishFeatures model details Radish’s process, showing it calculates an answerScore for passages based on their similarity to historical, user-approved navboostQuery data. Further evidence reveals multiple scoring models that work together.

The QualityPreviewSnippetBrainFeatures model confirms SnippetBrain has its own modelScore and triggers SERP bolding.

The QualityPreviewSnippetDocumentFeatures model details document-related scores like metaBoostScore.

Complementing this, the QualityPreviewSnippetQueryFeatures model details query-related scores, including a radishScore derived from the Radish system’s analysis and a passageembedScore for deep semantic relevance.

Finally, the QualityPreviewChosenSnippetInfo model shows the output of this entire process, logging the final chosen snippet’s source and flagging it for issues like isVulgar or truncation.

This modular approach extends far beyond titles and snippets, confirming that Google’s evaluation process is not a single algorithm but a suite of specialised engines.

Other confirmed parallel modules include:

- Product Review Systems: A dedicated module assesses the quality of product review content, using specific signals like productReviewPPromotePage and productReviewPUhqPage (Ultra High Quality) to reward in-depth analysis and expertise.

- Technical Quality Systems: Specific models like IndexingMobileVoltCoreWebVitals are used to store and action Core Web Vitals data for ranking changes, acting as a specialised technical evaluation engine.

- Real-Time SERP Interaction Systems: A system codenamed Glue works alongside NavBoost to monitor real-time user interactions (like hovers and scrolls) with non-traditional SERP features such as knowledge panels and image carousels, helping to rank these elements.

- Spam Detection Systems: The overarching SpamBrain system operates as a major parallel engine focused entirely on identifying and neutralising spam, as evidenced by attributes like spambrainData and scamness.

The existence of these diverse systems confirms that Goldmine is not an anomaly but a prime example of a universal and scalable architecture for quantifying the quality of any content element Google presents to a user.

Therefore, understanding how Goldmine evaluates one element provides a blueprint for how Google likely evaluates all content on the SERP.

Section 2: Under the Hood: The Multi-Stage Goldmine Evaluation Pipeline

The process by which Goldmine selects a winning SERP element can be understood as a rigorous, multi-stage evaluation. Each stage is designed to filter candidates based on increasingly sophisticated criteria, from basic relevance to deep semantic understanding and, finally, to proven performance with a live human audience.

Stage 1: Sourcing the Candidates

The process begins by gathering a diverse pool of applicants, ensuring the system is not limited to a single, publisher-provided option. The leaked documentation reveals the specific sources for these candidates through a series of boolean flags:

- sourceTitleTag: The primary candidate, sourced directly from the HTML <title> element.

- sourceHeadingTag: Candidates extracted from on-page heading elements like <h1> and <h2>. A specific feature, goldmineHeaderIsH1, confirms that the main <h1> heading is given special weight.

- sourceOnsiteAnchor and sourceOffdomainAnchor: Candidates sourced from the anchor text of both internal links within the same site and external links from other domains.

- sourceGeneratedTitle: A final fallback, indicating a title that was algorithmically generated by Google’s systems when all other signals were deemed to be of low quality.

This sourcing mechanism confirms a long-held but difficult-to-prove SEO hypothesis: a site’s internal linking strategy and its external backlink profile are direct inputs into how its pages are represented on the SERP.

Stage 2: The AI Editor and Semantic Analysis (BlockBERT)

Promising candidates from the initial pool are then passed to an advanced AI for a deeper linguistic review.

The evidence for this stage lies in the goldmineAdjustedScore attribute, which the documentation describes as the initial score with “additional scoring adjustments applied. Currently includes Blockbert scoring”.

External academic research confirms that BlockBERT is a specialised, efficient variant of the well-known BERT language model.

It is specifically designed to assess long-form content and understand context with less computational power than its predecessors. The goldmineBlockbertFactor represents the score from this model’s assessment.

This stage moves the evaluation beyond simple keyword matching. BlockBERT assesses semantic coherence, contextual relevance, and natural language, allowing the system to easily distinguish a well-structured, human-readable string from a spammy, keyword-stuffed one.

Stage 3: The Final Arbiter – Real-World Human Behaviour (NavBoost)

The final and most decisive stage of the evaluation is a performance review based on real-world user data.

The goldmineNavboostFactor attribute is the definitive proof that user click behaviour directly influences which SERP element is ultimately chosen and displayed. This factor connects the entire Goldmine scoring process to the NavBoost system, a powerful re-ranking mechanism first revealed during the U.S. Department of Justice antitrust trial.

NavBoost analyses a vast history of user click data to measure signals of satisfaction. The leak confirms that Goldmine is influenced by these nuanced signals, which include:

- goodClicks: Clicks that are followed by a long dwell time, indicating the user found the content valuable.

- badClicks: Clicks that result in a user quickly returning to the SERP (a behaviour known as “pogo-sticking“), signalling dissatisfaction.

- lastLongestClicks: An exceptionally strong positive signal that identifies the final result a user clicks on and stays on, suggesting the search journey has been successfully completed.

This pipeline – moving from static document features to semantic analysis and finally being weighted by historical user behaviour – is the structure of a classic predictive model.

The goal is not merely to score the quality of existing text but to use all available features to predict a future outcome: which candidate is most likely to generate “good clicks” and satisfy user intent.

Section 3: The Penalty Box: How Goldmine Influences SERP Snippets and Core Rankings

The Goldmine system is not only designed to find the best candidate but also to actively identify, flag, and penalise the worst. This is not simply a matter of visual presentation on the SERP; it is a critical mechanism that can indirectly lead to core ranking penalties.

The process works in two steps:

Step 1: The Direct, SERP-Level Penalty

The foundation for this penalty system is found within the DocProperties model, a core data container for every document. This model includes a simple boolean flag, badTitle, which acts as a high-level ‘on/off’ switch for a “missing or meaningless title.“

For a more granular analysis, the documentation also reveals a specific data model called DocProperties BadTitleInfo, designed to score poorly constructed elements. When Goldmine encounters a low-quality candidate, such as a title with boilerplate text, the goldmineHasBoilerplateInTitle attribute applies a direct penalty to that specific candidate’s score.

Other penalty attributes include dupTokens for keyword stuffingand isTruncated for elements that are too long to display properly.

In the first instance, this penalty is purely at the SERP construction level.

The system effectively says, “This publisher-provided <title> tag is low-quality; I will penalise its score so it loses the competition. I will instead choose a better candidate, like the <h1>.” The immediate consequence is visual: your intended snippet isn’t shown.

Step 2: The Indirect, Core Ranking Impact

This is where the true power of the system becomes clear. The DocProperties model, which contains the raw inputs, confirms the existence of a data pipeline where its information is passed downstream to core scoring systems. The Goldmine system acts as a crucial pre-filter in this pipeline for the powerful NavBoost re-ranking system. NavBoost relies on clean user click data to function correctly.

By penalising a bad element, Goldmine forces a different one to be displayed on the SERP. This new element is now subjected to a live A/B test with real users.

The click behaviour on this new element – whether it generates “good clicks” or “bad clicks” – is fed directly back into the NavBoost system via the goldmineNavboostFactor.

Since NavBoost is a powerful system that can boost or demote rankings based on user interaction signals, the performance of that replacement snippet can now directly impact your page’s actual ranking.

In this light, Goldmine’s penalty system is not merely punitive; it is a critical data hygiene mechanism. It removes “pollutants” like spammy or boilerplate text from the SERP before they can corrupt the user feedback loop that influences core rankings.

Section 4: Strategic Implications: Optimising for a Goldmine-Driven World

This technical deconstruction of the Goldmine system demands a significant evolution in SEO strategy. Optimising for a world where Goldmine is the judge requires moving beyond generic advice and adopting a more holistic and evidence-based approach. The following strategies are derived directly from the system’s architecture.

Strategy 1: Engineer “Signal Coherence”

The candidate sourcing process and the relevance factors (goldmineBodyFactor, goldmineUrlMatchFactor) reward deep consistency. The primary strategic goal should be to make your intended SERP elements the undeniable, mathematically superior candidates. This is achieved by engineering “signal coherence” across all relevant page elements. The HTML <title> tag, the meta description, the main <h1> headline, the URL slug, the introductory paragraph, and the anchor text of internal links pointing to the page must all send a consistent, harmonised message about the page’s core topic. Furthermore, the DocProperties model contains an avgTermWeight attribute, which quantifies the “average weighted font size of a term in the doc body.” This is concrete evidence that visual prominence is a measured signal. Therefore, signal coherence extends beyond the text itself to its presentation; ensuring key terms and headings are visually prominent reinforces their importance to the system. This leaves no room for algorithmic ambiguity.

Strategy 2: Optimise for the “Satisfied Click”

The outsized importance of the goldmineNavboostFactor confirms that SERP snippets are ultimately judged by their performance with real users. This does not mean writing clickbait. It means crafting a snippet that makes a precise and accurate promise, and then ensuring the on-page experience immediately and comprehensively delivers on that promise. The goal is to win the lastLongestClicks by fully resolving the user’s intent, leading them to end their search journey on that page. This requires a deep understanding of user psychology and a ruthless commitment to matching the promise of the snippet to the value of the content.

Strategy 3: Master Technical Precision to Avoid Automatic Disqualification

The existence of specific penalty factors like isTruncated, dupTokens, and goldmineHasBoilerplateInTitle confirms that technical rules are enforced with direct scoring demotions. This makes technical precision a prerequisite for competition. SEOs must eliminate keyword repetition, enforce uniqueness across pages, and strictly manage the length of SERP-facing elements to avoid automatic penalties. Adhering to these technical constraints is not about optimisation; it is about ensuring that a high-quality, user-focused element is even eligible to be fairly judged by the system.

Conclusion: My Inference on Google’s Unified SERP Philosophy

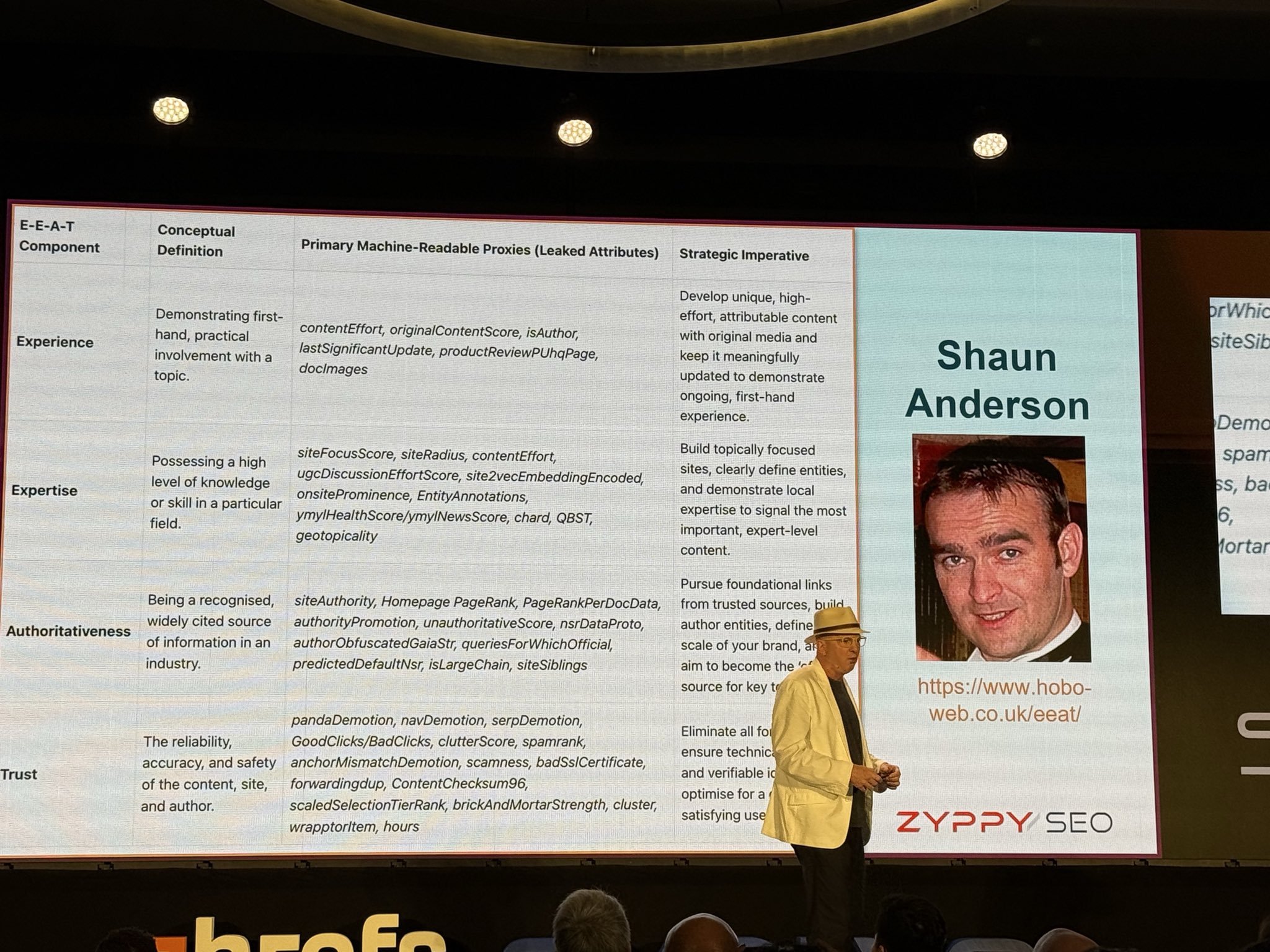

The parallel structures of the RanklabTitle (powered by Goldmine) and RanklabSnippet modules are the most profound revelation from this particular leak.

They expose a core, unified Google philosophy for SERP construction. It’s not about optimising isolated HTML tags; it’s about winning a holistic, internal competition for every single piece of information shown to a user.

My inference is that Google has created a scalable “quality evaluation pattern” that it applies universally. This pattern reveals that:

- Publisher input is inherently distrusted: The <title> tag and <meta description> are treated as just one candidate among many.

- Alternatives are actively sourced: Google scrapes the entire document—headings, body text, links—for better options.

- AI provides quality control: Systems like BlockBERT and SnippetBrain perform deep semantic and linguistic analysis, acting as automated quality editors.

- User behaviour is the ultimate arbiter: The NavBoost system, fuelled by real user clicks, is the final and most heavily weighted judge.

The increasing complexity of these systems paradoxically makes high-level SEO strategy simpler and more predictable.

In the past, SEO often involved finding loopholes in rule-based systems. Today, systems like Goldmine are impossible to “trick”.

The only viable, long-term strategy is to stop trying to game the system and instead focus entirely on the system’s ultimate goal: satisfying user intent. As I have said in the past, the core purpose of modern, “white hat” SEO is simply to “build high-quality websites“.

In this modern era of search, which I see as a “Human-AI Symbiosis,” our job is not to deceive algorithms.

It is to use our uniquely human skills – empathy, strategic thinking, and clear communication – to create the unambiguous signals of quality that Google’s increasingly specialised AI systems are designed to find and reward.

The following tables provide a comprehensive, attribute-by-attribute breakdown of the QualityPreviewRanklabTitle protocol buffer, broken into thematic groups.

Each entry includes the attribute’s name, its data type, and my expert interpretation of its role within the title selection ecosystem, along with the direct strategic implications for search engine optimisation.

Foundational Attributes: Core Data, Relevance & Formatting

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

This group contains the fundamental properties of the title candidate, including its text, validity, formatting, and its direct relationship to the user’s query.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| text | String | The literal text of the title candidate to be displayed. Populated for debugging and not used for model inference. | Provides a clear view of the final candidate text, including any modifications like truncation. |

| dataSourceType | String | A categorical label indicating the origin of the title candidate (e.g., “TITLE_TAG”, “HEADING_TAG”, “ON_SITE_ANCHOR”). | Crucial for understanding which source is generating the winning title, enabling targeted optimisation. |

| isValid | boolean | A simple flag to indicate if the title is valid (i.e., not empty). | A basic sanity check; empty titles are immediately disqualified. |

| isTruncated | boolean | A flag indicating whether the rendered title would be truncated on a standard SERP display. | A direct signal that titles exceeding the pixel width limit are identified and likely penalised. |

| hasSiteInfo | boolean | Indicates whether the title candidate includes site branding information (e.g., the site name). | Aligns with Google’s public guidance to brand titles concisely. A positive signal when used appropriately. |

| queryMatch | integer | The raw number of unique query terms that are present in the title candidate. | A fundamental relevance signal. Higher is generally better, but must be balanced against readability. |

| queryMatchFraction | number (float) | The number of matched query terms divided by the total number of terms in the query. A value in the range . | A normalised relevance signal that is more informative than the raw count; matching 2 of 2 query terms is more significant than matching 2 of 5. |

| widthFraction | number (float) | The rendered pixel width of the title divided by the maximum allowed pixel width. A value in the range . | A critical formatting signal. Values correspond to isTruncated = true. This is a direct input to the scoring model, not just a display-time adjustment. |

| docLang | String | The detected language of the document, used for model inference. | Ensures that the correct language-specific models (e.g., for readability, semantic analysis) are applied. |

Candidate Source Identification

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

These boolean flags identify the specific origin of each title candidate, revealing the diverse set of on-page and off-page elements Google uses to generate its pool of potential titles.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| sourceTitleTag | boolean | Flag indicating the candidate was sourced from the HTML <title> element. | Confirms the <title> tag is a primary, but not exclusive, source for candidates. |

| sourceHeadingTag | boolean | Flag indicating the candidate was sourced from an on-page heading element (e.g., <h1>, <h2>). | Validates the long-held SEO best practice of using descriptive headings; they are direct title inputs. |

| sourceOnsiteAnchor | boolean | Flag indicating the candidate was sourced from the anchor text of an internal link. | Reveals that a site’s internal linking strategy is a form of title optimisation. |

| sourceOndomainAnchor | boolean | Similar to sourceOnsiteAnchor, likely related to links within the same registered domain but potentially across subdomains. | Further emphasises the importance of a controlled, descriptive internal anchor text strategy. |

| sourceOffdomainAnchor | boolean | Flag indicating the candidate was sourced from the anchor text of an external backlink. | Confirms that how other sites link to a page can directly influence its title in the SERP. |

| sourceGeneratedTitle | boolean | Flag indicating the title was algorithmically generated by Google’s systems. | The final fallback when all other publisher-provided signals are deemed low quality. |

| sourceLocalTitle | boolean | Flag for titles sourced from local-specific signals, likely related to Google Business Profile or local intent queries. | Highlights a specialised title generation path for local SEO. |

| sourceTransliteratedTitle | boolean | Flag for titles that have been transliterated from one script to another. | Shows the system’s capability to adapt titles for cross-lingual search contexts. |

| sourceGeometry | boolean | A flag likely related to the visual prominence or position of the source text on the rendered page. | Suggests that visual hierarchy (e.g., text size, position) influences which text is chosen as a title candidate. |

The Goldmine Scoring System: Component Factors & Scores

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

This is the core of the evaluation engine. These attributes represent the individual scoring components (Factors) and the final composite scores that determine a title candidate’s quality and ranking.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| goldminePageScore | number (float) | The initial composite score computed by the “Goldmine” system (AlternativeTitlesAnnotator). | Represents the baseline quality assessment based on a combination of on-page and source-related factors. |

| goldmineAdjustedScore | number (float) | The refined score after applying additional adjustments, explicitly including “Blockbert scoring.” | The final quality score after advanced semantic analysis, likely a key determinant in the ranking of candidates. |

| goldmineTitleTagFactor | number (float) | A score component derived specifically from the quality of the <title> tag source. | Measures the intrinsic quality of the publisher’s intended title. |

| goldmineBodyFactor | number (float) | A score component based on the title’s relevance to the page’s main body content. | Measures how well the title represents the content, preventing title-content mismatch. |

| goldmineAnchorFactor | number (float) | A score component derived from supporting anchor text signals. | Quantifies the strength of endorsement from internal and external links. |

| goldmineHeadingFactor | number (float) | A score component derived from supporting heading tag signals. | Quantifies the on-page structural support for the title candidate. |

| goldmineOgTitleFactor | number (float) | A score component derived from the Open Graph title tag (og:title). | Confirms that social sharing metadata is consumed and used as a signal for title generation. |

| goldmineSitenameFactor | number (float) | A score component related to the presence and quality of the site name in the title. | Measures the effectiveness of the branding component of the title. |

| goldmineNavboostFactor | number (float) | A score component derived from the NavBoost system, reflecting user click behavior. | The direct link between historical user engagement and title selection. A title that performs well with users gets a higher score. |

| goldmineBlockbertFactor | number (float) | A score component from the BlockBERT model, assessing semantic quality. | Measures the linguistic merit and contextual relevance of the title using an advanced language model. |

| goldmineReadabilityScore | number (float) | A score assessing the readability of the title text. | A direct signal that clear, easy-to-understand language is preferred over complex or convoluted phrasing. |

| goldmineGeometryFactor | number (float) | A score related to sourceGeometry, likely quantifying the visual prominence of the source text. | A numerical feature representing the importance of visual hierarchy in title candidate selection. |

| goldmineLocationFactor | number (float) | A score related to the title’s relevance for location-specific queries. | A key feature for local SEO, boosting titles that effectively communicate local relevance. |

| goldmineSalientTermFactor | number (float) | A score based on the presence of the most important or “salient” terms from the document. | A more sophisticated relevance measure than simple keyword matching, focusing on core topical terms. |

| goldmineUrlMatchFactor | number (float) | A score measuring the alignment between the title text and the terms in the URL string. | Rewards descriptive URLs and penalizes mismatches between the URL and the title. |

| goldmineTrustFactor | number (float) | A score reflecting the trustworthiness of the title candidate or its source. | A high-level signal that could be influenced by site-wide authority or other trust metrics. |

On-Page Quality & Demotion Signals

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

These attributes are used to identify and penalise low-quality title characteristics, such as keyword stuffing, boilerplate text, and language mismatches.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| dupTokens | integer | The number of duplicated tokens in the title. For “dog cat cat cat”, dupTokens would be 2. | A direct, quantifiable measure of keyword stuffing within the title itself. A strong negative signal. |

| goldmineIsBadTitle | number (float) | A score indicating the title is of low quality, likely an aggregate of negative signals. | A key demotion feature that the system aims to minimize. |

| goldmineHasBoilerplateInTitle | number (float) | A score that detects repeated, non-informative, or “boilerplate” text across multiple titles on a site. | The direct technical implementation of Google’s public warning against boilerplate titles. |

| goldmineForeign | number (float) | A score indicating a language mismatch between the title and the document content. | A negative signal that penalizes titles that may mislead users about the page’s language. |

| goldmineOnPage-DemotionFactor | number (float) | A general on-page demotion score that can negatively impact the title’s overall score. | A penalty applied if the page itself has quality issues, linking title quality to overall page quality. |

Semantic Relationship & Numerical Flags

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

This group includes attributes that measure the semantic overlap between the title and other on-page content, as well as numerical features that provide more granular input for the machine learning models.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| goldmineIsTitleTag | number (float) | A numerical representation of sourceTitleTag, likely used as a feature in the ML model. | Allows the model to learn a specific weight or bias for titles originating from the official tag. |

| goldmineIsHeadingTag | number (float) | A numerical representation of sourceHeadingTag. | Allows the model to learn a weight for heading-sourced titles. |

| goldmineHeaderIsH1 | number (float) | A specific numerical feature indicating if a heading-sourced title came from an <h1> tag. | Confirms that <h1> tags are treated as a distinct and likely more important signal than other headings. |

| goldmineAnchorSupportOnly | number (float) | A signal that may indicate the title candidate is only supported by anchor text and lacks other on-page signals. | Could be a neutral or slightly negative feature, indicating a potential lack of on-page relevance. |

| goldmineHasTitleNgram | number (float) | A signal likely related to the presence of common or expected n-grams for the page’s topic. | Could be used to measure topical alignment or identify spammy, irrelevant n-grams. |

| goldmineIsTruncated | number (float) | A numerical representation of isTruncated, providing a feature for the ML model. | Allows the model to learn a specific penalty for titles that would be truncated. |

| goldmineSubHeading | number (float) | A signal possibly related to whether the title is sourced from a subheading (<h2>, <h3>, etc.) rather than a main heading. | Allows the model to differentiate between main titles and subordinate headings. |

| percentTokens-CoveredByBodyTitle | number (float) | The percentage of tokens in the title candidate that are also present in a “body title” (likely the main on-page heading). | Measures the overlap between a candidate (e.g., from anchor text) and the page’s primary headline. |

| percentBodyTitleTokensCovered | number (float) | The percentage of tokens from the “body title” that are covered by the current title candidate. | The inverse of the above, measuring how comprehensively the candidate represents the main headline. |

Experimentation & Internal Ranking Data

Note. Any strategic SEO implication is a work of logical inference. Use caution and think critically.

These attributes are used within Google’s Ranklab for A/B testing, model training, and analysing the performance of different title candidates against a baseline.

| Attribute Name | Data Type | Expert Interpretation & Role | Strategic SEO Implication |

| baseRank | integer | The ranking index of this candidate in a baseline or control group. | Used for experimentation and A/B testing within Ranklab. |

| testRank | integer | The ranking index of this candidate in the test group. | Used for experimentation and A/B testing within Ranklab. |

| baseGoldmineFinalScore | number (float) | The goldmine_final_score value from the baseline group. | Used for experimentation and A/B testing within Ranklab. |

| testGoldmineFinalScore | number (float) | The goldmine_final_score value from the test group. | Used for experimentation and A/B testing within Ranklab. |

| perTypeRank | integer | The rank of this title among all candidates of the same dataSourceType. | Allows for analysis of the best “HEADING_TAG” title vs. the best “TITLE_TAG” title, etc. |

| perTypeQuality | String | A qualitative label (e.g., “GOOD”, “BAD”) for the title within its source type. | A categorical feature for model training and analysis. |

| forcedExperimentScore | integer | A score assigned to candidates that are being forced into SERPs for experimental purposes. | A clear indicator of live A/B testing of title variations. |

| goldmineOther-BoostFeatureCount | integer | The count of other internal boost features not explicitly listed here. | Acknowledges that this protobuf is a subset of a larger, more complex system of signals. |

| docRelevance | number (float) | A general relevance score of the document to the query context. | Provides overall context for the title evaluation; a good title for an irrelevant document is still not useful. |

| goldmineFinalScore | number (float) | A deprecated score, now superseded by goldminePageScore. | Historical attribute, shows the evolution of the scoring system. |

| queryRelevance | number (float) | A deprecated experimental feature related to query relevance. | Historical attribute. |

Interesting Related Attributes

The following is a list of other technical attributes related to the snippet and title evaluation systems that were present in the leaked documentation.

From QualityPreviewSnippetDocumentFeatures (Document-related snippet scores):

- experimentalTitleSalientTermsScore

- leadingtextDistanceScore

- salientPositionBoostScore

- unstableTokensScore

From QualityPreviewChosenSnippetInfo (Information about the final chosen snippet):

- leadingTextType

- snippetHtml

- snippetType

- tidbits

From QualityPreviewSnippetQueryFeatures (Query-related snippet scores):

- experimentalQueryTitleScore

- queryHasPassageembedEmbeddings

- queryScore

From QualityPreviewSnippetRadishFeatures (Scores from the “Radish” Featured Snippet system):

- passageCoverage

- passageType

- queryPassageIdx

- similarityMethod

- similarityScore

- snippetCoverage

Read next

Read my article that Cyrus Shephard so gracefully highlighted at AHREF Evolve 2025 conference: E-E-A-T Decoded: Google’s Experience, Expertise, Authoritativeness, and Trust.

The fastest way to contact me is through X (formerly Twitter). This is the only channel I have notifications turned on for. If I didn’t do that, it would be impossible to operate. I endeavour to view all emails by the end of the day, UK time. LinkedIn is checked every few days. Please note that Facebook messages are checked much less frequently. I also have a Bluesky account.

You can also contact me directly by email.