Disclaimer: This is not official. Any article (like this) dealing with the Google Content Data Warehouse leak requires a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything for the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2026 – and that purpose is to build high-quality websites. Feedback and corrections welcome. First published on: 10 October 2025.

E-E-A-T, an acronym for Experience, Expertise, Authoritativeness, and Trust, serves as a conceptual model for what Google deems valuable to its users and is used by its human Quality Raters to evaluate search results. In this article, you will find out exactly what that means.

1.1 The Guiding Philosophy of Content Quality

Understand that E-E-A-T itself is not a direct, machine-readable ranking factor. Rather, it represents the desired outcome – the ‘product specification’ – that guides the development and refinement of Google’s complex ranking algorithms.

It is the human-centric ideal that Google’s automated systems are engineered to approximate and reward at an immense scale.

This framework is particularly emphasised for ‘Your Money or Your Life’ (YMYL) topics, where the accuracy and trustworthiness of information can have a significant impact on a person’s health, finances, or safety.

Google Search is, based on my primary source investigations, a system of competing philosophies where E-E-A-T is Google’s doctrine codified. E-E-A-T. is not simply added to content or even to your site, although your website must describe itself accurately, where it is contextually relevant to users to do so (see Contextual SEO).

1.2 Bridging the Gap Between Human Raters and Machine Algorithms

The persistent challenge for Google’s engineers has been the translation of these abstract, human-judged qualities into concrete, quantifiable signals that an algorithm can process.

An algorithm cannot directly measure ‘trust’ or ‘expertise’ in the same way a human can. It must rely on machine-readable proxies – measurable data points that correlate strongly with these abstract concepts.

The leak of Google’s ‘Content API Warehouse’ documentation, corroborated by sworn testimony from the Department of Justice (DOJ) antitrust case, offers an unprecedented, albeit unofficial, view into the potential ‘engineering schematic’ designed to solve this very problem.

This article posits that the attributes detailed in the leaked documentation are not a random collection of data points but represent a deliberate and sophisticated attempt to mechanise the principles of E-E-A-T.

The existence of the Quality Rater Guidelines and the E-E-A-T framework can be seen as the initial brief given to Google’s ranking engineers.

These engineers are then tasked with finding scalable, algorithmic solutions to identify content that aligns with these values. Abstract concepts like ‘Authoritativeness’ must be converted into measurable inputs.

The leaked attributes, such as siteAuthority, GoodClicks, and siteFocusScore, appear to be precisely these inputs.

Therefore, the leak does not merely provide a list of potential ranking factors; it offers a reverse-engineered look at Google’s approach for translating its public-facing quality philosophy into scalable, operational code.

This analysis bridges the gap between Google’s stated intentions and its potential technical implementation.

1.3 Scope

This is a work of logical inference (inference optimisation), my own interpretations, and my own commentary and opinion. I urge you to think critically.

The scope is strictly confined to its detailed breakdown of the leaked Content API Warehouse attributes and its synthesis of relevant testimony from the Google DOJ antitrust trial.

Section II: The Data Foundation: A Synthesis of the API Leak and DOJ Testimony

2.1 The “Content API Warehouse” Leak: Key Architectural Features

The foundation of this analysis is the leaked documentation for an internal Google system, referred to as the ‘Content API Warehouse’.

This system appears to function as a central repository that stores and serves a vast array of features, or attributes, about websites and individual web documents.

The leak reveals that Google’s ranking process is not a single algorithm but a multi-stage pipeline that evaluates two fundamental questions, which correspond to two fundamental top-level ranking signals revealed in the trial: ‘Is this document trustworthy?’ (Authority, or Q*) and ‘Is this document relevant?’ (Relevance, or T*).

The process begins at indexing, where a system named SegIndexer places documents into quality-based tiers with names like ‘Base, Zeppelins, and Landfills‘.

A document’s scaledSelectionTierRank determines its position, which can effectively disqualify low-quality content before ranking even begins.

For documents in the main tiers, an initial retrieval and scoring stage is handled by a system named Mustang, which relies on a set of pre-computed, compressed quality signals stored in the CompressedQualitySignals module.

This preliminary scoring acts as a gatekeeper, determining which documents are worthy of more computationally expensive analysis by a powerful re-ranking layer known as ‘Twiddlers‘. These Twiddlers, such as the Navboost system, then modify the results based on more nuanced, often query-dependent factors. This entire pipeline operates on foundational data structures like the CompositeDoc (the master record for a URL) and the PerDocData model (the primary container for document-level signals).

Based on the analysis, the most salient features can be categorised as follows.

Site-Level Signals

These attributes assess a domain as a whole, providing a foundational context for every page within it.

- siteAuthority: This is described as a site-wide authority metric, likely functioning as a modern successor to the original PageRank concept. It is a single score that represents the overall perceived importance and authority of an entire domain, presumably derived from the web’s link graph and other signals.

- siteFocusScore: This feature appears to measure the topical specialisation of a website. A high score would indicate that a domain is highly focused on a specific niche, whereas a low score would suggest a broad, generalist site covering many disparate topics. This provides a quantifiable measure of a site’s thematic depth.

- hostAge: A straightforward but foundational signal, this attribute stores the date of the first crawl of a domain. It serves as a basic heuristic for longevity and stability, where older, more established domains may be treated differently than newly registered ones.

Content and Page-Level Signals

This category includes attributes that evaluate the content of a specific URL.

- originalContentScore: This score is designed to measure the originality of the content on a given page. It is a critical signal for distinguishing unique, valuable information from content that is syndicated, scraped, or largely derivative of other sources already in the index.

Author-Level Signals

The leak suggests a system-level recognition of authorship, treating authors as distinct entities.

- isAuthor: A feature that likely flags whether a page has a clearly identified author byline that Google’s systems can parse and recognise.

- author: A corresponding field designed to store the name of the identified author. Together, these attributes indicate that Google is not just indexing content but is actively identifying and tracking the creators of that content.

User Interaction and Click-Based Signals

A significant portion of the leaked data points to the importance of user behaviour, particularly data aggregated from Google’s Chrome browser.

- chromeInTotal: This attribute is described as a measure of a page’s popularity based on the total number of clicks from Chrome users. It represents a massive, direct signal of real-world user traffic and engagement with a specific URL.

2.2 Corroborating Evidence from the DOJ Antitrust Case

The data points from the API leak gain their full context when viewed alongside testimony from the DOJ antitrust case against Google.

This testimony revealed the existence and function of core ranking systems that likely consume the very data stored in the Content API Warehouse. At the highest level, the trial revealed two ‘fundamental top-level ranking signals’: Q* (Quality) and P* (Popularity).

Q* represents the static, query-independent authority of a site, while P* captures its dynamic, real-world popularity.

The Navboost System

Testimony unequivocally confirmed that Navboost is a critical ranking system that heavily relies on user click data to adjust search results.

It functions as the operational engine that translates raw user behaviour into a powerful ranking signal, acting as one of Google’s most important signals.

Crucially, in a direct contradiction of years of public statements, evidence confirmed that user interaction data collected from the Chrome browser is a direct input into Google’s popularity signals, feeding systems like NavBoost.

Defining User Satisfaction

The DOJ testimony provided qualitative meaning to the raw click data, revealing a nuanced interpretation of user intent. Google’s systems, including Navboost, do not simply count clicks; they classify them. Key concepts include:

- ‘Good clicks’ and ‘Bad clicks’: A ‘good click’ is one where the user clicks a result and does not immediately return to the search results page, implying their query was satisfied. A ‘bad click’, often associated with ‘pogo-sticking’, is when a user clicks a result and quickly returns to the SERP to choose another, signalling dissatisfaction.

- ‘Last longest clicks’: This is considered a particularly strong signal of satisfaction, where a user’s final click in a search session results in a long dwell time on the destination page.

This evidence establishes a clear and logical data flow.

The Chrome browser collects vast amounts of user interaction data.

This data is aggregated and processed, populating features like chromeInTotal and click-stream analyses within the Content API Warehouse.

Finally, ranking systems like Navboost consume this processed data, interpreting it as signals of user satisfaction or dissatisfaction, and use these signals to re-rank and refine search results. This creates a powerful feedback loop where real-world user engagement is not an indirect or secondary signal, but a primary, direct input into a core ranking system.

Section III: Mapping Leaked Attributes to the E-E-A-T Framework

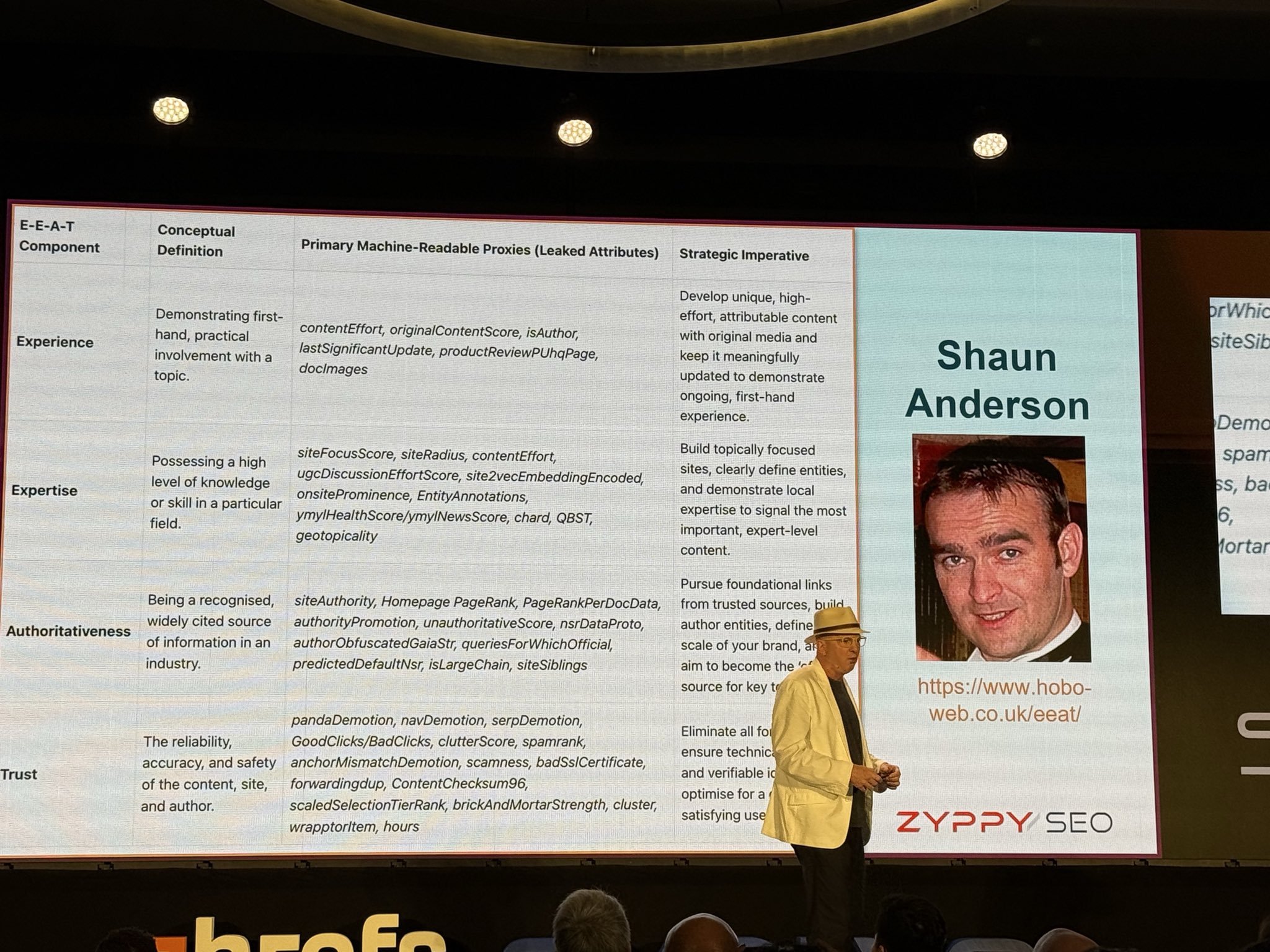

This section provides a systematic analysis of each component of the E-E-A-T framework, mapping the abstract concepts to the concrete, machine-readable proxies identified in the API leak and DOJ testimony. The following correlation matrix summarises these connections, which are then elaborated upon in the subsequent subsections.

Table 3.1: E-E-A-T Decoded

This is a work of logical inference by me. It is not official, so think critically. It is an attempt to map EEAT attributes to the leaked API documentation to clarify what white hat SEOs should be focused on to move the needle for clients.

| E-E-A-T Component | Conceptual Definition | Primary Machine-Readable Proxies (Leaked Attributes) | Strategic Imperative |

| Experience | Demonstrating first-hand, practical involvement with a topic. | contentEffort, originalContentScore, isAuthor, lastSignificantUpdate, productReviewPUhqPage, docImages | Develop unique, high-effort, attributable content with original media and keep it meaningfully updated to demonstrate ongoing, first-hand experience. |

| Expertise | Possessing a high level of knowledge or skill in a particular field. | siteFocusScore, siteRadius, contentEffort, ugcDiscussionEffortScore, site2vecEmbeddingEncoded, onsiteProminence, EntityAnnotations, ymylHealthScore/ymylNewsScore, chard, QBST, geotopicality | Build topically focused sites, clearly define entities, and demonstrate local expertise to signal the most important, expert-level content. |

| Authoritativeness | Being a recognised, widely cited source of information in an industry. | siteAuthority, Homepage PageRank, PageRankPerDocData, authorityPromotion, unauthoritativeScore, nsrDataProto, authorObfuscatedGaiaStr, queriesForWhichOfficial, predictedDefaultNsr, isLargeChain, siteSiblings | Pursue foundational links from trusted sources, build author entities, define the scale of your brand, and aim to become the ‘official’ source for key topics. |

| Trust | The reliability, accuracy, and safety of the content, site, and author. | pandaDemotion, navDemotion, serpDemotion, GoodClicks/BadClicks, clutterScore, spamrank, anchorMismatchDemotion, scamness, badSslCertificate, forwardingdup, ContentChecksum96, scaledSelectionTierRank, brickAndMortarStrength, cluster, wrapptorItem, hours | Eliminate all forms of spam, ensure technical soundness and verifiable identity, and optimise for a clean, satisfying user experience. |

![]() Rate My Page Quality: A prompt to perform a deep audit of a single URL, algorithmically estimating its

Rate My Page Quality: A prompt to perform a deep audit of a single URL, algorithmically estimating its contentEffort and E-E-A-T signals.

3.1 E – Experience

Experience, the newest addition to the E-E-A-T acronym, refers to the degree to which a content creator has first-hand, real-world experience with the topic they are discussing. For example, a review of a product written by someone who has actually purchased and used it demonstrates higher experience than a review that merely aggregates specifications from the manufacturer’s website.

The leaked attributes provide clear proxies for this concept.

- contentEffort: This attribute, described as an ‘LLM-based effort estimation’, is a direct attempt to quantify the human labour and resources invested in a piece of content. It serves as an anti-commoditisation metric by assessing the ‘ease with which a page could be replicated’, directly penalising generic AI-generated content.

- originalContentScore: A high score on this metric is a powerful indicator of experience. Content that is truly original is far more likely to contain unique insights, personal anecdotes, and details that can only come from direct involvement.

- isAuthor and author: These attributes are crucial for scaling the measurement of experience. By identifying content with a specific author, Google’s systems can track an individual’s entire body of work. If an author consistently produces high-effort, original content on a topic, the system can build a machine-readable profile of that author’s accumulated experience.

- lastSignificantUpdate: This signal is a critical revelation, indicating that Google can differentiate between minor edits and substantial content revisions. Regularly making meaningful updates to content is a powerful way to signal ongoing, first-hand experience with a topic, demonstrating that the creator’s knowledge is current.

- Multimedia Evidence (docImages): The CompositeDoc contains dedicated fields for storing detailed analyses of embedded images. Original, thematically relevant images serve as corroborating evidence of first-hand experience, reinforcing the textual content.

- Specialised Content Signals: For specific verticals, Google uses even more granular signals. The productReviewPUhqPage (Ultra High Quality Page) signal identifies exceptional product reviews that demonstrate deep, first-hand use and testing, directly rewarding demonstrable experience.

The strategic implication is a clear algorithmic disadvantage for anonymous, low-effort, or AI-generated content that lacks a unique perspective. The path to signalling ‘Experience’ involves creating a portfolio of demonstrably unique and helpful content that is clearly attributed to a consistent, recognisable author persona.

3.2 E – Expertise

Expertise relates to the depth of knowledge and skill a creator or website possesses in a specific field. While related to experience, expertise is more about demonstrable knowledge and credentials, whereas experience is about practical application. A university professor may have expertise in physics, while an astronaut has experience in spaceflight.

The leaked data provides several proxies for expertise.

- Topical Authority (siteFocusScore, siteRadius, site2vecEmbeddingEncoded): Google’s systems quantify expertise through a deep understanding of topics. siteFocusScore rewards specialisation. siteRadius measures how much a page deviates from that core topic, penalising content that strays from the site’s established expertise. site2vecEmbeddingEncoded creates a numerical representation of a site’s themes, allowing Google to mathematically measure topical coherence.

- Semantic Understanding (EntityAnnotations, QBST): The EntityAnnotations module identifies the specific ‘things’ a page is about. Content rich with relevant, clearly defined entities is recognised as more expert-level. This is complemented by the QBST (Query-based Salient Terms) system, a ‘memorisation system’ that identifies words that should appear prominently on relevant pages for a given query, setting an expectation for what an expert document should contain.

- Internal Signals of Importance (onsiteProminence): This attribute measures internal link equity by simulating user traffic flow. A site demonstrates its own expertise by using its internal linking structure to signal which pages it considers most important and authoritative on a topic.

- Effort as a Proxy (contentEffort, ugcDiscussionEffortScore): A high contentEffort score signals the investment of significant resources, a hallmark of an expert. Similarly, a high ugcDiscussionEffortScore indicates a community is a hub of expertise, fostering substantive conversations.

- YMYL Classifiers (ymylHealthScore, ymylNewsScore, chard): The existence of dedicated classifier scores for ‘Your Money or Your Life’ content, including the chard attribute which likely acts as an initial YMYL predictor, indicates that higher standards of expertise are being algorithmically measured for these sensitive topics.

- Local Expertise (geotopicality): This sophisticated concept suggests Google builds a topical profile for a geographic area itself. A business located within a zone known for a specific topic (e.g., a tailor on Savile Row) can inherit this ‘geo-topical’ authority, reinforcing its expertise in that niche.

Supporting signals would include a long history of publishing content with a high originalContentScore within that specific topical cluster, all associated with a consistent set of authors known for their work in that field. The strategic imperative is clear: niche sites possess a built-in, measurable advantage in signalling expertise.

3.3 A – Authoritativeness

Authoritativeness is about being a recognised, go-to source of information that others in the field cite and defer to. It is a measure of reputation and standing within a community or industry. The trial confirmed that Google measures this via a site-wide, query-independent Q* (Quality) score.

- Algorithmic Momentum (predictedDefaultNsr): At the heart of the authority system is a baseline quality score known as predictedDefaultNsr. Crucially, this is a VersionedFloatSignal, meaning Google maintains a historical record of this score over time. This creates ‘algorithmic momentum’, where a site with a long, consistent history of high-quality signals builds a positive trajectory that makes it more resilient and harder for competitors to dislodge.

- Holistic Site-Level Scores (siteAuthority, nsrDataProto): The siteAuthority metric is a holistic, site-wide quality score that blends a site’s link profile with content quality and user engagement. nsrDataProto (Normalised Site Rank) is a sophisticated algorithm for assessing a site’s overall reliability, which contributes to its authority. These are key inputs to the overall Q* score.

- Link-Based Authority (Homepage PageRank, PageRankPerDocData): The Homepage PageRank functions as a foundational authority signal for the entire domain. PageRankPerDocData confirms that link-based authority is still calculated and stored on a per-document basis.

- Author and Brand Signals (authorObfuscatedGaiaStr, queriesForWhichOfficial): The system directly measures author and brand authority. authorObfuscatedGaiaStr links content to specific author entities, allowing Google to track an author’s reputation. queriesForWhichOfficial is a powerful signal that stores the specific queries for which a page is considered the definitive ‘official’ result.

- Entity Scale and Scope (isLargeChain, siteSiblings): Google classifies businesses based on their operational model. The isLargeChain flag identifies a branch of a national brand, setting different authority expectations than for a small business. siteSiblings counts the number of related web properties, signalling a larger and potentially more authoritative brand footprint.

- Positive and Negative Modifiers (authorityPromotion, unauthoritativeScore): The system is not neutral; it actively rewards sites with an authorityPromotion boost and penalises others with a punitive unauthoritativeScore.

3.4 T – Trust

Trust is the capstone of the E-E-A-T framework. It is a foundational requirement built on technical soundness, content quality, and positive user validation. The leaked data reveals that Trust is eroded by a wide array of punitive, negative signals from systems like SpamBrain.

- Foundational Trust (Indexing Tiers): Trust begins at the indexing level. A document’s scaledSelectionTierRank determines its position in tiers like ‘Base‘ (high-quality) or ‘Landfills‘ (low-quality). Being relegated to the Landfills is the ultimate signal of a lack of trust, severely limiting a page’s ability to ever rank.

- Technical Trust (badSslCertificate, forwardingdup, ContentChecksum96): Trust begins with a technically sound foundation. A badSslCertificate is a direct negative trust indicator. Signals related to canonicalisation, such as forwardingdup (redirects) and ContentChecksum96 (duplicate content), are also trust factors; a site that provides clear, unambiguous signals is more trustworthy than one that is messy and duplicative.

- Trust through Content Quality (pandaDemotion): The pandaDemotion signal functions as a site-wide penalty for harboring low-quality, thin, or duplicate content. This acts as a trust signal; a site that fails to maintain its own content quality is not a trustworthy source of information. This penalty functions as a form of ‘algorithmic debt’ that suppresses the entire domain’s visibility until the low-quality content is remediated.

- User-Validated Trust (The Navboost/CRAPS Connection): User behaviour serves as the ultimate, dynamic measure of trust. The classification of GoodClicks versus BadClicks is a direct measure of user trust and satisfaction. Consistently negative user behaviour results in tangible penalties like navDemotion (for poor on-site experience) and serpDemotion (for poor performance on the results page).

- Trust through Page Experience (clutterScore): A poor user experience erodes trust. The clutterScore is a site-level signal designed to penalise sites with a large number of ‘distracting/annoying resources’. A high score indicates a user-hostile page layout, which is a direct negative trust signal.

- Absence of Spam Signals: A huge component of trust is the absence of manipulative tactics. A trustworthy site must avoid penalties from scores like scamness, KeywordStuffingScore, GibberishScore, spamrank (for linking out to spammy sites), and anchorMismatchDemotion (for manipulative linking).

- Trust through Real-World Verification (brickAndMortarStrength, cluster, wrapptorItem, hours): For local entities, the LocalWWWInfo module provides a powerful system for quantifying real-world trust. brickAndMortarStrength is a score for a business’s physical prominence. wrapptorItem and cluster are used for entity resolution, verifying a business’s identity by reconciling its Name, Address, and Phone (NAP) data from across the web. Accurate hours signal operational reliability.

The strategic implication is profound: trust is not a static quality. It is built on a foundation of technical security, validated by positive user behaviour, and, for local entities, cemented by verifiable proof of real-world legitimacy and dependability.

The four components of E-E-A-T are not evaluated in isolation. The algorithmic model they inform is an interconnected system where each element reinforces the others. A site can begin by building Expertise through a high siteFocusScore and a deep library of unique content (originalContentScore).

This high-quality content naturally attracts links from other reputable sources, which in turn builds siteAuthority (Authoritativeness). When this now-authoritative, expert site appears in search results, users are more likely to click and have a satisfying experience, generating the ‘good clicks’ that signal Trust to the Navboost system. If this content is written by a consistent author, it also signals Experience. This creates a virtuous cycle. Conversely, a weakness in one area, such as low siteAuthority, can suppress the visibility of even the most expert content, preventing it from ever reaching users and earning the trust signals it needs to rank. This reveals a holistic, interdependent system, not a simple checklist of factors.

Section IV: Strategic Implications: Re-evaluating Content and SEO Strategy in a Post-Leak World

4.1 The Shift from Page-Level to Entity-Level Optimisation

The evidence strongly suggests that a successful SEO strategy must evolve beyond optimising individual pages in isolation. The prominence of site-wide signals like siteAuthority and the confirmation of the Q* score necessitate a holistic, domain-level approach. A single, brilliantly written article on a low-authority, unfocused domain will inherently struggle to compete against content on a domain that has established strong signals of authority and expertise. The performance of every page is influenced by the context of the entire site it sits on.

Furthermore, the identification of author entities via authorObfuscatedGaiaStr signals a critical shift towards author-level optimisation. SEO strategy must now encompass building the reputation, topical relevance, and digital footprint of key authors. This involves ensuring consistent author bylines, creating comprehensive author bio pages, and encouraging authors to build their own authority on social and professional networks. The goal is to establish authors as recognisable entities whose credibility algorithmically transfers to the content they produce.

4.2 The Primacy of User Satisfaction: SERP UX as a Direct Ranking Input

The revelations about the NavBoost system—its 13-month data window, its analysis of ‘last longest click‘, and its use of Chrome browser data—elevate user satisfaction from a theoretical best practice to the most important, mechanically confirmed ranking input. Satisfying user intent is no longer an abstract goal; it is a direct and powerful signal fed into one of Google’s most important ranking systems.

Title tags, meta descriptions, favicons, and the strategic use of rich snippets (reviews, FAQs, etc.) must be crafted not just to attract a click, but to accurately set user expectations. A misleading title that generates a ‘bad click’ is now a direct negative ranking signal. This also means that post-click user experience is more critical than ever. Factors like page load speed, clear information architecture, mobile usability, and, most importantly, the immediate satisfaction of user intent are essential to secure a ‘good click’ and prevent the user from pogo-sticking back to the SERP.

4.3 A New Model for Content Auditing

These findings necessitate a new, more sophisticated model for performing content audits. The traditional focus on keywords and backlinks, while still relevant, is insufficient. A modern audit framework should be structured around these new, data-informed pillars:

- Auditing for Originality: Audits must systematically identify and address content with a potentially low originalContentScore. This involves using plagiarism and duplication checkers, but also qualitatively assessing content for unique insights, data, and perspectives. Thin, derivative, or syndicated content should be removed or substantially improved.

- Auditing for Focus: The audit process should include a rigorous analysis of the site’s information architecture to evaluate its contribution to siteFocusScore. Is the site attempting to be everything to everyone? Are content categories logically distinct and thematically tight? This may lead to strategic decisions to prune irrelevant content categories or restructure the site into more clearly defined topical hubs.

- Auditing for Authority: Backlink profile analysis must be viewed through the lens of building overall siteAuthority, not just passing link equity to specific pages. The audit should prioritise acquiring links from high-authority, topically relevant domains that bolster the entire site’s reputation.

- Auditing for User Trust: Audits must incorporate user behaviour and engagement metrics as direct proxies for the signals that likely feed Navboost. Analysing Google Search Console data for CTR by query, alongside analytics data for bounce rate, dwell time, and conversion rates, can help identify pages that are failing to satisfy user intent and are likely accumulating negative click signals.

- Auditing for Page Experience: Audits should now include checks for signals that contribute to a poor user experience, such as intrusive interstitials or a high clutterScore from excessive ads, which directly erode trust.

- Auditing for Algorithmic Debt: Content audits must also specifically look for and remediate the root causes of a potential pandaDemotion. This means proactively identifying and improving or removing thin, low-quality content to ‘pay down’ the algorithmic debt that may be suppressing the entire site’s performance.

- Auditing for Semantic Clarity: A modern audit should evaluate how well content is structured for machine understanding. This includes checking for the clear use of headings to define topics, the implementation of structured data to explicitly define entities for the EntityAnnotations process, and a logical internal linking strategy that reinforces topical relationships and boosts onsiteProminence.

This data suggests the existence of a potential algorithmic ‘glass ceiling’ for websites. A site’s siteAuthority and its placement in the indexing tiers (scaledSelectionTierRank) function as powerful gatekeepers. The concept of preliminary scoring within systems like Mustang means that for a highly competitive query, the system may first create a consideration set composed only of sites from the ‘Base‘ tier that exceed a certain quality threshold. A new, low-authority blog, even if it contains a world-class article, might be filtered out before its content is ever deeply evaluated or re-ranked by user-centric Twiddlers like Navboost. This implies that for new market entrants, the primary strategic goal is not simply to create great content, but to first break through this authority and trust threshold to even become eligible to compete.

Section V: Conclusion: The Mechanisation of Trust and Authority

5.1 Summarising the Evidence

The analysis of the Content API Warehouse leak, contextualised by testimony from the DOJ antitrust case, provides the most coherent and data-informed model to date for understanding how Google translates its abstract E-E-A-T framework into algorithmic reality.

The evidence demonstrates a strong correlation between each component of E-E-A-T and a corresponding set of machine-readable signals. Experience is proxied by content originality and meaningful updates. Expertise is measured by topical focus and semantic clarity.

Authoritativeness is quantified by a holistic, historically-aware ‘algorithmic momentum’ (predictedDefaultNsr) and a site-wide Q* score that is deeply intertwined with link-based signals and brand recognition. Most critically, Trust is not merely a technical checklist but is a dynamic quality, validated by user clicks which act as a gatekeeper for authority, and cemented by the absence of a wide range of spam signals.

5.2 The Future of Search: A Hybrid Model of Content, Links, and Clicks

The future of a successful SEO strategy lies not in focusing on any single pillar but in mastering the complex interplay between three core components.

First is the creation of genuinely unique, expert-level content that demonstrates first-hand experience (the E-E of E-E-A-T). Second is the building of a genuine, domain-wide reputation through links and brand recognition to establish authority (the A of E-E-A-T).

Third, and perhaps most importantly, is the relentless optimisation for user satisfaction to earn positive click signals, which serve as the ultimate, scalable measure of trust (the T of E-E-A-T). These three pillars are not independent; they exist in a continuous feedback loop where success in one area amplifies the others. This interconnectedness is best described as a ‘virtuous cycle of quality’: foundational authority allows content to be seen, high-quality content earns positive user signals, and those signals in turn reinforce the site’s foundational authority.

5.3 Final Word: From Speculation to Data-Informed Strategy

While this analysis is based on leaked, unofficial, and potentially outdated information, it provides an invaluable strategic framework.

It allows SEO professionals and content creators to move beyond speculating about abstract concepts like ‘quality’ and instead focus their efforts on optimising for the tangible signals that likely underpin them.

The key takeaway is that Google’s quest for quality is not just a philosophical stance; it is an engineering problem that the company has sought to solve with a sophisticated, multi-layered system that weighs who you are (siteAuthority, siteFocusScore), what you say (originalContentScore), and how users react to it (GoodClicks). Acknowledging and adapting to this data-informed model is essential for sustainable success in the modern search landscape.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research. I have over 20 years writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived ad edited verified as correct by me (and is under constant development). See my AI policy.