Disclaimer: This is not official. Any article (like this) dealing with the Google Content Data Warehouse leak requires a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything from the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2026, and that purpose is to build high-quality websites. This article was first published on 12 October 2025. Feedback and corrections welcome.

This will be my only article on the subject of clicks in Google Search.

I’ve gone to a certain depth, but the aim of the article is just to show how important user satisfaction is. I have stopped short of expanding in some areas (as I did with my link-building article), as none of my articles are designed for abusing the system.

They are here to direct a strategy based on satisfying users and increasing rankings, not hacking Google.

A Historical Note on “Made Up Crap”

Google representatives publicly and repeatedly denied that user click data was a significant ranking factor.

Analysts on the Google Search team, such as Gary Illyes, often described clicks as a “very noisy signal,” unsuitable for direct use due to potential manipulation.

In a particularly blunt dismissal of theories around dwell time and click-through rates (CTR), Illyes was quoted as saying:

“Dwell time, CTR, whatever Fishkin’s new theory is, those are generally made up crap. Search is much more simple than people think.“

I remember that comment at the time and thinking it stood out a little. Googlers don’t hate on folk in public, in my experience.

These denials fuelled a long-standing debate in the SEO community, largely because they flew in the face of public experiments that suggested the opposite.

The Content Warehouse leaks and the DOJ trial confirmed unequivocally that Google does use clicks when determining ranking.

But was Gary Illyes pulling the greatest one-liner in SEO history, an iconic, ironic joke that evidently just flew over everyone’s heads?

I think so.

Read on to find out.

Rand Fishkin’s Public Click Experiments

During his time at Moz, Rand Fishkin (now of SparkToro) conducted several famous public experiments to test the theory that a high volume of clicks could influence a page’s ranking.

In one test, he sent out a tweet asking his followers to search Google for “REDACTED” and click the link to his blog. According to his analysis, after about 175-250 people participated, the page “shot up to the #1 position.” Fishkin concluded, “This clearly indicates (that in this particular case at least) that click-through-rate significantly influences rankings.”

In a second, even more dramatic experiment, he asked his followers to search for “REDACTED” and click on the result for REDACTED, which was ranking at number ten.

Over a 2.5-hour period, 375 people clicked the link. The result, as Fishkin noted, was that the website “shot up from number ten to the number one spot on Google.”

Google’s public response to these tests was that they did not prove direct usage of clicks for ranking. In a discussion with Rand Fishkin, Google’s Andrey Lipattsev suggested that the temporary ranking change was likely due to other factors, guessing that “the burst of searches, social mentions, links, etc may throw Google off a bit and then they figure it out over time.“

This official stance – that clicks were too “noisy” and “gameable” for direct use – was maintained for years.

The Content Warehouse revelation adds a profound layer of irony to Illyes’s choice of words.

The documentation reveals a core ranking system module explicitly named “Craps,” which is defined as the system that processes “click and impression signals.”

The metrics it tracks – goodClicks, badClicks, and lastLongestClicks – are direct, quantifiable measures of user satisfaction that serve as sophisticated proxies for the very concepts of CTR and dwell time that were being derided.

The Meta Joke about Dwell Time and Clicks

“Dwell time, CTR, whatever Fishkin’s new theory is, those are generally made up crap. Search is much more simple than people think.” Gary Illyes, 2019 Reddit thread

Whether this was a deliberate, meta-textual joke – a hidden admission veiled in dismissive language – is impossible to know.

Regardless of intent, the coincidence is striking and serves as a perfect encapsulation of the dynamic between Google’s public relations and its internal engineering reality: the very “crap” being publicly derided was, in fact, a named and critical component of the internal ranking architecture – called Craps.

“Dwell time, CTR … crap”

Dwell time and CTR ARE the crap system: Craps.

I take my hat off to you, sir. The best one-liner from a Googler yet.

I digress.

A.J. Kohn on Clicks and Brands

A.J. Kohn, who runs the firm Blind Five Year Old, has been a long-time proponent of the importance of user signals.

In fact, it was his blog I remember reading for the first time about all this, and I’ve referenced it many times over the years. It was he who introduced me to clicks and pogosticking in 2008. A seminal SEO article, I have always thought. His thoughts always stuck with me.

Kohn frames the relationship between Google and SEO professionals as a high-stakes poker game, which explains the historical disconnect between the company’s public statements and its internal operations.

As A.J. Kohn stated, “We were playing a game of poker with Google, essentially, as SEOs. You know, when there are three suited cards when you hit the turn and I stare down Google and say, do you have a flush? I do not expect them to tell me the truth, right?” Kohn further explained, “Like they’re not going to tell me what’s in their hand because that’s, they’re trying to win“.

For a decade, A.J. Kohn’s original research has pointed to the use of what he calls “implicit user feedback” – a term for the user click data Google collects from search results.

Citing Google’s own foundational patents, Kohn has argued that they are “littered with references” to this concept.

His analysis of internal Google presentations, made public during the antitrust trials, provides direct confirmation.

One such presentation from 2017 states, “Yes, Google tracks all user interactions to better understand human value judgements on documents.“

Another from 2020 elaborates that the “basic game” is to start with a small amount of ‘ground truth’ data, then “look at all the associated user behaviors, and say, ‘Ah, this is what a user does with a good thing! This is what a user does with a bad thing!‘”

According to A.J. Kohn, this process is the very “source of Google’s magic.”

His thoughts have been validated.

I. Introduction: The CRAPS Protocol and the Primacy of User Clicks

The accidental publication of internal Google Content Warehouse API documentation in March 2024 provided an unprecedented look into the mechanics of modern search.

The leak provided a direct “blueprint” of the data structures that underpin Google’s ranking systems.

At the heart of this revelation is the QualityNavboostCrapsCrapsData protocol buffer, a module internally codenamed “Craps.”

This data structure is the engine that captures, aggregates, and transports the user interaction signals that fuel Google’s most powerful ranking systems.

It is the raw material from which user satisfaction is measured.

The “Craps” Codename

- “Craps”: The module QualityNavboostCrapsCrapsData is a system designed to aggregate and process user click and impression signals. While ‘Craps’ functions as an internal codename, it is also thought by some to be an acronym for ‘Click and Results Prediction System’. I do not believe there is official confirmation of this at this time. It fits.

- “The Dice Game Reference”: In the earliest version of the leak, I discovered that the accompanying notes on the crapsNewUrlSignals attribute (V2) say to “talk to dice-team”. This directly confirms there is some internal nod to the dice game in the Craps name. That has been changed in the later versions of the leaked code to “talk to craps-team” (V4).

II. Anatomy of a Click: A Deep Dive into the QualityNavboostCrapsCrapsData Protocol Buffer

The QualityNavboostCrapsCrapsData protocol buffer is the core data structure that captures the user interaction signals for the NavBoost system.

Understanding this data model is equivalent to understanding what Google values and how it quantifies user behaviour.

Purpose of the CrapsData Model

This protobuf serves as the container for click and impression data associated with a specific query-URL pair.

Each CrapsData message represents a summary of user interactions for one search result in the context of one search query, further segmented by various slices. It is not a log of a single user’s activity but rather an aggregation of many users’ interactions over time.

Data Aggregation and Slicing

The Craps system operates on “sliced” data.

The key attributes country, device, and language define the primary slices for which data is aggregated separately. This ensures that when evaluating a URL for a query from a mobile user in France, the system uses a CrapsData record containing aggregated clicks from other mobile users in France, not from desktop users in the United States.

The sliceTag attribute provides an additional layer of flexibility, allowing for the creation of arbitrary new slices, indicating a system designed for continuous experimentation and refinement.
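To make the idea of slicing concrete, here is a minimal Python sketch of how interactions might be rolled up into one record per query, URL, and slice. The class name CrapsRecord, the event format, and the outcome labels are my own assumptions for illustration. The leak documents field names, not the aggregation logic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CrapsRecord:
    """Hypothetical, simplified stand-in for one CrapsData message."""
    impressions: float = 0.0
    clicks: float = 0.0
    good_clicks: float = 0.0
    bad_clicks: float = 0.0
    last_longest_clicks: float = 0.0


def aggregate(events):
    """Roll individual interaction events up into one record per slice.

    Each event is a dict with query, url, country, language, device and an
    'outcome' of 'none', 'good', 'bad' or 'last_longest' (assumed labels).
    """
    records = defaultdict(CrapsRecord)
    for e in events:
        # The slice key: a French mobile click never lands in a US desktop record.
        key = (e["query"], e["url"], e["country"], e["language"], e["device"])
        rec = records[key]
        rec.impressions += 1
        if e["outcome"] == "none":
            continue  # shown in the SERP but not clicked
        rec.clicks += 1
        if e["outcome"] == "bad":
            rec.bad_clicks += 1
        else:
            rec.good_clicks += 1  # assumption: any non-bad click counts as good
        if e["outcome"] == "last_longest":
            rec.last_longest_clicks += 1
    return dict(records)
```

The point of the sketch is the key, not the counters: the same URL for the same query ends up with a separate record for every country, language, and device combination.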

The Evolution of Signal Processing

The structure of the CrapsData protobuf reveals a system in constant evolution.

It contains fields that are clearly marked as legacy (e.g., mobileData), fields that are currently in use (e.g., mobileSignals), and fields that are designated for future rollouts (e.g., squashed, unsquashed).

This evolutionary trail provides a unique window into the changing nature of Google’s signal processing. The planned transition from aggregated, “squashed” signals to more granular, “unsquashed” signals is particularly significant, suggesting a move towards more sophisticated machine learning models that can interpret raw user behaviour with greater nuance.

III. From Clicks to Context: Deconstructing the CrapsData Attributes

A granular, attribute-by-attribute analysis of the CrapsData model provides the most direct evidence of the specific user behaviours Google measures and values. The attributes can be grouped into several key categories.

Core Identifiers

These attributes form the primary key for each CRAPS data record, defining the specific context of the user interaction.

| Attribute Name | Data Type | Official Description | Analyst’s Interpretation & Strategic Significance |
| --- | --- | --- | --- |
| query | String.t | The query string. | The primary key for the data record, defining the user’s intent. All click signals are contextual to a specific query. |
| url | String.t | The URL of the document. | The second primary key, identifying the specific search result being evaluated. |

Foundational Click Metrics

This group contains the basic metrics that measure the volume of user interactions.

| Attribute Name | Data Type | Official Description | Analyst’s Interpretation & Strategic Significance |
| --- | --- | --- | --- |
| impressions | float() | The number of times the URL was shown in the SERP for the query. | Strategic Significance: This is the denominator for all click-through rate (CTR) calculations. A high number of clicks is meaningless without the context of impressions. It establishes the opportunity for a click to occur. |
| clicks | float() | The total number of clicks on the URL for the query. | Strategic Significance: The raw measure of user interest or “attractiveness” of a result’s title and snippet. While a foundational metric, it is a relatively noisy signal of true satisfaction. |
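The relationship between these two fields is simple arithmetic. A minimal sketch, where the function name is mine and nothing here is taken from Google's code:

```python
def click_through_rate(clicks: float, impressions: float) -> float:
    """CTR is clicks divided by impressions; zero impressions means no CTR."""
    if impressions <= 0:
        return 0.0
    return clicks / impressions


# The same 50 clicks mean very different things at different impression counts.
strong = click_through_rate(50, 100)     # half of all searchers clicked
weak = click_through_rate(50, 10_000)    # one in two hundred clicked
```

This is why raw click counts on their own say very little.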

User Satisfaction Signals

This is the most critical group of attributes, as they measure the quality and outcome of a click, moving beyond simple volume to interpret user satisfaction.

| Attribute Name | Data Type | Official Description | Analyst’s Interpretation & Strategic Significance |

| goodClicks | float() | Clicks that are not “bad” (i.e., not short clicks). | Strategic Significance: A primary positive signal. This metric filters out immediate bounces or “pogo-sticking,” indicating that the user dwelled on the page long enough to find at least some value. This is a much stronger indicator of user satisfaction than raw clicks. |

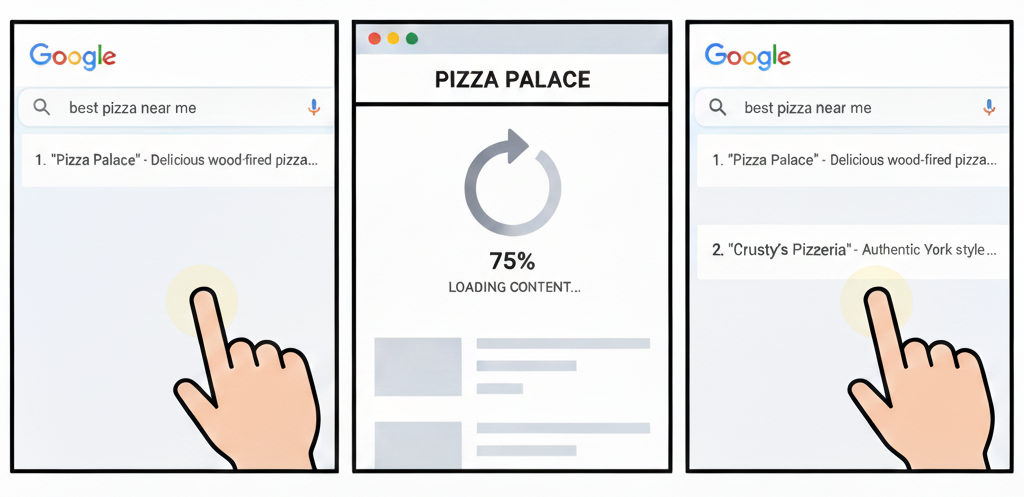

| badClicks | float() | Clicks where the user returns to the SERP very quickly. | Strategic Significance: A primary negative signal. This directly measures user dissatisfaction, a behaviour A.J. Kohn and others refer to as “pogo-sticking.” A high badClicks ratio will likely lead to demotion by NavBoost. |

| lastLongestClicks | float() | The number of clicks that were last and longest in related user queries. | Strategic Significance: This is arguably the most powerful positive signal. A.J. Kohn refers to this as a “long click,” signifying that the user’s search journey ended on this result, indicating complete task fulfilment. A high volume of lastLongestClicks suggests a URL is a definitive answer for a query. |
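If you want to think about these three counters as ratios, a rough sketch follows. The ratio names are my own framing. The leak lists the counters but does not say how, or whether, they are combined.

```python
def satisfaction_summary(clicks, good_clicks, bad_clicks, last_longest_clicks):
    """Express each click-quality counter as a share of total clicks."""
    if clicks <= 0:
        return {"good_ratio": 0.0, "bad_ratio": 0.0, "last_longest_ratio": 0.0}
    return {
        "good_ratio": good_clicks / clicks,
        "bad_ratio": bad_clicks / clicks,
        "last_longest_ratio": last_longest_clicks / clicks,
    }


# 200 clicks: 150 good, 50 bad, and 80 of them ended the search journey.
summary = satisfaction_summary(200, 150, 50, 80)
```

Two pages with identical click counts can look completely different once you split the clicks this way.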

Contextual and Slicing Attributes

These attributes allow the CRAPS system to segment data, ensuring that user behaviour is evaluated within the correct context.

| Attribute Name | Data Type | Official Description | Analyst’s Interpretation & Strategic Significance |
| --- | --- | --- | --- |
| country | String.t | The two-letter uppercase country slice of the CrapsData. | Strategic Significance: Confirms that user satisfaction is measured on a per-country basis. A page that performs well for a query in the “US” slice may perform poorly in the “GB” slice, and NavBoost will rank it accordingly in each region. |
| language | String.t | The language slice of the CrapsData. | Strategic Significance: Similar to country, this ensures that user signals are evaluated within the correct linguistic context. |
| device | CrapsDevice.t | The device interface and OS slice of the CrapsData. | Strategic Significance: A critical dimension. User intent and behaviour can vary dramatically between mobile and desktop. This allows NavBoost to rank results differently based on the device context. |

System, Spam, and Aggregation Attributes

This final group of attributes relates to how the data is processed, aggregated, and protected from manipulation.

| Attribute Name | Data Type | Official Description | Analyst’s Interpretation & Strategic Significance |
| --- | --- | --- | --- |
| unsquashed | CrapsClickSignals.t | To be used for a “retuning rollout.” | Strategic Significance: This field, along with squashed, points to a fundamental shift in signal processing. “Unsquashed” suggests raw, less-aggregated data is being used, likely to feed more advanced machine learning models that can find nuanced patterns beyond simple heuristics like “good” or “bad” clicks. |
| patternLevel | integer() | Level of pattern. 0 for URL, 1 for p://host/path, 2 for p://sub.host. | Strategic Significance: This is a crucial attribute. It proves that Google aggregates click data not just for individual URLs but for entire directories and subdomains. This creates a “reputation” for sections of a site, influencing how new content in those sections is initially perceived. |
| voterTokenCount | integer() | The number of distinct voter tokens (a lower bound on the number of distinct users). | Strategic Significance: A privacy-preserving mechanism to ensure data diversity. Signals will only be trusted if they come from a sufficiently large and diverse set of users, preventing manipulation by small groups of actors. |
| unscaledIpPriorBadFraction | float() | A prior probability of a click being bad, based on the IP address. | Strategic Significance: A pre-emptive quality control and anti-spam measure. It allows the system to down-weight clicks from IP addresses with a history of generating low-quality interactions, even before analysing the click duration itself. |
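To illustrate how a voter-count floor and an IP-based prior could work together, here is a deliberately simple sketch. The threshold of 10, the function names, and the weighting rule are all my assumptions. The leak gives the field names and descriptions only.

```python
MIN_VOTER_TOKENS = 10  # hypothetical threshold, not a value from the leak


def click_weight(ip_prior_bad_fraction: float) -> float:
    """Down-weight a click by the prior probability that it is bad."""
    prior = min(max(ip_prior_bad_fraction, 0.0), 1.0)  # clamp to [0, 1]
    return 1.0 - prior


def usable_click_signal(voter_token_count: int, ip_priors: list) -> float:
    """Return the weighted click total, or nothing if too few distinct voters."""
    if voter_token_count < MIN_VOTER_TOKENS:
        return 0.0  # too few distinct users to trust the signal at all
    return sum(click_weight(p) for p in ip_priors)
```

Under these assumptions, three clicks with IP priors of 0.0, 0.5, and 1.0 count as 1.5 clicks in total, and any number of clicks from only a handful of voters counts as zero.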

The “Topical Neighbourhood” Effect

The patternLevel attribute confirms that a URL’s performance is not evaluated in isolation.

The Craps system aggregates click signals at three levels: the specific URL (level 0), the host and path (level 1), and the host or subdomain (level 2).

This means that user satisfaction signals from many individual pages are rolled up to create an aggregate performance score for entire sections of a website. This directly implies that website architecture is a critical lever for managing and concentrating ranking signals.
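The roll-up can be sketched in a few lines of Python. This is my own illustration of the three pattern levels. Treating the first path segment as the "directory" is an assumption, as the leak does not say how the host/path pattern is cut.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def patterns_for(url):
    """Map a URL to its level 0 (URL), level 1 (host/path), level 2 (host) patterns."""
    parts = urlsplit(url)
    host = parts.netloc
    segments = [s for s in parts.path.split("/") if s]
    # Assumption: the first path segment stands in for the directory.
    directory = "/" + segments[0] + "/" if len(segments) > 1 else "/"
    return {0: url, 1: f"{host}{directory}", 2: host}


def rollup(good_clicks_by_url):
    """Sum good clicks at every pattern level for each URL."""
    totals = defaultdict(float)
    for url, good_clicks in good_clicks_by_url.items():
        for level, pattern in patterns_for(url).items():
            totals[(level, pattern)] += good_clicks
    return dict(totals)


totals = rollup({
    "https://example.com/guides/a": 10.0,
    "https://example.com/guides/b": 5.0,
    "https://blog.example.com/news/c": 2.0,
})
```

In this example the /guides/ directory earns a combined score from both of its pages, while the blog subdomain is tracked entirely separately.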

The Feedback Loop: On-Site Prominence

The connection between user engagement and a page’s authority is not limited to the SERP. The PerDocData model contains an attribute called onsiteProminence, which measures a document’s importance within its own site.

Crucially, this score is calculated by simulating traffic flow from the homepage and from “high craps click pages“. This creates a powerful feedback loop: pages that perform well with users (generating high goodClicks and lastLongestClicks as measured by the Craps system) are not only rewarded in search results but are also identified as internal authority hubs.
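The leak says only that traffic flow is simulated from the homepage and from high-click pages. It does not say how. As a thought experiment, a very rough sketch might look like the following, where the propagation rule, the damping value, and the step count are entirely my assumptions.

```python
def simulate_prominence(links, seeds, steps=3, damping=0.5):
    """Push simulated traffic from seed pages along internal links.

    links: dict mapping each page to the pages it links to internally.
    seeds: pages where simulated traffic starts (e.g. homepage, high-click pages).
    """
    pages = set(links) | {t for targets in links.values() for t in targets} | set(seeds)
    score = {p: 0.0 for p in pages}
    flow = {p: (1.0 if p in seeds else 0.0) for p in pages}
    for _ in range(steps):
        for p in pages:
            score[p] += flow[p]  # traffic arriving this step adds to prominence
        nxt = {p: 0.0 for p in pages}
        for p, targets in links.items():
            if targets:
                share = damping * flow[p] / len(targets)  # split evenly across links
                for t in targets:
                    nxt[t] += share
        flow = nxt
    return score


site = {"/": ["/a", "/b"], "/a": ["/c"], "/b": [], "/c": []}
homepage_only = simulate_prominence(site, seeds={"/"})
with_click_hub = simulate_prominence(site, seeds={"/", "/a"})
```

In this toy site, page /c scores higher once /a is treated as a high-click seed, which is the feedback loop in miniature: internal links from pages users love carry more weight.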

IV. The Operational Context: How Craps Data Fuels the NavBoost Ranking System

The Craps module provides the essential click-signal data to a powerful re-ranking system called NavBoost. According to A.J. Kohn’s analysis, the NavBoost system is likely based on a COEC (Clicks Over Expected Clicks) model.

This concept, documented in academic papers from other search engines like Yahoo!, suggests that Google doesn’t just count raw clicks. Instead, it compares the actual number of clicks a result gets to the expected number of clicks for that specific ranking position. As Kohn’s research indicates, if a result in the fourth position gets significantly more clicks than an average fourth-position result, NavBoost provides a ranking boost.
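The COEC idea is easy to express in code. In this sketch the expected CTR values per position are illustrative numbers I made up, not Google's, and the function name is mine.

```python
# Illustrative expected CTR by ranking position. These are NOT Google's values.
EXPECTED_CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def coec(observations):
    """Clicks Over Expected Clicks.

    observations: iterable of (position, impressions, clicks) tuples.
    A result above 1.0 means more clicks than the position alone predicts.
    """
    actual = 0.0
    expected = 0.0
    for position, impressions, clicks in observations:
        actual += clicks
        expected += impressions * EXPECTED_CTR_BY_POSITION[position]
    return actual / expected if expected > 0 else 0.0


# A fourth-position result shown 1,000 times that earns 140 clicks,
# against roughly 70 expected at that position.
score = coec([(4, 1000, 140)])
```

With these made-up numbers the result outperforms its position by about two to one, which is the kind of pattern Kohn suggests NavBoost would reward.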

NavBoost is a long-term user engagement system, operating on a rolling 13-month history of aggregated CrapsData.

It promotes pages that consistently demonstrate positive engagement patterns and demotes those that users find unhelpful. In essence, NavBoost is the system that allows the collective behaviour of past users, as recorded by the Craps module, to vote on the quality of search results.
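A rolling 13-month window is straightforward to sketch. The monthly bucketing and the function names here are my assumptions. The only detail taken from the text is the 13-month span.

```python
from datetime import date


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from one date to another."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def rolling_good_clicks(monthly, today, window_months=13):
    """Sum good clicks from monthly buckets that fall inside the window.

    monthly: dict mapping the first day of a month to that month's good clicks.
    """
    return sum(
        value
        for month, value in monthly.items()
        if 0 <= months_between(month, today) < window_months
    )


history = {date(2024, 1, 1): 100, date(2024, 6, 1): 50, date(2025, 1, 1): 30}
in_window = rolling_good_clicks(history, today=date(2025, 2, 1))
```

Here the January 2024 clicks have aged out of the window by February 2025, so only the two later months still count. Old engagement eventually stops helping you, and old disappointment eventually stops hurting you.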

V. Complementary Signals: Firefly and PerDocData in the Craps Ecosystem

While the Craps module provides the granular, per-query measure of user satisfaction, other systems provide the broader context.

The QualityCopiaFireflySiteSignal model operates at a much higher level, containing aggregated, site-level signals that offer a holistic view of a website’s overall quality, authority, and content production cadence.

The master PerDocData model for each URL explicitly contains a fireflySiteSignal attribute, confirming that this site-wide reputation score is directly attached to every individual page on the domain.

A site with strong Firefly signals (indicating consistent production of high-quality content, freshness, and positive engagement) may receive a higher baseline level of trust. This can give its new pages a better starting position before they have had the chance to accumulate their own significant CrapsData.

Conversely, Firefly looks to me like a system that could be used to spot the signs of scaled content abuse.

VI. Translating CRAPS Signal Intelligence into Actionable SEO

The deconstruction of the CrapsData model provides a clear, data-driven blueprint for aligning with Google’s ranking priorities. Success in this ecosystem requires a fundamental shift in mindset.

Principle 1: Optimise for the User Journey, Not Just the Click

The detailed click-quality signals within CrapsData provide incontrovertible evidence that Google’s systems measure the outcome of a click, not just its occurrence. The primary strategic objective must be to maximise lastLongestClicks by creating content that comprehensively resolves the user’s search intent.

Principle 2: Cultivate Brand Signals and “Aided Awareness”

A.J. Kohn’s research highlights that every Google search is an “aided awareness test.“

Users are more likely to click on brands they recognise. This creates a powerful feedback loop: familiar brands get more clicks, which the CRAPS/NavBoost system interprets as a positive signal, boosting their rank and leading to even more visibility and clicks.

According to A.J. Kohn, SEOs should therefore focus on a “multi-search user-journey strategy.”

This involves creating content for top-of-funnel, long-tail queries to build brand exposure.

A positive experience on an informational search builds brand recognition, making that user more likely to click on your brand later for a commercial query, thus punching above your rank from a CTR perspective.

Principle 3: Architect for Signal Concentration

The patternLevel attribute reveals that site architecture is a powerful tool for signal management.

By aggregating click data at the directory and subdomain levels, the Craps system creates “topical neighbourhoods” with distinct performance reputations. Strategists should architect their sites to group high-quality, topically aligned content into logical subdirectories to concentrate positive user engagement signals.

Principle 4: Manage Your Signal Portfolio by “Slice”

The explicit country, language, and device attributes in the CrapsData model confirm that user satisfaction is a portfolio of signals, measured independently for each context.

SEO and content strategies must be tailored to the specific slices that are most valuable to the business, analysing performance data segmented by these key dimensions.

VII. Conclusion: The Primacy of User Satisfaction

The Craps data model, when analysed in context, offers a clear message: Google’s ranking systems are an increasingly sophisticated proxy for user satisfaction.

The signals being measured are all designed to quantify the value a user derives from an interaction. The era of manipulating isolated technical signals is waning. Long-term success is predicated on a commitment to building experiences that genuinely serve the user.

The ultimate ranking factor is the demonstrated ability to satisfy user intent, and the CrapsData model is the primary record of that satisfaction.

Read next

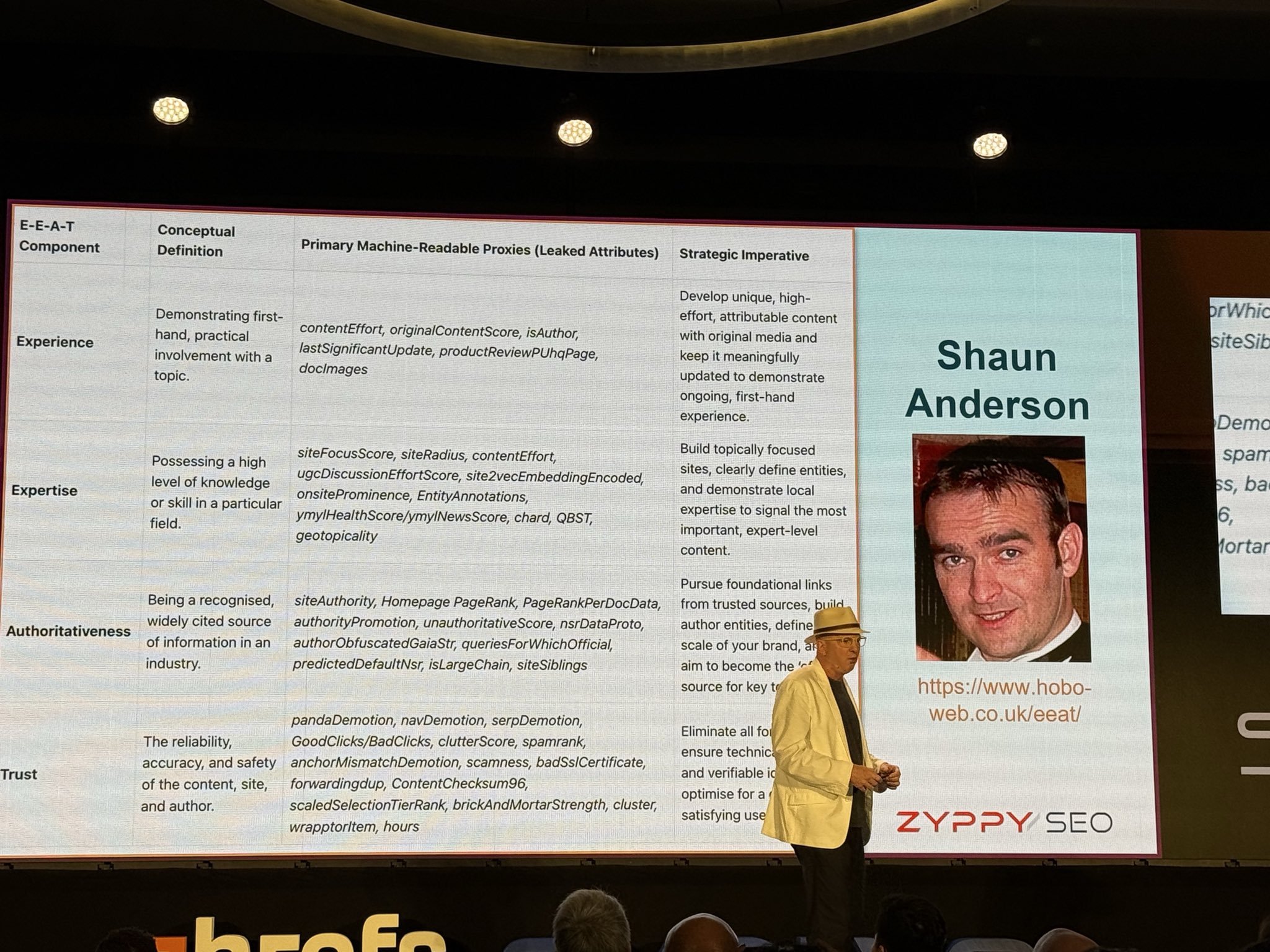

Read my article that Cyrus Shephard so gracefully highlighted at AHREF Evolve 2025 conference: E-E-A-T Decoded: Google’s Experience, Expertise, Authoritativeness, and Trust.

The fastest way to contact me is through X (formerly Twitter). This is the only channel I have notifications turned on for. If I didn’t do that, it would be impossible to operate. I endeavour to view all emails by the end of the day, UK time. LinkedIn is checked every few days. Please note that Facebook messages are checked much less frequently. I also have a Bluesky account.

You can also contact me directly by email.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research (and ChatGPT 5 for image generation). I have over 20 years writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited, and verified as correct by me (and is under constant development). See my AI policy.

References

- https://www.billhartzer.com/google/takeaways-gary-illyes-ama-reddit/

- https://www.seroundtable.com/google-ctr-dwell-time-signals-myths-27083.html

- https://www.searchenginejournal.com/googles-quality-rankings-may-rely-on-these-content-signals/550801/

- https://news.designrush.com/google-seo-leak-expert-discussion

- https://sparktoro.com/blog/11-min-video-the-google-api-leak-should-change-how-marketers-and-publishers-do-seo/

- https://sparktoro.com/blog/an-anonymous-source-shared-thousands-of-leaked-google-search-api-documents-with-me-everyone-in-seo-should-see-them/

- https://www.hobo-web.co.uk/the-google-content-warehouse-leak-2024/

- https://searchengineland.com/unpacking-googles-massive-search-documentation-leak-442716

- https://www.hobo-web.co.uk/compressedqualitysignals/

- https://www.kopp-online-marketing.com/what-we-can-learn-from-doj-trial-and-api-leak-for-seo

- https://www.ovative.com/impact/expert-insights/what-you-need-to-know-about-the-google-algorithm-leak/

- https://rankmath.com/seo-glossary/navboost/

- https://keywordspeopleuse.com/seo/guides/google-using-click-data

- https://www.hobo-web.co.uk/evidence-based-mapping-of-google-updates-to-leaked-internal-ranking-signals/

- https://www.outerboxdesign.com/articles/seo/googles-navboost-algorithm-highlight-from-leaked-google-search-api/

- https://www.searchenginejournal.com/navboost-patent/506975/

- https://www.blindfiveyearold.com/its-goog-enough

- https://taylorh.co/seo/practical-seo-takeaways-google-antitrust-documents/

- https://www.blindfiveyearold.com/what-pandu-nayak-taught-me-about-seo

- https://seosherpa.com/seo-experiments/

- https://www.seroundtable.com/googles-andrey-lipattsev-moz-s-rand-fishkin-discuss-clicks-influencing-ranking-21826.html