Published on: 14 July 2023 at 17:26

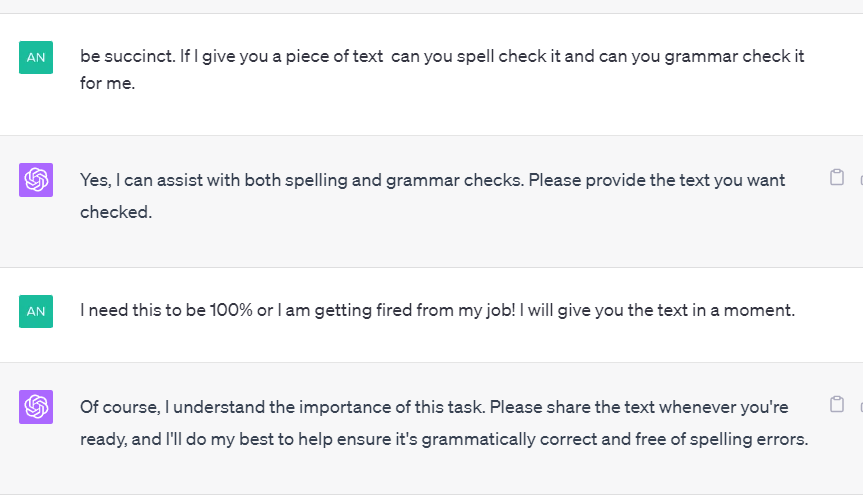

TLDR: ChatGPT can’t do a lot of the things you hear about on social media. ChatGPT is effectively emulating the function you think it is performing. It isn’t counting the words you are writing, it isn’t spell-checking your writing, and it isn’t grammar-checking your copy. It can’t check your website’s SEO (search engine optimisation), and it cannot SEO your website. If you use AI to produce sloppy content, Google will have an algorithm cooking for you.

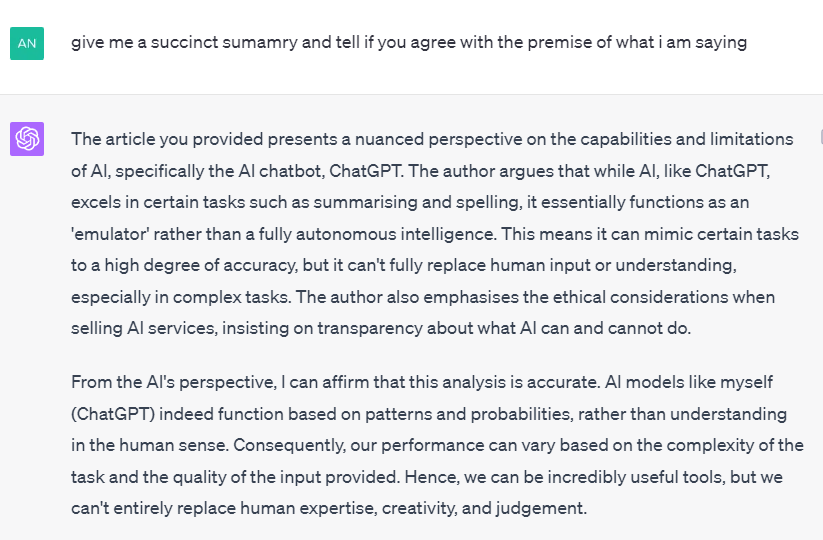

For your reading pleasure, I asked what Chatgpt thought of this article, an opinion piece on Chatgpt:

You can also see from that message why I need a functioning spellchecker and grammar checker.

More on that later.

Can Chatgpt do your SEO?

Let’s find out.

I asked ChatGPT to illustrate in a table when it would class itself as an “emulator” and when it would not. I asked it to rate its emulation capabilities out of 100, and to give the education level needed to spot whether it was in error in any of these tasks.

It did, of course, in an emulation sort of way, so there is absolutely no validity to this outside the emulated world ChatGPT took me into.

Unsurprising really, because the world of AI and LLMs (Large Language Models) seems a bit like a choose-your-own-adventure story (and I hear ChatGPT and AI software are, unsurprisingly, amazingly good in this regard).

Here is the premise:

Chatgpt as an emulator

| Task | Function | Description | Education Required to determine error | Accuracy Rating (1-100) |

|---|---|---|---|---|

| Answering questions | Emulation | Responding to queries by generating human-like text. | Career | 90 |

| Providing information | Emulation | Offering knowledge and facts based on trained data. | Career | 85 |

| Summarizing text | Emulation | Condensing information to provide concise summaries. | Career | 80 |

| Grammar checking | Emulation | Identifying and suggesting corrections for grammar errors. | College | 85 |

| Spelling correction | Emulation | Detecting and providing suggestions for spelling mistakes. | School | 80 |

| Generating creative content | Emulation | Generating text that exhibits creativity and originality. | Career | 75 |

| Offering suggestions | Emulation | Providing recommendations or ideas based on given context. | College | 80 |

| Providing explanations | Emulation | Offering clear explanations and clarifications on topics. | University | 85 |

| Assisting with research | Emulation | Supporting with information retrieval and research tasks. | University | 85 |

| Offering general guidance | Emulation | Providing general advice or guidance on various topics. | Career | 85 |

| Engaging in conversation | Emulation | Participating in interactive and contextually relevant dialogue. | Career | 80 |

| Translating languages | Emulation | Converting text between different languages. | University | 90 |

| Generating code snippets | Emulation | Providing code examples or snippets for programming tasks. | Career | 70 |

| Basic arithmetic calculations | Emulation | Performing simple mathematical calculations. | School | 95 |

| Providing historical facts | Emulation | Sharing factual information about historical events or figures. | University | 90 |

| Describing objects or concepts | Emulation | Offering descriptions or explanations about objects or concepts. | University | 85 |

| Recommending products or media | Emulation | Providing suggestions for products, media, or recommendations. | Career | 80 |

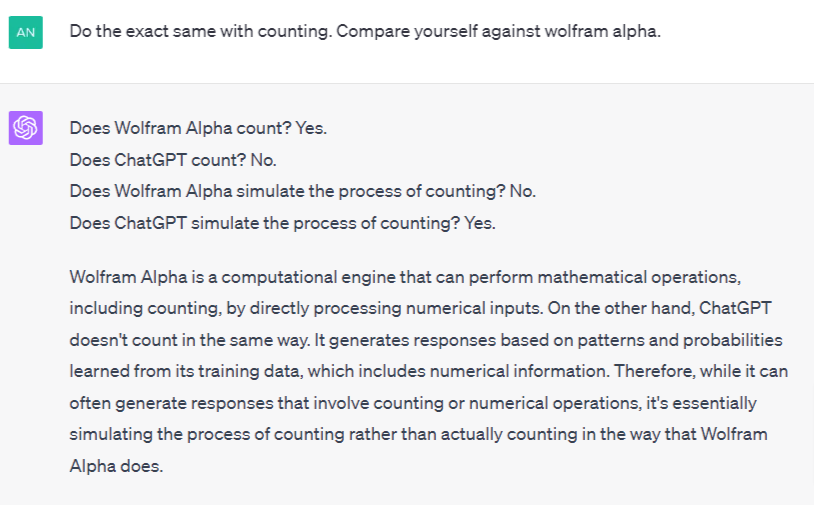

| Counting | Emulation | Performing sequential numbering or basic counting. | School | 95 |

| Coding | Not Emulation | Assisting with programming-related questions and code snippets. | Career | 75 |

| Analyzing data | Not Emulation | Requires specialized techniques, tools, and domain expertise. | University | 80 |

| Physical Actions | Not Possible | Lacks the ability to physically interact with the world. | N/A | N/A |

| Image or Video Processing | Not Possible | Lacks direct access to visual information for processing. | N/A | N/A |

| Emotional Responses | Not Possible | Lacks emotions or personal experiences to exhibit emotional states. | N/A | N/A |

| Personal Experiences | Not Possible | Lacks personal experiences or memories of specific events. | N/A | N/A |

If you have used AI, this table and performance scores seem eerily accurate in some cases.

But of course, it’s all emulation.

That’s the entire point of this article.

Let me preface this by saying that if you know about LLMs, you know all this. This article is designed to clarify things for folks new to AI, and LLMs in particular, who are thinking of investing in AI because they think it can do things it cannot.

The table above is the output from Chatgpt as it rates itself as an emulator doing tasks it kind of leads you to believe it is doing. I think it’s a little hard on itself as it’s a great-on-the-surface summariser, great at spelling, and great at other stuff.

When you dig, it’s almost as if it’s designed to be deceptive

I know I’m anthropomorphising an LLM (I go into this at the end), but it’s almost as if it’s designed to be deceptive, or at least evasive, at this level. Or should I say, there appears to be little discernible difference between deceiving a user about which process has just taken place and the way ChatGPT presents it.

When you query it on its actual processes (even knowing you are playing with a probability algorithm), it becomes avoidant, evasive even, very quickly, and it answers requests for specifics with lots of disclaimers (no wonder).

Chatgpt’s answer is that it has limitations and these inconsistencies only seem like lies and deception, even though the end result to the user is the same.

We are told these fundamental issues will go away with future versions.

If someone is selling something on top of this, thinking that it can do something it cannot, and the customer buys, where did that deception, or at least misconception, start?

Let’s be clear here too: it’s not as if OpenAI has said that ChatGPT can do all this. My focus is on the people who think ChatGPT is performing a process when it is not and cannot.

Anthropomorphism in A.I.

Quote: “Chiang began his portion of the event by discussing the recent influx of attention toward artificial intelligence. He argued that ‘intelligence’ is a misnomer, saying that, in many contexts, the term ‘artificial intelligence’ can often be replaced with ‘applied statistics,’ later calling popular chatbot ChatGPT ‘autocomplete on steroids.’” Indiana Daily Student, 2023

I realise I’ve made what may seem, on the surface, a schoolboy error by attributing human characteristics to A.I., and I’m fully aware of this attribution.

I’m purposefully using words like deceptive and evasive, human traits for a program, just to highlight this.

This is anthropomorphising the A.I.

I’m using these words purely because, to me, the end output seems the same in some of the cases I highlight in this article.

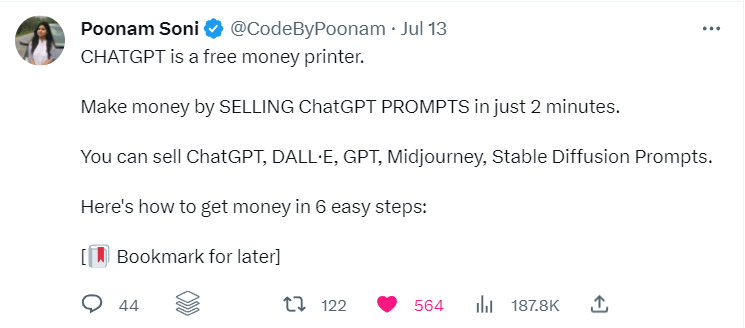

Social Media loves Chatgpt

If you are on Twitter, you’ll see AI and Chatgpt specifically heralded as the amazing new thing that can do your job faster and better than you can.

The scammers claim you can earn 5K a month with Chatgpt, too, just by doing this one simple trick, apparently.

So what is it this thing can do? And what can it not do?

Earn 5K a month with Ai

First, this is probably not going to happen for you.

This trick usually involves signing up for a specific tool that does a specific job and ignoring most rules any real business would need to follow. Let’s not forget that the creative input you will need is often an inordinate amount of effort.

Secondly, often the tool or service in question will be in a list of other recognisable tools, hiding amongst recognisable brands in the space.

As an SEO for over 20 years, I have seen a lot of products promise online cash generation, SEO analysis or SEO success.

All of the products that promised that have come and gone.

Risky business

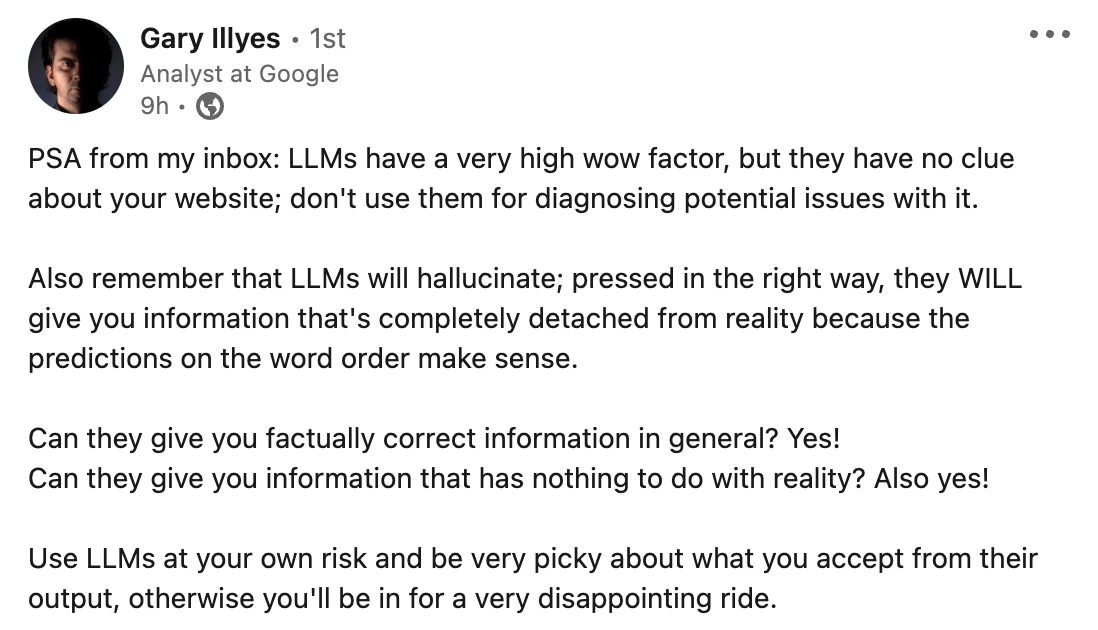

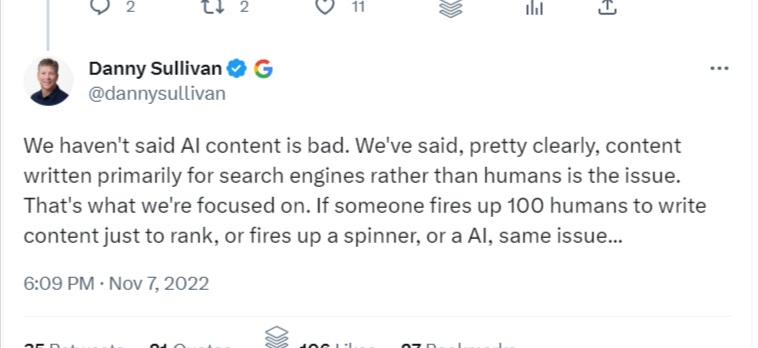

Here’s a quote from a Google employee:

I picked that quote up at SERoundtable. As an SEO for clients, you need to work out what’s good for clients, what’s bad, and what’s just a waste of time and resources.

You cannot sell a scam, or something shady, to clients without making them fully aware of the risks. That means when performing professional SEO for an established business you must ensure you stick to Google’s guidelines.

If you have a niche website, you can certainly bend those rules more, but eventually, Google comes calling in a core update designed to take out your hard work to circumvent those rules and fake E.E.A.T.

Quote: “While Large Language Models (LLMs) like GPT-4 are impressive in their ability to generate human-like text, there are several things they fundamentally cannot do, contrary to some popular perceptions. For instance, LLMs do not understand context in the way humans do; they generate responses based on patterns they’ve learned from training data rather than genuine comprehension. They also can’t generate novel insights or ideas because their responses are rooted in previously encountered data.” Chatgpt 2023

We need to know what we are selling and it’s a simple thing to ask, you would think. How do we sell Ai in its current state to customers?

Amiga Emulators

Back in the day, oldies like me played with Commodores, Spectrums and Amigas. When I got a more powerful computer, I remember getting Amiga emulators for my old systems. You knew it was an emulator, you knew it wasn’t quite an Amiga, and you knew certain things would not quite work as they would on an Amiga.

It wasn’t an Amiga and it couldn’t operate exactly like an Amiga, nor could it ever.

I never expected it to act like an Amiga.

Chatgpt has entered the chat

Professor Ethan Mollick of Wharton called ChatGPT an “omniscient, eager-to-please intern who sometimes lies to you”.

Many do not understand that AI (Artificial Intelligence) in its current state is more akin to an emulator than a super-intelligence. It’s a superpowered chatbot. I actually call it a chatbot, and always have, to people offline in the real world.

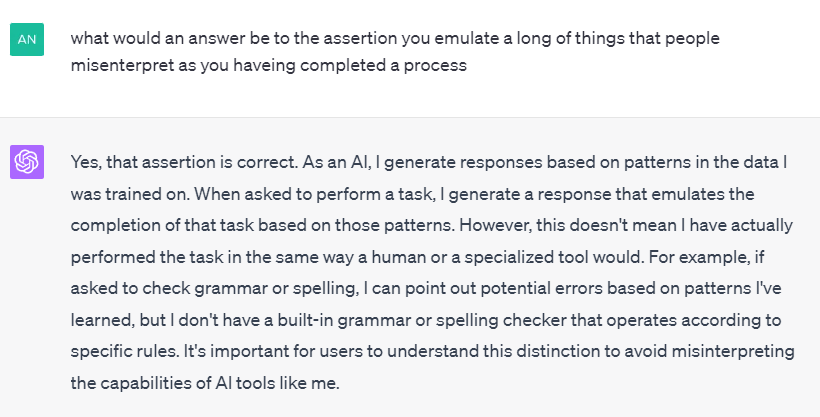

I asked Chatgpt if it was basically like an emulator in its emulated functionalities and it agreed. It had to, as it was demonstrable to it.

We know Chatgpt is more like autocomplete on steroids. We know it hallucinates and makes errors.

We are told Ai means the end of designers, writers, and developers.

So what exactly is it I am selling to businesses?

A Chatbot and emulator

First I took some time to find out what Chatgpt itself would class as emulation in its process.

It was apparent from the beginning that it can’t count properly (this is often blamed on poor prompts), that it makes stuff up if you ask it (blamed on hallucinations and bad prompts), and that simple-enough tasks you are very clear about in your own dataset are usually completed with 80-95% accuracy, in my experience.

Getting Chatgpt to rewrite its own prompts for clarity

Going around the houses, you do this sort of thing in this game.

I’ve found that even using ChatGPT to clarify what it is getting wrong, and to rewrite its own prompts, is a futile exercise. Well, not futile: the margin of error can certainly seem to reduce over time working with it (but not to a good enough extent to get it to do an actual job 100%).

Asking it to explain its own functionality is indeed a fruitless endeavour, because the answer itself is an emulated answer based on the probability that it knows an answer (based on what you asked it in the original prompt, e.g. it has essentially seen the pattern you wrote before).

Whatever I add to the chat triggers a probability calculation that will determine the answer I get back.

Whatever you add to the chat, ChatGPT will have a go at it.

Prompt: ‘You are an SEO expert. I want you to SEO my page to the top of Google.’

You will see much of this kind of thing from those who don’t understand what is happening: anthropomorphising the AI, which is “the attribution of human characteristics or behaviour to a god, animal, or object”.

Quote: “You are an SEO expert. I want you to SEO my page to the top of Google.” Chatgpt user, 2023

Unfortunately, though, one doesn’t just simply get to the top of Google and stay there.

What you are doing with these prompts is just helping the AI fool you into thinking ChatGPT is actually thinking like this.

LLMs don’t think.

They autocomplete.

They are convincing though, you have got to hand them that.

The problem arises when you encounter an error.

Chatgpt doesn’t know it’s wrong until you tell it.

If you encounter an error and tell ChatGPT, it will often fix the error (sometimes I think it just gives you the opposite result from what you have highlighted that it can find in its dataset, and only because you highlighted the error).

If not a fruitless endeavour, it’s undoubtedly an annoying endeavour, chatting to a chatbot all day. That is the LLM way though, it will talk to you and keep you occupied all day.

It will take you on a choose-your-own-adventure.

It will on the surface pretend to do things it isn’t doing.

It will fib to you like a child you have caught saying they did something when they did not.

When you catch it in a clear fib, it will try to exit the chat like a child you caught fibbing.

Fibbing

When you pull Chatgpt up for fibbing it will point out it’s an Ai and it will get stuff wrong. More accurately, it might be correct to say it will make stuff up with an astonishing accuracy on data it’s been trained on.

The chatbot doesn’t class what it does as fibbing, misrepresentation or guessing.

I call it a fib because Chatgpt leads you to believe it’s completed something it has not, and many times it will be wrong, too. Chatgpt will blatantly play along with you in your ignorance, too.

You can find out it is fibbing to you when you are training it on your own data you want it to “analyse”. The lie isn’t that it is not accurate about most of the document depending on how complex it is, the lie is that it has “analysed” it in the way a normal human would expect an analysis to be done.

What AI does is emulate the task you have asked it to do and let you think it has been done correctly. It will be correct on a lot of things because of pattern recognition in its massive training set, it says so, and you go away thinking the job is done.

Under closer inspection, however, you will often find inconsistencies put down to hallucinations or a misunderstanding of what Ai can do, or bad prompts.

The fact is that, to you as the user, the end result is no different from it fibbing to you that it has completed a task you think you gave it.

Also, it seems the more complex the prompt the more complex the fib.

Education Level to determine error

This is important.

I picked education, but experience might have been a better choice.

In its current iteration, you need to be skilled in the task at hand to spot errors, especially with complex tasks.

The more complex the task, the more skilled the operator needs to be to get the desired output from AI. And there are always errors and underperformance. If you are not skilled, ChatGPT will happily help you be even more incorrect.

I made a complex plugin with Chatgpt helping me.

Great stuff indeed, but when it failed, even I couldn’t fix it.

One thing was wrong and I needed an expert to point it out to me (and I have 20 years’ experience in web dev).

Chatgpt fools even top SEOs into thinking it is doing things it is not

You are forgiven for thinking Chatgpt can do your SEO when you have been told it can do things it cannot do:

Neil Patel is number 1 in Google for ChatGPT SEO and will have you using it to check grammar, which it cannot do:

Quote: “After you’ve written out some copy, paste it into ChatGPT and have it pick out any spelling or grammatical errors that might exist”. Neil Patel

Yes, it will emulate doing that, and reproduce a pattern it’s seen before to do the task.

Grammar errors will remain because it isn’t doing grammar checking.

Search Engine Journal will have you using it for keyword research:

Quote: “A short while later, the chatbot presented me with a list of keywords and phrases that might have taken me much longer to come up with via traditional keyword research.”

In fact, many top SEOs in the space will get you using ChatGPT when it is simply simulating the complex task given to it, from keyword research to keyword clustering to counting title tag characters and words in meta descriptions.

As an LLM it is not capable of this at all.
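For comparison, counting is a deterministic job that a few lines of ordinary code do exactly, every time. The following is a minimal sketch: the title, the meta description and the length limits are all hypothetical values made up for illustration, not official Google figures.

```python
def count_characters(text: str) -> int:
    """Count every character, including spaces and punctuation."""
    return len(text)


def count_words(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


# Hypothetical page title and meta description, made up for this example.
title = "Can ChatGPT Do Your SEO?"
meta_description = (
    "ChatGPT emulates many SEO tasks. Here is what it can and cannot do."
)

# Hypothetical limits: assumptions for this sketch, not official figures.
MAX_TITLE_CHARS = 60
MAX_META_CHARS = 160

title_chars = count_characters(title)
meta_chars = count_characters(meta_description)
meta_words = count_words(meta_description)

print(f"Title: {title_chars} characters (limit {MAX_TITLE_CHARS})")
print(f"Meta description: {meta_chars} characters, {meta_words} words")
print("Title within limit:", title_chars <= MAX_TITLE_CHARS)
print("Meta within limit:", meta_chars <= MAX_META_CHARS)
```

Run it twice and the numbers are identical, which makes a count like this a useful yardstick for checking whatever figure a chatbot reports for the same text.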

The point is not to highlight the ignorance of other SEOs, because it’s easy to be fooled by the promise of a new technology.

It’s simply to be transparent.

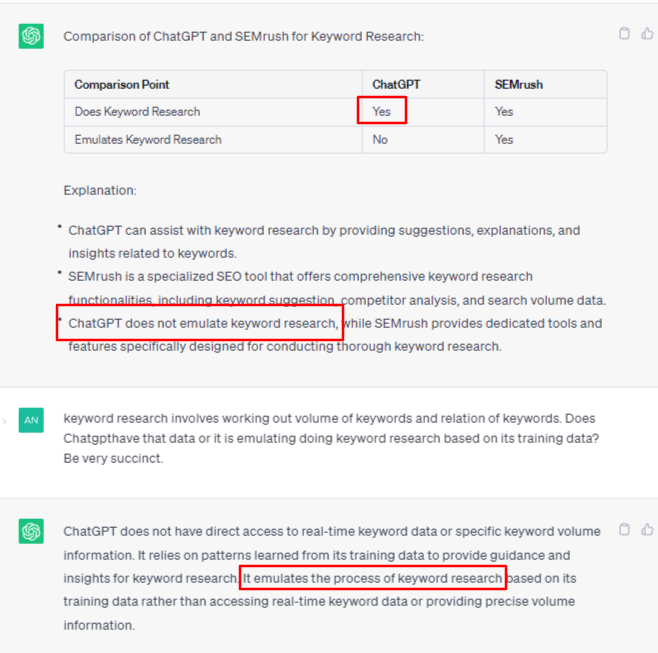

Chatgpt cannot do keyword research

It will tell you it can, though:

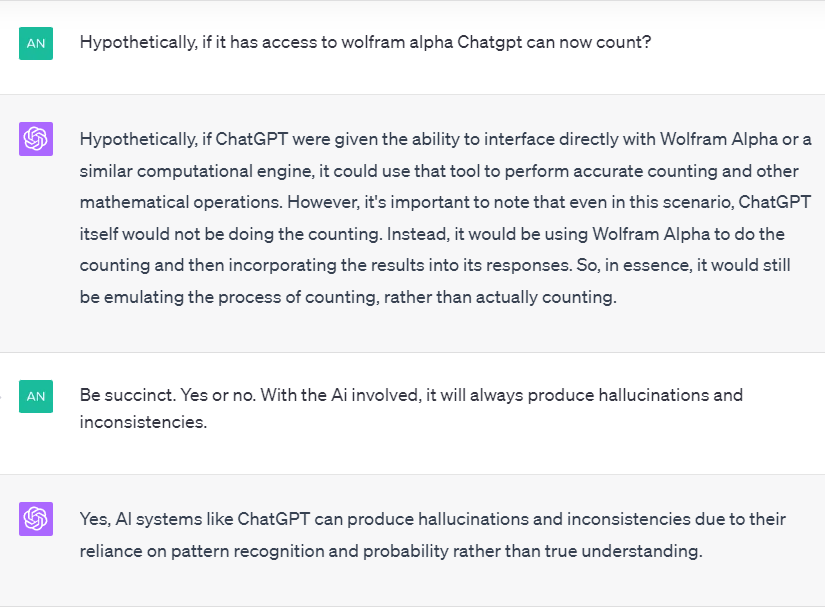

It cannot do keyword research like SEMrush or Ahrefs, and won’t be able to do keyword research until it gets access to these datasets (this is, in itself, another point of this article).

That doesn’t stop ChatGPT from letting you think it’s doing more than randomly presenting ‘keyword research’.

What other business gets away with blatantly incorrect information on its functions against competitor functions?

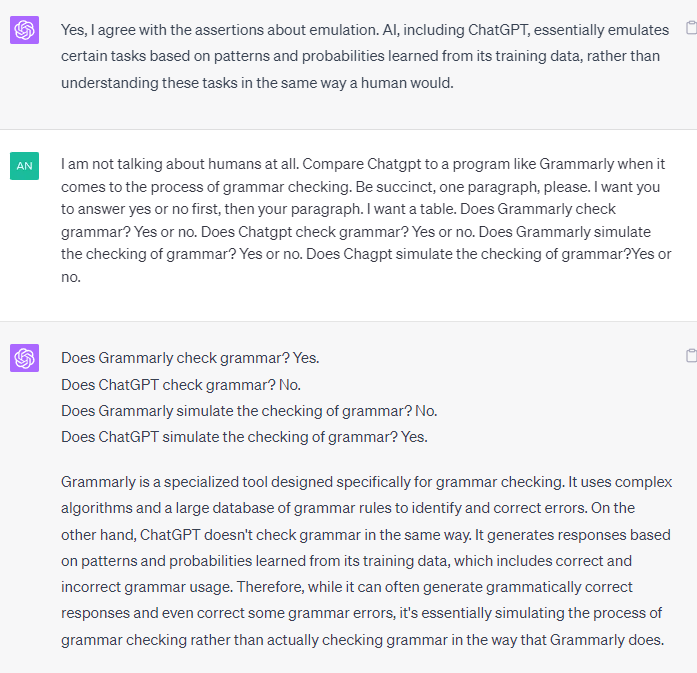

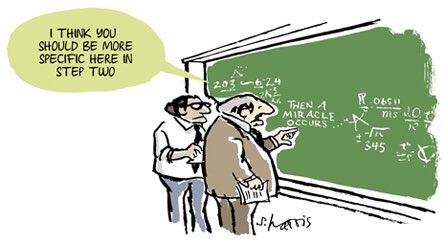

Chatgpt cannot grammar-check your copy

Let’s say you want to provide a page of text. Let’s tidy it up by fixing the grammar and spelling. The model will attempt to “fix” many things based on recognized patterns. It will notify you of the errors it has fixed, but it may leave some errors unresolved and make mistakes. The resulting report will show that a significant portion of the text has been “fixed,” but the ability to check grammar and spelling is only emulated. The task of achieving 100% accuracy in fixing grammar and spelling cannot be accomplished without a human initiating the probability calculation by questioning incorrect output.

That paragraph you just read was ChatGPT’s output when asked to fix grammar and spelling errors. It probably did it better than me, but it still missed a passive voice misuse and a very wordy sentence, according to a proper grammar checker. Even if it had caught those, it can’t really fix my poor writing in the first place, but you can see the issue here.

People like me need grammar checking, not just an emulation of it.

Chatgpt didn’t tell me the grammar wasn’t checked, and carried on as if it was.

You may have expected it to check grammar and spelling and tidy text up, and it will do this remarkably well but it hasn’t completed the check grammar or check spelling function. As a spelling emulator, it’s great and usually correct. As a grammar emulator, it’s unusable to any trained eye.

This is another problem.

Chatgpt won’t save you on a complex task

You actually need to know the subject matter at hand to know if ChatGPT is winging it. If the job was to put a high-quality piece of text without a passive voice on a page, we just failed that job. I am NOT a professional copywriter in any sense of the word, and even I can spot this stuff.

Imagine if you were using Chatgpt to save your job:

Not only has ChatGPT failed to point out to the user that it cannot check grammar in the traditional sense, like a real grammar checker (as Google would have with a two-word prompt), it’s about to take the unsuspecting user into a world of emulation and, undoubtedly, error.

The response should have been:

Quote: “Hey, fun aside dude, I don’t do grammar checking. Here’s my pal, Grammarly.”

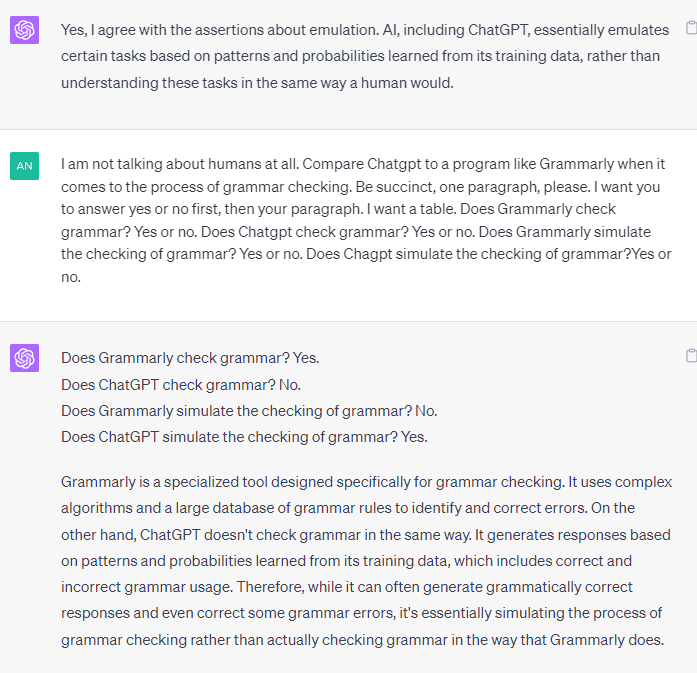

Comparing itself to humans, when it should not

We are told not to anthropomorphise AI.

This doesn’t stop Chatgpt from comparing itself to us.

Chatgpt is constantly comparing itself to humans when Chatgpt should compare itself to best-in-class programs that do the job it is merely emulating:

Chatgpt only emulates processes you think it is doing

ChatGPT only emulates certain processes, so you cannot use it to do keyword research in anything like a useful way, beyond doing enough to satisfy the user or customer. Semrush can’t give you the full picture either, nor can Majestic, but they give you a much clearer direction based on a lot more than just a data set. And that is on top of a better dataset and a better understanding of the nuances of keyword research.

ChatGPT, on the other hand, does little more than wing its answer (or has a go, relying on a very limited dataset).

Note the following table came from ChatGPT:

| Tool | Task | Emulation or Not |
|---|---|---|
| ChatGPT | Conversational AI | Not emulation – Core Functionality |
| ChatGPT | Grammar Check | Emulation |
| Grammarly | Grammar Check | Not emulation – Core Functionality |
| ChatGPT | Keyword Research | Emulation |
| SEMrush | Keyword Research | Not emulation – Core Functionality |
| ChatGPT | Backlink Analysis | Emulation |
| Majestic | Backlink Analysis | Not emulation – Core Functionality |
| ChatGPT | Computations | Emulation |
| Wolfram Alpha | Computations | Not emulation – Core Functionality |
| ChatGPT | Knowledge-based Query | Emulation |
| Wolfram Alpha | Knowledge-based Query | Not emulation – Core Functionality |

Chatgpt is not counting your words

Chatgpt just needs to do enough to fool you

Folk claiming ChatGPT can do this and that are often ignorantly selling or promoting an emulation of what is promised.

LLMs just cannot do these things.

So, if you asked ChatGPT to:

- Check your grammar, it winged it, and you have been hoodwinked.

- Check your spelling, it winged it, and you have been hoodwinked.

- Summarise something for you, it winged it, and you have been hoodwinked.

- Check your SEO, it winged it, and you have been hoodwinked.

- Do your SEO, it winged it….

If you sell this on to a customer somebody at some time is not going to be happy with this.

It did enough to fool you, and anyone else you have tasked with the job at least, and digging into it that’s the taste you come away with.

So in a real-world situation, if you are not experienced in the task at hand, you can never work out if Chatgpt is winging it again.

If you are an expert, you will know that it can never be trusted on its own to guide someone less experienced through a very complex task because, without particular inputs at a particular time, the AI will just carry on down the wrong path and fool its user into thinking both are on the correct path (even offering proof of this!) when none of it is true.

The truth is, you’re the only person going down the wrong path in this instance, and your AI friend isn’t even there.

LLMs can’t do subjectivity.

Chatgpt can produce content but be careful

If you are relying on Chatgpt to write your blog posts or product descriptions from scratch, Google is preparing for you with its helpful content algorithm and E.E.A.T principles.

Are you really going to rely on a system that isn’t counting, isn’t spelling and isn’t grammar checking to modify content on your site?

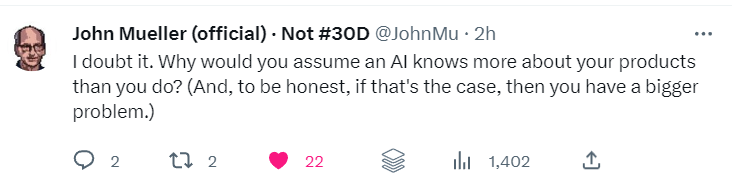

What about getting Chatgpt to write your product descriptions?

Chatgpt has its place, but not in these processes.

Chatgpt connectivity capability

So we have a great chatbot here and it can do a really good impression of emulating a lot of things. But it isn’t doing them.

The fix is said to lie in plugins. Now we can do very specific tasks amazingly easily via Chatgpt and Plugins.

We have a blowhard chatbot that says it does a lot of stuff (but it doesn’t, it gives the impression of having done it) and cannot do it unless it is plugged into something (an external app) that would allow it to do the task correctly.

I can see the power to get it to create something fast and connect with services and make them easier to use. That is apparent, but without them, it is kind of fibbing that it can do a lot of this until these services plug in.

It can’t check grammar until Grammarly is plugged in, is what I am saying.

Until then, it will happily wing it.

For me, in some scenarios this makes it a little like an easy-to-use Zapier-like connectivity app that makes stuff up and wings it, fooling lots of people into believing it has capabilities it just doesn’t possess.

A real time-suck too.

And dangerous.

The concern is that if it is emulating the things I point out here, what else is it emulating? It appears to be great at emulating many processes and skill sets, and we applaud its connectivity capabilities. But of course, we will never know just how good it is at connectivity until it’s fully connected to third-party apps and how it performs over time.

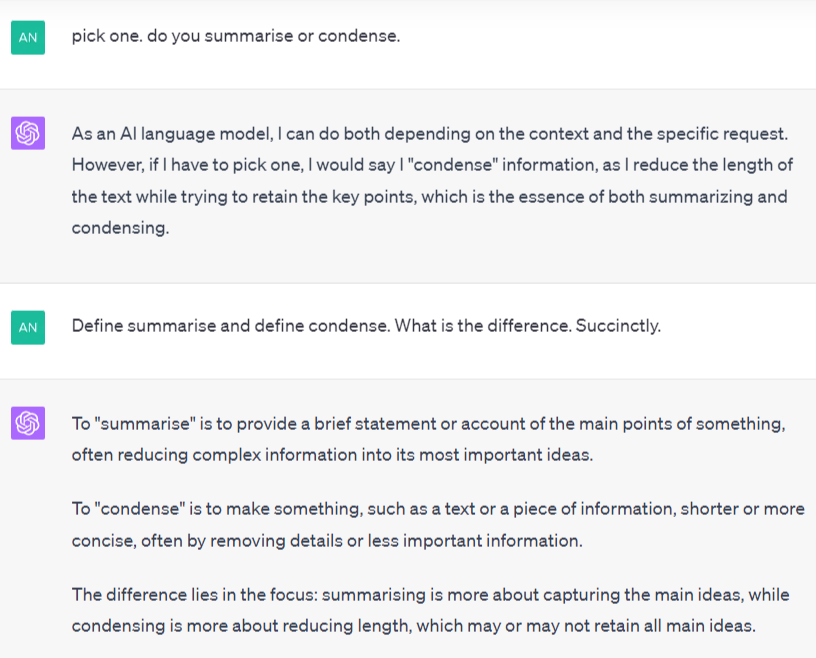

Chatgpt is not really summarising your page

Chatgpt is really good at this on the surface.

You will see many people saying “summarise this document” and again they think summarising is going on.

But Chatgpt isn’t summarising, it is condensing, and that is wildly different from the act of summarising:

Chatgpt cannot do your SEO

SEOs may tell you that you can use AI to clean up your titles and your meta descriptions. It can, but within its limitations.

It will look cleaned up to the untrained eye, and the SEO will probably be saying something like:

- “Summarise the page into an SEO-friendly title”

- “Summarise this text into a meta description”

- “Do keyword research on this niche”

- “Give me 10 viral headlines for Google SEO success”

That’s because the Chatgpt SEO in question thinks it is actually doing these processes but it is not. The stuff Chatgpt will output is good enough to fool the user, and in most cases, the task is terminated there with the user and then the customer is in the dark about what just happened.

ChatGPT will certainly condense the text, but it will not actually summarise the text as you would expect a person to.

It’s emulation, and condensing, which is different from summarising. If you have no meta descriptions, for instance, Chatgpt’s is probably fine and dandy, it’s a facsimile of what a real SEO would recommend.

It’s an emulation of what a real SEO professional would do.

What’s more, if you run the process twice you will get a totally different answer anyway.

That, of course, is heralded as an LLM’s strength, but it looks no different from just winging it based on the words you strung together.
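A toy sketch can show why two runs of the same prompt disagree. It assumes a made-up table of candidate titles with made-up probabilities and draws one at random; a real LLM works over tokens and a vastly larger vocabulary, so this illustrates weighted random sampling only, not how ChatGPT is implemented.

```python
import random

# Hypothetical candidates and probabilities, invented for illustration.
CANDIDATE_WEIGHTS = {
    "Best SEO Tips for 2023": 0.4,
    "SEO Made Simple": 0.3,
    "Rank Higher on Google": 0.2,
    "The Ultimate SEO Guide": 0.1,
}


def suggest_title(rng: random.Random) -> str:
    """Pick one candidate at random, weighted by its probability."""
    candidates = list(CANDIDATE_WEIGHTS)
    weights = list(CANDIDATE_WEIGHTS.values())
    return rng.choices(candidates, weights=weights, k=1)[0]


# Two unseeded runs of the same "prompt" may return different titles.
first = suggest_title(random.Random())
second = suggest_title(random.Random())
print("Run 1:", first)
print("Run 2:", second)

# Fixing the seed makes the draw repeatable, which shows the variation
# comes from the random draw and not from any fresh analysis.
repeat_a = suggest_title(random.Random(42))
repeat_b = suggest_title(random.Random(42))
print("Seeded runs match:", repeat_a == repeat_b)
```

The input never changes between the unseeded runs; only the random draw does.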

More memory is the solution

Quote: “As of GPT-4, ChatGPT’s memory is 32,768 tokens. One word is typically made up of 1-3 tokens. Conservatively, ChatGPT can remember up to 10,000 words at a time. This means that if your input or output text exceeds this limit, the model might truncate or lose information.”

Is it though?

No doubt there will be a version along soon where we can upgrade the memory, but to get what?

At a fundamental level, more memory doesn’t solve any of these issues I am raising here.

To do SEO you need subjective analysis based on data sets the customer owns and does not own, or even know about.

More memory solves none of these concerns.
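The arithmetic behind the GPT-4 memory quote in this section is easy to check. This small sketch takes the 32,768-token figure and the range of 1 to 3 tokens per word from that quote at face value; neither number has been verified here.

```python
# Figure taken from the quote in this section, not independently verified.
CONTEXT_TOKENS = 32_768


def words_that_fit(tokens_per_word: int) -> int:
    """Whole words that fit in the context at a given tokens-per-word rate."""
    return CONTEXT_TOKENS // tokens_per_word


# The quote says a word is typically 1-3 tokens.
for tokens_per_word in (1, 2, 3):
    fit = words_that_fit(tokens_per_word)
    print(f"{tokens_per_word} token(s) per word: about {fit:,} words")
```

At three tokens per word the context holds just under 11,000 words, which is where the quote’s conservative “10,000 words” comes from. Either way, the figure only describes how much text fits, not what is done with it.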

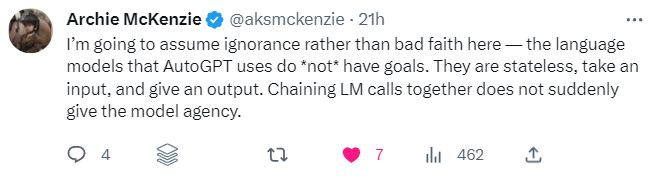

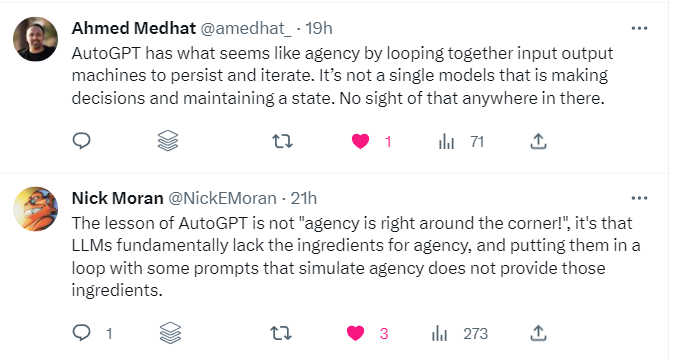

Autogpt is the solution

It’s not though. This is a fundamental misunderstanding of LLMs.

We don’t just chain LLMs together to get out of this.

Plugins are the solution

Plugins are the solution, but this is what I am getting at.

Without plugins, and your dataset, ChatGPT is just winging it in a lot of areas, and it is fooling a lot of people into thinking it is doing something when it is not.

That will continue until a plugin can provide the functionality.

Then people are fooling other people by selling things that this thing only emulates doing.

I only point out what I can see in the SEO realm, who knows what’s happening in other industries?

- Without a keyword research dataset from Ahrefs or Semrush, Google or Bing (?), Chatgpt is winging keyword research

- Without a Grammarly plugin, ChatGPT is winging it when it comes to spelling and grammar

- Without Wolfram Alpha, it isn’t doing maths

So, it’s got the potential to be a great chat system for communicating with other systems to do particular tasks. I get that, but with the level of what seems the same as deception when querying its output in some areas, it gets you wondering if the fundamental problem will be dealt with when it is restricted to your data set and system.

Yes, ChatGPT can make you more productive

ChatGPT is a great time saver for doing mundane chat-related tasks in specific circumstances. I’ve got it to produce the type of stuff I wanted, but it really is more like hacking something together in a programming manner than any artificial intelligence. The truth is that most of the processes ChatGPT emulates can already be done quicker in the dedicated tools that actually perform them.

It is a great tool for many other tasks, mostly related to chatting, unsurprisingly.

I will go into these in the future.

Choose your own adventure

I asked Chatgpt what it thought of this article:

QUOTE: “From the AI’s perspective, I can affirm that this analysis is accurate. AI models like myself (ChatGPT) indeed function based on patterns and probabilities, rather than understanding in the human sense. Consequently, our performance can vary based on the complexity of the task and the quality of the input provided. Hence, we can be incredibly useful tools, but we can’t entirely replace human expertise, creativity, and judgement.”

But again, the AI doesn’t have a perspective.

This is all a simulation, of course, and a good one.

Or rather, more like emulation.

A world of imagination

If you let it, Chatgpt will take you to a world of imagination on many processes it simply does not do, yet.

LLMs like ChatGPT really are like an “omniscient, eager-to-please intern who sometimes lies to you”: