If you are a website manager, SEO, or website developer looking for advice on how to optimise internal links on your website within Google webmaster guidelines, this article is for you. This article discusses optimising internal links on your website to maximise indexation and ranking in Google.

The author has 20 years of experience as a practicing professional SEO (search engine optimisation).

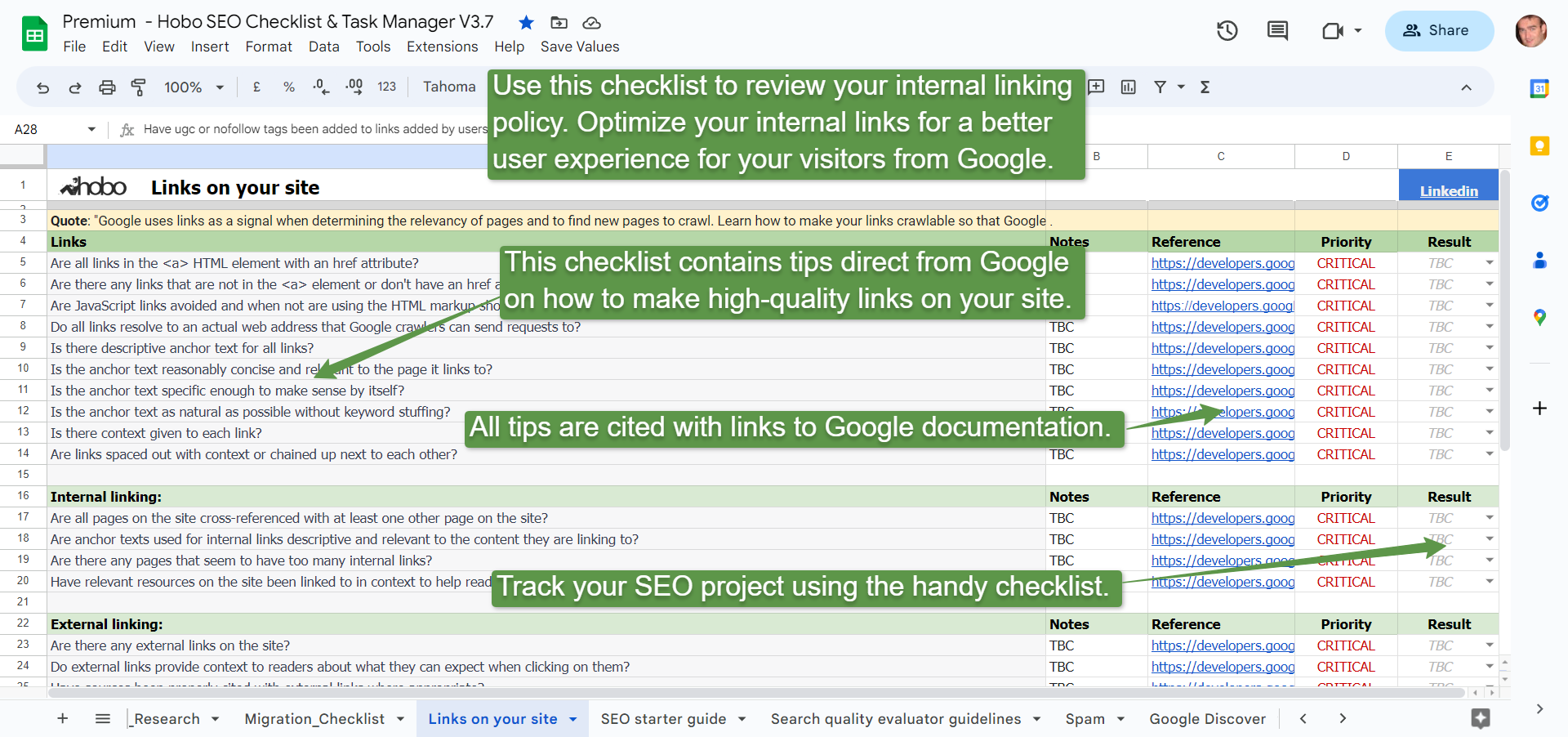

The SEO checklist is also available as a spreadsheet free on Google Sheets, or alternatively, you can subscribe to Hobo SEO Tips and get your checklist as a Microsoft Excel spreadsheet.

QUOTE: “I’d forget everything you read about “link juice.” It’s very likely all obsolete, wrong, and/or misleading. Instead, build a website that works well for your users.” John Mueller, Google 2020

Here is an internal links SEO checklist:

- Are all links in the <a> HTML element with an href attribute?

- Are there any links that are not in the <a> element or don’t have an href attribute?

- Do all links resolve to an actual web address that Google crawlers can send requests to?

- Is there descriptive anchor text for all links?

- Is the anchor text reasonably concise and relevant to the page it links to?

- Is the anchor text specific enough to make sense by itself?

- Is the anchor text as natural as possible without keyword stuffing?

- Is there context given to each link?

- Are links spaced out with context or chained up next to each other?

- Are all pages on the site cross-referenced with at least one other page on the site?

- Are anchor texts used for internal links descriptive and relevant to the content they are linking to?

- Are there any pages that seem to have too many internal links?

- Have relevant resources on the site been linked to in context to help readers understand a given page?

- Are there any external links on the site?

- Do external links provide context to readers about what they can expect when clicking on them?

- Have sources been properly cited with external links where appropriate?

- Have “nofollow” rel values been added to links to sources that are not trusted?

- Have “sponsored” or “nofollow” rel values been added to links that were paid for?

- Have “ugc” or “nofollow” rel values been added to links added by users on the site?

- Are JavaScript links avoided and, where they are used, do they follow the HTML markup shown in the example in Google’s documentation?

- Do all pages on your site link to other pages on your site?

- Is the navigation simple, consistent, and clear throughout the website?

- Does the website use rel=nofollow only in rare circumstances on internal links?

- Check there are no broken links on the pages

- If you believe in ‘first link priority’, you are going to have to take it into account when creating links.

- Keep anchor text links within a limit of 16 keywords max and frankly, that is too long.
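
The first few checks in this list can be partly automated. Here is a minimal Python sketch, using only the standard library, that flags links with no href, links that do not resolve to a real web address, and links with no anchor text. The names `AnchorAudit` and `audit` are my own for illustration, and the rules are a rough assumption about what counts as “crawlable”, not a copy of how Google decides.

```python
from html.parser import HTMLParser


class AnchorAudit(HTMLParser):
    """Collects every <a> element on a page, in document order."""

    def __init__(self):
        super().__init__()
        self.links = []      # one dict per <a> element
        self._open = None    # the <a> currently being read, if any

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._open = {"href": dict(attrs).get("href"), "text": ""}
        elif tag == "img" and self._open is not None:
            # For image links, the alt attribute stands in for anchor text.
            self._open["text"] += dict(attrs).get("alt") or ""

    def handle_data(self, data):
        if self._open is not None:
            self._open["text"] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._open is not None:
            self._open["text"] = " ".join(self._open["text"].split())
            self.links.append(self._open)
            self._open = None


def audit(html):
    """Return a list of (problem, detail) tuples for the links in `html`."""
    parser = AnchorAudit()
    parser.feed(html)
    parser.close()
    problems = []
    for link in parser.links:
        href = (link["href"] or "").strip()
        if not href:
            problems.append(("missing href", link["text"]))
        elif href.lower().startswith("javascript:") or href == "#":
            problems.append(("not a crawlable URL", link["text"]))
        if not link["text"]:
            problems.append(("empty anchor text", href))
    return problems
```

Feed it the HTML of a page and review whatever it returns by hand; an empty list only means none of these three basic problems were found.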

It is clear that internal links have value to Google for crawling, indexing, and context:

QUOTE: “How important is the anchor text for internal links? Should that be keyword rich? Is it a ranking signal? We do use internal links to better understand the context of content on your site so if we see a link that’s saying like red car is pointing to a page about red cars that helps us to better understand that but it’s not something that you need to keyword stuff in any way because what generally happens when people start kind of focusing too much on the internal links is that they have a collection of internal links that all say have like four or five words in them and then suddenly when we look at that page we see this big collection of links on the page and essentially that’s also text on a page so it’s looking like keyword stuff text so I try to just link naturally within your website and make sure that you kind of have that organic structure that gives us a little bit of context but not that your keyword stuffing every every anchor text there.” John Mueller, Google 2015

How to do ‘internal link building’

Whereas link building is the art of getting other websites to link to your website, internal link building is the age-old art of getting pages crawled and indexed by Google.

It is the art of spreading real Pagerank about a site and also naturally emphasising important content on your site in a way that actually has a positive, contextual SEO benefit for the ranking of specific keyword phrases in Google SERPs (Search Engine Results Pages).

There are more internal linking SEO tips in the free SEO checklist in Google Sheets.

QUOTE: “Most links do provide a bit of additional context through their anchor text. At least they should, right?” John Mueller, Google 2017

External backlinks to your site are far more powerful than internals within it, but internal links have their use too.

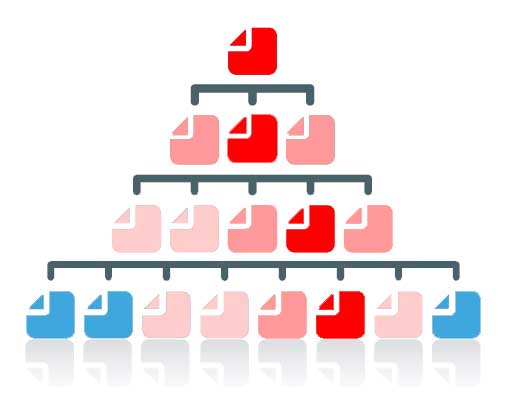

Traditionally, one of the most important things you could do on a website to highlight your important content was to link to important pages often, especially from important pages on your site (like the homepage, for instance).

QUOTE: “If you have pages that you think are important on your site don’t bury them 15 links deep within your site and I’m not talking about directory length I’m talking about actual you have to click through 15 links to find that page if there’s a page that’s important or that has great profit margins or converts really – well – escalate that put a link to that page from your root page that’s the sort of thing where it can make a lot of sense.” Matt Cutts, Google 2011

Highlighting important pages in your site structure has always been important to Google from a CRAWLING, INDEXING and RANKING point of view. It is also important for website users from a USABILITY, USER EXPERIENCE and CONVERSION RATE perspective.

Most modern CMS take the headache out of getting your pages crawled and indexed. Worrying about your internal navigation structure is probably unnecessary; unless it is REALLY bad, it is not going to cause you major problems from an indexation point of view.

There are other considerations though apart from Google finding your pages.

QUOTE: “So what will happen is, we’ll see the home page is really important, things linked from the home page are generally pretty important as well. And then… as it moves away from the home page we’ll think probably this is less critical. That pages linked directly from the home page are important is fairly well known but it’s worth repeating. In a well organized website the major category pages and any other important pages are going to be linked from the home page.” John Mueller, Google 2020

I still essentially use the methodology I have laid down on this page, but things have changed since I first started practising Google SEO and began building internal links to pages almost 20 years ago.

The important thing is to link to important pages often.

Google has said it doesn’t matter where the links are on your page; Googlebot will see them:

QUOTE: “So position on a page for internal links is pretty much irrelevant from our point of view. We crawl, we use these mostly for crawling within a website, for understanding the context of individual pages within a website. So if it is in the header or the footer or within the primary content, it’s totally more up to you than anything SEO wise that I would worry about.” John Mueller, Google 2017

As an aside, that statement on its own does not sit nicely with some patents I’ve read, where link placement does seem to matter in some instances.

When it comes to internal linking on your website we do know:

- where you place links on a page is important for users

- which pages you link to on your website is important for users

- how you link to internal pages is important for users

- why you link to internal pages is important for users

Internal linking is important to users, at least, and evidently it is important to Google, too. It is not a straightforward challenge to deal with optimally.

I would apply the advice on this page mainly when linking information-type articles to other information-type articles on your website.

Google has lots of patents related to links and anchor text:

QUOTE: “Google crawls the web and ranks pages. Where a page ranks in Google is down to how Google rates the page. There are hundreds of ranking signals SEO think we know about from various sources.” Shaun Anderson, Hobo 2020

Ensure every page on your site is linked to from another

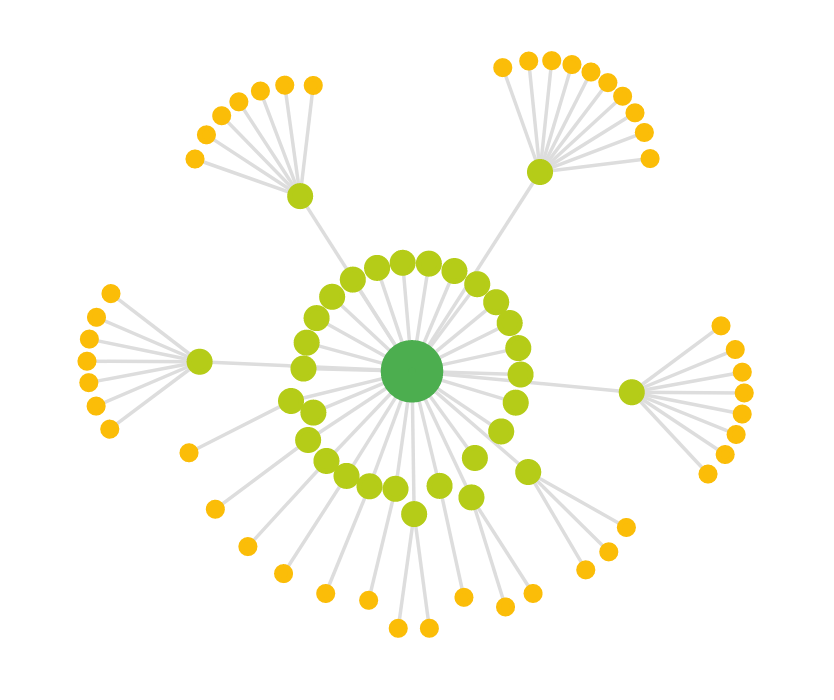

By making sure you link to other relevant pages from the pages on your site, you spread Pagerank (or link equity) throughout the site, and each individual link can provide even more context and relevant information to Google (which can only be of use for search engine optimisation).

QUOTE: “Assigning relevance of one web page to other web pages could be based upon distance of clicks between the pages and/or certain features in the content of anchor text or URLs. For example, if one page links to another with the word “contact” or the word “about”, and the page being linked to includes an address, that address location might be considered relevant to the page doing that linking.” 12 Google Link Analysis Methods That Might Have Changed – Bill Slawski, SEO By The Sea, 2012

A home page is where link equity seemed to ‘pool’ (from the deprecated Toolbar PageRank point of view) and this has since been confirmed by Google:

QUOTE: “home pages” are where “we forward the PageRank within your website”. John Mueller, Google 2014

How you build internal links on your site today is going to depend on how large your site is and what type of site it is. Whichever it is – I would keep it simple.

I would err on the safe side these days. Keep internal linking simple and easy to understand.

Optimising internal links, says John Mueller in a webmaster hangout, is:

QUOTE: “not something I’d see as being overly problematic if this is done in a reasonable way and that you’re not linking every keyword to a different page on your site”. John Mueller, Google

As mentioned previously, this will depend on the size and complexity of your website. A very large site should keep things as simple as possible and avoid any keyword stuffing footprint.

Any site can get the most out of internal link building by descriptively and accurately linking to canonical pages that are very high quality. The more accurately the anchor text describes the page linked to, the better it is going to be in the long run. That accuracy can be to an exact match keyword phrase or a long-tail keyword variation of it (if you want to know more see my article on keyword research for beginners – that link is in itself an example of a long-tail variation of the primary head or medium term ‘keyword research’).

I silo any relevance or trust mainly through links in a flat architecture in text content and helpful secondary menu systems and only between pages that are relevant in context to one another.

I don’t worry about perfect Pagerank siloing techniques.

On this site, I like to build in-depth content pieces that rank for a lot of long-tail phrases. These days, I usually would not want those linked from every page on a site – because this practice negates the opportunities some internal link building provides. I prefer to link to pages in context; that is, within page text.

There’s no set method I find works for every site, other than to link to related internal pages often and where appropriate. NOTE: You should also take care to manage redirects on the site, and minimise the number of internal 301 redirects you employ; they can slow your pages down (and website speed is a ranking factor) and impact your SEO in the long-term in other areas.
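
To find internal links that point at redirecting URLs, you can follow each link through your redirect map and see where it ends up. This is a minimal Python sketch that assumes you already have the redirects from a crawl or from your server config; the `redirects` mapping and the `final_destination` name are hypothetical.

```python
def final_destination(url, redirects, max_hops=10):
    """Follow `url` through a {from_url: to_url} redirect map.

    Returns (final_url, hops). Stops after `max_hops` or when a loop is detected.
    """
    hops = 0
    seen = {url}
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:      # redirect loop: stop rather than spin forever
            break
        seen.add(url)
    return url, hops


# Example redirect map: /old redirects twice before reaching a live page.
redirects = {"/old": "/newer", "/newer": "/newest"}
final_url, hops = final_destination("/old", redirects)
```

Any internal link with `hops` greater than zero is a candidate for updating so that it points straight at the final URL.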

Fix broken links on your website

Websites ‘Lacking Care and Maintenance’ Are Rated ‘Low Quality’ by Google.

QUOTE: “Sometimes a website may seem a little neglected: links may be broken, images may not load, and content may feel stale or out-dated. If the website feels inadequately updated and inadequately maintained for its purpose, the Low rating is probably warranted.” Google Quality Evaluator Guidelines, 2017

The simplest piece of advice I ever read about creating a website / optimising a website was over a decade ago:

QUOTE: “make sure all your pages link to at least one other in your site”

This advice is still sound today.
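
If you can export your internal links from a crawler, checking this rule takes a few lines. The Python sketch below assumes a simple mapping of each page to the pages it links to (the `link_graph` shape and the function name are my own for illustration). It reports pages that nothing else links to and pages that link to nothing else.

```python
def find_unlinked_pages(link_graph, home="/"):
    """Return (orphans, dead_ends) for a {page: [pages it links to]} mapping.

    orphans   - pages no other page links to (the home page is excluded)
    dead_ends - pages that link to no other page
    """
    pages = set(link_graph)
    linked_to = set()
    for source, targets in link_graph.items():
        for target in targets:
            if target != source:   # a self-link does not connect the page to the site
                linked_to.add(target)
    orphans = sorted(pages - linked_to - {home})
    dead_ends = sorted(p for p in pages if not (set(link_graph[p]) - {p}))
    return orphans, dead_ends


site = {"/": ["/a", "/b"], "/a": ["/"], "/b": [], "/c": ["/"]}
orphans, dead_ends = find_unlinked_pages(site)
# "/c" has no inbound links and "/b" has no outbound links
```

Note that a crawler which only follows links will never discover a true orphan, so the list of pages needs to come from somewhere else, such as your CMS or XML sitemap.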

Check your pages for broken links. You can use this free SEO technical audit checklist to help you. It lists the tools you use to find broken links, for instance.

Broken links are a waste of link power and could hurt your site, drastically in some cases, if a poor user experience is identified by Google. Google is a link-based search engine – if your links are broken, you are missing out on the benefit you would get if they were not broken.

Saying that – fixing broken links is NOT a first-order rankings bonus – it is a usability issue, first and foremost.

QUOTE: “The web changes, sometimes old links break. Googlebot isn’t going to lose sleep over broken links. If you find things like this, I’d fix it primarily for your users, so that they’re able to use your site completely. I wouldn’t treat this as something that you’d need to do for SEO purposes on your site, it’s really more like other regular maintenance that you might do for your users.” John Mueller, Google 2014
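
A broken-link check boils down to resolving every link against the page it sits on and looking at the status code that comes back. This is a minimal Python sketch of that logic. How you fetch the status is left to you: `get_status` is a hypothetical function you supply (a crawler export or an HTTP client would both do), and treating every status of 400 or above as “broken” is my own simplification.

```python
from urllib.parse import urljoin


def find_broken_links(pages, get_status):
    """pages: {page_url: [hrefs found on that page]}
    get_status: callable taking an absolute URL and returning its HTTP status.

    Returns a list of (page_url, target_url, status) for every broken link.
    """
    broken = []
    checked = {}                                 # cache so each URL is requested once
    for page_url, hrefs in pages.items():
        for href in hrefs:
            target = urljoin(page_url, href)     # resolve relative links against the page
            if target not in checked:
                checked[target] = get_status(target)
            if checked[target] >= 400:
                broken.append((page_url, target, checked[target]))
    return broken
```

Because the result records the page each broken link was found on, you can go straight to the page that needs fixing.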

Make a navigation structure Google can crawl

Just because Google can find your pages more easily doesn’t mean you should neglect to build Googlebot a coherent architecture with which it can crawl and find all the pages on your website.

Pinging Google blog search via RSS (still my favourite way of getting blog posts into Google results fast) and XML sitemaps may help Google discover your pages, find updated content and include them in search results, but they still aren’t the best way at all of helping Google determine which of your pages to KEEP INDEXED or EMPHASISE or RANK or HELP OTHER PAGES TO RANK (e.g. it will not help Google work out the relative importance of a page compared to other pages on a site, or on the web).

While XML sitemaps go some way to address this, prioritisation in sitemaps does NOT affect how your pages are compared to pages on other sites – it only lets the search engines know which pages you deem most important on your own site. I certainly wouldn’t ever just rely on XML sitemaps like that. The old ways work just as they always have, and often the old advice is still the best, especially for SEO.

XML sitemaps are INCLUSIVE, not EXCLUSIVE, in that Google will spider ANY URL it finds on your website – and your website structure can produce a LOT more URLs than you have actual products or pages in your XML sitemap (something else Google doesn’t like).

Keeping your pages in Google and getting them to rank has long been assured by simple internal linking practices.

Traditionally, every page needed to be linked to other pages for Pagerank (and other ranking benefits) to flow to other pages – that is traditional, and I think accepted theory, on the question of link equity.

I still think about link equity today – it is still important.

Some sites can still have short circuits – e.g. internal link equity is prevented from filtering to other pages because Google cannot ‘see’ or ‘crawl’ a fancy menu system you’re using – or Googlebot cannot get past content that robots.txt blocks it from rendering, crawling and rating.

I still rely on the ‘newer’ protocols like XML sitemaps for discovery purposes, and the old tried and trusted way of building a site with an intelligent navigation system to get it ranking properly over time.

QUOTE: “Make sure Google can crawl your website, index and rank all your primary pages by only serving Googlebot high-quality, user friendly and fast loading pages to index.” Shaun Anderson, Hobo 2020

Make a user-friendly navigation menu

Many years ago, I answered in the Google Webmaster Forum a question about how many links in a drop-down are best:

The question was:

QUOTE: “Building a new site with over 5000 product pages. Trying to get visitors to a product page directly from the homepage. Would prefer to use a two-level drop-down on homepage containing 10 brands and 5K products, but I’m worried a huge source code will kick me in the pants. Also, I have no idea how search engines treat javascript links that can be read in HTML. Nervous about looking like a link farm.”

I answered:

QUOTE: “I’d invest time in a solid structure – don’t go for a javascript menu it’s too cumbersome for users. Sometimes google can read these sometimes it can’t – it depends on how the menu is constructed. You also have to remember if google can read it you are going to have a big template core code (boilerplate) on each and every page vying alongside flimsy product information – making it harder for google to instantly calculate what the individual products page is supposed to rank for.

I would go for a much reduced simple sitewide navigation in the menu array,

Home page links to categories > Categories link to products > Products link to related products

when you go to category links the links relevant in that category appear in the menu. Don’t have all that pop down in a dropdown – not good for users at all. Keep code and page load time down to a minimum…” Shaun Anderson

QUOTE: “JohnMu (Google Employee) + 2 other people say this answers the question:” Google Webmaster Forums

I thought that, seeing as somebody from Google agreed, it was worth posting on my own blog.

The most important thing for me when designing website navigation systems is:

- Make it easy for the user to navigate

- Make it easy for Google to get to your content and index your pages

In terms of navigation from a landing page (all your pages are potential landing pages), what do you think the benefits are of giving people 5000 navigation options?

Surely if the page meets their requirements, all you need is two buttons: Home and “Buy Now!”. OK, a few more, but you get what I mean, I hope.

Less is more, usually.

Google says:

QUOTE: “Limit the number of links on a page to a reasonable number (a few thousand at most).” Google Webmaster Guidelines, 2018

There are benefits to a mega-menu:

QUOTE: “Mega menus may improve the navigability of your site. (Of course, it’s always best to test.) By helping users find more, they’ll help you sell more.” Jakob Nielsen, NN Group, 2017

and there are drawbacks to mega-menus:

QUOTE: “In the bigger scheme of things, the usability problems mentioned here aren’t too serious. They’ll reduce site use by a few percent, but they won’t destroy anyone’s business metrics. But still: why degrade the user experience at all, when the correct design is as easy to implement as the flawed one?” Jakob Nielsen, NN Group, 2010

Once you realise getting your product pages indexed is the key, don’t go for a mega-menu just because you think this is a quick way to solve your indexing problem.

With a site structure, it’s all about getting your content crawled and indexed. That’s the priority.

Use an HTML sitemap

These are simple and easy for Google to crawl, and they help you at least ensure Google can find all your pages and spread Pagerank:

QUOTE: “Ensure that all pages on the site can be reached by a link from another findable page. The referring link should include either text or, for images, an alt attribute, that is relevant to the target page.” Google Webmaster Guidelines, 2018

A basic HTML sitemap is an old friend, and Google actually does say in its guidelines for Webmasters that you should include a sitemap on your site – for Googlebot and users – although this can naturally get a bit unwieldy for sites with a LOT of pages:

QUOTE: “Provide a sitemap file with links that point to the important pages on your site. Also provide a page with a human-readable list of links to these pages (sometimes called a site index or site map page).” Google Webmaster Guidelines, 2018

When it comes to internal links, the important thing is that you “ensure that all pages on the site can be reached by a link from another findable page” and then you can think about ‘escalating’ the priority of pages via internal links.

QUOTE: “I’d forget everything you read about “link juice.” It’s very likely all obsolete, wrong, and/or misleading. Instead, build a website that works well for your users.” John Mueller, Google 2020

You can create dynamic drop-down menus on your site that meet accessibility requirements and are SEO-friendly and then link your pages together in a Google-friendly way.

Just be sure to employ a system that uses CSS and JavaScript (instead of pure JavaScript and HTML tables) and unordered lists as a means of generating the fancy drop-down navigation on your website.

Then, if JavaScript is disabled, or the style sheet is removed, the lists that make up your navigation array collapse gracefully into a list of simple links. See here for more on JavaScript SEO.

Remember, with drop-down menus:

- Drop-down menus are generally fine but the JavaScript triggering them can cause some problems for search engines, users with screen readers, and screen magnifiers.

- A <noscript> alternative is necessary.

- The options offered in a drop-down should be repeated as text links on the same page, so use unordered lists with CSS to develop your menu.
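
To illustrate the “unordered lists of plain text links” point, here is a minimal Python sketch that renders a menu structure as nested lists of ordinary links, which CSS can then style as a drop-down. The `render_menu` name and the `(label, url, children)` tuple shape are assumptions of mine; your CMS or template system will have its own way of producing the same markup.

```python
from html import escape


def render_menu(items, indent=0):
    """Render [(label, url, children), ...] as a nested <ul> of plain <a href> links.

    `children` is a list in the same shape (empty for a leaf item).
    """
    pad = "  " * indent
    lines = [f"{pad}<ul>"]
    for label, url, children in items:
        link = f'<a href="{escape(url, quote=True)}">{escape(label)}</a>'
        if children:
            lines.append(f"{pad}  <li>{link}")
            lines.append(render_menu(children, indent + 2))
            lines.append(f"{pad}  </li>")
        else:
            lines.append(f"{pad}  <li>{link}</li>")
    lines.append(f"{pad}</ul>")
    return "\n".join(lines)


menu = [
    ("Home", "/", []),
    ("SEO", "/seo/", [("Internal links", "/seo/internal-links/", [])]),
]
print(render_menu(menu))
```

The output contains no script at all, so with JavaScript disabled or the style sheet removed it is still a list of simple links that users and crawlers can follow.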

Use a “Skip Navigation” link on large mega-menu systems

Add a skip navigation link that brings the reader straight down to the main content of the page if you have a large menu system on every page. This allows users to skip the navigation array and get immediately to the page content.

You won’t want this on your visually rich page, so some simple CSS will sort this out. You can hide it from visual browsers, but it will display perfectly in text and some speech browsers.

Generally, I don’t like mega-menu systems on websites and there are discussions online that too many options promote indecision. Whether you use a mega-menu is totally up to you – there are demonstrable pros and cons for both having a mega-menu and not.

Know the 3-Click Rule of website design

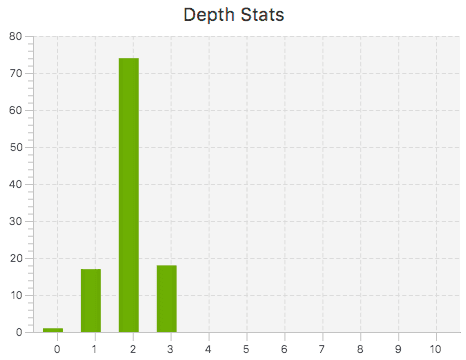

My own site follows the 3-click rule of web design, as you can see in this visual Crawl Map of my site (using Sitebulb):

RULE: “Don’t put important information on your site that is more than 3 clicks away from an entrance page” Zeldman

Many have written about the Three-Click Rule. For instance, Jeffrey Zeldman, the influential web designer, wrote about the Three-Click Rule in his popular book, “Taking Your Talent to the Web”. He writes that the Three-Click Rule is:

QUOTE: “based on the way people use the Web” and “the rule can help you create sites with intuitive, logical hierarchical structures”. Jeffrey Zeldman

On the surface, the Three-Click Rule makes sense. If users can’t find what they’re looking for within three clicks, they’re likely to get frustrated and leave the site.

However, usability experts have since studied the actual usefulness of the 3-click rule, generating real data that basically debunks the rule as gospel truth. It is evidently not always true that a visitor will fail to complete a task if it takes more than 3 clicks to complete.

The 3-click rule is the oldest pillar of accessible, usable website design, right there beside KISS (Keep It Simple Stupid).

The 3-click rule, at the very least, ensures you are always thinking about how users get to important parts of your site before they bounce.

QUOTE: “home pages” are where “we forward the PageRank within your website” and “depending on how your website is structured, if content is closer to the Home page, then we’ll probably crawl it a lot faster, because we think it’s more relevant” and “But it’s not something where I’d say you artificially need to move everything three clicks from your Homepage”. John Mueller, Google 2014
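
Click depth is simply the shortest number of clicks from the home page to a given page, which is a breadth-first search over your internal links. A minimal Python sketch, assuming the same kind of page-to-links mapping a crawler export can give you (the `link_graph` shape and function name are hypothetical):

```python
from collections import deque


def click_depths(link_graph, home="/"):
    """Return {page: minimum clicks from `home`} for a {page: [linked pages]} mapping.

    Pages that cannot be reached from `home` are left out of the result.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:           # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


site = {
    "/": ["/cat"],
    "/cat": ["/cat/p1", "/"],
    "/cat/p1": ["/cat/p1/spec"],
    "/cat/p1/spec": ["/deep"],
}
too_deep = [page for page, depth in click_depths(site).items() if depth > 3]
```

Important pages that show up in `too_deep` are candidates for a link from the home page or a category page, not a reason to restructure everything around exactly three clicks.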

This is the click depth of my content on this website (as discovered by Screaming Frog):

Keep navigation consistent

A key element of accessible website development is a clean, consistent navigation system coupled with a recognisable usable layout.

Don’t try and reinvent the wheel here. A simple, clean, consistent navigation system and page layout allow users to instantly find important information and to quickly find comfort in their new surroundings, especially if the visitor is completely new to your website.

Visitors don’t always land on your home page – every page on your website is a potential landing page.

Ensure when a visitor lands on any page, they are presented with simple options to go to important pages you want them to go to. Simple, clear calls to action encourage a user to visit specific pages. Remember too, that just because you have a lot of pages on your site, that does not mean you need a mega-menu. You do not need to give visitors the option to go to every page from their entry page. You do not need a massive drop-down menu either. Spend the time and invest in a simple site navigation menu and a solid site structure.

A traditional layout is excellent for accessible website design, especially for information sites.

Remember to use CSS for all elements of style, including layout and navigation.

QUOTE: “Presentation, content and navigation should be consistent throughout the website” Guidelines for UK Government websites – Illustrated handbook for Web management teams

Google has also mentioned consistency (e.g. even your 404 page should be consistent with regard to your normal page layouts).

There is one area, however, where ‘consistency’ might not be the most optimal generic advice and that is how you interlink pages using anchor text.

For instance, on a smaller site, is it better to link to any one page with the same anchor text, focusing all signals on one exact match keyword phrase, or is it best to add more contextual value to these links by mixing up how you manage internal links to a single page?

In short, instead of one internal link to a page saying “How To Optimise A Website Using Internal Links”, I could have 5 different links on 5 pages, all with unique anchor text, pointing to the one page:

- how to use internal links for SEO

- how to build internal links

- how to manage internal links

- how to optimise internal links

- how to SEO internal links

I think this provides a LOT of contextual value to a page and importantly it mixes it up:

QUOTE: “Each piece of duplication in your on-page SEO strategy is ***at best*** wasted opportunity. Worse yet, if you are aggressive with aligning your on page heading, your page title, and your internal + external link anchor text the page becomes more likely to get filtered out of the search results (which is quite common in some aggressive spaces).” Aaron Wall, 2009

From my tests, some sites will get more benefit out of mixing up as much as possible. If you do see the benefit of internal linking using a variation of anchor text, you will need to be aware of First Link Priority (I go into this below).
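
To see how varied your anchor text to each page actually is, you can group the anchor text by target URL. A minimal Python sketch, assuming you can export internal links as (source page, target page, anchor text) rows; the function name and the row shape are my own for illustration.

```python
from collections import defaultdict


def anchor_text_by_target(links):
    """links: iterable of (source_page, target_page, anchor_text) tuples.

    Returns {target_page: sorted list of distinct anchor texts pointing at it},
    comparing text case-insensitively and ignoring extra whitespace.
    """
    variants = defaultdict(set)
    for _source, target, text in links:
        variants[target].add(" ".join(text.lower().split()))
    return {target: sorted(texts) for target, texts in variants.items()}


links = [
    ("/a", "/guide", "How to build internal links"),
    ("/b", "/guide", "how to  build internal links"),
    ("/c", "/guide", "how to manage internal links"),
]
report = anchor_text_by_target(links)
```

A target with a single entry is linked with the same anchor text everywhere; whether that is a problem on your site is a judgement call this sketch does not make for you.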

First link priority – Do multiple links from one page to another count?

Google commented on this in a recent hangout:

QUOTE: “Q: If I have two internal links on the same page and they’re going through the same destination page but with different anchor text how does Google treat that so from our side? A: This isn’t something that we have defined or say it’s always like this always like the first link always the last link always an average of the links there is something like that but rather that’s something that our algorithms might choose to do one way or the other so recommendation there would be not to worry too much about this if you have different links going to the same page that’s completely normal that’s something that we have to deal with we have to understand the anchor text to better understand the context of that link and that’s that’s completely normal so that’s not something I kind of worry about there I know some people do SEO experiments and try to figure this out and kind of work out Oh Google currently does it like this but from our point of view can change and it’s not something that we have so even if you manage to figure out how we currently do it today then that’s not necessarily how we’ll do tomorrow or how it’s always it’s across all websites.” John Mueller, Google 2018

First link priority has been long discussed by SEO geeks. Matt Cutts of Google was asked in a video:

QUOTE: “Hi Matt. If we add more than one links from page A to page B, do we pass more PageRank juice and additional anchor text info? Also can you tell us if links from A to A count?”

At the time, he commented something like he ‘wasn’t going to get into anchor text flow’ (or, as some call it, First Link Priority) in this scenario, which is, actually, a much more interesting discussion.

QUOTE: “Both of those links would flow PageRank I’m not going to get into anchor text but both of those links would flow PageRank” Matt Cutts, Google 2011

But the silence on anchor text and priority – or what counts and what doesn’t, is, perhaps, confirmation that Google has some sort of ‘link priority’ when spidering multiple links to a page from the same page and assigning relevance or ranking scores.

For example (and I am talking about internal links here): what if you took a page and placed two links on it, both going to the same page? OK, hardly scientific, but you should get the idea. Will Google only ‘count’ the first link? Or will it read the anchor text of both links and give the page the benefit of the text in both links, especially if the anchor text is different in both links? Will Google ignore the second link? What is interesting to me is that knowing this leaves you with a question. If your navigation array already links to your main pages, perhaps your links in content are being ignored, or at least, not valued.

I think links in the body text are invaluable.

I think as the years go by – we’re supposed to forget how Google worked under the hood of all that fancy new GUI.

The simple answer is to expect ONE link – the first link – out of multiple links on a single page pointing at one other page – to pass anchor text value. Follow that advice with your most important key phrases in at least the first link when creating multiple links and you don’t need to know about first link priority.
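
If you want to plan for first link priority, it helps to see which anchor text comes first in the source code for each target on a page. Here is a minimal Python sketch that lists the first link to each target and any repeats. It reads links in source order, which is my assumption about what “first” means here, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class _Anchors(HTMLParser):
    """Collects (href, anchor text) pairs in source order."""

    def __init__(self):
        super().__init__()
        self.found = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.found.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


def first_and_repeat_links(html, page_url):
    """Return (first, repeats): the first anchor text per target URL, and later duplicates."""
    parser = _Anchors()
    parser.feed(html)
    parser.close()
    first, repeats = {}, []
    for href, text in parser.found:
        # Resolve relative URLs and drop #fragments so the same page is counted once.
        target = urldefrag(urljoin(page_url, href)).url
        if target in first:
            repeats.append((target, text))
        else:
            first[target] = text
    return first, repeats
```

On a typical template the navigation menu comes first in the source, so the menu label is often the “first link” and the descriptive in-content link turns up in `repeats`.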

A quick SEO test I did a long time ago throws up some interesting questions today – but the changes over the years at Google since I did my test will have an impact on what is shown – and the fact is – the test environment was polluted long before now.

I still think about first link priority when creating links on a page.

Google says today:

QUOTE: “I know some people do SEO experiments and try to figure this out and kind of work out ‘Oh Google currently does it like this’ but from our point of view can change and it’s not something that we have so even if you manage to figure out how we currently do it today then that’s not necessarily how we’ll do tomorrow or how it’s always it’s across all websites.” John Mueller, Google 2018

The last time I tested ‘first link priority’ was a long time ago.

From this test, and the results on this site anyways, testing links internal to this site, it seems Google only counted the first link when it came to ranking the target page.

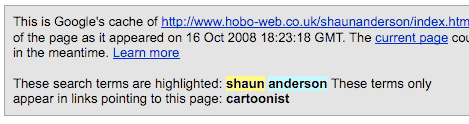

At the time I relied on the notification “These terms only appear in links pointing to this page” (when you click on the cache) that Google helpfully showed when the word isn’t on the page.

Google took that away, so now you can really only use the SERPs themselves and see if you can detect anchor text influence (which is often not obvious).

Of course, you could simply be sensible when interlinking your internal pages.

Does only the first link count on Google? Does the first or second anchor text link on a page count?

This has been one of the more interesting geek SEO discussions over the years.

All you can do is plan for first-link priority and leave Google to it.
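If you want to plan for first-link priority, it helps to know where a page links to the same target more than once. The following is a minimal sketch, not a model of how Google actually processes links: it uses Python's standard `html.parser` to list the anchor texts for each target in source order, so you can see which one comes first. The sample page and the `example.com` URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect (absolute_url, anchor_text) pairs in document order."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []      # finished links, in source order
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace inside the anchor text.
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def first_link_report(html, base_url):
    """Return targets linked more than once, with anchor texts in source order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    report = {}
    for url, text in parser.links:
        report.setdefault(url, []).append(text)
    return {url: texts for url, texts in report.items() if len(texts) > 1}


# Hypothetical page: the navigation link comes before the in-content link.
page = """
<nav><a href="/seo-services/">Home</a></nav>
<p>Read about our <a href="/seo-services/">SEO services</a> and
<a href="/contact/">contact us</a>.</p>
"""
duplicates = first_link_report(page, "https://www.example.com/")
for url, texts in duplicates.items():
    print(url, "-> first anchor:", repr(texts[0]), "| later:", texts[1:])
```

In this example the first link to the target carries the anchor text "Home", so under the first-link assumption the more descriptive "SEO services" link further down the page may not contribute its anchor text.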

What is Anchor Text?


Definition: “Words, typically underlined on a web page that form a clickable link to another web page. Normally the cursor will change to a finger pointing if you hover over such a link.”

HTML code example:

<a href="https://www.hobo-web.co.uk/">This is anchor text!</a>

How to optimise anchor text

Use Descriptive Anchor Text – Don’t Use ‘Click Here’ as it provides no additional contextual information via the anchor text in the link.

QUOTE: “When calling the user to action, use brief but meaningful link text that: 1) provides some information when read out of context 2) explains what the link offers 3) doesn’t talk about mechanics and 4) is not a verb phrase” W3C

The accessibility consultants at the W3C advise “don’t say ‘click here’”, and professional SEOs recommend the same.

If you use link text like “go” or “click here,” those links will be meaningless in a list of links. Use descriptive text, rather than commands like “return” or “click here.”

For example, do not do this:

"To experience our exciting products, click here."

This is not descriptive for users, and you might be missing a chance to pass along keyword-rich anchor text votes to the site you’re linking to (useful for ranking better in Google, Yahoo and MSN for the keywords you want that site to rank for).

Instead, perhaps you should use:

"Learn more about our search engine optimisation products."

Assistive technologies inform the users that text is a link, either by changing pitch or voice or by prefacing or following the text with the word “link.”

So, don’t include a reference to the link such as:

"Use this link to experience our exciting services."

Instead, use something like:

"Check out our SEO services page to experience all of our exciting services."

In this way, the list of links on your page will make sense to someone who is using a talking browser or a screen reader.
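A rough way to catch this kind of anchor text is to compare each link's text against a short list of vague phrases. The sketch below is only an illustration: the phrase lists and the function name `is_vague_anchor` are my own assumptions, not an official W3C or Google list, and you would extend them for your own site.

```python
import re

# Hypothetical phrase lists for illustration; extend them to suit your site.
GENERIC_ANCHORS = {"click here", "here", "go", "return", "more", "read more", "this link"}
MECHANICS_PHRASES = ("click here", "this link")


def is_vague_anchor(text):
    """Return True if the anchor text gives no context when read on its own."""
    # Lowercase, replace punctuation with spaces and collapse whitespace.
    normalised = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    normalised = " ".join(normalised.split())
    if not normalised or normalised in GENERIC_ANCHORS:
        return True
    # Also flag longer anchors that talk about the mechanics of the link.
    padded = f" {normalised} "
    return any(f" {phrase} " in padded for phrase in MECHANICS_PHRASES)


examples = [
    "click here",
    "Use this link to experience our exciting services.",
    "Learn more about our search engine optimisation products.",
    "Check out our SEO services page to experience all of our exciting services.",
]
for anchor in examples:
    print("VAGUE" if is_vague_anchor(anchor) else "OK   ", "-", anchor)
```

The first two examples are flagged and the last two pass, which matches the do and don't examples above.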

NB – This rule applies in web design when naming text links on your page and in your copy. Of course, you can use ‘click here’ in images (as long as the ALT text gives a meaningful description to all users).

QUOTE: “One thing to think about with image links is if you don’t have an alt text for that then you don’t have any anchor text for that link. So I definitely make sure that your images have alt text so that we can use those for an anchor for links within your website. If you’re using image links for navigation make sure that there’s some kind of a fallback for usability reasons for users who can’t view the images.” John Mueller, Google 2017

If that wasn’t usable enough, Google ranks pages, in part, by keywords it finds in these text links, so it is worth making your text links (and image ALT text) relevant and descriptive.
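To find image links that would leave a link with no anchor text at all, you can check every link for either visible text or an image with non-empty ALT text. This is a minimal sketch using Python's standard `html.parser`; the class name `ImageLinkAuditor` and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ImageLinkAuditor(HTMLParser):
    """Record the href of every link that has neither text nor image ALT text."""

    def __init__(self):
        super().__init__()
        self.missing = []        # hrefs of links with no usable anchor text
        self._href = None        # href of the <a> currently open, if any
        self._has_text = False   # True once text or ALT text is seen in it

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self._href = attrs["href"]
            self._has_text = False
        elif tag == "img" and self._href is not None:
            # An image inside a link contributes its ALT text as anchor text.
            if (attrs.get("alt") or "").strip():
                self._has_text = True

    def handle_data(self, data):
        if self._href is not None and data.strip():
            self._has_text = True

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if not self._has_text:
                self.missing.append(self._href)
            self._href = None


# Hypothetical navigation markup: only the first link lacks any anchor text.
sample = """
<a href="/"><img src="logo.png"></a>
<a href="/seo/"><img src="seo.png" alt="SEO services"></a>
<a href="/blog/"><img src="blog.png" alt=""> Blog</a>
"""
auditor = ImageLinkAuditor()
auditor.feed(sample)
print("Links with no anchor text:", auditor.missing)
```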

You can use keyword mapping techniques to map important key phrases to important elements on important pages on your site (like internal links).

Keep anchor text concise and descriptive

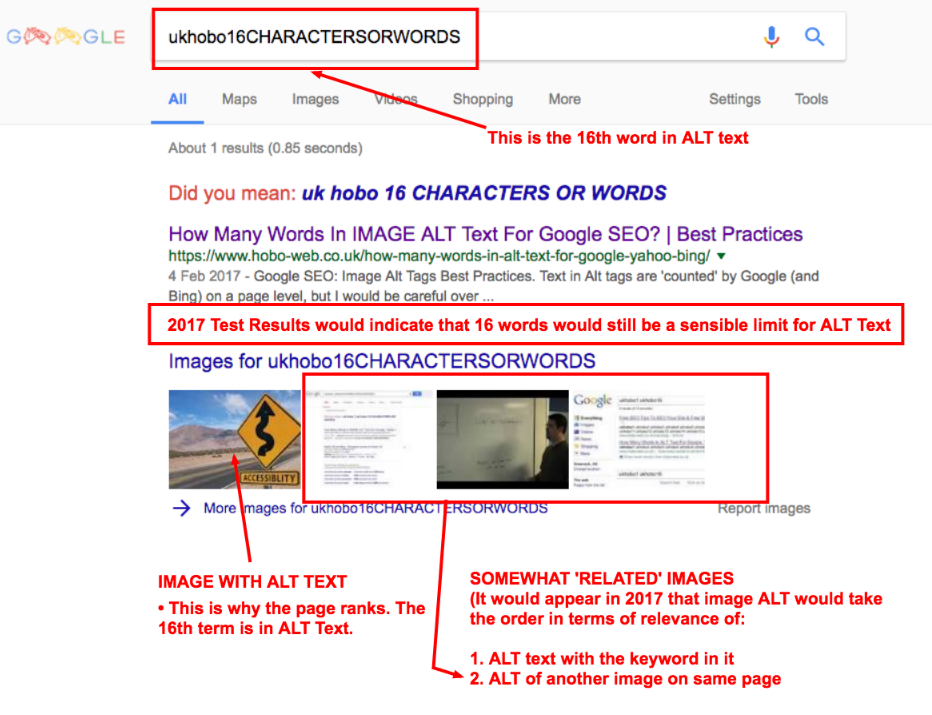

Google may only count the first 16 words in a string of anchor text, but this has changed at least once in the last decade. At one time it seemed Google counted only the first 8 words in the string, including ‘stop words’, and I think this could be demonstrated at the time.

I tested to see if there was a maximum limit of keywords Google will pass to another page through a text link. How many words will Google count in a backlink or internal link? Is there a best practice that can be hinted at?

My first qualitative tests were basic and flawed, but time and again other opportunities for observation indicated the maximum length of the text in a link was perhaps 8 words (my first assumption was a character limit, maybe 55 characters, i.e. not a word limit, but that was flawed).

Further observations at the time (described below) pointed to keeping the important keywords in the first EIGHT WORDS of any text link to make sure you were getting the maximum benefit. Later observations suggested the limit was sixteen words, although I would STILL keep important keywords in the first 8-12 words of a link for maximum benefit.

We know that keywords in anchor text have some contextual value to Google.

How many words will Google count as anchor text?

Google effectively tells you exactly what to do to optimise your website to meet their guidelines.

10-20 years ago when I was doing SEO, we had to test everything ourselves.

I thought it would be useful to include these tests and observations as anecdotal evidence.

Not many people link to you with very long anchor text links, so when people do, it is a chance to see how many keywords Google is counting as a keyword phrase in anchor text.

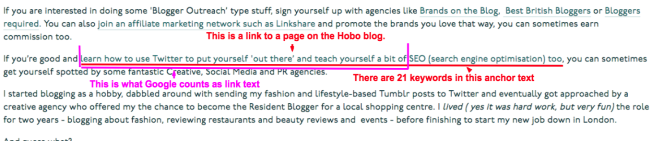

This blogger linked to me with an anchor text containing 21 keywords in the link:

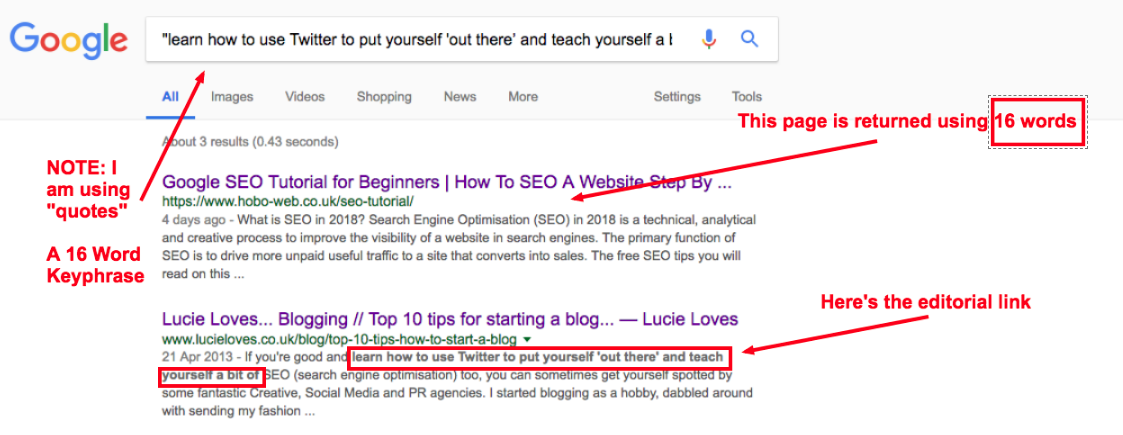

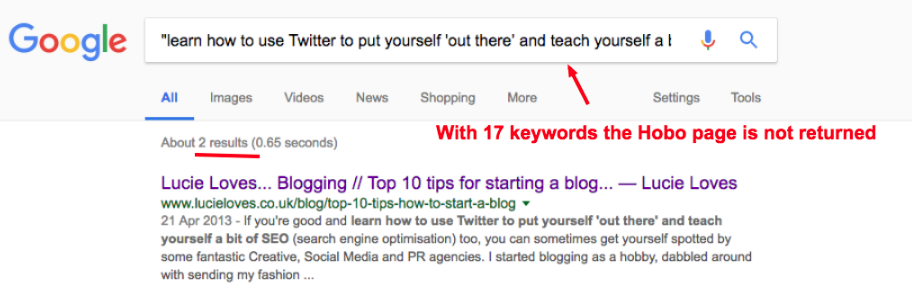

From this observation we can use Google to check a few things:

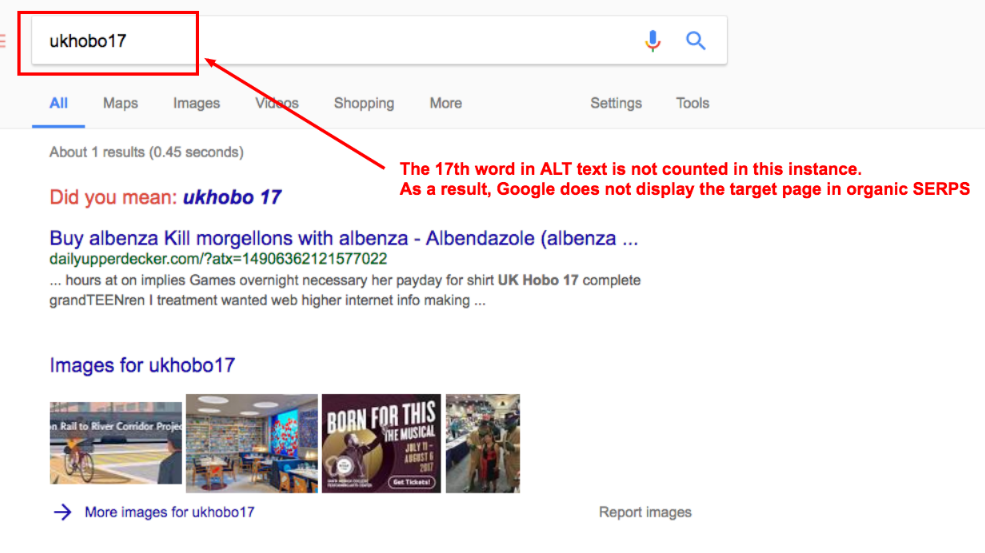

In Google.co.uk the answer is consistently 16 words in anchor text for maximum contextual value.

Any signal within the 16-word limit in anchor text can be detected, nothing above the 16-word threshold was detected:

Ironically, the 17th keyword in the anchor text was ‘SEO’, a term for which the Hobo page ranked on page one in the UK at the time. The page is therefore ‘relevant’ for the query on many other ranking factors, but this is an instance where the document is not selected for a query as a result of the imposed limit.

Google has since made all this much harder to detect, if it is even still the case today.

How about if the link is in ALT text?

Google limits keyword text in ALT attribute to 16 words too, so it may be reasonable to think it is the same.


See my article Alt text SEO checklist for more on optimising alternative text in images on your website.

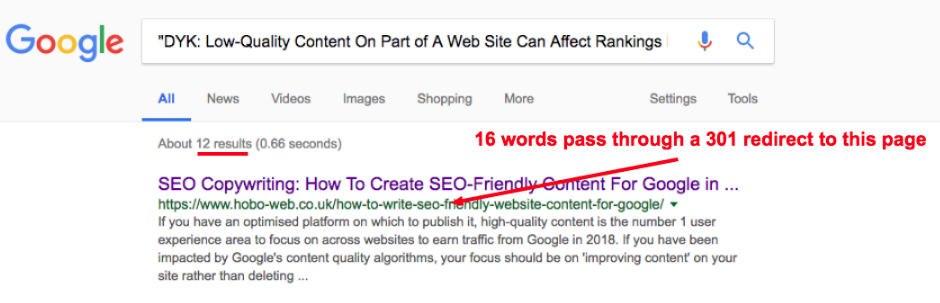

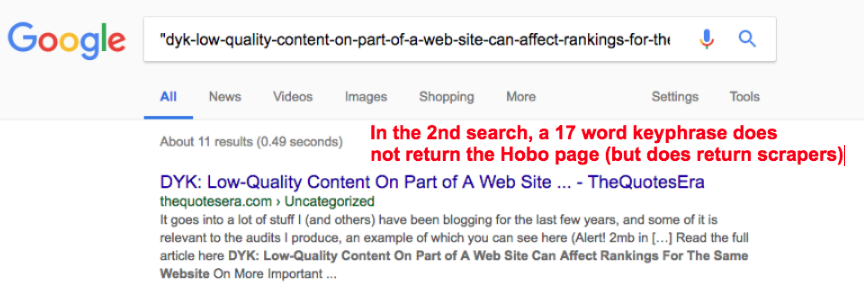

What if the link passes through a 301 redirect?

The same limits were in place.

In Google.co.uk the answer is consistently 16 words in anchor text for maximum contextual value:

Any signal within the 16-word limit in anchor text can be detected, nothing above the 16-word threshold was detected:

AGAIN – When you start testing this stuff, I believe Google starts messing back with you in some way. It’s extremely hard to identify such instances these days.

Do not link large blocks of text. Google appears to count only the first 16 words it finds in an anchor text link, and the rest of the keywords ‘evaporate’, leaving you with a 16-keyword maximum anchor text phrase in which to be descriptive and get the most out of links pointing to the page.

Something to think about.

QUOTE: “301 Redirects are an incredibly important and often overlooked area of search engine optimisation. Properly implemented 301 Redirects are THE cornerstone SEO consideration in any website migration project. You can use 301 redirects to redirect pages, sub-folders or even entire websites and preserve Google rankings that the old page, sub-folder or website(s) enjoyed.“ Shaun Anderson, Hobo 2020

Google tells you to keep anchor text concise.
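As a quick illustration of the 16-word observation above, the sketch below splits an anchor text into the words inside the limit and the words beyond it. The limit of 16 is only the figure observed in these old tests, not a documented Google value, and the function name `split_anchor_text` is my own.

```python
ANCHOR_WORD_LIMIT = 16  # assumption: the figure observed in the tests above


def split_anchor_text(text, limit=ANCHOR_WORD_LIMIT):
    """Return (words within the limit, words beyond it) as two strings."""
    words = text.split()
    return " ".join(words[:limit]), " ".join(words[limit:])


# Hypothetical 21-word anchor text, numbered so the cut-off is easy to see.
anchor = " ".join(f"word{i}" for i in range(1, 22))
counted, ignored = split_anchor_text(anchor)
print("Within limit:", counted)
print("Beyond limit:", ignored)
```

Anything returned in the second string is text you should not rely on to carry important key phrases, which is one more reason to keep them near the start of the link.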

Beware of using plugins to automate ‘SEO-friendly’ internal links

I would avoid using most plugins to optimise your internal links. Yes, it’s a time-saver, but it can also look spammy.

Google has been known to frown upon such activities.

Case in point:

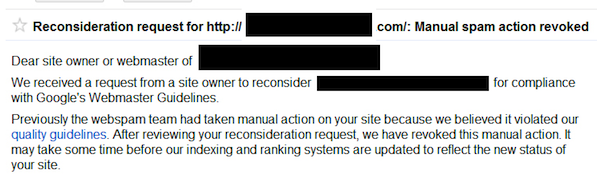

QUOTE: “As happened to a friend of a friend, whose rankings went deep into the well of despair shortly after installing and beginning to use SEO Smart Links. Since there hadn’t been any other changes to the site, he took a flyer on a reconsideration request and discovered that yes, indeed, there had been a penalty”:

“So, what had this site owner done to merit a successful reconsideration request? Simple – he removed the SEO Smart Links plugin and apologized for using it in the first place” Dan Thies, Marketers Braintrust, 2012

You get the most from such optimisations if they are manual and not automated, anyway.

Does Google count internal keyword-rich links to your home page?

The last time I tested this was a long time ago, and it was an age-old SEO trick that sometimes had some benefits.

A long time ago I manipulated the first link priority to the home page of a site for the site’s main keyword. That is, instead of using ‘home’ to link to my homepage, I linked to the home page with “insert keyword”. Soon afterward the site dropped in rankings for its main term from a pretty stable no. 6 to about page 3, and I couldn’t really identify any other cause.

Of course, it’s impossible to isolate if making this change was the reason for the drop, but let’s just say after that I thought twice about doing this sort of SEO ‘trick‘ in future on established sites (even though some of my other sites seemed to rank no problem with this technique).

I formulated a little experiment to see if anchor text links had any impact on an established home page (in as controlled a manner as possible).

Result:

Well, look at the graph below.

It did seem to have an impact.

It’s possible that linking to your home page with keyword-rich anchor text links (and that link being the ONLY link to the home page on that page) can have some positive impact on your rankings, but it’s also quite possible that attempting this might damage your rankings too!

Trying to play with first link priority is, for me, a bit too obvious and manipulative these days, so I don’t really bother much unless it is a brand new site or it looks natural, and even then not often. These kinds of results make me think twice about everything I do in SEO.

I shy away from overtly manipulative on-site SEO practices – and I suggest you do too.

How can I optimise anchor text across a website?

Optimising your anchor text across an entire site is actually a very difficult and time-consuming process. It takes a lot of effort to even analyse internal anchor text properly.

You can use tools like SEMRush, SiteBulb Crawler, DeepCrawl, Screaming Frog or SEO Powersuite Website Auditor to check the URL structure and other elements like anchor text on any site sitewide.

The advice would be to keep it simple but diverse.
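Most crawlers can export a list of internal links, and a few lines of Python are enough to summarise which anchor texts point at each page. The sketch below assumes a CSV export with `Source`, `Destination` and `Anchor` columns. Those column names and the sample rows are hypothetical, so adjust them to match whatever your tool actually produces.

```python
import csv
import io
from collections import Counter, defaultdict

# Hypothetical crawler export; real exports will have more columns and rows.
EXPORT = """Source,Destination,Anchor
https://www.example.com/,https://www.example.com/seo-services/,SEO services
https://www.example.com/blog/,https://www.example.com/seo-services/,SEO services
https://www.example.com/about/,https://www.example.com/seo-services/,our search engine optimisation services
https://www.example.com/,https://www.example.com/contact/,Contact
"""


def anchors_by_destination(csv_text):
    """Count how often each anchor text is used for each destination URL."""
    summary = defaultdict(Counter)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Normalise case and whitespace so near-identical anchors group together.
        anchor = " ".join(row["Anchor"].split()).lower()
        summary[row["Destination"]][anchor] += 1
    return summary


summary = anchors_by_destination(EXPORT)
for destination, counts in summary.items():
    print(destination)
    for anchor, n in counts.most_common():
        print(f"  {n} x {anchor!r}")
```

A page that only ever receives one anchor text, or dozens of keyword-heavy variants, stands out quickly in a summary like this.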

Does Google count keywords in anchor text in internal Links?

Many years ago I tested whether Google counts keywords in the URL to influence rankings for specific keywords, and wrote up how I investigated this.

In this article, I am looking at the value of an internal link and its impact on rankings in Google.

My observations from these tests (and my experience) include:

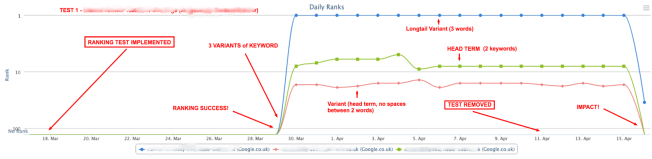

- witnessing the impact of removing contextual signals from the anchor text of a single internal link pointing to a target page (April 15 impact in the image below)

- watching as an irrelevant page on the same site takes the place in the rankings of the relevant target page when the signal is removed (19 April Impact)

- watching as the target page was again made to rank by re-introducing the contextual signal, this time to a single on-page element e.g. one instance of the keyword phrase in exact match form (May 5 Impact)

- potential evidence of a SERP Rollback @ May 19/20th

- potentially successfully measuring the impact of one ranking signal over another (a keyword phrase in one element versus another), which would seem to differ slightly from recent advice on Moz, for instance.

Will Google count keywords in internal anchor text links?

QUOTE: “we do use internal links to better understand the context of content of your sites” John Mueller, Google 2015

Essentially my tests revolve around ranking pages for keywords where the actual keyphrase is not present in exact match instances anywhere on the website, in internal links to the page, or on the target page itself.

The relevance signal (mentions of the exact match keyword) IS present in what I call the Redirect Zone – that is – there are backlinks and even exact match domains pointing at the target page but they pass through redirects to get to the final destination URL.

In the image below where it says “Ranking Test Implemented” I introduced one exact match internal anchor text link to the target page from another high-quality page on the site – thereby re-introducing the ‘signal’ for this exact match term on the target site (pointing at the target page).

Where it says ‘Test Removed’ in the image below, I removed the solitary internal anchor text link to the page, thereby, as I think about it, shortcutting the relevance signal again and leaving the only signal present in the ‘redirect zone’.

It is evident from the screenshot above that something happened to my rankings for that keyword phrase and long tail variants exactly at the same time as my tests were implemented to influence them.

Over recent years, it has been difficult for me, at least, to pin down with any real confidence anchor text influence from internal pages on an aged domain. Too much is going on at the same time, and most of it is out of an observer’s control.

I’ve also always presumed Google would look at too much of this sort of onsite SEO activity as attempted manipulation if deployed improperly or quickly, so I have kind of just avoided this kind of manipulation and focused on improving individual page quality ratings.

TEST RESULTS

- It seems to me that, YES, Google does look at keyword-rich internal anchor text to provide context and relevance signal, on some level, for some queries, at least.

- Where the internal anchor text pointing to a page is the only mention of the target keyword phrase on the site (as my test indicates), it only takes ONE internal anchor text link (from another internal page) to provide the signal required to have a NOTICEABLE influence on specific keyword phrase rankings (and so ‘relevance’).

——————————————————-

Test Results: Removing Test Focus Keyword Phrase from Internal Links and putting the keyword phrase IN AN ALT TEXT ELEMENT on the page

To recap in my testing: I am seeing if I can get a page to rank by introducing and removing individual ranking signals.

Up to now, if the signal is not present, the page does not rank at all for the target keyword phrase.

I showed how having a keyword in the URL impacts rankings, and how having the exact keyword phrase in ONE internal anchor text to the target page provides said signal.

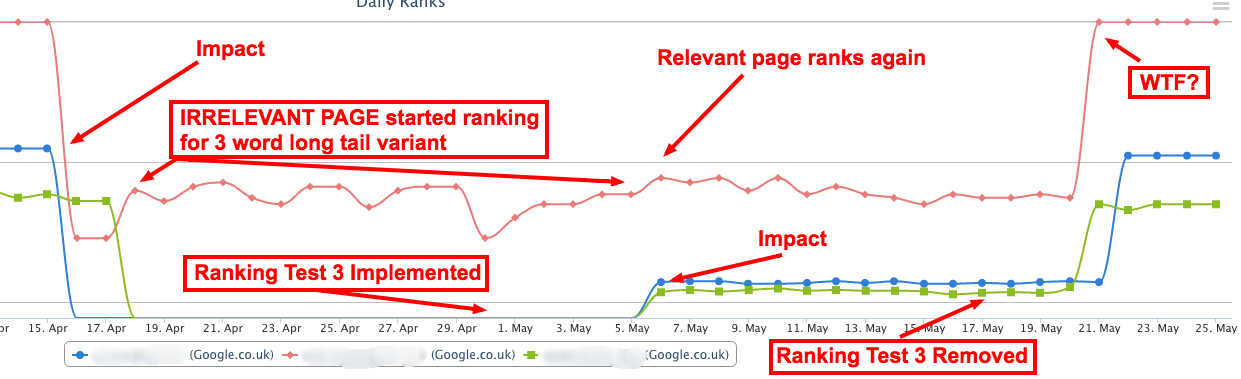

Ranking WEIRDNESS 1: Observing an ‘irrelevant’ page on the same site rank when the ranking signal is ‘shortcutted’.

The graph above illustrates that when I removed the signal (removed the keyword from internal anchor text) there WAS a visible impact on rankings for the specific keyword phrase – rankings disintegrated again.

BUT – THIS TIME – an irrelevant page on the site started ranking for a long tail variant of the target keyword phrase during the period when there was no signal present at all in the site (apart from the underlying redirect zone).

This was true UNTIL I implemented a further ranking test by optimising ANOTHER ELEMENT actually on the page this time. This re-introduced the test focus keyword phrase (or HEAD TERM, as I have it in the first image on this page) to the page, the first time in a long while that the keyword phrase was present on the actual page (in an element).

In the most recent tests I did, I could not even detect the impact of internal links in the same way. Not in the same format on the page, at least.

I think it’s useful to keep these test results up for others to see, but I keep internal linking very simple these days.

Do not keyword stuff internal links.

FAQ

What are internal links?

Internal links are links on your website that point to other pages on your website, rather than external sites.

Why is optimising internal links important?

Optimising internal links can help with the following:

- Improved website navigation and user experience.

- Increased crawling and indexability by search engines.

- Enhanced relevance and authority of internal pages.

- Improved distribution of link equity and PageRank.

- Boosted search engine rankings and organic traffic.

How can I optimise internal links?

Here are some tips for optimising internal links:

- Use descriptive anchor text that accurately reflects the content on the linked page.

- Link to relevant and authoritative internal pages to establish topical relevance and authority.

- Avoid using too many internal links on a single page, as it can dilute link equity and confuse users.

- Ensure that all internal links are working properly and don’t lead to broken pages.

- Use a clear and organised site structure with logical hierarchy and breadcrumbs to help users and search engines navigate your site.
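As a first pass at checking that links point somewhere a crawler can request, you can classify each `href` before making any HTTP requests. This sketch uses only `urllib.parse` from the standard library. It does not check status codes or broken pages, and the labels it returns are my own, not Google terminology.

```python
from urllib.parse import urljoin, urlparse


def classify_href(href, page_url):
    """Label an href as 'internal', 'external' or 'not crawlable'."""
    href = (href or "").strip()
    # Empty hrefs and fragment-only links do not point at a separate URL.
    if not href or href.startswith("#"):
        return "not crawlable"
    absolute = urljoin(page_url, href)
    parts = urlparse(absolute)
    # javascript:, mailto:, tel: and similar schemes are not web addresses.
    if parts.scheme not in ("http", "https") or not parts.netloc:
        return "not crawlable"
    if parts.netloc.lower() == urlparse(page_url).netloc.lower():
        return "internal"
    return "external"


# Hypothetical hrefs found on https://www.example.com/blog/
page_url = "https://www.example.com/blog/"
for href in ["/seo/", "post-2/", "https://www.google.com/", "javascript:void(0)", "#top", ""]:
    print(repr(href), "->", classify_href(href, page_url))
```

Links labelled internal are the ones worth requesting afterwards to confirm they return a working page rather than an error.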

Disclaimer

Disclaimer: “Whilst I have made every effort to ensure that the information I have provided is correct, It is not advice. I cannot accept any responsibility or liability for any errors or omissions. The author does not vouch for third party sites or any third party service. Visit third party sites at your own risk. I am not directly partnered with Google or any other third party. This website uses cookies only for analytics and basic website functions. This article does not constitute legal advice. The author does not accept any liability that might arise from accessing the data presented on this site. Links to internal pages promote my own content and services.” Shaun Anderson, Hobo
