Q: How many SEO experts does it take to change a light bulb?

A: light bulb, bulb, lamp, lighting, fixture, replace lightbulb, best light bulb…

A Researcher’s Note: The Serendipitous Path to an Answer

Let me go through how the central thesis of this report came together. The journey began with research into the modern AI architecture known as “query fan-out.”

At the time, a public comment made by SEO theorist Michael Martinez proved highly relevant:

“Dear SEO experts who are engaged in ‘splaining “query fan-out”. Be advised that:

- It’s been widely used in database systems for 30+ years.

- Google has used it for at least 20 years (according to documents I’ve found). Query fan-out is NOT some brand spanking new AI technique.”

At first, I noted his first point but didn’t fully grasp the significance of the second.

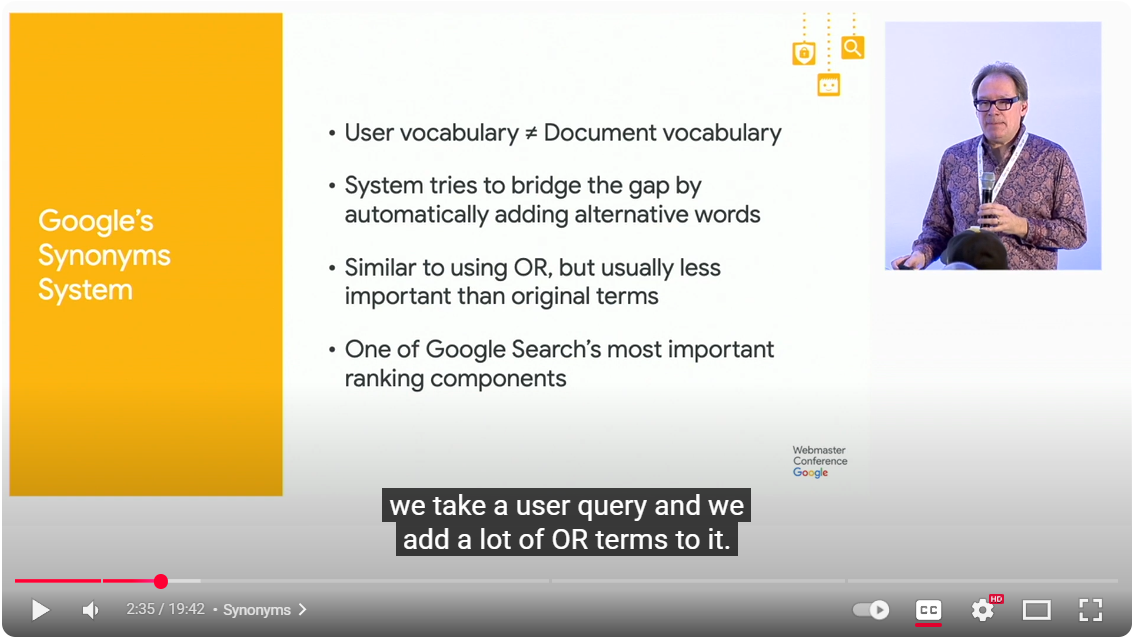

However, in a moment of unbelievable serendipity, shortly after watching a presentation by Google Distinguished Engineer Paul Haahr, I came across another video of his that discussed this exact type of query expansion.

In it, he described Google’s “synonym system” – one of its most important ranking components – and he put a date on its launch: 2004.

Martinez’s comment pointed to a 20-year history. Haahr’s presentation supplied the name and launch date of the technology, and that date lines up with the Brandy update of 2004.

This serendipitous connection revealed that what we call “query fan-out” today is the direct, evolved descendant of the very system that the SEO community observed in 2004 and mislabeled as LSI. It is this connection that forms the core of the following analysis.

So it got me looking into what happened in 2004 (before this blog’s time).

The Core Thesis

The central thesis of this report is that the “LSI keyword” myth was born from a misinterpretation of Google’s 2004 semantic updates, starting with Brandy.

The true technology behind that shift was not Latent Semantic Indexing, but a foundational “synonym system” – a system that has evolved over two decades into the “query fan-out” architecture that powers Google’s most advanced AI search features today.

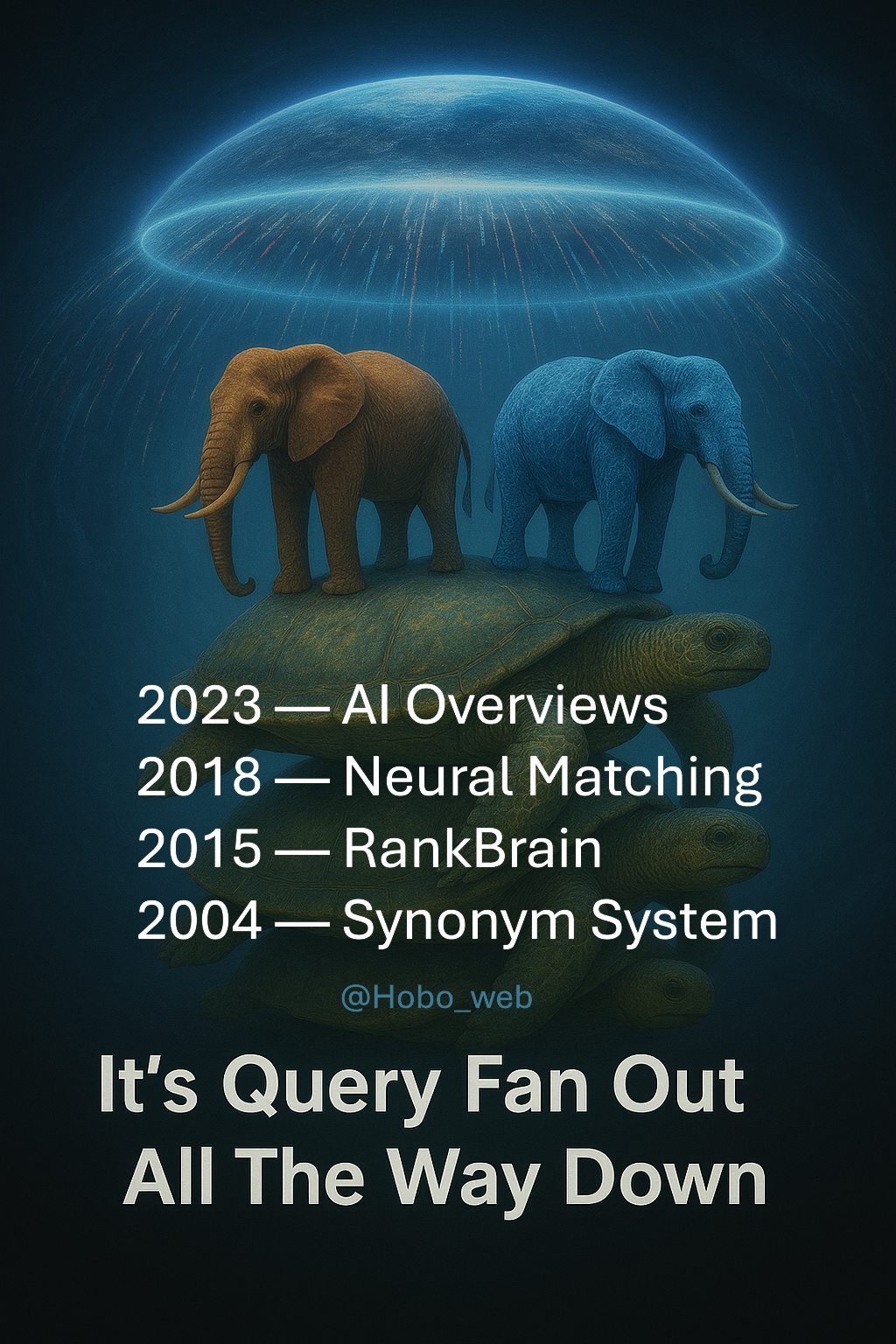

It’s Query Fan-Out All The Way Down

The famous anecdote, popularised by Terry Pratchett, tells of a philosopher explaining that the world rests on the back of a giant turtle.

When asked what the turtle stands on, the answer is “another turtle.” And beneath that? “Ah,” the philosopher says, “it’s turtles all the way down.”

This research, which I conducted by reviewing the Google v. DOJ case files and extensively examining the Content Warehouse Data Leaks, patents and Google statements, has revealed a foundational, recursive principle at the heart of Google’s two-decade quest to understand language.

The complex, AI-driven “query fan-out” we see today isn’t a new invention; it’s merely the top-most, most visible turtle in a stack that goes back 20 years.

- The World Turtle (The Foundation): The 2004 Synonym System. This is the Great A’Tuin, the turtle upon which everything else rests. In 2004, Google implemented a system that took a single user query and “fanned it out” by automatically adding synonymous “OR” terms. This was the first, most fundamental version of the principle: one query goes in, and multiple related queries are executed to cast a wider net. This system was the real technology behind the semantic shift that the SEO community mislabeled as LSI.

- The Elephants (The Conceptual Evolution): RankBrain & Neural Matching. Standing on the World Turtle are the elephants that support the world. These are the subsequent AI systems that built upon the foundational principle. RankBrain (2015) learned to fan out a query’s meaning by translating it into vectors to understand novel concepts. Neural Matching (2018) took this further, fanning out the concepts in a query to find relevant documents, even if the keywords didn’t match. These systems were no longer just expanding words; they were expanding meaning itself.

- The Discworld (The Modern Implementation): AI Overviews. At the very top of the stack, visible to all, is the Discworld – today’s AI Overviews. The architecture here is explicitly called “query fan-out.” It takes a user’s prompt and deconstructs its intent, fanning it out into dozens of entirely new, specific sub-queries that are executed in parallel to conduct comprehensive research on the user’s behalf.

What this discovery reveals is that Google’s most futuristic AI search feature is, in fact, the ultimate expression of a core strategy it has been honing since 2004.

The mechanism has evolved from simple lexical expansion to complex conceptual decomposition, but the fundamental idea has remained the same.

It truly is query fan-out, all the way down.

The LSI Keyword Myth: How a 20-Year-Old Misunderstanding Explains the Future of AI Search

1. The Mystery of 2004: A Semantic Leap Forward

In early 2004, the search engine optimisation (SEO) community witnessed a fundamental shift in how Google worked.

Following a series of aggressive anti-spam updates known as “Florida” and “Austin,” Google rolled out a foundational change that became known as the “Brandy” update.

Unlike its predecessors, Brandy wasn’t just another tweak to the ranking algorithm; it was a modification to the core architecture of Google’s index.

According to widespread community observation at the time, the effects were profound. Suddenly, Google appeared to understand language on a much deeper level. SEO practitioners (whom I’ve cited below) noticed that the search engine could:

- Understand Synonyms: A page could rank for “used cars” even if it primarily used the phrase “pre-owned automobiles.”

- Disambiguate Context: The algorithm could differentiate between “Apple” the fruit and “Apple” the technology company based on the other words on the page.

- Value Topical Relevance: Comprehensive pages that covered a topic in-depth began to outperform thinly written pages that were stuffed with a specific keyword.

These observations were real and marked a significant leap in Google’s sophistication.

The SEO industry was left with a critical question: What was the technology behind this change? In their search for an answer, they latched onto a technical-sounding term that seemed to fit perfectly: Latent Semantic Indexing.

2. The Misleading Label: How “LSI” Became the Explanation

The term “LSI keywords” began to gain traction within the SEO community in the mid-2000s as a plausible explanation for the changes they were witnessing. The name itself – Latent Semantic Indexing – seemed to perfectly describe what Google was doing: finding the latent semantic relationships between words to improve indexing. However, this was a fundamental misattribution.

2.1 What Latent Semantic Indexing Actually Is

Latent Semantic Indexing (or Latent Semantic Analysis, LSA) is a real, but dated, information retrieval technology developed and patented by researchers at Bellcore in the late 1980s, before the public World Wide Web existed. Its purpose was to solve the “vocabulary problem” in small, static databases.

It uses a mathematical technique called Singular Value Decomposition (SVD) to analyse a term-document matrix, identifying patterns of word co-occurrence to uncover the “latent” conceptual structure in a collection of documents.
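For readers who want the maths, the decomposition can be written in standard textbook notation. The symbols here are my own labels rather than anything taken from a Google source: X is the term-document matrix (rows are terms, columns are documents) and k is the number of “latent” dimensions kept.

$$
X = U \Sigma V^{\top}, \qquad X_k = U_k \Sigma_k V_k^{\top}, \quad k \ll \operatorname{rank}(X)
$$

Keeping only the k largest singular values gives the low-rank approximation X_k. Terms and documents are then compared in that k-dimensional space rather than by exact word overlap.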

This technology, while brilliant for its time, has two foundational limitations that make it entirely unsuitable for the modern web:

- It Doesn’t Scale: The SVD process is computationally expensive and, critically, cannot be easily updated. For a dataset as massive and dynamic as the web, where millions of pages are added and changed every day, the entire LSI model would need to be recalculated from scratch, a computationally prohibitive task.

- It’s Syntactically Naive: The original LSI model treats documents as a “bag of words,” ignoring word order and grammar. It cannot distinguish between “dog bites man” and “man bites dog.”
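The “bag of words” limitation is easy to see in a few lines of Python. This is a minimal sketch of the general idea, not LSI itself; the helper name `bag_of_words` is mine.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count term occurrences, discarding word order entirely."""
    return Counter(text.lower().split())


a = bag_of_words("dog bites man")
b = bag_of_words("man bites dog")

# Both sentences contain the same words the same number of times,
# so their bag-of-words representations are identical.
print(a == b)
```

Any model built only on these counts sees the two sentences as the same document.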

As the late, renowned patent analyst Bill Slawski noted, Google using LSI would be akin to “using a smart telegraph device to connect to the mobile web.”

It is a technology from a different era, designed for a different problem.

2.2 The Proponents and the Commercialisation of a Myth

Despite the technical mismatch, the “LSI keyword” concept took hold.

SEO blogs and influencers began advocating for the practice of finding and “sprinkling” these supposedly related terms into content to signal topical relevance.

This created a commercial ecosystem, with tools branding themselves as “LSI Keyword Generators.” You can still buy these services today.

3. Uncovering the Truth: The Real Engine of 2004

If the semantic leap of 2004 wasn’t caused by LSI, what was it? The answer comes directly from Google’s own engineers and aligns perfectly with the 2004 timeline.

According to Google Distinguished Engineer Paul Haahr, one of the search engine’s “most important ranking components” was a synonym system that went live around 2004.

This system was designed to do exactly what SEOs were observing: solve the vocabulary problem by automatically expanding a user’s query.
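To make the idea concrete, here is a toy sketch of synonym-based query expansion with “OR” terms. It assumes a tiny hand-written synonym table. Google’s real synonym data and matching rules are not public, so the `SYNONYMS` table and the `expand_query` function are purely hypothetical.

```python
# Hypothetical synonym table, for illustration only.
SYNONYMS = {
    "used": ["pre-owned", "second-hand"],
    "cars": ["automobiles", "vehicles"],
}


def expand_query(query: str) -> str:
    """Rewrite each term as an OR-group of itself plus any known synonyms."""
    groups = []
    for term in query.lower().split():
        alternatives = [term] + SYNONYMS.get(term, [])
        if len(alternatives) == 1:
            # No known synonyms: keep the term unchanged.
            groups.append(term)
        else:
            groups.append("(" + " OR ".join(alternatives) + ")")
    return " ".join(groups)


expanded = expand_query("used cars")
print(expanded)
```

One query goes in and a wider query comes out, which is how a page about “pre-owned automobiles” could match a search for “used cars.”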

4. The Evolutionary Lineage: From Synonyms to AI Overviews

This 20-year history of query expansion provides the crucial context for understanding Google’s latest AI advancements. The 2004 synonym system is the direct technological ancestor of the “query fan-out” architecture that powers modern AI Overviews.

4.1 The Modern Descendant: Query Fan-Out

As noted by SEO theorist Michael Martinez, the principle of query fan-out has been used by Google for at least 20 years. Today’s implementation, however, is vastly more sophisticated.

It takes a user’s prompt, deconstructs its intent, and fans it out into dozens of new, specific sub-queries. The system then executes these sub-queries in parallel and synthesises the findings into a single, comprehensive answer. This represents a fundamental shift from a search engine that provides a list of potential sources to an answer engine that performs the research for the user.
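The fan-out pattern itself (split a prompt into sub-queries, run them in parallel, merge the results) can be sketched in a few lines. This is only a sketch under stated assumptions: `decompose`, `run_sub_query` and `fan_out` are hypothetical stand-ins of my own, and a real system would use a language model for decomposition and a search index for retrieval.

```python
from concurrent.futures import ThreadPoolExecutor


def decompose(prompt: str) -> list[str]:
    """Hypothetical decomposition step using fixed facets instead of a model."""
    facets = ["definition", "history", "examples"]
    return [f"{prompt} {facet}" for facet in facets]


def run_sub_query(sub_query: str) -> str:
    """Stand-in for a retrieval call; it simply echoes the sub-query."""
    return f"result for '{sub_query}'"


def fan_out(prompt: str) -> str:
    """Fan a prompt out into sub-queries, run them in parallel, and merge."""
    sub_queries = decompose(prompt)
    with ThreadPoolExecutor(max_workers=len(sub_queries)) as pool:
        # pool.map returns results in the same order as the inputs.
        results = list(pool.map(run_sub_query, sub_queries))
    return "\n".join(results)


answer = fan_out("query fan-out")
print(answer)
```

The “synthesis” here is just joining strings. The point is the shape of the pipeline, not the quality of the answer.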

4.2 Google’s Path to True Understanding

The evolution from the synonym system to query fan-out did not happen in a vacuum. It was enabled by a series of landmark AI systems that progressively deepened Google’s understanding of language:

- RankBrain (2015): Google’s first major use of machine learning in ranking, designed to interpret novel and ambiguous queries by relating them to more common ones.

- Neural Matching (2018): An AI system focused on connecting the concepts in a query to the concepts on a page, even if the keywords don’t match.

- BERT (2019) & MUM (2021): Revolutionary transformer-based models that allow Google to understand the full, nuanced context of words in a sentence, and eventually across different information formats like text and images.

- The Knowledge Graph (2012-Present): Underpinning all of this is Google’s massive database of real-world entities and their relationships, which allows search to move from “strings” to “things.”

This clear, logical, and technologically advanced evolutionary path demonstrates why Google never needed LSI. It was busy building something vastly superior.
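The move from matching words to matching meaning, as in the RankBrain and Neural Matching entries above, is commonly illustrated with vector similarity. The sketch below is a toy version of that general idea and makes no claim about how Google’s systems work internally. The three-dimensional vectors are made up for illustration; real embeddings are learned from data and are far larger.

```python
import math

# Made-up vectors, for illustration only.
VECTORS = {
    "used cars": [0.9, 0.1, 0.0],
    "pre-owned automobiles": [0.8, 0.2, 0.1],
    "apple pie recipe": [0.0, 0.1, 0.9],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: near 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


query = VECTORS["used cars"]
# Rank the other phrases by similarity to the query, most similar first.
ranked = sorted(
    (name for name in VECTORS if name != "used cars"),
    key=lambda name: cosine(query, VECTORS[name]),
    reverse=True,
)
print(ranked)
```

“Pre-owned automobiles” shares no keywords with “used cars,” yet it ranks first because its (invented) vector points in nearly the same direction.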

5. The Expert Consensus: Debunking the Myth and Affirming the Reality

The conclusion that “LSI keywords” are a myth is not a matter of opinion; it is the unambiguous consensus of the industry’s most credible authorities.

The late Bill Slawski, the foremost analyst of search engine patents, consistently categorised the concept as an SEO myth with “as much scientific credibility as Keyword Density, which is next to none.” (Historical note: I interviewed Bill in 2009 about keyword density.)

He was meticulous in his refutation of LSI keywords, often stating with precision:

“LSI keywords do not use LSI, and are not keywords.” His research pointed instead to Google’s proprietary systems, such as the “phrase-based indexing” patents that began appearing in 2004, as the real methods Google was using to understand topics.

This is confirmed by Google itself. In 2019, Google’s Search Advocate John Mueller issued the definitive statement on the matter: “There’s no such thing as LSI keywords — anyone who’s telling you otherwise is mistaken, sorry.”

6. The Final Verdict & Strategic Implications

The evidence is overwhelming and points to a single, clear conclusion: the concept of “LSI Keywords” as an SEO strategy is a 20-year-old myth.

The semantic capabilities observed in 2004 were not the result of an outdated 1980s indexing technology, but of Google’s own proprietary synonym system – the direct ancestor of the query fan-out architecture that powers AI search today.

6.1 Why the Right Answer Matters

Dismissing this myth is not merely an exercise in pedantry; it is a critical step toward developing a sound and effective modern SEO strategy.

The “LSI keyword” mindset is strategically dangerous because it encourages a flawed, mechanistic approach: “keyword sprinkling,” which is keyword stuffing by another name, where a checklist of terms is artificially woven into a text.

This tactic distracts from the far more important work of creating genuinely high-quality, user-focused, helpful content.

Falling Forward: Why the Wrong Theory Led to the Right Actions

This history reveals a fascinating phenomenon of sections of the SEO industry “falling forward.”

Many practitioners, myself included, observing that topically rich content performed well, adopted a strategy of on-page “keyword expansion” – manually adding synonyms and related terms to their pages.

“SEO Copywriting” was born.

It was our version of keyword expansion: on-page keyword fan-out, for want of a better term.

While some incorrectly labelled this tactic “using LSI keywords,” the strategy itself worked. Really well sometimes.

It worked not because of LSI, but because this on-page effort was the perfect mirror to what Google was actually doing on the back end.

By creating content rich with a varied vocabulary, SEOs were building the ideal target for Google’s 2004 synonym system, which was performing query expansion on its end.

The on-page expansion was meeting the search engine’s query expansion halfway.

This accidental alignment led to success, reinforcing the tactic even while its theoretical underpinning was wrong. It’s a testament to the power of observation, but also a crucial lesson in the importance of understanding the true “why” behind a successful strategy.

6.2 Strategic Recommendations for Modern Semantic SEO

Instead of chasing the ghost of “LSI keywords,” SEO professionals should adopt a strategy grounded in the principles that actually power modern search.

- Conduct Topic and Entity Research: Begin by mapping out the entire knowledge domain of your subject. Identify the core entities, subtopics, and the key questions users are asking.

- Perform Comprehensive SERP Analysis: Analyse the top-ranking content to understand which subtopics and user intents Google currently considers most relevant for a given query.

- Structure Content for Machines: Organise content with a clear hierarchy (H2s, H3s) and in scannable, semantically complete “chunks” that an AI can easily parse and cite.

- Embrace Comprehensive, In-Depth Content: Aim to create the most thorough and helpful resource available on your topic. This depth is what signals authority and expertise to both users and Google’s advanced AI systems.

- Utilise Structured Data (Schema Markup): Use Schema.org markup to explicitly label the entities on your page (people, products, events, etc.). This is the modern, direct way to provide the semantic context that proponents of “LSI keywords” were trying to achieve through indirect means.
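As a rough illustration of the structured data recommendation, the sketch below builds a small JSON-LD block with Python’s standard `json` module. The `Article`, `Person` and `Thing` types and the `headline`, `author` and `about` properties are real Schema.org vocabulary. The headline, author name and topics are placeholder values I made up, so swap in your own.

```python
import json

# Placeholder page details, for illustration only.
markup = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "The LSI Keyword Myth",
    "author": {"@type": "Person", "name": "Jane Example"},
    "about": [
        {"@type": "Thing", "name": "Latent semantic indexing"},
        {"@type": "Thing", "name": "Query expansion"},
    ],
}

# Serialise the markup and wrap it in the script tag used for JSON-LD.
json_ld = json.dumps(markup, indent=2)
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```

The resulting tag goes in the page’s HTML. It is worth running the output through a structured data validator before publishing.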

And remember. It’s turtles all the way down.
