If you are a web content editor charged with managing the content on a website during the ups and downs of the Google Helpful Content Update (HCU), this article is for you. This article investigates helpful content and SEO copywriting best practices for Google.

I have 20+ years of experience as a professional SEO.

The HCU is best described thus:

QUOTE: “Google’s helpful content algorithm aims to downgrade those types of websites while promoting more helpful websites, designed for humans, above search engines. Google said this is an ‘ongoing effort to reduce low-quality content and make it easier to find content that feels authentic and useful in search.’” Barry Schwartz, Search Engine Land 2023

SEO copywriting is the art of writing high-quality content that performs in search engines: on-page copy, page titles, meta descriptions, and the text that appears in SERP snippets and featured snippets.

Writing for search engines is not about keyword stuffing text, and an inexperienced writer can absolutely destroy your website's rankings in Google.

SEO (search engine optimisation) is no longer about repeating keywords. Anything you do to substantially improve the quality of the text on your page is likely to bring some kind of SEO benefit to your website.

Helpful content requires you to:

- Create helpful content.

- Create content unique to the page.

- Create a high-quality page.

- Include relevant keyword phrases in the copy.

- Use the correct perspective.

- Adhere to accepted language basics.

- Have a hyper-relevant keyword-rich title tag.

- Niche down using longer-form or in-depth content.

- Demonstrate experience and expertise.

Do take note that I am not a professional copywriter.

I have been an SEO since the early 2000s and a blogger since 2007.

Bear that in mind as you read any thoughts I share below.

QUOTE: “With the core updates we don’t focus so much on just individual issues, but rather the relevance of the website overall. And that can include things like the usability, and the ads on a page, but it’s essentially the website overall. And usually that also means some kind of the focus of the content, the way you’re presenting things, the way you’re making it clear to users what’s behind the content. Like what the sources are, all of these things. All of that kind of plays in.” John Mueller, Google 2021

The content on a web page is clearly very important to Google.

But it isn’t just about the content.

It’s about the website too.

Let’s focus on the text element first:

Do you need lots of text to rank pages in Google?

QUOTE: “Nobody at Google counts the words on a page. Write for your users.” John Mueller, Google 2019

NO, but:

QUOTE: “You always need textual content on-page, regardless of what other kinds of content you might have. If you’re a video-hosting site, you still need things like titles, headings, text, links, etc. The same goes for audio-hosting sites. Make it easy for search engines to understand your content & how it’s relevant to users, and they’ll be able to send you relevant traffic. If you make it hard for search engines to figure out what your pages are about, it would be normal for them to struggle to figure out how your site is relevant for users.” John Mueller, Google 2019

How much text do you need to rank in Google?

A Searchmetrics post looks at pages with very little text content that still rank high in Google:

QUOTE: “From a classical SEO perspective, these rankings can hardly be explained. There is only one possible explanation: user intent. If someone is searching for “how to write a sentence” and finds a game such as this, then the user intention is fulfilled. Also the type of content (interactive game) has a well above average time-on-site.” SearchMetrics 2016

None, evidently, if you can satisfy the query in an unusual manner without the text.

How much text do you need to write for Google?

QUOTE: “There’s no minimum length, and there’s no minimum number of articles a day that you have to post, nor even a minimum number of pages on a website. In most cases, quality is better than quantity. Our algorithms explicitly try to find and recommend websites that provide content that’s of high quality, unique, and compelling to users. Don’t fill your site with low-quality content, instead work on making sure that your site is the absolute best of its kind.” John Mueller Google, 2014

How much text do you put on a page to rank for a certain keyword?

Well, as in so much of Google SEO, there is no optimal amount of text per page to rank high in Google, and how much you need will differ based on the topic, the content type, the intent of visitors and the SERP you are competing in.

Instead of thinking about the quantity of the Main Content (MC) text, you should think more about the quality of the content on the page. Optimise this with searcher intent in mind, too.

There is no minimum amount of words or text to rank in Google.

Pages with no text, or with only 50 words, can outrank pages with 100, 250, 500 or 1,000 words.

Every site is different. Some pages, for example, can get away with 50 words because of a good link profile and the domain it is hosted on.

Where it is relevant, the more text you add to a page (as long as it is unique, keyword-rich and on-topic), the more that page is likely to be rewarded with visitors from Google.

There is no optimal number of words on a page for placement in Google.

Don’t worry too much about word count if your content is original and informative. Google will probably reward you on some level – at some point – if there is lots of unique text on all your pages.

Google has said there is no minimum word count when it comes to gauging content quality.

However, Google's quality rater guidelines do state:

6.2 Unsatisfying Amount of Main Content

Some Low quality pages are unsatisfying because they have a small amount of MC for the purpose of the page. For example, imagine an encyclopedia article with just a few paragraphs on a very broad topic such as World War II. Important: An unsatisfying amount of MC is a sufficient reason to give a page a Low quality rating.

Relevance is the primary ranking factor. Without relevance, you don’t rank in Google for very long.

QUOTE: “when it comes to relevance, if you work on improving the relevance of your website, then you have a different website, you have a better website.” John Mueller Google, 2021

Do keywords in bold or italic help rankings?

QUOTE: “Random sentence bolding is not quite a replacement for high-quality, useful, unique, and compelling content.” John Mueller, Google 2021

There may be a minuscule boost from emphasising particular parts of the text, and that includes putting your keywords in bold or italics. Google is on record as saying emphasis can carry a little extra value under the right circumstances.

QUOTE: “So usually we do try to understand what the content is about on a web page, and we look at different things to try to figure out what is actually being emphasized here, and that includes things like headings on a page. But it also includes things like what is actually bolded or emphasized within the text on the page. So to some extent that does have a little bit of extra value there, in that it’s a clear sign that actually you think this page or this paragraph is about this topic here.” John Mueller, Google 2021

It is essentially impossible to test this, but Googlers are on record saying that how you emphasise text on a page does matter somewhat. The ranking effect is likely minimal, and beware: any item you can ‘optimise’ on your page, Google can use against you to filter you out of results if it wants to.

These days, you should use bold or italics specifically for your users.

Only use emphasis if it's natural, or if this is really what you want to emphasise!

Keep it simple, natural, useful, and relevant.

QUOTE: “You’ll probably get more out of bolding text for human users / usability in the end. Bots might like, but they’re not going to buy anything.” John Mueller, Google 2017

Can you just write naturally and rank high in Google?

QUOTE: “There’s nothing to optimize for with BERT, nor anything for anyone to be rethinking. The fundamentals of us seeking to reward great content remain unchanged.” Danny Sullivan, Google 2019

Yes, you must write naturally (succinctly, and from the correct perspective), but if you have no idea which keywords you are targeting, and no expertise in the topic, you will be left behind by those who have that knowledge.

You can just ‘write naturally’ and still rank, albeit for fewer keywords than you would have if you optimised the page.

There are too many competing pages targeting the top spots not to optimise your content.

Naturally, how much text you need to write, how much you need to work into it, and where you ultimately rank, is going to depend on the domain reputation of the site you are publishing the article on.

Is poor grammar a Google ranking factor?

Slightly poor grammar is evidently NOT a CRITICAL ranking signal.

Google has historically looked for ‘exact match’ instances of keyword phrases in documents, and SEOs have historically been able to optimise successfully for these keyword phrases, whether they are grammatically correct or not.

John Mueller from Google said in a hangout that it was ‘possible’, but very ‘niche’ if at all, that grammar is a positive ranking factor. Bear in mind that most of Google's algorithms (we think) demote or de-rank content once it is analysed rather than promote it, unless users prefer it.

Google has stated in the past that grammar is NOT a ranking factor.

Not, at least, one of the critical quality signals Google uses to rank pages.

Google’s Matt Cutts did say though:

QUOTE: “It would be fair to use it as a signal…The more reputable pages do tend to have better grammar and better spelling.” Matt Cutts, Google

Google is on record as saying (metaphorically speaking) their algorithms are looking for signals of low quality when it comes to rating pages on Content Quality.

Some possible examples could include:

QUOTE: “1. Does this article have spelling, stylistic, or factual errors?”

and

QUOTE: “2. Was the article edited well, or does it appear sloppy or hastily produced?”

and

QUOTE: “3. Are the pages produced with great care and attention to detail vs. less attention to detail?”

and

QUOTE: “4. Would you expect to see this article in a printed magazine, encyclopedia or book?”

Altogether, Google rates content on overall user experience as it defines it, and bad grammar and spelling make for a poor user experience.

At least on some occasions.

My recommendation is that you focus on correct grammar.

Is spelling a Google ranking factor?

Poor spelling has always had the potential to be a NEGATIVE ranking factor in Google, if the misspelled word is unique on the page and of critical importance to the search query.

In the early days of Google, if you wanted to rank for misspellings, you optimised for them, so not that long ago poor spelling could be a POSITIVE ranking factor.

Now that kind of optimisation effort is largely fruitless, because Google tends to favour “Showing results for” corrected results over presenting SERPs based on a common spelling error.

Testing whether ‘bad spelling’ is a ranking factor is still easy on a granular level, but bad grammar is not so easy to test.

Google has better signals to play with than ranking pages on spelling and grammar. It’s not likely to penalise you for the honest mistakes most pages exhibit, especially if you have met more important quality signals – like useful main content.

You can find clear evidence of pages ranking very well with both bad spelling and bad grammar.

That said, any user satisfaction factor could become a ranking factor given enough time, considering how well Google understands the way its users interact with your website.

Your page content must satisfy user search intent better than competing pages

User search intent is how marketers describe what a user wants to accomplish when they perform a Google search.

SEOs have traditionally understood user search intent to fall broadly into the following categories (there is an excellent 2016 post on Moz about this):

- Transactional – The user wants to do something, like buy, sign up or register, to complete a task they have in mind.

- Informational – The user wishes to learn something.

- Navigational – The user knows where they are going.

The Google human quality rater guidelines modify these to simpler constructs:

- Do

- Know

- Go
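As a rough illustration of how the Do / Know / Go split might be applied when sorting a keyword list, here is a minimal Python sketch. It assumes a crude modifier-word heuristic: the word lists (`DO_WORDS`, `KNOW_WORDS`) and the `brand_terms` argument are hypothetical choices, not anything Google publishes, and real search intent is far more ambiguous than word matching can capture.

```python
import re

# Hypothetical modifier lists for illustration only; tune them for your own niche.
DO_WORDS = {"buy", "order", "price", "cheap", "signup", "register", "download", "book"}
KNOW_WORDS = {"how", "what", "why", "who", "when", "guide", "tutorial", "tips", "vs"}


def classify_intent(query: str, brand_terms: set[str]) -> str:
    """Label a query 'go', 'do', 'know' or 'unclear' using simple word matching."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    if words & brand_terms:
        return "go"       # navigational: the user appears to know where they are going
    if words & DO_WORDS:
        return "do"       # transactional: the user wants to complete a task
    if words & KNOW_WORDS:
        return "know"     # informational: the user wishes to learn something
    return "unclear"      # ambiguous: the page must balance several possible intents


if __name__ == "__main__":
    brands = {"acme"}  # hypothetical brand name
    for q in ["buy running shoes", "how to write a meta description", "acme login", "seo copywriting"]:
        print(f"{q!r} -> {classify_intent(q, brands)}")
```

Queries that land in `unclear` are often the ones worth the most thought, because a page targeting them has to serve several plausible intents at once.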

SO – how do you optimise for all this?

You could rely on old-school SEO techniques, but Google doesn’t like thin pages, and you need higher-quality unnatural links to power low-quality sites these days. That is all a risky investment.

Google has successfully made that way forward a minefield for smaller businesses.

A safer route, with a more dependable ROI, for a real business that can't risk spamming Google, is to focus on the user satisfaction signals Google might be rating favourably.

You do this by focusing on meeting exactly the intent of an individual keyword query.

How, why and where from a user searches will be numerous and ambiguous, and this is an advantage for the page that balances these possibilities better than competing pages in the SERPs.

High-quality copywriting is not an easy ‘ask’ for every business, but it is a tremendous leveller.

Anyone can teach what they know and put it on a website if the will is there.

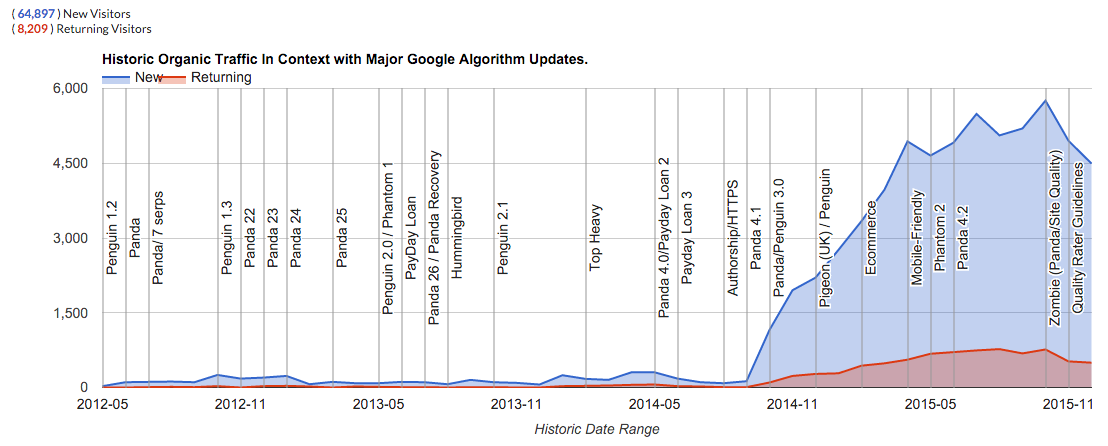

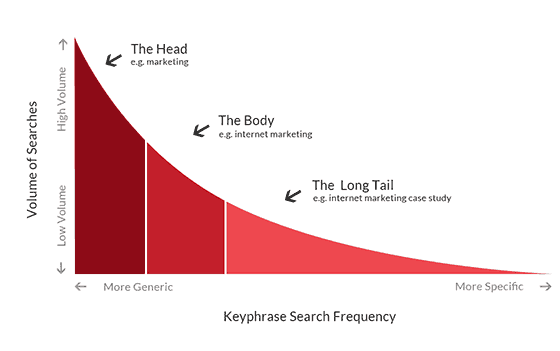

Some marketers understand the ranking benefits of in-depth, curated content that, for instance, helps a user learn something. In-depth pages and long-form content are a magnet for long-tail key phrases.

High-quality text content of any nature is likely to do well in time, and copywriters should rejoice.

High-quality SEO copywriting has never been more important.

Offering high-quality content is a great place to start on your site.

It’s easy for Google to analyse and rate, and it is also a sufficient barrier to entry for most competitors (at least, it was in the last few years).

Google is looking for high-quality content:

“High quality pages and websites need enough expertise to be authoritative and trustworthy on their topic.”

...or, to put it another way, Google's algorithms target low-quality content.

QUOTE: “Basically you want to create high-quality sites following our webmaster guidelines, and focus on the user, try to answer the user, try to satisfy the user, and all eyes will follow.” Gary Illyes, Google 2016

When it comes to writing SEO-friendly text for Google, we must optimise for user intent, not simply what a user typed into Google.

Google will send people looking for information on a topic to the highest-quality relevant pages it knows about, often BEFORE relying on how Google ‘used’ to work, e.g. finding near- or exact-match instances of a keyword phrase on a page, regardless of the actual ‘quality’ of that page.

Google is constantly evolving to better understand the context and intent of user behaviour, and it is willing to rewrite a query in order to serve users high-quality pages that more comprehensively deliver on user satisfaction, e.g. pages that explore topics and concepts in a unique and satisfying way.

Of course, optimising for user intent, even in this fashion, is something a lot of marketers had been doing long before query rewriting and Google Hummingbird came along.

QUOTE: “Google said that Hummingbird is paying more attention to each word in a query, ensuring that the whole query — the whole sentence or conversation or meaning — is taken into account, rather than particular words. The goal is that pages matching the meaning do better, rather than pages matching just a few words.” Danny Sullivan, Search Engine Land 2013

Do you need long-form in-depth pages to rank?

No, but long-form content can be a very useful base for a content marketing strategy if you are looking to pick up links and social media shares.

And be careful. The longer a page is, the more you can dilute it for a specific keyword phrase, and it’s sometimes a challenge to keep it updated.

Google seems to penalise stale or unsatisfying content.

From a strategic point of view, if you can explore a topic or concept in an in-depth way you must do it before your competition. Especially if this is one of the only areas you can compete with them on.

Here are some things to remember about creating topic-oriented in-depth content:

- In-depth content in competitive verticals needs to be kept updated, at least every six months to a year, and more often if you can manage it.

- In-depth content can reach tens of thousands of words, but the aim should always be to make the page as concise as possible, over time

- In-depth content can be ‘optimised’ in much the same way as content has always been optimised

- In-depth content can give you authority in your topical niche

- Pages must MEET THEIR PURPOSE WITHOUT DISTRACTING ADS OR CALLS TO ACTION. If you are competing with an information page, put the information FRONT AND CENTRE. Yes, this impacts negatively on conversions in the short term. BUT these are the pages Google will rank high: pages that, first and foremost, help users accomplish WHY they are on the page (what you want them to do once you get them there needs to be a secondary consideration when it comes to Google organic traffic).

- You need to balance conversions with user satisfaction unless you don’t want to rank high on Google.

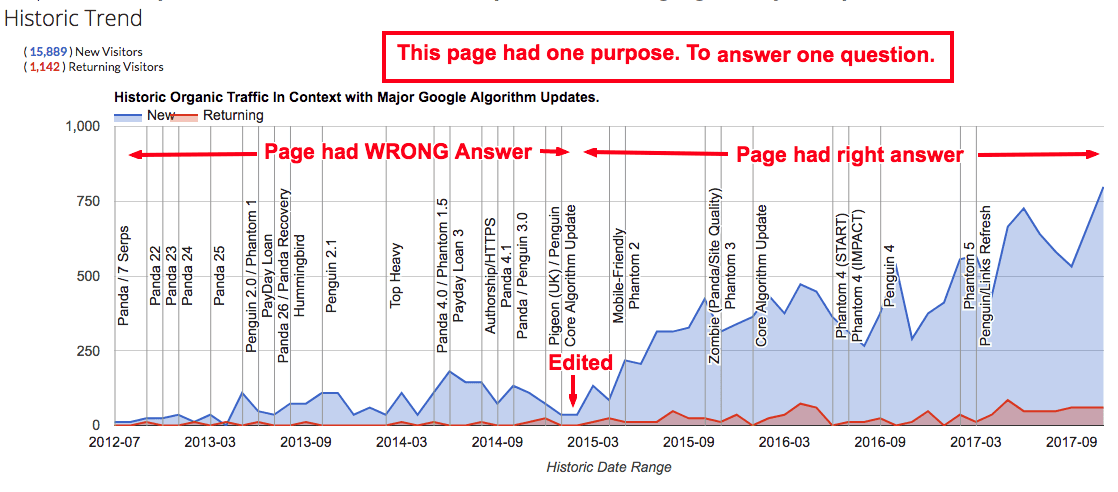

You can SEO old content to perform better on Google

An SEO can always get more out of content in organic search than any copywriter, but few things are more powerful than a copywriter who can lightly optimise a page around a topic, or a topic expert who knows how to optimise a page for high rankings in Google, continually, over time.

Some of us have been doing this for years:

Writing for Google and meeting the query intent means an SEO copywriter needs to make sure the page text includes ENTITIES AND CONCEPTS related to the MAIN TOPIC of the page and the key phrase being targeted.
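One simple way for an editor to sanity-check a draft against this advice is to list the related entities and concepts you would expect an expert page to mention, then see which ones are missing. The sketch below assumes you supply that list yourself (the `related_terms` input is hypothetical and hand-curated); it only does case-insensitive whole-phrase matching and says nothing about how Google itself evaluates topical coverage.

```python
import re


def _words(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def concept_coverage(text: str, related_terms: list[str]) -> tuple[float, list[str]]:
    """Return the share of related terms found in the text, plus the missing terms."""
    normalised = " ".join(_words(text))
    missing = []
    for term in related_terms:
        phrase = " ".join(_words(term))
        # Whole-word match only, so "title" does not match inside "entitled".
        if not re.search(rf"\b{re.escape(phrase)}\b", normalised):
            missing.append(term)
    covered = len(related_terms) - len(missing)
    share = covered / len(related_terms) if related_terms else 0.0
    return share, missing


if __name__ == "__main__":
    draft = ("Write a clear title tag and meta description, then answer the "
             "question directly to win the featured snippet.")
    terms = ["title tag", "meta description", "featured snippet", "search intent"]
    share, missing = concept_coverage(draft, terms)
    print(f"{share:.0%} covered; missing: {missing}")  # 75% covered; missing: ['search intent']
```

There is no stemming here, so "featured snippets" would not match "featured snippet"; a missing term is a prompt to reread the draft, not a score to chase.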

If you want to rank for a SPECIFIC search term – you can still do it using the same old, well-practised keyword targeting practices. The main page content itself just needs to be high-quality enough to satisfy Google’s quality algorithms in the first place.

This is still a land grab.

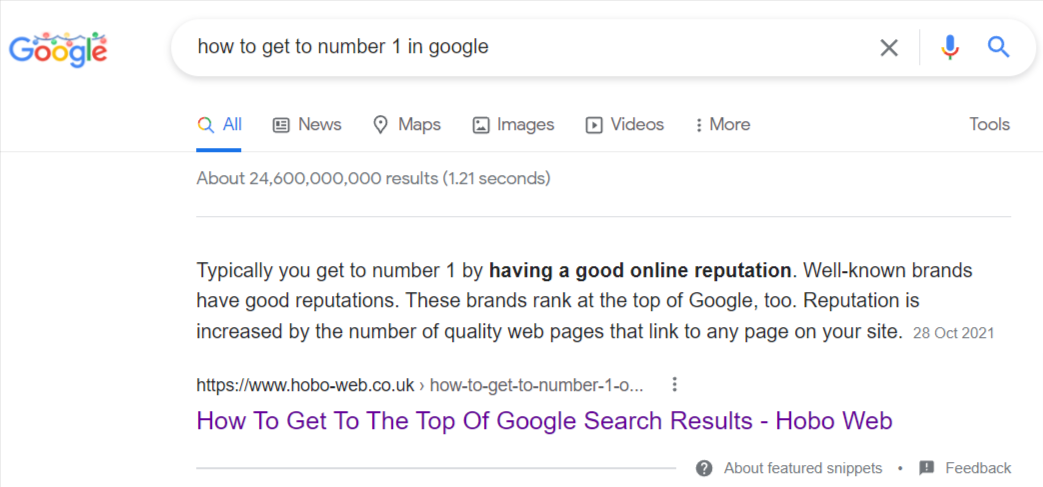

Get that featured snippet on Google

QUOTE: “When a user asks a question in Google Search, we might show a search result in a special featured snippet block at the top of the search results page. This featured snippet block includes a summary of the answer, extracted from a webpage, plus a link to the page, the page title and URL” Google 2018

Any content strategy should naturally be focused on creating high-quality content, and also revolve around triggering Google FEATURED SNIPPETS (essentially position 1 in Google), which appear when Google wants them to, intermittently, depending on the nature of the query.

You can use traditional competitor keyword research and old-school keyword analysis and keyword phrase selection, albeit focused on the opportunity in long-form content, to accomplish that, which shows that keyword research experience is still useful when ranking a page.

Despite all the obfuscation, time delay, keyword rewriting, manual rating, and selection bias Google goes through to match pages to keyword queries, you still need to optimise a page to rank in a niche, and if you do it sensibly, you unlock a wealth of long-tail traffic over time.

Note:

- Google is only going to produce more of these direct answers or answer boxes in future (they have been moving in this direction since 2005).

- Focusing on triggering these will focus your content creators on creating exactly the type of pages Google wants to rank. “HOW TO” guides and “WHAT IS” guides are IDEAL and the VERY BEST types of content for this exercise.

- Google is REALLY rewarding these articles – and the search engine is VERY probably going to keep doing so for the future.

- Google Knowledge Graph offers another exciting opportunity – and indicates the next stage in organic search.

- Google is producing these ANSWER BOXES that can promote a page from anywhere on the front page of Google to number 1.

- All in-depth content strategies on your site should be focused on this new aspect of Google optimisation. The bonus is that you create content that Google ranks very well even without taking knowledge boxes into consideration.

- Basically, you are feeding Google EASY ANSWERS to scrape from your page. This all ties together very nicely with organic link building. The MORE ANSWER BOXES you UNLOCK, the more chance you have of ranking number one FOR MORE AND MORE TERMS; as a result, more people see your utilitarian content, and you get social shares and links if people care about it at all.

- You may end up sharing an Enhanced Snippet (or Google Answer Box, as SEOs first called them): sometimes your page is featured and sometimes a competitor's URL is. All you can do in this case is continue to improve the page until you squeeze your competitor out.

We already know that Google likes ‘tips’, “how-to” and expanded FAQ content, but this featured snippet system provides a real opportunity and is CERTAINLY what any content strategy should be focused around to maximise the exposure of your business in organic searches.

This is a double-edged sword if you take a long-term view. Google is, after all, looking for easy answers so, eventually, it might not need to send visitors to your page.

To be fair, these Google featured snippets, at the moment, appear complete with a reference link to your page and can positively impact traffic to the page.

SO – for the moment – Google Featured Snippets are an opportunity to take advantage of.

Optimise for ‘the long click’

When it comes to rating user satisfaction, there are a few theories doing the rounds at the moment that may be logical. Google could be tracking user satisfaction by proxy. When a user searches Google for something, user behaviour from that point on can be a proxy for the relevance and relative quality of the actual SERP.

What is a Long Click?

A user clicks a result and spends time on it, sometimes terminating the search.

What is a Short Click?

A user clicks a result and bounces back to the SERP, pogo-sticking between other results until a long click is observed. Google has this information if it wants to use it as a proxy for query satisfaction.

For more on this, I recommend this article on the time to long click.

Note: Rankings could be based on a ‘duration metric’

QUOTE: “The average duration metric for the particular group of resources can be a statistical measure computed from a data set of measurements of a length of time that elapses between a time that a given user clicks on a search result included in a search results web page that identifies a resource in the particular group of resources and a time that the given user navigates back to the search results web page. …Thus, the user experience can be improved because search results higher in the presentation order will better match the user’s informational needs.” High Quality Search Results based on Repeat Clicks and Visit Duration. Bill Slawski, Go Fish Digital, 2017

Note: Rankings could be based on a ‘duration performance score’

QUOTE: “The duration performance scores can be used in scoring resources and websites for search operations. The search operations may include scoring resources for search results, prioritizing the indexing of websites, suggesting resources or websites, protecting particular resources or websites from demotions, precluding particular resources or websites from promotions, or other appropriate search operations.” A Panda Patent on Website and Category Visit Durations. Bill Slawski, Go Fish Digital, 2017
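To make the ‘average duration metric’ in the first patent summary above more concrete, here is a minimal Python sketch that computes, from hypothetical click logs, the average time between a user clicking a result and navigating back to the SERP, and labels each click as short or long. The `Click` record, the 30-second threshold and the sample data are all assumptions for illustration; nobody outside Google knows what thresholds, if any, it actually uses.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Hypothetical cut-off between a short and a long click, in seconds.
LONG_CLICK_SECONDS = 30.0


@dataclass
class Click:
    url: str
    clicked_at: float              # seconds after the search was made
    returned_at: Optional[float]   # when the user came back to the SERP; None if never


def dwell_time(click: Click) -> Optional[float]:
    """Time spent on the result before returning; None if the search ended there."""
    if click.returned_at is None:
        return None
    return click.returned_at - click.clicked_at


def is_long_click(click: Click) -> bool:
    """Long if the user never came back to the SERP, or stayed past the threshold."""
    dwell = dwell_time(click)
    return dwell is None or dwell >= LONG_CLICK_SECONDS


def average_duration(clicks: list[Click], url: str) -> Optional[float]:
    """Mean click-to-return time for one URL, ignoring searches that terminated there."""
    durations = [d for c in clicks if c.url == url and (d := dwell_time(c)) is not None]
    return mean(durations) if durations else None


if __name__ == "__main__":
    clicks = [
        Click("example.com/a", 2.0, 7.0),    # 5 s, pogo-sticks back to the SERP
        Click("example.com/b", 9.0, 129.0),  # 120 s before returning
        Click("example.com/a", 3.0, 15.0),   # 12 s before returning
        Click("example.com/b", 4.0, None),   # search terminated on this page
    ]
    print(average_duration(clicks, "example.com/a"))  # 8.5
    print([is_long_click(c) for c in clicks])         # [False, True, False, True]
```

Excluding terminated searches from the average is a design choice made here for simplicity; a terminated search is arguably the strongest satisfaction signal, which is why `is_long_click` counts it as long.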

Google has many patents that help it determine the quality of a page. Nobody knows for sure which patents are implemented into the core search algorithms.

If you are focused on delivering high-quality information to users, you can avoid the worst of these algorithms.

Focus on creating high-quality content

However you try to satisfy users, many think this is about terminating searches via or on your site, or satisfying the long click.

Whatever ethical method you use (e.g. not breaking the browser's back button), the main aim is to satisfy that user somehow.

You used to rank by being a virtual PageRank black hole. Now, you need to think about being a User black hole.

You want a user to click your result in Google, and not need to go back to Google to do the same search that ends with the user pogo-sticking to another result, apparently unsatisfied with your page.

The aim is to convert users into subscribers, returning visitors, sharing partners, paying customers or even just helping them along on their way to learn something.

If you create high-quality, informative content on your website, page after page, you give yourself the best chance to rank.

The only certainty is that, whatever you do, you should keep your content high-quality and avoid creating doorway pages.

For some sites, that will mean reducing pages on many topics to a few that can be focused on so that you can start to build authority in that subject area.

You rank as a result of others rating your writing.

Avoid toxic visitors. A page must meet its purpose well, without manipulation. Do people stay and interact with your page or do they go back to Google and click on other results?

A page should be explicit in its purpose and focus on the user.

The number 1 ‘user experience’ signal you can influence with low risk is improving content until it is more useful or better presented than what is found on competing pages for various related keyword phrases.

Your website is an entity. You are an entity. Explore concepts. Don’t repeat stuff. Be succinct.

Your business is the keywords that are on your pages.

What are the high-quality characteristics of a web page?

QUOTE: “High quality pages exist for almost any beneficial purpose…. What makes a High quality page? A High quality page should have a beneficial purpose and achieve that purpose well.” Google Search Quality Evaluator Guidelines, 2019

The following are examples of what Google calls ‘high-quality characteristics’ of a page and should be remembered:

- “A satisfying or comprehensive amount of very high-quality” main content (MC)

- Up-to-date copyright notices

- Functional page design

- Page author has Topical Authority

- High-Quality Main Content

- Positive Reputation or expertise of website or author (Google yourself)

- Very helpful SUPPLEMENTARY content “which improves the user experience.”

- Trustworthy

- Google wants to reward ‘expertise’ and ‘everyday expertise’ or experience so you need to make this clear (perhaps using an Author Box or some other widget)

- Accurate information

- Ads can be at the top of your page as long as they do not distract from the main content on the page

- Highly satisfying website contact information

- Customised and very helpful 404 error pages

- Awards

- Evidence of expertise

- Attention to detail

If Google can detect investment of time and labour on your site, there are indications that it will reward you for this (or at least that you won't be affected when others are, meaning you rise in Google SERPs when others fall).

Your primary aim is to create useful content for humans, not Google.

That’s just the start of it.

Read my SEO tutorial next.