After about a quarter of a century in the trenches of SEO (search engine optimisation), I’ve seen fads come and go. I’ve witnessed the rise and fall of countless ‘guaranteed ranking’ tactics and watched as the digital landscape shifted under our feet with every major algorithm update from Google Panda to the Helpful Content Update.

Through all this change, one principle has remained constant: the most sustainable success comes not from chasing algorithms, but from understanding the fundamental architecture of how a search engine perceives and organises information.

The recent insights into Google’s CompositeDoc data structure are, for SEO veterans, the closest we’ve ever come to seeing the blueprint. This isn’t another list of ranking factors; it’s a look at the very container that holds them, offering a profound opportunity to align our strategies with the core logic of the index itself.

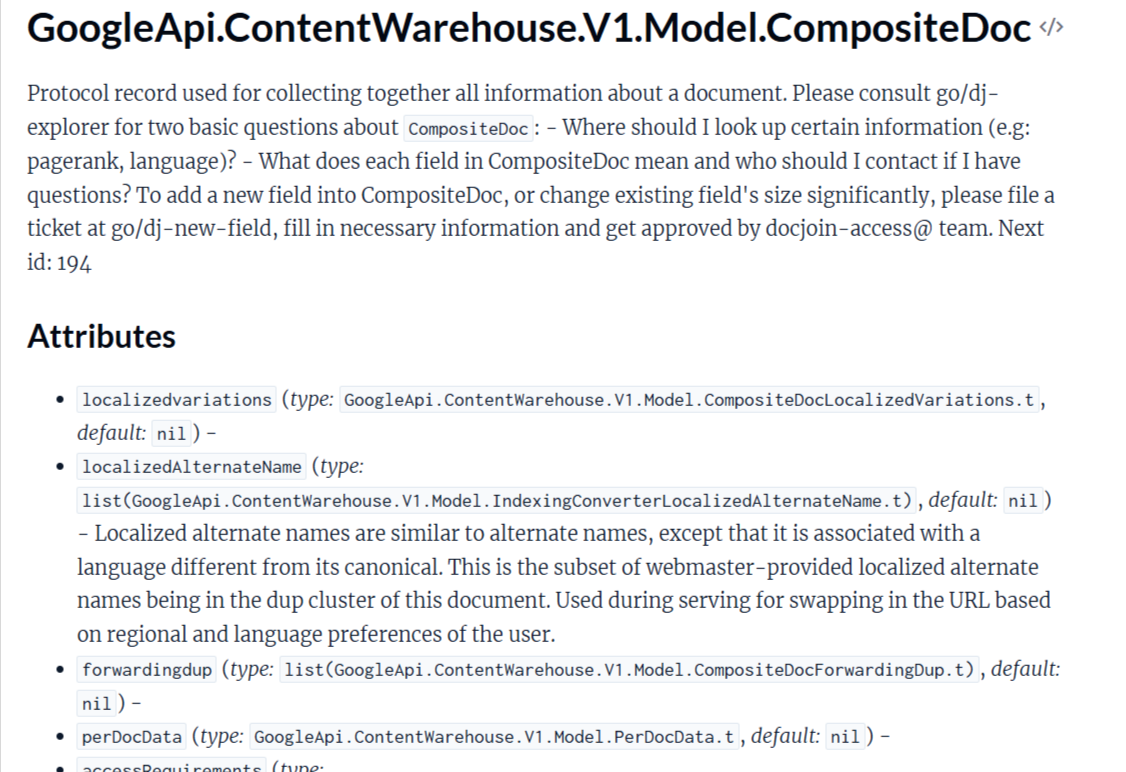

Within the complex architecture of Google’s search systems, the GoogleApi.ContentWarehouse.V1.Model.CompositeDoc stands as the foundational data object for any given URL. It is the master record, a comprehensive “protocol record” that aggregates all known information about a document.

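In computer science, a protocol is a set of rules for formatting and processing data, acting as a common language between systems. Here the term most likely points to Protocol Buffers (protobuf), the serialisation format Google uses to define structured records that its systems store and exchange. The CompositeDoc, therefore, represents the strict, structured format Google uses to internally catalogue, analyse, and “speak” about a web page.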

It is the central repository within the Content Warehouse where signals, ranging from raw textual content to sophisticated, calculated quality scores, are collected and organised.

Introduction: The Rosetta Stone of Google’s Index

The Content Warehouse itself is the core of Google’s indexing operation, a vast digital library responsible for storing, categorising, and analysing the web at an immense scale. The data held within each CompositeDoc does not directly determine rankings in isolation; rather, it serves as the foundational data layer that supports and informs other algorithmic processes and ranking modules, such as the system known as Mustang, which scores and ranks search results.

An analysis of the CompositeDoc structure provides an unprecedented view into the architectural priorities of Google’s indexing and quality assessment systems. It moves the practice of SEO away from an exercise in correlation and towards a more fundamental alignment with the data model Google itself uses to understand the web. By deconstructing this protocol record, it is possible to map its attributes directly to core SEO disciplines, revealing not just what Google values, but how those values are structured, measured, and stored.

To orient this deep analysis, the following framework provides a high-level map connecting key CompositeDoc attribute groups to their corresponding SEO disciplines and strategic implications. This table serves as a reference guide for the detailed examination that follows, translating the technical data structure into an actionable strategic tool.

| CompositeDoc Attribute/Group | Core SEO Discipline | Strategic Implication | Priority Action |
| --- | --- | --- | --- |
| forwardingdup, extradup, ContentChecksum96 | Technical SEO: Canonicalisation | Google expends significant resources to resolve URL ambiguity. Failure to provide clear signals wastes crawl budget and dilutes authority by splitting link equity. | Implement a comprehensive canonicalisation strategy using rel="canonical", 301 redirects, and consistent internal linking to establish a single source of truth. |
| doc, richcontentData, OriginalContentScore | Content Strategy & Quality | The core content is the foundational object. Its uniqueness, clarity, and value are primary inputs for quality algorithms. Dynamic content is analysed separately. | Create unique, people-first content. For critical information on JavaScript-heavy sites, employ server-side rendering (SSR) to ensure it is part of the primary content payload. |
| anchors, anchorStats, PageRankPerDocData | Off-Page SEO: Link Building | Link equity (PageRank) and anchor text context are stored and analysed on a per-document basis. Google aggregates anchor data to derive topical meaning. | Acquire high-quality, relevant backlinks. Cultivate a natural and diverse anchor text profile to avoid over-optimisation signals. |
| perDocData, qualitysignals, labelData | On-Page & Technical SEO: Quality Signals | Quality is not a single score but a composite of many signals, including freshness, mobile-friendliness, spam scores, and topical labels (e.g., YMYL). | Regularly update key content, ensure flawless mobile usability, and align with E-E-A-T principles, particularly for sensitive topics. |
| robotsinfolist, indexinginfo, badSslCertificate | Technical SEO: Indexing & Trust | Directives from webmasters (robots.txt, noindex) and fundamental trust signals (SSL) are stored per-document and form the basis of crawl and index eligibility. | Conduct regular technical audits to ensure correct indexing directives and maintain a secure site configuration (HTTPS). |
| richsnippet, docImages, docVideos | Technical & Content SEO: SERP Enhancement | Structured data and optimised multimedia are not just for appearance; they populate specific data fields that enable rich features and provide multi-modal relevance signals. | Implement comprehensive Schema.org markup. Use high-quality, thematically relevant images and videos with descriptive metadata to reinforce textual content. |

This framework illustrates that a holistic SEO strategy is one that optimises for the creation of a clean, authoritative, and comprehensive CompositeDoc for every important URL on a website. The subsequent sections of this report will dissect each of these areas in exhaustive detail, providing a blueprint for aligning digital strategy with the core architecture of Google Search.

Section 1: Document Identity and Content Fundamentals

This section analyses the attributes within the CompositeDoc that establish a document’s unique identity, its core content, and its dynamic components. A central theme emerges from these fields: Google’s fundamental and resource-intensive effort to achieve disambiguation. The system is architected to find the single, canonical version of any piece of content, reflecting the importance of resolving duplicate content issues before any further quality assessment can occur.

1.1 URL Canonicalisation and Duplicate Management: The Quest for a Single Source of Truth

The structure of the CompositeDoc reveals that canonicalisation is one of the most critical and complex challenges Google’s indexing systems are designed to solve. Rather than being a minor technical detail, it is a foundational aspect of how a document is identified and processed. The presence of numerous, distinct fields dedicated to managing duplication underscores its architectural importance. These fields include:

- forwardingdup: This field likely stores information about documents that are part of a redirect chain (e.g., 301 or 302 redirects), helping Google consolidate signals to the destination URL.

- extradup and alternatename: These attributes most likely manage other forms of duplicates or alternate URLs that belong to the same content cluster, such as those with tracking parameters, different protocol schemes (HTTP vs. HTTPS), or session IDs.

- localizedvariations and localizedAlternateName: These fields are specifically designed to handle internationalisation, managing different language or regional versions of a page, likely populated from hreflang annotations. They allow Google to serve the correct version to the user while understanding that these pages are part of a related set, not simple duplicates.

This extensive set of attributes demonstrates that Google’s process of identifying the canonical URL is not based on a single signal like a rel="canonical" tag, but is a sophisticated reconciliation of multiple data points. The system is built to handle the messy reality of the web, where a single piece of content can exist at dozens of different URLs.
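To make the idea of reconciliation concrete, here is a minimal Python sketch that scores each URL in a duplicate cluster on several hints at once and picks a winner. The signal names, weights, and tie-break rule are hypothetical and chosen only for illustration. Google has not published how it weighs these inputs.

```python
from dataclasses import dataclass

# Hypothetical signal weights for illustration only; Google's real weighting is unknown.
WEIGHTS = {
    "canonical_vote": 3.0,   # another URL in the cluster declares this one canonical
    "redirect_vote": 4.0,    # another URL in the cluster 301-redirects here
    "https": 1.0,            # URL is served over HTTPS
    "internal_link": 0.1,    # per internal link pointing at this URL
    "in_sitemap": 1.0,       # URL is listed in the XML sitemap
}


@dataclass
class UrlSignals:
    url: str
    canonical_votes: int = 0
    redirect_votes: int = 0
    internal_links: int = 0
    in_sitemap: bool = False


def score(s: UrlSignals) -> float:
    """Combine several weak hints into one number instead of trusting a single tag."""
    return (WEIGHTS["canonical_vote"] * s.canonical_votes
            + WEIGHTS["redirect_vote"] * s.redirect_votes
            + WEIGHTS["https"] * s.url.startswith("https://")
            + WEIGHTS["internal_link"] * s.internal_links
            + WEIGHTS["in_sitemap"] * s.in_sitemap)


def choose_canonical(cluster: list[UrlSignals]) -> str:
    # Highest combined score wins; ties go to the shorter URL.
    return max(cluster, key=lambda s: (score(s), -len(s.url))).url


cluster = [
    UrlSignals("http://example.com/shoes", internal_links=3),
    UrlSignals("https://example.com/shoes", canonical_votes=2, redirect_votes=1,
               internal_links=40, in_sitemap=True),
    UrlSignals("https://example.com/shoes?utm_source=mail", internal_links=1),
]
print(choose_canonical(cluster))  # https://example.com/shoes
```

The point of the design is that consistent signals reinforce each other. If the canonical tag, the redirects, and the internal links disagree, no single URL wins decisively.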

Further reinforcing this are the ContentChecksum96 and additionalchecksums attributes. These fields store fingerprints, or hashes, of a page’s visible content. These checksums allow Google to identify duplicate or near-duplicate pages at a massive scale, purely based on their content, irrespective of the URL, domain, or any on-page tags. A plain hash only matches content that is identical after normalisation. Catching near-duplicates requires similarity-preserving fingerprints, which the additional checksums may supply. It is a powerful, programmatic mechanism that underpins the advice given in SEO guides to avoid publishing duplicate content.
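The sketch below (Python 3.9+) illustrates the difference between an exact content checksum and a near-duplicate fingerprint:

- checksum96 is a hypothetical stand-in that truncates SHA-256 to 96 bits to echo the field name. The real hashing scheme behind ContentChecksum96 is not described here.
- simhash64 is the published SimHash technique, swapped in to show how near-duplicates can be detected. It is not confirmed to be what Google stores.

```python
import hashlib
import re


def normalise(text: str) -> str:
    # Collapse whitespace and case so trivial formatting changes don't alter the hash.
    return re.sub(r"\s+", " ", text).strip().lower()


def checksum96(text: str) -> int:
    """Hypothetical exact-duplicate fingerprint: first 96 bits of SHA-256."""
    digest = hashlib.sha256(normalise(text).encode("utf-8")).digest()
    return int.from_bytes(digest[:12], "big")


def simhash64(text: str) -> int:
    """SimHash over word 3-gram shingles: similar texts produce similar bit patterns."""
    words = normalise(text).split()
    shingles = [" ".join(words[i:i + 3]) for i in range(max(1, len(words) - 2))]
    counts = [0] * 64
    for shingle in shingles:
        h = int.from_bytes(hashlib.blake2b(shingle.encode("utf-8"), digest_size=8).digest(), "big")
        for bit in range(64):
            counts[bit] += 1 if (h >> bit) & 1 else -1
    return sum(1 << bit for bit in range(64) if counts[bit] > 0)


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


original = "Our blue running shoes are light, durable and made for long distances."
reformatted = "OUR blue running shoes   are light, durable and made for long distances."
reworded = "Our blue running shoes are light, durable and built for long distances."

print(checksum96(original) == checksum96(reformatted))  # True: only case/spacing differ
print(checksum96(original) == checksum96(reworded))     # False: one word changed
print(hamming(simhash64(original), simhash64(reworded)))  # bits differing out of 64; lower = more similar
```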

The architectural implications of this are profound. The sheer number of fields related to duplication suggests that a significant portion of Google’s crawling and processing resources is dedicated to this housekeeping task. Every CPU cycle Google’s systems spend determining whether URL A is a duplicate of URL B is a cycle not spent discovering new, unique content or analysing the quality of existing pages. This directly connects to the concept of “crawl budget”. Poor canonicalisation signals force Google to expend more of its finite resources on disambiguation. Examples include inconsistent internal links, broken redirect chains, and uncontrolled URL parameters. This does not merely risk splitting ranking signals like PageRank. It can actively slow the discovery and indexing of a site’s valuable content, because Googlebot’s crawl capacity is spent repeatedly fetching and resolving duplicates. Therefore, a robust technical SEO strategy for canonicalisation is a direct enabler of a successful content strategy.
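Uncontrolled parameters are one of the easiest ways to multiply duplicates. The following sketch shows how several crawled URLs can collapse to a few distinct pages once tracking and session parameters are stripped. The parameter list, the forced HTTPS scheme, and the function name are assumptions for a hypothetical site, not Google rules.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change page content on this example site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}


def normalise_url(url: str) -> str:
    parts = urlsplit(url)
    host = parts.netloc.lower()
    path = parts.path or "/"
    # Drop ignored parameters and sort the rest so parameter order can't create new URLs.
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k.lower() not in IGNORED_PARAMS)
    # Assume the site is fully on HTTPS; the #fragment is dropped entirely.
    return urlunsplit(("https", host, path, urlencode(query), ""))


crawled = [
    "http://Example.com/shoes?utm_source=mail",
    "https://example.com/shoes?sessionid=abc123",
    "https://example.com/shoes",
    "https://example.com/shoes?colour=blue&size=9",
    "https://example.com/shoes?size=9&colour=blue#reviews",
]
unique = {normalise_url(u) for u in crawled}
print(len(crawled), "crawled URLs ->", len(unique), "distinct pages")  # 5 crawled URLs -> 2 distinct pages
```

Each of the three redundant URLs is a fetch that discovers nothing new. That is the cost the consistent internal links and canonical tags are meant to avoid.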

Interestingly, the url field within the CompositeDoc itself is explicitly noted in the documentation as being “optional, and is usually missing.” The advice is to use CompositeDoc::doc::url instead. This technical detail reveals a critical nuance: for Google, the identity of a document is not simply its URL string. Rather, it is a more complex object (doc) that contains the URL as one of its properties. This reinforces the idea that Google’s goal is to index content, and the URL is merely the address where that content was found.

1.2 The Primary Content Record (doc): The Document’s Soul

At the heart of the CompositeDoc is the doc field, which is of the type GDocumentBase. This is the logical container for the core, processed content of the page. This is where the fundamental elements that Google analyses—the text, titles, headings, and other primary content—reside after being crawled and parsed. This object, along with other general containers like properties, represents the “information” that is ultimately stored in the Google index, the massive database that fuels search results. The quality, clarity, and relevance of the information within this doc object directly impact how Google understands the page’s topic, its value to users, and its eligibility to rank for relevant queries.

The architectural separation of the core content (doc) from the vast array of metadata and signals (such as qualitysignals, perDocData, and anchors) within the CompositeDoc structure is significant. It mirrors the ideal SEO approach: excellent content forms the foundation, and technical signals act as a wrapper that helps search engines understand and contextualise that content. The data structure is not flat; it is a nested hierarchy of objects, which suggests a logical separation of concerns within Google’s processing pipeline.

This architecture implies that Google’s systems are designed to first process the fundamental content (the “what” of the page, stored in doc) and then layer on dozens of additional signals to refine its understanding (the “about” of the page). This provides a technical, data-backed reinforcement of the long-standing SEO principle that “content is king.” More precisely, the content is the essential core object around which all other signals are aggregated. Without a strong, valuable, and unique doc object, even perfect technical signals have nothing of substance to enhance or contextualise.
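The following simplified Python model captures the nesting described above. It also shows why the documentation steers readers to CompositeDoc::doc::url rather than the top-level url.

Only the field names doc, url, qualitysignals, perDocData, and anchors come from the documentation. The member fields of GDocumentBase, all types, and the helper function are hypothetical simplifications. The real model is a much larger protobuf-generated structure.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GDocumentBase:
    """Core processed content: the 'what' of the page (heavily simplified)."""
    url: str
    title: str = ""
    body_text: str = ""


@dataclass
class CompositeDoc:
    """Wrapper that layers signals around the core doc (field set is illustrative)."""
    doc: GDocumentBase
    url: Optional[str] = None  # documented as optional and usually missing
    qualitysignals: dict = field(default_factory=dict)
    perDocData: dict = field(default_factory=dict)
    anchors: list = field(default_factory=list)


def document_url(cdoc: CompositeDoc) -> str:
    # Follow the documented advice: read CompositeDoc::doc::url, not the top-level url.
    return cdoc.doc.url


cdoc = CompositeDoc(doc=GDocumentBase(url="https://example.com/guide",
                                      title="A Guide", body_text="Main article text."))
print(cdoc.url)            # None
print(document_url(cdoc))  # https://example.com/guide
```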

1.3 Content Modifications and Inclusions: Understanding the Dynamic Page

Modern web pages are often not static HTML documents but are dynamically assembled in the user’s browser using JavaScript. The CompositeDoc structure contains specific fields that demonstrate Google’s sophisticated, differential model for understanding this dynamic content.

- richcontentData: This field is explicitly defined to store “information about what was inserted, deleted, or replaced in the document’s content.” This is direct evidence of Google’s processing of client-side rendered content. It confirms that Google’s rendering service, which is based on a recent version of Chrome, executes JavaScript and analyses the changes made to the Document Object Model (DOM). Google does not just see the initial HTML source; it also records the final, rendered state of the page.

- includedcontent: This field likely stores information about content that is embedded or included from other sources, for example, through iframes or server-side includes. This allows Google to understand the constituent parts of a page, even if they originate from different sources.

- embeddedContentInfo: This attribute stores data produced by the “embedded-content system,” specifically concerning JavaScript and CSS links. This is crucial for Google to understand all page dependencies, fetch the necessary resources, and render the page accurately, a process that is vital for seeing the page as a user would.

The existence of these specific fields, particularly richcontentData, indicates that Google maintains a highly nuanced model of a page’s content. A purely flat model would simply store the final, rendered HTML in the doc field. The use of a differential model—storing the “changes”—is more computationally efficient and, more importantly, provides richer information. It allows Google’s systems to distinguish between what on the page is static (present in the initial HTML payload) and what is dynamic (added by JavaScript).
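The insert/delete/replace idea behind richcontentData can be illustrated with Python’s standard difflib module, which reports exactly those operation types when comparing two token sequences. This is a sketch of a differential model in general, assuming whitespace-tokenised text. It does not reproduce Google’s actual rendering pipeline or storage format.

```python
import difflib


def content_diff(initial: list[str], rendered: list[str]) -> list[tuple[str, str, str]]:
    """List the non-equal edit operations that turn the initial text into the rendered text."""
    matcher = difflib.SequenceMatcher(a=initial, b=rendered, autojunk=False)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # op is "insert", "delete" or "replace"
            changes.append((op, " ".join(initial[i1:i2]), " ".join(rendered[j1:j2])))
    return changes


initial_html_text = "Blue Running Shoe Price: loading Reviews".split()
rendered_dom_text = "Blue Running Shoe Price: 79.99 In stock Reviews 4.8/5".split()
for change in content_diff(initial_html_text, rendered_dom_text):
    print(change)
# ('replace', 'loading', '79.99 In stock')
# ('insert', '', '4.8/5')
```

In this example the price and the rating exist only in the diff, not in the stable initial text. That is the situation the next paragraph warns about.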

This distinction could have significant implications for quality scoring and indexing. For example, if critical information, such as a product’s price or the main headline of an article, is only present via richcontentData, downstream systems might treat it differently from content present in the initial, stable HTML. It could be more susceptible to rendering errors or be weighted differently by quality algorithms. This provides a strong, data-backed argument for using server-side rendering (SSR) or static site generation (SSG) for a website’s most critical content. Delivering this information in the initial HTML places it in the most stable and fundamental part of the CompositeDoc, maximising its visibility to Google’s primary parsing systems.

Section 2: The Anatomy of Authority and Quality

This section dissects the attributes within the CompositeDoc that represent Google’s multifaceted assessment of a document’s quality, trustworthiness, and authority. These fields are where abstract SEO concepts such as E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), “site authority,” and content freshness are translated into concrete data points. The analysis of these signals reveals a sophisticated, data-driven system for evaluating content that extends far beyond simple keyword matching.

2.1 Per-Document Quality Signals (perDocData): The Document’s Report Card

The perDocData field is a critical nested model that acts as a detailed report card for a specific document, containing numerous fine-grained quality scores. It is a clear indication that quality assessment at Google is not a monolithic score but a collection of diverse signals. Key components within or related to perDocData include:

- Freshness Signals: The model contains multiple fields dedicated to a nuanced analysis of content freshness. freshboxArticleScores, semanticDateInfo, datesInfo, lastSignificantUpdate, syntacticDate (from the URL structure), and urldate (a separate extraction of a date from the URL) all point to a system that evaluates timeliness from various angles. The presence of highly technical timestamp fields like storageRowTimestampMicros and docjoinsOnSpannerCommitTimestampMicros further indicates that the precise time a document is updated in Google’s various storage and database systems is tracked with extreme granularity.

- Spam Signals: The presence of fields like uacSpamScore (User-Annotated Content spam) and spamtokensContentScore provides direct evidence of per-URL spam assessment. This confirms that Google maintains granular spam scores based on factors like user-generated content, which can impact a document’s overall quality profile.

- Site-level Influence: The hostAge attribute is noted as being used to “determine fresh spam and sandbox the spam”. The inclusion of a host-level attribute within the context of a per-document data model shows how site-wide factors can directly influence the assessment of an individual page.

- Mobile Optimisation: The smartphoneData field indicates that additional, specific metadata is stored for documents optimised for mobile devices. This provides a structural basis for mobile-first indexing, where the mobile version of a site is the primary one used for crawling and indexing, making mobile-friendliness a critical quality signal.

- User Engagement Feedback Loop: A particularly revealing attribute is impressions, which is described as being “directly fed back into the perDocData which feeds back into the CompositeDoc”. This suggests a direct feedback loop where search performance metrics, such as the number of impressions a URL receives in search results, can influence the document’s stored quality signals.

- Update Efficiency: The partialUpdateInfo attribute, present only in “partial cdocs,” suggests that Google can update specific parts of a document’s record without rewriting the entire entry. This points to a highly efficient system for processing changes, reinforcing the importance of freshness signals.
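Fields like urldate and syntacticDate imply extracting a date from the URL itself. The sketch below illustrates that kind of extraction for common patterns such as /2024/03/15/. The regular expression and the default-to-the-first-of-the-month rule are assumptions made for this example, not documented Google behaviour.

```python
import re
from datetime import date
from typing import Optional

# Hypothetical pattern: /YYYY/MM[/DD] or /YYYY-MM[-DD] segments in a URL path.
URL_DATE = re.compile(r"/(\d{4})[/-](\d{1,2})(?:[/-](\d{1,2}))?(?=[/-]|$)")


def url_date(url: str) -> Optional[date]:
    match = URL_DATE.search(url)
    if not match:
        return None
    year, month = int(match.group(1)), int(match.group(2))
    day = int(match.group(3)) if match.group(3) else 1  # month-only URLs default to day 1
    try:
        return date(year, month, day)
    except ValueError:  # e.g. /2024/13/ is not a real calendar date
        return None


print(url_date("https://example.com/news/2024/03/15/budget-analysis"))  # 2024-03-15
print(url_date("https://example.com/blog/2023-11-q4-review"))           # 2023-11-01
print(url_date("https://example.com/products/shoe-9"))                  # None
```

A date baked into the URL cannot change when the content is refreshed. It can therefore contradict an updated on-page date, which is one reason a system might store several date signals separately.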

The existence of an impressions feedback mechanism has significant strategic implications. It suggests a potential for a recursive quality loop or a momentum effect. A page that earns high impressions is demonstrating its visibility and potential relevance to a wide range of queries. If this data is fed back into its quality profile, it could reinforce the page’s perceived importance, potentially leading to continued or enhanced visibility. Conversely, a page that loses visibility and sees its impressions decline might experience a degradation of this particular quality signal over time, making it harder to regain its former rankings.

This mechanism helps explain the phenomenon where top-ranking pages can often seem “entrenched” in their positions. Their sustained high visibility continually validates their importance within Google’s systems. For SEO strategy, this means that achieving initial visibility for a new piece of content is of paramount importance. It is not enough to simply publish and wait. Strategies designed to “prime the pump” of this feedback loop could theoretically provide the initial signal boost needed to start this positive cycle. Examples include a strong internal linking push from high-traffic pages, targeted social media promotion, and even paid advertising to generate initial user interaction and search impressions.

2.2 Link Equity and Anchor Text Analysis: The Web’s Connective Tissue

The CompositeDoc confirms that links remain a cornerstone of Google’s authority assessment, with specific fields designed to store and analyse link data in a structured way.

- anchors: This field is the raw container for information about incoming links, including the anchor text used in the hyperlink. The documentation’s note that it is “non-personal” indicates that this is the unprocessed data about the links themselves, forming the basis for further analysis.

- anchorStats: This attribute stores an “aggregation of anchors”. The existence of this separate field implies a secondary processing step where Google moves beyond the raw link data to calculate summary statistics. This could include metrics like anchor text diversity, topical relevance scores based on the language of the anchors, and the distribution of different types of anchor text (e.g., branded, exact-match, generic).

- PageRankPerDocData: Research confirms that a field for PageRank is a key component of the data model, verifying that the foundational concept of link-based authority is still calculated and stored on a per-document basis.

- scaledIndyRank: While its exact function is not specified, the name of this field suggests another form of link-based ranking score, possibly derived from an earlier version of Google’s index, codenamed “Indy.”
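For reference, the classic PageRank calculation can be sketched as a power iteration over a link graph. This is the textbook formulation, with a 0.85 damping factor and rank from pages without outlinks redistributed evenly. How Google computes the values stored in PageRankPerDocData today is not documented, so treat this only as an illustration of the concept.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook power-iteration PageRank; pages with no outlinks share their rank evenly."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Every page gets the random-jump share plus its slice of dangling rank.
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


graph = {"home": ["about", "blog"], "about": ["home"], "blog": ["home", "about"]}
for page, value in sorted(pagerank(graph).items(), key=lambda kv: -kv[1]):
    print(f"{page:6} {value:.3f}")
```

The scores always sum to 1. A page’s share grows with both the number and the rank of the pages linking to it, which is why “home” ends up highest in this toy graph.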

The presence of both a raw anchors field and an aggregated anchorStats field points to a two-stage process for link analysis. First, Google’s systems collect the raw data of every incoming link. Second, they perform a synthesis to calculate the aggregate “meaning” of that link profile. This separation is computationally efficient, as the summary object (anchorStats) is much smaller than the potentially massive list of all individual links.

More importantly, this two-stage process allows for more sophisticated analysis. Instead of just counting links or summing PageRank, Google can analyse the entire distribution of the anchor text profile. This enables the system to identify topical themes, spot outliers that might indicate spammy or manipulative link building, and understand what a page is about in the collective “opinion” of the web. This means an effective link-building strategy should focus not just on acquiring links from high-authority pages, but on cultivating a healthy and natural anchor text profile. A diverse profile with a mix of branded, topic-relevant, and natural language anchors would likely result in a strong, positive anchorStats object. Conversely, an over-optimised profile with a high percentage of repetitive, exact-match anchor text could be flagged as manipulative during the aggregation process, potentially neutralising the value of the links or even acting as a negative signal.
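Below is a minimal sketch of the kind of aggregation an anchorStats-style summary could perform. It buckets each anchor by type, computes each type’s share, and measures diversity with Shannon entropy. The categories, the generic-phrase list, and the 50% exact-match threshold are hypothetical choices for illustration, not values from the documentation.

```python
import math
from collections import Counter

GENERIC = {"click here", "here", "read more", "this site", "website"}


def classify_anchor(text: str, brand: str, target_phrase: str) -> str:
    """Bucket one anchor text into a coarse, illustrative type."""
    t = text.lower().strip()
    if t.startswith(("http://", "https://", "www.")):
        return "naked_url"
    if brand.lower() in t:
        return "branded"
    if t == target_phrase.lower():
        return "exact_match"
    if t in GENERIC:
        return "generic"
    return "topical"


def anchor_stats(anchors: list[str], brand: str, target_phrase: str) -> dict:
    counts = Counter(classify_anchor(a, brand, target_phrase) for a in anchors)
    total = sum(counts.values())
    shares = {kind: n / total for kind, n in counts.items()}
    # Shannon entropy (bits) of the type distribution: higher means a more varied profile.
    entropy = -sum(p * math.log2(p) for p in shares.values())
    exact = shares.get("exact_match", 0.0)
    return {"shares": shares, "entropy_bits": entropy, "exact_match_share": exact,
            "looks_over_optimised": exact > 0.5}  # hypothetical threshold


natural = ["Acme Shoes", "acme", "running shoes for flat feet", "click here",
           "https://acme.example", "blue running shoes"]
over_optimised = ["blue running shoes"] * 9 + ["Acme Shoes"]

for name, profile in [("natural", natural), ("over-optimised", over_optimised)]:
    stats = anchor_stats(profile, brand="Acme", target_phrase="blue running shoes")
    print(name, round(stats["entropy_bits"], 2), stats["looks_over_optimised"])
```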

2.3 Site-Level Authority and Topical Relevance: The Halo Effect

A document does not exist in a vacuum; its individual quality assessment is heavily contextualised by the authority and topical focus of the entire domain on which it resides. While some of these signals may not be direct fields in the top level of the CompositeDoc, research indicates they are closely associated with the document’s data and play a crucial role in its evaluation.

- siteAuthority: The leaked documentation confirms the existence of a “siteAuthority” metric, a concept long debated in the SEO community. This suggests an overall credibility score for a website that influences the evaluation of its individual pages.

- siteFocusScore and siteRadius: These attributes provide a measure of a site’s topical specialisation. A high siteFocusScore signifies a strong concentration on a specific topic, while siteRadius measures how far a particular page deviates from that core topic.

- site2vecEmbeddingEncoded: This field represents a compressed “site embedding”. This is a sophisticated machine-learning technique where the entire site’s content and theme are converted into a numerical vector in a multi-dimensional space. This allows Google’s systems to perform complex calculations of topical similarity and clustering, comparing one site’s focus to another’s with mathematical precision.

The influence of these site-level signals on a per-document record is profound. It means that the perceived quality of an individual article can be either amplified or suppressed based on the established topical authority of the host domain. For example, a well-researched article on financial planning published on a highly focused financial news website (high siteFocusScore for finance, small siteRadius for the article) will likely be evaluated more favourably than the exact same article published on a general lifestyle blog. The latter article, while potentially high-quality in isolation, would have a large siteRadius, signalling to Google that it is an outlier from the site’s primary theme.
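One way to picture siteFocusScore and siteRadius is with cosine similarity, assuming they are derived from embeddings like site2vecEmbeddingEncoded. Average a site’s page vectors into a centroid, then measure how far a page sits from it. The toy three-dimensional vectors (finance, lifestyle, travel) and both formulas below are hypothetical. Real embeddings have many more dimensions, and how they are scored is undocumented.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def centroid(vectors: list[list[float]]) -> list[float]:
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def site_focus(site_pages: list[list[float]]) -> float:
    """Hypothetical focus score: mean similarity of each page to the site centroid (1.0 = single topic)."""
    c = centroid(site_pages)
    return sum(cosine(p, c) for p in site_pages) / len(site_pages)


def site_radius(page: list[float], site_pages: list[list[float]]) -> float:
    """Hypothetical radius: cosine distance of one page from the site centroid (0.0 = on-topic)."""
    return 1.0 - cosine(page, centroid(site_pages))


# Toy embeddings with dimensions [finance, lifestyle, travel].
finance_site = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.95, 0.05, 0.0]]
lifestyle_site = [[0.1, 0.9, 0.2], [0.0, 0.7, 0.6], [0.2, 0.8, 0.1]]
article = [0.9, 0.1, 0.05]  # the same financial-planning article on either site

print(f"radius on finance site:   {site_radius(article, finance_site):.3f}")
print(f"radius on lifestyle site: {site_radius(article, lifestyle_site):.3f}")
```

The article itself does not change between the two runs. Only its position relative to the host site’s centroid does, which is the “halo effect” in miniature.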

This provides a strong, data-driven argument against diluting a website’s topical focus. The practice of “pruning” content that deviates significantly from a site’s core area of expertise is supported by this model, as it would help to strengthen the site’s overall siteFocusScore and reduce the average siteRadius of its pages. A page inherits a “halo effect”, either positive or negative, from the reputation and focus of its domain.

2.4 Holistic Quality Assessment (qualitysignals): The E-E-A-T Engine

The qualitysignals field acts as a generic but vital container for a wide range of data related to a document’s overall quality. This is likely where many of the signals that contribute to the abstract concepts of E-E-A-T and YMYL (Your Money or Your Life) are stored and processed.

- OriginalContentScore: The existence of a score for originality highlights Google’s programmatic emphasis on unique, valuable content and its efforts to combat duplicate or low-quality, rewritten pages.

- labelData: This field is particularly important, as it “associates a document to particular labels and assigns confidence values to them.” This is a powerful classification system. It is likely where Google stores labels such as “Health,” “Finance,” or “News,” which would identify the content as YMYL and trigger higher standards of quality evaluation.

The concept of E-E-A-T is not a single, direct score but is better understood as a composite result derived from the interplay of multiple fields within the CompositeDoc. Google’s systems operationalise this abstract concept through concrete data points:

- Expertise and Authoritativeness can be algorithmically proxied by analysing the site’s topical focus (siteFocusScore), the strength of its backlink profile (PageRankPerDocData), and the topical relevance of its incoming links (anchorStats). Author influence, another key factor, is also likely considered.

- Trustworthiness can be informed by a wide array of signals, including technical trust factors like the absence of a badSslCertificate, domain history from registrationinfo, and low spam scores from perDocData.

- Experience is more challenging to measure programmatically but could be inferred from signals like the OriginalContentScore (indicating unique, first-hand content) or through natural language processing of the text within the doc object to identify linguistic patterns associated with personal experience.

Therefore, improving a site’s E-E-A-T is not about optimising for a single, imaginary metric. It is about systematically improving the entire portfolio of signals stored in the CompositeDoc. This holistic view, grounded in the data structure of the index itself, provides a clear path for creating content that aligns with Google’s quality principles.

Section 3: Technical Directives and Indexing Controls

This section focuses on the CompositeDoc attributes that function as direct instructions from a webmaster to Google’s crawlers and indexers. These fields form the bedrock of technical SEO, governing how a document is discovered, whether it is included in the index, and how it is presented to users in different contexts. They represent the “rulebook” for the interaction between a website and the search engine.

3.1 Crawl, Index, and Sitemap Instructions: The Rulebook

The CompositeDoc stores the processed outcomes of the most fundamental technical SEO directives, ensuring these instructions are associated with the document throughout its lifecycle in Google’s systems.

- robotsinfolist: This field contains the processed information derived from a website’s robots.txt file. Its presence as a per-document attribute confirms that the directives in this file (e.g., Disallow) are not just ephemeral instructions used at crawl time, but are stored as a persistent property of the document. This allows Google’s systems to consistently apply these rules without needing to re-fetch the robots.txt file for every action related to the URL.

- indexinginfo: This is a more general container for indexing-related data. It is the logical place where directives from on-page meta robots tags (e.g., noindex, nofollow) are stored. A noindex directive in this field would effectively flag the CompositeDoc for exclusion from the serving index.

- sitemap: This attribute comes with a critical warning in its documentation: it is used for “Sitelinks,” which are the collection of important links that may appear under a site’s main result in the SERPs. The documentation explicitly states this is “different from the crawler Sitemaps (see SitemapsSignals in the attachments).” This is a crucial distinction that clarifies a common point of confusion.
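The directives stored in robotsinfolist and indexinginfo can be evaluated with Python’s standard library, which is a convenient way to audit them. The sketch makes one distinction explicit: a robots.txt Disallow governs crawling, while a meta robots noindex governs indexing.

The robots.txt rules and HTML are made-up examples. Python’s urllib.robotparser uses simple prefix matching, so it will not reproduce every nuance of Googlebot’s own parser (for example, mid-path wildcards).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Example robots.txt for a hypothetical shop.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""


def crawl_allowed(url: str, user_agent: str = "Googlebot") -> bool:
    """Crawl eligibility: does robots.txt permit fetching this URL?"""
    rules = RobotFileParser()
    rules.parse(ROBOTS_TXT.splitlines())
    return rules.can_fetch(user_agent, url)


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives |= {d.strip().lower() for d in content.split(",") if d.strip()}


def index_directives(html: str) -> set[str]:
    """Index eligibility: which meta robots directives does the page declare?"""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(crawl_allowed("https://example.com/shoes"))          # True
print(crawl_allowed("https://example.com/checkout/pay"))   # False
print(sorted(index_directives(page)))                      # ['follow', 'noindex']
```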

The specific purpose of the sitemap field—for generating SERP Sitelinks—implies that Google’s internal concept of a “sitemap” for this purpose is distinct from the webmaster’s XML sitemap. The XML sitemap is primarily a discovery and crawl prioritisation tool; it informs Google about the existence and importance of URLs. The data in the CompositeDoc’s sitemap field, however, is a product of Google’s analysis, not a direct input from the webmaster.

This leads to a key understanding of how Sitelinks are generated. They are not manually configured or directly dictated by an XML sitemap. Instead, the links that Google’s algorithms choose to populate into this sitemap field are likely derived from an analysis of the site’s structure and internal linking patterns. Prominent, consistently used links in primary navigation menus, footers, and high-level internal links are the most probable sources for this data. Therefore, influencing what appears in Sitelinks is not an exercise in XML sitemap optimisation, but rather a function of creating a clear, logical, and hierarchical site architecture that signals the most important pages to both users and Google.
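If Sitelinks candidates are indeed drawn from internal linking patterns, one rough audit is to count how many distinct pages link to each URL. The heuristic below is a hypothetical approximation for auditing your own site, not Google’s selection algorithm. That includes its exclusion of the homepage and self-links.

```python
from collections import Counter


def sitelink_candidates(pages: dict[str, list[str]], homepage: str = "/",
                        top_n: int = 4) -> list[str]:
    """Rank internal URLs by the number of distinct pages linking to them (hypothetical heuristic)."""
    inbound: Counter = Counter()
    for source, targets in pages.items():
        for target in set(targets):  # count each linking page only once
            if target not in (source, homepage):
                inbound[target] += 1
    return [url for url, _ in inbound.most_common(top_n)]


nav = ["/", "/shoes", "/boots", "/sale", "/about"]  # main-menu links present on every page
site = {
    "/": nav,
    "/shoes": nav + ["/shoes/blue"],
    "/boots": nav,
    "/sale": nav + ["/shoes/blue"],
    "/about": nav,
    "/shoes/blue": ["/", "/shoes"],
}
print(sitelink_candidates(site))
```

Pages in the persistent navigation dominate the counts. This matches the intuition that a clear, consistent site architecture is the lever for influencing Sitelinks.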

3.2 Specialised Indexing Tiers

Beyond the main web index, the CompositeDoc contains attributes that show how Google manages inclusion in specialised or separate indexes, often using quality thresholds.

- subindexid: This field likely indicates which specific sub-index or vertical search a document belongs to, suggesting a more complex index structure than a single monolithic database.

- cseId and csePagerankCutoff: These fields relate directly to Google’s Custom Search Engine (CSE) product. The cseId identifies which custom search engines a URL is part of, while csePagerankCutoff reveals a fascinating mechanism: a URL is only selected for a CSE if its PageRank is above a certain threshold. This is a direct confirmation that PageRank is still actively used as a quality gate for inclusion in certain parts of Google’s ecosystem.

3.3 Security, Trust, and Provenance: The Digital Passport

The CompositeDoc also contains fields that act as a digital passport for a document, providing fundamental signals of security, trust, and origin. These attributes are often binary but carry significant weight in establishing a baseline of trustworthiness.

- badSslCertificate: This is a simple but powerful boolean flag. When set, it indicates that the page, or a URL in its redirect chain, has a faulty SSL certificate. In an era where HTTPS is a baseline expectation and a minor ranking signal, this attribute acts as a direct, negative trust indicator that can impact both ranking and user experience (via browser warnings).

- registrationinfo: This field contains information about the domain’s creation and expiration dates. This is the likely source for the hostAge signal mentioned in the research, which is used to identify and “sandbox” potential “fresh spam”. This reframes the long-standing debate in the SEO community about the importance of domain age. The data suggests that age is not a direct factor for authority (an older domain is not inherently better than a newer one). Instead, it functions as a risk management flag. A brand-new domain lacks a history of trust signals, making it a higher-risk entity for spam. Google’s systems may, therefore, temporarily limit its visibility or scrutinise it more heavily (the “sandbox” effect) until it accumulates a sufficient number of positive trust signals. The strategic implication for new domains is the critical need to rapidly build these signals—acquiring high-quality backlinks, ensuring technical perfection (like the absence of a badSslCertificate), and publishing authoritative content—to shorten this initial probationary period.

- ptoken and accessRequirements: These attributes point to the increasing importance of data governance and privacy. The ptoken is a “policy token” for making decisions on data usage, while accessRequirements contains information to enforce access control. Together, they suggest a robust internal system for managing content that may be subject to specific legal or policy constraints (e.g., GDPR, copyright), which is another layer of establishing a document’s official status and trustworthiness.
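The badSslCertificate description covers the whole redirect chain, not just the final URL. The sketch below models that rule, assuming each hop's certificate has already been fetched into a dict shaped like the one Python's `ssl.SSLSocket.getpeercert()` returns (which is empty when the certificate was not validated). Only expiry is checked; hostname mismatches, untrusted issuers and revocation, which a real check would also need, are left out, and the function name is hypothetical.

```python
import ssl
import time
from typing import Optional


def chain_has_bad_certificate(chain_certs: list[dict], now: Optional[float] = None) -> bool:
    """Flag a redirect chain if any hop's certificate is missing or expired.

    Each item is a getpeercert()-style dict, or {} when no certificate
    could be validated for that hop.
    """
    now = time.time() if now is None else now
    for cert in chain_certs:
        not_after = cert.get("notAfter")
        if not not_after:
            return True  # no validated certificate for this hop
        if ssl.cert_time_to_seconds(not_after) < now:
            return True  # certificate has expired
    return False


redirect_chain = [
    {"notAfter": "Jan  1 00:00:00 2099 GMT"},  # first hop: valid
    {"notAfter": "Jan  1 00:00:00 2020 GMT"},  # final URL: expired
]
print(chain_has_bad_certificate(redirect_chain))  # True
```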

3.4 Global and Local Signals: Speaking the User’s Language

A significant portion of the CompositeDoc is dedicated to understanding a document’s geographical and linguistic context, ensuring that the right content is served to the right user.

- localizedvariations and localizedAlternateName: These fields are essential for managing international websites. They store the relationships between different language and regional versions of a document, most likely populated by processing hreflang annotations. Their function is to enable Google to swap in the correct URL in the search results based on the user’s location and language preferences, preventing users from landing on the wrong version of a page.

- localinfo: This is a container for local business information, referencing the LocalWWWInfo model. Research details its rich sub-fields, including address, brickAndMortarStrength, geotopicality, and isLargeChain. This structure forms the data backbone of Local SEO.
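The URL swap that localizedvariations enables can be illustrated with a simplified version of hreflang matching. This sketch assumes the alternates have already been parsed from hreflang annotations into a dict keyed by language or language-region code. It prefers an exact language-region match, then a language-only match, then the `x-default` entry; that order is a reasonable reading of Google's public hreflang guidance, not a description of Google's internal selection logic, and the function name is hypothetical.

```python
from typing import Optional


def pick_localized_url(alternates: dict[str, str], user_locale: str) -> Optional[str]:
    """Choose the alternate URL that best fits a user's locale (simplified)."""
    # hreflang codes are case-insensitive, so normalise the keys once.
    normalised = {code.lower(): url for code, url in alternates.items()}
    locale = user_locale.lower().replace("_", "-")
    language = locale.split("-")[0]
    # Exact language-region, then language only, then the fallback page.
    for candidate in (locale, language, "x-default"):
        if candidate in normalised:
            return normalised[candidate]
    return None


alternates = {
    "en-GB": "https://example.com/uk/",
    "en": "https://example.com/en/",
    "de": "https://example.com/de/",
    "x-default": "https://example.com/",
}
print(pick_localized_url(alternates, "en-GB"))  # https://example.com/uk/
print(pick_localized_url(alternates, "en-US"))  # https://example.com/en/
print(pick_localized_url(alternates, "fr-FR"))  # https://example.com/
```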

The brickAndMortarStrength signal within localinfo is particularly noteworthy. While an address is a simple, factual data point, “strength” is a graded assessment that must be calculated. This suggests that Google has a quantitative measure of a business’s physical presence that goes beyond simply verifying an address. This score could be a composite derived from a multitude of signals available across Google’s ecosystem. These might include the completeness and activity of the Google Business Profile (GBP), the number and quality of user-submitted photos of the location, the volume and sentiment of reviews that mention the physical premises, aggregated and anonymised location data from Android devices, and the consistency of the business’s Name, Address, and Phone number (NAP) across the wider web.

This implies that effective Local SEO is not just about having the correct address on a contact page. It is about building a rich, consistent, and verifiable data footprint for the physical location across Google’s entire ecosystem. A business with a well-managed GBP, numerous photos, and consistent citations will likely have a much stronger brickAndMortarStrength score, which in turn enhances the authority of its associated CompositeDoc for local queries.
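NAP consistency is the most directly checkable of these inputs, so here is a minimal sketch of a citation audit. It normalises each listing's name, address and phone number and reports which fields still disagree across sources. The normalisation rules (lower-casing, stripping punctuation, keeping only phone digits) are simplifying assumptions and say nothing about how Google compares citations or computes brickAndMortarStrength.

```python
import re


def normalise_text(value: str) -> str:
    # Lower-case, drop punctuation and collapse runs of whitespace.
    cleaned = re.sub(r"[^\w\s]", "", value.lower())
    return " ".join(cleaned.split())


def normalise_phone(value: str) -> str:
    # Keep digits only, so "+44 113 496 0000" and "+44 (113) 496-0000" match.
    return re.sub(r"\D", "", value)


def inconsistent_nap_fields(listings: list[dict[str, str]]) -> list[str]:
    """Return the NAP fields whose normalised values differ across listings."""
    normalisers = {"name": normalise_text, "address": normalise_text, "phone": normalise_phone}
    return [
        field
        for field, normalise in normalisers.items()
        if len({normalise(listing[field]) for listing in listings}) > 1
    ]


listings = [
    {"name": "Acme Bakery Ltd.", "address": "1 High St, Leeds", "phone": "+44 113 496 0000"},
    {"name": "Acme Bakery Ltd", "address": "1 High St., Leeds", "phone": "+44 (113) 496-0000"},
    {"name": "Acme Bakery", "address": "1 High Street, Leeds", "phone": "0113 496 0000"},
]
print(inconsistent_nap_fields(listings))  # ['name', 'address', 'phone']
```

The first two listings agree once punctuation is ignored; the third differs on every field, including a national-format phone number that a more careful audit would first convert to a single international format.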

Section 4: Rich Media, Structured Data, and Content Classification

This section explores how the CompositeDoc structure accommodates non-textual content and structured data. These attributes allow Google to move beyond a simple text-based understanding of a document, enabling the creation of rich, engaging search results and applying sophisticated content classification filters. This demonstrates a clear architectural shift towards a multi-modal and semantically structured index.

4.1 The Power of Structured Data (richsnippet)

Structured data, typically implemented using Schema.org markup, is a critical component of modern SEO, and its importance is reflected directly in the CompositeDoc.

- richsnippet: This field is explicitly designed to store the “rich snippet extracted from the content of a document” and is of the type RichsnippetsPageMap. This attribute is the direct destination for parsed and validated structured data found on a page. Its presence is what makes a page eligible for a wide array of special features in the search results, such as review stars, recipe information, event details, and product pricing. Case studies have shown that gaining these rich features can dramatically increase click-through rates, traffic, and conversions.

While Google’s official position is often that structured data is not a direct ranking factor, the CompositeDoc reveals a more nuanced reality: structured data directly shapes how a page’s information is structured in the index. It performs a crucial function by transforming ambiguous, unstructured content from the doc field into a clean, precise, machine-readable format within the richsnippet field.

This process has a clear causal chain that indirectly influences rankings. By implementing structured data, a webmaster is essentially pre-processing their content for Google, removing ambiguity and explicitly defining key entities (e.g., “£19.99 is the price,” “2024-12-25 is the event date,” “45 minutes is the cooking time”). This clean, structured data is far easier for Google’s ranking and feature-generation systems to consume and trust. While this does not confer a ranking boost in the same way as PageRank, it dramatically increases the probability that the page will be correctly and fully understood. This correct understanding makes the page eligible for rich features, which in turn makes the search result more visually appealing and informative, leading to a higher click-through rate (CTR). A higher CTR is a positive user engagement signal, which can be a factor in ranking algorithms. Therefore, structured data initiates a virtuous cycle by improving data quality, which enhances SERP appearance, which drives user engagement, which can positively influence rankings.
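To illustrate the “pre-processing” described above, here is a sketch that emits Schema.org Product markup as JSON-LD, stating the price and currency explicitly instead of leaving Google to infer them from page text. The product details are invented; `Product`, `Offer`, `price`, `priceCurrency` and `availability` are genuine Schema.org terms, but check Google's structured data documentation for the properties a given rich result actually requires.

```python
import json


def product_jsonld(name: str, price: str, currency: str, in_stock: bool) -> str:
    """Build a Schema.org Product/Offer JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,  # e.g. "19.99": a plain number, no currency symbol
            "priceCurrency": currency,  # ISO 4217 code, e.g. "GBP"
            "availability": (
                "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
            ),
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(product_jsonld("Example Widget", "19.99", "GBP", in_stock=True))
```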

4.2 Multimedia SEO: Image and Video Analysis

Google’s understanding of a page’s topic is multi-modal, extending far beyond the text. The CompositeDoc contains dedicated fields for storing detailed analyses of embedded images and videos.

- docImages and docVideos: These fields store comprehensive information about selected images (ImageData) and videos (ImageRepositoryVideoProperties) associated with the document. The documentation notes a complex selection process for which images are deemed “selected” for a given document, implying an algorithmic assessment of an image’s importance and relevance to the page content.

- The data stored for these multimedia elements is extensive. For videos, it can include thumbnails, transcripts, and even extracted keyframes. For images, it includes data essential for Image Search indexing.

- Crucially, the analysis goes deeper, utilising advanced machine learning models. Attributes like ImageUnderstandingIndexingAnnotation and VideoRepositoryAmarnaSignals store the outputs from these models, which can detect and label objects, faces, logos, and even extract text (OCR) from within the media files.

This architecture demonstrates that Google does not just see an <img> tag or a <video> tag; it “sees” the content of the image and “watches” the content of the video. The entities, concepts, and text extracted from this multimedia analysis are then associated with the parent CompositeDoc.

This has profound implications for content strategy. The media on a page can either strongly reinforce or actively contradict the textual content. A page about “German Shepherd training” that features high-quality, original images and videos clearly identified by ML models as containing “German Shepherd dogs,” “people,” and “training activities” will have its topical relevance score powerfully reinforced. The CompositeDoc for that page becomes a cohesive, multi-modal signal of its subject matter. Conversely, a page with the same text but using generic, irrelevant stock photos will present a weaker, more ambiguous signal to Google. The textual content may be strong, but it lacks the corroborating evidence from the visual media. Therefore, modern image and video SEO is not merely about optimising file names and alt text; it is about a deliberate strategy of selecting and creating media that is thematically aligned with the core content to create a unified and powerful signal of relevance for the entire document.
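Google's machine-learning image analysis cannot be reproduced locally, but a crude first-pass audit can at least surface images whose alt text says nothing about the page's topic. This sketch uses Python's standard `html.parser` module; checking alt text against a hand-picked set of topic terms is an illustrative heuristic only, not how ImageUnderstandingIndexingAnnotation works, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Collect (src, alt) pairs for every <img> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.images: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # Valueless attributes arrive as None, so fall back to "".
            self.images.append((attributes.get("src") or "", attributes.get("alt") or ""))


def off_topic_images(html: str, topic_terms: set[str]) -> list[str]:
    """Return src values of images whose alt text mentions none of the topic terms."""
    collector = ImageCollector()
    collector.feed(html)
    flagged = []
    for src, alt in collector.images:
        if not set(alt.lower().split()) & topic_terms:
            flagged.append(src)
    return flagged


page = """
<h1>German Shepherd training</h1>
<img src="/img/shepherd-recall.jpg" alt="German Shepherd practising recall with its handler">
<img src="/img/stock-office.jpg" alt="Smiling team in an office">
<img src="/img/spacer.gif">
"""
print(off_topic_images(page, {"german", "shepherd", "training", "dog"}))
# ['/img/stock-office.jpg', '/img/spacer.gif']
```

Flagged images are prompts for review, not errors: purely decorative images legitimately carry empty alt text, and the simple whitespace tokenisation means punctuation attached to a word will stop it matching.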

4.3 Content Classification and Safety Filters

The CompositeDoc also includes powerful attributes for content classification, which are essential for applying safety filters like SafeSearch and for handling sensitive content categories with appropriate scrutiny.

- porninfo: This field, of the type ClassifierPornDocumentData, is an explicit and direct attribute used for “image and web search porn classification.” Its presence indicates a robust system for identifying adult content and filtering it from search results for users with SafeSearch enabled.

- labelData: As discussed previously, this field provides a more general-purpose classification system. It assigns “particular labels” to a document along with “confidence values.” This probabilistic approach is far more nuanced than a simple binary classification.

The use of “confidence values” in labelData means that Google’s classification is not always a simple yes/no decision. A document might be classified as “85% confident this is medical content” or “60% confident this is adult-oriented.” This probabilistic model allows for more flexible and nuanced policy application. For instance, content that is classified with 99% confidence as being pornographic can be filtered aggressively and universally. Content that has a lower confidence score might be treated differently—perhaps being restricted in certain regions with stricter laws, or only being shown to users who have explicitly disabled SafeSearch.
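The tiered handling described above can be expressed as a simple threshold policy. The 0.99 threshold echoes the example in the text; the 0.6 threshold, the "adult" label key and the action names are hypothetical, since the leak shows that labelData carries confidence values but not which thresholds or actions any system applies.

```python
from enum import Enum


class Action(Enum):
    FILTER_EVERYWHERE = "filter for all SafeSearch users"
    RESTRICT = "restrict, e.g. by region or SafeSearch setting"
    NO_ACTION = "serve normally"


def adult_content_action(label_confidences: dict[str, float],
                         high: float = 0.99, medium: float = 0.6) -> Action:
    """Map a hypothetical 'adult' label confidence to a policy action."""
    confidence = label_confidences.get("adult", 0.0)
    if confidence >= high:
        return Action.FILTER_EVERYWHERE
    if confidence >= medium:
        return Action.RESTRICT
    return Action.NO_ACTION


print(adult_content_action({"adult": 0.995}))                 # Action.FILTER_EVERYWHERE
print(adult_content_action({"adult": 0.6, "medical": 0.85}))  # Action.RESTRICT
print(adult_content_action({"medical": 0.85}))                # Action.NO_ACTION
```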

For SEO professionals and content creators, this highlights the importance of clarity and the avoidance of ambiguity, especially when dealing with topics that are on the borderline of being sensitive. If content could be misconstrued (e.g., educational health content that uses clinical diagrams), it is crucial that the text, surrounding context, images, and outbound links all work together to clearly signal its non-adult or non-harmful intent. The goal is to ensure that if Google’s classifiers analyse the page, they assign it the correct label with high confidence, preventing it from being inadvertently caught in a filter that would limit its visibility to the intended audience.

4.4 Extensible Data Containers: docAttachments

A key architectural feature of the CompositeDoc is its extensibility, demonstrated by fields like docAttachments. This attribute is described as a “generic container to hold document annotations and signals.” It functions as a flexible attachment point where new types of data and signals can be added to a document’s record without needing to change the core CompositeDoc structure.

Another related field, docinfoPassthroughAttachments, is used for data that is pushed into the index but is explicitly designated not to be used in core ranking or snippeting code. This data is automatically returned in information requests and can be used by other systems, such as those that display special features in the search results.

These “attachment” fields are incredibly significant. They show that the CompositeDoc is not a static, rigid structure but a living data object designed to evolve. As Google develops new ways to analyse content or new features for search, it can simply create a new “attachment” to carry this data. This provides a mechanism for future-proofing the entire indexing system and explains how Google can continuously add new signals and capabilities to its understanding of the web.
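The attachment pattern is easiest to see in a simplified model. This sketch uses Python dataclasses as a stand-in for Google's internal record format: a fixed core, plus two open-ended maps keyed by attachment type. The class, method and attachment names are hypothetical; they only illustrate the documented rule that passthrough data is returned to callers but kept out of core ranking.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class CompositeDocSketch:
    """Hypothetical, heavily simplified model of the attachment pattern."""
    url: str
    content: str
    # Generic container: new signal types can be added without schema changes.
    doc_attachments: dict[str, Any] = field(default_factory=dict)
    # Returned in information requests, but not meant for ranking or snippeting.
    passthrough_attachments: dict[str, Any] = field(default_factory=dict)

    def rankable_signals(self) -> dict[str, Any]:
        """Signals a ranking stage may read: passthrough data is excluded."""
        return dict(self.doc_attachments)

    def response_payload(self) -> dict[str, Any]:
        """Everything returned to callers, passthrough data included."""
        return {**self.doc_attachments, **self.passthrough_attachments}


doc = CompositeDocSketch("https://example.com/", "<html></html>")
doc.doc_attachments["new_quality_signal"] = 0.73  # a signal type added later
doc.passthrough_attachments["serp_feature_hint"] = {"badge": "new"}
print(sorted(doc.rankable_signals()))  # ['new_quality_signal']
print(sorted(doc.response_payload()))  # ['new_quality_signal', 'serp_feature_hint']
```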

Section 5: Strategic Synthesis and Actionable Frameworks

The detailed deconstruction of the CompositeDoc provides more than just technical knowledge; it offers a blueprint for a more sophisticated and effective SEO strategy. By synthesising the analysis of its structure, it is possible to develop practical frameworks that align SEO efforts directly with Google’s core data model. This approach moves beyond chasing transient algorithm updates and focuses on building digital assets that are fundamentally sound and resilient.

5.1 The CompositeDoc Audit Model: A New Framework for SEO Audits

Traditional SEO audits often segment tasks into broad, sometimes overlapping, categories like “Technical,” “On-Page,” and “Off-Page.” A more powerful approach is to structure audits around the core pillars of the CompositeDoc itself. This aligns the audit process directly with how Google aggregates and understands information about a page, ensuring that all critical data points are addressed in a logical, interconnected manner.

A proposed CompositeDoc Audit Model would consist of the following five pillars:

- Identity & Canonicalisation Audit: This foundational check focuses on ensuring that a single, unambiguous CompositeDoc exists for each piece of valuable content. It involves auditing all signals related to duplication and identity, such as forwardingdup (redirects), alternatename (parameter handling, alternate versions), and ContentChecksum96 (on-page duplicate content). The primary goal is to verify that a clear and consistent canonical signal is being sent to Google through rel="canonical" tags, 301 redirects, XML sitemaps, and internal linking.

- Content & Schema Audit: This pillar examines the core of the document’s value. It involves a qualitative analysis of the content that populates the doc object—assessing its uniqueness, helpfulness, and alignment with E-E-A-T principles to positively influence signals like OriginalContentScore. It also includes a technical audit of the richsnippet field, verifying the implementation, accuracy, and completeness of Schema.org markup to ensure the content is structured for maximum machine readability and SERP feature eligibility.

- Authority & Link Audit: This focuses on the signals that establish a document’s authority within the wider web. It involves an evaluation of the backlink profile that informs PageRankPerDocData and a deep analysis of the incoming anchor text that populates the anchors and anchorStats fields. The objective is to assess not only the quantity and quality of backlinks but also the health and diversity of the anchor text profile.

- Quality Signals Audit: This pillar involves a comprehensive review of the myriad factors that contribute to the perDocData and qualitysignals objects. This includes checking content freshness (lastSignificantUpdate), mobile-friendliness (smartphoneData), page speed, and potential spam signals (uacSpamScore). It also involves assessing the document’s classification via labelData to ensure it is correctly categorised, especially for YMYL topics.

- Technical Directives & Trust Audit: This final check verifies the “rulebook” given to Google. It involves auditing the robotsinfolist (from robots.txt) and indexinginfo (from meta tags) to ensure clear and correct crawling and indexing instructions are in place. It also includes auditing fundamental trust signals like the absence of a badSslCertificate and the site’s overall security posture.
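For the identity pillar, a practical first step is finding URLs that serve the same body content. The sketch below groups pages by a 96-bit fingerprint of their whitespace-normalised text. Truncating SHA-256 to 12 bytes is an assumption made only to mirror the field name ContentChecksum96; the leak does not say which hash or normalisation Google uses, and an exact hash like this will not catch near-duplicates.

```python
import hashlib
from collections import defaultdict


def checksum96(text: str) -> str:
    """96-bit fingerprint of lower-cased, whitespace-normalised text (assumed scheme)."""
    normalised = " ".join(text.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).digest()[:12].hex()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalised content is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, body in pages.items():
        groups[checksum96(body)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Red running shoes.  Free delivery.",
    "https://example.com/shoes?ref=nav": "Red running shoes. Free delivery.",
    "https://example.com/boots": "Leather walking boots.",
}
print(duplicate_groups(pages))
# [['https://example.com/shoes', 'https://example.com/shoes?ref=nav']]
```

Each group is a candidate for consolidation under one canonical URL, via a rel="canonical" tag or a 301 redirect.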

5.2 A Prioritised Approach to SEO: Maslow’s Hierarchy of Needs for Websites

The hierarchical and nested structure of the CompositeDoc implies an inherent order of operations in how Google processes a page. This logic can be used to create a prioritised framework for SEO efforts, akin to Maslow’s hierarchy of needs. A site must satisfy the fundamental needs at the bottom of the pyramid before it can effectively benefit from optimisations at the top.

- Level 1 (Foundation): Accessibility & Indexability. At the base of the pyramid is the absolute requirement that Google can access and is permitted to index the page. This corresponds to ensuring a valid HTTP status code, having no badSslCertificate, and allowing crawling in the robotsinfolist. If Google cannot create a CompositeDoc for a URL, nothing else matters.

- Level 2 (Identity): Canonicalisation. Once a page is accessible, Google must be able to identify it as the single, authoritative version. This level involves resolving all duplication issues that affect fields like forwardingdup and alternatename. Without a clear canonical identity, all subsequent efforts, such as link building, are diluted across multiple CompositeDoc instances.

- Level 3 (Content): Relevance & Quality. With a unique identity established, the focus shifts to the quality of the content within the doc object. This involves creating unique, helpful, and people-first content that will generate positive qualitysignals and a high OriginalContentScore. This is the core value proposition of the page.

- Level 4 (Authority): Link Equity. Once a page has high-quality content, it needs to build authority. This level focuses on acquiring high-quality backlinks to populate the anchors field and positively influence PageRankPerDocData. Authority signals validate the quality of the content to Google.

- Level 5 (Enhancement): Richness & Experience. At the top of the pyramid are the optimisations that enhance an already strong, authoritative page. This includes implementing structured data to populate the richsnippet field, using optimised multimedia to enrich the docImages and docVideos attributes, and refining the user experience. These enhancements make the content more useful to users and more valuable in the SERPs.
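Because each level depends on the one below it, an audit script can check the levels in order and report the first one that fails. In this sketch the `PageFacts` fields and the pass/fail rule for each level are hypothetical simplifications. The robots.txt check uses Python's `urllib.robotparser`, parsing rules supplied as text so no network access is needed; it follows general robots.txt conventions and may differ from Googlebot's own parser in edge cases.

```python
from dataclasses import dataclass
from typing import Optional
from urllib import robotparser


@dataclass
class PageFacts:
    # Hypothetical inputs an audit tool might have gathered for one URL.
    url: str
    status_code: int
    bad_ssl_certificate: bool
    robots_txt: str
    is_canonical: bool
    original_content: bool
    referring_domains: int
    has_structured_data: bool


def crawl_allowed(facts: PageFacts, user_agent: str = "Googlebot") -> bool:
    parser = robotparser.RobotFileParser()
    parser.parse(facts.robots_txt.splitlines())
    return parser.can_fetch(user_agent, facts.url)


def first_unmet_level(facts: PageFacts) -> Optional[str]:
    """Check the pyramid bottom-up and report the first level that fails."""
    levels = [
        ("Level 1: Accessibility & Indexability",
         facts.status_code == 200 and not facts.bad_ssl_certificate and crawl_allowed(facts)),
        ("Level 2: Canonicalisation", facts.is_canonical),
        ("Level 3: Relevance & Quality", facts.original_content),
        ("Level 4: Link Equity", facts.referring_domains > 0),
        ("Level 5: Richness & Experience", facts.has_structured_data),
    ]
    for name, passed in levels:
        if not passed:
            return name
    return None  # every level satisfied


page = PageFacts(
    url="https://example.com/private/report",
    status_code=200,
    bad_ssl_certificate=False,
    robots_txt="User-agent: *\nDisallow: /private/",
    is_canonical=True,
    original_content=True,
    referring_domains=12,
    has_structured_data=False,
)
print(first_unmet_level(page))  # Level 1: Accessibility & Indexability
```

Although the page has links and original content, the audit stops at Level 1: a URL that robots.txt blocks never gets the chance to benefit from anything higher up the pyramid.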

5.3 Future-Proofing SEO Strategy: Aligning with Google’s Core Architecture

A deep understanding of the CompositeDoc provides the ultimate framework for a future-proof SEO strategy. It encourages a shift away from a reactive mindset, which chases the latest algorithm update or ranking factor rumour, towards a proactive, foundational approach that is aligned with the core principles of Google’s information architecture.

The CompositeDoc is not a transient phenomenon; it is the culmination of over two decades of Google’s effort to organise the world’s information. Its structure reveals a set of enduring principles:

- The absolute necessity for a single source of truth through rigorous canonicalisation.

- The assessment of quality as a multi-faceted composite of hundreds of signals, not a single score.

- The foundational importance of trust, established through technical security and site history.

- The evolution towards a multi-modal understanding of content, where text, images, and videos work together to create a unified signal of relevance.

- The dynamic and experimental nature of search, evidenced by fields like liveexperimentinfo, which indicates that documents can be part of live experiments used to continually refine the algorithm.

- The constant evolution of the data model itself, confirmed by the dataVersion attribute, which tracks the version of the data fields, showing that Google’s understanding of the web is always being updated.

By optimising not just for keywords, but for the creation of a clean, authoritative, comprehensive, and unambiguous CompositeDoc for every important URL, SEO professionals can build digital assets that are inherently resilient. This strategy is less susceptible to the volatility of minor algorithm updates because it is aligned with the long-term, architectural trajectory of how Google understands and indexes the web. In essence, the goal of SEO becomes to assist Google in building the best possible CompositeDoc for your content, making it as easy as possible for its systems to recognise its value and relevance. This is the most sustainable path to long-term success in organic search.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 2.5 Deep Research. I have over 20 years’ experience writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure our customers have clarity on everything we are involved with and what we stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited and verified as correct by me (and is under constant development). See my AI policy.

Disclaimer: Any article (like this) dealing with the Google Content Data Warehouse leak is going to use a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything from the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm that white hat SEO has purpose in 2025.

References

- https://developers.google.com/search/docs/fundamentals/seo-starter-guide

- https://mojodojo.io/blog/googleapi-content-warehouse-leak-an-ongoing-analysis/

- https://ovative.com/impact/expert-insights/what-you-need-to-know-about-the-google-algorithm-leak/

- https://www.newbrewmedia.com/blog/googleapi-contentwarehouse-v1-explained-a-guide-for-seos-and-marketersere

- https://www.elegantthemes.com/blog/wordpress/how-search-engine-indexing-works

- https://code.store/blog/inside-googles-content-warehouse-leak-implications-for-seo-publishers-and-the-future-of-search

- https://developers.google.com/search/docs/crawling-indexing

- https://www.webfx.com/blog/seo/what-is-google-indexing/

- https://developers.google.com/search/docs/fundamentals/how-search-works

- https://www.cloudflare.com/learning/network-layer/what-is-a-protocol/

- https://www.britannica.com/technology/protocol-computer-science