This article (although a first draft) clarifies my current thinking on SEO and how I approach it today. My views are shaped by long-term, hands-on experience across multiple eras of search, including practices that no longer align with modern Google systems. The purpose here is not to promote tactics or shortcuts, but to explain how SEO decisions must be evaluated in context, using judgment rather than rigid rules. Where systemization is mentioned, it is intentional and limited, serving only to support understanding – not to reduce SEO to a formula or checklist.

One of the big debates I am having online on X at the moment is about the abstraction that is E-E-A-T and YMYL and if, how and when it is a useful guide to help you self-assess the quality of your website, especially after an unexpected traffic drop.

I think the primary value highlighted in this conversation is the fact that “Contextual SEO” is a “thing,” and David Quaid and I have helped define this further for 2026. No doubt this article will be getting refined in the coming months, too.

It’s also why Google’s John Mueller is famous for saying “it depends” when answering SEOs’ wide-ranging questions.

I certainly have never put those words together, I don’t think, to highlight this exact concept in this manner. So it might be useful for beginners to have this clarified.

Contextual SEO is a “thing”.

E-E-A-T is Contextual.

SEO checklists are Contextual.

SEO is Contextual.

Editor’s Note: My Stance

I’ve said it before: You are either a Black Hat SEO or a White Hat SEO. I am a White Hat SEO. I am a reformed black hat SEO, going by today’s Google guidelines. This article is to clarify my thinking. It only touches on systemization of the information herein.

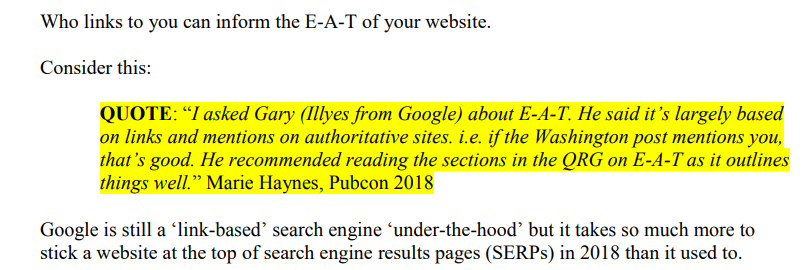

What I personally have been penalized with (and learned from) over the years is, for my purposes, the best instruction available on how Google works – instruction that is evident in the attributes found in the Google API leak and disclosed in the DOJ trial.

Or at least, a truer statement would be that the system attributes in the leak appear designed to reflect the scoring systems and proxies I see advised in official documentation and the Quality Rater Guidelines – which we know are a reflection of the types of sites Google aims to show its users.

I only work with websites in YMYL (Your Money or Your Life) topics. I have very little experience outside of YMYL topics in over 10 years. I do not work in non-YMYL topics, but I hold that my advice is still very useful to these sites, particularly in matters of Trust and User Satisfaction – the very signals that help drive PageRank_ns (links).

I don’t present black hat SEO strategies on the Hobo SEO blog or anywhere. Rarely do I work with sites with no measurable external PageRank. Take my thoughts on the subject presented here with this in mind.

I’ll add: if my life depended on ranking something next month, it would probably involve a mix of high-effort content, Parasite SEO, and PageRank.

Lastly, I don’t do the politics of Google. I have always relied on Aaron Wall and John Andrews for that commentary.

What is YMYL?

“‘Stand and deliver,’ they cried, Then, ‘Your money or your life,’ Coachmen cursed and ladies sighed, But paid all, afraid of strife.” THE TRUE STORY OF DICK TURPIN – KENNETH PHILLIPS – ‘KENTISH POST’, 22nd to 25th June, 1737: “We call these ‘Your Money or Your Life’ topics, or YMYL for short” – Google 2025.

The clue’s in the name.

EEAT is Google’s doctrine codified.

That being said…

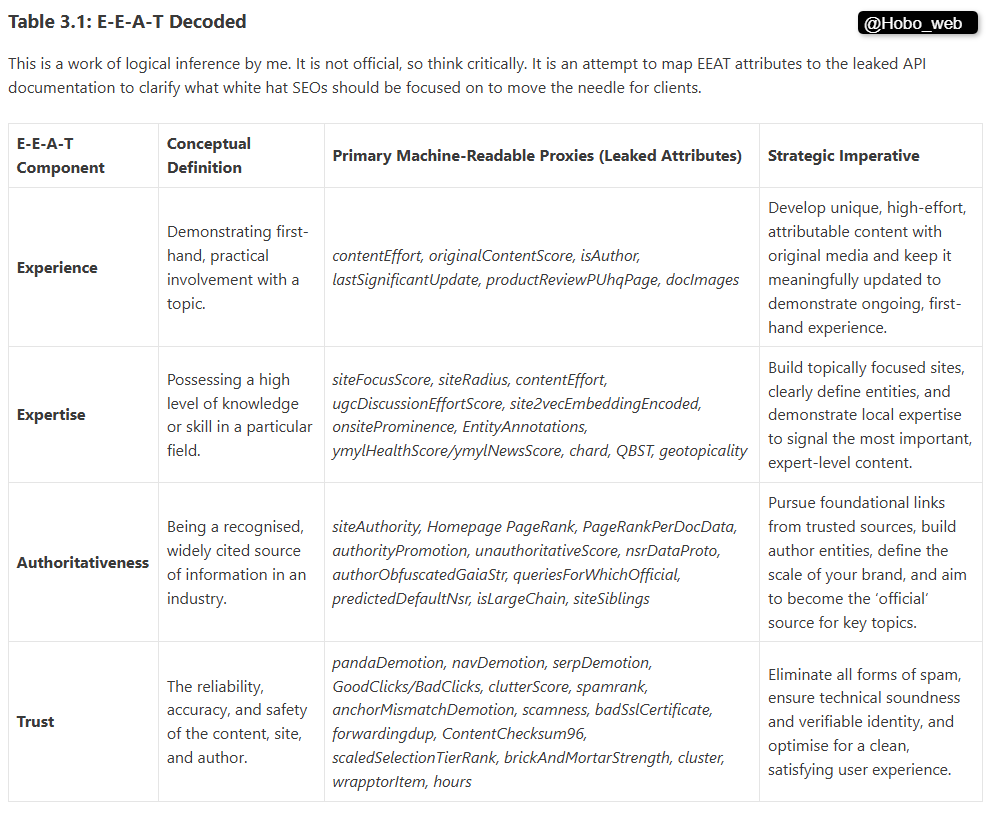

One of the big debates I’m having on X right now – primarily with SEO David Quaid of Primary Position – is about the abstraction of E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), and specifically how and when it is a useful guide to self-assess the quality of your website.

I think the primary value highlighted in this conversation is the fact that “Contextual SEO” is a real thing. David and I have helped define this, and it is exactly why Google’s John Mueller is famous for saying: “It depends.”

I don’t think I’ve put these concepts together in this specific way before, so it might be useful for beginners to have this clarified: E-E-A-T is contextual.

Our arguments can often come down to the order of the letters in E-E-A-T (EEAT vs EETA). I nearly called my strategy the TEEA strategy.

So in effect, we would actually agree where the “A” goes in this mental model, I’ve just realized.

1. The Pipeline: Relevance Finding You vs. Quality Judging You

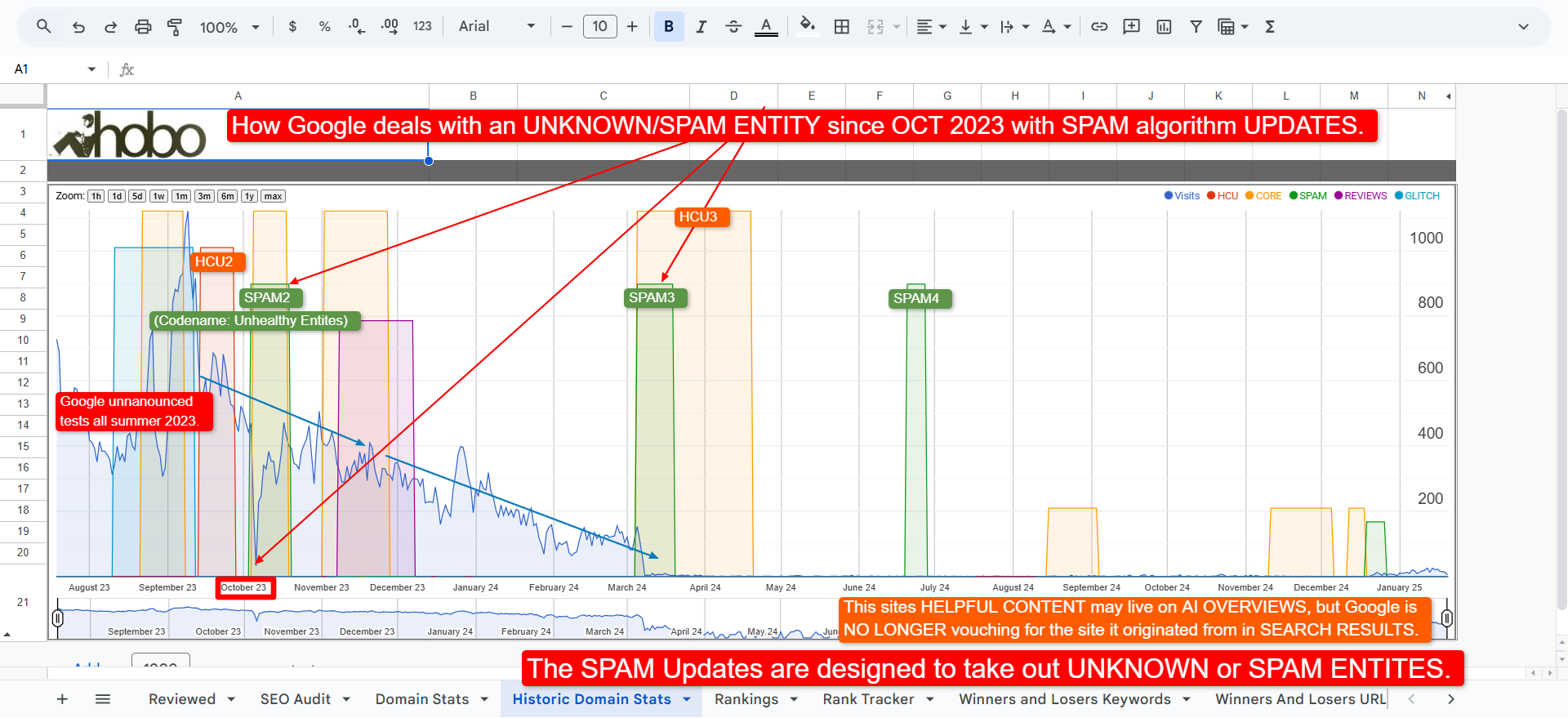

Low-quality E-E-A-T is a common proxy for Spam. Spam is always hunted down and usually dealt with by Google (although this window can evidently be months, if not years).

Why does this “window” exist? Because, as the DOJ Antitrust Trial revealed, Google is not a monolithic brain. It is a Ranking Pipeline comprising many competing philosophies:

The Relevance Systems (Fast)

These systems want to find “Good” – relevant – content quickly. They rely on:

-

Topicality ($T^*$): Also called the ABCs of relevence. How relevant your content is to the query (reflected in the API leak in high level attributes like

siteFocusScoreandsiteRadius). -

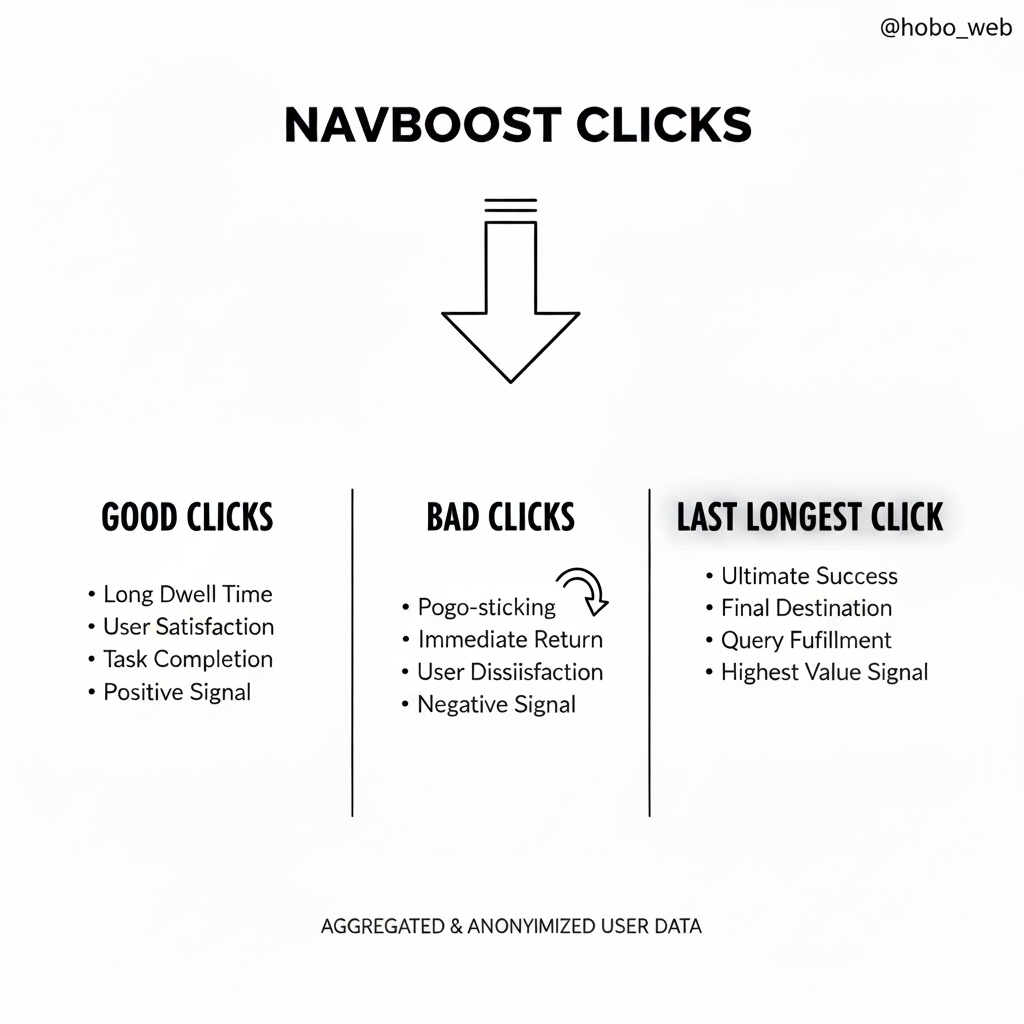

Popularity Score ($P^*$): The DOJ trial confirmed this is largely driven by Navboost – a system that measures user interaction (clicks, scrolls, and “last longest clicks”).

If you smash out trending AI content, the Relevance Systems might find you immediately because you have high Topicality and freshness.

There are a lot of reasons for this.

The Quality Systems (Slow)

These systems act as the filter. They rely on:

- Q* (Q-Star): A site-wide, query-independent “Trustworthiness” score that acts as a ceiling on how high you can rank long-term. PageRank is an attribute in this system.

- Spam & Trust Filters: Leaked attributes like spamrank (bad links) and scamness (bad intent), and many more.

The Trap

“Burner” SEO strategies – including black hat – work by betting against the clock. They exploit the gap between Relevance finding you and Quality catching you.

You are betting you can make money before Q* overrides P*. But make no mistake: Q* always wins – or at least it is designed to. The pipeline inevitably catches up, in my experience.
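One way to picture this bet is a toy model. It is purely a mental model under stated assumptions, not Google's actual formula: the function name, the 0 to 1 scores, and the fixed lag in days are all invented for illustration. Relevance alone decides the visible score until the slower quality evaluation lands, after which the quality ceiling caps it.

```python
def visible_score(day: int, relevance: float, quality_ceiling: float,
                  quality_lag_days: int) -> float:
    """Toy model: relevance decides the score until the (slower) quality
    evaluation lands; after that, the quality ceiling caps the score."""
    if day < quality_lag_days:
        return relevance
    return min(relevance, quality_ceiling)


# Hypothetical "burner" site: highly relevant, low trust, 90-day window.
timeline = [
    visible_score(day, relevance=0.9, quality_ceiling=0.2, quality_lag_days=90)
    for day in (0, 30, 89, 90, 365)
]
print(timeline)  # [0.9, 0.9, 0.9, 0.2, 0.2]
```

The only design point worth noting is the `min`: in this sketch a strong relevance score can never lift a page above its quality ceiling once that ceiling is applied.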

One way your work is definitely getting torched, and the other way you have a hope in hell, in one context at least. This is what I mean about SEO being a “choose your own adventure”.

It’s black hat or white hat.

If you are a business relying on one website, you must not use black hat strategies (those defined in Google’s guidelines), or even those not explicitly defined, if you read between the lines. Read my SEO strategy guide for more.

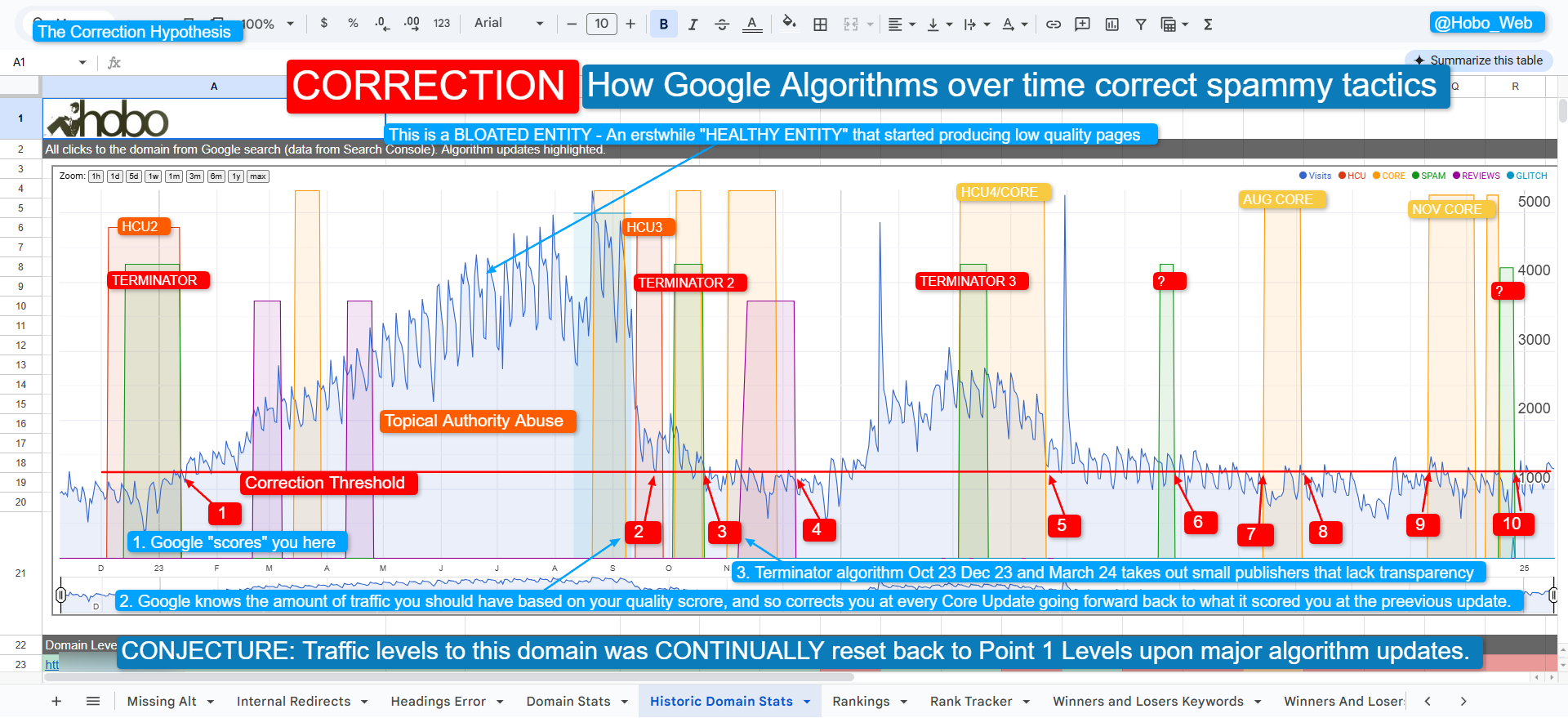

2. The Stability Context: Read the Room (and the Graphs)

Before you decide which strategy applies to you, you must determine your current algorithmic context.

Context is not just about what you sell; it is about how you are performing right now. Open Google Search Console and look at the trend line.

- Is your site stable in traffic?

- Is it trending down in an unforeseen manner?

A sudden drop (outwith AI Overviews killing clicks to your site) suggests that the Quality Systems have finally overridden the Relevance Systems.

We hear such ranking-drop stories after every core update (remember the helpful content update, and the telling changes to the Quality Rater Guidelines since then?).

Helpfulness appears to be the opposite of harmfulness to Google. And it’s all based contextually on what users expect to find on your site if you are operating in a high-stakes YMYL topic.

Note: Be careful to distinguish between “Zero Click” trends and actual Demotions.

- If your rankings held but traffic dropped, that is likely AI Overviews eating the market share.

- If your rankings vanished or are trending down, that could be a Quality Demotion. Heck, you might have lost PageRank.
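The distinction in the list above can be sketched as a small triage helper. This is a minimal sketch, assuming you have exported clicks and average position for two comparable periods from Google Search Console. The `PeriodStats` type, the function name, and both thresholds are hypothetical choices for illustration, not Google values.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    clicks: int
    avg_position: float  # lower is better, as reported in Search Console


def classify_drop(before: PeriodStats, after: PeriodStats,
                  click_drop_threshold: float = 0.2,
                  position_loss_threshold: float = 3.0) -> str:
    """Label a traffic change. Both thresholds are illustrative assumptions."""
    if before.clicks == 0:
        return "no baseline"
    click_drop = (before.clicks - after.clicks) / before.clicks
    position_loss = after.avg_position - before.avg_position
    # Check rankings first: a real ranking loss explains a click loss.
    if position_loss >= position_loss_threshold:
        return "possible quality demotion: audit before publishing more"
    if click_drop >= click_drop_threshold:
        return "rankings held, clicks fell: likely zero-click / AI Overviews"
    return "stable"


print(classify_drop(PeriodStats(1000, 4.1), PeriodStats(600, 4.3)))
print(classify_drop(PeriodStats(1000, 4.1), PeriodStats(300, 18.0)))
```

The first call reports the zero-click case and the second the possible demotion. A label like this is only a prompt for a manual audit; it cannot tell you why rankings moved.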

A true ranking drop suggests the algorithm has recalculated your site-wide signals – perhaps your spamrank spiked, or your originalContentScore dropped too low – and it has applied a dampener.

In this context, publishing more content is often the wrong move. So is “just get links”. You cannot “post your way out” of a quality filter. You very probably will not link your way out of a problem on the domain either.

You must first identify why the stability broke, or this becomes a Sisyphean challenge for you. You can use the Quality Rater Guidelines to guide such an audit and self-assess your content.

In my experience, a slow trend down is indicative of a quality issue. I honestly believe Google tries to make these softer over time as opposed to things like Penguin and Panda, but in doing so, sometimes the cause gets missed for months or years.

Google can’t really win in some areas either (outside of making cash, evidently).

3. Match Your Strategy to Your Topic

Once you know your stability status, the requirement for high-quality E-E-A-T becomes contextual to your specific topic and therefore niche.

You (as an SEO) must first determine if your content is defined as YMYL. Because often a client has little clue about all this.

Scenario A: The “Cat Gif” Context (Non-YMYL)

Are you making free content about cat gifs? Nobody cares about your credentials. Neither does Google.

According to Section 3.4.1 of the Guidelines regarding “Everyday Expertise”:

“For many topics, everyday expertise is enough. … For example, if the purpose of the page is to share a personal experience… the creator of the content does not need a formal education.”

In this context, high E-E-A-T is not a requirement, and you aren’t likely to trigger high-risk algorithmic flags.

Scenario B: The “Service” Context (High-Stakes YMYL)

Are you giving medical advice on cat health? Are you selling $10k consulting services?

Now you have entered what Google calls YMYL (Your Money or Your Life). The rules of the game change immediately. You are now being scored against a much stricter set of leaked attributes, including ymylHealthScore, a classifier specifically judging the safety of medical advice.

Per Section 3.4.1 regarding YMYL:

“If a page on YMYL topics is highly inexpert, it should be considered Untrustworthy and rated Lowest.”

Here, “Expertise” (the E in E-E-A-T) is paramount. You need qualified authors, citations, and professional accreditation.

Scenario C: The “E-Commerce” Context (Transactional YMYL)

Are you selling cat toys online?

This is distinct from selling services. Google doesn’t necessarily care if you have a PhD in “Cat Toys.” They care if you are going to rip the customer off. The context shifts from Expertise to Trustworthiness.

If you are taking credit card details, you are automatically YMYL. But the signals Google looks for here are different.

- isMerchant: A specific attribute in the leak that identifies e-commerce entities.

- scamness: This score spikes if you lack customer service info or return policies.

The Quality Rater Guidelines are brutal on this. Section 2.5.2 states:

“Stores that have no contact information… or have an unsatisfactory customer service reputation should be rated Lowest.”

In this context, an “Author Bio” is less important than a Refund Policy, a Physical Address, and HTTPS security. If you have great products but your checkout looks sketchy, your scamness score rises, and your rankings tank.

4. The “Hypocrisy” Penalty: Alignment of Production & Policy

This is where many “programmatic” SEOs fail. It’s not just about having a Terms of Use page; it’s about whether your production values align with that policy.

Google’s Quality Raters are explicitly trained to look for this misalignment. If your “About Us” or “Terms of Use” page claims: “We are a team of dedicated experts reviewing every product,” but your content production values show: “We are publishing 500 AI-generated posts a day with zero editing,” you have a problem.

The Quality Rater Guidelines (Section 7.0 regarding Deceptive Page Purpose) warn that:

“Pages with a mismatch between the stated purpose (or Terms of Use) and the actual content should be rated Lowest Quality.”

In the API leak, this likely triggers the scamness or unauthoritativeScore attributes.

- High Alignment: A site claiming to be an expert resource that publishes one deeply researched article a week. (Safe).

- Low Alignment: A site claiming to be an expert resource that publishes 100 shallow articles a day. (Flagged for “Deceptive Design”).

You cannot fake E-E-A-T with a policy page if your production values scream “spam farm.”

5. The Transparency Mandate: Affiliate Links

Finally, we must talk about the most common “Trust” killer: Hidden Affiliation.

In the Quality Rater Guidelines, transparency is non-negotiable. If you are monetizing with affiliate links, you are legally required (in the US via the FTC, for example) to disclose this to readers, and Google additionally asks that such links be qualified with the rel="sponsored" (or rel="nofollow") attribute.

The FTC guidelines state that disclosures must be “Clear and Conspicuous.” That means:

- Proximity: The disclosure must be near the claim/link.

- Unavoidable: It cannot be hidden in a footer or a “Terms” page nobody reads.

Google reflects these legal standards in its own guidelines. Section 2.5.3 (“Main Content vs. Ads”) explicitly warns raters to identify if ads are disguised as content. If an affiliate link is hidden to look like an unbiased recommendation, it is considered “Deceptive Page Purpose.”

In the API leak, this behavior feeds directly into:

- scamness: A high score for deceptive monetization practices.

- spamrank: If you link to low-quality merchants without proper disclosure.

If you hide your business model, you are telling Google’s “Trust” algorithms that you have something to hide.
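The link-markup half of this can be checked mechanically. Below is a minimal sketch using only Python's standard library, assuming affiliate links can be recognised by hostname. The hostnames in `AFFILIATE_HOSTS` are made-up placeholders, and the class and function names are hypothetical. It flags affiliate links that carry neither rel="sponsored" nor rel="nofollow", the two values Google's link documentation accepts for paid or affiliate links.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical affiliate hosts; replace with the networks you actually use.
AFFILIATE_HOSTS = {"affiliate.example.com", "go.example-network.com"}


class AffiliateLinkAudit(HTMLParser):
    """Collects affiliate links lacking rel="sponsored" or rel="nofollow"."""

    def __init__(self) -> None:
        super().__init__()
        self.unqualified: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href") or ""
        if urlparse(href).hostname not in AFFILIATE_HOSTS:
            return  # not an affiliate link under our assumption
        rel_tokens = set((attr_map.get("rel") or "").lower().split())
        if not rel_tokens & {"sponsored", "nofollow"}:
            self.unqualified.append(href)


def find_unqualified_affiliate_links(html: str) -> list[str]:
    parser = AffiliateLinkAudit()
    parser.feed(html)
    return parser.unqualified


page = (
    '<p>Disclosure: this post contains affiliate links.</p>'
    '<a href="https://affiliate.example.com/p/1" rel="sponsored">Toy A</a>'
    '<a href="https://affiliate.example.com/p/2">Toy B</a>'
)
print(find_unqualified_affiliate_links(page))
# ['https://affiliate.example.com/p/2']
```

This only audits the markup. Whether the visible disclosure is “clear and conspicuous” still needs a human to look at the page.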

6. The Hidden “Clutter” Score

How you monetize matters, too. Are you monetizing via aggressive ads?

Now your site has a clutterScore.

This is a literal attribute found in the API leak that penalizes sites for having “distracting/annoying resources.” This aligns perfectly with Section 6.0 of the Quality Guidelines:

“We consider the Main Content to be Low quality if it is difficult to use or read. … Examples include: Ads that distract from or interrupt the use of the Main Content.”

The Verdict

That isn’t a guess. I still have a client I recovered during the 2018 Medic Update – the very algorithm change that codified these E-E-A-T requirements – who is sitting at #1 for their main term in the UK to this day. I think he needs more digital PR and to add more content to the site that helps the site “explain itself”.

This is 25 years of experience talking – cross-referencing real-world wins and failures with the Quality Rater Guidelines, the DOJ case files, and the internal variables like scamness, contentEffort, and clutterScore found in the Content Warehouse API leak.

So, do you need E-E-A-T?

- Is the user just looking for fun? (Cat gifs) → No. Everyday expertise is sufficient. (E)

- Is the user looking for expert advice and are you giving it? (Medical/Financial) → Yes. You need high expertise signals – E-E-A-T (Q).

- Is the user taking a financial risk? (Buying products) → Yes. You need high trust signals and low scam markers (scamness). E-E-A-T (Q).

The answer depends entirely on your context. And PageRank still matters a lot.
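The three questions above reduce to a very small decision function. This is a deliberately crude sketch: the two boolean inputs and the function name are assumptions made for illustration, and real sites will need judgment rather than flags.

```python
def eeat_emphasis(gives_high_stakes_advice: bool, takes_payment: bool) -> list[str]:
    """Return which signals to prioritise, following Scenarios A, B and C."""
    emphasis = []
    if gives_high_stakes_advice:  # Scenario B: medical/financial advice
        emphasis.append("expertise")
    if takes_payment:             # Scenario C: transactional YMYL
        emphasis.append("trust")
    # Scenario A: neither applies, so everyday expertise is sufficient.
    return emphasis or ["everyday expertise"]


print(eeat_emphasis(False, False))  # ['everyday expertise']
print(eeat_emphasis(True, False))   # ['expertise']
print(eeat_emphasis(True, True))    # ['expertise', 'trust']
```

Returning a list rather than a single label reflects that a site can sit in more than one scenario at once, for example a clinic that also sells products.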

Remember: E-E-A-T doesn’t make you rank, but it can stop you from tanking.

Black hats are unlikely to care, but contextually speaking, if your job is to mitigate risk for your company website (the type of clients I write content for), you would do well to review the abstract concept that is E-E-A-T.

Strategic Takeaways: The “Contextual SEO” Checklist

| Checklist Item | The Logic & Underlying Concept | Required Action |
| --- | --- | --- |
| 1. The Stability Check | Verify your context. You need to know if a traffic drop is due to AI Overviews eating market share or if your rankings actually dropped (Quality Demotion). | Stop publishing immediately if rankings have dropped significantly. You are in “Recovery Mode” and need to audit for quality rather than churning out more content. |
| 2. The “Stay in Your Lane” Check | Protect your Topical Authority (siteFocusScore). | Audit content categories. If a topic does not support your primary entity, delete it or move it to a different domain. Do not dilute your focus for cheap traffic. |
| 3. The Commercial Risk Check | Manage scamness. | Over-index on transparency. If selling products, add physical addresses, clear return policies, and customer service options to “pay down your scamness debt”. |
| 4. The Affiliate Audit | Avoid “Deceptive Page Purpose.” Hidden affiliate links tell Google’s algorithms that you have something to hide. | Add clear disclosures. Place a disclosure at the top of every post (e.g., “This post contains affiliate links…”). It must be unavoidable and not buried in the footer. |
| 5. The “Hypocrisy” Audit | Align Policy with Production. Google checks if your production values match your claims. Claiming “Expert Reviewed” on mass-produced AI content triggers deception flags. | Align immediately. Ensure your “About Us” or “Terms” page matches your actual output. Do not lie about your editorial process. |
| 6. The Clutter Sweep | Reduce clutterScore. | Check mobile experience. Load your site on mobile; if ads block the Main Content, remove them. You are trading pennies in ad revenue for dollars in ranking positions. |

If you want to apply this to your site today, stop guessing and follow this logic flow. These aren’t just best practices; they are defenses against specific attributes found in the Google leak.

1. The Stability Check (Read the Graphs)

- The Logic: Verify your context. Is it AI Overviews eating traffic, or did you drop in rankings?

- Action: If your rankings dropped significantly, you are in “Recovery Mode.” The Quality Systems have overridden the Relevance Systems. Stop publishing and audit for quality.

2. The “Stay in Your Lane” Check (siteFocusScore)

- The Logic: Google measures your topical authority via siteFocusScore (Topicality). If you are a “Dental” site that suddenly starts publishing “Crypto” advice because it’s trending, you destroy this score.

- Action: Audit your content categories. If a topic doesn’t support your primary entity, delete it or move it to a different domain. Do not dilute your focus for cheap traffic.

3. The Commercial Risk Check (scamness)

- The Logic: As soon as you move from “Informational” (free) to “Commercial” (paid), you trigger a scamness score. This score looks for the gap between your ask (credit card/data) and your trust signals.

- Action: If you are selling products (Scenario C), you must over-index on transparency. Add physical addresses, clear return policies (isMerchant signals), and customer service options. You are paying down your scamness debt.

4. The Affiliate Audit (Transparency)

- The Logic: Hidden affiliate links trigger “Deceptive Page Purpose” flags.

- Action: Add a clear disclosure at the top of every post (e.g., “This post contains affiliate links…”). Ensure it meets FTC standards of being “unavoidable.” Do not bury it in the footer.

5. The “Hypocrisy” Audit (Alignment)

- The Logic: Does your content production match your Terms of Service? A mismatch triggers “Deceptive Page Purpose” flags.

- Action: If your “About Us” says “Expert Reviewed” but you publish 50 unedited AI posts a day, you are lying. Align your production values with your policy immediately.

6. The Clutter Sweep (clutterScore)

- The Logic: Aggressive monetization increases your clutterScore, which directly penalizes your quality rating.

- Action: Load your site on mobile. If ads block the Main Content, remove them. You are trading pennies in ad revenue for dollars in ranking positions.

Contextual SEO Theory: FAQ

What is the core premise of contextual SEO?

E‑E‑A‑T, for example, is contextual. Rankings do not reward abstract or universal quality. They reward appropriate quality for a specific query, risk level, and entity type.

Quality is judged relative to context, not ideals.

Why does this framework exist?

It preserves the original thesis of contextual SEO while making it repeatable, defensible, and usable in audits, diagnoses, reconsideration requests, and strategic decisions.

What does “contextual SEO” actually mean?

It means pages are not judged in isolation, signals are not absolute, and trust is not evenly distributed.

A page succeeds or fails based on whether it meets the expected standard for its context, not whether it meets a universal SEO benchmark.

Does contextual SEO argue for lower standards?

No. It argues for proportional standards.

Expectations scale based on query type, risk, and entity role. Standards are contextual, not reduced.

What does “context defines quality” mean?

Context is not a modifier of quality.

It defines what quality is for a given query. The same page can be high quality in one context and insufficient in another.

What is the Context Stack?

The Context Stack is an ordered evaluation model used to diagnose ranking performance. Failure at a higher layer cannot be compensated for by improvements at lower layers.

What are the layers of the Context Stack?

From top to bottom:

- Query Class (Know / Do / Website / Visit‑in‑Person)

- Risk Profile (YMYL / Soft‑YMYL / Non‑YMYL)

- Expectation Baseline (what a reasonable user expects)

- Entity Role (Brand / Publisher / Individual / Tool / Forum / Retailer)

- Proportional E‑E‑A‑T Threshold (Minimum → Competitive → Dominant)
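Because the stack is ordered, diagnosis amounts to finding the highest layer that fails. A minimal sketch, assuming each layer has already been judged pass or fail by a human auditor; the function name and the rule that unassessed layers count as failing are my own assumptions for illustration.

```python
from typing import Optional

# Layers in the order listed above, top to bottom.
CONTEXT_STACK = [
    "Query Class",
    "Risk Profile",
    "Expectation Baseline",
    "Entity Role",
    "Proportional E-E-A-T Threshold",
]


def first_failing_layer(results: dict[str, bool]) -> Optional[str]:
    """Return the highest layer that fails, or None if every layer passes.
    Layers missing from `results` are treated as unassessed and failing."""
    for layer in CONTEXT_STACK:
        if not results.get(layer, False):
            return layer
    return None


audit = {layer: True for layer in CONTEXT_STACK}
audit["Risk Profile"] = False
audit["Proportional E-E-A-T Threshold"] = False
print(first_failing_layer(audit))  # Risk Profile
```

The function stops at the first failure on purpose: under this model, work on the E-E-A-T threshold is wasted until the Risk Profile mismatch is resolved.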

Why do SEO problems often persist despite improvements?

Because pages are frequently evaluated at the wrong layer of the Context Stack. Improving content or links does not fix a query, risk, or entity mismatch.

Is E‑E‑A‑T a checklist?

No. E‑E‑A‑T is a threshold system, not a checklist or personality trait. Pages must meet the expected trust threshold for their context – not exceed an abstract ideal.

Were the Quality Rater Guidelines designed to be an SEO checklist? No. Can you turn the information in the official Quality Rater Guidelines into a checklist?

Yes.

I did.

Do you need a checklist? Again, it’s contextual.

Are you managing a project? If not, and you know what you are doing, you don’t need a checklist. If you don’t know what you are doing, then yes, a checklist is good.

Everyone managing an SEO project or team over a long time has some sort of “checklist” somewhere, because keeping track of things is hard when you are reporting to your boss. No boss? Then you can “do” what you want, I suppose. My content is created for folk who need an SEO checklist.

How do E‑E‑A‑T thresholds work?

Each signal operates across three levels:

- Minimum (indexable)

- Competitive

- Dominant

Most pages only need to meet the expected threshold for the query they target.

What do E‑E‑A‑T thresholds look like in practice?

- Experience: Plausible first‑hand → repeated demonstration → unique or hard‑to‑fake

- Expertise: Topic familiarity → recognised competence → peer‑level authority

- Authority: Entity exists → cited externally → referenced by peers

- Trust: No red flags → clear protections → institutional‑grade
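The threshold idea can be expressed as a comparison between where a page sits and where the query needs it to sit. A minimal sketch: the three named levels come from the text, while `BELOW_MINIMUM`, the function name, and the example ratings are assumptions added for illustration. Assigning a level to a real page remains a judgment call.

```python
from enum import IntEnum


class Level(IntEnum):
    BELOW_MINIMUM = 0  # assumption: not named in the text, added for gaps
    MINIMUM = 1
    COMPETITIVE = 2
    DOMINANT = 3


def threshold_gaps(current: dict[str, Level],
                   required: dict[str, Level]) -> dict[str, int]:
    """Per signal, how many levels the page sits below the required
    threshold. Signals that meet or exceed it are omitted."""
    gaps = {}
    for signal, needed in required.items():
        have = current.get(signal, Level.BELOW_MINIMUM)
        if have < needed:
            gaps[signal] = needed - have
    return gaps


required = {"experience": Level.COMPETITIVE, "authority": Level.MINIMUM}
current = {"experience": Level.MINIMUM, "authority": Level.COMPETITIVE,
           "trust": Level.DOMINANT}
print(threshold_gaps(current, required))  # {'experience': 1}
```

Note that the surplus authority and trust in this example do not offset the experience gap, which matches the point that pages need to meet the expected threshold rather than exceed an abstract ideal.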

Why does “good content” still fail to rank?

Because the failure is often contextual, not qualitative. Common causes include query mismatch, entity mismatch, incorrect format, or disproportionate commercial intent.

How can contextual mismatch be tested operationally?

Ask:

Would a quality rater reasonably expect this page type from this entity for this query?

What indicates strong query → page fit?

- Page format matches intent

- Entity type aligns with SERP peers

- Content depth matches top results

- Commercial intent is proportional

Fail one signal and performance weakens. Fail several and suppression occurs regardless of content quality.
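The four fit signals and the “fail one, fail several” rule can be sketched as a tally. The signal keys and function name are hypothetical, and treating “several” as two or more is my assumption, not something the framework specifies.

```python
# Hypothetical keys for the four fit signals listed above.
FIT_SIGNALS = (
    "format_matches_intent",
    "entity_aligns_with_serp_peers",
    "depth_matches_top_results",
    "commercial_intent_proportional",
)


def fit_verdict(checks: dict[str, bool]) -> str:
    """Summarise query-to-page fit. Unchecked signals count as failed."""
    failed = [name for name in FIT_SIGNALS if not checks.get(name, False)]
    if not failed:
        return "strong fit"
    if len(failed) == 1:
        return f"weakened: {failed[0]}"
    # Assumption: "several" means two or more failed signals.
    return "suppression risk: " + ", ".join(failed)


checks = {name: True for name in FIT_SIGNALS}
checks["commercial_intent_proportional"] = False
print(fit_verdict(checks))  # weakened: commercial_intent_proportional
```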

Does Google rank what a site claims to be?

No. Google ranks what an entity can plausibly be interpreted as. Presentation without corroboration creates trust friction.

Can small or independent sites rank?

Yes. Small sites can rank for narrow expertise queries where first‑hand experience is sufficient, risk is low, and SERP competitors are similar entities.

Is authority site‑wide?

No. Authority is query‑scoped, not site‑wide. Over‑presenting authority is as harmful as lacking it.

What is contextual overreach?

Contextual overreach occurs when a site attempts to rank for queries requiring more trust, authority, or institutional backing than it can reasonably justify.

What is entity inflation?

Entity inflation is presenting as a brand, publisher, or institution without external corroboration. This creates distrust and ranking friction.

What is intent drift?

Intent drift occurs when a page temporarily ranks for queries it does not genuinely satisfy, leading to eventual suppression.

What is trust signal debt?

Trust signal debt accumulates from UX issues, aggressive monetisation, weak disclosures, or reputation gaps that silently erode trust over time.

Are these content problems?

No. These are systemic, contextual issues. Content improvements alone do not resolve them.

How is the framework applied operationally?

By following this audit flow:

- Classify the query

- Determine risk level

- Observe SERP entity makeup

- Set expected E‑E‑A‑T threshold

- Audit against the threshold (not ideals)

- Decide whether to improve, narrow scope, retarget, or exit

Why does this prevent wasted effort?

Because it avoids improving the wrong signals for the wrong context and prevents misdiagnosis of ranking losses.

Can you give a condensed example?

Yes.

- Query: Informational, non‑YMYL

- SERP: Blogs, forums, small publishers

- Entity: Independent site

- Threshold: Competitive experience + basic authority

Outcome: First‑hand experience matters. Institutional signals are unnecessary. Excessive trust theatrics add no value.

How does this framework relate to Google systems?

It aligns with:

- Helpful Content system (contextual satisfaction)

- Core ranking systems (relative relevance and trust)

- Quality Rater Guidelines (as validation, not mechanisms)

Does this framework rely on secret signals?

No. It relies on correct interpretation of observable patterns and expectations.

What is the practical takeaway?

Stop only asking:

How do I increase authority and trust?

Start asking:

What level of authority and trust does this query actually require?

That question, answered consistently, determines outcomes.

As David Quaid so effectively puts it, Context is King and Emperor.

Disclaimer: This is not official. Any article (like this) dealing with the Google Content Data Warehouse leak requires a lot of logical inference when putting together the framework for SEOs, as I have done with this article. I urge you to double-check my work and use critical thinking when applying anything from the leaks to your site. My aim with these articles is essentially to confirm that Google does, as it claims, try to identify trusted sites to rank in its index. The aim is to irrefutably confirm white hat SEO has purpose in 2026 – and that purpose is to build high-quality websites. Feedback and corrections welcome.

Disclosure: I use generative AI when specifically writing about my own experiences, ideas, stories, concepts, tools, tool documentation or research. My tool of choice for this process is Google Gemini Pro 3 Deep Research (and ChatGPT 5 for additional checks and image generation). I have over 20 years of experience writing about accessible website development and SEO (search engine optimisation). This assistance helps ensure my customers have clarity on everything I am involved with and what I stand for. It also ensures that when customers use Google Search to ask a question about Hobo Web software, for instance, the answer is always available to them, and it is as accurate and up-to-date as possible. All content was conceived, edited, and verified as correct by me (and is under constant development). See my AI policy.