Many people new to SEO today think old-school SEO was spam. Today’s narrative encourages them to think that way. I do miss that old style of SEO, which, of course, was a lot simpler to deploy. I miss the cavalier approach to SEO, and the quick results to be had from it.

There was fun to be had in the SERPs. I remember making my old manager rank in Google for ‘ballbag’, and making my friend Robbie Williams’ greatest fan in the SERPs.

I remember ranking No. 1 for ‘T in the Park tickets’ on the day that T in the Park tickets went on sale, just to get in the way of touts who annoyed me as a festival-goer (and I politely refused to work with them when they called me up to ask how I did it).

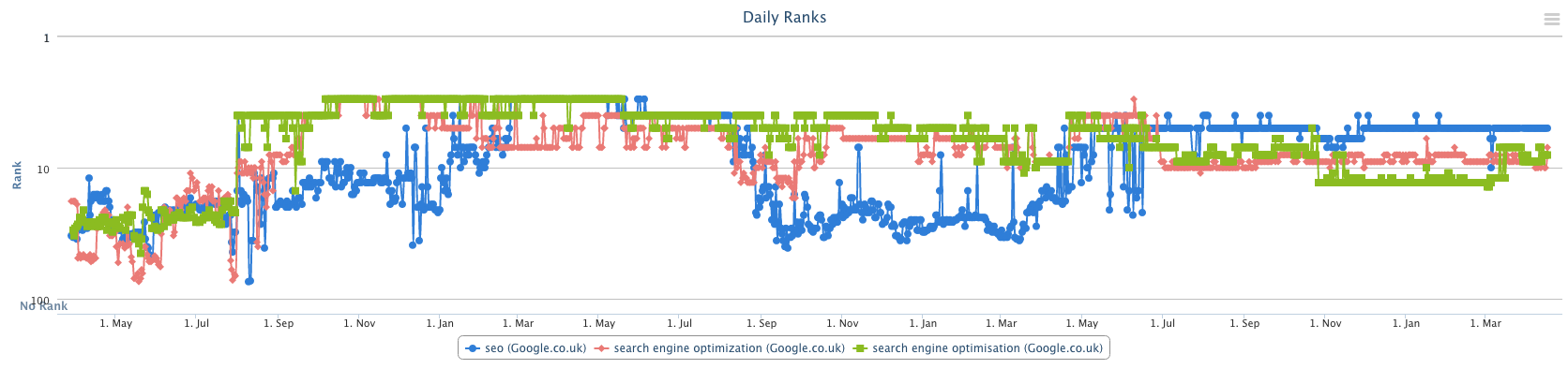

I’ve ranked on page 1, there or thereabouts, for ‘seo’ in the UK for the last few years.

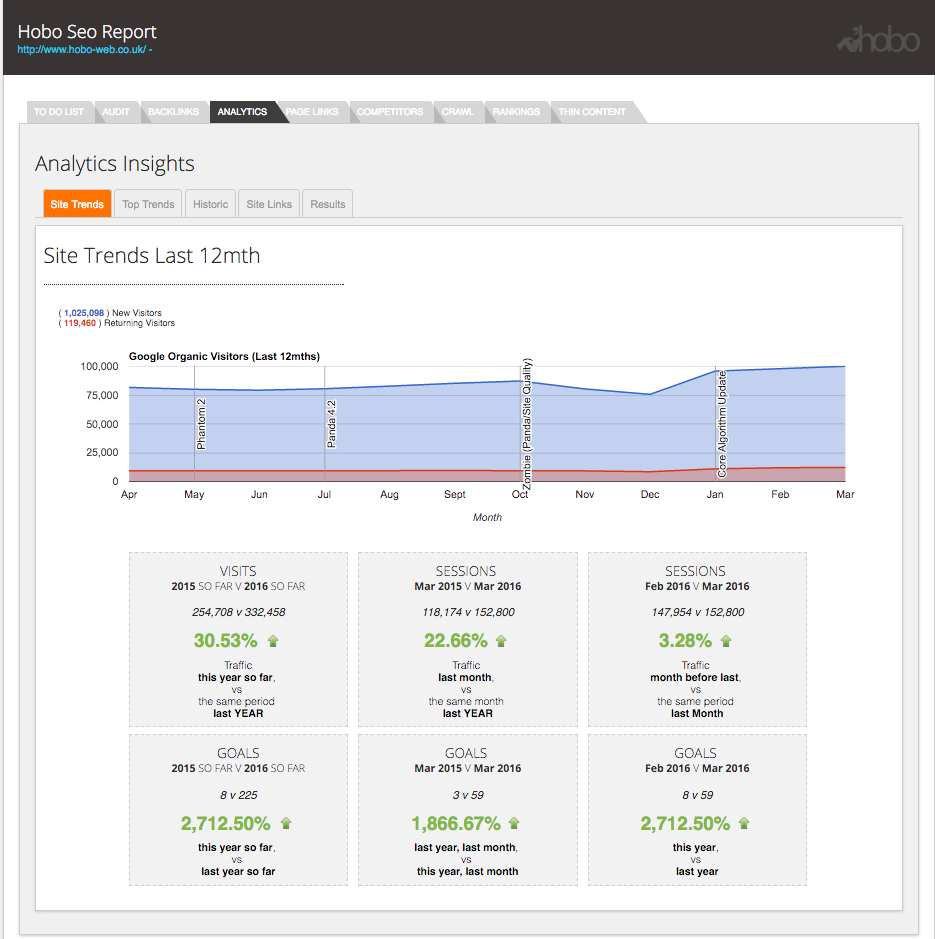

My content strategy has been discussed by Reddit users. This site gets 150K visitors a month, including 100K new organic visitors from Google (last month, anyway).

I’ve experienced what it takes to rank a site in this niche after a penalty and move on without using pre-2012 SEO tactics.

I obviously work in many other niches.

But – there is another side to ranking in Google.

Penalties Hurt

My own site was penalised in the past (pre-Penguin) and so I understand the impact that a penalty has.

Google wanted SEO companies to play by their rules (and the actual law, to be fair) and in 2012 it was clear they were intent on making it hurt.

Link Penalties

Penguin in 2012 was the beginning of the end for low-quality link building for real companies, and a real problem for SEOs everywhere to deal with.

I kind of avoided the worst of that nuclear bomb, and after 2012, SEO became for me a clean-up operation first and foremost, because Google had set a clear direction for where they were going with this.

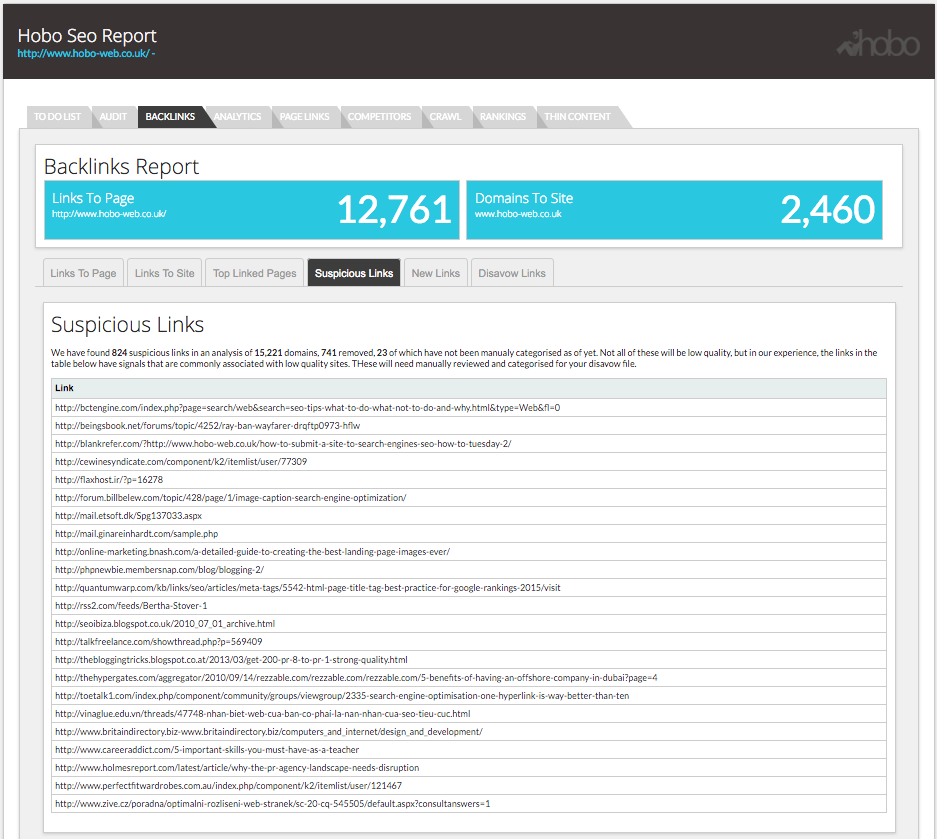

I spent 400 hours looking at the backlinks pointing to my site. Seriously.

There were no backlink risk analysis tools back in 2012, so people like me built our own.

I wouldn’t have trusted any, anyway.

I needed to know for myself the quality of the links pointing to my site.
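The post doesn’t say what those home-built backlink tools actually checked. As a purely illustrative sketch, assuming backlinks have been exported as hypothetical (source URL, anchor text) pairs and that you pick your own list of ‘money’ phrases, one first pass is to group links by referring domain and measure how much of each domain’s anchor text is commercial, which is widely treated as one sign of manufactured links:

```python
from collections import defaultdict
from urllib.parse import urlparse


def referring_domain_report(backlinks, money_terms):
    """Summarise backlinks per referring domain.

    backlinks: iterable of (source_url, anchor_text) pairs (hypothetical export format).
    money_terms: commercial phrases you choose to watch (an assumption, not a standard list).
    Returns {domain: (link_count, share_of_anchors_containing_a_money_term)}.
    """
    terms = [t.lower() for t in money_terms]
    by_domain = defaultdict(list)
    for source_url, anchor in backlinks:
        # Treat www.example.com and example.com as the same referring domain.
        host = (urlparse(source_url).hostname or "").removeprefix("www.")
        by_domain[host].append(anchor.lower())

    report = {}
    for domain, anchors in by_domain.items():
        money = sum(any(term in anchor for term in terms) for anchor in anchors)
        report[domain] = (len(anchors), money / len(anchors))
    return report


def flag_domains(report, min_share=0.5):
    """Return domains whose money-anchor share meets a hypothetical review threshold."""
    return sorted(d for d, (_, share) in report.items() if share >= min_share)
```

Flagged domains are candidates for a human to look at, not for automatic removal; the threshold is a judgment call for each link profile.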

From a strategic point of view, we as SEOs now needed to clean up backlinks, potentially hurting rankings in the short term, AND we could no longer throw unnatural links at a site without expecting problems for the site in future.

That was a problem, but fortunately, not long after, it was evident that Google actually gave a damn about the technical and contextual quality of the site, in many niches outside the obviously problematic content farms.

And in a big way.

The site quality raters document released a few years ago was further evidence of this, and it has since been confirmed that Google uses the results from manual rating exercises to train their algorithms to better identify low-quality sites.

Negative SEO

The problem with carrying on as normal with link building, to boot, was that as soon as this became public knowledge, it was obvious from the marketers I was talking to that negative SEO was going to take off in a big way.

Sure enough – it did.

Penguin heralded the day Google started turning SEOs into unpaid human quality raters working for them.

My own site has suffered plenty of negative SEO attacks over the last few years.

I was hacked too, about a year after my penalty, and I cleaned it up in an unconventional way. Google obviously hadn’t recognised what I tried to do, and sent me another manual action for unnatural links a long time after I cleaned it up, actually.

After the later iterations of Penguin, if you were buying low-quality links, you were leaving yourself exposed to manual actions from the webspam team, and to a negative SEO attack that simply amplified whatever tactic you were employing to promote your site, tipping you over the threshold for a manual review.

Fortunately, I wasn’t buying links, and I had a disavow file that could demonstrate my intent over the last year. I could also immediately demonstrate that this was indeed a hack and not my doing, and the penalty was lifted the next day (taking 2 weeks to disappear from Search Console).
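For reference, the disavow file that Google’s disavow links tool accepts is plain text: one URL or `domain:` entry per line, with lines starting `#` treated as comments. A minimal Python sketch that writes one from lists of flagged domains and URLs (the function name and inputs are hypothetical) might look like this:

```python
def build_disavow_file(domains, urls, note=""):
    """Return the text of a disavow file.

    One entry per line: 'domain:' prefix disavows a whole domain,
    a bare URL disavows a single page, and '#' starts a comment line.
    """
    lines = []
    if note:
        lines.append(f"# {note}")
    # Normalise and de-duplicate; sorting keeps month-to-month diffs readable.
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"
```

Writing the result to a UTF-8 `.txt` file gives you something to upload, and keeping dated notes in the comments is one way to show your intent over time.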

That is the fastest I ever got a penalty lifted.

A few hours.

Who knew? Google can be quite responsive.

Site Quality Metrics

Excellent news, one would think.

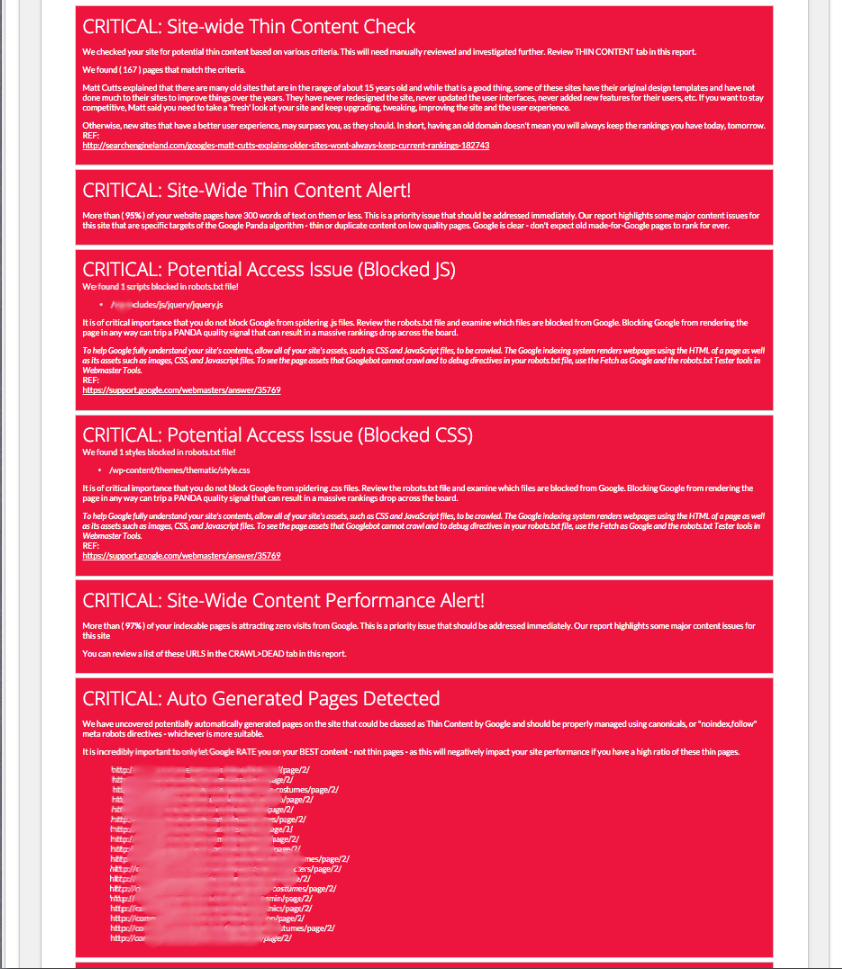

BUT, the immediate problem was that Google looks for reasons to demote you, and nearly every site had a convenient boatload of poorly organised crap content for Google to algorithmically identify and penalise.

Many sites were certain to go down with this metric.

Some have called it ‘Panda bamboo’, after the Google Panda algorithm.

Not only did links need to be cleaned up; content needed to be improved, where it wasn’t removed entirely, and speed, responsiveness and site architecture had to improve, too.

Content Penalties

The nastiest experience of recent years was being hired and placed at the helm of a large site just before that site was decimated by quality algorithm changes. I watched, helplessly, as the work done over years by previous SEO companies (who had done a relatively good job of driving organic traffic with 100% unnatural links), combined with the client’s shortcuts with duplicate content and the site being allowed to fall into disrepair, destroyed traffic to the site in a way I had not experienced before outside of a backlink penalty.

Picture the Hindenburg airship disaster and you have an idea of the impact of the sudden catastrophic failure you face when Google brings the hammer down.

Google, clearly, logically and legitimately (I am not qualified to talk about ethics), has no compunction about burning your entire site to the ground when you ignore multiple guidelines, and nobody like me, no SEO, can just make your site ‘high quality’ overnight unless there is something there to begin with.

As a student of SEO for over 15 years, I am used to the zero-sum game that is the SERPs and the nastiness that comes with it, and I try to distance myself from it and modify my own business practices. But watching up close as a legitimate business’s online presence is decimated by changing Google algorithms, when so many livelihoods ride on it, makes you think twice about which methods you can and should use to rank in Google’s SERPs for the long term.

It’s all rather easy for anybody to expose manipulative tactics to Google, and for low-quality techniques to leave you exposed to ever-improving quality checks.

Understand this about my background and you understand the way I do SEO, which is to say, I proceed with caution.

While I know of, and can always learn, more ways to rank a site, there is only one real way to rank a real business’s website, avoid being penalised in future, and actually give the client something to show for their investment next year.

Looking back, getting penalised was demonstrably the best thing that ever happened to my own site, as it was the first step in learning how to rank a site in the new Google.

While I may still have to think about future algorithm changes, I don’t spend too much time these days worrying about Google finding my link network.

Competing In High-Quality SERPS

Google said, effectively, to clean up old school manipulation, focus on the user and build a high-quality site that separates you from the lower-quality sites out there.

At the time there was no way to know if Google was telling the truth, but I thought it would probably be safer to take their advice than to continue to rely on ‘unnatural’ manipulation (as I can’t use that for clients).

There was only one way to test it. I had to improve traffic and rankings for a site by focusing on quality and satisfying searcher intent. Remove all crap. And fast.

I started on my own site, which, to be honest, I had really pushed boundaries with, and which had been penalised.

The content was out of date and crap.

I could rip it, and my link profile, apart without a committee, and I quickly saw that Google did seem to send traffic to improved pages (pages that had once been neutered by quality algorithms).

So I re-developed the site and its content.

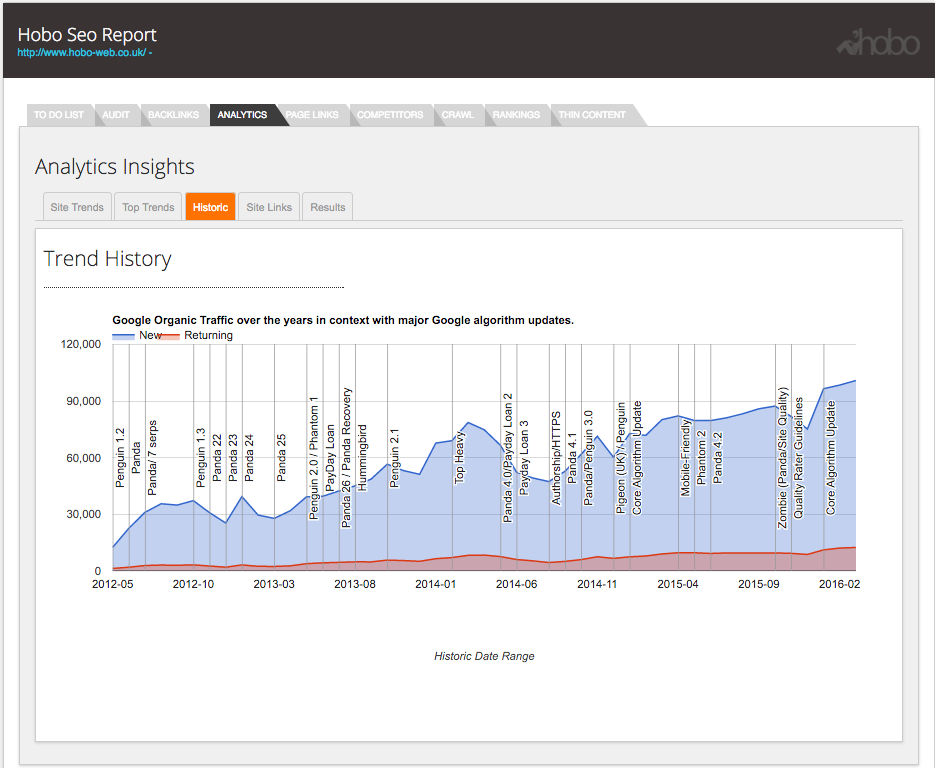

Today, a few years after Penguin, I get a lot more relevant traffic from Google than I did before the penalty back in very early 2012.

Fantastic! There was a way to rank and drive traffic in this new Google.

The problem was it was a LOT of work in comparison to old-school SEO.

This work consisted of a lot of clean-up, done in a very specific way across many areas, to take advantage of the opportunity Google was giving us with the materials we had to hand.

And so is laid out the dichotomy that is seo, at least for a former ‘web spammer’.

Once a short-term solution, sensible SEO (with due diligence) was now a longer-term prospect for real businesses with one primary website. Once a proactive measure from the get-go, it is now, in the UK at least, a reactive, protective measure first, and a tentative process going forward.

I could either sell potentially toxic services currently being stepped all over by Google, or I could focus on the far greater challenge of actually improving any website I am asked to manage SEO for.

I chose the latter.

SEO changed a lot over the last 3 years, too.

When I say changed, I mean that the same stuff got harder and took longer to see results from. You have to be more exact with many things these days, for instance. You need quality in many areas if you are competing with another site bent on getting to the top in this fashion.

Whichever tactics you employ, they need to be wrapped in a strategy that insulates you from obvious future demotion in the SERPs for failing to comply with Google policies.

But that isn’t the biggest change.

Users Need To Be Satisfied, Too

Pleasing Googlebot and complying with Google policies that are checked by algorithms 18 years in the making is a moot point if you are not satisfying the real people who will eventually see your site.

The longer you spend faking quality, the further you are from actually achieving it, and having this quality is actually starting to matter.

To be honest, I thought it would matter even more, now, than it does.

It’s unlikely, going forward, that you are going to get a lot of traffic from Google without at some point a human looking at your site and rating it, or penalising it. It is unlikely that Google is going to get more lenient.

If people are not happy with the page you present them with, it is going to impact your rankings at some point in the near future.

Google might actually already prefer ranking some sites at the top if the pages have shown consistent evidence of satisfying users, and that threshold can CHANGE OVERNIGHT based on the pages you end up competing in Google with.

If I sound alarmist about manipulating Google in any quick and simple way, it is because I am, when it comes to mitigating risk and investment for a paying customer.

Google has made it very risky and self-defeating to ignore their guidelines, and rewarding if you listen to them and manage to avoid their punishment algorithms.

If you are not aiming to be remarkable, in some way, it is not a cuddly Panda or fluffy Penguin that’s going to play with your site, despite the friendly toys and doublespeak from the Google PR team.

SEO used to be about brute force to push your way to the top.

Today, it’s safer, and as rewarding in time, to realise that when others get demoted, you are promoted, and to focus on improving your site and content to make sure it is better than the content that competes directly with yours, because this will matter.

And when you have a better site, you can share it knowing you are more likely to attract better links.

That is how I proceed.

Tools For The Job

I’ve always thought if I was using the same tools as everybody else there would be no competitive advantage.

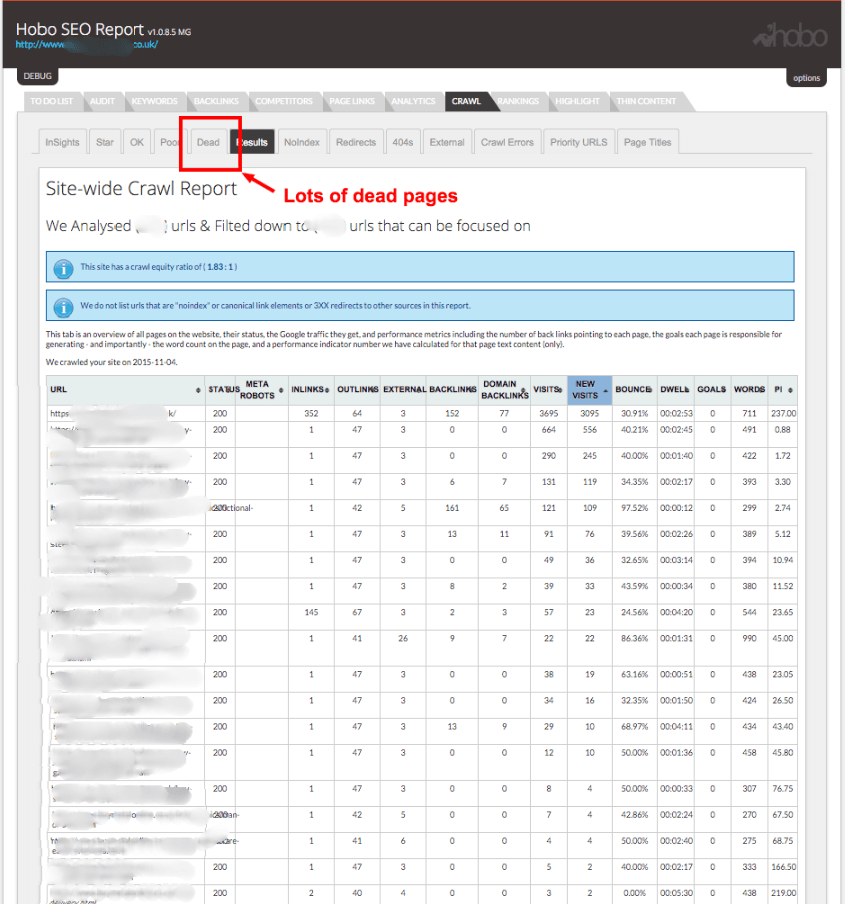

Also, the de facto tools I and many others use, like Google Analytics, SemRush, Screaming Frog and Majestic, do killer individual tasks, but all suffer from the same inability to prioritise site problems across multiple areas.

Online tools often focus on the easier stuff to pick out and rate, as they are designed to succeed at scale. Most I have tried have totally ignored the granular stuff that is important.

SO, I designed my own tools in-house that would connect with all the tools I use, combine all the data sets my in-house team is working on (about 150 data sources), and have a go at automatically prioritising the tasks I think are most important to focus on.

Automating the identification of site problems can be very handy, as the job I do (watching Google and testing things) has become increasingly difficult and a lot more time-consuming, given Google’s tendency to string things out if they are happy enough with the status quo at their end.

Prioritised tasks are highlighted in a way that the web developer knows what they are doing next, the content team knows what they are doing next, and the risk management team knows what they are doing next.
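The post doesn’t describe how the platform scores tasks. Purely as an illustration, assuming each detected issue carries a hypothetical severity, page count, effort estimate and owning team, a simple impact-over-effort score can turn a pile of findings into a per-team queue like the one described above:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    team: str       # owning team, e.g. "dev", "content" or "risk" (hypothetical labels)
    severity: int   # assumed scale: 1 (minor) to 5 (critical)
    pages: int      # number of URLs affected
    effort: int     # assumed scale: 1 (easy) to 5 (hard)

    @property
    def score(self) -> float:
        # Assumed heuristic: impact (severity x reach) divided by effort.
        return self.severity * self.pages / self.effort


def prioritise(issues):
    """Return {team: [issue names]}, highest score first within each team."""
    queues = defaultdict(list)
    for issue in sorted(issues, key=lambda i: i.score, reverse=True):
        queues[issue.team].append(issue.name)
    return dict(queues)
```

A real weighting would be tuned to the site; the point is only that each team gets its own ordered list rather than one undifferentiated audit.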

I have almost never agreed with any tool’s page-specific advice. I always thought these SEO tools encouraged you to create patterns that Google can identify, and, from my own testing (in the UK), many were giving downright damaging advice, considering the direction Google is going in.

When you adapt to working in the dark, with ‘keyword not provided’ and keyword query rewriting, you end up forced to consider what you can legitimately do to promote any site without harming a client in the long term.

To me, and since I first saw this in 2013, that basically means focusing on the user and upping those satisfaction signals, as we know Google is using satisfaction metrics as proxies for success.

It also means avoiding Google’s algorithms – and they often target old-school seo manipulation. Google’s either ignoring it, or worse, penalising you for it. Sometimes, it’s hard to tell the difference, at first.

Google also needs to be (who knows for sure) 90% sure you are spamming it to hit you with the harshest of penalties (although algorithms might decimate your traffic over a longer period – during which it is reasonable to assume, on their part, you are familiarising yourself with Google guidelines if you want more Google traffic).

With any seo tactic these days, I usually think, before I proceed:

- Does this make the site more accessible?

- Are the potentially better user experience signals worth the increased load time?

- Is what I am doing improving the page for the target audience or just for me?

- Is what I am doing making this page more remarkable?

- Can what I am doing be interpreted by Google as negatively manipulative?

- Is my code ignorant of Google Webmaster Guidelines?

- Is what I am doing creating a positive social feedback loop, or a negative social sentiment?

- Is what I am doing making this page better than other pages it will be competing with?

- Is what I am doing making it easier to understand the integrity and intent of this entity, where it competes with competing entities?

- Is what I am doing improving this page from a machine-readable point of view?

- If I add a new page to the site, is it going to improve my quality score or detract from it?

Google has some sort of quality rating for your site.

That quality metric changes the game, and it is worth remembering it is in its infancy. That metric is not going to ‘get worse’ over time; it’s going to get much better at finding fakers out (or at least, in future, at identifying strategies being used today).

How I Manage SEO

When you start going to clients and saying this is what’s on the horizon, as I did in late 2013 (I think, not long after Hummingbird), it is a very hard sell when they, understandably, only understand approaching SEO from a broad point of view that involves stuffing meta tags, keyword stuffing and unnatural links, all of which can get you penalised.

Not, as it happens, quite as hard a sell as it was to tell clients after 2012 that any kind of paid link building was no longer viable for their primary website.

If you are in this game for the long haul, you really need to avoid leaving a footprint and focus on improving site quality scores and user satisfaction scores that satisfy varied expectations from query to query.

Fortunately, a lot of that is still in the exact same place it’s always been. Sure, some things have changed; for instance, the most important element to optimise on an information page is the P tags, not the TITLE tag.

Without satisfying P tags, old-school title optimisation will be less effective than it once was, BUT, ARGUABLY, once you get past the quality filter, old-school optimisation techniques still provide REAL BENEFIT. You still want certain keywords, especially synonyms and related terms, on pages.

SEO was about repetition, now it needs to be about diffusion, uniqueness and variance.

Every time you repeat something unnecessarily, you risk triggering algorithms designed to frustrate you.

Google also has a habit of targeting any tactic that can have an exponentially positive impact throughout a site in a way that leaves a pattern indicating low-quality techniques have been implemented.

- You can’t seo a low-quality site (for long) without improving the quality of it – not without faking it, even more.

- You can optimise a quality site, or at least, one not impacted by ever-changing and improving quality algorithms.

SEO used to be largely about making unremarkable sites rank in Google (which, evidently, is now what AdWords is for).

You need a shiny, quality site to rank long term.

Some may scoff at that for it is clearly not the case in lots of SERPS – yet.

In a few SERPs I think there is either heavy manual control and fixing of some keyword phrases, or the algorithms have plenty of choice with which to create a relevant, diverse and reasonably stable set of results, i.e. Google has lots of quality pages to rank high after it has filtered out lesser-quality pages. They even have algorithms for specific SERPs.

In the end, it doesn’t matter.

Google’s algorithms are hardly going to get worse, one would think, as the years go on.

That’s how I have set my course over the last few years.

Automation Is A Necessity

Automation makes things faster and here is why you need it.

Some stuff is ideal for automation, and if you are not automating it, you are burning clients’ money totally unnecessarily.

Checking if a page has a canonical tag, for instance, is easy, so why would you pay someone to do that when a tool can do it to exactly the same level of quality but a thousand times faster? Especially when you have a thousand, or a million, pages to check.
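A minimal sketch of that kind of check, using only Python’s standard library (`html.parser` and `urllib.request`), might look like the following. The function names are hypothetical, and a production crawler would also need politeness delays, redirect handling and robots.txt checks:

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # The parser lowercases tag and attribute names; values keep their case.
        if tag != "link":
            return
        attrs = dict(attrs)
        rels = (attrs.get("rel") or "").lower().split()
        if "canonical" in rels and attrs.get("href"):
            self.canonicals.append(attrs["href"].strip())


def find_canonicals(html: str) -> list[str]:
    parser = CanonicalFinder()
    parser.feed(html)
    parser.close()
    return parser.canonicals


def check_page(html: str) -> str:
    """Return 'missing', 'conflicting' (different hrefs) or 'ok'."""
    found = find_canonicals(html)
    if not found:
        return "missing"
    if len(set(found)) > 1:
        return "conflicting"
    return "ok"


def fetch(url: str) -> str:
    # Plain fetch with a timeout; no retries or robots.txt handling here.
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Looping `check_page(fetch(url))` over a URL list covers a thousand pages as easily as ten, which is the argument above in miniature.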

No SEO spiders a site manually, to give you an example.

No SEO lives without some API, online service or automation. Web spammers use automation everywhere, whereas professional SEOs use it where it saves time and clients’ money without harming (or even while improving) quality control.

If it takes 10 hours to review a site and ponder its problems, it takes hours to check, every month going forward, that nothing has been done to mess up the previous month’s work. I proceed on the assumption that everybody messes up, so I automate the checking of every element I offer advice on.
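One way to automate that monthly check, assuming you store a hypothetical snapshot per crawl that maps each URL to the elements you advised on (title, canonical, robots directives and so on), is to diff this month’s snapshot against last month’s:

```python
def diff_snapshots(previous, current):
    """Compare {url: {element: value}} snapshots from two crawls.

    Returns a sorted list of (url, element, before, after) tuples;
    a page that appears or disappears is reported with element "page".
    """
    changes = []
    for url in sorted(previous.keys() | current.keys()):
        before = previous.get(url)
        after = current.get(url)
        if before is None:
            changes.append((url, "page", "absent", "present"))
            continue
        if after is None:
            changes.append((url, "page", "present", "absent"))
            continue
        for element in sorted(before.keys() | after.keys()):
            if before.get(element) != after.get(element):
                changes.append((url, element, before.get(element), after.get(element)))
    return changes
```

Each tuple reads (URL, element, before, after), so a robots value flipping to `noindex` or a canonical quietly changing shows up in the monthly report without anyone re-reading the site by hand.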

SEOs tend to use the same data sources for specific tasks (like Search Console and Google Analytics, or Majestic and Ahrefs for backlinks), as there are not really that many options open to us.

I use the data sources I use because they are amongst the best in the world, or in Google’s case, are the only place to get the information.

The best SEOs automate what can be automated.

I designed a platform that focuses my studio on the priorities to address.

How I Work

I spend most of the day researching, advising my team, reviewing client sites, writing up notes (some of which I publish on my blog), and looking at and testing Google before I offer advice. I review the reports my team create, review client sites and manually check (with my team) whether anything on the actual site is problematic.

Every time I come across an issue on a site my tools don’t pick up, I lay down plans to automate the identification of it for the future, and my in-house software engineer builds it for me.

I generally avoid social media, the telephone, meetings or emails these days, and focus on what I am supposed to be doing.

That’s how I do SEO and why I do it the way I do it.

Who knows? One day you might need this kind of SEO.
